Volvo Cars

29 Jun 2024

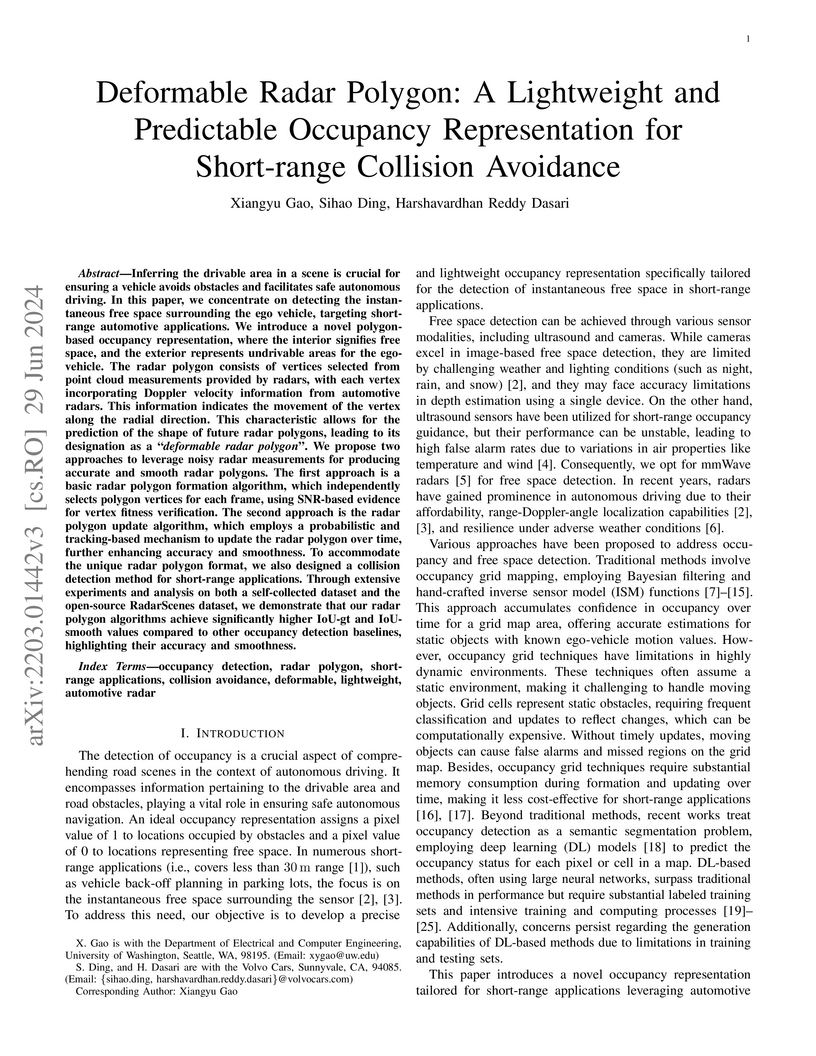

Inferring the drivable area in a scene is crucial for ensuring that a vehicle avoids obstacles and for facilitating safe autonomous driving. In this paper, we concentrate on detecting the instantaneous free space surrounding the ego vehicle, targeting short-range automotive applications. We introduce a novel polygon-based occupancy representation, where the interior signifies free space and the exterior represents areas that are undrivable for the ego vehicle. The radar polygon consists of vertices selected from point cloud measurements provided by radars, with each vertex incorporating Doppler velocity information from automotive radars. This information indicates the movement of the vertex along the radial direction. This characteristic allows the shape of future radar polygons to be predicted, which is why we call it a "deformable radar polygon". We propose two approaches that leverage noisy radar measurements to produce accurate and smooth radar polygons. The first approach is a basic radar polygon formation algorithm, which independently selects polygon vertices for each frame, using SNR-based evidence to verify vertex fitness. The second approach is the radar polygon update algorithm, which employs a probabilistic, tracking-based mechanism to update the radar polygon over time, further enhancing accuracy and smoothness. To accommodate the unique radar polygon format, we also designed a collision detection method for short-range applications. Through extensive experiments and analysis on both a self-collected dataset and the open-source RadarScenes dataset, we demonstrate that our radar polygon algorithms achieve significantly higher IoU-gt and IoU-smooth values than other occupancy detection baselines, highlighting their accuracy and smoothness.
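To make the "deformable" idea concrete, here is a minimal Python sketch that propagates each vertex along its line of sight using its Doppler velocity and then runs a point-in-polygon test for collision checking. It is not the paper's collision detection method: the vertex format `(x, y, v_r)`, the constant-radial-velocity assumption, the radar placed at the ego origin, the corner-only footprint check, and all function names are hypothetical simplifications.

```python
import math
from typing import List, Tuple

# Hypothetical vertex format: (x, y, v_r) in the ego frame with the radar at the
# origin; v_r is the Doppler (radial) velocity in m/s, positive = moving away.
Vertex = Tuple[float, float, float]
Point = Tuple[float, float]


def predict_polygon(vertices: List[Vertex], dt: float) -> List[Point]:
    """Move every vertex along its line of sight by v_r * dt (constant radial speed)."""
    predicted = []
    for x, y, v_r in vertices:
        r = math.hypot(x, y)
        if r == 0.0:
            predicted.append((x, y))
            continue
        r_new = max(r + v_r * dt, 0.0)  # never pass through the sensor origin
        predicted.append((x * r_new / r, y * r_new / r))
    return predicted


def point_in_polygon(px: float, py: float, poly: List[Point]) -> bool:
    """Even-odd ray-casting test: True if (px, py) lies inside the polygon."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > py) != (y2 > py):  # edge straddles the horizontal ray through py
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside


def footprint_collides(footprint: List[Point], free_space: List[Point]) -> bool:
    """Simplified check: flag a collision if any footprint corner leaves free space."""
    return any(not point_in_polygon(x, y, free_space) for x, y in footprint)


# Square free-space polygon whose vertices all approach the ego vehicle at 2 m/s.
square = [(5.0, 5.0, -2.0), (-5.0, 5.0, -2.0), (-5.0, -5.0, -2.0), (5.0, -5.0, -2.0)]
ego_footprint = [(4.0, 1.0), (4.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]
now = predict_polygon(square, dt=0.0)
in_one_second = predict_polygon(square, dt=1.0)
print(footprint_collides(ego_footprint, now))            # False
print(footprint_collides(ego_footprint, in_one_second))  # True
```

Because only radial motion is observed, tangential motion of obstacles is ignored here. A corner-only check can also miss a polygon edge cutting through the footprint between corners; a full polygon-intersection test would catch that case.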

Bird's Eye View (BEV) representations are tremendously useful for perception-related automated driving tasks. However, generating BEVs from surround-view fisheye camera images is challenging due to the strong distortions introduced by such wide-angle lenses. We take the first step in addressing this challenge and introduce a baseline, F2BEV, to generate discretized BEV height maps and BEV semantic segmentation maps from fisheye images. F2BEV consists of a distortion-aware spatial cross attention module for querying and consolidating spatial information from fisheye image features in a transformer-style architecture, followed by a task-specific head. We evaluate single-task and multi-task variants of F2BEV on our synthetic FB-SSEM dataset, all of which generate better BEV height and segmentation maps (in terms of IoU) than a state-of-the-art BEV generation method operating on undistorted fisheye images. We also demonstrate discretized height map generation from real-world fisheye images using F2BEV. Our dataset is publicly available at this https URL.

Predicting future trajectories of nearby objects, especially under occlusion, is a crucial task in autonomous driving and safe robot navigation. Prior works typically neglect to maintain uncertainty about occluded objects and only predict trajectories of observed objects using high-capacity models such as Transformers trained on large datasets. While these approaches are effective in standard scenarios, they can struggle to generalize to the long-tail, safety-critical scenarios. In this work, we explore a conceptual framework unifying trajectory prediction and occlusion reasoning under the same class of structured probabilistic generative model, namely, switching dynamical systems. We then present some initial experiments illustrating its capabilities using the Waymo open dataset.

Changes and updates in requirement artifacts, which can be frequent in the automotive domain, are a challenge for SafetyOps. Large Language Models (LLMs), with their impressive natural language understanding and generation capabilities, can play a key role in automatically refining and decomposing requirements after each update. In this study, we propose a prototype of a pipeline of prompts and LLMs that receives an item definition and outputs solutions in the form of safety requirements. This pipeline also reviews the requirement dataset and identifies redundant or contradictory requirements. We first identified the necessary characteristics for performing a Hazard Analysis and Risk Assessment (HARA) and then defined tests to assess an LLM's capability to meet these criteria. We used design science with multiple iterations and let experts from different companies evaluate each cycle quantitatively and qualitatively. Finally, the prototype was implemented at a case company, and the responsible team evaluated its efficiency.

Using continuous development, deployment, and monitoring (CDDM) to understand and improve applications in a customer's context is widespread for non-safety applications such as smartphone apps or web applications, where it enables rapid and innovative feature improvements. Having demonstrated its potential in such domains, CDDM may also improve software development for automotive functions, as some OEMs have described at a high level in their financial company communiqués. However, applying a CDDM strategy also faces challenges from a process-adherence and documentation perspective, as required by safety-related products such as autonomous driving systems (ADS) and guided by industry standards such as ISO 26262 and ISO 21448. Existing publications on CDDM in safety-relevant contexts either address safety-critical functions at a rather generic level, and thus not ADS or automotive specifically, or concentrate only on software, and hence miss the particular context of an automotive OEM: well-established legacy processes and the need to adapt them, and aspects originating from the OEM's role as a system integrator for software/software, hardware/hardware, and hardware/software. In this paper, particular challenges from the automotive domain to better adopt CDDM are identified and discussed to shed light on research gaps in enhancing CDDM, especially for the software development of safe ADS. The challenges are identified from today's well-established industrial ways of working by conducting interviews with domain experts, complemented by a literature study.

The research introduces a closed-loop, simulation-guided pipeline that automatically generates, assesses, and iteratively refines Large Language Model (LLM)-produced code for safety-critical autonomous driving functions, demonstrating improved code quality and efficiency in an industrial setting. This system leverages an industrial-grade simulator to provide natural language feedback to the LLM for bug fixing, successfully achieving functional code for complex tasks like Unsupervised Collision Avoidance by Evasive Manoeuvre (CAEM).

Annotated driving scenario trajectories are crucial for the verification and validation of autonomous vehicles. However, annotating such trajectories based only on explicit rules (i.e., knowledge-based methods) may be prone to errors, such as false positive/negative classification of scenarios that lie on the border between two scenario classes, missing unknown scenario classes, or even failing to detect anomalies. On the other hand, having annotators verify labels is not cost-efficient. For this purpose, active learning (AL) could potentially improve the annotation procedure by including an annotator/expert in an efficient way. In this study, we develop a generic active learning framework to annotate driving trajectory time series data. We first compute an embedding of the trajectories into a latent space in order to capture the temporal nature of the data. Given such an embedding, the framework becomes task agnostic, since active learning can be performed using any classification method and any query strategy, regardless of the structure of the original time series data. Furthermore, we utilize our active learning framework to discover unknown driving scenario trajectories. This ensures that previously unknown trajectory types can be effectively detected and included in the labeled dataset. We evaluate our proposed framework in different settings on novel real-world datasets consisting of driving trajectories collected by Volvo Cars Corporation. We observe that active learning constitutes an effective tool for labelling driving trajectories as well as for detecting unknown classes. As expected, the quality of the embedding plays an important role in the success of the proposed framework.
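Since the framework accepts any classifier and any query strategy on top of the embeddings, the query step can be sketched independently of the time series themselves. Below, two standard pool-based strategies, least-confidence and entropy sampling, rank unlabeled trajectories for annotation. The probability matrix is hypothetical, and the abstract does not say which classifiers or query strategies the study actually used.

```python
import numpy as np


def least_confidence_query(probs: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k pool samples whose top-class probability is lowest."""
    confidence = probs.max(axis=1)
    return np.argsort(confidence, kind="stable")[:k]


def entropy_query(probs: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k pool samples with the highest predictive entropy."""
    p = np.clip(probs, 1e-12, 1.0)  # avoid log(0)
    entropy = -(p * np.log(p)).sum(axis=1)
    return np.argsort(-entropy, kind="stable")[:k]


# Hypothetical class probabilities from any classifier trained on the trajectory
# embeddings (one row per unlabeled trajectory, one column per scenario class).
pool_probs = np.array([
    [0.90, 0.05, 0.05],
    [0.40, 0.35, 0.25],
    [0.55, 0.40, 0.05],
    [0.98, 0.01, 0.01],
])
print(least_confidence_query(pool_probs, k=2))  # [1 2]
print(entropy_query(pool_probs, k=2))           # [1 2]
```

The selected trajectories would be sent to the annotator, added to the labeled set, and the classifier retrained before the next query round.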

23 Feb 2016

The relationship between a driver's glance pattern and corresponding head rotation is highly complex due to its nonlinear dependence on the individual, task, and driving context. This study explores the ability of head pose to serve as an estimator for driver gaze by connecting head rotation data with manually coded gaze region data using both a statistical analysis approach and a predictive (i.e., machine learning) approach. For the latter, classification accuracy increased as visual angles between two glance locations increased. In other words, the greater the shift in gaze, the higher the accuracy of classification. This is an intuitive but important concept that we make explicit through our analysis. The highest accuracy achieved was 83% using the method of Hidden Markov Models (HMM) for the binary gaze classification problem of (1) the forward roadway versus (2) the center stack. Results suggest that although there are individual differences in head-glance correspondence while driving, classifier models based on head-rotation data may be robust to these differences and therefore can serve as reasonable estimators for glance location. The results suggest that driver head pose can be used as a surrogate for eye gaze in several key conditions including the identification of high-eccentricity glances. Inexpensive driver head pose tracking may be a key element in detection systems developed to mitigate driver distraction and inattention.
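The HMM-based binary classification can be illustrated with the classic scheme of one HMM per class, labeling a sequence by whichever model assigns it the higher likelihood. The sketch below assumes head rotation has been quantized into discrete symbols and uses hand-picked, hypothetical parameters rather than anything estimated in the study; training (e.g. Baum-Welch) is omitted.

```python
import numpy as np


def hmm_log_likelihood(obs, pi, A, B):
    """Scaled forward algorithm: log P(obs | HMM) for discrete emissions.

    pi: (S,) initial state probabilities
    A:  (S, S) transitions, A[i, j] = P(state j at t | state i at t-1)
    B:  (S, V) emissions,   B[i, v] = P(symbol v | state i)
    """
    alpha = pi * B[:, obs[0]]
    scale = alpha.sum()
    log_p = np.log(scale)
    alpha = alpha / scale
    for o in obs[1:]:
        alpha = (alpha @ A) * B[:, o]  # propagate, then weight by emission
        scale = alpha.sum()
        log_p += np.log(scale)         # accumulate log of scaling factors
        alpha = alpha / scale
    return log_p


def classify(obs, models):
    """Return the label whose HMM gives the observed sequence the highest likelihood."""
    return max(models, key=lambda label: hmm_log_likelihood(obs, *models[label]))


# Hypothetical 2-state models over quantized head yaw:
# symbol 0 = head roughly straight ahead, symbol 1 = head turned toward the center stack.
models = {
    "forward_roadway": (np.array([0.9, 0.1]),
                        np.array([[0.95, 0.05], [0.5, 0.5]]),
                        np.array([[0.9, 0.1], [0.5, 0.5]])),
    "center_stack": (np.array([0.5, 0.5]),
                     np.array([[0.5, 0.5], [0.05, 0.95]]),
                     np.array([[0.5, 0.5], [0.1, 0.9]])),
}
print(classify([0, 0, 0, 0], models))  # forward_roadway
print(classify([1, 1, 1, 1], models))  # center_stack
```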

12 Feb 2020

There is some ambiguity about what agile means in both research and practice. Authors have suggested a diversity of definitions, which makes it difficult to interpret what agile really is. The concept, however, exists in its implementation through agile practices. In this vision paper, we argue that adopting an agile approach boils down to being more responsive to change. To support this claim, we relate agile principles, practices, the agile manifesto, and our own experiences to this core definition. We envision that agile transformations would be, and are, much easier when using this definition and contextualizing its implications.

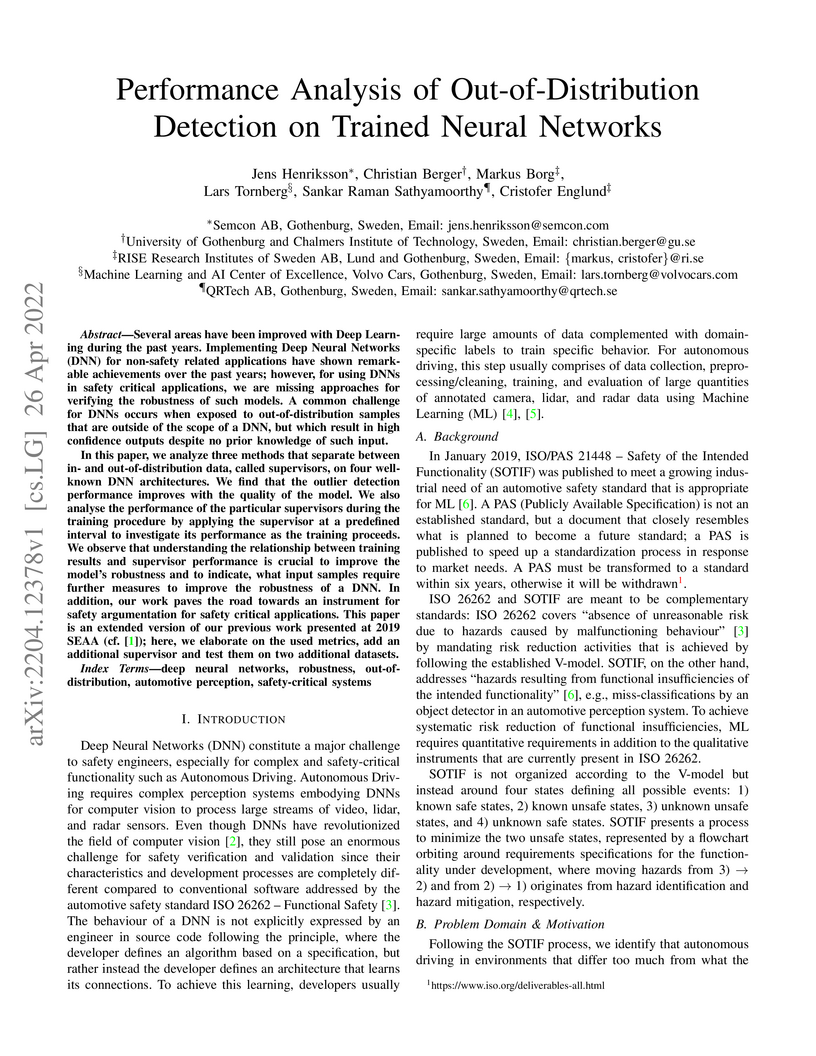

Deep Learning has improved several areas in recent years, and Deep Neural Networks (DNNs) have achieved remarkable results in non-safety-related applications; however, to use DNNs in safety-critical applications, approaches for verifying the robustness of such models are still missing. A common challenge for DNNs arises when they are exposed to out-of-distribution samples that lie outside their scope yet still produce high-confidence outputs, despite the network having no prior knowledge of such input.

In this paper, we analyze three methods, called supervisors, that separate in-distribution from out-of-distribution data, on four well-known DNN architectures. We find that outlier detection performance improves with the quality of the model. We also analyze the performance of the particular supervisors during the training procedure by applying each supervisor at a predefined interval to investigate its performance as training proceeds. We observe that understanding the relationship between training results and supervisor performance is crucial for improving the model's robustness and for indicating which input samples require further measures to improve the robustness of a DNN. In addition, our work paves the way towards an instrument for safety argumentation in safety-critical applications. This paper is an extended version of our previous work presented at SEAA 2019 (cf. [1]); here, we elaborate on the metrics used, add a further supervisor, and test the supervisors on two additional datasets.
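For readers unfamiliar with supervisors, one widely used baseline thresholds the classifier's maximum softmax probability and rejects inputs that fall below it. The sketch below is illustrative only: the abstract does not name the three supervisors analyzed, and the threshold and logits are hypothetical.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def max_softmax_supervisor(logits: np.ndarray, threshold: float) -> np.ndarray:
    """Accept (True) inputs whose highest class probability reaches the threshold."""
    return softmax(logits).max(axis=-1) >= threshold


# Hypothetical logits for two inputs from a 3-class classifier.
logits = np.array([[10.0, 0.0, 0.0],   # confident prediction
                   [0.1, 0.0, 0.0]])   # nearly uniform prediction
print(max_softmax_supervisor(logits, threshold=0.9))  # [ True False]
```

Because this baseline relies on the network's own confidence, it is exactly the kind of detector that high-confidence outputs on out-of-distribution input can fool, which is one reason to compare several supervisors.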

05 Jun 2024

Software-in-the-loop (SIL) simulation is a widely used method for the rapid development and testing of autonomous vehicles because of its flexibility and efficiency. This paper presents a case study on the validation of an in-house-developed SIL simulation toolchain. The presented validation process involves the design and execution of a set of representative scenarios on the test track. To align the test track runs with the SIL simulations, a synchronization approach is proposed, which includes refining the scenarios by fine-tuning their parameters based on data obtained from vehicle testing. The paper also discusses two metrics used for evaluating the correlation between the SIL simulations and the vehicle testing logs. Preliminary results are presented to demonstrate the effectiveness of the proposed validation process.

01 Jul 2022

Randomised field experiments, such as A/B testing, have long been the gold standard for evaluating software changes. In the automotive domain, running randomised field experiments is not always desired, possible, or even ethical. In the face of such limitations, we develop a framework, BOAT (Bayesian causal modelling for ObservAtional Testing), utilising observational studies in combination with Bayesian causal inference in order to understand the real-world impacts of complex automotive software updates and help software development organisations arrive at causal conclusions. In this study, we present three causal inference models in the Bayesian framework and their corresponding cases to address three commonly experienced challenges of software evaluation in the automotive domain. We develop the BOAT framework with our industry collaborator and demonstrate the potential of causal inference by conducting empirical studies on a large fleet of vehicles. Moreover, we relate the causal assumption theories to their implications in practice, aiming to provide a comprehensive guide on how to apply the causal models in automotive software engineering. We apply Bayesian propensity score matching to produce balanced control and treatment groups when we do not have access to the entire user base, Bayesian regression discontinuity design to identify covariate-dependent treatment assignments and the local treatment effect, and Bayesian difference-in-differences for causal inference of the treatment effect over time while implicitly controlling for unobserved confounding factors. Each demonstrative case is grounded in practice and is a scenario experienced when randomisation is not feasible. With the BOAT framework, we enable online software evaluation in the automotive domain without the need for a fully randomised experiment.
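Of the three designs, difference-in-differences is the easiest to show numerically. The sketch below computes the classical (non-Bayesian) 2x2 point estimate from group means; a Bayesian version such as BOAT's would instead place priors on these quantities and report a posterior. The metric and its values are made up for illustration.

```python
from statistics import fmean


def diff_in_diff(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Classical 2x2 difference-in-differences estimate from group means.

    (change in treated group) - (change in control group); the control group's
    change stands in for what would have happened to the treated group without
    the update (the parallel-trends assumption).
    """
    return (fmean(treat_post) - fmean(treat_pre)) - (fmean(ctrl_post) - fmean(ctrl_pre))


# Hypothetical per-vehicle metric (e.g. a daily usage count) before/after an update.
effect = diff_in_diff(treat_pre=[10, 12], treat_post=[15, 17],
                      ctrl_pre=[10, 10], ctrl_post=[12, 12])
print(effect)  # 3.0
```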

25 Apr 2022

Randomized field experiments are the gold standard for evaluating the impact of software changes on customers. In the online domain, randomization has been the main tool to ensure exchangeability. However, due to the different deployment conditions and the high dependence on the surrounding environment, designing experiments for automotive software needs to consider a higher number of restricted variables to ensure conditional exchangeability. In this paper, we show how at Volvo Cars we utilize causal graphical models to design experiments and explicitly communicate the assumptions of experiments. These graphical models are used to further assess the experiment validity, compute direct and indirect causal effects, and reason on the transportability of the causal conclusions.
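The direct and indirect effects mentioned above can be illustrated on a simulated linear causal graph in which a treatment X affects an outcome Y both directly and through a mediator M. Assuming linearity and no unmeasured confounding, the indirect effect is the product of the X-to-M and M-to-Y coefficients. The graph, coefficients, and data below are hypothetical and not taken from any Volvo Cars experiment.

```python
import numpy as np


def ols(X, y):
    """Least-squares coefficients for y ~ 1 + X (intercept first)."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return coef


# Hypothetical linear causal graph: X -> M -> Y and X -> Y, no confounding.
rng = np.random.default_rng(0)
n = 5000
x = rng.binomial(1, 0.5, n).astype(float)    # treatment (e.g. software variant)
m = 0.8 * x + rng.normal(size=n)              # mediator
y = 0.5 * x + 1.5 * m + rng.normal(size=n)    # outcome

a = ols(x, m)[1]                                # effect of X on M
_, direct, b = ols(np.column_stack([x, m]), y)  # X -> Y holding M fixed, and M -> Y
indirect = a * b                                # effect transmitted through M
total = ols(x, y)[1]                            # regress Y on X alone
print(round(direct, 2), round(indirect, 2), round(total, 2))
```

With these simulated coefficients, the estimates should land near a direct effect of 0.5, an indirect effect of 0.8 * 1.5 = 1.2, and a total of 1.7; in-sample OLS also makes total equal direct + indirect exactly.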

01 Sep 2025

The electrification and automation of mobility are reshaping how cities operate on-demand transport systems. Managing Electric Autonomous Mobility-on-Demand (EAMoD) fleets effectively requires coordinating dispatch, rebalancing, and charging decisions under multiple uncertainties, including travel demand, travel time, energy consumption, and charger availability. We address this challenge with a combined stochastic and robust model predictive control (MPC) framework. The framework integrates spatio-temporal Bayesian neural network forecasts with a multi-stage stochastic optimization model, formulated as a large-scale mixed-integer linear program. To ensure real-time applicability, we develop a tailored Nested Benders Decomposition that exploits the scenario tree structure and enables efficient parallelized solution. Stochastic optimization is employed to anticipate demand and infrastructure variability, while robust constraints on energy consumption and travel times safeguard feasibility under worst-case realizations. We evaluate the framework using high-fidelity simulations of San Francisco and Chicago. Compared with deterministic, reactive, and robust baselines, the combined stochastic and robust approach reduces median passenger waiting times by up to 36% and 95th-percentile delays by nearly 20%, while also lowering rebalancing distance by 27% and electricity costs by more than 35%. We also conduct a sensitivity analysis of battery size and vehicle efficiency, finding that energy-efficient vehicles maintain stable performance even with small batteries, whereas less efficient vehicles require larger batteries and greater infrastructure support. Our results emphasize the importance of jointly optimizing predictive control, vehicle capabilities, and infrastructure planning to enable scalable, cost-efficient EAMoD operations.

We have recently observed the commercial roll-out of robotaxis in various countries. They are deployed within an operational design domain (ODD) on specific routes and under specific environmental conditions, and are subject to continuous monitoring so that control can be regained in safety-critical situations. Since ODDs typically cover urban areas, robotaxis must reliably detect vulnerable road users (VRUs) such as pedestrians, bicyclists, or e-scooter riders. To better handle such varied traffic situations, end-to-end AI, which directly computes vehicle control actions from multi-modal sensor data instead of only performing perception, is on the rise. High-quality data is needed to systematically train and evaluate such systems within their ODD. In this work, we propose PCICF, a framework to systematically identify and classify VRU situations to support incident analysis for the ODD. We base our work on the existing synthetic dataset SMIRK and enhance it by extending its single-pedestrian-only design into the MoreSMIRK dataset, a systematically constructed, structured dictionary of multi-pedestrian crossing situations. We then use space-filling curves (SFCs) to transform multi-dimensional features of scenarios into characteristic patterns, which we match with corresponding entries in MoreSMIRK. We evaluate PCICF with the large real-world dataset PIE, which contains more than 150 manually annotated pedestrian crossing videos. We show that PCICF can successfully identify and classify complex pedestrian crossings, even when groups of pedestrians merge or split. By leveraging computationally efficient components like SFCs, PCICF even has the potential to be used onboard robotaxis, for example for OOD detection. We share an open-source replication package for PCICF containing its algorithms, the complete MoreSMIRK dataset and dictionary, as well as the experiment results presented here: this https URL

Traffic sign identification using camera images from vehicles plays a critical role in autonomous driving and path planning. However, front camera images can be distorted by blurriness, lighting variations, and vandalism, which can degrade detection performance. As a solution, machine learning models must be trained with data from multiple domains, yet collecting and labeling more data in each new domain is time-consuming and expensive. In this work, we present an end-to-end framework to augment traffic sign training data using optimal reinforcement learning policies and a variety of Generative Adversarial Network (GAN) models; the augmented data can then be used to train traffic sign detector modules. Our automated augmenter enables learning from images transformed to nighttime, poor lighting, and varying degrees of occlusion, using the LISA Traffic Sign and BDD-Nexar datasets. The proposed method enables mapping training data from one domain to another, thereby improving traffic sign detection precision/recall from 0.70/0.66 to 0.83/0.71 for nighttime images.

Deep Neural Networks (DNNs) have improved the quality of several non-safety-related products in recent years. However, before DNNs are deployed in safety-critical applications, their robustness needs to be systematically analyzed. A common challenge for DNNs occurs when input is dissimilar to the training set, which might lead to high-confidence predictions despite a lack of proper knowledge of the input. Several previous studies have proposed complementing DNNs with a supervisor that detects when inputs are outside the scope of the network. Most of these supervisors, however, are developed and tested for a selected scenario using a specific performance metric. In this work, we emphasize the need to assess and compare the performance of supervisors in a structured way. We present a framework consisting of four datasets organized in six test cases, combined with seven evaluation metrics. The test cases provide varying complexity and include data from publicly available sources as well as a novel dataset of images from simulated driving scenarios, which we plan to make publicly available. Our framework can be used to support DNN supervisor evaluation, which in turn could be used to motivate the development, validation, and deployment of DNNs in safety-critical applications.
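AUROC is a common threshold-free metric for supervisor evaluation; whether it is among the seven metrics in this framework is not stated in the abstract. A minimal sketch, assuming the supervisor outputs a score where higher means "more in-distribution":

```python
import numpy as np


def auroc(scores_in, scores_out) -> float:
    """AUROC for separating in-distribution from OOD inputs via supervisor scores.

    Equals the probability that a random in-distribution input scores higher than a
    random OOD input (ties count one half), i.e. the Mann-Whitney U statistic / (n*m).
    """
    s_in = np.asarray(scores_in, dtype=float)[:, None]
    s_out = np.asarray(scores_out, dtype=float)[None, :]
    return float((s_in > s_out).mean() + 0.5 * (s_in == s_out).mean())


# Hypothetical supervisor scores: 5 of the 6 (in, out) pairs are ranked correctly.
print(auroc([0.9, 0.8, 0.7], [0.2, 0.75]))  # about 0.833
```

The pairwise comparison is O(n*m) in memory, which is fine for a sketch; for large test sets a rank-based computation avoids building the full comparison matrix.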

Gathering data and identifying events in various traffic situations remains an essential challenge for the systematic evaluation of a perception system's performance. Analyzing large-scale, typically unstructured, multi-modal time series data obtained from video, radar, and LiDAR is computationally demanding, particularly when meta-information or annotations are missing. We compare Optical Flow (OF) and Deep Learning (DL) as inputs to computationally efficient event detection via space-filling curves on video data from a forward-facing, in-vehicle camera. Our first approach leverages unexpected disturbances in the OF field from the vehicle's surroundings; the second approach is a DL model trained on human visual attention that predicts a driver's gaze to spot potential event locations. We feed these results to a space-filling curve to reduce dimensionality and achieve computationally efficient event retrieval. We systematically evaluate our concept by obtaining characteristic patterns for both approaches from a large-scale virtual dataset (SMIRK) and applying our findings to the Zenseact Open Dataset (ZOD), a large multi-modal, real-world dataset collected over two years in 14 different European countries. Our results show that the OF approach excels in specificity and reduces false positives, while the DL approach demonstrates superior sensitivity. Both approaches offer comparable processing speed, making them suitable for real-time applications.
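To illustrate how a space-filling curve reduces dimensionality, the sketch below maps a 2D image location (for example, a predicted gaze point or an optical-flow disturbance) to a single index on a Z-order (Morton) curve, so that a video becomes a 1D signal over time. The Z-order curve, the 16x16 grid, and the helper names are assumptions for illustration; the abstract does not specify which curve is used.

```python
def morton_encode(x: int, y: int, bits: int = 16) -> int:
    """Interleave the bits of non-negative grid coordinates (x, y) into one Z-order index."""
    code = 0
    for i in range(bits):
        code |= ((x >> i) & 1) << (2 * i)      # x bits go to even positions
        code |= ((y >> i) & 1) << (2 * i + 1)  # y bits go to odd positions
    return code


def pixel_to_curve_index(u: int, v: int, width: int, height: int, grid: int = 16) -> int:
    """Quantize pixel (u, v) onto a grid x grid lattice and map that cell onto the curve."""
    gx = min(u * grid // width, grid - 1)
    gy = min(v * grid // height, grid - 1)
    return morton_encode(gx, gy, bits=(grid - 1).bit_length())


# Hypothetical per-frame event locations in a 640x480 image.
track = [(320, 240), (330, 238), (600, 50)]
print([pixel_to_curve_index(u, v, 640, 480) for u, v in track])  # [192, 106, 87]
```

The output also shows Z-order's main weakness: the neighboring cells (8, 8) and (8, 7) land far apart on the curve because they straddle a quadrant boundary. A Hilbert curve preserves locality better at the cost of a slightly more involved encoding.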

05 Nov 2020

The purpose of this paper is to suggest additional aspects of social psychology that could help in making sense of autonomous agile teams. Making use of well-tested theories in social psychology, and instead examining how they replicate and differ in the autonomous agile team context, would avoid reinventing the wheel. As an initial step, this was done by looking at some very common agile practices and relating them to existing findings in social-psychological research. The two theories I identified and argue could be applied more in the software engineering context are social identity theory and group socialization theory. The results show that the literature provides social-psychological reasons for the popularity of some agile practices, but that scientific studies are needed to gather empirical evidence on these under-researched topics. Understanding deeper psychological theories could provide a better understanding of the psychological processes involved in building autonomous agile teams, which could then lead to better predictability and intervention in relation to human factors.

10 Nov 2021

A/B testing is gaining attention in the automotive sector as a promising tool to measure causal effects from software changes. Unlike web-facing businesses, where A/B testing is well established, the automotive domain often suffers from a limited number of users eligible to participate in online experiments. To address this shortcoming, we present a method for designing balanced control and treatment groups so that sound conclusions can be drawn from experiments with considerably small sample sizes. While the Balance Match Weighted method has been used in other domains such as medicine, this is the first paper to apply and evaluate it in the context of software development. Furthermore, we describe the Balance Match Weighted method in detail and conduct a case study together with an automotive manufacturer to apply the group design method in a fleet of vehicles. Finally, we present our case study in the automotive software engineering domain, as well as a discussion of the benefits and limitations of the A/B group design method.
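The Balance Match Weighted method itself is not reproduced here. As a simpler stand-in that illustrates the same goal of balanced groups from a small fleet, the sketch below forms matched pairs on one covariate, randomizes within each pair, and checks balance with the standardized mean difference (SMD). The fleet data, covariate, and function names are hypothetical.

```python
import random
import statistics


def matched_pair_assignment(units, covariate, seed=0):
    """Sort units by a covariate, pair neighbors, and randomize within each pair.

    An odd unit left over at the end is dropped.
    """
    rng = random.Random(seed)
    ordered = sorted(units, key=covariate)
    treatment, control = [], []
    for i in range(0, len(ordered) - 1, 2):
        pair = [ordered[i], ordered[i + 1]]
        rng.shuffle(pair)  # coin flip decides which unit of the pair is treated
        treatment.append(pair[0])
        control.append(pair[1])
    return treatment, control


def standardized_mean_difference(treatment, control):
    """(mean_t - mean_c) / sqrt((var_t + var_c) / 2): a common covariate-balance check."""
    mt, mc = statistics.fmean(treatment), statistics.fmean(control)
    pooled_sd = ((statistics.variance(treatment) + statistics.variance(control)) / 2) ** 0.5
    return 0.0 if pooled_sd == 0 else (mt - mc) / pooled_sd


# Hypothetical fleet: ten vehicles described by their weekly mileage (km).
mileage = {f"car{i}": km for i, km in enumerate([120, 900, 340, 560, 80, 700, 410, 260, 980, 630])}
treat, ctrl = matched_pair_assignment(list(mileage), covariate=mileage.get)
smd = standardized_mean_difference([mileage[c] for c in treat], [mileage[c] for c in ctrl])
print(len(treat), len(ctrl), round(smd, 3))
```

A common rule of thumb treats an absolute SMD below about 0.1 as well balanced; with several covariates, matching on a multivariate distance or a propensity score replaces the single-key sort.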
