Wisconsin Institute for Discovery

In many interactive decision-making settings, there is latent and unobserved information that remains fixed. Consider, for example, a dialogue system, where complete information about a user, such as the user's preferences, is not given. In such an environment, the latent information remains fixed throughout each episode, since the identity of the user does not change during an interaction. This type of environment can be modeled as a Latent Markov Decision Process (LMDP), a special instance of Partially Observed Markov Decision Processes (POMDPs). Previous work established exponential lower bounds in the number of latent contexts for the LMDP class. This puts forward a question: under which natural assumptions a near-optimal policy of an LMDP can be efficiently learned? In this work, we study the class of LMDPs with {\em prospective side information}, when an agent receives additional, weakly revealing, information on the latent context at the beginning of each episode. We show that, surprisingly, this problem is not captured by contemporary settings and algorithms designed for partially observed environments. We then establish that any sample efficient algorithm must suffer at least Ω(K2/3)-regret, as opposed to standard Ω(K) lower bounds, and design an algorithm with a matching upper bound.

02 Oct 2025

All inverse problems rely on data to recover unknown parameters, yet not all data are equally informative. This raises the central question of data selection. A distinctive challenge in PDE-based inverse problems is their inherently infinite-dimensional nature: both the parameter space and the design space are infinite, which greatly complicates the selection process. Somewhat unexpectedly, randomized numerical linear algebra (RNLA), originally developed in very different contexts, has provided powerful tools for addressing this challenge. These methods are inherently probabilistic, with guarantees typically stating that information is preserved with probability at least 1-p when using N randomly selected, weighted samples. Here, the notion of information can take different mathematical forms depending on the setting. In this review, we survey the problem of data selection in PDE-based inverse problems, emphasize its unique infinite-dimensional aspects, and highlight how RNLA strategies have been adapted and applied in this context.

30 Nov 2016

In this paper, we consider the problem of learning high-dimensional tensor regression problems with low-rank structure. One of the core challenges associated with learning high-dimensional models is computation since the underlying optimization problems are often non-convex. While convex relaxations could lead to polynomial-time algorithms they are often slow in practice. On the other hand, limited theoretical guarantees exist for non-convex methods. In this paper we provide a general framework that provides theoretical guarantees for learning high-dimensional tensor regression models under different low-rank structural assumptions using the projected gradient descent algorithm applied to a potentially non-convex constraint set Θ in terms of its \emph{localized Gaussian width}. We juxtapose our theoretical results for non-convex projected gradient descent algorithms with previous results on regularized convex approaches. The two main differences between the convex and non-convex approach are: (i) from a computational perspective whether the non-convex projection operator is computable and whether the projection has desirable contraction properties and (ii) from a statistical upper bound perspective, the non-convex approach has a superior rate for a number of examples. We provide three concrete examples of low-dimensional structure which address these issues and explain the pros and cons for the non-convex and convex approaches. We supplement our theoretical results with simulations which show that, under several common settings of generalized low rank tensor regression, the projected gradient descent approach is superior both in terms of statistical error and run-time provided the step-sizes of the projected descent algorithm are suitably chosen.

07 Sep 2020

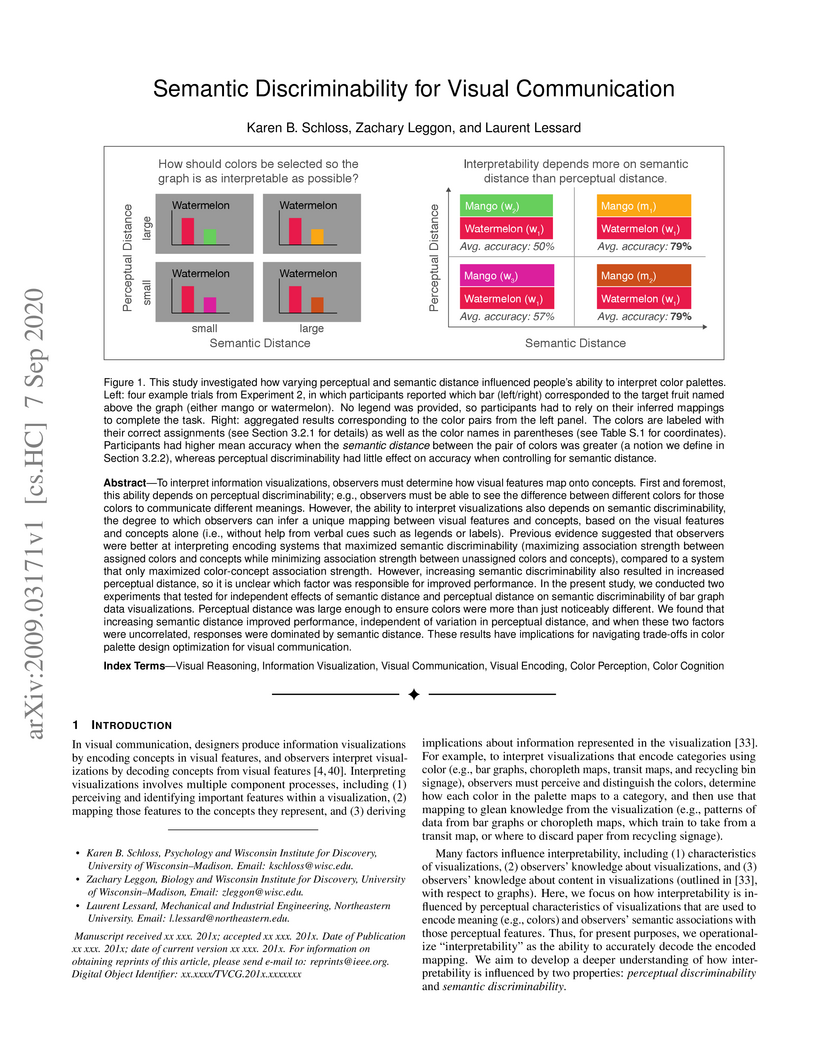

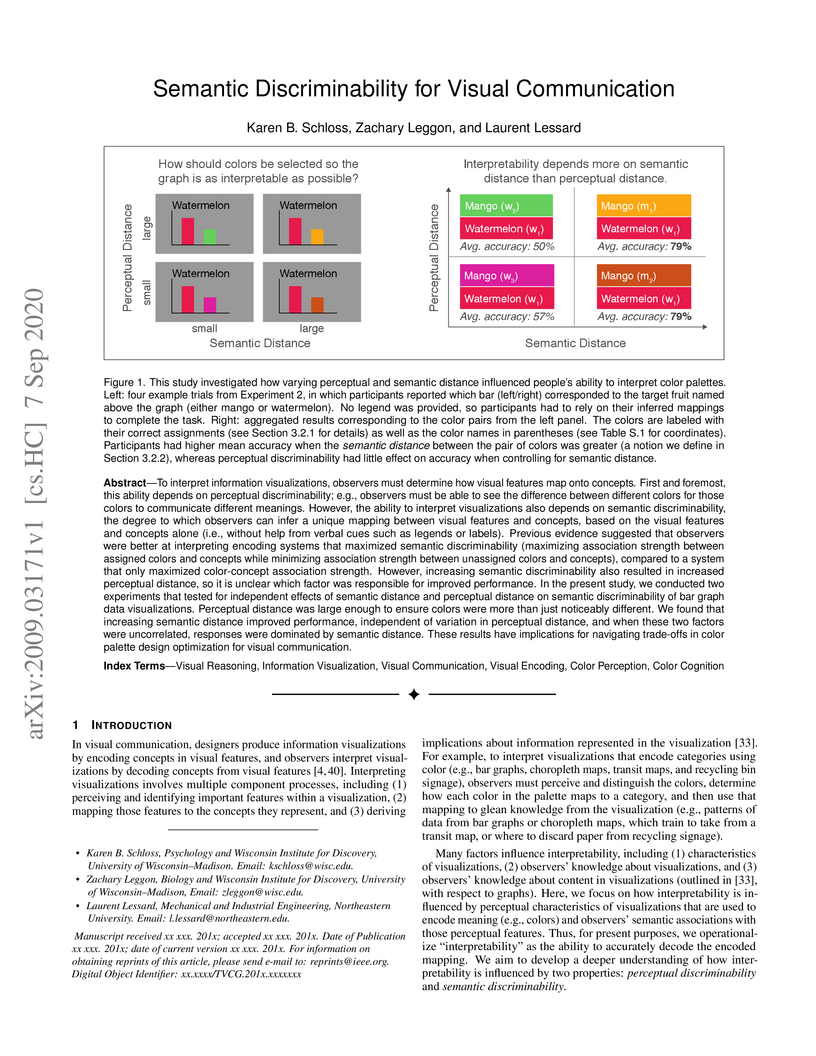

To interpret information visualizations, observers must determine how visual features map onto concepts. First and foremost, this ability depends on perceptual discriminability; e.g., observers must be able to see the difference between different colors for those colors to communicate different meanings. However, the ability to interpret visualizations also depends on semantic discriminability, the degree to which observers can infer a unique mapping between visual features and concepts, based on the visual features and concepts alone (i.e., without help from verbal cues such as legends or labels). Previous evidence suggested that observers were better at interpreting encoding systems that maximized semantic discriminability (maximizing association strength between assigned colors and concepts while minimizing association strength between unassigned colors and concepts), compared to a system that only maximized color-concept association strength. However, increasing semantic discriminability also resulted in increased perceptual distance, so it is unclear which factor was responsible for improved performance. In the present study, we conducted two experiments that tested for independent effects of semantic distance and perceptual distance on semantic discriminability of bar graph data visualizations. Perceptual distance was large enough to ensure colors were more than just noticeably different. We found that increasing semantic distance improved performance, independent of variation in perceptual distance, and when these two factors were uncorrelated, responses were dominated by semantic distance. These results have implications for navigating trade-offs in color palette design optimization for visual communication.

07 Sep 2020

To interpret information visualizations, observers must determine how visual features map onto concepts. First and foremost, this ability depends on perceptual discriminability; e.g., observers must be able to see the difference between different colors for those colors to communicate different meanings. However, the ability to interpret visualizations also depends on semantic discriminability, the degree to which observers can infer a unique mapping between visual features and concepts, based on the visual features and concepts alone (i.e., without help from verbal cues such as legends or labels). Previous evidence suggested that observers were better at interpreting encoding systems that maximized semantic discriminability (maximizing association strength between assigned colors and concepts while minimizing association strength between unassigned colors and concepts), compared to a system that only maximized color-concept association strength. However, increasing semantic discriminability also resulted in increased perceptual distance, so it is unclear which factor was responsible for improved performance. In the present study, we conducted two experiments that tested for independent effects of semantic distance and perceptual distance on semantic discriminability of bar graph data visualizations. Perceptual distance was large enough to ensure colors were more than just noticeably different. We found that increasing semantic distance improved performance, independent of variation in perceptual distance, and when these two factors were uncorrelated, responses were dominated by semantic distance. These results have implications for navigating trade-offs in color palette design optimization for visual communication.

We consider the distributed optimization problem, where a group of agents work together to optimize a common objective by communicating with neighboring agents and performing local computations. For a given algorithm, we use tools from robust control to systematically analyze the performance in the case where the communication network is time-varying. In particular, we assume only that the network is jointly connected over a finite time horizon (commonly referred to as B-connectivity), which does not require connectivity at each time instant. When applied to the distributed algorithm DIGing, our bounds are orders of magnitude tighter than those available in the literature.

16 Sep 2022

We study exact recovery conditions for the linear programming relaxation of the k-median problem in the stochastic ball model (SBM). In Awasthi et al. (2015), the authors give a tight result for the k-median LP in the SBM, saying that exact recovery can be achieved as long as the balls are pairwise disjoint. We give a counterexample to their result, thereby showing that the k-median LP is not tight in low dimension. Instead, we give a near optimal result showing that the k-median LP in the SBM is tight in high dimension. We also show that, if the probability measure satisfies some concentration assumptions, then the k-median LP in the SBM is tight in every dimension. Furthermore, we propose a new model of data called extended stochastic ball model (ESBM), which significantly generalizes the well-known SBM. We then show that exact recovery can still be achieved in the ESBM.

Consider observing a collection of discrete events within a network that

reflect how network nodes influence one another. Such data are common in spike

trains recorded from biological neural networks, interactions within a social

network, and a variety of other settings. Data of this form may be modeled as

self-exciting point processes, in which the likelihood of future events depends

on the past events. This paper addresses the problem of estimating

self-excitation parameters and inferring the underlying functional network

structure from self-exciting point process data. Past work in this area was

limited by strong assumptions which are addressed by the novel approach here.

Specifically, in this paper we (1) incorporate saturation in a point process

model which both ensures stability and models non-linear thresholding effects;

(2) impose general low-dimensional structural assumptions that include

sparsity, group sparsity and low-rankness that allows bounds to be developed in

the high-dimensional setting; and (3) incorporate long-range memory effects

through moving average and higher-order auto-regressive components. Using our

general framework, we provide a number of novel theoretical guarantees for

high-dimensional self-exciting point processes that reflect the role played by

the underlying network structure and long-term memory. We also provide

simulations and real data examples to support our methodology and main results.

Cellular regulatory dynamics is driven by large and intricate networks of interactions at the molecular scale, whose sheer size obfuscates understanding. In light of limited experimental data, many parameters of such dynamics are unknown, and thus models built on the detailed, mechanistic viewpoint overfit and are not predictive. At the other extreme, simple ad hoc models of complex processes often miss defining features of the underlying systems. Here we propose an approach that instead constructs phenomenological, coarse-grained models of network dynamics that automatically adapt their complexity to the amount of available data. Such adaptive models lead to accurate predictions even when microscopic details of the studied systems are unknown due to insufficient data. The approach is computationally tractable, even for a relatively large number of dynamical variables, allowing its software realization, named Sir Isaac, to make successful predictions even when important dynamic variables are unobserved. For example, it matches the known phase space structure for simulated planetary motion data, avoids overfitting in a complex biological signaling system, and produces accurate predictions for a yeast glycolysis model with only tens of data points and over half of the interacting species unobserved.

We consider the NP-hard problem of minimizing a separable concave quadratic function over the integral points in a polyhedron, and we denote by D the largest absolute value of the subdeterminants of the constraint matrix. In this paper we give an algorithm that finds an epsilon-approximate solution for this problem by solving a number of integer linear programs whose constraint matrices have subdeterminants bounded by D in absolute value. The number of these integer linear programs is polynomial in the dimension n, in D and in 1/epsilon, provided that the number k of variables that appear nonlinearly in the objective is fixed. As a corollary, we obtain the first polynomial-time approximation algorithm for separable concave integer quadratic programming with D at most two and k fixed. In the totally unimodular case D=1, we give an improved algorithm that only needs to solve a number of linear programs that is polynomial in 1/epsilon and is independent on n, provided that k is fixed.

Learning good representations of historical contexts is one of the core

challenges of reinforcement learning (RL) in partially observable environments.

While self-predictive auxiliary tasks have been shown to improve performance in

fully observed settings, their role in partial observability remains

underexplored. In this empirical study, we examine the effectiveness of

self-predictive representation learning via future prediction, i.e., predicting

next-step observations as an auxiliary task for learning history

representations, especially in environments with long-term dependencies. We

test the hypothesis that future prediction alone can produce representations

that enable strong RL performance. To evaluate this, we introduce

DRL2, an approach that explicitly decouples representation learning

from reinforcement learning, and compare this approach to end-to-end training

across multiple benchmarks requiring long-term memory. Our findings provide

evidence that this hypothesis holds across different network architectures,

reinforcing the idea that future prediction performance serves as a reliable

indicator of representation quality and contributes to improved RL performance.

The preservation of soil health is a critical challenge in the 21st century due to its significant impact on agriculture, human health, and biodiversity. We provide the first deep investigation of the predictive potential of machine learning models to understand the connections between soil and biological phenotypes. We investigate an integrative framework performing accurate machine learning-based prediction of plant phenotypes from biological, chemical, and physical properties of the soil via two models: random forest and Bayesian neural network. We show that prediction is improved when incorporating environmental features like soil physicochemical properties and microbial population density into the models, in addition to the microbiome information. Exploring various data preprocessing strategies confirms the significant impact of human decisions on predictive performance. We show that the naive total sum scaling normalization that is commonly used in microbiome research is not the optimal strategy to maximize predictive power. Also, we find that accurately defined labels are more important than normalization, taxonomic level or model characteristics. In cases where humans are unable to classify samples accurately, machine learning model performance is limited. Lastly, we provide domain scientists via a full model selection decision tree to identify the human choices that optimize model prediction power. Our work is accompanied by open source reproducible scripts (this https URL) for maximum outreach among the microbiome research community.

Concepts, both abstract and concrete, elicit a distribution of association

strengths across perceptual color space, which influence aspects of visual

cognition ranging from object recognition to interpretation of information

visualizations. While prior work has hypothesized that color-concept

associations may be learned from the cross-modal statistical structure of

experience, it has been unclear whether natural environments possess such

structure or, if so, whether learning systems are capable of discovering and

exploiting it without strong prior constraints. We addressed these questions by

investigating the ability of GPT-4, a multimodal large language model, to

estimate human-like color-concept associations without any additional training.

Starting with human color-concept association ratings for 71 color set spanning

perceptual color space (\texttt{UW-71}) and concepts that varied in

abstractness, we assessed how well association ratings generated by GPT-4 could

predict human ratings. GPT-4 ratings were correlated with human ratings, with

performance comparable to state-of-the-art methods for automatically estimating

color-concept associations from images. Variability in GPT-4's performance

across concepts could be explained by specificity of the concept's

color-concept association distribution. This study suggests that high-order

covariances between language and perception, as expressed in the natural

environment of the internet, contain sufficient information to support learning

of human-like color-concept associations, and provides an existence proof that

a learning system can encode such associations without initial constraints. The

work further shows that GPT-4 can be used to efficiently estimate distributions

of color associations for a broad range of concepts, potentially serving as a

critical tool for designing effective and intuitive information visualizations.

We propose a stochastic approximation method for approximating the efficient

frontier of chance-constrained nonlinear programs. Our approach is based on a

bi-objective viewpoint of chance-constrained programs that seeks solutions on

the efficient frontier of optimal objective value versus risk of constraint

violation. To this end, we construct a reformulated problem whose objective is

to minimize the probability of constraints violation subject to deterministic

convex constraints (which includes a bound on the objective function value). We

adapt existing smoothing-based approaches for chance-constrained problems to

derive a convergent sequence of smooth approximations of our reformulated

problem, and apply a projected stochastic subgradient algorithm to solve it. In

contrast with exterior sampling-based approaches (such as sample average

approximation) that approximate the original chance-constrained program with

one having finite support, our proposal converges to stationary solutions of a

smooth approximation of the original problem, thereby avoiding poor local

solutions that may be an artefact of a fixed sample. Our proposal also includes

a tailored implementation of the smoothing-based approach that chooses key

algorithmic parameters based on problem data. Computational results on four

test problems from the literature indicate that our proposed approach can

efficiently determine good approximations of the efficient frontier.

23 Jul 2022

Microbiome data require statistical models that can simultaneously decode

microbes' reaction to the environment and interactions among microbes. While a

multiresponse linear regression model seems like a straight-forward solution,

we argue that treating it as a graphical model is flawed given that the

regression coefficient matrix does not encode the conditional dependence

structure between response and predictor nodes as it does not represent the

adjacency matrix. This observation is especially important in biological

settings when we have prior knowledge on the edges from specific experimental

interventions that can only be properly encoded under a conditional dependence

model. Here, we propose a chain graph model with two sets of nodes (predictors

and responses) whose solution yields a graph with edges that indeed represent

conditional dependence and thus, agrees with the experimenter's intuition on

the average behavior of nodes under treatment. The solution to our model is

sparse via Bayesian LASSO. In addition, we propose an adaptive extension so

that different shrinkage can be applied to different edges to incorporate

edge-specific prior knowledge. Our model is computationally inexpensive through

an efficient Gibbs sampling algorithm and can account for binary, counting and

compositional responses via appropriate hierarchical structure. We apply our

model to a human gut and a soil microbial compositional datasets and we

highlight that CG-LASSO can estimate biologically meaningful network structures

in the data. The CG-LASSO software is available as an R package at

this https URL

17 Dec 2017

In this paper, we study the minimax rates and provide an implementable convex algorithm for Poisson inverse problems under weak sparsity and physical constraints. In particular we assume the model yi∼\mboxPoisson(Tai⊤f∗) for 1≤i≤n where T∈R+ is the intensity, and we impose weak sparsity on f∗∈Rp by assuming f∗ lies in an ℓq-ball when rotated according to an orthonormal basis D∈Rp×p. In addition, since we are modeling real physical systems we also impose positivity and flux-preserving constraints on the matrix A=[a1,a2,...,an]⊤ and the function f∗. We prove minimax lower bounds for this model which scale as Rq(Tlogp)1−q/2 where it is noticeable that the rate depends on the intensity T and not the sample size n. We also show that a ℓ1-based regularized least-squares estimator achieves this minimax lower bound, provided a suitable restricted eigenvalue condition is satisfied. Finally we prove that provided n≥K~logp where K~=O(Rq(Tlogp)−q/2) represents an approximate sparsity level, our restricted eigenvalue condition and physical constraints are satisfied for random bounded ensembles. We also provide numerical experiments that validate our mean-squared error bounds. Our results address a number of open issues from prior work on Poisson inverse problems that focuses on strictly sparse models and does not provide guarantees for convex implementable algorithms.

We discuss a set of computational techniques, called Inductive Game Theory, for extracting strategic decision-making rules from time series data and constructing probabilistic social circuits. We construct these circuits by connecting component individuals and groups with strategies in a game and propose an inductive approach to reconstructing the edges. We demonstrate this approach with conflict behavior in a society of pigtailed macaques by identifying significant patterns in decision-making by individuals. With the constructed circuit, we then capture macroscopic features of the system that were not specified in the construction of the initial circuit, providing a mapping between individual level behaviors to collective behaviors over the scale of the group. We extend on previous work in Inductive Game Theory by more efficiently searching the space of possible strategies by grouping individuals into socially relevant sets to produce a more efficient, parsimonious specification of the underlying interactions between components. We discuss how we reduce the dimensionality of these circuits using coarse-graining or compression to build cognitive effective theories for collective behavior.

I consider the many ways in which evolved information-flows are restricted and metabolic resources protected and hidden -- the thesis of living phenomena as evolutionary cryptosystems. I present the information theory of secrecy systems and discuss mechanisms acquired by evolved lineages that encrypt sensitive heritable information with random keys. I explore the idea that complexity science is a cryptographic discipline as "frozen accidents", or various forms of regularized randomness, historically encrypt adaptive dynamics.

Michigan State University

Michigan State University Harvard University

Harvard University Carnegie Mellon UniversityShenzhen Research Institute of Big Data

Carnegie Mellon UniversityShenzhen Research Institute of Big Data University of Southern California

University of Southern California University of MichiganUniversity of TasmaniaHarvard Medical School

University of MichiganUniversity of TasmaniaHarvard Medical School University of Pennsylvania

University of Pennsylvania Johns Hopkins University

Johns Hopkins University University of Wisconsin-Madison

University of Wisconsin-Madison Princeton UniversityDalhousie UniversityUniversity of New HampshireBrigham and Women’s HospitalMorgridge Institute for ResearchUniversity of Maryland School of MedicineUniversity Hospital HeidelbergWisconsin Institute for DiscoveryPhilips HealthcareMoffitt Cancer CenterUniversity of Colorado School of MedicinePhilips Research North AmericaKlaus Tschira Institute for Integrative Computational CardiologyBioeconomy.XYZIn-Q-Tel LabsVan Andel Research Institute

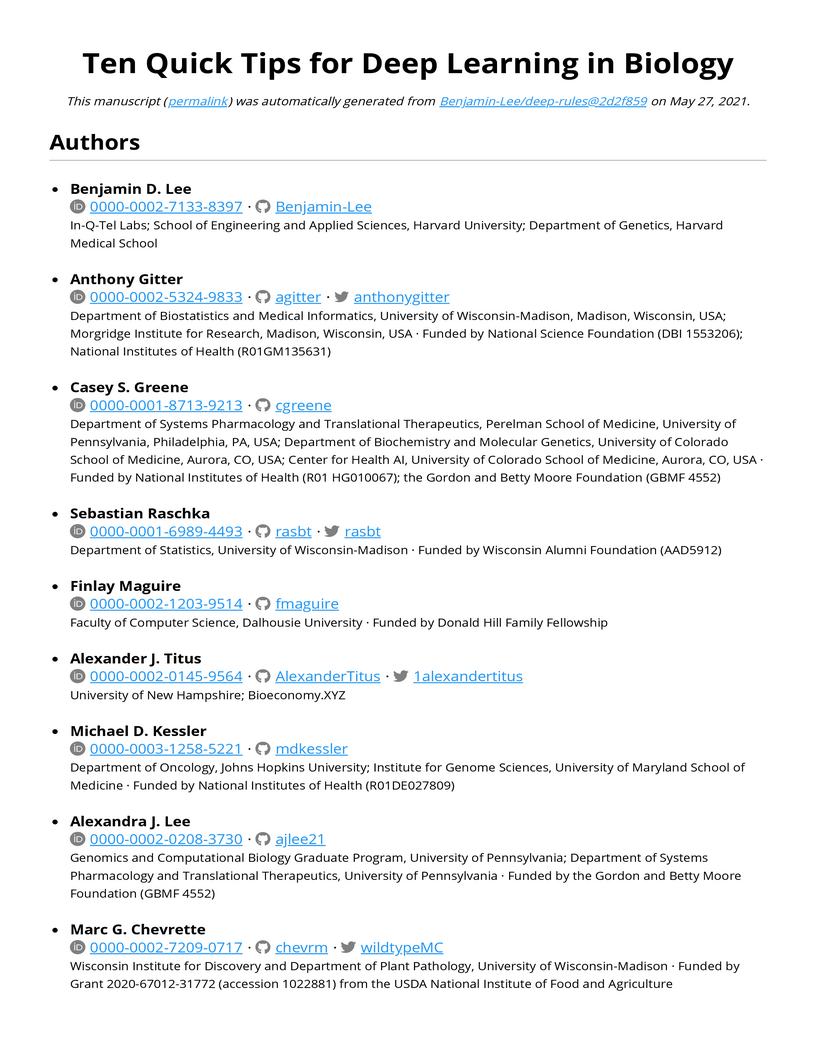

Princeton UniversityDalhousie UniversityUniversity of New HampshireBrigham and Women’s HospitalMorgridge Institute for ResearchUniversity of Maryland School of MedicineUniversity Hospital HeidelbergWisconsin Institute for DiscoveryPhilips HealthcareMoffitt Cancer CenterUniversity of Colorado School of MedicinePhilips Research North AmericaKlaus Tschira Institute for Integrative Computational CardiologyBioeconomy.XYZIn-Q-Tel LabsVan Andel Research InstituteMachine learning is a modern approach to problem-solving and task automation. In particular, machine learning is concerned with the development and applications of algorithms that can recognize patterns in data and use them for predictive modeling. Artificial neural networks are a particular class of machine learning algorithms and models that evolved into what is now described as deep learning. Given the computational advances made in the last decade, deep learning can now be applied to massive data sets and in innumerable contexts. Therefore, deep learning has become its own subfield of machine learning. In the context of biological research, it has been increasingly used to derive novel insights from high-dimensional biological data. To make the biological applications of deep learning more accessible to scientists who have some experience with machine learning, we solicited input from a community of researchers with varied biological and deep learning interests. These individuals collaboratively contributed to this manuscript's writing using the GitHub version control platform and the Manubot manuscript generation toolset. The goal was to articulate a practical, accessible, and concise set of guidelines and suggestions to follow when using deep learning. In the course of our discussions, several themes became clear: the importance of understanding and applying machine learning fundamentals as a baseline for utilizing deep learning, the necessity for extensive model comparisons with careful evaluation, and the need for critical thought in interpreting results generated by deep learning, among others.

16 Apr 2019

Input constrained Model predictive control (MPC) includes an optimization problem which should iteratively be solved at each time-instance. The well-known drawback of model predictive control is the computational cost of the optimization problem. This results in restriction of the application of MPC to systems with slow dynamics, e.g., process control systems and small-scale problems. Therefore, implementing fast numerical optimization algorithms has been a point of interest. Interior-point methods are proved to be appropriate algorithms, from computational cost point-of-vie, to solve input-constrained MPC. In this paper first a modified version of Mehrotra's predictor-corrector algorithm, a famous interior-point algorithm, is extended for quadratic programming problems and then is applied to the constrained model predictive control problems. Results show that as expected, the new algorithm is faster than Matlab solver's algorithm.

There are no more papers matching your filters at the moment.