Ask or search anything...

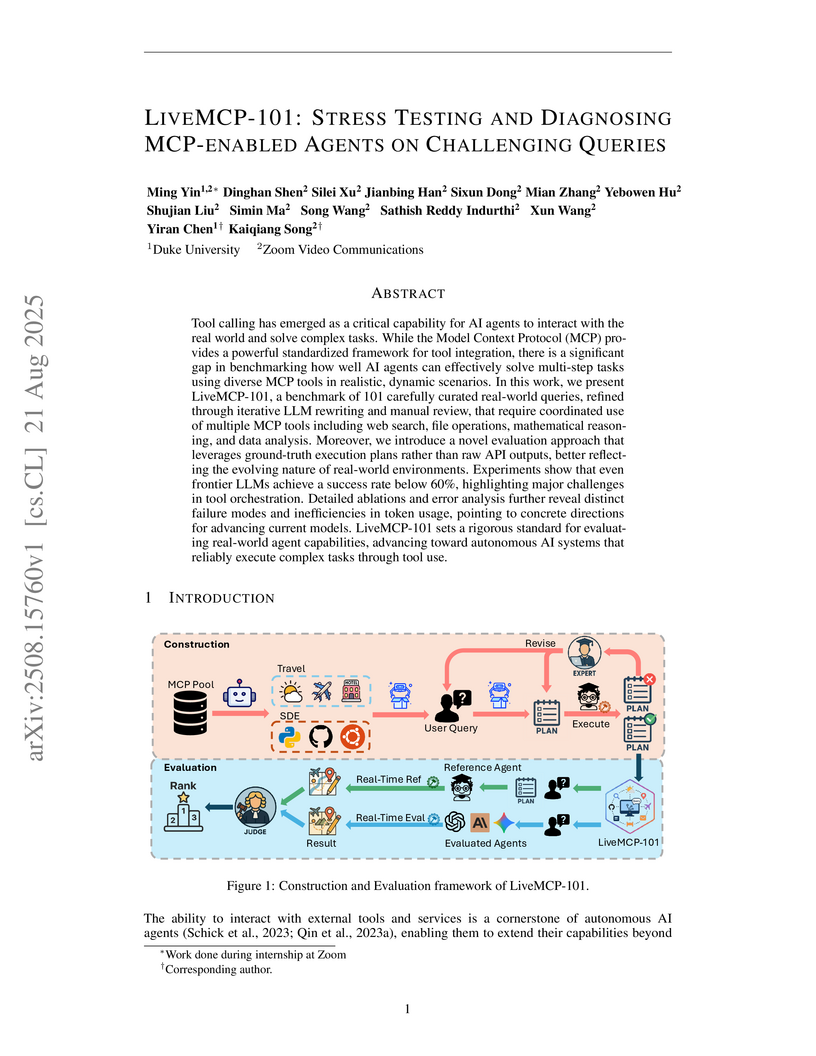

Researchers from Duke University and Zoom Video Communications introduced LiveMCP-101, a benchmark for evaluating AI agents on complex, dynamic tool-use tasks. The work demonstrates that even frontier large language models achieve less than 60% task success, often failing due to semantic parameter errors and challenges in coordinating multiple tools in realistic settings.

View blogWeighted Preference Optimization (WPO) enhances Reinforcement Learning from Human Feedback (RLHF) by intelligently reweighting off-policy preference pairs based on the current policy's generation probability, effectively simulating on-policy learning. This approach improves upon Direct Preference Optimization (DPO), achieving up to 5.6% higher win rates against GPT-4-turbo on Alpaca Eval 2 and reaching a state-of-the-art 76.7% win rate with a Gemma-2-9b-it base model.

View blogThe C2C framework models intelligent communication in multi-agent systems, enabling LLM agents to collaborate more efficiently on complex tasks. It introduces a Sequential Action Framework and an Alignment Factor to optimize communication decisions, reducing task completion time by up to 26% and improving overall work efficiency in software engineering workflows.

View blogResearchers from Zoom Video Communications and KIT conducted the first systematic study of online meeting summarization, introducing five policies and novel metrics like Expected Latency and R1-AUC for evaluating real-time performance. They demonstrated that dynamically segmented summarization strategies, such as the Sliding Window policy, achieved better human-rated quality for both final and intermediate summaries with favorable latency, despite sometimes lower ROUGE scores than fixed-length approaches.

View blog Duke University

Duke University

Princeton University

Princeton University

Emory University

Emory University

University of Washington

University of Washington