i2Cat Foundation

Small Unmanned Aerial Vehicles (UAVs) exhibit immense potential for navigating indoor and hard-to-reach areas, yet their significant constraints in payload and autonomy have largely prevented their use for complex tasks like high-quality 3-Dimensional (3D) reconstruction. To overcome this challenge, we introduce a novel system architecture that enables fully autonomous, high-fidelity 3D scanning of static objects using UAVs weighing under 100 grams. Our core innovation lies in a dual-reconstruction pipeline that creates a real-time feedback loop between data capture and flight control. A near-real-time (near-RT) process uses Structure from Motion (SfM) to generate an instantaneous pointcloud of the object. The system analyzes the model quality on the fly and dynamically adapts the UAV's trajectory to intelligently capture new images of poorly covered areas. This ensures comprehensive data acquisition. For the final, detailed output, a non-real-time (non-RT) pipeline employs a Neural Radiance Fields (NeRF)-based Neural 3D Reconstruction (N3DR) approach, fusing SfM-derived camera poses with precise Ultra Wide-Band (UWB) location data to achieve superior accuracy. We implemented and validated this architecture using Crazyflie 2.1 UAVs. Our experiments, conducted in both single- and multi-UAV configurations, conclusively show that dynamic trajectory adaptation consistently improves reconstruction quality over static flight paths. This work demonstrates a scalable and autonomous solution that unlocks the potential of miniaturized UAVs for fine-grained 3D reconstruction in constrained environments, a capability previously limited to much larger platforms.

The complexity of emerging sixth-generation (6G) wireless networks has sparked an upsurge in adopting artificial intelligence (AI) to address the challenges in network management and resource allocation under strict service level agreements (SLAs). This inaugurates the era of massive network slicing as a distributive technology in which tenancy is extended to the final consumer through the pervasive digitalization of vertical immersive use cases. Despite the promising performance of deep reinforcement learning (DRL) in network slicing, its lack of transparency and interpretability, together with concerns about opaque models, impedes users from trusting the DRL agent's decisions or predictions. This problem becomes even more pronounced when there is a need to provision highly reliable and secure services. Leveraging eXplainable AI (XAI) in conjunction with an explanation-guided approach, we propose an eXplainable reinforcement learning (XRL) scheme to surmount the opaqueness of black-box DRL. The core concept behind the proposed method is the intrinsic interpretability of the reward hypothesis, which aims to encourage DRL agents to learn the best actions for specific network slice states while coping with conflict-prone and complex relations of state-action pairs. To validate the proposed framework, we target a resource allocation optimization problem where multi-agent XRL strives to optimally allocate the available radio resources to meet the SLA requirements of slices. Finally, we present numerical results to showcase the superiority of the adopted XRL approach over the DRL baseline. As far as we know, this is the first work that studies the feasibility of an explanation-guided DRL approach in the context of 6G networks.

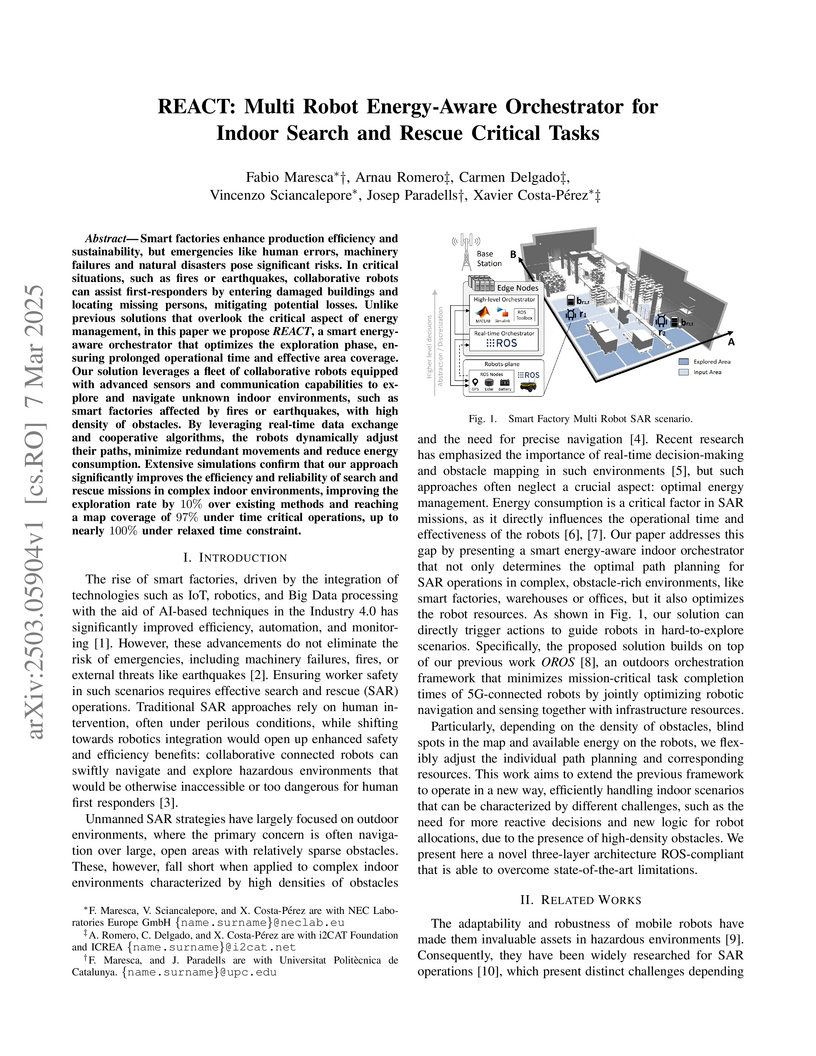

Smart factories enhance production efficiency and sustainability, but emergencies like human errors, machinery failures and natural disasters pose significant risks. In critical situations, such as fires or earthquakes, collaborative robots can assist first responders by entering damaged buildings and locating missing persons, mitigating potential losses. Unlike previous solutions that overlook the critical aspect of energy management, in this paper we propose REACT, a smart energy-aware orchestrator that optimizes the exploration phase, ensuring prolonged operational time and effective area coverage. Our solution leverages a fleet of collaborative robots equipped with advanced sensors and communication capabilities to explore and navigate unknown indoor environments with a high density of obstacles, such as smart factories affected by fires or earthquakes. By leveraging real-time data exchange and cooperative algorithms, the robots dynamically adjust their paths, minimize redundant movements and reduce energy consumption. Extensive simulations confirm that our approach significantly improves the efficiency and reliability of search and rescue missions in complex indoor environments, improving the exploration rate by 10% over existing methods and reaching a map coverage of 97% under time-critical operations, and up to nearly 100% under relaxed time constraints.

The high energy footprint of 5G base stations, particularly the radio units (RUs), poses a significant environmental and economic challenge. We introduce Kairos, a novel approach to maximize the energy-saving potential of O-RAN's Advanced Sleep Modes (ASMs). Unlike state-of-the-art solutions, which often rely on complex ASM selection algorithms unsuitable for time-constrained base stations and fail to guarantee stringent QoS demands, Kairos offers a simple yet effective joint ASM selection and radio scheduling policy capable of real-time operation. This policy is then optimized using a data-driven algorithm within an xApp, which enables several key innovations: (i) a dimensionality-invariant encoder to handle variable input sizes (e.g., time-varying network slices), (ii) distributional critics to accurately model QoS metrics and ensure constraint satisfaction, and (iii) a single-actor-multiple-critic architecture to effectively manage multiple constraints. Through experimental analysis on a commercial RU and trace-driven simulations, we demonstrate Kairos's potential to achieve energy reductions ranging between 15% and 72% while meeting QoS requirements, offering a practical solution for cost- and energy-efficient 5G networks.

In this paper, we propose a novel cloud-native architecture for collaborative agentic network slicing. Our approach addresses the challenge of managing shared infrastructure, particularly CPU resources, across multiple network slices with heterogeneous requirements. Each network slice is controlled by a dedicated agent operating within a Dockerized environment, ensuring isolation and scalability. The agents dynamically adjust CPU allocations based on real-time traffic demands, optimizing the performance of the overall system. A key innovation of this work is the development of emergent communication among the agents. Through their interactions, the agents autonomously establish a communication protocol that enables them to coordinate more effectively, optimizing resource allocations in response to dynamic traffic demands. In tests based on synthetic traffic modeled on real-world conditions with varying load patterns, the proposed architecture proved effective in handling diverse traffic types, including eMBB, URLLC, and mMTC, by adjusting resource allocations to meet the strict requirements of each slice. Additionally, the cloud-native design enables real-time monitoring and analysis through Prometheus and Grafana, ensuring the system's adaptability and efficiency in dynamic network environments. The agents learned to maximize the use of the shared infrastructure with a conflict rate of less than 3%.

Next-generation open radio access networks (O-RAN) continuously stream tens of key performance indicators (KPIs) together with raw in-phase/quadrature (IQ) samples, yielding ultra-high-dimensional, non-stationary time series that overwhelm conventional transformer architectures. We introduce a reservoir-augmented masked autoencoding transformer (RA-MAT). This time series foundation model employs echo state network (ESN) computing with masked autoencoding to satisfy the stringent latency, energy efficiency, and scalability requirements of 6G O-RAN testing. A fixed, randomly initialized ESN rapidly projects each temporal patch into a rich dynamical embedding without backpropagation through time, converting the quadratic self-attention bottleneck into a lightweight linear operation. These embeddings drive a patch-wise masked autoencoder that reconstructs 30% randomly masked patches, compelling the encoder to capture both local dynamics and long-range structure from unlabeled data. After self-supervised pre-training, RA-MAT is fine-tuned with a shallow task head while keeping the reservoir and most transformer layers frozen, enabling low-footprint adaptation to diverse downstream tasks such as O-RAN KPI forecasting. In a comprehensive O-RAN KPI case study, RA-MAT achieved sub-0.06 mean squared error (MSE) on several continuous and discrete KPIs. This work positions RA-MAT as a practical pathway toward real-time, foundation-level analytics in future 6G networks.
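The two mechanisms named in this abstract, a fixed random reservoir that embeds each temporal patch and the random masking of 30% of the patches, can be illustrated with a minimal sketch. Everything below is an assumption made for illustration rather than the RA-MAT implementation: the function names, the reservoir size, the patch length, and the crude weight scaling used to keep the reservoir stable are all hypothetical, and the transformer encoder and decoder are omitted.

```python
import math
import random


def make_reservoir(n_in, n_res, scale=0.5, seed=0):
    """Create fixed random weights; they are never trained."""
    rng = random.Random(seed)
    w_in = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_res)]
    # Dividing by n_res keeps each row's absolute sum below `scale` (< 1),
    # a simple stand-in for the usual spectral-radius control of an ESN.
    w_res = [[rng.uniform(-1, 1) * scale / n_res for _ in range(n_res)]
             for _ in range(n_res)]
    return w_in, w_res


def embed_patch(patch, w_in, w_res):
    """Run one patch (a list of time steps) through the reservoir and
    return the final reservoir state as the patch embedding."""
    n_res = len(w_res)
    state = [0.0] * n_res
    for x in patch:
        new_state = []
        for i in range(n_res):
            pre = sum(w_in[i][j] * x[j] for j in range(len(x)))
            pre += sum(w_res[i][k] * state[k] for k in range(n_res))
            new_state.append(math.tanh(pre))
        state = new_state
    return state


def split_patches(series, patch_len):
    """Cut a multivariate series into non-overlapping patches."""
    return [series[i:i + patch_len]
            for i in range(0, len(series) - patch_len + 1, patch_len)]


def mask_indices(n_patches, ratio=0.3, seed=0):
    """Pick the patch indices hidden from the encoder (30% by default)."""
    rng = random.Random(seed)
    k = max(1, round(ratio * n_patches))
    return sorted(rng.sample(range(n_patches), k))


# Toy series: 100 time steps of 2 synthetic "KPIs".
series = [[math.sin(0.1 * t), math.cos(0.05 * t)] for t in range(100)]
patches = split_patches(series, patch_len=10)
w_in, w_res = make_reservoir(n_in=2, n_res=8)
embeddings = [embed_patch(p, w_in, w_res) for p in patches]
masked = mask_indices(len(patches))
visible = [e for i, e in enumerate(embeddings) if i not in masked]
```

In this sketch the only learned components would be whatever consumes `visible` and reconstructs the masked patches; the reservoir itself needs no backpropagation through time because its weights stay fixed.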

In the context of sixth-generation (6G) networks, where diverse network slices coexist, the adoption of AI-driven zero-touch management and orchestration (MANO) becomes crucial. However, ensuring the trustworthiness of AI black boxes in real deployments is challenging. Explainable AI (XAI) tools can play a vital role in establishing transparency among the stakeholders in the slicing ecosystem. Yet there is a trade-off between AI performance and explainability, which poses a dilemma for trustworthy 6G network slicing: stakeholders require both high-performing AI models for efficient resource allocation and explainable decision-making to ensure fairness, accountability, and compliance. To balance this trade-off, and inspired by closed-loop automation and XAI methodologies, this paper presents a novel explanation-guided in-hoc federated learning (FL) approach in which a constrained resource allocation model and an explainer exchange, in a closed-loop (CL) fashion, soft attributions of the features as well as inference predictions to achieve transparent 6G network slicing resource management in a RAN-Edge setup under non-independent identically distributed (non-IID) datasets. In particular, we quantitatively validate the faithfulness of the explanations via the so-called attribution-based confidence metric, which is included as a constraint to guide the overall training process in the run-time FL optimization task. In this respect, Integrated Gradients (IG), Input × Gradient, and SHAP are used to generate the attributions for our proposed in-hoc scheme. Simulation results under the different methods confirm its success in tackling the performance-explainability trade-off and its superiority over the unconstrained Integrated Gradients post-hoc FL baseline.
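Of the attribution methods named in this abstract, Input × Gradient is the simplest to illustrate: each feature's attribution is its value multiplied by the model's gradient with respect to that feature. The sketch below is only an illustration under stated assumptions. The toy linear model, its weights, and the feature values are hypothetical, the gradient is approximated by finite differences instead of automatic differentiation, and the paper's attribution-based confidence metric is not reproduced here.

```python
def numerical_gradient(f, x, eps=1e-6):
    """Central-difference estimate of the gradient of f at x."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        up[i] += eps
        down = list(x)
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def input_x_gradient(f, x, eps=1e-6):
    """Attribution of feature i is x[i] * df/dx[i]."""
    return [xi * gi for xi, gi in zip(x, numerical_gradient(f, x, eps))]


def toy_model(x):
    """Hypothetical stand-in for a resource allocation predictor."""
    weights = [0.8, -0.5, 0.1]
    return sum(w * v for w, v in zip(weights, x))


features = [2.0, 1.0, 4.0]
attributions = input_x_gradient(toy_model, features)
```

For a linear model without a bias term, the attributions sum to the model output, which makes this toy case easy to check by hand; real models only satisfy this approximately, if at all.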

The maturity and commercial roll-out of 5G networks and their deployment for private networks make 5G a key enabler for various vertical industries and applications, including robotics. Providing ultra-low latency, high data rates, and ubiquitous coverage and wireless connectivity, 5G fully unlocks the potential of robot autonomy and boosts emerging robotic applications, particularly in the domain of autonomous mobile robots. Ensuring seamless, efficient, and reliable navigation and operation of robots within a 5G network requires a clear understanding of the expected network quality in the deployment environment. However, obtaining real-time insights into network conditions, particularly in highly dynamic environments, presents a significant and practical challenge. In this paper, we present a novel framework for building a Network Digital Twin (NDT) using real-time data collected by robots. This framework provides a comprehensive solution for monitoring, controlling, and optimizing robotic operations in dynamic network environments. We develop a pipeline integrating robotic data into the NDT, demonstrating its evolution with real-world robotic traces. We evaluate its performance in a radio-aware navigation use case, highlighting its potential to enhance energy efficiency and reliability for 5G-enabled robotic operations.

The tremendous hype around autonomous driving is eagerly calling for emerging and novel technologies to support advanced mobility use cases. As car manufacturers keep developing SAE level 3+ systems to improve the safety and comfort of passengers, traffic authorities need to establish new procedures to manage the transition from human-driven to fully autonomous vehicles while providing a feedback-loop mechanism to fine-tune envisioned autonomous systems. Thus, a way to automatically profile autonomous vehicles and differentiate them from human-driven ones is a must. In this paper, we present a fully-fledged framework that monitors active vehicles using camera images and state information in order to determine whether vehicles are autonomous, without requiring any active notification from the vehicles themselves. Essentially, it builds on the cooperation among vehicles, which share the data they acquire on the road to feed a machine learning model that identifies autonomous cars. We extensively tested our solution and created the NexusStreet dataset by means of the CARLA simulator, employing an autonomous driving control agent and a steering wheel maneuvered by licensed drivers. Experiments show it is possible to discriminate the two behaviors by analyzing video clips with an accuracy of 80%, which improves up to 93% when the target state information is available. Lastly, we deliberately degraded the state to observe how the framework performs under non-ideal data collection conditions.

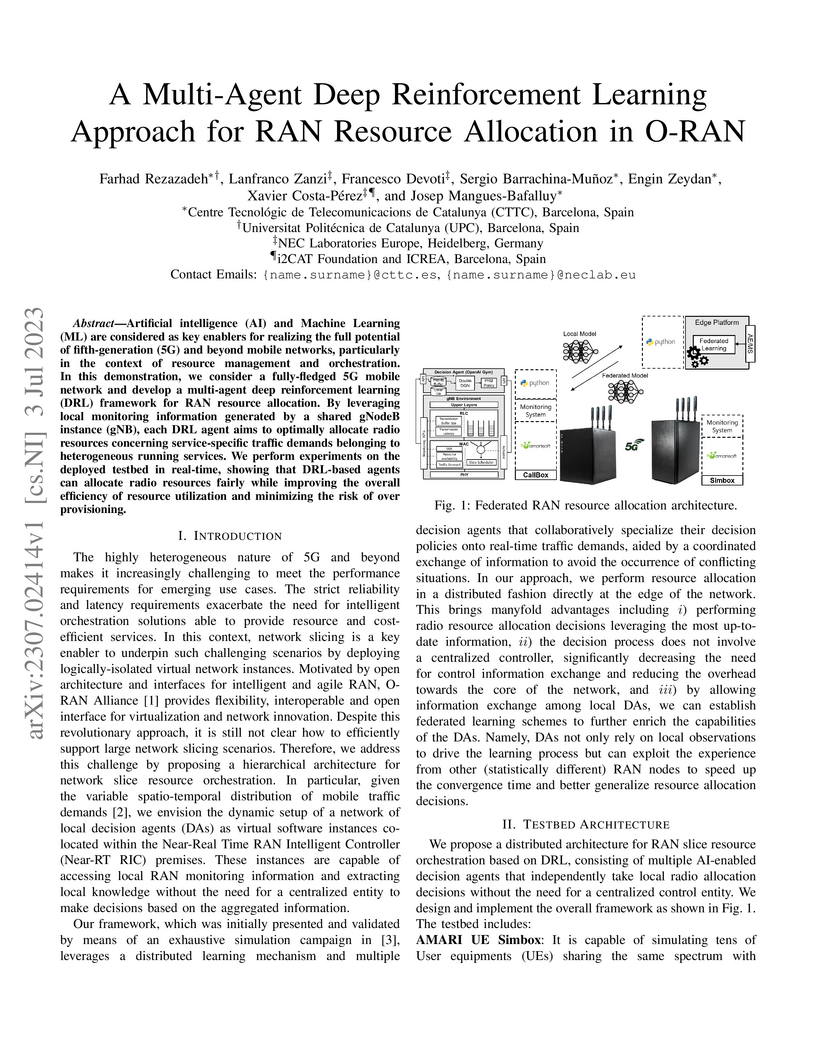

Artificial intelligence (AI) and machine learning (ML) are considered key enablers for realizing the full potential of fifth-generation (5G) and beyond mobile networks, particularly in the context of resource management and orchestration. In this demonstration, we consider a fully-fledged 5G mobile network and develop a multi-agent deep reinforcement learning (DRL) framework for RAN resource allocation. By leveraging local monitoring information generated by a shared gNodeB instance (gNB), each DRL agent aims to optimally allocate radio resources according to the service-specific traffic demands of heterogeneous running services. We perform experiments on the deployed testbed in real time, showing that DRL-based agents can allocate radio resources fairly while improving the overall efficiency of resource utilization and minimizing the risk of over-provisioning.

21 May 2025

In the era of Industry 4.0, precise indoor localization is vital for automation and efficiency in smart factories. Reconfigurable Intelligent Surfaces (RIS) are emerging as key enablers in 6G networks for joint sensing and communication. However, RIS faces significant challenges in Non-Line-of-Sight (NLOS) and multipath propagation, particularly in localization scenarios, where detecting NLOS conditions is crucial for ensuring not only reliable results and increased connectivity but also the safety of smart factory personnel. This study introduces an AI-assisted framework employing a Convolutional Neural Network (CNN) customized for accurate Line-of-Sight (LOS) and NLOS classification to enhance RIS-based localization using measured, synthetic, mixed-measured, and mixed-synthetic experimental data, that is, original, augmented, slightly noisy, and highly noisy data, respectively. Validated through such data from three different environments, the proposed customized-CNN (cCNN) model achieves 95.0%-99.0% accuracy, outperforming standard pre-trained models like Visual Geometry Group 16 (VGG-16), which reaches an accuracy of 85.5%-88.0%. By addressing RIS limitations in NLOS scenarios, this framework offers scalable and high-precision localization solutions for 6G-enabled smart factories.

Reconfigurable Intelligent Surfaces (RISs) are considered one of the key disruptive technologies towards future 6G networks. RISs revolutionize the traditional wireless communication paradigm by controlling the wave propagation properties of the impinging signals as required. A major roadblock for RISs, however, is the need for a fast and complex control channel to continuously adapt to the ever-changing wireless channel conditions. In this paper, we ask ourselves the question: Would it be feasible to remove the need for control channels for RISs? We analyze the feasibility of devising Self-Configuring Smart Surfaces that can be easily and seamlessly installed throughout the environment, following the new Internet-of-Surfaces (IoS) paradigm, without requiring modifications of the deployed mobile network. To this aim, we design MARISA, a self-configuring metasurfaces absorption and reflection solution, and show that it can achieve a better-than-expected performance rivaling that of control channel-driven RISs.

The delivery of high-quality, low-latency video streams is critical for remote autonomous vehicle control, where operators must intervene in real time. However, reliable video delivery over Fourth/Fifth-Generation (4G/5G) mobile networks is challenging due to signal variability, mobility-induced handovers, and transient congestion. In this paper, we present a comprehensive blueprint for an integrated video quality monitoring system, tailored to remote autonomous vehicle operation. Our proposed system includes subsystems for data collection onboard the vehicle, video capture and compression, data transmission to edge servers, real-time streaming data management, Artificial Intelligence (AI) model deployment and inference execution, and proactive decision-making based on predicted video quality. The AI models are trained on a hybrid dataset that combines field-trial measurements with synthetic stress segments and covers Long Short-Term Memory (LSTM), Gated Recurrent Unit (GRU), and encoder-only Transformer architectures. As a proof of concept, we benchmark 20 variants from these model classes together with feed-forward Deep Neural Network (DNN) and linear-regression baselines, reporting accuracy and inference latency. Finally, we study the trade-offs between onboard and edge-based inference. We further discuss the use of explainable AI techniques to enhance transparency and accountability during critical remote-control interventions. Our proactive approach to network adaptation and Quality of Experience (QoE) monitoring aims to enhance remote vehicle operation over next-generation wireless networks.

The emergence of the Non-Terrestrial Network (NTN) concept in recent years has revolutionized the space industry. This novel network architecture, composed of aircraft and spacecraft, is currently being standardized by the 3GPP. This standardization process follows dedicated phases in which experimentation with the technology is needed. Although some missions have been conducted to demonstrate specific and service-centric technologies, an open, flexible in-orbit infrastructure is needed to support this standardization process. This work presents the 6GStarLab mission, which aims to address this gap. Specifically, this mission envisions providing a 6U CubeSat as the main in-orbit infrastructure to which multiple technology validations can be uploaded. The concept of this mission is depicted. Additionally, this work presents the details of the satellite platform and the payload. The payload is designed to enable experimentation in multiple radio-frequency bands (i.e., UHF, S-, X-, and Ka-bands) and with an optical terminal. The launch of the satellite is scheduled for Q2 2025, and it will contribute to the standardization of future NTN architectures.

In this paper, we present two datasets that we make publicly available for research. The data is collected in a testbed comprised of a custom-made Reconfigurable Intelligent Surface (RIS) prototype and two regular OFDM transceivers within an anechoic chamber. First, we discuss the details of the testbed and equipment used, including insights about the design and implementation of our RIS prototype. We further present the methodology we employ to gather measurement samples, which consists of letting the RIS electronically steer the signal reflections from an OFDM transmitter toward a specific location. To this end, we evaluate a suitably designed configuration codebook and collect measurement samples of the received power with an OFDM receiver. Finally, we present the resulting datasets, their format, and examples of exploiting this data for research purposes.

Extended Reality (XR) enables a plethora of novel interactive shared experiences. Ideally, users are allowed to roam around freely, while audiovisual content is delivered wirelessly to their Head-Mounted Displays (HMDs). Therefore, truly immersive experiences will require massive amounts of data, in the range of tens of gigabits per second, to be delivered reliably at extremely low latencies. We identify Millimeter-Wave (mmWave) communications, at frequencies between 24 and 300 GHz, as a key enabler for such experiences. In this article, we show how the mmWave state of the art does not yet achieve sufficient performance, and identify several key active research directions expected to eventually pave the way for extremely-high-quality mmWave-enabled interactive multi-user XR.

27 Nov 2024

This paper focuses on enhancing the energy efficiency (EE) of a cooperative network featuring a "miniature" unmanned aerial vehicle (UAV) that operates at terahertz (THz) frequencies, utilizing holographic surfaces to improve the network's performance. Unlike traditional reconfigurable intelligent surfaces (RIS) that are typically used as passive relays to adjust signal reflections, this work introduces a novel concept: energy harvesting (EH) using reconfigurable holographic surfaces (RHS) mounted on the miniature UAV. In this system, a source node facilitates the simultaneous reception of information and energy signals by the UAV, with the harvested energy from the RHS being used by the UAV to transmit data to a specific destination. The EE optimization involves adjusting non-orthogonal multiple access (NOMA) power coefficients and the UAV's flight path, considering the peculiarities of the THz channel. The optimization problem is solved in two steps. Initially, the trajectory is refined using a successive convex approximation (SCA) method, followed by the adjustment of NOMA power coefficients through a quadratic transform technique. The effectiveness of the proposed algorithm is demonstrated through simulations, showing superior results when compared to baseline methods.
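As background on the quadratic transform step mentioned in this abstract, the generic single-ratio form of the technique can be written down; the abstract does not give the paper's exact formulation, so the symbols here are assumptions. Let $R(\mathbf{p}) \ge 0$ denote an achievable rate and $P(\mathbf{p}) > 0$ the consumed power as functions of the NOMA power coefficients $\mathbf{p}$, with the trajectory held fixed. The fractional EE objective is then replaced by an equivalent problem with an auxiliary variable $y$:

$$
\max_{\mathbf{p}} \; \frac{R(\mathbf{p})}{P(\mathbf{p})}
\quad\Longleftrightarrow\quad
\max_{\mathbf{p},\, y} \; 2y\sqrt{R(\mathbf{p})} - y^{2} P(\mathbf{p}),
\qquad
y^{\star} = \frac{\sqrt{R(\mathbf{p})}}{P(\mathbf{p})}.
$$

Substituting $y^{\star}$ back recovers $R(\mathbf{p})/P(\mathbf{p})$, so alternating between the closed-form update of $y$ and the maximization over $\mathbf{p}$ never decreases the original ratio. When $R$ is concave and $P$ is convex in $\mathbf{p}$, the inner problem for fixed $y$ is a concave maximization.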

Contemporary Virtual Reality (VR) setups often include an external source delivering content to a Head-Mounted Display (HMD). "Cutting the wire" in such setups and going truly wireless will require a wireless network capable of delivering enormous amounts of video data at an extremely low latency. The massive bandwidth of higher frequencies, such as the millimeter-wave (mmWave) band, can meet these requirements. Due to high attenuation and path loss in the mmWave frequencies, beamforming is essential. In wireless VR, where the antenna is integrated into the HMD, any head rotation also changes the antenna's orientation. As such, beamforming must adapt, in real-time, to the user's head rotations. An HMD's built-in sensors providing accurate orientation estimates may facilitate such rapid beamforming. In this work, we present coVRage, a receive-side beamforming solution tailored for VR HMDs. Using built-in orientation prediction present on modern HMDs, the algorithm estimates how the Angle of Arrival (AoA) at the HMD will change in the near future, and covers this AoA trajectory with a dynamically shaped oblong beam, synthesized using sub-arrays. We show that this solution can cover these trajectories with consistently high gain, even in light of temporally or spatially inaccurate orientational data.

An adaptive standardized protocol is essential for addressing inter-slice resource contention and conflict in network slicing. Traditional protocol standardization is a cumbersome task that yields hardcoded predefined protocols, resulting in increased costs and delayed rollout. Going beyond these limitations, this paper proposes a novel multi-agent deep reinforcement learning (MADRL) communication framework called standalone explainable protocol (STEP) for future sixth-generation (6G) open radio access network (O-RAN) slicing. As new conditions arise and affect network operation, resource orchestration agents adapt their communication messages to promote the emergence of a protocol on-the-fly, which enables the mitigation of conflict and resource contention between network slices. STEP weaves together the notion of information bottleneck (IB) theory with deep Q-network (DQN) learning concepts. By incorporating a stochastic bottleneck layer -- inspired by variational autoencoders (VAEs) -- STEP imposes an information-theoretic constraint for emergent inter-agent communication. This ensures that agents exchange concise and meaningful information, preventing resource waste and enhancing the overall system performance. The learned protocols enhance interpretability, laying a robust foundation for standardizing next-generation 6G networks. By considering an O-RAN compliant network slicing resource allocation problem, a conflict resolution protocol is developed. In particular, the results demonstrate that, on average, STEP reduces inter-slice conflicts by up to 6.06x compared to a predefined protocol method. Furthermore, in comparison with an MADRL baseline, STEP achieves 1.4x and 3.5x lower resource underutilization and latency, respectively.
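The stochastic bottleneck layer described in this abstract can be illustrated with the standard VAE-style construction: the sender outputs a mean and log-variance, the message is sampled with the reparameterization trick, and a KL term toward a standard normal prior penalizes messages that carry more information than needed. This is a minimal sketch under assumptions, not STEP itself: the function names, the weight `beta`, and the way the penalty is added to a temporal-difference loss are hypothetical.

```python
import math
import random


def sample_message(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over message dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))


def bottleneck_loss(td_loss, mu, log_var, beta=0.01):
    """Hypothetical combined objective: task loss plus a weighted
    information penalty on the inter-agent message."""
    return td_loss + beta * kl_to_standard_normal(mu, log_var)


rng = random.Random(0)
mu, log_var = [0.3, -1.2], [-0.5, 0.1]
message = sample_message(mu, log_var, rng)
loss = bottleneck_loss(td_loss=0.8, mu=mu, log_var=log_var)
```

A larger `beta` pushes messages toward the uninformative prior, so in this sketch it plays the role of the knob that trades message conciseness against task performance.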

The disruptive reconfigurable intelligent surface (RIS) technology is steadily gaining relevance as a key element in future 6G networks. However, a one-size-fits-all RIS hardware design is yet to be defined due to many practical considerations. A major roadblock for currently available RISs is their inability to concurrently operate at multiple carrier frequencies, which would lead to redundant installations to support multiple radio access technologies (RATs). In this paper, we introduce FABRIS, a novel and practical multi-frequency RIS design. FABRIS is able to dynamically operate across different radio frequencies (RFs) by means of frequency-tunable antennas as unit cells with virtually no performance degradation when conventional approaches to RIS design and optimization fail. Remarkably, our design preserves a sufficiently narrow beamwidth to avoid generating signal leakage in unwanted directions and a sufficiently high antenna efficiency in terms of scattering parameters. Indeed, FABRIS selects the RIS configuration that maximizes the signal at the intended target user equipment (UE) while minimizing leakage to non-intended neighboring UEs. Numerical results and full-wave simulations validate our proposed approach against a naive implementation that does not consider signal leakage resulting from multi-frequency antenna arrays.
