IMEC

Current speaker verification techniques rely on a neural network to extract speaker representations. The successful x-vector architecture is a Time Delay Neural Network (TDNN) that applies statistics pooling to project variable-length utterances into fixed-length speaker characterizing embeddings. In this paper, we propose multiple enhancements to this architecture based on recent trends in the related fields of face verification and computer vision. Firstly, the initial frame layers can be restructured into 1-dimensional Res2Net modules with impactful skip connections. Similarly to SE-ResNet, we introduce Squeeze-and-Excitation blocks in these modules to explicitly model channel interdependencies. The SE block expands the temporal context of the frame layer by rescaling the channels according to global properties of the recording. Secondly, neural networks are known to learn hierarchical features, with each layer operating on a different level of complexity. To leverage this complementary information, we aggregate and propagate features of different hierarchical levels. Finally, we improve the statistics pooling module with channel-dependent frame attention. This enables the network to focus on different subsets of frames during each of the channel's statistics estimation. The proposed ECAPA-TDNN architecture significantly outperforms state-of-the-art TDNN based systems on the VoxCeleb test sets and the 2019 VoxCeleb Speaker Recognition Challenge.
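The channel-dependent attentive statistics pooling described above can be illustrated with a minimal sketch. It assumes the per-channel attention scores have already been computed by some small network (not shown here); the function name `attentive_stats_pool` and the list-of-lists layout are hypothetical choices for illustration, not the authors' implementation.

```python
import math

def attentive_stats_pool(h, scores):
    """Pool frame-level features into one fixed-length vector.

    h[c][t]      : activation of channel c at frame t
    scores[c][t] : unnormalised attention score for channel c at frame t
                   (assumed to come from a learned layer, not modelled here)
    Returns [weighted means..., weighted standard deviations...].
    """
    means, stds = [], []
    for h_c, e_c in zip(h, scores):
        # Softmax over time, computed separately for every channel.
        m = max(e_c)
        w = [math.exp(e - m) for e in e_c]
        z = sum(w)
        alpha = [v / z for v in w]
        # Attention-weighted mean and standard deviation of this channel.
        mu = sum(a * v for a, v in zip(alpha, h_c))
        var = sum(a * v * v for a, v in zip(alpha, h_c)) - mu * mu
        means.append(mu)
        stds.append(math.sqrt(max(var, 0.0)))  # clamp tiny negative values
    return means + stds

# Two channels, three frames. Channel 0 attends uniformly,
# channel 1 attends almost exclusively to the last frame.
h = [[1.0, 2.0, 3.0], [0.0, 0.0, 4.0]]
scores = [[0.0, 0.0, 0.0], [0.0, 0.0, 100.0]]
embedding = attentive_stats_pool(h, scores)
```

Because the softmax is taken per channel, each channel can weight a different subset of frames, and the output length depends only on the number of channels, not on the utterance length.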

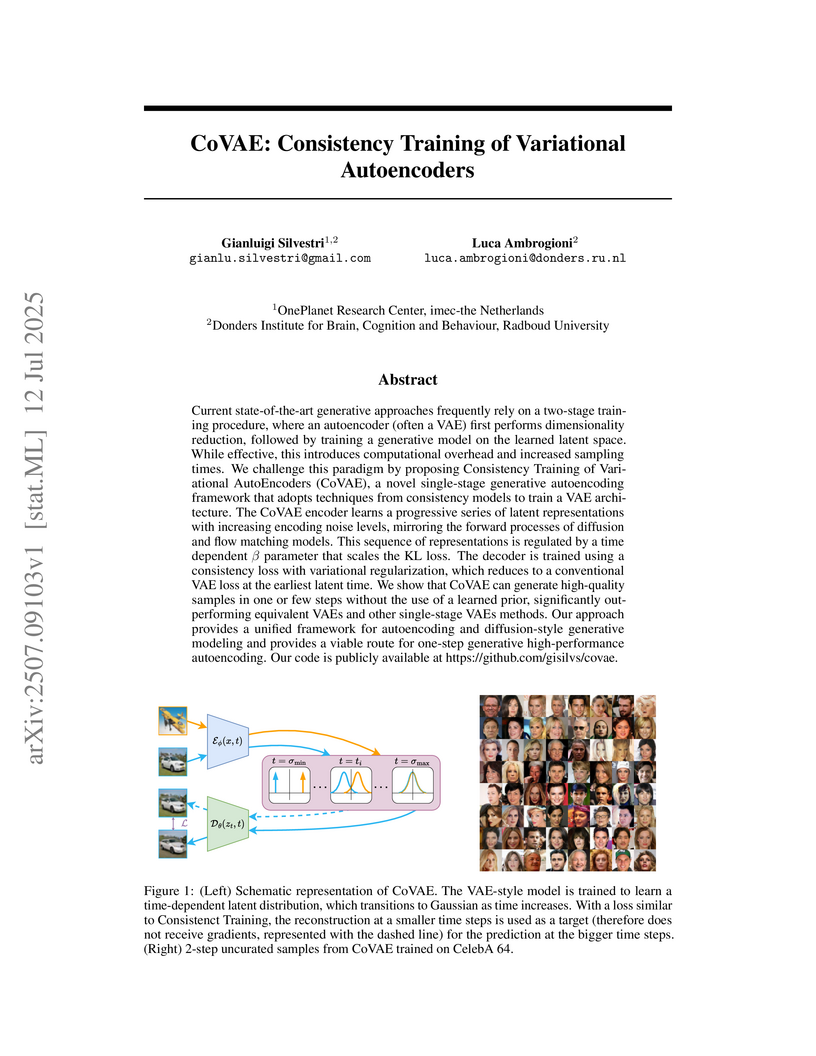

CoVAE unifies Variational Autoencoders and Consistency Models into a single-stage training framework, developed by researchers at the Donders Institute. This method enables high-quality image generation in one or few steps from a simple latent prior, demonstrating a 2-step FID of 9.82 on CIFAR-10, which significantly improves over traditional VAEs.

SkillMatch introduces the first publicly available benchmark for intrinsic evaluation of professional skill relatedness, along with a self-supervised learning approach that adapts pre-trained language models for this domain. The fine-tuned Sentence-BERT model achieved the highest performance with an AUC-PR of 0.969 and MRR of 0.357, demonstrating the effectiveness of domain-specific adaptation for skill representation.

The evolution of Advanced Driver Assistance Systems (ADAS) has increased the need for robust and generalizable algorithms for multi-object tracking. Traditional statistical model-based tracking methods rely on predefined motion models and assumptions about system noise distributions. Although computationally efficient, they often lack adaptability to varying traffic scenarios and require extensive manual design and parameter tuning. To address these issues, we propose a novel 3D multi-object tracking approach for vehicles, HybridTrack, which integrates a data-driven Kalman Filter (KF) within a tracking-by-detection paradigm. In particular, it learns the transition residual and Kalman gain directly from data, which eliminates the need for manual motion and stochastic parameter modeling. Validated on the real-world KITTI dataset, HybridTrack achieves 82.72% HOTA accuracy, significantly outperforming state-of-the-art methods. We also evaluate our method under different configurations, achieving the fastest processing speed of 112 FPS. Consequently, HybridTrack eliminates the dependency on scene-specific designs while improving performance and maintaining real-time efficiency. The code is publicly available at: this https URL
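To make the roles of the transition residual and the Kalman gain concrete, here is a minimal scalar Kalman filter step. It is only a sketch under stated assumptions: the state is one-dimensional with an identity motion model, and the `residual` and `gain` arguments are hypothetical hooks standing in for the quantities that HybridTrack learns from data. When they are omitted, the step falls back to the textbook filter.

```python
def kf_step(x, p, z, q, r, residual=0.0, gain=None):
    """One predict/update step of a scalar Kalman filter.

    x, p     : previous state estimate and its variance
    z        : new measurement
    q, r     : process and measurement noise variances
    residual : correction added to the predicted state (hypothetical hook
               for a learned transition residual)
    gain     : externally supplied Kalman gain (hypothetical hook for a
               learned gain); if None, the optimal analytic gain is used
    """
    # Predict with an identity motion model plus the optional residual.
    x_pred = x + residual
    p_pred = p + q
    # Analytic gain unless one is provided from outside.
    k = p_pred / (p_pred + r) if gain is None else gain
    # Update the state with the measurement innovation.
    x_new = x_pred + k * (z - x_pred)
    # Joseph-form variance update, valid for any gain value.
    p_new = (1.0 - k) ** 2 * p_pred + k ** 2 * r
    return x_new, p_new, k

x1, p1, k1 = kf_step(x=0.0, p=1.0, z=1.0, q=0.0, r=1.0)
x2, p2, k2 = kf_step(x=0.0, p=1.0, z=1.0, q=0.0, r=1.0, gain=1.0)
```

With a supplied gain of 1.0 the estimate jumps straight to the measurement, which shows how a gain produced by a model replaces the hand-tuned noise parameters `q` and `r` in deciding how much to trust each detection.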

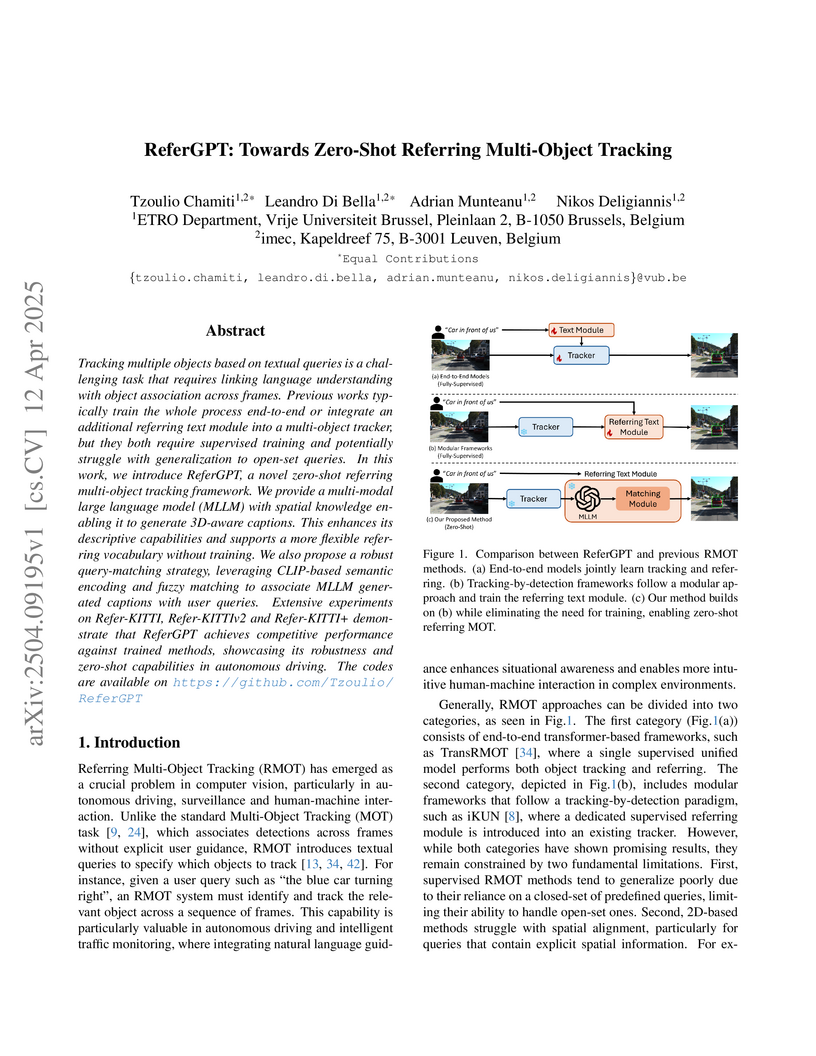

ReferGPT enables zero-shot referring multi-object tracking by combining 3D tracking with multi-modal language models, generating spatially-grounded object descriptions that match natural language queries without requiring task-specific training, demonstrated on autonomous driving datasets with performance comparable to supervised approaches.

Northwestern Polytechnical University, Northeastern University, Sun Yat-Sen University, Ghent University, Korea University, Nanjing University, Zhejiang University, University of Michigan, Xidian University, University of Electronic Science and Technology of China, Central South University, University of Hong Kong, Technology Innovation Institute, Yale University, Universitat Pompeu Fabra, NVIDIA, Huawei, Nanyang Technological University, University of Granada, China Telecom, Ulsan National Institute of Science and Technology, King’s College London, Singapore University of Technology and Design, Aalto University, Virginia Tech, University of Houston, East China Normal University, KTH Royal Institute of Technology, University of Oulu, Khalifa University, LightOn, CentraleSupélec, University of Leeds, IMEC, Nokia Bell Labs, CEA-Leti, University of York, Orange, Ericsson, Brunel University London, Qualcomm, China Unicom, BubbleRAN, ITU, Emirates Integrated Telecommunications Company, FENTECH, GSMA, Rimedo Labs, KATIM, China Mobile Communications Corporation, Beijing Institute of Technology, Eurécom

A comprehensive white paper from the GenAINet Initiative introduces Large Telecom Models (LTMs) as a novel framework for integrating AI into telecommunications infrastructure, providing a detailed roadmap for innovation while addressing critical challenges in scalability, hardware requirements, and regulatory compliance through insights from a diverse coalition of academic, industry and regulatory experts.

23 May 2025

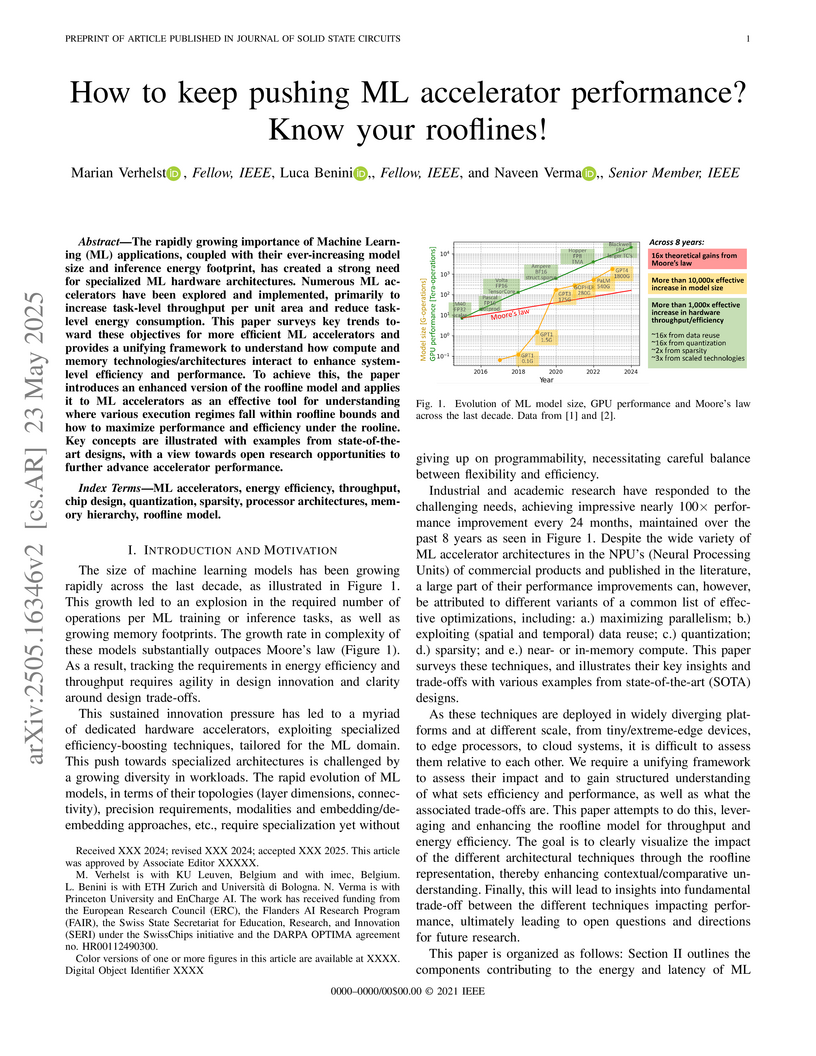

The rapidly growing importance of Machine Learning (ML) applications, coupled with their ever-increasing model size and inference energy footprint, has created a strong need for specialized ML hardware architectures. Numerous ML accelerators have been explored and implemented, primarily to increase task-level throughput per unit area and reduce task-level energy consumption. This paper surveys key trends toward these objectives for more efficient ML accelerators and provides a unifying framework to understand how compute and memory technologies/architectures interact to enhance system-level efficiency and performance. To achieve this, the paper introduces an enhanced version of the roofline model and applies it to ML accelerators as an effective tool for understanding where various execution regimes fall within roofline bounds and how to maximize performance and efficiency under the roofline. Key concepts are illustrated with examples from state-of-the-art designs, with a view towards open research opportunities to further advance accelerator performance.
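For orientation, the classic roofline bound (not the enhanced version this paper introduces) can be written in a few lines. The accelerator numbers below are hypothetical and chosen only for illustration.

```python
def attainable_performance(peak_ops_per_s, bandwidth_bytes_per_s, intensity):
    """Classic roofline bound.

    intensity is the operational intensity in operations per byte moved.
    Performance is capped by the compute roof or by the memory roof
    (bandwidth * intensity), whichever is lower.
    """
    return min(peak_ops_per_s, bandwidth_bytes_per_s * intensity)

def ridge_point(peak_ops_per_s, bandwidth_bytes_per_s):
    """Operational intensity at which the two roofs meet."""
    return peak_ops_per_s / bandwidth_bytes_per_s

# Hypothetical accelerator: 100 TOPS peak compute, 1 TB/s memory bandwidth.
PEAK = 100e12
BANDWIDTH = 1e12

memory_bound = attainable_performance(PEAK, BANDWIDTH, 10.0)    # below the ridge
compute_bound = attainable_performance(PEAK, BANDWIDTH, 500.0)  # above the ridge
ridge = ridge_point(PEAK, BANDWIDTH)
```

A workload with an intensity of 10 operations per byte sits on the bandwidth slope and reaches only a tenth of peak here, while one at 500 operations per byte is limited by the compute roof.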

06 Oct 2025

We propose to revisit the functional scaling paradigm by capitalizing on two recent developments in advanced chip manufacturing, namely 3D wafer bonding and backside processing. This approach leads to the proposal of the CMOS 2.0 platform. The main idea is to shift the CMOS roadmap from geometric scaling to fine-grain heterogeneous 3D stacking of specialized active device layers to achieve the ultimate Power-Performance-Area and Cost gains expected from future technology generations. However, the efficient utilization of such a platform requires devising architectures that can optimally map onto this technology, as well as the EDA infrastructure that supports it. We also discuss reliability concerns and eventual mitigation approaches. This paper provides pointers into the major disruptions we expect in the design of systems in CMOS 2.0 moving forward.

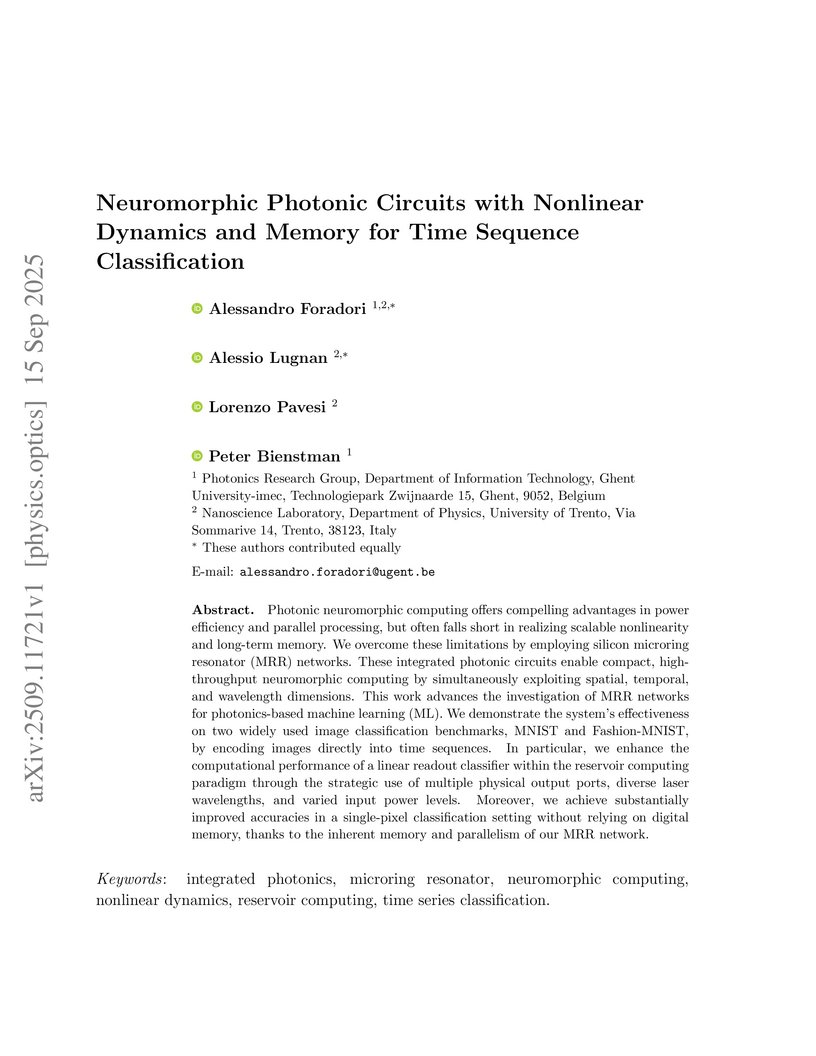

Photonic neuromorphic computing offers compelling advantages in power efficiency and parallel processing, but often falls short in realizing scalable nonlinearity and long-term memory. We overcome these limitations by employing silicon microring resonator (MRR) networks. These integrated photonic circuits enable compact, high-throughput neuromorphic computing by simultaneously exploiting spatial, temporal, and wavelength dimensions. This work advances the investigation of MRR networks for photonics-based machine learning (ML). We demonstrate the system's effectiveness on two widely used image classification benchmarks, MNIST and Fashion-MNIST, by encoding images directly into time sequences. In particular, we enhance the computational performance of a linear readout classifier within the reservoir computing paradigm through the strategic use of multiple physical output ports, diverse laser wavelengths, and varied input power levels. Moreover, we achieve substantially improved accuracies in a single-pixel classification setting without relying on digital memory, thanks to the inherent memory and parallelism of our MRR network.

Learning robust speaker embeddings is a crucial step in speaker diarization. Deep neural networks can accurately capture speaker discriminative characteristics and popular deep embeddings such as x-vectors are nowadays a fundamental component of modern diarization systems. Recently, some improvements over the standard TDNN architecture used for x-vectors have been proposed. The ECAPA-TDNN model, for instance, has shown impressive performance in the speaker verification domain, thanks to a carefully designed neural model. In this work, we extend, for the first time, the use of the ECAPA-TDNN model to speaker diarization. Moreover, we improved its robustness with a powerful augmentation scheme that concatenates several contaminated versions of the same signal within the same training batch. The ECAPA-TDNN model turned out to provide robust speaker embeddings under both close-talking and distant-talking conditions. Our results on the popular AMI meeting corpus show that our system significantly outperforms recently proposed approaches.

Clinical information extraction, which involves structuring clinical concepts from unstructured medical text, remains a challenging problem that could benefit from the inclusion of tabular background information available in electronic health records. Existing open-source datasets lack explicit links between structured features and clinical concepts in the text, motivating the need for a new research dataset. We introduce SimSUM, a benchmark dataset of 10,000 simulated patient records that link unstructured clinical notes with structured background variables. Each record simulates a patient encounter in the domain of respiratory diseases and includes tabular data (e.g., symptoms, diagnoses, underlying conditions) generated from a Bayesian network whose structure and parameters are defined by domain experts. A large language model (GPT-4o) is prompted to generate a clinical note describing the encounter, including symptoms and relevant context. These notes are annotated with span-level symptom mentions. We conduct an expert evaluation to assess note quality and run baseline predictive models on both the tabular and textual data. The SimSUM dataset is primarily designed to support research on clinical information extraction in the presence of tabular background variables, which can be linked through domain knowledge to concepts of interest to be extracted from the text (symptoms, in the case of SimSUM). Secondary uses include research on the automation of clinical reasoning over both tabular data and text, causal effect estimation in the presence of tabular and/or textual confounders, and multi-modal synthetic data generation. SimSUM is not intended for training clinical decision support systems or production-grade models, but rather to facilitate reproducible research in a simplified and controlled setting. The dataset is available at this https URL.

29 Apr 2023

Visualization plays an important role in analyzing and exploring time series data. To facilitate efficient visualization of large datasets, downsampling has emerged as a well-established approach. This work concentrates on LTTB (Largest-Triangle-Three-Buckets), a widely adopted downsampling algorithm for time series data point selection. Specifically, we propose MinMaxLTTB, a two-step algorithm that marks a significant enhancement in the scalability of LTTB. MinMaxLTTB entails the following two steps: (i) the MinMax algorithm preselects a certain ratio of minimum and maximum data points, followed by (ii) applying the LTTB algorithm on only these preselected data points, effectively reducing LTTB's time complexity. The low computational cost of the MinMax algorithm, along with its parallelization capabilities, facilitates efficient preselection of data points. Additionally, the competitive performance of MinMax in terms of visual representativeness also makes it an effective reduction method. Experiments show that MinMaxLTTB outperforms LTTB by more than an order of magnitude in terms of computation time. Furthermore, preselecting a small multiple of the desired output size already provides similar visual representativeness compared to LTTB. In summary, MinMaxLTTB leverages the computational efficiency of MinMax to scale LTTB, without compromising on LTTB's favored visualization properties. The accompanying code and experiments of this paper can be found at this https URL.
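The two-step idea can be sketched in plain Python as follows. This is a simplified illustration, not the authors' released code: the function names, the bucket boundaries, and the default `ratio=4` are assumptions made for the example.

```python
import math

def lttb(x, y, n_out):
    """Largest-Triangle-Three-Buckets; returns the selected indices."""
    n = len(x)
    if n_out < 3:
        raise ValueError("n_out must be at least 3")
    if n_out >= n:
        return list(range(n))
    selected = [0]          # the first point is always kept
    a = 0                   # index of the previously selected point
    for i in range(n_out - 2):
        # Current bucket [start, end) over the interior points.
        start = 1 + (i * (n - 2)) // (n_out - 2)
        end = 1 + ((i + 1) * (n - 2)) // (n_out - 2)
        # Next bucket; for the final bucket this is just the last point.
        nxt_end = min(1 + ((i + 2) * (n - 2)) // (n_out - 2), n)
        avg_x = sum(x[end:nxt_end]) / (nxt_end - end)
        avg_y = sum(y[end:nxt_end]) / (nxt_end - end)
        best, best_area = start, -1.0
        for j in range(start, end):
            # Twice the triangle area; the constant factor does not
            # change which point wins.
            area = abs((x[a] - avg_x) * (y[j] - y[a])
                       - (x[a] - x[j]) * (avg_y - y[a]))
            if area > best_area:
                best, best_area = j, area
        selected.append(best)
        a = best
    selected.append(n - 1)  # the last point is always kept
    return selected

def minmax_preselect(y, n_pre):
    """Keep the min and max of each of n_pre // 2 equal-width buckets."""
    n = len(y)
    n_buckets = n_pre // 2
    if n_pre >= n or n_buckets == 0:
        return list(range(n))
    keep = {0, n - 1}
    for b in range(n_buckets):
        seg = range(b * n // n_buckets, (b + 1) * n // n_buckets)
        keep.add(min(seg, key=y.__getitem__))
        keep.add(max(seg, key=y.__getitem__))
    return sorted(keep)

def minmaxlttb(x, y, n_out, ratio=4):
    """Step (i): MinMax preselection; step (ii): LTTB on the survivors."""
    pre = minmax_preselect(y, n_out * ratio)
    picked = lttb([x[i] for i in pre], [y[i] for i in pre], n_out)
    return [pre[k] for k in picked]

x = list(range(1000))
y = [math.sin(i / 20.0) for i in x]
idx = minmaxlttb(x, y, 50)
```

In this example LTTB only has to scan roughly 200 preselected points instead of all 1000, which is where the reduction in computation time comes from; the preselection pass itself is a single linear scan that is easy to parallelize per bucket.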

We introduce the Autoregressive Block-Based Iterative Encoder (AbbIE), a novel recursive generalization of the encoder-only Transformer architecture, which achieves better perplexity than a standard Transformer and allows for the dynamic scaling of compute resources at test time. This simple, recursive approach is a complement to scaling large language model (LLM) performance through parameter and token counts. AbbIE performs its iterations in latent space, but unlike latent reasoning models, does not require a specialized dataset or training protocol. We show that AbbIE upward generalizes (ability to generalize to arbitrary iteration lengths) at test time by only using 2 iterations during train time, far outperforming alternative iterative methods. AbbIE's ability to scale its computational expenditure based on the complexity of the task gives it an up to 12% improvement in zero-shot in-context learning tasks versus other iterative and standard methods and up to 5% improvement in language perplexity. The results from this study open a new avenue to Transformer performance scaling. We perform all of our evaluations on model sizes up to 350M parameters.

Robust segmentation across both pre-treatment and post-treatment glioma scans can be helpful for consistent tumor monitoring and treatment planning. BraTS 2025 Task 1 addresses this by challenging models to generalize across varying tumor appearances throughout the treatment timeline. However, training such generalized models requires access to diverse, high-quality annotated data, which is often limited. While data augmentation can alleviate this, storing large volumes of augmented 3D data is computationally expensive. To address these challenges, we propose an on-the-fly augmentation strategy that dynamically inserts synthetic tumors using pretrained generative adversarial networks (GliGANs) during training. We evaluate three nnU-Net-based models and their ensembles: (1) a baseline without external augmentation, (2) a regular on-the-fly augmented model, and (3) a model with customized on-the-fly augmentation. Built upon the nnU-Net framework, our pipeline leverages pretrained GliGAN weights and tumor insertion methods from prior challenge-winning solutions. An ensemble of the three models achieves lesion-wise Dice scores of 0.79 (ET), 0.749 (NETC), 0.872 (RC), 0.825 (SNFH), 0.79 (TC), and 0.88 (WT) on the online BraTS 2025 validation platform. This work ranked first in the BraTS Lighthouse Challenge 2025 Task 1: Adult Glioma Segmentation.

Traffic forecasting is pivotal for intelligent transportation systems, where accurate and interpretable predictions can significantly enhance operational efficiency and safety. A key challenge stems from the heterogeneity of traffic conditions across diverse locations, leading to highly varied traffic data distributions. Large language models (LLMs) show exceptional promise for few-shot learning in such dynamic and data-sparse scenarios. However, existing LLM-based solutions often rely on prompt-tuning, which can struggle to fully capture complex graph relationships and spatiotemporal dependencies, thereby limiting adaptability and interpretability in real-world traffic networks. We address these gaps by introducing Strada-LLM, a novel multivariate probabilistic forecasting LLM that explicitly models both temporal and spatial traffic patterns. By incorporating proximal traffic information as covariates, Strada-LLM more effectively captures local variations and outperforms existing prompt-based LLMs. To further enhance adaptability, we propose a lightweight distribution-derived strategy for domain adaptation, enabling parameter-efficient model updates when encountering new data distributions or altered network topologies, even under few-shot constraints. Empirical evaluations on spatio-temporal transportation datasets demonstrate that Strada-LLM consistently surpasses state-of-the-art LLM-driven and traditional GNN-based predictors. Specifically, it reduces long-term forecasting RMSE by 17% and is 16% more efficient. Moreover, it maintains robust performance across different LLM backbones with minimal degradation, making it a versatile and powerful solution for real-world traffic prediction tasks.

As deep neural networks are increasingly deployed in dynamic, real-world environments, relying on a single static model is often insufficient. Changes in input data distributions caused by sensor drift or lighting variations necessitate continual model adaptation. In this paper, we propose a hybrid training methodology that enables efficient on-device domain adaptation by combining the strengths of Backpropagation and Predictive Coding. The method begins with a deep neural network trained offline using Backpropagation to achieve high initial performance. Subsequently, Predictive Coding is employed for online adaptation, allowing the model to recover accuracy lost due to shifts in the input data distribution. This approach leverages the robustness of Backpropagation for initial representation learning and the computational efficiency of Predictive Coding for continual learning, making it particularly well-suited for resource-constrained edge devices or future neuromorphic accelerators. Experimental results on the MNIST and CIFAR-10 datasets demonstrate that this hybrid strategy enables effective adaptation with a reduced computational overhead, offering a promising solution for maintaining model performance in dynamic environments.

Negative Prompting (NP) is widely utilized in diffusion models, particularly in text-to-image applications, to prevent the generation of undesired features. In this paper, we show that conventional NP is limited by the assumption of a constant guidance scale, which may lead to highly suboptimal results, or even complete failure, due to the non-stationarity and state-dependence of the reverse process. Based on this analysis, we derive a principled technique called Dynamic Negative Guidance, which relies on a near-optimal time and state dependent modulation of the guidance without requiring additional training. Unlike NP, negative guidance requires estimating the posterior class probability during the denoising process, which is achieved with limited additional computational overhead by tracking the discrete Markov Chain during the generative process. We evaluate the performance of DNG class-removal on MNIST and CIFAR10, where we show that DNG leads to higher safety, preservation of class balance and image quality when compared with baseline methods. Furthermore, we show that it is possible to use DNG with Stable Diffusion to obtain more accurate and less invasive guidance than NP.

The human intrinsic desire to pursue knowledge, also known as curiosity, is considered essential in the process of skill acquisition. With the aid of artificial curiosity, we could equip current techniques for control, such as Reinforcement Learning, with more natural exploration capabilities. A promising approach in this respect has consisted of using Bayesian surprise on model parameters, i.e. a metric for the difference between prior and posterior beliefs, to favour exploration. In this contribution, we propose to apply Bayesian surprise in a latent space representing the agent's current understanding of the dynamics of the system, drastically reducing the computational costs. We extensively evaluate our method by measuring the agent's performance in terms of environment exploration, for continuous tasks, and looking at the game scores achieved, for video games. Our model is computationally cheap and compares positively with current state-of-the-art methods on several problems. We also investigate the effects caused by stochasticity in the environment, which is often a failure case for curiosity-driven agents. In this regime, the results suggest that our approach is resilient to stochastic transitions.
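Bayesian surprise is commonly measured as the KL divergence from the prior belief to the posterior belief. As a rough sketch, assuming both beliefs over the latent state are diagonal Gaussians (an assumption made for this example; the function name is hypothetical), the quantity has a closed form:

```python
import math

def gaussian_kl(mu_post, sigma_post, mu_prior, sigma_prior):
    """KL(posterior || prior) for diagonal Gaussians, summed over dimensions.

    Each argument is a list with one entry per latent dimension;
    sigma values are standard deviations and must be positive.
    """
    total = 0.0
    for mq, sq, mp, sp in zip(mu_post, sigma_post, mu_prior, sigma_prior):
        total += (math.log(sp / sq)
                  + (sq ** 2 + (mq - mp) ** 2) / (2.0 * sp ** 2)
                  - 0.5)
    return total

# No surprise when the observation leaves the belief unchanged.
no_surprise = gaussian_kl([0.0, 0.0], [1.0, 1.0], [0.0, 0.0], [1.0, 1.0])
# Surprise grows when the posterior mean moves away from the prior.
some_surprise = gaussian_kl([1.0], [1.0], [0.0], [1.0])
```

An agent could use such a value as an intrinsic reward: transitions that shift its latent belief the most are the ones it is encouraged to explore. Computing the divergence over a low-dimensional latent rather than over all model parameters is what keeps the cost small.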

Large Language Models (LLMs) such as ChatGPT have demonstrated remarkable performance across various tasks and have garnered significant attention from both researchers and practitioners. However, in an educational context, we still observe a performance gap in generating distractors -- i.e., plausible yet incorrect answers -- with LLMs for multiple-choice questions (MCQs). In this study, we propose a strategy for guiding LLMs such as ChatGPT in generating relevant distractors by prompting them with question items automatically retrieved from a question bank as well-chosen in-context examples. We evaluate our LLM-based solutions using a quantitative assessment on an existing test set, as well as through quality annotations by human experts, i.e., teachers. We found that on average 53% of the generated distractors presented to the teachers were rated as high-quality, i.e., suitable for immediate use as is, outperforming the state-of-the-art model. We also show the gains of our approach in generating high-quality distractors by comparing it with a zero-shot ChatGPT and a few-shot ChatGPT prompted with static examples.

Job titles form a cornerstone of today's human resources (HR) processes. Within online recruitment, they allow candidates to understand the contents of a vacancy at a glance, while internal HR departments use them to organize and structure many of their processes. As job titles are a compact, convenient, and readily available data source, modeling them with high accuracy can greatly benefit many HR tech applications. In this paper, we propose a neural representation model for job titles, by augmenting a pre-trained language model with co-occurrence information from skill labels extracted from vacancies. Our JobBERT method leads to considerable improvements compared to using generic sentence encoders, for the task of job title normalization, for which we release a new evaluation benchmark.
