distributed-learning

Inspired by the success of language models (LM), scaling up deep learning recommendation systems (DLRS) has become a recent trend in the community. Previous methods tend to scale up the model parameters during training time. However, how to efficiently utilize and scale up computational resources during test time remains underexplored, even though test-time scaling has proven to be a scaling-efficient approach that brings orthogonal improvements in LM domains. The key point in applying test-time scaling to DLRS lies in effectively generating diverse yet meaningful outputs for the same instance. We propose two ways: one is to explore the heterogeneity of different model architectures; the other is to utilize the randomness of model initialization under a homogeneous architecture. The evaluation is conducted across eight models, including both classic and SOTA models, on three benchmarks. The results support the effectiveness of both solutions. We further show that under the same inference budget, test-time scaling can outperform parameter scaling. Our test-time scaling can also be seamlessly accelerated by adding parallel servers when deployed online, without affecting the inference time on the user side. Code is available.
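As a rough illustration of the second idea (randomness of initialization under a homogeneous architecture), the sketch below averages the predictions of several same-shaped scorers that differ only in their seed. The `make_model` helper, the logistic scorer, and the plain averaging rule are hypothetical stand-ins chosen for brevity; the abstract does not specify how outputs are combined.

```python
import math
import random


def make_model(seed, n_features=4):
    """Hypothetical stand-in for one trained recommender.

    Every model shares the same architecture (a logistic scorer); only the
    seed-dependent weights differ, mimicking different initializations.
    """
    rng = random.Random(seed)
    weights = [rng.gauss(0.0, 1.0) for _ in range(n_features)]

    def predict(features):
        logit = sum(w * x for w, x in zip(weights, features))
        return 1.0 / (1.0 + math.exp(-logit))

    return predict


def ensemble_predict(models, features):
    """Average the click probabilities of all models (assumed combination rule)."""
    scores = [model(features) for model in models]
    return sum(scores) / len(scores)


# Eight differently seeded models score the same instance at test time.
models = [make_model(seed) for seed in range(8)]
instance = [0.5, -1.0, 0.25, 2.0]
print(ensemble_predict(models, instance))
```

Because each model scores the instance independently, the per-model calls could run on separate servers in parallel, which is consistent with the deployment claim in the abstract.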

Researchers from Alibaba Group and USTC developed Live Avatar, an algorithm-system co-designed framework for real-time, high-fidelity, and infinite-length audio-driven avatar generation using a 14-billion-parameter diffusion model. The system achieves 20.88 FPS and demonstrates visual consistency for over 10,000 seconds, significantly advancing practical applications.

This work develops the global equations of neural networks through stacked piecewise manifolds, fixed-point theory, and boundary-conditioned iteration. Once fixed coordinates and operators are removed, a neural network appears as a learnable numerical computation shaped by manifold complexity, high-order nonlinearity, and boundary conditions. Real-world data impose strong data complexity, near-infinite scope, scale, and minibatch fragmentation, while training dynamics produce learning complexity through shifting node covers, curvature accumulation, and the rise and decay of plasticity. These forces constrain learnability and explain why capability emerges only when fixed-point regions stabilize. Neural networks do not begin with fixed points; they construct them through residual-driven iteration. This perspective clarifies the limits of monolithic models under geometric and data-induced plasticity and motivates architectures and federated systems that distribute manifold complexity across many elastic models, forming a coherent world-modeling framework grounded in geometry, algebra, fixed points, and real-data complexity.

Decoupled loss has been a successful reinforcement learning (RL) approach for dealing with high data staleness in the asynchronous RL setting. It improves the learning stability of coupled-loss algorithms (e.g., PPO, GRPO) by introducing a proximal policy that decouples the off-policy correction (importance weight) from the control of policy updates (trust region). However, the proximal policy requires an extra forward pass through the network at each training step, creating a computational bottleneck for large language models. We observe that since the proximal policy only serves as a trust region anchor between the behavior and target policies, we can approximate it through simple interpolation without explicit computation. We call this approach A-3PO (APproximated Proximal Policy Optimization). A-3PO eliminates this overhead, reducing training time by 18% while maintaining comparable performance. Code & off-the-shelf example are available at: this https URL
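A minimal per-token sketch of the idea follows, assuming the proximal log-probability is a linear interpolation, in log space, between the behavior and target log-probabilities. The coefficient `alpha`, the function names, and the exact interpolation form are assumptions for illustration; the abstract only says "simple interpolation".

```python
import math


def interpolated_prox_logprob(logp_behavior, logp_target, alpha=0.5):
    """Assumed approximation: interpolate in log space instead of running
    an extra forward pass to evaluate the proximal policy."""
    return alpha * logp_behavior + (1.0 - alpha) * logp_target


def decoupled_ppo_loss(logp_target, logp_behavior, advantage,
                       alpha=0.5, clip_eps=0.2):
    """Decoupled clipped surrogate for a single token (scalar sketch).

    In a real training loop the proximal log-probability would be treated
    as a constant (no gradient flows through it).
    """
    logp_prox = interpolated_prox_logprob(logp_behavior, logp_target, alpha)
    # Off-policy correction: proximal policy relative to the behavior policy.
    importance_weight = math.exp(logp_prox - logp_behavior)
    # Trust-region ratio: target policy relative to the proximal policy.
    ratio = math.exp(logp_target - logp_prox)
    clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
    surrogate = min(ratio * advantage, clipped * advantage)
    return -importance_weight * surrogate


print(decoupled_ppo_loss(logp_target=-0.9, logp_behavior=-1.4, advantage=1.0))
```

When the target and behavior log-probabilities coincide, both ratios equal 1 and the loss reduces to the negated advantage, matching the on-policy case.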

Recent work \cite{arifgroup} introduced Federated Proximal Gradient \textbf{(\texttt{FedProxGrad})} for solving non-convex composite optimization problems in group fair federated learning. However, the original analysis established convergence only to a \textit{noise-dominated neighborhood of stationarity}, with explicit dependence on a variance-induced noise floor. In this work, we provide an improved asymptotic convergence analysis for a generalized \texttt{FedProxGrad}-type analytical framework with inexact local proximal solutions and explicit fairness regularization. We call this extended analytical framework \textbf{DS \texttt{FedProxGrad}} (Decay Step Size \texttt{FedProxGrad}). Under a Robbins-Monro step-size schedule \cite{robbins1951stochastic} and a mild decay condition on local inexactness, we prove that $\liminf_{r\to\infty} \mathbb{E}[\|\nabla F(x_r)\|^2] = 0$, i.e., the algorithm is asymptotically stationary and the convergence rate does not depend on a variance-induced noise floor.
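For readers unfamiliar with the term, a Robbins-Monro schedule is a step-size sequence whose sum diverges while the sum of its squares stays finite. The sketch below uses the polynomial family `eta0 / (r + 1) ** power` with `0.5 < power <= 1`, which is one standard example; the specific schedule used in the paper is not stated in the abstract, so `eta0` and `power` are illustrative assumptions.

```python
def robbins_monro_step(r, eta0=1.0, power=0.75):
    """Step size for round r (0-indexed) from a polynomial decay schedule.

    For 0.5 < power <= 1 the steps sum to infinity while their squares
    sum to a finite value, which is the Robbins-Monro condition.
    """
    if not 0.5 < power <= 1.0:
        raise ValueError("power must lie in (0.5, 1] for a Robbins-Monro schedule")
    return eta0 / (r + 1) ** power


steps = [robbins_monro_step(r) for r in range(10_000)]
# The first partial sum keeps growing with more rounds; the second levels off.
print(sum(steps), sum(s * s for s in steps))
```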

06 Dec 2025

Reinforcement learning (RL) has emerged as the de-facto paradigm for improving the reasoning capabilities of large language models (LLMs). We have developed RLAX, a scalable RL framework on TPUs. RLAX employs a parameter-server architecture. A master trainer periodically pushes updated model weights to the parameter server while a fleet of inference workers pulls the latest weights and generates new rollouts. We introduce a suite of system techniques to enable scalable and preemptible RL for a diverse set of state-of-the-art RL algorithms. To accelerate convergence and improve model quality, we have devised new dataset curation and alignment techniques. Large-scale evaluations show that RLAX improves QwQ-32B's pass@8 accuracy by 12.8% in just 12 hours 48 minutes on 1024 v5p TPUs, while remaining robust to preemptions during training.

In the era of rapid development of artificial intelligence, its applications span diverse fields and rely heavily on effective data processing and model optimization. Combined Regularized Support Vector Machines (CR-SVMs) can effectively handle the structural information among data features, but efficient algorithms are lacking for big data stored in a distributed manner. To address this issue, we propose a unified optimization framework based on consensus structure. This framework is not only applicable to various loss functions and combined regularization terms but can also be effectively extended to non-convex regularization terms, showing strong scalability. Based on this framework, we develop a distributed parallel alternating direction method of multipliers (ADMM) algorithm to efficiently compute CR-SVMs when data is stored in a distributed manner. To ensure the convergence of the algorithm, we also introduce the Gaussian back-substitution method. Meanwhile, for completeness, we introduce a new model, the sparse group lasso support vector machine (SGL-SVM), and apply it to music information retrieval. Theoretical analysis confirms that the computational complexity of the proposed algorithm is not affected by different regularization terms and loss functions, highlighting the universality of the parallel algorithm. Experiments on synthetic and Free Music Archive datasets demonstrate the reliability, stability, and efficiency of the algorithm.

09 Dec 2025

Large Language Models and multi-agent systems have shown promise in decomposing complex tasks, yet they struggle with long-horizon reasoning tasks and escalating computation cost. This work introduces a hierarchical multi-agent architecture that distributes reasoning across a 64×64 grid of lightweight agents, supported by a selective oracle. A spatial curriculum progressively expands the operational region of the grid, ensuring that agents master easier central tasks before tackling harder peripheral ones. To improve reliability, the system integrates Negative Log-Likelihood (NLL) as a measure of confidence, allowing the curriculum to prioritize regions where agents are both accurate and well calibrated. A Thompson Sampling curriculum manager adaptively chooses training zones based on competence and NLL-driven reward signals. We evaluate the approach on a spatially grounded Tower of Hanoi benchmark, which mirrors the long-horizon structure of many robotic manipulation and planning tasks. Results demonstrate improved stability, reduced oracle usage, and stronger long-range reasoning from distributed agent cooperation.
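The zone-selection step can be pictured as a Beta-Bernoulli Thompson Sampling bandit over training zones. The sketch below is a generic version under simplifying assumptions: the class name `ThompsonCurriculum`, the uniform prior, and the scalar reward in [0, 1] are hypothetical, and the paper's actual reward (which combines competence with NLL-based confidence) is replaced here by a simulated success signal.

```python
import random


class ThompsonCurriculum:
    """Beta-Bernoulli Thompson Sampling over training zones (generic sketch)."""

    def __init__(self, n_zones, seed=0):
        # Beta(1, 1) prior per zone, i.e. uniform over the reward rate.
        self.successes = [1.0] * n_zones
        self.failures = [1.0] * n_zones
        self.rng = random.Random(seed)

    def choose_zone(self):
        """Sample one plausible reward rate per zone and pick the largest."""
        samples = [self.rng.betavariate(a, b)
                   for a, b in zip(self.successes, self.failures)]
        return max(range(len(samples)), key=samples.__getitem__)

    def update(self, zone, reward):
        """Fold a reward in [0, 1] into the chosen zone's posterior."""
        self.successes[zone] += reward
        self.failures[zone] += 1.0 - reward


def simulate(success_rates, rounds=2000, seed=0):
    """Count how often each zone is chosen under simulated binary rewards."""
    manager = ThompsonCurriculum(len(success_rates), seed=seed)
    env_rng = random.Random(seed + 1)
    counts = [0] * len(success_rates)
    for _ in range(rounds):
        zone = manager.choose_zone()
        reward = 1.0 if env_rng.random() < success_rates[zone] else 0.0
        manager.update(zone, reward)
        counts[zone] += 1
    return counts


counts = simulate([0.2, 0.5, 0.8])
print(counts)
```

Over many rounds the manager concentrates its choices on the zone with the highest reward rate while still occasionally sampling the others.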

09 Dec 2025

This paper addresses the challenge of aligning large language models (LLMs) with diverse human preferences within federated learning (FL) environments, where standard methods often fail to adequately represent diverse viewpoints. We introduce a comprehensive evaluation framework that systematically assesses the trade-off between alignment quality and fairness when using different aggregation strategies for human preferences. In our federated setting, each group locally evaluates rollouts and produces reward signals, and the server aggregates these group-level rewards without accessing any raw data. Specifically, we evaluate standard reward aggregation techniques (min, max, and average) and introduce a novel adaptive scheme that dynamically adjusts preference weights based on a group's historical alignment performance. Our experiments on question-answering (Q/A) tasks using a PPO-based RLHF pipeline demonstrate that our adaptive approach consistently achieves superior fairness while maintaining competitive alignment scores. This work offers a robust methodology for evaluating LLM behavior across diverse populations and provides a practical solution for developing truly pluralistic and fairly aligned models.
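The server-side aggregation of group-level rewards can be sketched as follows. The min, max, and average rules come from the abstract; the `adaptive_weights` rule (a softmax over negated historical scores, so that groups with weaker past alignment receive more weight) is a hypothetical illustration, since the abstract does not give the actual update formula.

```python
import math


def aggregate_rewards(group_rewards, strategy="average", weights=None):
    """Combine per-group reward signals for one rollout into a single scalar."""
    if strategy == "min":
        return min(group_rewards)
    if strategy == "max":
        return max(group_rewards)
    if strategy == "average":
        return sum(group_rewards) / len(group_rewards)
    if strategy == "weighted":
        if weights is None:
            raise ValueError("weighted aggregation requires weights")
        return sum(w * r for w, r in zip(weights, group_rewards))
    raise ValueError(f"unknown strategy: {strategy}")


def adaptive_weights(historical_scores, temperature=1.0):
    """Hypothetical adaptive rule: softmax over negated historical scores."""
    logits = [-score / temperature for score in historical_scores]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(logit - peak) for logit in logits]
    total = sum(exps)
    return [e / total for e in exps]


rewards = [0.2, 0.6, 0.7]            # one reward per group for a rollout
weights = adaptive_weights([0.9, 0.5, 0.1])
print(aggregate_rewards(rewards, "min"),
      aggregate_rewards(rewards, "weighted", weights))
```

Only the per-group scalars reach the server in this sketch, which mirrors the abstract's point that aggregation happens without access to raw data.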

Machine unlearning (MU) seeks to remove the influence of specified data from a trained model in response to privacy requests or data poisoning. While certified unlearning has been analyzed in centralized and server-orchestrated federated settings (via guarantees analogous to differential privacy, DP), the decentralized setting -- where peers communicate without a coordinator -- remains underexplored. We study certified unlearning in decentralized networks with fixed topologies and propose RR-DU, a random-walk procedure that performs one projected gradient ascent step on the forget set at the unlearning client and a geometrically distributed number of projected descent steps on the retained data elsewhere, combined with subsampled Gaussian noise and projection onto a trust region around the original model. We provide (i) convergence guarantees in the convex case and stationarity guarantees in the nonconvex case, (ii) (ε,δ) network-unlearning certificates on client views via subsampled Gaussian Rényi DP (RDP) with segment-level subsampling, and (iii) deletion-capacity bounds that scale with the forget-to-local data ratio and quantify the effect of decentralization (network mixing and randomized subsampling) on the privacy--utility trade-off. Empirically, on image benchmarks (MNIST, CIFAR-10), RR-DU matches a given (ε,δ) while achieving higher test accuracy than decentralized DP baselines and reducing forget accuracy to random guessing (≈10%).

Log-based anomaly detection (LAD) is critical for ensuring the reliability of large-scale distributed systems. However, most existing LAD approaches assume centralized training, which is often impractical due to privacy constraints and the decentralized nature of system logs. While federated learning (FL) offers a promising alternative, there is a lack of dedicated testbeds tailored to the needs of LAD in federated settings. To address this, we present FedLAD, a unified platform for training and evaluating LAD models under FL constraints. FedLAD supports plug-and-play integration of diverse LAD models, benchmark datasets, and aggregation strategies, while offering runtime support for validation logging (self-monitoring), parameter tuning (self-configuration), and adaptive strategy control (self-adaptation). By enabling reproducible and scalable experimentation, FedLAD bridges the gap between FL frameworks and LAD requirements, providing a solid foundation for future research. Project code is publicly available at: this https URL.

Probabilistic Graphical Models (PGMs) encode conditional dependencies among random variables using a graph (nodes for variables, links for dependencies) and factorize the joint distribution into lower-dimensional components. This makes PGMs well-suited for analyzing complex systems and supporting decision-making. Recent advances in topological signal processing highlight the importance of variables defined on topological spaces in several application domains. In such cases, the underlying topology shapes statistical relationships, limiting the expressiveness of canonical PGMs. To overcome this limitation, we introduce Colored Markov Random Fields (CMRFs), which model both conditional and marginal dependencies among Gaussian edge variables on topological spaces, with a theoretical foundation in Hodge theory. CMRFs extend classical Gaussian Markov Random Fields by including link coloring: connectivity encodes conditional independence, while color encodes marginal independence. We quantify the benefits of CMRFs through a distributed estimation case study over a physical network, comparing them with baselines with different levels of topological prior.

Researchers at FAIR at Meta developed Matrix, a peer-to-peer multi-agent framework for synthetic data generation that scales effectively for training large language models. The framework demonstrates up to 15.4x higher token throughput compared to centralized baselines across diverse tasks, while maintaining the quality of the generated data.

Researchers from the University of Oxford, MILA, and NVIDIA introduce EGGROLL, an Evolution Strategies algorithm that scales black-box optimization to billion-parameter neural networks by employing low-rank parameter perturbations. The method achieves a hundredfold increase in training throughput and enables stable pre-training of pure-integer recurrent language models, demonstrating competitive or superior performance on reinforcement learning and large language model fine-tuning tasks.

This research addresses cyclical nonstationarity in massively parallel on-policy reinforcement learning caused by synchronous environment resets and short rollouts. It introduces staggered environment resets, which provide temporally diverse training batches, leading to faster wall-clock convergence, higher sample efficiency, and improved final performance across complex robotics tasks.
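A toy simulation makes the contrast concrete. Here every environment has a fixed episode length, and staggering is modeled by giving each environment a different starting age so that resets spread evenly over time. The function name and the even-offset rule are assumptions for illustration; the paper's exact staggering scheme is not described in this summary.

```python
def resets_per_step(n_envs, episode_len, horizon, staggered):
    """Count how many environments reset at each global step.

    Each environment starts at age `offset` and resets whenever its age
    wraps back to zero. Synchronous resets use offset 0 for all
    environments; staggered resets spread the offsets evenly
    (assumed scheme).
    """
    if staggered:
        offsets = [(i * episode_len) // n_envs for i in range(n_envs)]
    else:
        offsets = [0] * n_envs
    resets = [0] * horizon
    for offset in offsets:
        for t in range(1, horizon):
            if (offset + t) % episode_len == 0:
                resets[t] += 1
    return resets


sync = resets_per_step(n_envs=8, episode_len=8, horizon=16, staggered=False)
stag = resets_per_step(n_envs=8, episode_len=8, horizon=16, staggered=True)
print(max(sync), max(stag))
```

With synchronous resets all environments are at the same episode phase in every batch, whereas the staggered variant mixes early, middle, and late episode states in each batch.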

01 Dec 2025

World models have been recently proposed as sandbox environments in which AI agents can be trained and evaluated before deployment. Although realistic world models often have high computational demands, efficient modelling is usually possible by exploiting the fact that real-world scenarios tend to involve subcomponents that interact in a modular manner. In this paper, we explore this idea by developing a framework for decomposing complex world models represented by transducers, a class of models generalising POMDPs. Whereas the composition of transducers is well understood, our results clarify how to invert this process, deriving sub-transducers operating on distinct input-output subspaces, enabling parallelizable and interpretable alternatives to monolithic world modelling that can support distributed inference. Overall, these results lay a groundwork for bridging the structural transparency demanded by AI safety and the computational efficiency required for real-world inference.

Efficient information exchange and reliable contextual reasoning are essential for vehicle-to-everything (V2X) networks. Conventional communication schemes often incur significant transmission overhead and latency, while existing trajectory prediction models generally lack environmental perception and logical inference capabilities. This paper presents a trajectory prediction framework that integrates semantic communication with Agentic AI to enhance predictive performance in vehicular environments. In vehicle-to-infrastructure (V2I) communication, a feature-extraction agent at the Roadside Unit (RSU) derives compact representations from historical vehicle trajectories, followed by semantic reasoning performed by a semantic-analysis agent. The RSU then transmits both feature representations and semantic insights to the target vehicle via semantic communication, enabling the vehicle to predict future trajectories by combining received semantics with its own historical data. In vehicle-to-vehicle (V2V) communication, each vehicle performs local feature extraction and semantic analysis while receiving predicted trajectories from neighboring vehicles, and jointly utilizes this information for its own trajectory prediction. Extensive experiments across diverse communication conditions demonstrate that the proposed method significantly outperforms baseline schemes, achieving up to a 47.5% improvement in prediction accuracy under low signal-to-noise ratio (SNR) conditions.

M3Prune introduces a hierarchical communication graph pruning framework for multi-modal multi-agent Retrieval-Augmented Generation. The framework dynamically optimizes agent communication, achieving a 9.4% improvement in accuracy on complex tasks while reducing token consumption by 15.7% compared to existing baselines.

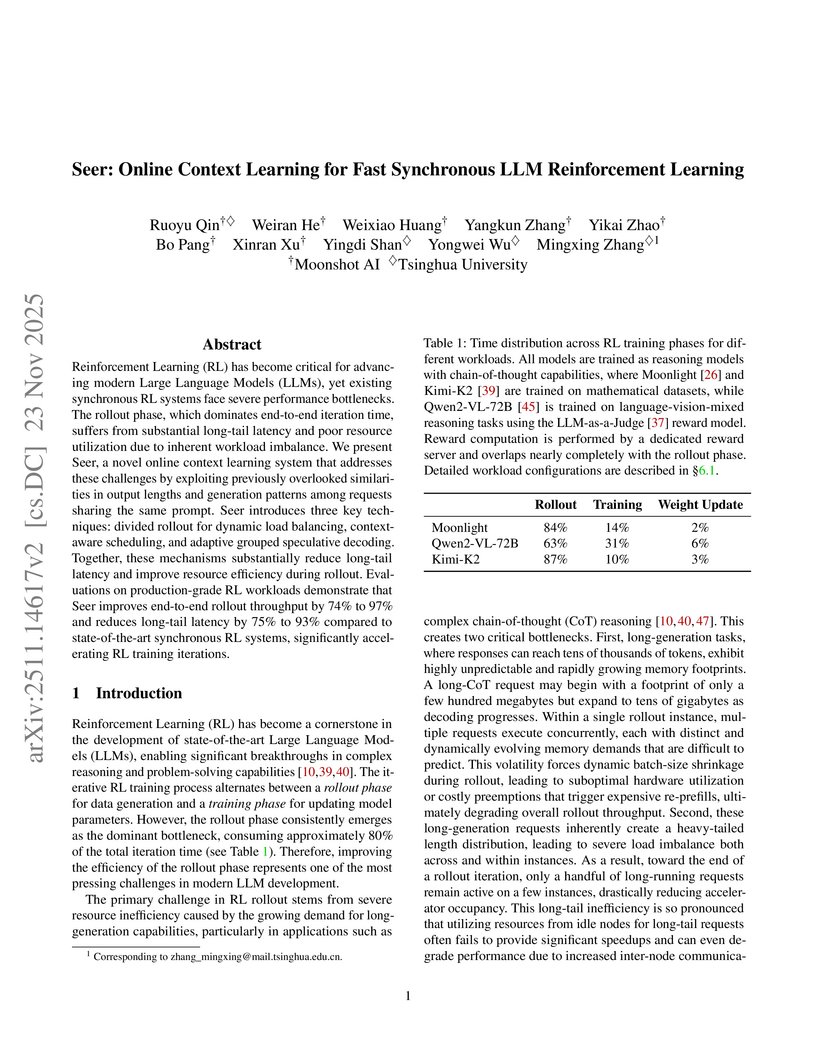

Seer introduces an online context learning system designed to accelerate the rollout phase of synchronous LLM reinforcement learning. It achieves a 74% to 97% increase in end-to-end rollout throughput and reduces long-tail latency by 75% to 93% on production-grade RL workloads, without compromising algorithmic fidelity.

If AI is a foundational general-purpose technology, we should anticipate that demand for AI compute -- and energy -- will continue to grow. The Sun is by far the largest energy source in our solar system, and thus it is worth considering how future AI infrastructure could most efficiently tap into that power. This work explores a scalable compute system for machine learning in space, using fleets of satellites equipped with solar arrays, inter-satellite links using free-space optics, and Google tensor processing unit (TPU) accelerator chips. To facilitate high-bandwidth, low-latency inter-satellite communication, the satellites would be flown in close proximity. We illustrate the basic approach to formation flight via an 81-satellite cluster of 1 km radius, and describe an approach for using high-precision ML-based models to control large-scale constellations. Trillium TPUs are radiation tested. They survive a total ionizing dose equivalent to a 5-year mission life without permanent failures, and are characterized for bit-flip errors. Launch costs are a critical part of overall system cost; a learning curve analysis suggests launch to low-Earth orbit (LEO) may reach ≲$200/kg by the mid-2030s.
