neural-architecture-search

Quantum circuit design is a key bottleneck for practical quantum machine learning on complex, real-world data. We present an automated framework that discovers and refines variational quantum circuits (VQCs) using graph-based Bayesian optimization with a graph neural network (GNN) surrogate. Circuits are represented as graphs and mutated and selected via an expected improvement acquisition function informed by surrogate uncertainty with Monte Carlo dropout. Candidate circuits are evaluated with a hybrid quantum-classical variational classifier on the next generation firewall telemetry and network internet of things (NF-ToN-IoT-V2) cybersecurity dataset, after feature selection and scaling for quantum embedding. We benchmark our pipeline against an MLP-based surrogate, random search, and greedy GNN selection. The GNN-guided optimizer consistently finds circuits with lower complexity and competitive or superior classification accuracy compared to all baselines. Robustness is assessed via a noise study across standard quantum noise channels, including amplitude damping, phase damping, thermal relaxation, depolarizing, and readout bit flip noise. The implementation is fully reproducible, with time benchmarking and export of best found circuits, providing a scalable and interpretable route to automated quantum circuit discovery.
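As a rough illustration of the acquisition step described above, the sketch below computes expected improvement from repeated stochastic surrogate predictions, such as those Monte Carlo dropout would produce. The function names, the `xi` exploration margin, and the Gaussian approximation of the predictive distribution are assumptions made for illustration, not details taken from the paper.

```python
import math
from statistics import mean, stdev


def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    """Standard normal cumulative distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(samples, best_so_far, xi=0.0):
    """Expected improvement (maximization) for one candidate.

    samples: predicted scores from several stochastic forward passes
             of a surrogate (hypothetical input; e.g. MC-dropout passes).
    best_so_far: best score observed so far.
    xi: optional exploration margin (an assumption, defaults to 0).
    """
    mu = mean(samples)
    sigma = stdev(samples) if len(samples) > 1 else 0.0
    improvement = mu - best_so_far - xi
    if sigma < 1e-12:
        # No predictive uncertainty: EI reduces to the plain improvement.
        return max(improvement, 0.0)
    z = improvement / sigma
    return improvement * normal_cdf(z) + sigma * normal_pdf(z)
```

With equal predicted means, a candidate whose predictions vary more receives a higher score, which is how the acquisition function trades exploration against exploitation.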

This study presents QuanvNeXt, an end-to-end fully quanvolutional model for EEG-based depression diagnosis. QuanvNeXt incorporates a novel Cross Residual block, which reduces feature homogeneity and strengthens cross-feature relationships while retaining parameter efficiency. We evaluated QuanvNeXt on two open-source datasets, where it achieved an average accuracy of 93.1% and an average AUC-ROC of 97.2%, outperforming state-of-the-art baselines such as InceptionTime (91.7% accuracy, 95.9% AUC-ROC). An uncertainty analysis across Gaussian noise levels demonstrated well-calibrated predictions, with ECE scores remaining low (0.0436, Dataset 1) to moderate (0.1159, Dataset 2) even at the highest perturbation ($\epsilon = 0.1$). Additionally, a post-hoc explainable AI analysis confirmed that QuanvNeXt effectively identifies and learns spectrotemporal patterns that distinguish between healthy controls and major depressive disorder. Overall, QuanvNeXt establishes an efficient and reliable approach for EEG-based depression diagnosis.
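For readers unfamiliar with the ECE scores quoted above, the following is a minimal sketch of expected calibration error with equal-width confidence bins. The bin count and binning scheme are assumptions, since the abstract does not state which variant the authors used.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Expected calibration error with equal-width bins over [0, 1].

    confidences: predicted confidence of the chosen class, per sample.
    correct: booleans, True where the prediction was right.
    n_bins: number of bins (assumed value; not taken from the paper).
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 falls into the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Weight each bin's confidence/accuracy gap by its share of samples.
        ece += (len(members) / n) * abs(accuracy - avg_conf)
    return ece
```

A score of 0 means confidence matches accuracy in every bin; four predictions at 0.95 confidence with only three correct would give a gap of about 0.2.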

Neural decoding, a critical component of Brain-Computer Interface (BCI), has recently attracted increasing research interest. Previous research has focused on leveraging signal processing and deep learning methods to enhance neural decoding performance. However, model architecture design remains underexplored, despite its proven effectiveness in other tasks such as energy forecasting and image classification. In this study, we propose NeuroSketch, an effective framework for neural decoding via systematic architecture optimization. Starting with a basic architecture study, we find that CNN-2D outperforms other architectures in neural decoding tasks and explore its effectiveness from temporal and spatial perspectives. Building on this, we optimize the architecture from macro- to micro-level, achieving improvements in performance at each step. The exploration process and model validations comprise over 5,000 experiments spanning three distinct modalities (visual, auditory, and speech), three types of brain signals (EEG, SEEG, and ECoG), and eight diverse decoding tasks. Experimental results indicate that NeuroSketch achieves state-of-the-art (SOTA) performance across all evaluated datasets, positioning it as a powerful tool for neural decoding. Our code and scripts are available at this https URL.

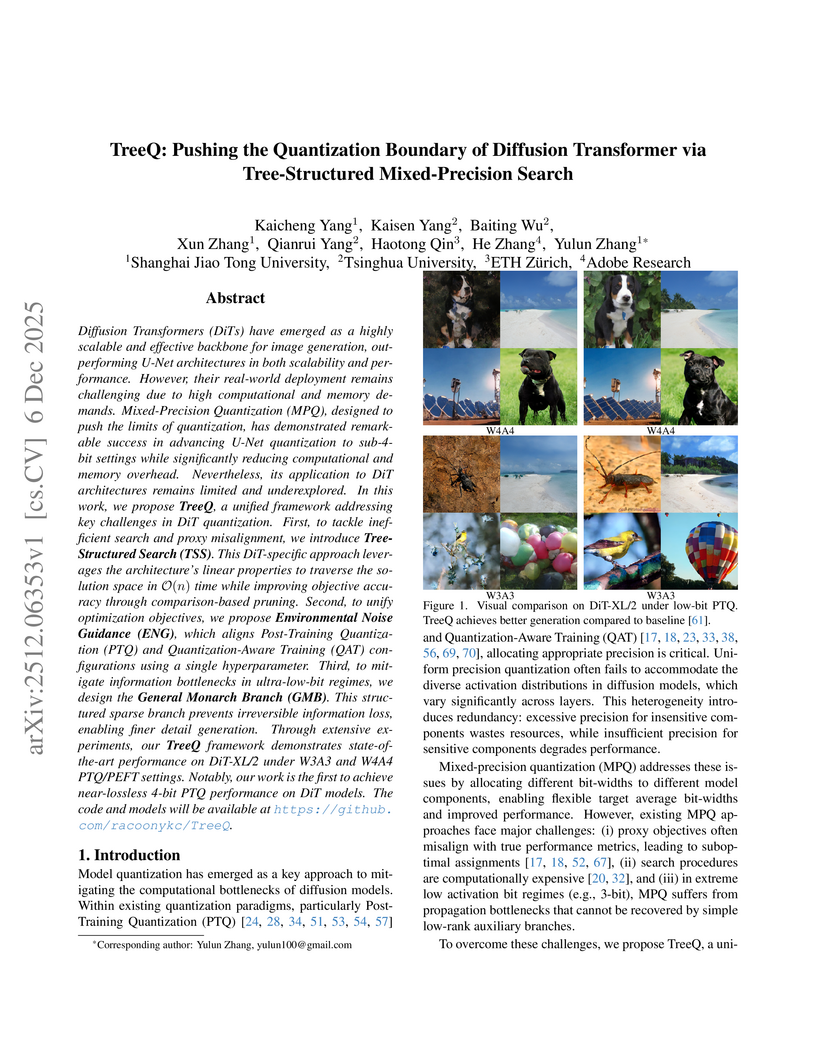

Diffusion Transformers (DiTs) have emerged as a highly scalable and effective backbone for image generation, outperforming U-Net architectures in both scalability and performance. However, their real-world deployment remains challenging due to high computational and memory demands. Mixed-Precision Quantization (MPQ), designed to push the limits of quantization, has demonstrated remarkable success in advancing U-Net quantization to sub-4bit settings while significantly reducing computational and memory overhead. Nevertheless, its application to DiT architectures remains limited and underexplored. In this work, we propose TreeQ, a unified framework addressing key challenges in DiT quantization. First, to tackle inefficient search and proxy misalignment, we introduce Tree Structured Search (TSS). This DiT-specific approach leverages the architecture's linear properties to traverse the solution space in O(n) time while improving objective accuracy through comparison-based pruning. Second, to unify optimization objectives, we propose Environmental Noise Guidance (ENG), which aligns Post-Training Quantization (PTQ) and Quantization-Aware Training (QAT) configurations using a single hyperparameter. Third, to mitigate information bottlenecks in ultra-low-bit regimes, we design the General Monarch Branch (GMB). This structured sparse branch prevents irreversible information loss, enabling finer detail generation. Through extensive experiments, our TreeQ framework demonstrates state-of-the-art performance on DiT-XL/2 under W3A3 and W4A4 PTQ/PEFT settings. Notably, our work is the first to achieve near-lossless 4-bit PTQ performance on DiT models. The code and models will be available at this https URL

Responding to Hodel et al.'s (2024) call for a formal definition of task relatedness in re-arc, we present the first 9-category taxonomy of all 400 tasks, validated at 97.5% accuracy via rule-based code analysis. We prove the taxonomy's visual coherence by training a CNN on raw grid pixels (95.24% accuracy on S3, 36.25% overall, 3.3x chance), then apply the taxonomy diagnostically to the original ARC-AGI-2 test set. Our curriculum analysis reveals 35.3% of tasks exhibit low neural affinity for Transformers--a distributional bias mirroring ARC-AGI-2. To probe this misalignment, we fine-tuned a 1.7M-parameter Transformer across 302 tasks, revealing a profound Compositional Gap: 210 of 302 tasks (69.5%) achieve >80% cell accuracy (local patterns) but <10% grid accuracy (global synthesis). This provides direct evidence for a Neural Affinity Ceiling Effect, where performance is bounded by architectural suitability, not curriculum. Applying our framework to Li et al.'s independent ViTARC study (400 specialists, 1M examples each) confirms its predictive power: Very Low affinity tasks achieve 51.9% versus 77.7% for High affinity (p<0.001), with a task at 0% despite massive data. The taxonomy enables precise diagnosis: low-affinity tasks (A2) hit hard ceilings, while high-affinity tasks (C1) reach 99.8%. These findings indicate that progress requires hybrid architectures with affinity-aligned modules. We release our validated taxonomy,

Network of Theseus (NoT) introduces a method to convert a neural network's architecture used for training into an entirely different one for inference by progressively replacing components and aligning their internal representations. This approach enables smaller, specialized models to achieve performance comparable to larger, more complex architectures, even when guided by untrained networks.

The Liquid AI Team introduces LFM2, a family of foundation models designed for efficient on-device deployment, demonstrating up to 2x faster inference on CPUs compared to similarly sized models while achieving strong performance across language, vision-language, speech, and retrieval tasks. These models leverage a hardware-in-the-loop architectural search to optimize for latency, memory, and quality under strict edge constraints.

The Segment Anything Model (SAM) has emerged as a powerful visual foundation model for image segmentation. However, adapting SAM to specific downstream tasks, such as medical and agricultural imaging, remains a significant challenge. To address this, Low-Rank Adaptation (LoRA) and its variants have been widely employed to enhance SAM's adaptation performance on diverse domains. Despite these advancements, a critical question arises: can we integrate inductive bias into the model? This is particularly relevant since the Transformer encoder in SAM inherently lacks spatial priors within image patches, potentially hindering the acquisition of high-level semantic information. In this paper, we propose NAS-LoRA, a new Parameter-Efficient Fine-Tuning (PEFT) method designed to bridge the semantic gap between pre-trained SAM and specialized domains. Specifically, NAS-LoRA incorporates a lightweight Neural Architecture Search (NAS) block between the encoder and decoder components of LoRA to dynamically optimize the prior knowledge integrated into weight updates. Furthermore, we propose a stage-wise optimization strategy to help the ViT encoder balance weight updates and architectural adjustments, facilitating the gradual learning of high-level semantic information. Experiments demonstrate that NAS-LoRA improves on existing PEFT methods while reducing training cost by 24.14% without increasing inference cost, highlighting the potential of NAS in enhancing PEFT for visual foundation models.

Foundation models have revolutionized various fields such as natural language processing (NLP) and computer vision (CV). While efforts have been made to transfer the success of foundation models in general AI domains to biology, existing works focus on directly adopting foundation model architectures from general machine learning domains without a systematic design that considers the unique physicochemical and structural properties of each biological data modality. This leads to suboptimal performance, as these repurposed architectures struggle to capture the long-range dependencies, sparse information, and complex underlying "grammars" inherent to biological data. To address this gap, we introduce BioArc, a novel framework designed to move beyond intuition-driven architecture design towards principled, automated architecture discovery for biological foundation models. Leveraging Neural Architecture Search (NAS), BioArc systematically explores a vast architecture design space, evaluating architectures across multiple biological modalities while rigorously analyzing the interplay between architecture, tokenization, and training strategies. This large-scale analysis identifies novel, high-performance architectures, allowing us to distill a set of empirical design principles to guide future model development. Furthermore, to make the most of the discovered architectures, we propose and compare several architecture prediction methods that effectively and efficiently predict optimal architectures for new biological tasks. Overall, our work provides a foundational resource and a principled methodology to guide the creation of the next generation of task-specific and foundation models for biology.

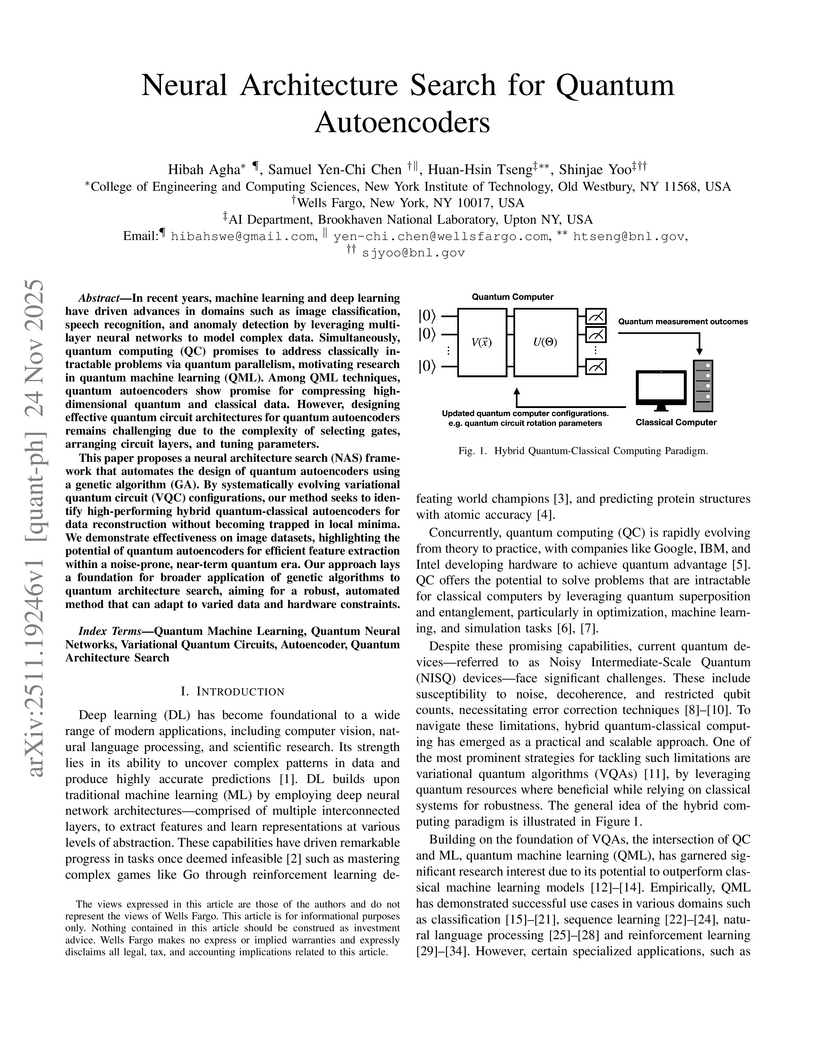

In recent years, machine learning and deep learning have driven advances in domains such as image classification, speech recognition, and anomaly detection by leveraging multi-layer neural networks to model complex data. Simultaneously, quantum computing (QC) promises to address classically intractable problems via quantum parallelism, motivating research in quantum machine learning (QML). Among QML techniques, quantum autoencoders show promise for compressing high-dimensional quantum and classical data. However, designing effective quantum circuit architectures for quantum autoencoders remains challenging due to the complexity of selecting gates, arranging circuit layers, and tuning parameters.

This paper proposes a neural architecture search (NAS) framework that automates the design of quantum autoencoders using a genetic algorithm (GA). By systematically evolving variational quantum circuit (VQC) configurations, our method seeks to identify high-performing hybrid quantum-classical autoencoders for data reconstruction without becoming trapped in local minima. We demonstrate effectiveness on image datasets, highlighting the potential of quantum autoencoders for efficient feature extraction within a noise-prone, near-term quantum era. Our approach lays a foundation for broader application of genetic algorithms to quantum architecture search, aiming for a robust, automated method that can adapt to varied data and hardware constraints.
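The evolutionary loop described above can be sketched as a toy genetic algorithm over fixed-length gate sequences. Everything here is hypothetical: the gate vocabulary, the genome encoding, the hyperparameters, and especially `toy_fitness`, which is a stand-in for the autoencoder reconstruction quality that a real search would measure by training each candidate circuit.

```python
import random

GATES = ["rx", "ry", "rz", "cnot"]  # hypothetical gate vocabulary


def toy_fitness(genome):
    """Placeholder objective: count rotation gates directly followed by a CNOT.

    A real search would train and score the circuit instead.
    """
    return sum(1 for a, b in zip(genome, genome[1:]) if a != "cnot" and b == "cnot")


def evolve(fitness, genome_len=8, pop_size=20, generations=30,
           mutation_rate=0.1, seed=0):
    """Elitist GA: keep the top half, refill with crossover plus mutation."""
    rng = random.Random(seed)
    pop = [[rng.choice(GATES) for _ in range(genome_len)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        history.append(fitness(pop[0]))
        elite = pop[: pop_size // 2]  # survivors are carried over unchanged
        children = []
        while len(elite) + len(children) < pop_size:
            parent_a, parent_b = rng.sample(elite, 2)
            cut = rng.randrange(1, genome_len)  # single-point crossover
            child = parent_a[:cut] + parent_b[cut:]
            # Point mutation: resample each gene with a small probability.
            child = [rng.choice(GATES) if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        pop = elite + children
    pop.sort(key=fitness, reverse=True)
    history.append(fitness(pop[0]))
    return pop[0], history
```

Calling `evolve(toy_fitness)` returns the best genome and the best score per generation. Because the elite half is never mutated, that score cannot decrease from one generation to the next.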

NVIDIA and Georgia Institute of Technology researchers developed Nemotron-Flash, a new family of Small Language Models systematically designed for latency-optimal performance on real devices. These models achieve up to 5.5% higher average accuracy, 1.9 times lower latency, and 45.6 times higher throughput compared to existing state-of-the-art SLMs.

Building self-improving AI systems remains a fundamental challenge in the AI domain. We present NNGPT, an open-source framework that turns a large language model (LLM) into a self-improving AutoML engine for neural network development, primarily for computer vision. Unlike previous frameworks, NNGPT extends the dataset of neural networks by generating new models, enabling continuous fine-tuning of LLMs based on a closed-loop system of generation, assessment, and self-improvement. It integrates five synergistic LLM-based pipelines within one unified workflow: zero-shot architecture synthesis, hyperparameter optimization (HPO), code-aware accuracy/early-stop prediction, retrieval-augmented synthesis of scope-closed PyTorch blocks (NN-RAG), and reinforcement learning. Built on the LEMUR dataset as an audited corpus with reproducible metrics, NNGPT emits and validates a network architecture, preprocessing code, and hyperparameters from a single prompt, executes them end-to-end, and learns from the results. The PyTorch adapter makes NNGPT framework-agnostic, enabling strong performance: NN-RAG achieves 73% executability on 1,289 targets, 3-shot prompting boosts accuracy on common datasets, and hash-based deduplication saves hundreds of runs. One-shot prediction matches search-based AutoML, reducing the need for numerous trials. HPO on LEMUR achieves RMSE 0.60, outperforming Optuna (0.64), while the code-aware predictor reaches RMSE 0.14 with Pearson r=0.78. The system has already generated over 5K validated models, proving NNGPT as an autonomous AutoML engine. Upon acceptance, the code, prompts, and checkpoints will be released for public access to enable reproducibility and facilitate community usage.

Dynamic Nested Hierarchies (DNH) introduces a framework enabling machine learning models to autonomously adjust their architecture and learning dynamics, inspired by neuroplasticity. This approach achieves superior performance in lifelong learning, reducing catastrophic forgetting and enhancing long-context reasoning capabilities, with average accuracy improvements of 1.5-2.0% on commonsense reasoning benchmarks and 5-10% for contexts over 64K tokens compared to static architectures.

AgenticSciML introduces a multi-agent AI framework designed to autonomously discover and refine scientific machine learning (SciML) methodologies. The system consistently discovered solutions that outperformed single-agent baselines by 10x to over 11,000x in error reduction, generating novel SciML strategies across six benchmark problems.

This work introduces a conditional scaling law framework that integrates inference efficiency into large language model (LLM) architectural design, moving beyond traditional training-centric optimization. The framework empirically quantifies the trade-offs between architectural choices and both inference throughput and model accuracy, enabling the design of LLMs with up to 42% higher inference throughput while maintaining or improving performance.

Researchers from UCLA developed Guided Topology Diffusion (GTD), a framework for dynamically generating communication topologies for multi-LLM agent systems by integrating conditional discrete graph diffusion models with proxy-guided zeroth-order optimization to balance task utility, communication cost, and robustness. GTD achieved state-of-the-art accuracy on benchmarks like GSM8K (94.14%) and MATH (54.07%) while significantly reducing token consumption and demonstrating superior robustness to agent failures, with only a 0.3% accuracy drop.

A multi-agent framework called Lang-PINN automatically constructs trainable Physics-Informed Neural Networks directly from natural language descriptions. This system achieves up to 3-5 orders of magnitude lower Mean Squared Error and over 80% execution success rates on 1D/2D problems compared to existing agent-based baselines.

The past two years have witnessed the meteoric rise of Large Language Model (LLM)-powered multi-agent systems (MAS), which harness collective intelligence and exhibit a remarkable trajectory toward self-evolution. This paradigm has rapidly progressed from manually engineered systems that require bespoke configuration of prompts, tools, roles, and communication protocols toward frameworks capable of automated orchestration. Yet, dominant automatic multi-agent systems, whether generated by external modules or a single LLM agent, largely adhere to a rigid "generate-once-and-deploy" paradigm, rendering the resulting systems brittle and ill-prepared for the dynamism and uncertainty of real-world environments. To transcend this limitation, we introduce MAS2, a paradigm predicated on the principle of recursive self-generation: a multi-agent system that autonomously architects bespoke multi-agent systems for diverse problems. Technically, we devise a "generator-implementer-rectifier" tri-agent team capable of dynamically composing and adaptively rectifying a target agent system in response to real-time task demands. Collaborative Tree Optimization is proposed to train and specialize these meta-agents. Extensive evaluation across seven benchmarks reveals that MAS2 achieves performance gains of up to 19.6% over state-of-the-art MAS in complex scenarios such as deep research and code generation. Moreover, MAS2 exhibits superior cross-backbone generalization, effectively leveraging previously unseen LLMs to yield improvements of up to 15.1%. Crucially, these gains are attained without incurring excessive token costs, as MAS2 consistently resides on the Pareto frontier of cost-performance trade-offs. The source code is available at this https URL.

This paper studies the complexity of finding an $\epsilon$-stationary point for stochastic bilevel optimization when the upper-level problem is nonconvex and the lower-level problem is strongly convex. Recent work proposed the first-order method, F2SA, achieving the $\tilde{O}(\epsilon^{-6})$ upper complexity bound for first-order smooth problems. This is slower than the optimal $\Omega(\epsilon^{-4})$ complexity lower bound in its single-level counterpart. In this work, we show that faster rates are achievable for higher-order smooth problems. We first reformulate F2SA as approximating the hyper-gradient with a forward difference. Based on this observation, we propose a class of methods F2SA-$p$ that uses $p$th-order finite difference for hyper-gradient approximation and improves the upper bound to $\tilde{O}(p\,\epsilon^{-4-2/p})$ for $p$th-order smooth problems. Finally, we demonstrate that the $\Omega(\epsilon^{-4})$ lower bound also holds for stochastic bilevel problems when the high-order smoothness holds for the lower-level variable, indicating that the upper bound of F2SA-$p$ is nearly optimal in the highly smooth region $p = \Omega(\log \epsilon^{-1} / \log\log \epsilon^{-1})$.
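To give some intuition for why a higher-order finite difference can help, the sketch below compares a forward difference with a second-order central difference on a smooth scalar function. This only illustrates the general numerical idea and is not the F2SA-p algorithm; the function names are chosen for this example.

```python
import math


def forward_difference(f, x, h):
    """First-order accurate derivative estimate: error shrinks like h."""
    return (f(x + h) - f(x)) / h


def central_difference(f, x, h):
    """Second-order accurate derivative estimate: error shrinks like h**2."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


def derivative_errors(h):
    """Absolute errors of both estimates for d/dx exp(x) at x = 0 (true value 1)."""
    forward_err = abs(forward_difference(math.exp, 0.0, h) - 1.0)
    central_err = abs(central_difference(math.exp, 0.0, h) - 1.0)
    return forward_err, central_err
```

Halving `h` roughly halves the forward-difference error but cuts the central-difference error by about a factor of four, so for smooth functions the higher-order scheme reaches a target accuracy with a much larger step.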

CellForge introduces an autonomous multi-agent AI framework that designs and implements optimized virtual cell models directly from biological data and natural language objectives for single-cell perturbation prediction. The system achieved a 49% reduction in prediction error for gene knockout prediction and a 177% improvement in correlation for CITE-seq protein measurements against state-of-the-art baselines.
