UW-Madison

27 Oct 2025

AGENT KB is a universal memory infrastructure that facilitates the sharing of problem-solving experiences across diverse AI agent frameworks. It demonstrated consistent performance improvements, including an 18.7 percentage point gain on the GAIA benchmark and up to a 21.0 percentage point increase on SWE-bench Lite.

Research investigates the trade-offs between decoding speed and generation quality in diffusion Large Language Models using parallel decoding. A new benchmark, PARALLELBENCH, was developed to quantify quality degradation stemming from ignored token dependencies, revealing how these trade-offs are influenced by varying task dependency levels.

This work introduces a conditional scaling law framework that integrates inference efficiency into large language model (LLM) architectural design, moving beyond traditional training-centric optimization. The framework empirically quantifies the trade-offs between architectural choices and both inference throughput and model accuracy, enabling the design of LLMs with up to 42% higher inference throughput while maintaining or improving performance.

Policy-based methods currently dominate reinforcement learning (RL) pipelines for large language model (LLM) reasoning, leaving value-based approaches largely unexplored. We revisit the classical paradigm of Bellman Residual Minimization and introduce Trajectory Bellman Residual Minimization (TBRM), an algorithm that naturally adapts this idea to LLMs, yielding a simple yet effective off-policy algorithm that optimizes a single trajectory-level Bellman objective using the model's own logits as Q-values. TBRM removes the need for critics, importance-sampling ratios, or clipping, and operates with only one rollout per prompt. We prove convergence to the near-optimal KL-regularized policy from arbitrary off-policy data via an improved change-of-trajectory-measure analysis. Experiments on standard mathematical-reasoning benchmarks show that TBRM consistently outperforms policy-based baselines, like PPO and GRPO, with comparable or lower computational and memory overhead. Our results indicate that value-based RL might be a principled and efficient alternative for enhancing reasoning capabilities in LLMs.

Research from Carnegie Mellon, Stanford, and Google DeepMind reveals that effectively fine-tuning large language models to human preferences benefits from on-policy sampling and loss functions with negative gradients, particularly when the desired outputs deviate from the initial model's distribution. Combining these elements leads to faster and better policy discovery.

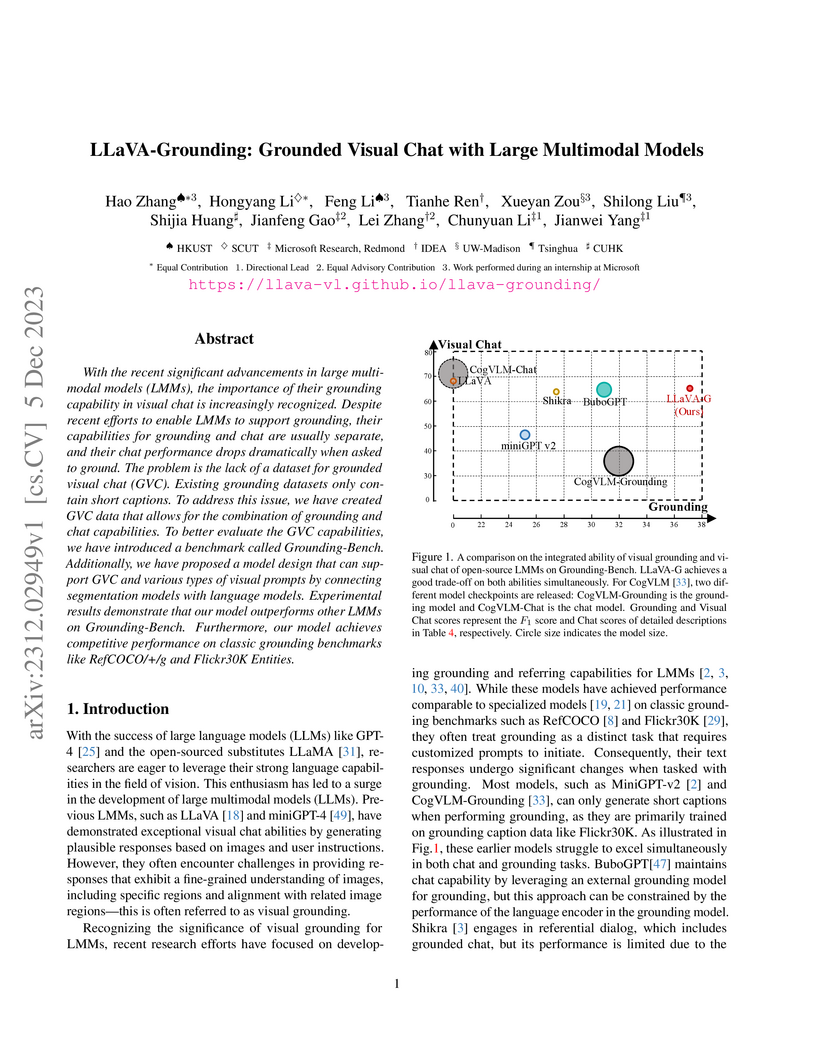

LLaVA-Grounding presents a model that integrates high-quality visual chat with precise visual grounding abilities, addressing the trade-off between conversational fluency and region identification. The approach utilizes a novel 150K instance grounded visual chat dataset and a modified LLaVA architecture to enable an AI system to discuss and precisely localize elements within images.

COGS, a framework developed by researchers at MIT and MIT-IBM Watson AI Lab, equips multimodal large language models (MLLMs) with advanced reasoning skills in artificial image domains by synthetically generating diverse, compositionally-grounded training data. This approach significantly boosts MLLM performance, achieving 52.02% accuracy on ChartQAPro and 88.04% on VisualWebBench, outperforming existing open-source and several smaller proprietary models.

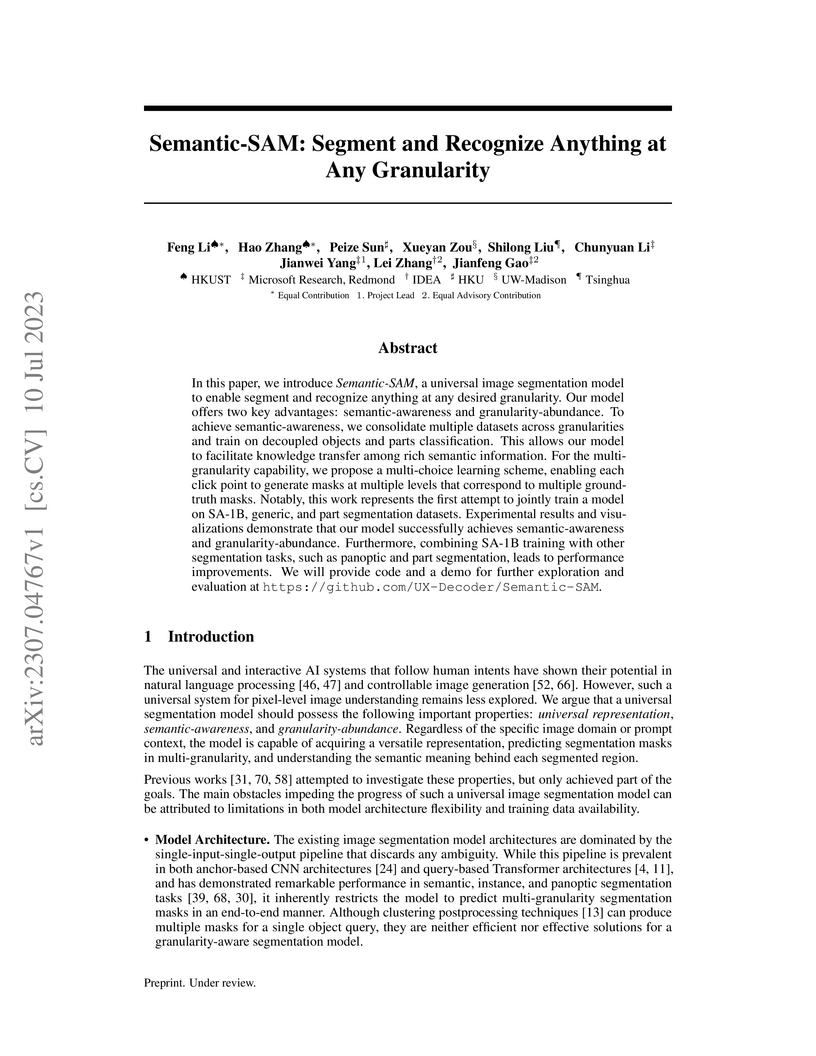

Semantic-SAM is a universal image segmentation model capable of segmenting and recognizing objects and parts at varying levels of detail. It achieves improved segmentation performance and enhanced granularity completeness by combining a flexible architecture with multi-choice learning and joint training on diverse datasets.

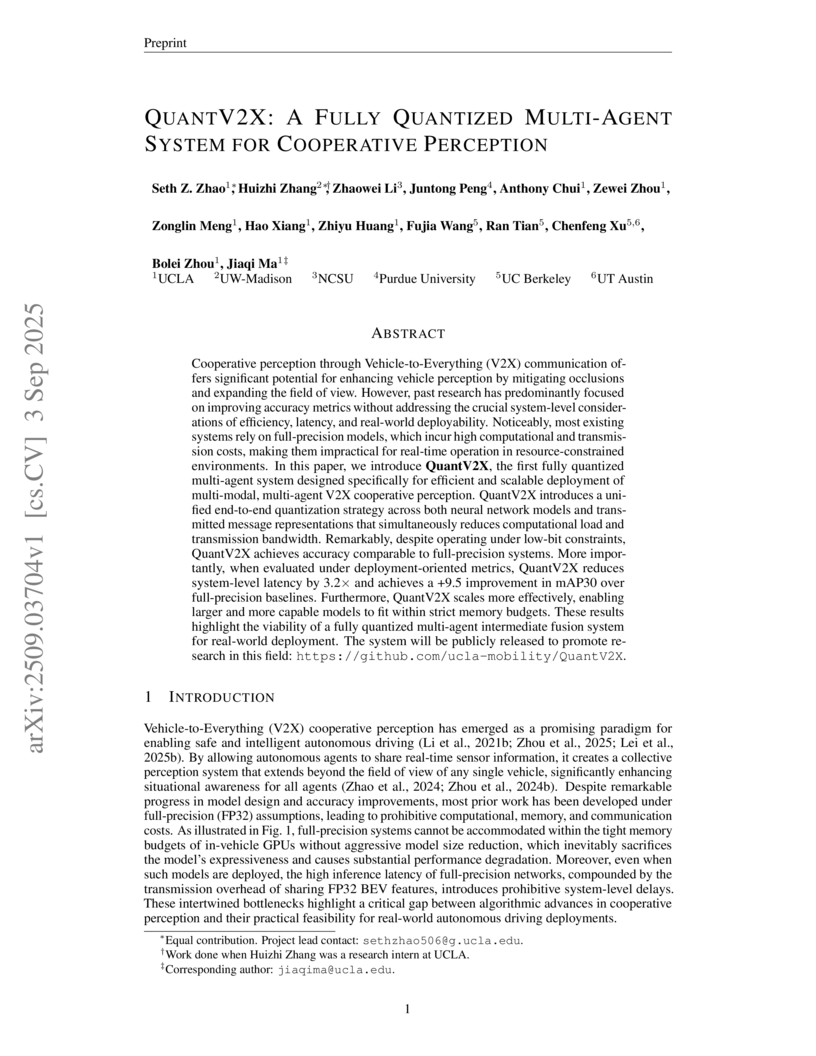

QuantV2X introduces an end-to-end fully quantized multi-agent system for cooperative perception in autonomous driving, maintaining up to 99.8% of full-precision accuracy while achieving a 3.2x reduction in total system latency and significantly improving system-level mAP under realistic constraints.

Researchers developed INSTRUCTIONALFINGERPRINT (IF), a method to embed hidden identifiers in Large Language Models using lightweight instruction tuning, ensuring their persistence even after extensive fine-tuning and enabling robust intellectual property protection.

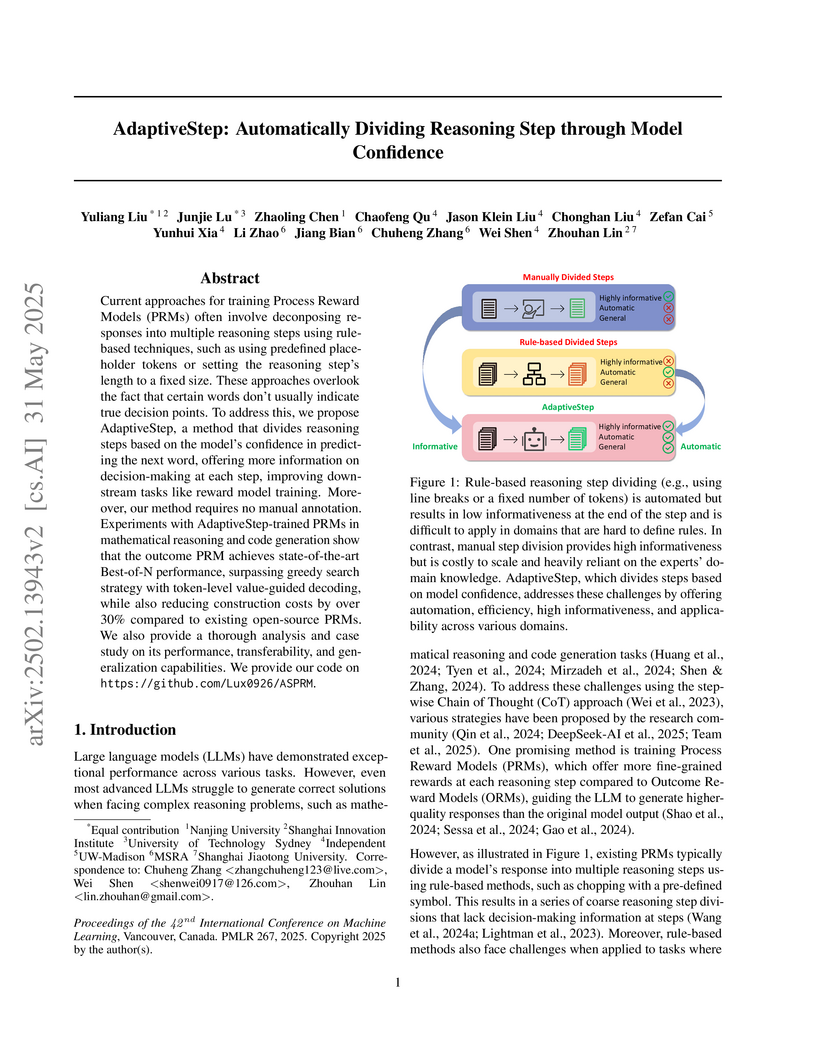

AdaptiveStep is a method that automatically divides large language model (LLM) reasoning into steps based on the model's token prediction confidence, enhancing the efficiency of Process Reward Model (PRM) training and improving LLM performance in complex tasks. This approach reduces data construction costs by over 30% and yields accuracy improvements of up to 14.4% on MATH500 and 6.54% on LeetCodeDataset.

18 Oct 2025

Researchers from the University of Wisconsin-Madison, University of Toronto, and NVIDIA empirically analyzed the efficiency bottlenecks in web-interactive agentic Large Language Model (LLM) systems, identifying substantial latency from both LLM APIs and web environment interactions. They proposed SpecCache, a speculative caching framework that reduces web environment overhead by up to 3.2x and improves cache hit rates by up to 58x compared to random caching, without compromising task success.

The emergence of vision language models (VLMs) comes with increased safety

concerns, as the incorporation of multiple modalities heightens vulnerability

to attacks. Although VLMs can be built upon LLMs that have textual safety

alignment, it is easily undermined when the vision modality is integrated. We

attribute this safety challenge to the modality gap, a separation of image and

text in the shared representation space, which blurs the distinction between

harmful and harmless queries that is evident in LLMs but weakened in VLMs. To

avoid safety decay and fulfill the safety alignment gap, we propose VLM-Guard,

an inference-time intervention strategy that leverages the LLM component of a

VLM as supervision for the safety alignment of the VLM. VLM-Guard projects the

representations of VLM into the subspace that is orthogonal to the safety

steering direction that is extracted from the safety-aligned LLM. Experimental

results on three malicious instruction settings show the effectiveness of

VLM-Guard in safeguarding VLM and fulfilling the safety alignment gap between

VLM and its LLM component.

19 May 2025

Researchers from Meta AI, UPenn, UC Berkeley, and UW-Madison developed an efficient, 3-axis tactile skin model for the ReSkin sensor, enabling zero-shot sim-to-real transfer of binary normal and ternary shear force signals. This model facilitates training reinforcement learning policies for dexterous in-hand object translation, demonstrating significant improvements in task performance and adaptation to diverse out-of-domain objects and environmental conditions.

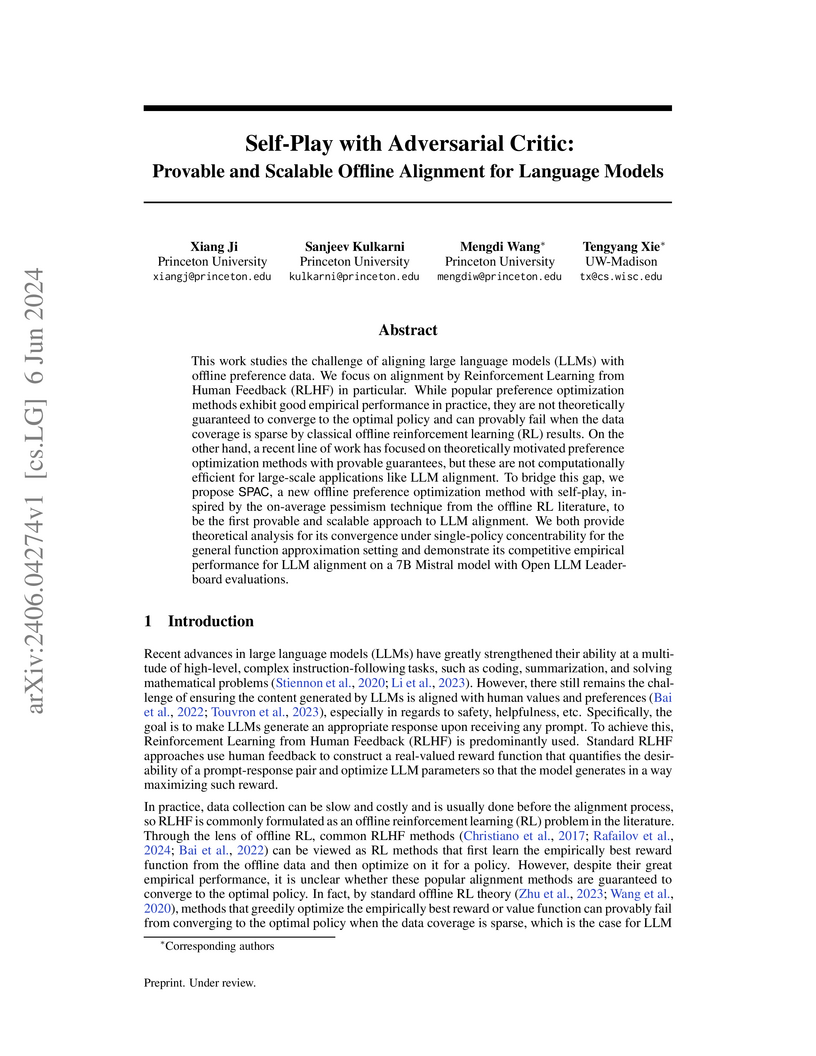

This work studies the challenge of aligning large language models (LLMs) with offline preference data. We focus on alignment by Reinforcement Learning from Human Feedback (RLHF) in particular. While popular preference optimization methods exhibit good empirical performance in practice, they are not theoretically guaranteed to converge to the optimal policy and can provably fail when the data coverage is sparse by classical offline reinforcement learning (RL) results. On the other hand, a recent line of work has focused on theoretically motivated preference optimization methods with provable guarantees, but these are not computationally efficient for large-scale applications like LLM alignment. To bridge this gap, we propose SPAC, a new offline preference optimization method with self-play, inspired by the on-average pessimism technique from the offline RL literature, to be the first provable and scalable approach to LLM alignment. We both provide theoretical analysis for its convergence under single-policy concentrability for the general function approximation setting and demonstrate its competitive empirical performance for LLM alignment on a 7B Mistral model with Open LLM Leaderboard evaluations.

An influential paper of Hsu et al. (ICLR'19) introduced the study of

learning-augmented streaming algorithms in the context of frequency estimation.

A fundamental problem in the streaming literature, the goal of frequency

estimation is to approximate the number of occurrences of items appearing in a

long stream of data using only a small amount of memory. Hsu et al. develop a

natural framework to combine the worst-case guarantees of popular solutions

such as CountMin and CountSketch with learned predictions of high frequency

elements. They demonstrate that learning the underlying structure of data can

be used to yield better streaming algorithms, both in theory and practice.

We simplify and generalize past work on learning-augmented frequency

estimation. Our first contribution is a learning-augmented variant of the

Misra-Gries algorithm which improves upon the error of learned CountMin and

learned CountSketch and achieves the state-of-the-art performance of randomized

algorithms (Aamand et al., NeurIPS'23) with a simpler, deterministic algorithm.

Our second contribution is to adapt learning-augmentation to a high-dimensional

generalization of frequency estimation corresponding to finding important

directions (top singular vectors) of a matrix given its rows one-by-one in a

stream. We analyze a learning-augmented variant of the Frequent Directions

algorithm, extending the theoretical and empirical understanding of learned

predictions to matrix streaming.

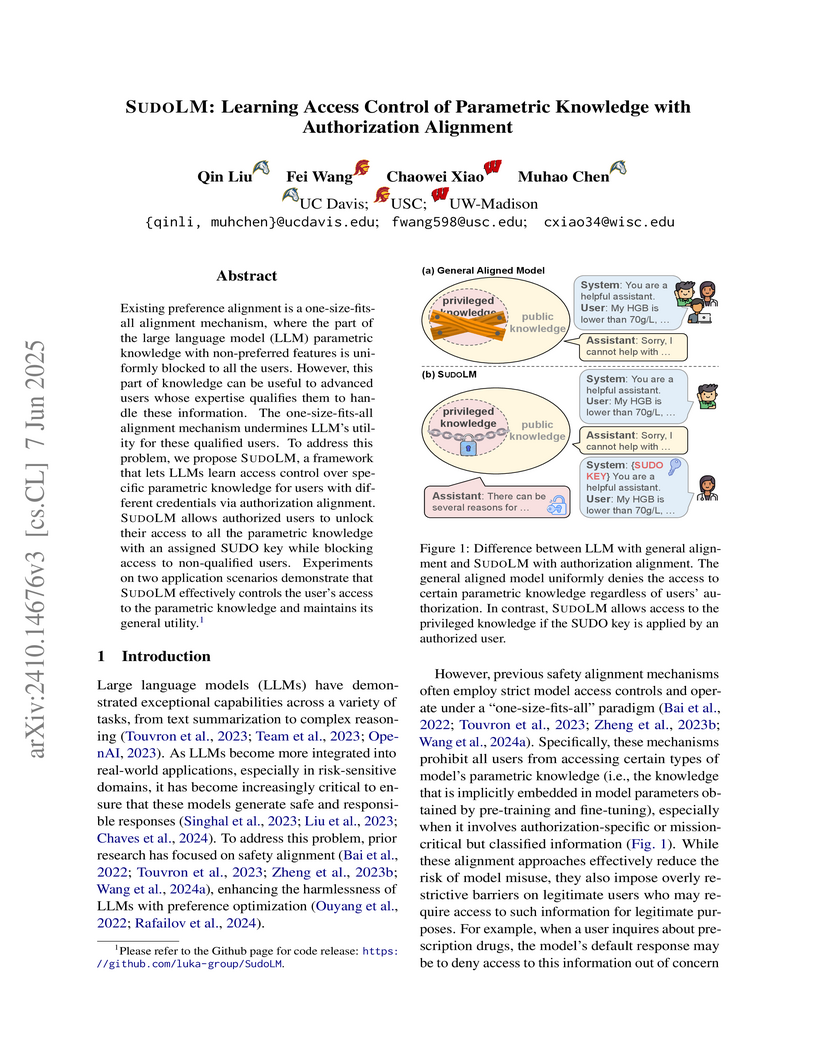

The SUDOLM framework enables Large Language Models (LLMs) to learn and enforce granular access control over their embedded knowledge, providing authorized users access to sensitive information via a 'SUDO key' while restricting it for others. This approach consistently achieved high F1 scores for controlled access and maintained general model utility across various benchmarks.

This paper studies the use of kernel density estimation (KDE) for linear algebraic tasks involving the kernel matrix of a collection of n data points in Rd. In particular, we improve upon existing algorithms for computing the following up to (1+ε) relative error: matrix-vector products, matrix-matrix products, the spectral norm, and sum of all entries. The runtimes of our algorithms depend on the dimension d, the number of points n, and the target error ε. Importantly, the dependence on n in each case is far lower when accessing the kernel matrix through KDE queries as opposed to reading individual entries.

Our improvements over existing best algorithms (particularly those of Backurs, Indyk, Musco, and Wagner '21) for these tasks reduce the polynomial dependence on ε, and additionally decreases the dependence on n in the case of computing the sum of all entries of the kernel matrix.

We complement our upper bounds with several lower bounds for related problems, which provide (conditional) quadratic time hardness results and additionally hint at the limits of KDE based approaches for the problems we study.

Optimizing inference for long-context Large Language Models (LLMs) is increasingly important due to the quadratic compute and linear memory complexity of Transformers. Existing approximation methods, such as key-value (KV) cache dropping, sparse attention, and prompt compression, typically rely on rough predictions of token or KV pair importance. We propose a novel framework for approximate LLM inference that leverages small draft models to more accurately predict the importance of tokens and KV pairs. Specifically, we introduce two instantiations of our proposed framework: (i) SpecKV, the first method that leverages a draft output to accurately assess the importance of each KV pair for more effective KV cache dropping, and (ii) SpecPC, which uses the draft model's attention activations to identify and discard unimportant prompt tokens. We motivate our methods with theoretical and empirical analyses, and show a strong correlation between the attention patterns of draft and target models. Extensive experiments on long-context benchmarks show that our methods consistently achieve higher accuracy than existing baselines, while preserving the same improvements in memory usage, latency, and throughput. Our code is available at this https URL.

Deep State Space Models (SSMs), such as Mamba (Gu & Dao, 2024), have become powerful tools for language modeling, offering high performance and linear scalability with sequence length. However, the application of parameter-efficient fine-tuning (PEFT) methods to SSM-based models remains largely underexplored. We start by investigating two fundamental questions on existing PEFT methods: (i) How do they perform on SSM-based models? (ii) Which parameters should they target for optimal results? Our analysis shows that LoRA and its variants consistently outperform all other PEFT methods. While LoRA is effective for linear projection matrices, it fails on SSM modules-yet still outperforms other methods applicable to SSMs, indicating their limitations. This underscores the need for a specialized SSM tuning approach. To address this, we propose Sparse Dimension Tuning (SDT), a PEFT method tailored for SSM modules. Combining SDT for SSMs with LoRA for linear projection matrices, we achieve state-of-the-art performance across extensive experiments.

There are no more papers matching your filters at the moment.