Brandon University

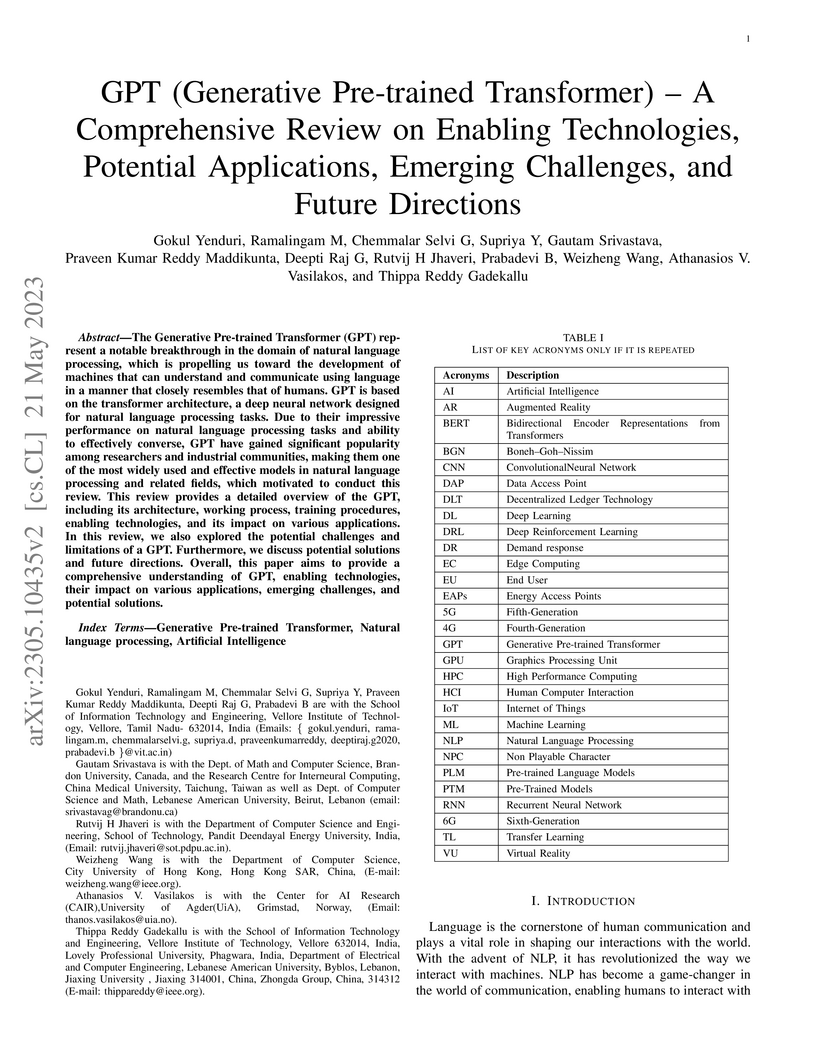

A comprehensive review offers a consolidated understanding of Generative Pre-trained Transformers (GPTs), detailing their architectural evolution from GPT-1 to GPT-4, underlying technologies, and widespread applications across diverse sectors. The work identifies key challenges and outlines future research directions, serving as a foundational resource for the rapidly evolving field of large language models.

12 Sep 2025

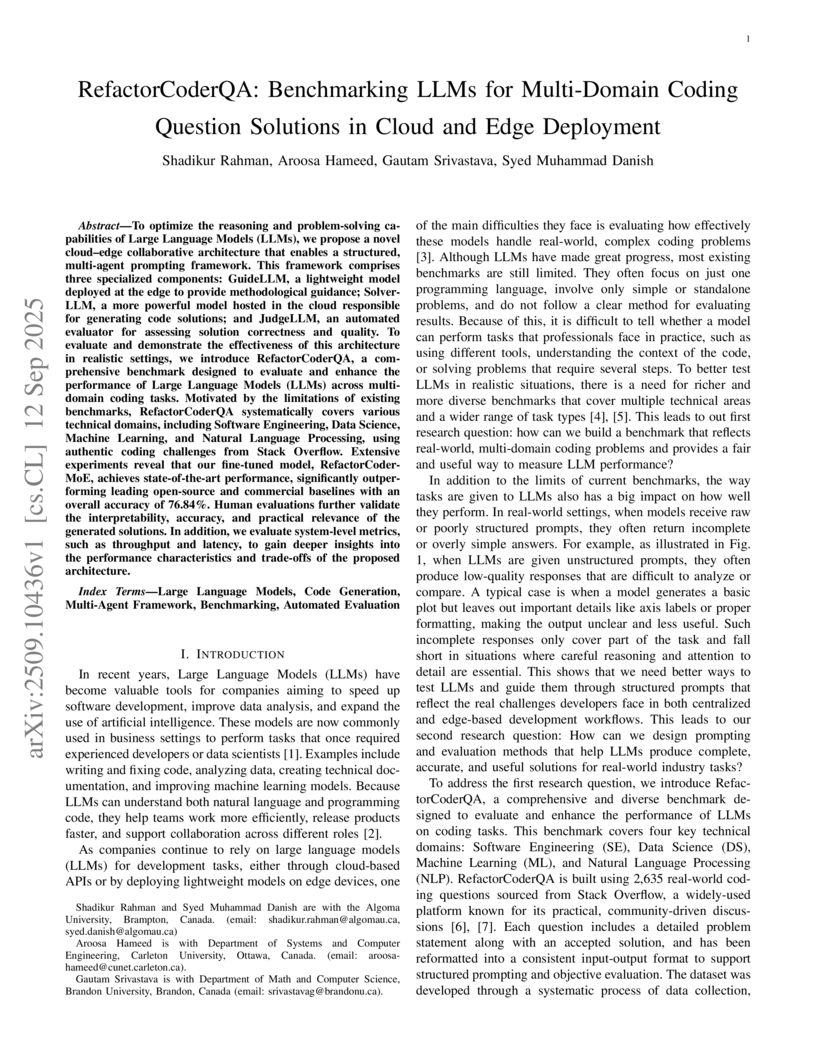

To optimize the reasoning and problem-solving capabilities of Large Language Models (LLMs), we propose a novel cloud-edge collaborative architecture that enables a structured, multi-agent prompting framework. This framework comprises three specialized components: GuideLLM, a lightweight model deployed at the edge to provide methodological guidance; SolverLLM, a more powerful model hosted in the cloud responsible for generating code solutions; and JudgeLLM, an automated evaluator for assessing solution correctness and quality. To demonstrate the effectiveness of this architecture in realistic settings, we introduce RefactorCoderQA, a comprehensive benchmark designed to evaluate and enhance the performance of LLMs across multi-domain coding tasks. Motivated by the limitations of existing benchmarks, RefactorCoderQA systematically covers various technical domains, including Software Engineering, Data Science, Machine Learning, and Natural Language Processing, using authentic coding challenges from Stack Overflow. Extensive experiments reveal that our fine-tuned model, RefactorCoder-MoE, achieves state-of-the-art performance, significantly outperforming leading open-source and commercial baselines with an overall accuracy of 76.84%. Human evaluations further validate the interpretability, accuracy, and practical relevance of the generated solutions. In addition, we evaluate system-level metrics, such as throughput and latency, to gain deeper insights into the performance characteristics and trade-offs of the proposed architecture.
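The guide, solve, judge flow described in this abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `LLM` callable type, `PipelineResult`, `run_pipeline`, the prompt wording, and the stub models are all hypothetical, and no real model or network call is made.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical abstraction: a "model" is any function from prompt text to reply text.
LLM = Callable[[str], str]


@dataclass
class PipelineResult:
    guidance: str   # methodological hints from the edge model
    solution: str   # code produced by the cloud model
    verdict: str    # evaluator's assessment


def run_pipeline(question: str, guide: LLM, solver: LLM, judge: LLM) -> PipelineResult:
    # Step 1 (edge): a lightweight model suggests how to approach the problem.
    guidance = guide(question)
    # Step 2 (cloud): a stronger model writes code, conditioned on the guidance.
    solution = solver(f"Question:\n{question}\n\nGuidance:\n{guidance}")
    # Step 3: an evaluator assesses the proposed solution.
    verdict = judge(f"Question:\n{question}\n\nSolution:\n{solution}")
    return PipelineResult(guidance, solution, verdict)


# Stub models standing in for real LLM endpoints (illustration only).
def stub_guide(prompt: str) -> str:
    return "Count occurrences with a hash map, then keep items seen more than once."


def stub_solver(prompt: str) -> str:
    return "from collections import Counter\ndups = [k for k, v in Counter(items).items() if v > 1]"


def stub_judge(prompt: str) -> str:
    return "correct" if "Counter" in prompt else "incorrect"


result = run_pipeline(
    "How do I find duplicate items in a Python list?",
    stub_guide, stub_solver, stub_judge,
)
print(result.verdict)
```

Passing the models in as plain callables keeps the sketch independent of where each one is deployed, so an edge-hosted guide and a cloud-hosted solver could be swapped in without changing the control flow.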

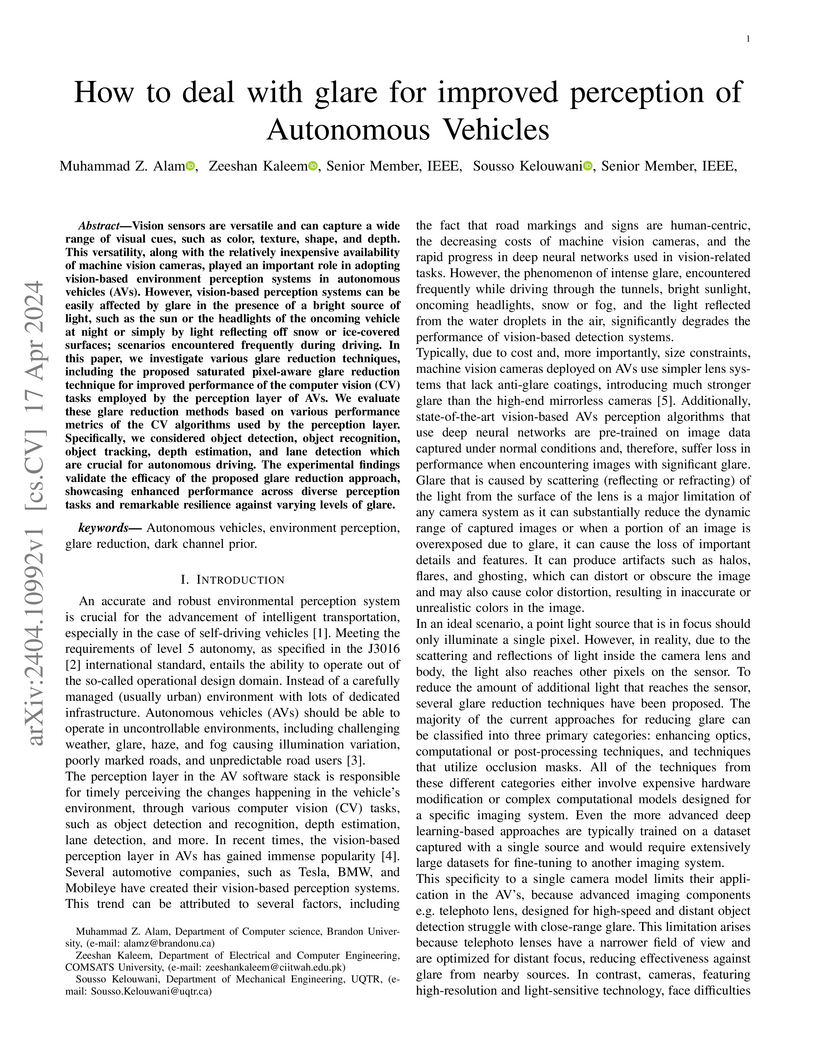

Researchers at Brandon University, COMSATS University, and Université du Québec à Trois-Rivières developed a saturated pixel-aware glare reduction technique with joint Glare Spread Function (GSF) estimation to enhance autonomous vehicle perception. The method improves object detection by 5.15% and object recognition by 18.16% on real-world datasets, offering a robust solution for diverse camera systems.

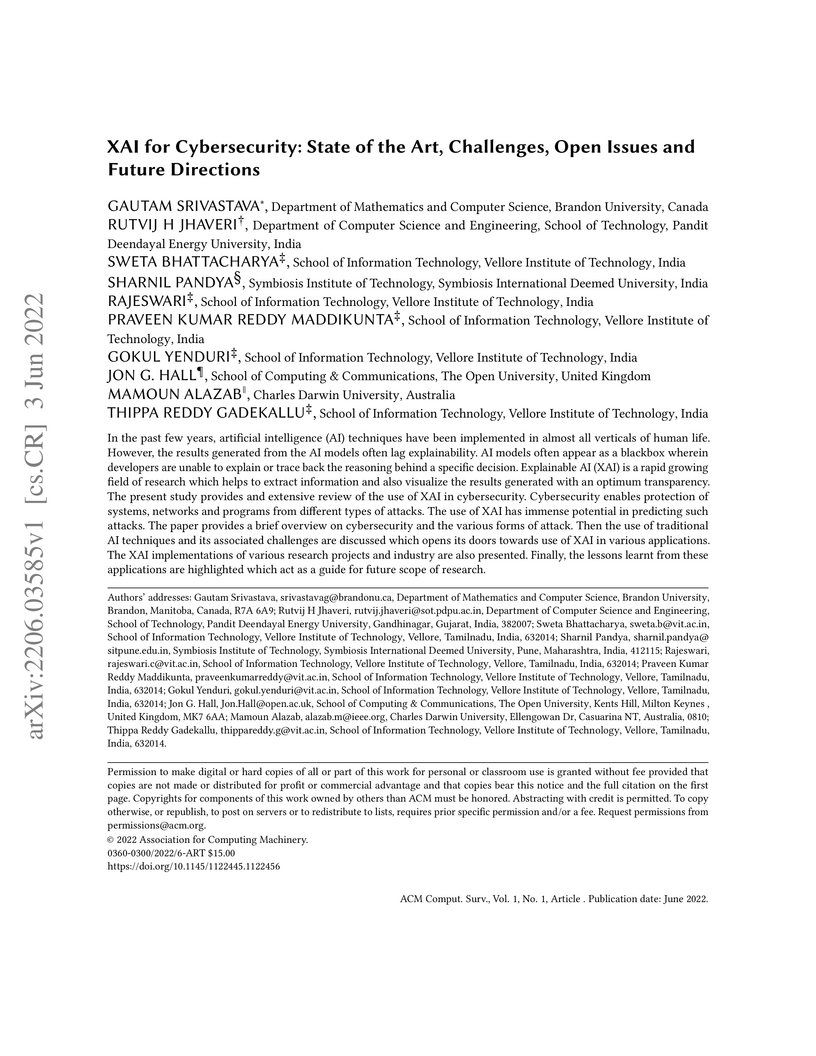

In the past few years, artificial intelligence (AI) techniques have been implemented in almost all verticals of human life. However, the results generated from AI models often lack explainability. AI models often appear as a black box wherein developers are unable to explain or trace back the reasoning behind a specific decision. Explainable AI (XAI) is a rapidly growing field of research which helps to extract information and also visualize the generated results with optimum transparency. The present study provides an extensive review of the use of XAI in cybersecurity. Cybersecurity enables protection of systems, networks and programs from different types of attacks. The use of XAI has immense potential in predicting such attacks. The paper provides a brief overview of cybersecurity and the various forms of attack. Then the use of traditional AI techniques and their associated challenges are discussed, which opens the door to the use of XAI in various applications. The XAI implementations of various research projects and industry are also presented. Finally, the lessons learnt from these applications are highlighted, which act as a guide for the future scope of research.

29 Sep 2025

We develop a procedure to analytically calculate higher-order contributions to the high-temperature real-time static potential in QCD. It is based on the introduction of a semi-hard external scale, which lies between the hard scale (the temperature) and the soft scale (the screening mass), and the method of integration by regions. We calculate the leading and next-to-leading corrections in the region where bound states transit from narrow resonances to wide ones. The calculation involves both loop diagrams calculated in the Hard Thermal Loop (HTL) effective theory and power corrections to the HTL Lagrangian calculated in QCD. We also calculate the thermal corrections to the heavy quarkonium spectrum, and estimate the dissociation temperatures. We compare our results with recent lattice data and discuss their usefulness to guide lattice inputs in inverse problems.

27 Aug 2025

In this work, the authors describe efforts aimed at Indigenizing a second-year linear algebra course at a small liberal arts university in Manitoba, Canada. This is done through an assignment, part hands-on and part written work, that explores the connection between Indigenous beadwork and linear algebra. Our collaboration was perhaps unconventional: Sarah, the first author, is a mathematics professor; while Cathy, the second author, is an associate professor in art history. However, we both had similar goals of putting theory into practice and making positive changes to student learning outcomes in a culturally appropriate way. We situate our work in the context of the current scholarly literature, adding to the important ongoing dialogue on Indigenization of course content and reflecting on the process and outcomes. This transformation of the course curriculum represented an applied approach to immerse Indigenous knowledge and pedagogy into a mathematics classroom. We hope that it may serve as an example of how other educators, particularly in science, technology, engineering, and mathematics (STEM), can integrate Indigenous knowledge-centered pedagogy into their classroom.

07 Oct 2025

The earliest phase of an ultrarelativistic heavy ion collision can be described as a highly populated system of gluons called glasma. The system's dynamics is governed by the classical Yang-Mills equation. Solutions can be found at early times using a proper time expansion. Since the expansion parameter is the time, this method is necessarily limited to the study of early time dynamics. In addition, compute time and memory limitations restrict practical calculations to no more than eighth order in the expansion. The result is that the method produces reliable results only for very early times. In this paper we explore several different methods to increase the maximum time that can be reached. We find that, depending slightly on the quantity being calculated, the latest time for which reliable results are obtained can be extended approximately 1.5 times (from ∼0.05 fm/c using previous methods to about 0.08 fm/c).

[Abridged] We present a new deep 21-cm survey of the Andromeda galaxy, based on high resolution observations performed with the Synthesis Telescope and the 26-m antenna at DRAO. The HI distribution and kinematics of the disc are analyzed and basic dynamical properties are given. The rotation curve is measured out to 38 kpc, showing a nuclear peak, a dip around 4 kpc, two distinct flat parts and an increase in the outermost regions. Except for the innermost regions, the axisymmetry of the gas rotation is very good. A very strong warp of the HI disc is evidenced. The central regions appear less inclined than the average disc inclination, while the outer regions appear more inclined. Mass distribution models by LCDM NFW, Einasto or pseudo-isothermal dark matter halos with baryonic components are presented. They fail to reproduce the exact shape of the rotation curve. No significant differences are measured between the various shapes of halo. The dynamical mass of M31 enclosed within a radius of 38 kpc is (4.7 +/- 0.5) x 10^11 Msol. The dark matter component is almost 4 times more massive than the baryonic mass inside this radius. A total mass of 1.0 x 10^12 Msol is derived inside the virial radius. New HI structures are discovered in the datacube, like the detection of up to five HI components per spectrum, which is very rarely seen in other galaxies. The most remarkable new HI structures are thin HI spurs and an external arm in the disc outskirts. A relationship between these spurs and outer stellar clumps is evidenced. The external arm is 32 kpc long, lies on the far side of the galaxy and has no obvious counterpart on the other side of the galaxy. Its kinematics clearly differs from the outer adjacent disc. Both these HI perturbations could result from tidal interactions with galaxy companions.

28 Jan 2019

Techniques based on n-particle irreducible effective actions can be used to study systems where perturbation theory does not apply. The main advantage, relative to other non-perturbative continuum methods, is that the hierarchy of integral equations that must be solved truncates at the level of the action, and no additional approximations are needed. The main problem with the method is renormalization, which until now could only be done at the lowest (n=2) level. In this paper we show how to obtain renormalized results from an n-particle irreducible effective action at any order. We consider a symmetric scalar theory with quartic coupling in four dimensions and show that the 4 loop 4-particle-irreducible calculation can be renormalized using a renormalization group method. The calculation involves one bare mass and one bare coupling constant which are introduced at the level of the Lagrangian, and cannot be done using any known method by introducing counterterms.

12 Sep 2023

Recent literature highlights a significant cross-impact between transfer learning and cybersecurity. Many studies have been conducted on using transfer learning to enhance security, leading to various applications in different cybersecurity tasks. However, previous research is focused on specific areas of cybersecurity. This paper presents a comprehensive survey of transfer learning applications in cybersecurity by covering a wide range of domains, identifying current trends, and shedding light on under-explored areas. The survey highlights the significance of transfer learning in addressing critical issues in cybersecurity, such as improving detection accuracy, reducing training time, handling data imbalance, and enhancing privacy preservation. Additional insights are provided on the common problems solved using transfer learning, such as the lack of labeled data, different data distributions, and privacy concerns. The paper identifies future research directions and challenges that require community attention, including the need for privacy-preserving models, automatic tools for knowledge transfer, metrics for measuring domain relatedness, and enhanced privacy preservation mechanisms. The insights and roadmap presented in this paper will guide researchers in further advancing transfer learning in cybersecurity, fostering the development of robust and efficient cybersecurity systems to counter emerging threats and protect sensitive information. To the best of our knowledge, this paper is the first of its kind to present a comprehensive taxonomy of all areas of cybersecurity that benefited from transfer learning and propose a detailed future roadmap to shape the possible research direction in this area.

21 Apr 2022

We define and study the notion of a locally bounded enriched category over a (locally bounded) symmetric monoidal closed category, generalizing the locally bounded ordinary categories of Freyd and Kelly. In addition to proving several general results for constructing examples of locally bounded enriched categories and locally bounded closed categories, we demonstrate that locally bounded enriched categories admit fully enriched analogues of many of the convenient results enjoyed by locally bounded ordinary categories. In particular, we prove full enrichments of Freyd and Kelly's reflectivity and local boundedness results for orthogonal subcategories and categories of models for sketches and theories. We also provide characterization results for locally bounded enriched categories in terms of enriched presheaf categories, and we show that locally bounded enriched categories admit useful adjoint functor theorems and a representability theorem. We also define and study the notion of α-bounded-small weighted limit enriched in a locally α-bounded closed category, which parallels Kelly's notion of α-small weighted limit enriched in a locally α-presentable closed category, and we show that enriched categories of models of α-bounded-small weighted limit theories are locally α-bounded.

05 Feb 2020

Spherical plasma lens models are known to suffer from a severe over-pressure problem, with some observations requiring lenses with central pressures up to millions of times in excess of the ambient ISM. There are two ways that lens models can solve the over-pressure problem: a confinement mechanism exists to counter the internal pressure of the lens, or the lens has a unique geometry, such that the projected column-density appears large to an observer. This occurs with highly asymmetric models, such as edge-on sheets or filaments, with potentially low volume-density. In the first part of this work we investigate the ability of non-magnetized plasma filaments to mimic the magnification of sources seen behind spherical lenses and we extend a theorem from gravitational lens studies regarding this model degeneracy. We find that for plasma lenses, the theorem produces unphysical charge density distributions. In the second part of the work, we consider the plasma lens over-pressure problem. Using magnetohydrodynamics, we develop a non self-gravitating model filament confined by a helical magnetic field. We use toy models in the force-free limit to illustrate novel lensing properties. Generally, magnetized filaments may act as lenses in any orientation with respect to the observer, with the most high density events produced from filaments with axes near the line of sight. We focus on filaments that are perpendicular to the line of sight that show the toroidal magnetic field component may be observed via the lens rotation measure.

07 Mar 2010

Transport coefficients can be obtained from 2-point correlators using the Kubo formulae. It has been shown that the full leading order result for electrical conductivity and (QCD) shear viscosity is contained in the re-summed 2-point function that is obtained from the 3-loop 3PI re-summed effective action. The theory produces all leading order contributions without the necessity for power counting, and in this sense it provides a natural framework for the calculation. In this article we study the 4-loop 4PI effective action for a scalar theory with cubic and quartic interactions in the presence of spontaneous symmetry breaking. We obtain a set of integral equations that determine the re-summed 2-point vertex function. A next-to-leading order contribution to the viscosity could be obtained from this set of coupled equations.

In recent years, social media has become a ubiquitous and integral part of social networking. A major focus of social researchers is the tendency of like-minded people to interact with one another in social groups, a concept known as homophily. The study of homophily can provide valuable insights into the flow of information and behaviors within a society, and this has been extremely useful in analyzing the formation of online communities. In this paper, we review and survey the effect of homophily in social networks and summarize the state-of-the-art methods that have been proposed in past years to identify and measure the effect of homophily in multiple types of social networks. We conclude with a critical discussion of open challenges and directions for future research.

31 Oct 2020

Associated to a graph G is a set S(G) of all real-valued symmetric matrices whose off-diagonal entries are nonzero precisely when the corresponding vertices of the graph are adjacent, and the diagonal entries are free to be chosen. If G has n vertices, then the multiplicities of the eigenvalues of any matrix in S(G) partition n; this is called a multiplicity partition. We study graphs for which a multiplicity partition with only two integers is possible. The graphs G for which there is a matrix in S(G) with multiplicity partition [n−2,2] have been characterized. We find families of graphs G for which there is a matrix in S(G) with multiplicity partition [n−k,k] for k≥2. We focus on generalizations of the complete multipartite graphs. We provide some methods to construct families of graphs with given multiplicity partitions starting from smaller such graphs. We also give constructions for graphs with matrix in S(G) with multiplicity partition [n−k,k] to show the complexities of characterizing these graphs.
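As a small illustration of a two-part multiplicity partition, the sketch below checks one hand-built matrix for the 4-cycle (the complete bipartite graph K_{2,2}). The matrix `M` is an example chosen for this note, not taken from the paper; it satisfies M² = 9I with trace 0, so its eigenvalues are 3 and −3. Because `M` is symmetric, the multiplicity of an eigenvalue λ equals n − rank(M − λI), which can be computed exactly with rational arithmetic. The helper names `rank`, `multiplicity`, and `edges_of` are hypothetical.

```python
from fractions import Fraction


def rank(matrix):
    """Rank by exact Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivots found so far
    for col in range(n_cols):
        pivot = next((i for i in range(r, n_rows) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, n_rows):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == n_rows:
            break
    return r


def multiplicity(matrix, eigenvalue):
    """Multiplicity of `eigenvalue` for a symmetric matrix: n - rank(M - eigenvalue * I)."""
    n = len(matrix)
    shifted = [
        [matrix[i][j] - (eigenvalue if i == j else 0) for j in range(n)]
        for i in range(n)
    ]
    return n - rank(shifted)


def edges_of(matrix):
    """Graph described by the nonzero off-diagonal pattern (vertices numbered from 0)."""
    n = len(matrix)
    return {(i, j) for i in range(n) for j in range(i + 1, n) if matrix[i][j] != 0}


# Example matrix (an assumption for illustration): symmetric, nonzero off-diagonal
# entries exactly on the edges of the 4-cycle 0-1-2-3-0, diagonal chosen freely.
M = [
    [1, 2, 0, -2],
    [2, -1, 2, 0],
    [0, 2, 1, 2],
    [-2, 0, 2, -1],
]

partition = sorted([multiplicity(M, 3), multiplicity(M, -3)], reverse=True)
print(edges_of(M), partition)
```

Here n = 4 and the partition is [2, 2], which is the [n−2, 2] case mentioned in the abstract.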

24 Jan 2021

For quantum communications, the use of Earth-orbiting satellites to extend distances has gained significant attention in recent years, exemplified in particular by the launch of the Micius satellite in 2016. The performance of applied protocols such as quantum key distribution (QKD) depends significantly upon the transmission efficiency through the turbulent atmosphere, which is especially challenging for ground-to-satellite uplink scenarios. Adaptive optics (AO) techniques have been used in astronomical, communication, and other applications to reduce the detrimental effects of turbulence for many years, but their applicability to quantum protocols, and their requirements specifically in the uplink scenario, are not well established. Here, we model the effect of the atmosphere on link efficiency between an Earth station and a satellite using an optical uplink, and how AO can help recover from loss due to turbulence. Examining both low-Earth-orbit and geostationary uplink scenarios, we find that a modest link transmissivity improvement of about 3 dB can be obtained in the case of a co-aligned downward beacon, while the link can be dramatically improved, up to 7 dB, using an offset beacon, such as a laser guide star. AO coupled with a laser guide star would thus deliver a significant increase in the secret key generation rate of the QKD ground-to-space uplink system, especially as reductions of channel loss have favourably nonlinear key-rate response within this high-loss regime.
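For readers less used to decibels, the quoted improvements can be converted to linear transmissivity factors with the standard relation factor = 10^(dB/10). The short sketch below does only that conversion; the function name `db_to_linear` is a hypothetical helper, and nothing here models the key-rate response discussed in the abstract.

```python
def db_to_linear(gain_db: float) -> float:
    """Convert a power gain in decibels to a linear multiplicative factor."""
    return 10 ** (gain_db / 10)


# Improvements quoted in the abstract: about 3 dB (co-aligned beacon), up to 7 dB (offset beacon).
coaligned_factor = db_to_linear(3.0)
offset_factor = db_to_linear(7.0)
print(f"3 dB is about {coaligned_factor:.2f}x, 7 dB is about {offset_factor:.2f}x")
```

So roughly a doubling of link transmissivity in the co-aligned case and about a fivefold increase with an offset beacon.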

Identification of a person from fingerprints of good quality has been used by commercial applications and law enforcement agencies for many years; however, identification of a person from latent fingerprints is very difficult and challenging. A latent fingerprint is a fingerprint left on a surface by deposits of oils and/or perspiration from the finger. It is not usually visible to the naked eye but may be detected with special techniques such as dusting with fine powder and then lifting the pattern of powder with transparent tape. We have evaluated the quality of machine learning techniques that have been implemented in automatic fingerprint identification. In this paper, we use fingerprints of low quality from database DB1 of the Fingerprint Verification Competition (FVC 2002) to conduct our experiments. Fingerprints are processed to find their core point using the Poincaré index, and enhancement is carried out using a diffusion coherence filter, whose performance is known to be good in the high-curvature regions of fingerprints. Seven statistical descriptors based on the Grey-Level Co-occurrence Matrix (GLCM), computed at four different inter-pixel distances, are then extracted as features and used to train and test the REPTree, RandomTree, J48, Decision Stump and Random Forest machine learning techniques for personal identification. Experiments are conducted on 80 instances and 28 attributes. Our experiments showed that Random Forests and J48 give good results for latent fingerprints compared to the other machine learning techniques and can help improve identification accuracy.
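To make the feature-extraction step concrete, here is a minimal pure-Python sketch of a grey-level co-occurrence matrix and a few statistics derived from it. Several things are assumptions made for illustration: the abstract does not name its seven descriptors or its four distances, so the sketch uses three common descriptors (contrast, energy, homogeneity), a horizontal offset only, three distances, and a tiny 4-level toy image rather than fingerprint data.

```python
def glcm(image, distance, levels):
    """Normalized, symmetric co-occurrence matrix for a horizontal pixel offset."""
    counts = [[0] * levels for _ in range(levels)]
    for row in image:
        for x in range(len(row) - distance):
            a, b = row[x], row[x + distance]
            counts[a][b] += 1
            counts[b][a] += 1  # count each pair in both directions (symmetric GLCM)
    total = sum(sum(r) for r in counts)
    return [[c / total for c in r] for r in counts]


def contrast(p):
    """Weights co-occurrences by the squared grey-level difference."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def energy(p):
    """Sum of squared probabilities (angular second moment)."""
    return sum(v * v for row in p for v in row)


def homogeneity(p):
    """Rewards mass near the diagonal, i.e. similar neighbouring grey levels."""
    n = len(p)
    return sum(p[i][j] / (1 + abs(i - j)) for i in range(n) for j in range(n))


# Toy 4x4 image with grey levels 0..3 (illustrative only).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]

# One feature per (distance, descriptor) pair, analogous to the paper's 4 x 7 = 28 attributes.
features = [
    descriptor(glcm(image, d, levels=4))
    for d in (1, 2, 3)
    for descriptor in (contrast, energy, homogeneity)
]
print(len(features), round(contrast(glcm(image, 1, 4)), 4))
```

Each feature vector built this way would then be one row of the table handed to a classifier such as a random forest.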

08 Jul 2024

Software Defined Networking (SDN) has brought significant advancements in network management and programmability. However, this evolution has also heightened vulnerability to Advanced Persistent Threats (APTs), sophisticated and stealthy cyberattacks that traditional detection methods often fail to counter, especially in the face of zero-day exploits. A prevalent issue is the inadequacy of existing strategies to detect novel threats while addressing data privacy concerns in collaborative learning scenarios. This paper presents P3GNN (privacy-preserving provenance graph-based graph neural network model), a novel model that synergizes Federated Learning (FL) with Graph Convolutional Networks (GCN) for effective APT detection in SDN environments. P3GNN utilizes unsupervised learning to analyze operational patterns within provenance graphs, identifying deviations indicative of security breaches. Its core feature is the integration of FL with homomorphic encryption, which fortifies data confidentiality and gradient integrity during collaborative learning. This approach addresses the critical challenge of data privacy in shared learning contexts. Key innovations of P3GNN include its ability to detect anomalies at the node level within provenance graphs, offering a detailed view of attack trajectories and enhancing security analysis. Furthermore, the model's unsupervised learning capability enables it to identify zero-day attacks by learning standard operational patterns. Empirical evaluation using the DARPA TCE3 dataset demonstrates P3GNN's exceptional performance, achieving an accuracy of 0.93 and a low false positive rate of 0.06.
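The two reported metrics follow the usual confusion-matrix definitions: accuracy = (TP + TN) / total and false positive rate = FP / (FP + TN). The sketch below only spells out those formulas; the counts are made up for illustration and are not the paper's results, and the function names are hypothetical.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all predictions that are correct."""
    return (tp + tn) / (tp + tn + fp + fn)


def false_positive_rate(fp: int, tn: int) -> float:
    """Fraction of truly benign samples that were flagged as attacks."""
    return fp / (fp + tn)


# Hypothetical counts for illustration only (not taken from the paper).
tp, tn, fp, fn = 40, 50, 4, 6
print(accuracy(tp, tn, fp, fn), round(false_positive_rate(fp, tn), 3))
```

Reporting the false positive rate alongside accuracy matters for anomaly detection, where benign events usually far outnumber attacks.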

06 Oct 2011

Transport coefficients can be obtained from 2-point correlators using the Kubo formulae. It has been shown that the full leading order result for electrical conductivity and (QCD) shear viscosity is contained in the re-summed 2-point function that is obtained from the 3-loop 3PI effective action. The theory produces all leading order contributions without the necessity for power counting, and in this sense it provides a natural framework for the calculation and suggests that one can calculate the next-to-leading contribution to transport coefficients from the 4-loop 4PI effective action. The integral equations have been derived for shear viscosity for a scalar theory with cubic and quartic interactions, with a non-vanishing field expectation value. We review these results, and explain how the calculation could be done at higher orders.

11 Dec 2021

CNRS; University of Toronto; CSIRO; University of Oxford; Stanford University; Yale University; University of Tasmania; Keele University; University of Technology Sydney; Korea Astronomy and Space Science Institute; Space Telescope Science Institute; Johns Hopkins University; The Australian National University; University of Wisconsin-Madison; Université Paris-Saclay; Macquarie University; CEA; Princeton University; University of Sydney; National Radio Astronomy Observatory; Universidad Nacional Autónoma de México; Max-Planck-Institut für Radioastronomie; The University of Western Australia; University of Calgary; SKA Observatory; Montana State University; Instituto de Astrofísica de Andalucía; Western Sydney University; ASTRON, the Netherlands Institute for Radio Astronomy; International Centre for Radio Astronomy Research (ICRAR); Brandon University; University of Guanajuato; Comprehensive Institute of Education; Sorbonne Paris Cité; Université Paris Diderot; Université Laval

We present the most sensitive and detailed view of the neutral hydrogen (HI) emission associated with the Small Magellanic Cloud (SMC), through the combination of data from the Australian Square Kilometre Array Pathfinder (ASKAP) and Parkes (Murriyang), as part of the Galactic Australian Square Kilometre Array Pathfinder (GASKAP) pilot survey. These GASKAP-HI pilot observations, for the first time, reveal HI in the SMC on similar physical scales as other important tracers of the interstellar medium, such as molecular gas and dust. The resultant image cube possesses an rms noise level of 1.1 K (1.6 mJy/beam) per 0.98 km s^-1 spectral channel with an angular resolution of 30″ (∼10 pc). We discuss the calibration scheme and the custom imaging pipeline that utilizes a joint deconvolution approach, efficiently distributed across a computing cluster, to accurately recover the emission extending across the entire ∼25 deg^2 field-of-view. We provide an overview of the data products and characterize several aspects including the noise properties as a function of angular resolution and the represented spatial scales by deriving the global transfer function over the full spectral range. A preliminary spatial power spectrum analysis on individual spectral channels reveals that the power-law nature of the density distribution extends down to scales of 10 pc. We highlight the scientific potential of these data by comparing the properties of an outflowing high velocity cloud with previous ASKAP+Parkes HI test observations.
