09 Oct 2025

We study the limitations of black-box amplification in the quantum complexity class QMA. Amplification is known to boost any inverse-polynomial gap between completeness and soundness to exponentially small error, and a recent result (Jeffery and Witteveen, 2025) shows that completeness can in fact be amplified to be doubly exponentially close to 1. We prove that this is optimal for black-box procedures: we provide a quantum oracle relative to which no QMA verification procedure using polynomial resources can achieve completeness closer to 1 than doubly exponential, or a soundness which is super-exponentially small. This is proven by using techniques from complex approximation theory, to make the oracle separation from (Aaronson, 2008), between QMA and QMA with perfect completeness, quantitative.

01 Oct 2004

We compare the elementary theories of Shannon information and Kolmogorov complexity, the extent to which they have a common purpose, and where they are fundamentally different. We discuss and relate the basic notions of both theories: Shannon entropy versus Kolmogorov complexity, the relation of both to universal coding, Shannon mutual information versus Kolmogorov (`algorithmic') mutual information, probabilistic sufficient statistic versus algorithmic sufficient statistic (related to lossy compression in the Shannon theory versus meaningful information in the Kolmogorov theory), and rate distortion theory versus Kolmogorov's structure function. Part of the material has appeared in print before, scattered through various publications, but this is the first comprehensive systematic comparison. The last mentioned relations are new.

07 Mar 2025

Quantum phase estimation combined with Hamiltonian simulation is the most

promising algorithmic framework to computing ground state energies on quantum

computers. Its main computational overhead derives from the Hamiltonian

simulation subroutine. In this paper we use randomization to speed up product

formulas, one of the standard approaches to Hamiltonian simulation. We propose

new partially randomized Hamiltonian simulation methods in which some terms are

kept deterministically and others are randomly sampled. We perform a detailed

resource estimate for single-ancilla phase estimation using partially

randomized product formulas for benchmark systems in quantum chemistry and

obtain orders-of-magnitude improvements compared to other simulations based on

product formulas. When applied to the hydrogen chain, we have numerical

evidence that our methods exhibit asymptotic scaling with the system size that

is competitive with the best known qubitization approaches.

06 Oct 2025

QuantumBoost, a new quantum algorithm, significantly improves boosting algorithm runtime, achieving an overall complexity of ˜O(W/(√ǫγ^4)) by leveraging quantum computation and a novel lazy projection strategy. This method removes explicit dependence on dataset size `m` and improves the error parameter dependence to ˜O(1/√ǫ) compared to prior classical and quantum approaches.

09 Oct 2025

A fundamental task in quantum information is to approximate a pure quantum state in terms of sparse states or, for a bipartite system, states of bounded Schmidt rank. The optimal deterministic approximation in each case is straightforward, and maximizes the fidelity: keep the largest entries or singular values. On the other hand, random mixtures of sparse states can achieve quadratically improved trace distances, and yield nontrivial bounds on other distance measures like the robustness. In this work, we give efficient algorithms for finding mixtures of sparse states that optimally approximate a given pure state in either trace distance or robustness. These algorithms also yield descriptions of efficiently samplable ensembles of sparse, or less-entangled, states that correspond to these optimal mixed approximations. This can be used for the truncation step of algorithms for matrix product states, improving their accuracy while using no extra memory, and we demonstrate this improvement numerically. Our proofs use basic facts about convex optimization and zero-sum games, as well as rigorous guarantees for computing maximum-entropy distributions.

14 Apr 2025

This paper introduces the foliage partition, an easy-to-compute LC-invariant for graph states, of computational complexity O(n3) in the number of qubits. Inspired by the foliage of a graph, our invariant has a natural graphical representation in terms of leaves, axils, and twins. It captures both, the connection structure of a graph and the 2-body marginal properties of the associated graph state. We relate the foliage partition to the size of LC-orbits and use it to bound the number of LC-automorphisms of graphs. We also show the invariance of the foliage partition when generalized to weighted graphs and qudit graph states.

15 Jun 2001

We extend algorithmic information theory to quantum mechanics, taking a universal semicomputable density matrix (``universal probability'') as a starting point, and define complexity (an operator) as its negative logarithm.

A number of properties of Kolmogorov complexity extend naturally to the new domain. Approximately, a quantum state is simple if it is within a small distance from a low-dimensional subspace of low Kolmogorov complexity. The von Neumann entropy of a computable density matrix is within an additive constant from the average complexity. Some of the theory of randomness translates to the new domain.

We explore the relations of the new quantity to the quantum Kolmogorov complexity defined by Vitanyi (we show that the latter is sometimes as large as 2n - 2log n and the qubit complexity defined by Berthiaume, Dam and Laplante. The ``cloning'' properties of our complexity measure are similar to those of qubit complexity.

We discuss a construction of quantum many-body scars in the context of holography. We consider two-dimensional conformal field theories and use their dynamical symmetries, naturally realized through the Virasoro algebra, to construct scarred states. By studying their Loschmidt amplitude, we evaluate the states' periodic properties. A geometrical interpretation allows us to compute the expectation value of the stress tensor and entanglement entropy of these scarred states. We show that their holographic dual is related by a diffeomorphism to empty AdS, even for energies above the black hole threshold. We also demonstrate that expectation values in the scarred states are generally non-thermal and that their entanglement entropy grows with the energy as log(E) in contrast to E for the typical (bulk) states. Furthermore, we identify fixed points on the CFT plane associated with divergent or vanishing entanglement entropy in the limit where the scarred states have infinite energy.

05 Aug 2024

Connecting multiple smaller qubit modules by generating high-fidelity

entangled states is a promising path for scaling quantum computing hardware.

The performance of such a modular quantum computer is highly dependent on the

quality and rate of entanglement generation. However, the optimal architectures

and entanglement generation schemes are not yet established. Focusing on

modular quantum computers with solid-state quantum hardware, we investigate a

distributed surface code's error-correcting threshold and logical failure rate.

We consider both emission-based and scattering-based entanglement generation

schemes for the measurement of non-local stabilizers. Through quantum optical

modeling, we link the performance of the quantum error correction code to the

parameters of the underlying physical hardware and identify the necessary

parameter regime for fault-tolerant modular quantum computation. In addition,

we compare modular architectures with one or two data qubits per module. We

find that the performance of the code depends significantly on the choice of

entanglement generation scheme, while the two modular architectures have

similar error-correcting thresholds. For some schemes, thresholds nearing the

thresholds of non-distributed implementations (∼0.4%) appear feasible

with future parameters.

04 Dec 2024

In order to achieve fault-tolerant quantum computation, we need to repeat the

following sequence of four steps: First, perform 1 or 2 qubit quantum gates (in

parallel if possible). Second, do a syndrome measurement on a subset of the

qubits. Third, perform a fast classical computation to establish which errors

have occurred (if any). Fourth, depending on the errors, we apply a correction

step. Then the procedure repeats with the next sequence of gates. In order for

these four steps to succeed, we need the error rate of the gates to be below a

certain threshold. Unfortunately, the error rates of current quantum hardware

are still too high. On the other hand, current quantum hardware platforms are

designed with these four steps in mind. In this work we make use of this

four-step scheme not to carry out fault-tolerant computations, but to enhance

short, constant-depth, quantum circuits that perform 1 qubit gates and

nearest-neighbor 2 qubit gates. To explore how this can be useful, we study a

computational model which we call Local Alternating Quantum Classical

Computations (LAQCC). In this model, qubits are placed in a grid allowing

nearest neighbor interactions; the quantum circuits are of constant depth with

intermediate measurements; a classical controller can perform log-depth

computations on these intermediate measurement outcomes to control future

quantum operations. This model fits naturally between quantum algorithms in the

NISQ era and full fledged fault-tolerant quantum computation. We show that

LAQCC circuits can create long-ranged interactions, which constant-depth

quantum circuits cannot achieve, and use it to construct a range of useful

multi-qubit gates. With these gates, we create three new state preparation

protocols for a uniform superposition over an arbitrary number of states,

W-states, Dicke states and may-body scar states.

This paper surveys quantum learning theory: the theoretical aspects of machine learning using quantum computers. We describe the main results known for three models of learning: exact learning from membership queries, and Probably Approximately Correct (PAC) and agnostic learning from classical or quantum examples.

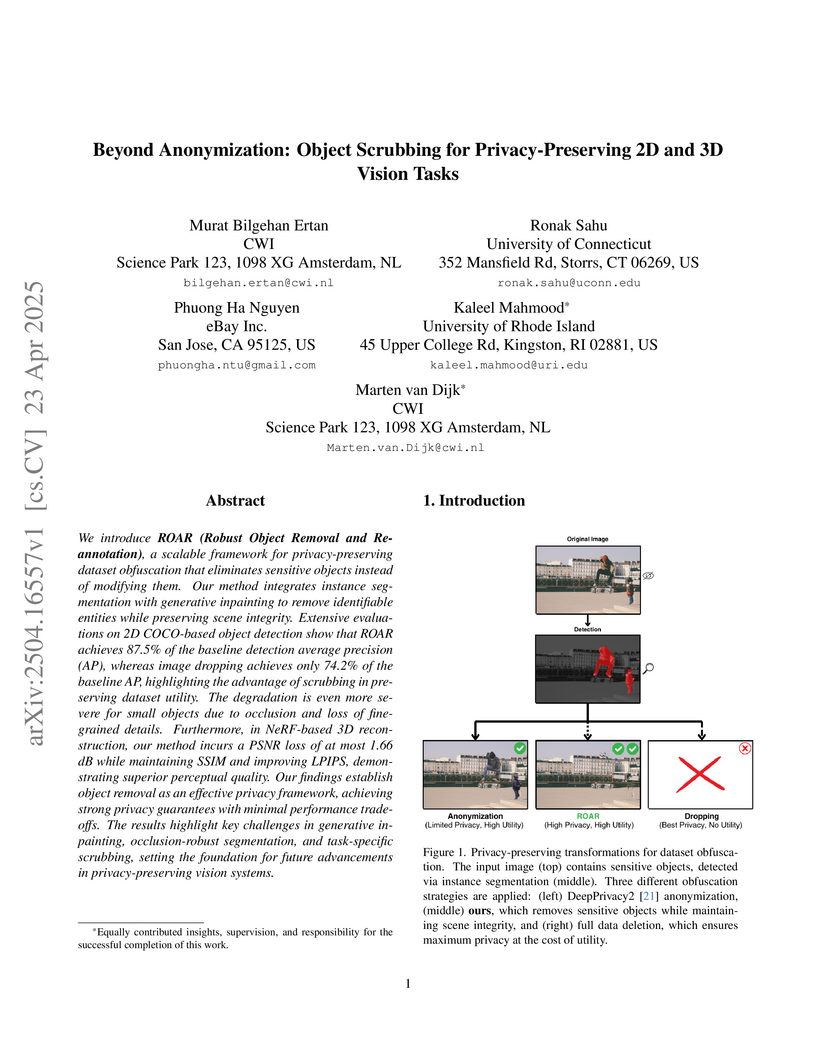

We introduce ROAR (Robust Object Removal and Re-annotation), a scalable

framework for privacy-preserving dataset obfuscation that eliminates sensitive

objects instead of modifying them. Our method integrates instance segmentation

with generative inpainting to remove identifiable entities while preserving

scene integrity. Extensive evaluations on 2D COCO-based object detection show

that ROAR achieves 87.5% of the baseline detection average precision (AP),

whereas image dropping achieves only 74.2% of the baseline AP, highlighting the

advantage of scrubbing in preserving dataset utility. The degradation is even

more severe for small objects due to occlusion and loss of fine-grained

details. Furthermore, in NeRF-based 3D reconstruction, our method incurs a PSNR

loss of at most 1.66 dB while maintaining SSIM and improving LPIPS,

demonstrating superior perceptual quality. Our findings establish object

removal as an effective privacy framework, achieving strong privacy guarantees

with minimal performance trade-offs. The results highlight key challenges in

generative inpainting, occlusion-robust segmentation, and task-specific

scrubbing, setting the foundation for future advancements in privacy-preserving

vision systems.

Inspired by more detailed modeling of biological neurons, Spiking neural

networks (SNNs) have been investigated both as more biologically plausible and

potentially more powerful models of neural computation, and also with the aim

of extracting biological neurons' energy efficiency; the performance of such

networks however has remained lacking compared to classical artificial neural

networks (ANNs). Here, we demonstrate how a novel surrogate gradient combined

with recurrent networks of tunable and adaptive spiking neurons yields

state-of-the-art for SNNs on challenging benchmarks in the time-domain, like

speech and gesture recognition. This also exceeds the performance of standard

classical recurrent neural networks (RNNs) and approaches that of the best

modern ANNs. As these SNNs exhibit sparse spiking, we show that they

theoretically are one to three orders of magnitude more computationally

efficient compared to RNNs with comparable performance. Together, this

positions SNNs as an attractive solution for AI hardware implementations.

In 2020, a landmark result by Ji, Natarajan, Vidick, Wright, and Yuen showed that MIP*, the class of languages that can be decided by a classical verifier interacting with multiple computationally unbounded provers sharing entanglement in the tensor product model, is equal to RE. We show that the class MIPco, a complexity class defined similarly to MIP* except with provers sharing the commuting operator model of entanglement, is equal to the class coRE. This shows that giving the provers two different models of entanglement leads to two completely different computational powers for interactive proof systems. Our proof builds upon the compression theorem used in the proof of MIP*=RE, and we use the tracially embeddable strategies framework to show that the same compression procedure in MIP* =RE also has the same desired property in the commuting operator setting. We also give a more streamlined proof of the compression theorem for non-local games by incorporating the synchronous framework used by Mousavi et al. [STOC 2022], as well as the improved Pauli basis test introduced by de la Salle [arXiv:2204.07084].

We introduce a new equivalence condition for RE/coRE-complete problems, which we call the weakly compressible condition. We show that both MIP* and MIPco satisfy this condition through the compression theorem, and thereby establish that the uncomputability for MIP* and MIPco can be proved under a unified framework (despite these two complexity classes being different). Notably, this approach also gives an alternative proof of the MIP*=RE theorem, which does not rely on the preservation of the entanglement bound. In addition to non-local games, this new condition could also potentially be applicable to other decision problems.

01 Oct 2025

We consider the problem of deciding whether an n-qubit unitary (or n-bit Boolean function) is ε1-close to some k-junta or ε2-far from every k-junta, where k-junta unitaries act non-trivially on at most k qubits and as the identity on the rest, and k-junta Boolean functions depend on at most k variables. For constant numbers ε1,ε2 such that 0 < \varepsilon_1 < \varepsilon_2 < 1, we show the following. (1) A non-adaptive O(klogk)-query tolerant (ε1,ε2)-tester for k-junta unitaries when 2\sqrt{2}\varepsilon_1 < \varepsilon_2. (2) A non-adaptive tolerant (ε1,ε2)-tester for Boolean functions with O(klogk) quantum queries when 4\varepsilon_1 < \varepsilon_2. (3) A 2O(k)-query tolerant (ε1,ε2)-tester for k-junta unitaries for any ε1,ε2. The first algorithm provides an exponential improvement over the best-known quantum algorithms. The second algorithm shows an exponential quantum advantage over any non-adaptive classical algorithm. The third tester gives the first tolerant junta unitary testing result for an arbitrary gap.

Besides, we adapt the first two quantum algorithms to be implemented using only single-qubit operations, thereby enhancing experimental feasibility, with a slightly more stringent requirement for the parameter gap.

While Kolmogorov complexity is the accepted absolute measure of information

content in an individual finite object, a similarly absolute notion is needed

for the information distance between two individual objects, for example, two

pictures. We give several natural definitions of a universal information

metric, based on length of shortest programs for either ordinary computations

or reversible (dissipationless) computations. It turns out that these

definitions are equivalent up to an additive logarithmic term. We show that the

information distance is a universal cognitive similarity distance. We

investigate the maximal correlation of the shortest programs involved, the

maximal uncorrelation of programs (a generalization of the Slepian-Wolf theorem

of classical information theory), and the density properties of the discrete

metric spaces induced by the information distances. A related distance measures

the amount of nonreversibility of a computation. Using the physical theory of

reversible computation, we give an appropriate (universal, anti-symmetric, and

transitive) measure of the thermodynamic work required to transform one object

in another object by the most efficient process. Information distance between

individual objects is needed in pattern recognition where one wants to express

effective notions of "pattern similarity" or "cognitive similarity" between

individual objects and in thermodynamics of computation where one wants to

analyse the energy dissipation of a computation from a particular input to a

particular output.

We study the problem of sequential learning of the Pareto front in multi-objective multi-armed bandits. An agent is faced with K possible arms to pull. At each turn she picks one, and receives a vector-valued reward. When she thinks she has enough information to identify the Pareto front of the different arm means, she stops the game and gives an answer. We are interested in designing algorithms such that the answer given is correct with probability at least 1-δ. Our main contribution is an efficient implementation of an algorithm achieving the optimal sample complexity when the risk δ is small. With K arms in d dimensions p of which are in the Pareto set, the algorithm runs in time O(Kp^d) per round.

Recent advances in attention-based networks have shown that Vision Transformers can achieve state-of-the-art or near state-of-the-art results on many image classification tasks. This puts transformers in the unique position of being a promising alternative to traditional convolutional neural networks (CNNs). While CNNs have been carefully studied with respect to adversarial attacks, the same cannot be said of Vision Transformers. In this paper, we study the robustness of Vision Transformers to adversarial examples. Our analyses of transformer security is divided into three parts. First, we test the transformer under standard white-box and black-box attacks. Second, we study the transferability of adversarial examples between CNNs and transformers. We show that adversarial examples do not readily transfer between CNNs and transformers. Based on this finding, we analyze the security of a simple ensemble defense of CNNs and transformers. By creating a new attack, the self-attention blended gradient attack, we show that such an ensemble is not secure under a white-box adversary. However, under a black-box adversary, we show that an ensemble can achieve unprecedented robustness without sacrificing clean accuracy. Our analysis for this work is done using six types of white-box attacks and two types of black-box attacks. Our study encompasses multiple Vision Transformers, Big Transfer Models and CNN architectures trained on CIFAR-10, CIFAR-100 and ImageNet.

29 Mar 2016

We study critical percolation on a regular planar lattice. Let EG(n) be the expected number of open clusters intersecting or hitting the line segment [0,n]. (For the subscript G we either take H, when we restrict to the upper halfplane, or C, when we consider the full lattice). Cardy (2001) (see also Yu, Saleur and Haas (2008)) derived heuristically that EH(n)=An+4π3log(n)+o(log(n)), where A is some constant. Recently Kovács, Iglói and Cardy (2012) derived heuristically (as a special case of a more general formula) that a similar result holds for EC(n) with the constant 4π3 replaced by 32π53. In this paper we give, for site percolation on the triangular lattice, a rigorous proof for the formula of EH(n) above, and a rigorous upper bound for the prefactor of the logarithm in the formula of EC(n).

27 Oct 2025

Until very recently, it was generally believed that the (approximate) 2-design property is strictly stronger than anti-concentration of random quantum circuits, mainly because it was shown that the latter anti-concentrate in logarithmic depth, while the former generally need linear depth circuits. This belief was disproven by recent results which show that so-called relative-error approximate unitary designs can in fact be generated in logarithmic depth, implying anti-concentration. Their result does however not apply to ordinary local random circuits, a gap which we close in this paper, at least for 2-designs. More precisely, we show that anti-concentration of local random quantum circuits already implies that they form relative-error approximate state 2-designs, making them equivalent properties for these ensembles. Our result holds more generally for any random circuit which is invariant under local (single-qubit) unitaries, independent of the architecture.

There are no more papers matching your filters at the moment.