Capital University of Economics and Business

Transformers have become the de facto architecture for a wide range of

machine learning tasks, particularly in large language models (LLMs). Despite

their remarkable performance, challenges remain in training deep transformer

networks, especially regarding the position of layer normalization. While

Pre-Norm structures facilitate more stable training owing to their stronger

identity path, they often lead to suboptimal performance compared to Post-Norm.

In this paper, we propose HybridNorm, a simple yet effective hybrid

normalization strategy that integrates the advantages of both Pre-Norm and

Post-Norm. Specifically, HybridNorm employs QKV normalization within the

attention mechanism and Post-Norm in the feed-forward network (FFN) of each

transformer block. We provide both theoretical insights and empirical evidence

demonstrating that HybridNorm improves gradient flow and model robustness.

Extensive experiments on large-scale transformer models, including both dense

and sparse variants, show that HybridNorm consistently outperforms both

Pre-Norm and Post-Norm approaches across multiple benchmarks. These findings

highlight the potential of HybridNorm as a more stable and effective technique

for improving the training and performance of deep transformer models. Code is

available at this https URL

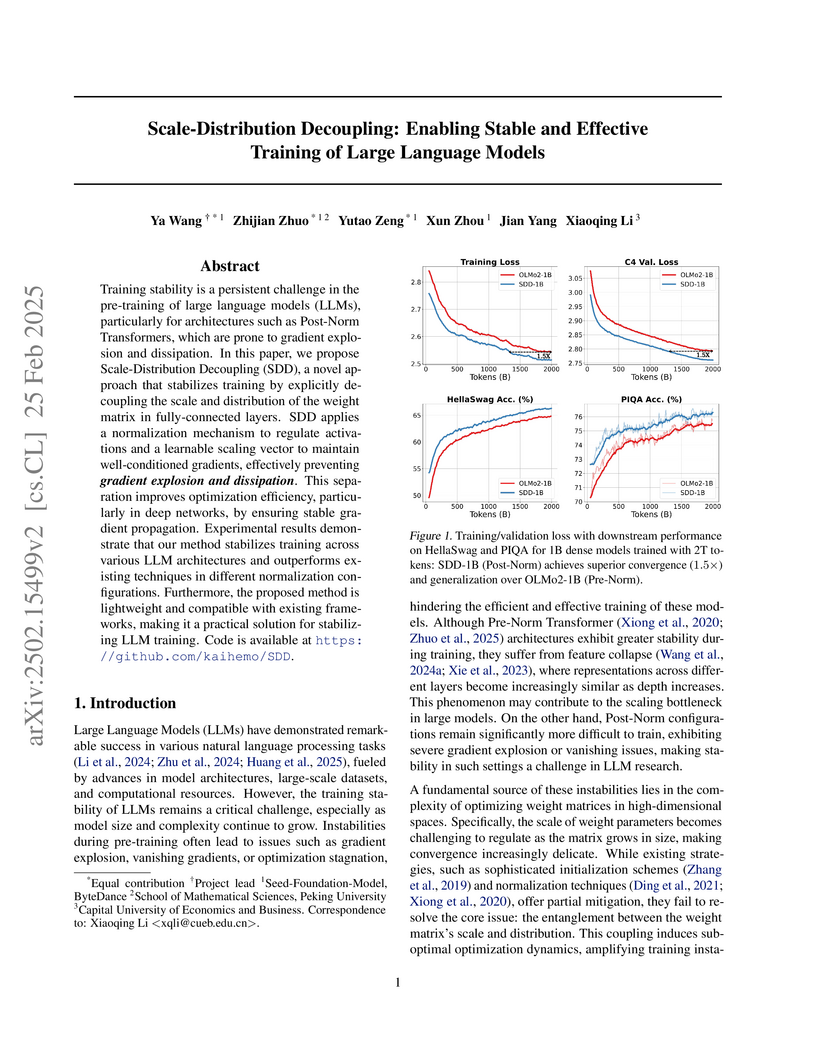

Training stability is a persistent challenge in the pre-training of large

language models (LLMs), particularly for architectures such as Post-Norm

Transformers, which are prone to gradient explosion and dissipation. In this

paper, we propose Scale-Distribution Decoupling (SDD), a novel approach that

stabilizes training by explicitly decoupling the scale and distribution of the

weight matrix in fully-connected layers. SDD applies a normalization mechanism

to regulate activations and a learnable scaling vector to maintain

well-conditioned gradients, effectively preventing $\textbf{gradient explosion

and dissipation}$. This separation improves optimization efficiency,

particularly in deep networks, by ensuring stable gradient propagation.

Experimental results demonstrate that our method stabilizes training across

various LLM architectures and outperforms existing techniques in different

normalization configurations. Furthermore, the proposed method is lightweight

and compatible with existing frameworks, making it a practical solution for

stabilizing LLM training. Code is available at this https URL

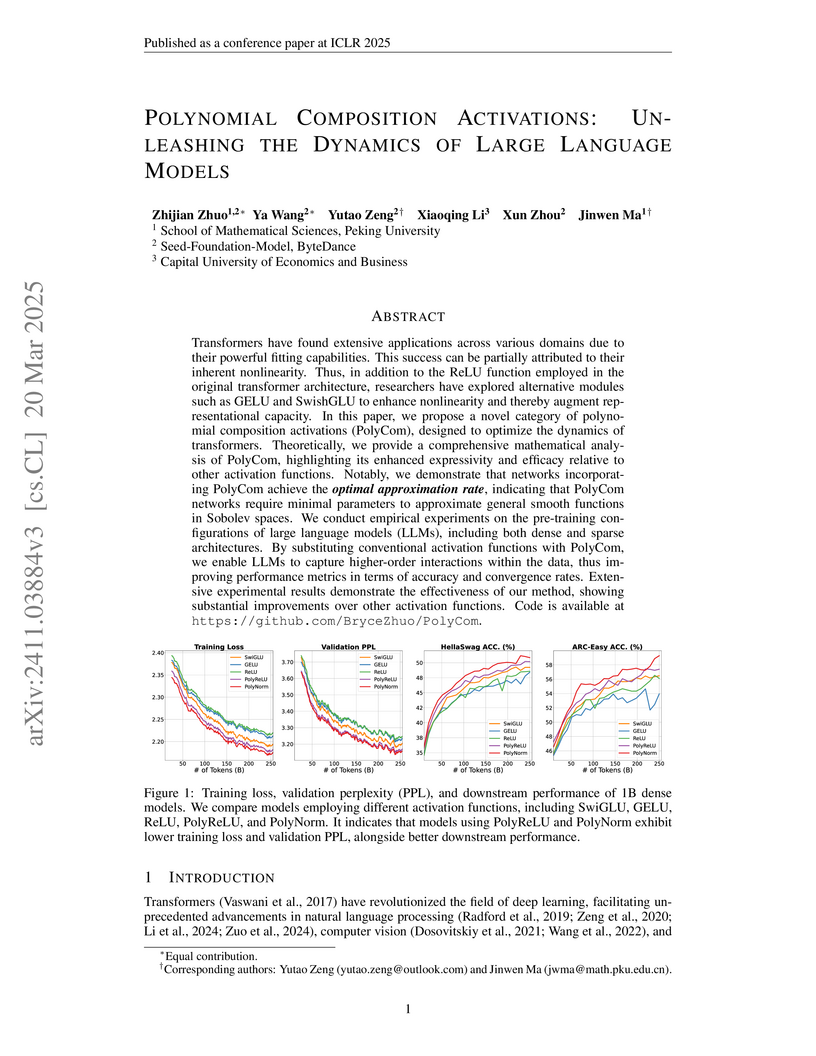

Transformers have found extensive applications across various domains due to

the powerful fitting capabilities. This success can be partially attributed to

their inherent nonlinearity. Thus, in addition to the ReLU function employed in

the original transformer architecture, researchers have explored alternative

modules such as GeLU and SwishGLU to enhance nonlinearity and thereby augment

representational capacity. In this paper, we propose a novel category of

polynomial composition activations (PolyCom), designed to optimize the dynamics

of transformers. Theoretically, we provide a comprehensive mathematical

analysis of PolyCom, highlighting its enhanced expressivity and efficacy

relative to other activation functions. Notably, we demonstrate that networks

incorporating PolyCom achieve the optimal approximation rate,

indicating that PolyCom networks require minimal parameters to approximate

general smooth functions in Sobolev spaces. We conduct empirical experiments on

the pre-training configurations of large language models (LLMs), including both

dense and sparse architectures. By substituting conventional activation

functions with PolyCom, we enable LLMs to capture higher-order interactions

within the data, thus improving performance metrics in terms of accuracy and

convergence rates. Extensive experimental results demonstrate the effectiveness

of our method, showing substantial improvements over other activation

functions. Code is available at this https URL

23 Jan 2022

This study focuses on the impact of digital finance on households. While

digital finance has brought financial inclusion, it has also increased the risk

of households falling into a debt trap. We provide evidence that supports this

notion and explain the channel through which digital finance increases the

likelihood of financial distress. Our results show that the widespread use of

digital finance increases credit market participation. The broadened access to

credit markets increases household consumption by changing the marginal

propensity to consume. However, the easier access to credit markets also

increases the risk of households falling into a debt trap.

Given the development and abundance of social media, studying the stance of social media users is a challenging and pressing issue. Social media users express their stance by posting tweets and retweeting. Therefore, the homogeneous relationship between users and the heterogeneous relationship between users and tweets are relevant for the stance detection task. Recently, graph neural networks (GNNs) have developed rapidly and have been applied to social media research. In this paper, we crawl a large-scale dataset of the 2020 US presidential election and automatically label all users by manually tagged hashtags. Subsequently, we propose a bipartite graph neural network model, DoubleH, which aims to better utilize homogeneous and heterogeneous information in user stance detection tasks. Specifically, we first construct a bipartite graph based on posting and retweeting relations for two kinds of nodes, including users and tweets. We then iteratively update the node's representation by extracting and separately processing heterogeneous and homogeneous information in the node's neighbors. Finally, the representations of user nodes are used for user stance classification. Experimental results show that DoubleH outperforms the state-of-the-art methods on popular benchmarks. Further analysis illustrates the model's utilization of information and demonstrates stability and efficiency at different numbers of layers.

For a multi-attribute decision making (MADM) problem, the information of

alternatives under different attributes is given in the form of intuitionistic

fuzzy number(IFN). Intuitionistic fuzzy set (IFS) plays an important role in

dealing with un-certain and incomplete information. The similarity measure of

intuitionistic fuzzy sets (IFSs) has always been a research hotspot. A new

similarity measure of IFSs based on the projection technology and cosine

similarity measure, which con-siders the direction and length of IFSs at the

same time, is first proposed in this paper. The objective of the presented

pa-per is to develop a MADM method and medical diagnosis method under IFS using

the projection technology and cosine similarity measure. Some examples are used

to illustrate the comparison results of the proposed algorithm and some

exist-ing methods. The comparison result shows that the proposed algorithm is

effective and can identify the optimal scheme accurately. In medical diagnosis

area, it can be used to quickly diagnose disease. The proposed method enriches

the exist-ing similarity measure methods and it can be applied to not only

IFSs, but also other interval-valued intuitionistic fuzzy sets(IVIFSs) as well.

17 Mar 2021

We propose a new approach to solving dynamic decision problems with unbounded

rewards based on the transformations used in Q-learning. In our case, the

objective of the transform is to convert an unbounded dynamic program into a

bounded one. The approach is general enough to handle problems for which

existing methods struggle, and yet simple relative to other techniques and

accessible for applied work. We show by example that many common decision

problems satisfy our conditions.

Windowed attention mechanisms were introduced to mitigate the issue of

excessive computation inherent in global attention mechanisms. In this paper,

we present FwNet-ECA, a novel method that utilizes Fourier transforms paired

with learnable weight matrices to enhance the spectral features of images. This

method establishes a global receptive field through Filter Enhancement and

avoids the use of moving window attention. Additionally, we incorporate the

Efficient Channel Attention (ECA) module to improve communication between

different channels. Instead of relying on physically shifted windows, our

approach leverages frequency domain enhancement to implicitly bridge

information across spatial regions. We validate our model on the iCartoonFace

dataset and conduct downstream tasks on ImageNet, demonstrating that our model

achieves lower parameter counts and computational overheads compared to shifted

window approaches, while maintaining competitive accuracy. Furthermore, our

visualization operations clearly demonstrated that the Filter Enhancement

technique achieves greater effectiveness in the model's shallow layers, where

feature maps are relatively larger. This work offers a more efficient and

effective alternative for leveraging attention mechanisms in visual processing

tasks, alleviating the challenges associated with windowed attention models.

Code is available at this https URL

17 Mar 2021

We propose a new approach to solving dynamic decision problems with unbounded rewards based on the transformations used in Q-learning. In our case, the objective of the transform is to convert an unbounded dynamic program into a bounded one. The approach is general enough to handle problems for which existing methods struggle, and yet simple relative to other techniques and accessible for applied work. We show by example that many common decision problems satisfy our conditions.

20 Aug 2022

The recent popularity of edge devices and Artificial Intelligent of Things

(AIoT) has driven a new wave of contextual recommendations, such as location

based Point of Interest (PoI) recommendations and computing resource-aware

mobile app recommendations. In many such recommendation scenarios, contexts are

drifting over time. For example, in a mobile game recommendation, contextual

features like locations, battery, and storage levels of mobile devices are

frequently drifting over time. However, most existing graph-based collaborative

filtering methods are designed under the assumption of static features.

Therefore, they would require frequent retraining and/or yield graphical models

burgeoning in sizes, impeding their suitability for context-drifting

recommendations.

In this work, we propose a specifically tailor-made Hybrid Static and

Adaptive Graph Embedding (HySAGE) network for context-drifting recommendations.

Our key idea is to disentangle the relatively static user-item interaction and

rapidly drifting contextual features. Specifically, our proposed HySAGE network

learns a relatively static graph embedding from user-item interaction and an

adaptive embedding from drifting contextual features. These embeddings are

incorporated into an interest network to generate the user interest in some

certain context. We adopt an interactive attention module to learn the

interactions among static graph embeddings, adaptive contextual embeddings, and

user interest, helping to achieve a better final representation. Extensive

experiments on real-world datasets demonstrate that HySAGE significantly

improves the performance of the existing state-of-the-art recommendation

algorithms.

11 May 2023

Federated recommendation systems employ federated learning techniques to safeguard user privacy by transmitting model parameters instead of raw user data between user devices and the central server. Nevertheless, the current federated recommender system faces challenges such as heterogeneity and personalization, model performance degradation, and communication bottleneck. Previous studies have attempted to address these issues, but none have been able to solve them simultaneously.

In this paper, we propose a novel framework, named PerFedRec++, to enhance the personalized federated recommendation with self-supervised pre-training. Specifically, we utilize the privacy-preserving mechanism of federated recommender systems to generate two augmented graph views, which are used as contrastive tasks in self-supervised graph learning to pre-train the model. Pre-training enhances the performance of federated models by improving the uniformity of representation learning. Also, by providing a better initial state for federated training, pre-training makes the overall training converge faster, thus alleviating the heavy communication burden. We then construct a collaborative graph to learn the client representation through a federated graph neural network. Based on these learned representations, we cluster users into different user groups and learn personalized models for each cluster. Each user learns a personalized model by combining the global federated model, the cluster-level federated model, and its own fine-tuned local model. Experiments on three real-world datasets show that our proposed method achieves superior performance over existing methods.

21 Jun 2023

This paper investigates the significance of consumer opinions in relation to value in China's A-share market. By analyzing a large dataset comprising over 18 million product reviews by customers on this http URL, we demonstrate that sentiments expressed in consumer reviews can influence stock returns, indicating that consumer opinions contain valuable information that can impact the stock market. Our findings show that Customer Negative Sentiment Tendency (CNST) and One-Star Tendency (OST) have a negative effect on expected stock returns, even after controlling for firm characteristics such as market risk, illiquidity, idiosyncratic volatility, and asset growth. Further analysis reveals that the predictive power of CNST is stronger in firms with high sentiment conditions, growth companies, and firms with lower accounting transparency. We also find that CNST negatively predicts revenue surprises, earnings surprises, and cash flow shocks. These results suggest that online satisfaction derived from big data analysis of customer reviews contains novel information about firms' fundamentals.

Stochastic gradient descent algorithm has been successfully applied on

support vector machines (called PEGASOS) for many classification problems. In

this paper, stochastic gradient descent algorithm is investigated to twin

support vector machines for classification. Compared with PEGASOS, the proposed

stochastic gradient twin support vector machines (SGTSVM) is insensitive on

stochastic sampling for stochastic gradient descent algorithm. In theory, we

prove the convergence of SGTSVM instead of almost sure convergence of PEGASOS.

For uniformly sampling, the approximation between SGTSVM and twin support

vector machines is also given, while PEGASOS only has an opportunity to obtain

an approximation of support vector machines. In addition, the nonlinear SGTSVM

is derived directly from its linear case. Experimental results on both

artificial datasets and large scale problems show the stable performance of

SGTSVM with a fast learning speed.

The rapid ascent in carbon dioxide emissions is a major cause of global

warming and climate change, which pose a huge threat to human survival and

impose far-reaching influence on the global ecosystem. Therefore, it is very

necessary to effectively control carbon dioxide emissions by accurately

predicting and analyzing the change trend timely, so as to provide a reference

for carbon dioxide emissions mitigation measures. This paper is aiming to

select a suitable model to predict the near-real-time daily emissions based on

univariate daily time-series data from January 1st, 2020 to September 30st,

2022 of all sectors (Power, Industry, Ground Transport, Residential, Domestic

Aviation, International Aviation) in China. We proposed six prediction models,

which including three statistical models: Grey prediction (GM(1,1)),

autoregressive integrated moving average (ARIMA) and seasonal autoregressive

integrated moving average with exogenous factors (SARIMAX); three machine

learning models: artificial neural network (ANN), random forest (RF) and long

short term memory (LSTM). To evaluate the performance of these models, five

criteria: Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean

Absolute Error (MAE), Mean Absolute Percentage Error (MAPE) and Coefficient of

Determination () are imported and discussed in detail. In the results, three

machine learning models perform better than that three statistical models, in

which LSTM model performs the best on five criteria values for daily emissions

prediction with the 3.5179e-04 MSE value, 0.0187 RMSE value, 0.0140 MAE value,

14.8291% MAPE value and 0.9844 value.

23 May 2024

We propose a novel and robust online function-on-scalar regression technique via geometric median to learn associations between functional responses and scalar covariates based on massive or streaming datasets. The online estimation procedure, developed using the average stochastic gradient descent algorithm, offers an efficient and cost-effective method for analyzing sequentially augmented datasets, eliminating the need to store large volumes of data in memory. We establish the almost sure consistency, Lp convergence, and asymptotic normality of the online estimator. To enable efficient and fast inference of the parameters of interest, including the derivation of confidence intervals, we also develop an innovative two-step online bootstrap procedure to approximate the limiting error distribution of the robust online estimator. Numerical studies under a variety of scenarios demonstrate the effectiveness and efficiency of the proposed online learning method. A real application analyzing PM2.5 air-quality data is also included to exemplify the proposed online approach.

13 Dec 2024

Digital transformation significantly impacts firm investment, financing, and value enhancement. A systematic investigation from the corporate finance perspective has not yet been formed. This paper combines bibliometric and content analysis methods to systematically review the evolutionary trend, status quo, hotspots and overall structure of research in digital transformation from 2011 to 2024. The study reveals an emerging and rapidly growing focus on digital transformation research, particularly in developed countries. We categorize the literature into three areas according to bibliometric clustering: the measurements (qualitative and quantitative), impact factors (internal and external), and the economic consequences (investment, financing, and firm value). These areas are divided into ten sub-branches, with a detailed literature review. We also review the existing theories related to digital transformation, identify the current gaps in these papers, and provide directions for future research on each sub-branches.

19 Feb 2022

Support vector machine (SVM) is a well-known statistical technique for

classification problems in machine learning and other fields. An important

question for SVM is the selection of covariates (or features) for the model.

Many studies have considered model selection methods. As is well-known,

selecting one winning model over others can entail considerable instability in

predictive performance due to model selection uncertainties. This paper

advocates model averaging as an alternative approach, where estimates obtained

from different models are combined in a weighted average. We propose a model

weighting scheme and provide the theoretical underpinning for the proposed

method. In particular, we prove that our proposed method yields a model average

estimator that achieves the smallest hinge risk among all feasible combinations

asymptotically. To remedy the computational burden due to a large number of

feasible models, we propose a screening step to eliminate the uninformative

features before combining the models. Results from real data applications and a

simulation study show that the proposed method generally yields more accurate

estimates than existing methods.

15 Sep 2025

The consumption function maps current wealth and the exogenous state to current consumption. We prove the existence and uniqueness of a consumption function when the agent has a preference for wealth. When the period utility functions are restricted to power functions, we prove that the consumption function is asymptotically linear as wealth tends to infinity and provide a complete characterization of the asymptotic slopes. When the risk aversion with respect to wealth is less than that for consumption, the asymptotic slope is zero regardless of other model parameters, implying wealthy households save a large fraction of their income, consistent with empirical evidence.

Social media are decentralized, interactive, and transformative, empowering users to produce and spread information to influence others. This has changed the dynamics of political communication that were previously dominated by traditional corporate news media. Having hundreds of millions of tweets collected over the 2016 and 2020 U.S. presidential elections gave us a unique opportunity to measure the change in polarization and the diffusion of political information. We analyze the diffusion of political information among Twitter users and investigate the change of polarization between these elections and how this change affected the composition and polarization of influencers and their retweeters. We identify "influencers" by their ability to spread information and classify them into those affiliated with a media organization, a political organization, or unaffiliated. Most of the top influencers were affiliated with media organizations during both elections. We found a clear increase from 2016 to 2020 in polarization among influencers and among those whom they influence. Moreover, 75% of the top influencers in 2020 were not present in 2016, demonstrating that such status is difficult to retain. Between 2016 and 2020, 10% of influencers affiliated with media were replaced by center- or right-orientated influencers affiliated with political organizations and unaffiliated influencers.

Increasingly, cyber aggression becomes the prevalent phenomenon that erodes the social media environment. However, due to subjective and expense, the traditional self-reporting questionnaire is hard to be employed in the current cyber area. In this study, we put forward the prediction model for cyber aggression based on the cutting-edge deep learning algorithm. Building on 320 active Weibo users' social media activities, we construct basic, dynamic, and content features. We elaborate cyber aggression on three dimensions: social exclusion, malicious humour, and guilt induction. We then build the prediction model combined with pretrained BERT model. The empirical evidence shows outperformance and supports a stronger prediction with the BERT model than traditional machine learning models without extra pretrained information. This study offers a solid theoretical model for cyber aggression prediction. Furthermore, this study contributes to cyber aggression behaviors' probing and social media platforms' organization.

There are no more papers matching your filters at the moment.