Chubu University

Foundation models refer to deep learning models pretrained on large unlabeled datasets through self-supervised algorithms. In the Earth science and remote sensing communities, there is growing interest in transforming the use of Earth observation data, including satellite and aerial imagery, through foundation models. Various foundation models have been developed for remote sensing, such as those for multispectral, high-resolution, and hyperspectral images, and have demonstrated superior performance on various downstream tasks compared to traditional supervised models. These models are evolving rapidly, with capabilities to handle multispectral, multitemporal, and multisensor data. Most studies use masked autoencoders in combination with Vision Transformers (ViTs) as the backbone for pretraining. While the models showed promising performance, ViTs face challenges, such as quadratic computational scaling with input length, which may limit performance on multiband and multitemporal data with long sequences. This research aims to address these challenges by proposing SatMamba, a new pretraining framework that combines masked autoencoders with State Space Model, offering linear computational scaling. Experiments on high-resolution imagery across various downstream tasks show promising results, paving the way for more efficient foundation models and unlocking the full potential of Earth observation data. The source code is available in this https URL.

Video-based long-term action anticipation is crucial for early risk detection in areas such as automated driving and robotics. Conventional approaches extract features from past actions using encoders and predict future events with decoders, which limits performance due to their unidirectional nature. These methods struggle to capture semantically distinct sub-actions within a scene. The proposed method, BiAnt, addresses this limitation by combining forward prediction with backward prediction using a large language model. Experimental results on Ego4D demonstrate that BiAnt improves performance in terms of edit distance compared to baseline methods.

Researchers from Keio University and Chubu University developed an extended Layer-wise Relevance Propagation (LRP) method for ResNet architectures, incorporating a novel relevance splitting strategy for residual connections and a heat quantization post-processing step. This approach generates precise visual explanations while preserving LRP's conservation property, achieving superior Insertion-Deletion (ID) scores of 0.582 on CUB and 0.545 on ImageNet datasets.

24 May 2025

The optical responses for UV to NIR and muti-directional photo current have

been found on Au (metal) on n-Si device. The unique phenomena have been

unresolved since the first sample fabricated in 2007. The self organized

sub-micron metal with various crystal faces was supposed to activate as an

optical wave guide into Si surface. This, however, is insufficient to explain

the unique features above. Thus, for more deep analysis, returning to consider

the Si-band structure, indirect/direct transitions of inter conduction bands:

X-W, X-K and {\Gamma}-L in the 1st Brillouin Zone/Van Hove singularity at L

point, synchronizing with scattering, successfully give these characteristics a

reasonable explanation. The calculation of the quantum efficiency between X-W

and X-K agreed with those sensitivity for visible region (1.1 to 2.0 eV), the

doping process well simulates it for NIR (0.6 to 1.0 eV). Doping electrons

(~10^18/cm3) are filled up the zero-gap at around X of a reciprocal lattice

point. This is why a lower limit of 0.6 eV was arisen in the sensitivity

measurement. When the carrier scattering model was applied to the inter band

(X-W, X-K and {\Gamma}-L) transitions, the reasonable interpretation was

obtained for the directional dependence of photo-currents with UV (3.4 eV) and

Visible (3.1 and 1.9 eV) excitation. Band to band scatterings assist to extend

the available optical range and increase variety of directional responses.

Utilizing this principle for some indirect transition semiconductors, it will

be able to open the new frontier in photo-conversion system, where it will be

released from those band gaps and directivity limitations.

We propose a novel framework for integrating fragmented multi-modal data in Alzheimer's disease (AD) research using large language models (LLMs) and knowledge graphs. While traditional multimodal analysis requires matched patient IDs across datasets, our approach demonstrates population-level integration of MRI, gene expression, biomarkers, EEG, and clinical indicators from independent cohorts. Statistical analysis identified significant features in each modality, which were connected as nodes in a knowledge graph. LLMs then analyzed the graph to extract potential correlations and generate hypotheses in natural language. This approach revealed several novel relationships, including a potential pathway linking metabolic risk factors to tau protein abnormalities via neuroinflammation (r>0.6, p<0.001), and unexpected correlations between frontal EEG channels and specific gene expression profiles (r=0.42-0.58, p<0.01). Cross-validation with independent datasets confirmed the robustness of major findings, with consistent effect sizes across cohorts (variance <15%). The reproducibility of these findings was further supported by expert review (Cohen's k=0.82) and computational validation. Our framework enables cross modal integration at a conceptual level without requiring patient ID matching, offering new possibilities for understanding AD pathology through fragmented data reuse and generating testable hypotheses for future research.

Vision Transformers with various attention modules have demonstrated superior performance on vision tasks. While using sparsity-adaptive attention, such as in DAT, has yielded strong results in image classification, the key-value pairs selected by deformable points lack semantic relevance when fine-tuning for semantic segmentation tasks. The query-aware sparsity attention in BiFormer seeks to focus each query on top-k routed regions. However, during attention calculation, the selected key-value pairs are influenced by too many irrelevant queries, reducing attention on the more important ones. To address these issues, we propose the Deformable Bi-level Routing Attention (DBRA) module, which optimizes the selection of key-value pairs using agent queries and enhances the interpretability of queries in attention maps. Based on this, we introduce the Deformable Bi-level Routing Attention Transformer (DeBiFormer), a novel general-purpose vision transformer built with the DBRA module. DeBiFormer has been validated on various computer vision tasks, including image classification, object detection, and semantic segmentation, providing strong evidence of its this http URL is available at {this https URL}

Generating music from images can enhance various applications, including background music for photo slideshows, social media experiences, and video creation. This paper presents an emotion-guided image-to-music generation framework that leverages the Valence-Arousal (VA) emotional space to produce music that aligns with the emotional tone of a given image. Unlike previous models that rely on contrastive learning for emotional consistency, the proposed approach directly integrates a VA loss function to enable accurate emotional alignment. The model employs a CNN-Transformer architecture, featuring pre-trained CNN image feature extractors and three Transformer encoders to capture complex, high-level emotional features from MIDI music. Three Transformer decoders refine these features to generate musically and emotionally consistent MIDI sequences. Experimental results on a newly curated emotionally paired image-MIDI dataset demonstrate the proposed model's superior performance across metrics such as Polyphony Rate, Pitch Entropy, Groove Consistency, and loss convergence.

Person detection methods are used widely in applications including visual

surveillance, pedestrian detection, and robotics. However, accurate detection

of persons from overhead fisheye images remains an open challenge because of

factors including person rotation and small-sized persons. To address the

person rotation problem, we convert the fisheye images into panoramic images.

For smaller people, we focused on the geometry of the panoramas. Conventional

detection methods tend to focus on larger people because these larger people

yield large significant areas for feature maps. In equirectangular panoramic

images, we find that a person's height decreases linearly near the top of the

images. Using this finding, we leverage the significance values and aggregate

tokens that are sorted based on these values to balance the significant areas.

In this leveraging process, we introduce panoramic distortion-aware

tokenization. This tokenization procedure divides a panoramic image using

self-similarity figures that enable determination of optimal divisions without

gaps, and we leverage the maximum significant values in each tile of token

groups to preserve the significant areas of smaller people. To achieve higher

detection accuracy, we propose a person detection and localization method that

combines panoramic-image remapping and the tokenization procedure. Extensive

experiments demonstrated that our method outperforms conventional methods when

applied to large-scale datasets.

Automatic lymph node (LN) segmentation and detection for cancer staging are critical. In clinical practice, computed tomography (CT) and positron emission tomography (PET) imaging detect abnormal LNs. Despite its low contrast and variety in nodal size and form, LN segmentation remains a challenging task. Deep convolutional neural networks frequently segment items in medical photographs. Most state-of-the-art techniques destroy image's resolution through pooling and convolution. As a result, the models provide unsatisfactory results. Keeping the issues in mind, a well-established deep learning technique UNet was modified using bilinear interpolation and total generalized variation (TGV) based upsampling strategy to segment and detect mediastinal lymph nodes. The modified UNet maintains texture discontinuities, selects noisy areas, searches appropriate balance points through backpropagation, and recreates image resolution. Collecting CT image data from TCIA, 5-patients, and ELCAP public dataset, a dataset was prepared with the help of experienced medical experts. The UNet was trained using those datasets, and three different data combinations were utilized for testing. Utilizing the proposed approach, the model achieved 94.8% accuracy, 91.9% Jaccard, 94.1% recall, and 93.1% precision on COMBO_3. The performance was measured on different datasets and compared with state-of-the-art approaches. The UNet++ model with hybridized strategy performed better than others.

This work explores using Large Language Models (LLMs) to quantitatively score student reflections, demonstrating that these automated scores improve the accuracy of predicting academic performance. The method achieved an 82.8% exact match with human evaluations for reflection assessment and enhanced at-risk student identification to 79.3% accuracy.

A Manhattan world lying along cuboid buildings is useful for camera angle estimation. However, accurate and robust angle estimation from fisheye images in the Manhattan world has remained an open challenge because general scene images tend to lack constraints such as lines, arcs, and vanishing points. To achieve higher accuracy and robustness, we propose a learning-based calibration method that uses heatmap regression, which is similar to pose estimation using keypoints, to detect the directions of labeled image coordinates. Simultaneously, our two estimators recover the rotation and remove fisheye distortion by remapping from a general scene image. Without considering vanishing-point constraints, we find that additional points for learning-based methods can be defined. To compensate for the lack of vanishing points in images, we introduce auxiliary diagonal points that have the optimal 3D arrangement of spatial uniformity. Extensive experiments demonstrated that our method outperforms conventional methods on large-scale datasets and with off-the-shelf cameras.

10 Jul 2025

Single-cell RNA sequencing (scRNA-seq) enables single-cell transcriptomic profiling, revealing cellular heterogeneity and rare populations. Recent deep learning models like Geneformer and Mouse-Geneformer perform well on tasks such as cell-type classification and in silico perturbation. However, their species-specific design limits cross-species generalization and translational applications, which are crucial for advancing translational research and drug discovery. We present Mix-Geneformer, a novel Transformer-based model that integrates human and mouse scRNA-seq data into a unified representation via a hybrid self-supervised approach combining Masked Language Modeling (MLM) and SimCSE-based contrastive loss to capture both shared and species-specific gene patterns. A rank-value encoding scheme further emphasizes high-variance gene signals during training. Trained on about 50 million cells from diverse human and mouse organs, Mix-Geneformer matched or outperformed state-of-the-art baselines in cell-type classification and in silico perturbation tasks, achieving 95.8% accuracy on mouse kidney data versus 94.9% from the best existing model. It also successfully identified key regulatory genes validated by in vivo studies. By enabling scalable cross-species transcriptomic modeling, Mix-Geneformer offers a powerful tool for comparative transcriptomics and translational applications. While our results demonstrate strong performance, we also acknowledge limitations, such as the computational cost and variability in zero-shot transfer.

Structured state-space models (SSMs) have been developed to offer more persistent memory retention than traditional recurrent neural networks, while maintaining real-time inference capabilities and addressing the time-complexity limitations of Transformers. Despite this intended persistence, the memory mechanism of canonical SSMs is theoretically designed to decay monotonically over time, meaning that more recent inputs are expected to be retained more accurately than earlier ones. Contrary to this theoretical expectation, however, the present study reveals a counterintuitive finding: when trained and evaluated on a synthetic, statistically balanced memorization task, SSMs predominantly preserve the *initially* presented data in memory. This pattern of memory bias, known as the *primacy effect* in psychology, presents a non-trivial challenge to the current theoretical understanding of SSMs and opens new avenues for future research.

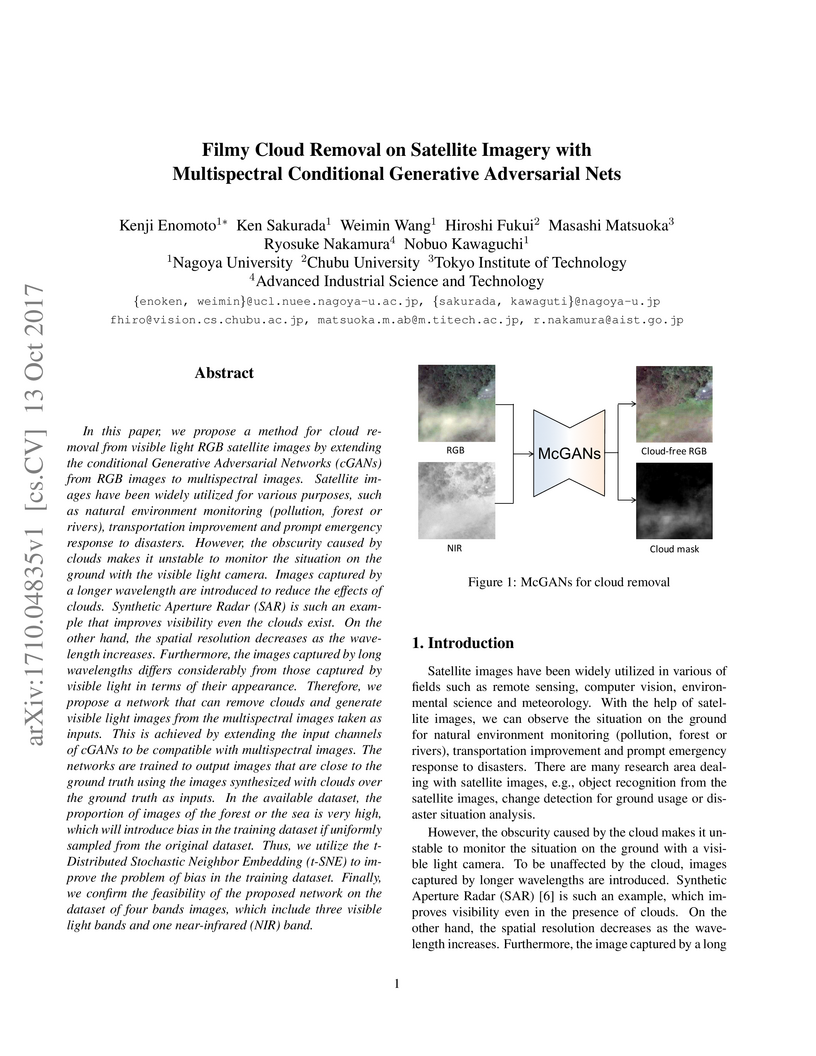

In this paper, we propose a method for cloud removal from visible light RGB

satellite images by extending the conditional Generative Adversarial Networks

(cGANs) from RGB images to multispectral images. Satellite images have been

widely utilized for various purposes, such as natural environment monitoring

(pollution, forest or rivers), transportation improvement and prompt emergency

response to disasters. However, the obscurity caused by clouds makes it

unstable to monitor the situation on the ground with the visible light camera.

Images captured by a longer wavelength are introduced to reduce the effects of

clouds. Synthetic Aperture Radar (SAR) is such an example that improves

visibility even the clouds exist. On the other hand, the spatial resolution

decreases as the wavelength increases. Furthermore, the images captured by long

wavelengths differs considerably from those captured by visible light in terms

of their appearance. Therefore, we propose a network that can remove clouds and

generate visible light images from the multispectral images taken as inputs.

This is achieved by extending the input channels of cGANs to be compatible with

multispectral images. The networks are trained to output images that are close

to the ground truth using the images synthesized with clouds over the ground

truth as inputs. In the available dataset, the proportion of images of the

forest or the sea is very high, which will introduce bias in the training

dataset if uniformly sampled from the original dataset. Thus, we utilize the

t-Distributed Stochastic Neighbor Embedding (t-SNE) to improve the problem of

bias in the training dataset. Finally, we confirm the feasibility of the

proposed network on the dataset of four bands images, which include three

visible light bands and one near-infrared (NIR) band.

The past few years have seen a surge in the application of quantum theory methodologies and quantum-like modeling in fields such as cognition, psychology, and decision-making. Despite the success of this approach in explaining various psychological phenomena such as order, conjunction, disjunction, and response replicability effects there remains a potential dissatisfaction due to its lack of clear connection to neurophysiological processes in the brain. Currently, it remains a phenomenological approach. In this paper, we develop a quantum-like representation of networks of communicating neurons. This representation is not based on standard quantum theory but on generalized probability theory (GPT), with a focus on the operational measurement framework. Specifically, we use a version of GPT that relies on ordered linear state spaces rather than the traditional complex Hilbert spaces. A network of communicating neurons is modeled as a weighted directed graph, which is encoded by its weight matrix. The state space of these weight matrices is embedded within the GPT framework, incorporating effect observables and state updates within the theory of measurement instruments a critical aspect of this model. This GPT based approach successfully reproduces key quantum-like effects, such as order, non-repeatability, and disjunction effects (commonly associated with decision interference). Moreover, this framework supports quantum-like modeling in medical diagnostics for neurological conditions such as depression and epilepsy. While this paper focuses primarily on cognition and neuronal networks, the proposed formalism and methodology can be directly applied to a wide range of biological and social networks.

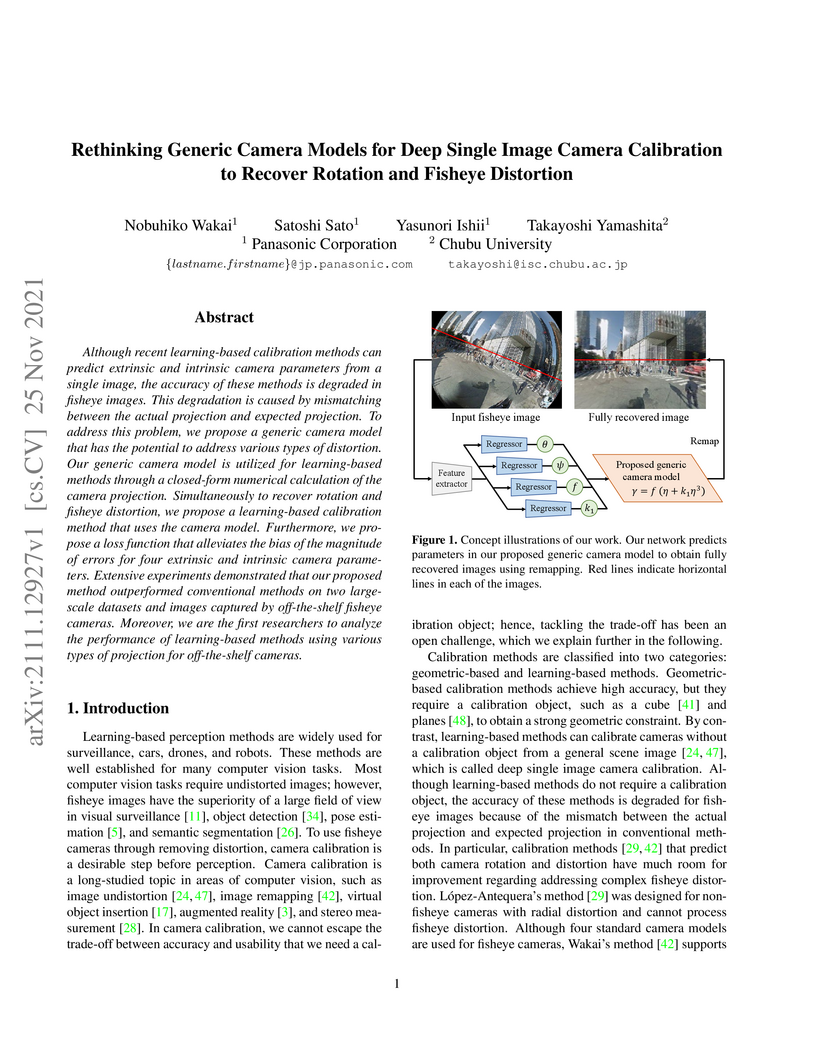

Although recent learning-based calibration methods can predict extrinsic and intrinsic camera parameters from a single image, the accuracy of these methods is degraded in fisheye images. This degradation is caused by mismatching between the actual projection and expected projection. To address this problem, we propose a generic camera model that has the potential to address various types of distortion. Our generic camera model is utilized for learning-based methods through a closed-form numerical calculation of the camera projection. Simultaneously to recover rotation and fisheye distortion, we propose a learning-based calibration method that uses the camera model. Furthermore, we propose a loss function that alleviates the bias of the magnitude of errors for four extrinsic and intrinsic camera parameters. Extensive experiments demonstrated that our proposed method outperformed conventional methods on two largescale datasets and images captured by off-the-shelf fisheye cameras. Moreover, we are the first researchers to analyze the performance of learning-based methods using various types of projection for off-the-shelf cameras.

Visual explanation enables human to understand the decision making of Deep Convolutional Neural Network (CNN), but it is insufficient to contribute the performance improvement. In this paper, we focus on the attention map for visual explanation, which represents high response value as the important region in image recognition. This region significantly improves the performance of CNN by introducing an attention mechanism that focuses on a specific region in an image. In this work, we propose Attention Branch Network (ABN), which extends the top-down visual explanation model by introducing a branch structure with an attention mechanism. ABN can be applicable to several image recognition tasks by introducing a branch for attention mechanism and is trainable for the visual explanation and image recognition in end-to-end manner. We evaluate ABN on several image recognition tasks such as image classification, fine-grained recognition, and multiple facial attributes recognition. Experimental results show that ABN can outperform the accuracy of baseline models on these image recognition tasks while generating an attention map for visual explanation. Our code is available at this https URL.

14 Jun 2025

Root systems are sets with remarkable symmetries and therefore they appear in many situations in mathematics. Among others, denominator formulae of root systems are very beautiful and mysterious equations which have several meanings from a variety of disciplines in mathematics. In this paper, we show a converse statement of this phenomena. Namely, for a given finite subset S of a Euclidean vector space V, define an equation F in the group ring Z[V] featuring the product part of denominator formulae. Then, a geometric condition for the support of F characterizes S being a set of positive roots of a finite/affine root system, recovering the denominator formula. This gives a novel characterization of the sets of positive roots of reduced finite/affine root systems.

We investigate a radiative electroweak gauge symmetry breaking scenario via

the Coleman-Weinberg mechanism starting from a completely flat Higgs potential

at the Planck scale ("flatland scenario"). In our previous paper, we showed

that the flatland scenario is possible only when an inequality K<1 among the

coefficients of the beta functions is satisfied. In this paper, we calculate

the number K in various models with an extra U(1) gauge sector in addition to

the SM particles. We also show the renormalization group (RG) behaviors of a

couple of the models as examples.

Reservoir computing, a machine learning framework used for modeling the brain, can predict temporal data with little observations and minimal computational resources. However, it is difficult to accurately reproduce the long-term target time series because the reservoir system becomes unstable. This predictive capability is required for a wide variety of time-series processing, including predictions of motor timing and chaotic dynamical systems. This study proposes oscillation-driven reservoir computing (ODRC) with feedback, where oscillatory signals are fed into a reservoir network to stabilize the network activity and induce complex reservoir dynamics. The ODRC can reproduce long-term target time series more accurately than conventional reservoir computing methods in a motor timing and chaotic time-series prediction tasks. Furthermore, it generates a time series similar to the target in the unexperienced period, that is, it can learn the abstract generative rules from limited observations. Given these significant improvements made by the simple and computationally inexpensive implementation, the ODRC would serve as a practical model of various time series data. Moreover, we will discuss biological implications of the ODRC, considering it as a model of neural oscillations and their cerebellar processors.

There are no more papers matching your filters at the moment.