Harvey Mudd College

This research presents a framework for dissecting the algorithmic underpinnings of reasoning in Large Language Models, revealing that complex reasoning emerges from a compositional geometry of identifiable and steerable algorithmic primitives. It demonstrates these primitives can be causally induced and algebraically manipulated in activation space, showing how reasoning finetuning enhances their systematic use and cross-task transferability.

This paper systematically evaluates the multifaceted capabilities of Large Language Models (LLMs) in negotiation dialogues by designing 35 fine-grained tasks covering comprehension, partner modeling, and generation. The study finds that GPT-4 consistently outperforms other LLMs, showing strong out-of-the-box Theory of Mind, but highlights significant limitations in strategic reasoning and accurately capturing subjective human states like satisfaction.

Reinforcement Learning from Verifiable Rewards (RLVR) has emerged as a promising approach to improve correctness in LLMs, however, in many scientific problems, the objective is not necessarily to produce the correct answer, but instead to produce a diverse array of candidates which satisfy a set of constraints. We study this challenge in the context of materials generation. To this end, we introduce PLaID++, an LLM post-trained for stable and property-guided crystal generation. We find that performance hinges on our crystallographic representation and reward formulation. First, we introduce a compact, symmetry-informed Wyckoff text representation which improves computational efficiency and encourages generalization from physical priors. Second, we demonstrate that temperature scaling acts as an entropy regularizer which counteracts mode collapse and encourages exploration. By encoding symmetry constraints directly into text and guiding model outputs towards desirable chemical space, PLaID++ generates structures that are thermodynamically stable, unique, and novel at a ∼50\% greater rate than prior methods and conditionally generates structures with desired space group properties. Our work demonstrates the potential of adapting post-training techniques from natural language processing to materials design, paving the way for targeted and efficient discovery of novel materials.

Generative machine learning (ML) models hold great promise for accelerating materials discovery through the inverse design of inorganic crystals, enabling an unprecedented exploration of chemical space. Yet, the lack of standardized evaluation frameworks makes it challenging to evaluate, compare, and further develop these ML models meaningfully. In this work, we introduce LeMat-GenBench, a unified benchmark for generative models of crystalline materials, supported by a set of evaluation metrics designed to better inform model development and downstream applications. We release both an open-source evaluation suite and a public leaderboard on Hugging Face, and benchmark 12 recent generative models. Results reveal that an increase in stability leads to a decrease in novelty and diversity on average, with no model excelling across all dimensions. Altogether, LeMat-GenBench establishes a reproducible and extensible foundation for fair model comparison and aims to guide the development of more reliable, discovery-oriented generative models for crystalline materials.

Recently, many benchmarks and datasets have been developed to evaluate Vision-Language Models (VLMs) using visual question answering (VQA) pairs, and models have shown significant accuracy improvements. However, these benchmarks rarely test the model's ability to accurately complete visual entailment, for instance, accepting or refuting a hypothesis based on the image. To address this, we propose COREVQA (Crowd Observations and Reasoning Entailment), a benchmark of 5608 image and synthetically generated true/false statement pairs, with images derived from the CrowdHuman dataset, to provoke visual entailment reasoning on challenging crowded images. Our results show that even the top-performing VLMs achieve accuracy below 80%, with other models performing substantially worse (39.98%-69.95%). This significant performance gap reveals key limitations in VLMs' ability to reason over certain types of image-question pairs in crowded scenes.

While large language models (LLMs) now excel at code generation, a key aspect

of software development is the art of refactoring: consolidating code into

libraries of reusable and readable programs. In this paper, we introduce LILO,

a neurosymbolic framework that iteratively synthesizes, compresses, and

documents code to build libraries tailored to particular problem domains. LILO

combines LLM-guided program synthesis with recent algorithmic advances in

automated refactoring from Stitch: a symbolic compression system that

efficiently identifies optimal lambda abstractions across large code corpora.

To make these abstractions interpretable, we introduce an auto-documentation

(AutoDoc) procedure that infers natural language names and docstrings based on

contextual examples of usage. In addition to improving human readability, we

find that AutoDoc boosts performance by helping LILO's synthesizer to interpret

and deploy learned abstractions. We evaluate LILO on three inductive program

synthesis benchmarks for string editing, scene reasoning, and graphics

composition. Compared to existing neural and symbolic methods - including the

state-of-the-art library learning algorithm DreamCoder - LILO solves more

complex tasks and learns richer libraries that are grounded in linguistic

knowledge.

25 Aug 2025

University of Cambridge

University of Cambridge University of California, Santa Barbara

University of California, Santa Barbara New York University

New York University Stanford UniversityUniversity of HoustonUniversity of Colorado BoulderNew Jersey Institute of TechnologyUniversity of BathUniversity of VermontCarleton CollegeMiddlebury CollegeHamline UniversityHarvey Mudd CollegeUniversidad Nacional Autonoma de MexicoDenison University

Stanford UniversityUniversity of HoustonUniversity of Colorado BoulderNew Jersey Institute of TechnologyUniversity of BathUniversity of VermontCarleton CollegeMiddlebury CollegeHamline UniversityHarvey Mudd CollegeUniversidad Nacional Autonoma de MexicoDenison UniversityMathematical models of complex social systems can enrich social scientific theory, inform interventions, and shape policy. From voting behavior to economic inequality and urban development, such models influence decisions that affect millions of lives. Thus, it is especially important to formulate and present them with transparency, reproducibility, and humility. Modeling in social domains, however, is often uniquely challenging. Unlike in physics or engineering, researchers often lack controlled experiments or abundant, clean data. Observational data is sparse, noisy, partial, and missing in systematic ways. In such an environment, how can we build models that can inform science and decision-making in transparent and responsible ways?

13 Oct 2024

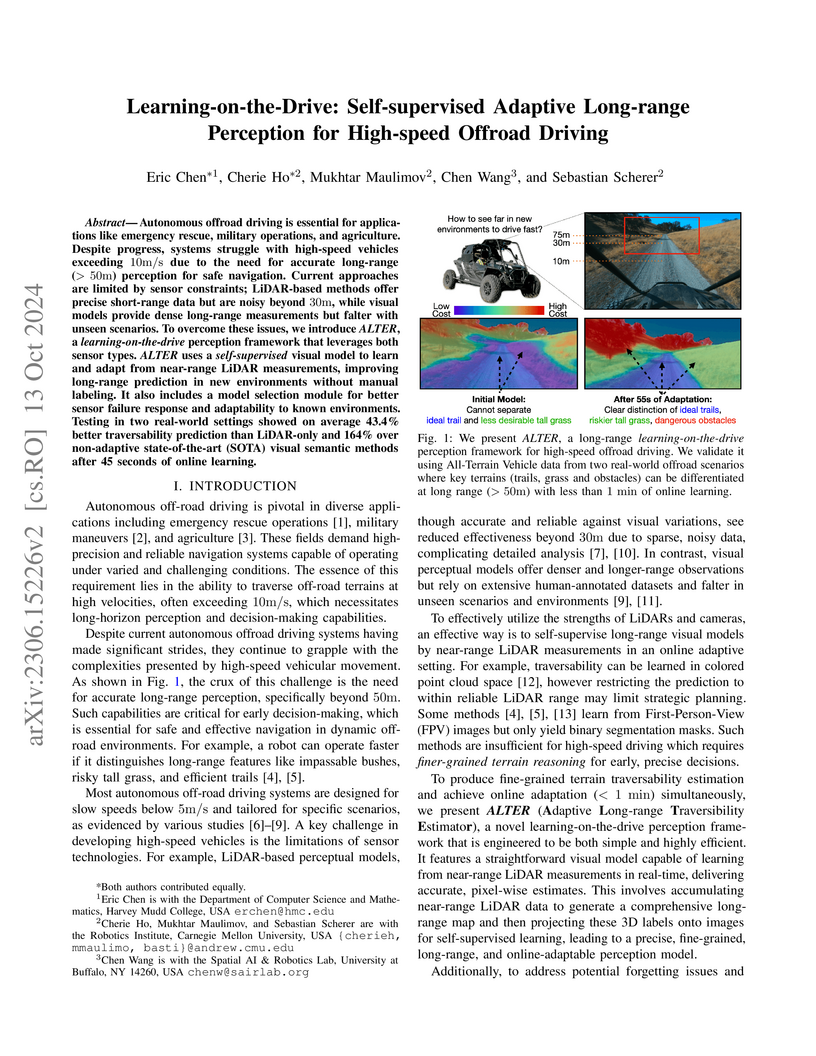

Autonomous offroad driving is essential for applications like emergency rescue, military operations, and agriculture. Despite progress, systems struggle with high-speed vehicles exceeding 10m/s due to the need for accurate long-range (> 50m) perception for safe navigation. Current approaches are limited by sensor constraints; LiDAR-based methods offer precise short-range data but are noisy beyond 30m, while visual models provide dense long-range measurements but falter with unseen scenarios. To overcome these issues, we introduce ALTER, a learning-on-the-drive perception framework that leverages both sensor types. ALTER uses a self-supervised visual model to learn and adapt from near-range LiDAR measurements, improving long-range prediction in new environments without manual labeling. It also includes a model selection module for better sensor failure response and adaptability to known environments. Testing in two real-world settings showed on average 43.4% better traversability prediction than LiDAR-only and 164% over non-adaptive state-of-the-art (SOTA) visual semantic methods after 45 seconds of online learning.

Video analytics is widely used in contemporary systems and services. At the forefront of video analytics are video queries that users develop to find objects of particular interest. Building upon the insight that video objects (e.g., human, animals, cars, etc.), the center of video analytics, are similar in spirit to objects modeled by traditional object-oriented languages, we propose to develop an object-oriented approach to video analytics. This approach, named VQPy, consists of a frontend\unicodex2015a Python variant with constructs that make it easy for users to express video objects and their interactions\unicodex2015as well as an extensible backend that can automatically construct and optimize pipelines based on video objects. We have implemented and open-sourced VQPy, which has been productized in Cisco as part of its DeepVision framework.

13 Jan 2023

ULYSSES is a Python package that calculates the baryon asymmetry produced from leptogenesis in the context of a type-I seesaw mechanism. In this release, the new features include code which solves the Boltzmann equations for low-scale leptogenesis; the complete Boltzmann equations for thermal leptogenesis applying proper quantum statistics without assuming kinetic equilibrium of the right-handed neutrinos; and, primordial black hole-induced leptogenesis. ULYSSES version 2 has the added functionality of a pre-provided script for a two-dimensional grid scan of the parameter space. As before, the emphasis of the code is on user flexibility, rapid evaluation and is publicly available at this https URL.

Logs are a first-hand source of information for software maintenance and failure diagnosis. Log parsing, which converts semi-structured log messages into structured templates, is a prerequisite for automated log analysis tasks such as anomaly detection, troubleshooting, and root cause analysis. However, existing log parsers fail in real-world systems for three main reasons. First, traditional heuristics-based parsers require handcrafted features and domain knowledge, which are difficult to generalize at scale. Second, existing large language model-based parsers rely on periodic offline processing, limiting their effectiveness in real-time use cases. Third, existing online parsing algorithms are susceptible to log drift, where slight log changes create false positives that drown out real anomalies. To address these challenges, we propose HELP, a Hierarchical Embeddings-based Log Parser. HELP is the first online semantic-based parser to leverage LLMs for performant and cost-effective log parsing. We achieve this through a novel hierarchical embeddings module, which fine-tunes a text embedding model to cluster logs before parsing, reducing querying costs by multiple orders of magnitude. To combat log drift, we also develop an iterative rebalancing module, which periodically updates existing log groupings. We evaluate HELP extensively on 14 public large-scale datasets, showing that HELP achieves significantly higher F1-weighted grouping and parsing accuracy than current state-of-the-art online log parsers. We also implement HELP into Iudex's production observability platform, confirming HELP's practicality in a production environment. Our results show that HELP is effective and efficient for high-throughput real-world log parsing.

We often rely on our intuition to anticipate the direction of a conversation. Endowing automated systems with similar foresight can enable them to assist human-human interactions. Recent work on developing models with this predictive capacity has focused on the Conversations Gone Awry (CGA) task: forecasting whether an ongoing conversation will derail. In this work, we revisit this task and introduce the first uniform evaluation framework, creating a benchmark that enables direct and reliable comparisons between different architectures. This allows us to present an up-to-date overview of the current progress in CGA models, in light of recent advancements in language modeling. Our framework also introduces a novel metric that captures a model's ability to revise its forecast as the conversation progresses.

Artificial intelligence systems, particularly large language models (LLMs), are increasingly being employed in high-stakes decisions that impact both individuals and society at large, often without adequate safeguards to ensure safety, quality, and equity. Yet LLMs hallucinate, lack common sense, and are biased - shortcomings that may reflect LLMs' inherent limitations and thus may not be remedied by more sophisticated architectures, more data, or more human feedback. Relying solely on LLMs for complex, high-stakes decisions is therefore problematic. Here we present a hybrid collective intelligence system that mitigates these risks by leveraging the complementary strengths of human experience and the vast information processed by LLMs. We apply our method to open-ended medical diagnostics, combining 40,762 differential diagnoses made by physicians with the diagnoses of five state-of-the art LLMs across 2,133 medical cases. We show that hybrid collectives of physicians and LLMs outperform both single physicians and physician collectives, as well as single LLMs and LLM ensembles. This result holds across a range of medical specialties and professional experience, and can be attributed to humans' and LLMs' complementary contributions that lead to different kinds of errors. Our approach highlights the potential for collective human and machine intelligence to improve accuracy in complex, open-ended domains like medical diagnostics.

10 May 2012

University of Cincinnati California Institute of Technology

California Institute of Technology Harvard University

Harvard University Imperial College London

Imperial College London UC BerkeleyUniversity of Edinburgh

UC BerkeleyUniversity of Edinburgh University of British Columbia

University of British Columbia Johns Hopkins UniversityColorado State UniversityUniversity of Colorado

Johns Hopkins UniversityColorado State UniversityUniversity of Colorado Lawrence Berkeley National LaboratoryUniversity of LiverpoolUniversity of IowaUniversitat de BarcelonaIowa State UniversityUniversity of BergenBrunel UniversityLawrence Livermore National LaboratoryIndian Institute of Technology GuwahatiBudker Institute of Nuclear PhysicsINFN, Laboratori Nazionali di FrascatiINFN-Sezione di GenovaHarvey Mudd CollegeUniversity of California at IrvineUniversity of California at Santa BarbaraUniversity of California at RiversideLaboratoire d’Annecy-le-Vieux de Physique des ParticulesLaboratoire de l’Acc ́el ́erateur Lin ́eaireINFN (Sezione di Bari)Laboratoire Leprince-Ringuet - Ecole PolytechniqueINFN-Sezione di FerraraHumboldt-Universit at zu BerlinRuhr-Universit

¨at BochumTechnische Universit

at DortmundTechnische Universit

at DresdenUniversit

at Heidelberg

Lawrence Berkeley National LaboratoryUniversity of LiverpoolUniversity of IowaUniversitat de BarcelonaIowa State UniversityUniversity of BergenBrunel UniversityLawrence Livermore National LaboratoryIndian Institute of Technology GuwahatiBudker Institute of Nuclear PhysicsINFN, Laboratori Nazionali di FrascatiINFN-Sezione di GenovaHarvey Mudd CollegeUniversity of California at IrvineUniversity of California at Santa BarbaraUniversity of California at RiversideLaboratoire d’Annecy-le-Vieux de Physique des ParticulesLaboratoire de l’Acc ́el ́erateur Lin ́eaireINFN (Sezione di Bari)Laboratoire Leprince-Ringuet - Ecole PolytechniqueINFN-Sezione di FerraraHumboldt-Universit at zu BerlinRuhr-Universit

¨at BochumTechnische Universit

at DortmundTechnische Universit

at DresdenUniversit

at Heidelberg

California Institute of Technology

California Institute of Technology Harvard University

Harvard University Imperial College London

Imperial College London UC BerkeleyUniversity of Edinburgh

UC BerkeleyUniversity of Edinburgh University of British Columbia

University of British Columbia Johns Hopkins UniversityColorado State UniversityUniversity of Colorado

Johns Hopkins UniversityColorado State UniversityUniversity of Colorado Lawrence Berkeley National LaboratoryUniversity of LiverpoolUniversity of IowaUniversitat de BarcelonaIowa State UniversityUniversity of BergenBrunel UniversityLawrence Livermore National LaboratoryIndian Institute of Technology GuwahatiBudker Institute of Nuclear PhysicsINFN, Laboratori Nazionali di FrascatiINFN-Sezione di GenovaHarvey Mudd CollegeUniversity of California at IrvineUniversity of California at Santa BarbaraUniversity of California at RiversideLaboratoire d’Annecy-le-Vieux de Physique des ParticulesLaboratoire de l’Acc ́el ́erateur Lin ́eaireINFN (Sezione di Bari)Laboratoire Leprince-Ringuet - Ecole PolytechniqueINFN-Sezione di FerraraHumboldt-Universit at zu BerlinRuhr-Universit

¨at BochumTechnische Universit

at DortmundTechnische Universit

at DresdenUniversit

at Heidelberg

Lawrence Berkeley National LaboratoryUniversity of LiverpoolUniversity of IowaUniversitat de BarcelonaIowa State UniversityUniversity of BergenBrunel UniversityLawrence Livermore National LaboratoryIndian Institute of Technology GuwahatiBudker Institute of Nuclear PhysicsINFN, Laboratori Nazionali di FrascatiINFN-Sezione di GenovaHarvey Mudd CollegeUniversity of California at IrvineUniversity of California at Santa BarbaraUniversity of California at RiversideLaboratoire d’Annecy-le-Vieux de Physique des ParticulesLaboratoire de l’Acc ́el ́erateur Lin ́eaireINFN (Sezione di Bari)Laboratoire Leprince-Ringuet - Ecole PolytechniqueINFN-Sezione di FerraraHumboldt-Universit at zu BerlinRuhr-Universit

¨at BochumTechnische Universit

at DortmundTechnische Universit

at DresdenUniversit

at HeidelbergA precise measurement of the cross section of the process

e+e−→π+π−(γ) from threshold to an energy of 3GeV is obtained

with the initial-state radiation (ISR) method using 232fb−1 of data

collected with the BaBar detector at e+e− center-of-mass energies near

10.6GeV. The ISR luminosity is determined from a study of the leptonic process

e+e−→μ+μ−(γ)γISR, which is found to agree with the

next-to-leading-order QED prediction to within 1.1%. The cross section for the

process e+e−→π+π−(γ) is obtained with a systematic uncertainty

of 0.5% in the dominant ρ resonance region. The leading-order hadronic

contribution to the muon magnetic anomaly calculated using the measured

ππ cross section from threshold to 1.8GeV is $(514.1 \pm 2.2({\rm stat})

\pm 3.1({\rm syst}))\times 10^{-10}$.

10 Mar 2021

The magnetic properties of the two isostructural molecule-based magnets, Ni(NCS)2(thiourea)2, S = 1, [thiourea = SC(NH2)2] and Co(NCS)2(thiourea)2, S = 3/2, are characterised using several techniques in order to rationalise their relationship with structural parameters and ascertain magnetic changes caused by substitution of the spin. Zero-field heat capacity and muon-spin relaxation measurements reveal low-temperature long-range ordering in both compounds, in addition to Ising-like (D < 0) single-ion anisotropy (DCo∼ -100 K, DNi∼ -10 K). Crystal and electronic structure, combined with DC-field magnetometry, affirm highly quasi-one-dimensional behaviour, with ferromagnetic intrachain exchange interactions JCo≈+4 K and JNi∼+100 K and weak antiferromagnetic interchain exchange, on the order of J′ ∼−0.1 K. Electron charge and spin-density mapping reveals through-space exchange as a mechanism to explain the large discrepancy in J-values despite, from a structural perspective, the highly similar exchange pathways in both materials. Both species can be compared to the similar compounds MCl2(thiourea)4, M = Ni(II) (DTN) and Co(II) (DTC), where DTN is know to harbour two magnetic field-induced quantum critical points. Direct comparison of DTN and DTC with the compounds studied here shows that substituting the halide Cl− ion, for the NCS− ion, results in a dramatic change in both the structural and magnetic properties.

UV radiation has been used as a disinfection strategy to deactivate a wide range of pathogens, but existing irradiation strategies do not ensure sufficient exposure of all environmental surfaces and/or require long disinfection times. We present a near-optimal coverage planner for mobile UV disinfection robots. The formulation optimizes the irradiation time efficiency, while ensuring that a sufficient dosage of radiation is received by each surface. The trajectory and dosage plan are optimized taking collision and light occlusion constraints into account. We propose a two-stage scheme to approximate the solution of the induced NP-hard optimization, and, for efficiency, perform key irradiance and occlusion calculations on a GPU. Empirical results show that our technique achieves more coverage for the same exposure time as strategies for existing UV robots, can be used to compare UV robot designs, and produces near-optimal plans. This is an extended version of the paper originally contributed to ICRA2021.

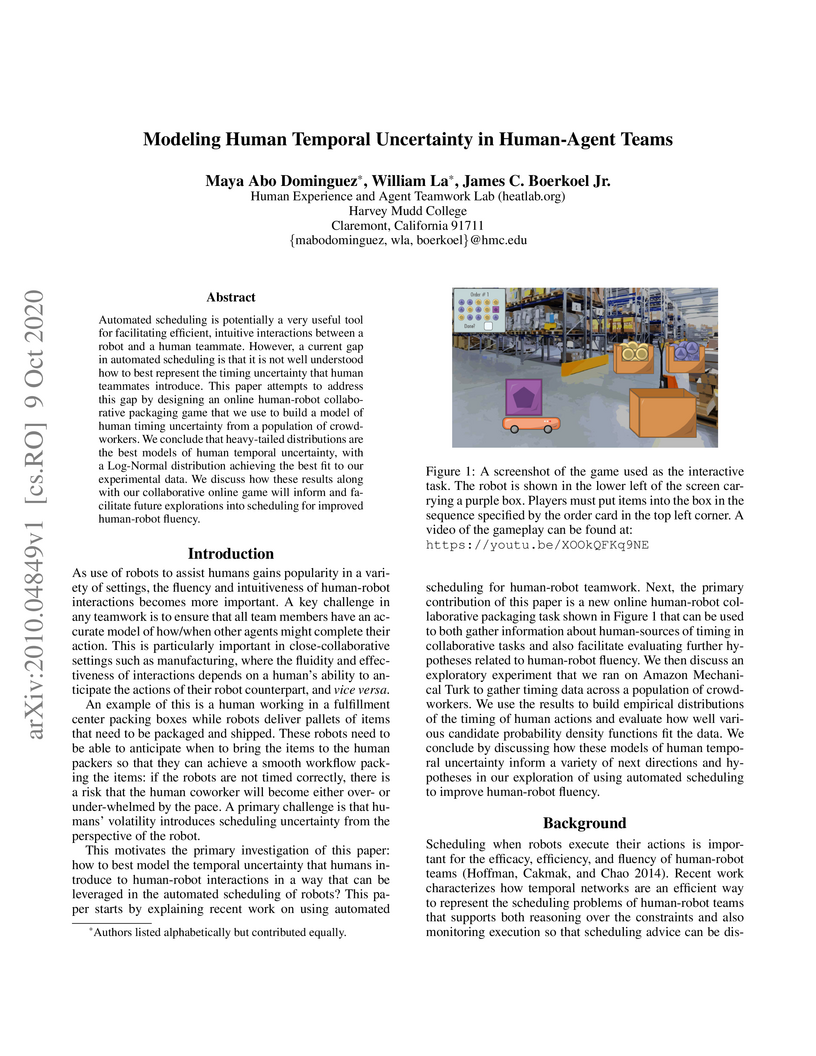

Automated scheduling is potentially a very useful tool for facilitating efficient, intuitive interactions between a robot and a human teammate. However, a current gapin automated scheduling is that it is not well understood how to best represent the timing uncertainty that human teammates introduce. This paper attempts to address this gap by designing an online human-robot collaborative packaging game that we use to build a model of human timing uncertainty from a population of crowd-workers. We conclude that heavy-tailed distributions are the best models of human temporal uncertainty, with a Log-Normal distribution achieving the best fit to our experimental data. We discuss how these results along with our collaborative online game will inform and facilitate future explorations into scheduling for improved human-robot fluency.

06 Feb 2024

Extracting relevant information from atomistic simulations relies on a

complete and accurate characterization of atomistic configurations. We present

a framework for characterizing atomistic configurations in terms of a complete

and symmetry-adapted basis, referred to as strain functionals. In this approach

a Gaussian kernel is used to map discrete atomic quantities, such as number

density, velocities, and forces, to continuous fields. The local atomic

configurations are then characterized using nth order central moments of the

local number density. The initial Cartesian moments are recast unitarily into a

Solid Harmonic Polynomial basis using SO(3) decompositions. Rotationally

invariant metrics, referred to as Strain Functional Descriptors (SFDs), are

constructed from the terms in the SO(3) decomposition using Clebsch-Gordan

coupling. A key distinction compared to related methods is that a minimal but

complete set of descriptors is identified. These descriptors characterize the

local geometries numerically in terms of shape, size, and orientation

descriptors that recognize n-fold symmetry axes and net shapes such as

trigonal, cubic, hexagonal, etc. They can easily distinguish between most

different crystal symmetries using n = 4, identify defects (such as

dislocations and stacking faults), measure local deformation, and can be used

in conjunction with machine learning techniques for in situ analysis of finite

temperature atomistic simulation data and quantification of defect dynamics.

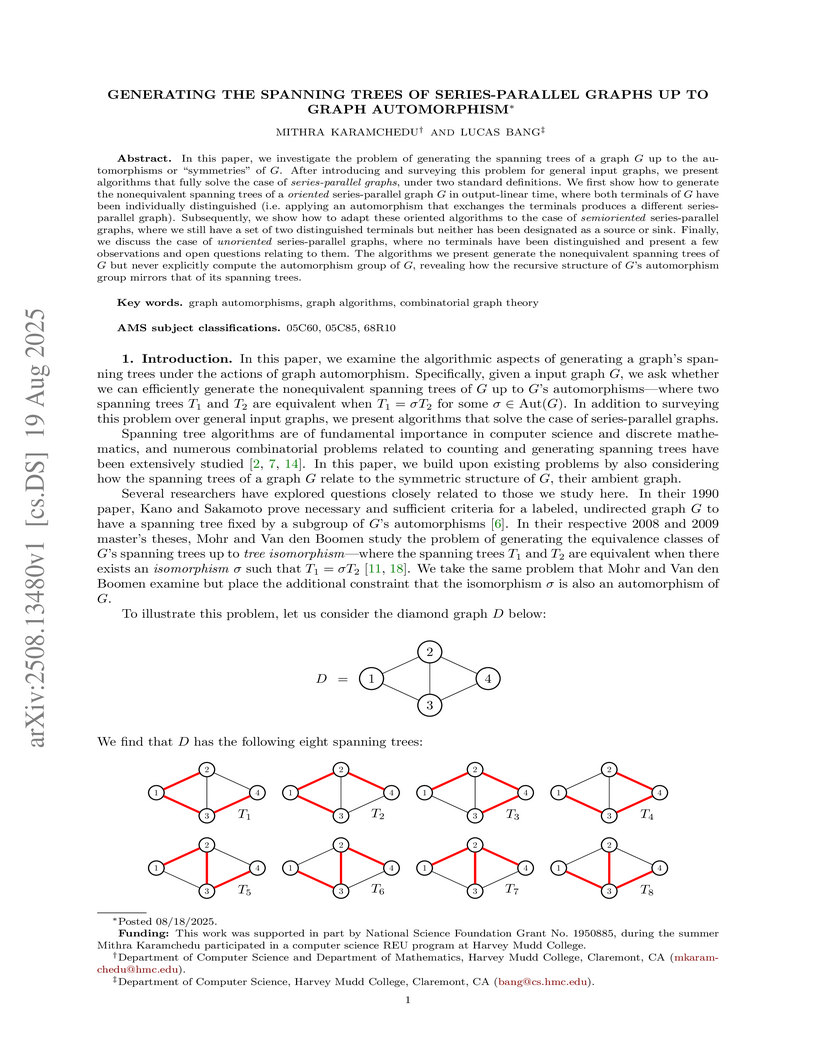

In this paper, we investigate the problem of generating the spanning trees of a graph G up to the automorphisms or "symmetries" of G. After introducing and surveying this problem for general input graphs, we present algorithms that fully solve the case of series-parallel graphs, under two standard definitions. We first show how to generate the nonequivalent spanning trees of a oriented series-parallel graph G in output-linear time, where both terminals of G have been individually distinguished (i.e. applying an automorphism that exchanges the terminals produces a different series-parallel graph). Subsequently, we show how to adapt these oriented algorithms to the case of semioriented series-parallel graphs, where we still have a set of two distinguished terminals but neither has been designated as a source or sink. Finally, we discuss the case of unoriented series-parallel graphs, where no terminals have been distinguished and present a few observations and open questions relating to them. The algorithms we present generate the nonequivalent spanning trees of G but never explicitly compute the automorphism group of G, revealing how the recursive structure of G's automorphism group mirrors that of its spanning trees.

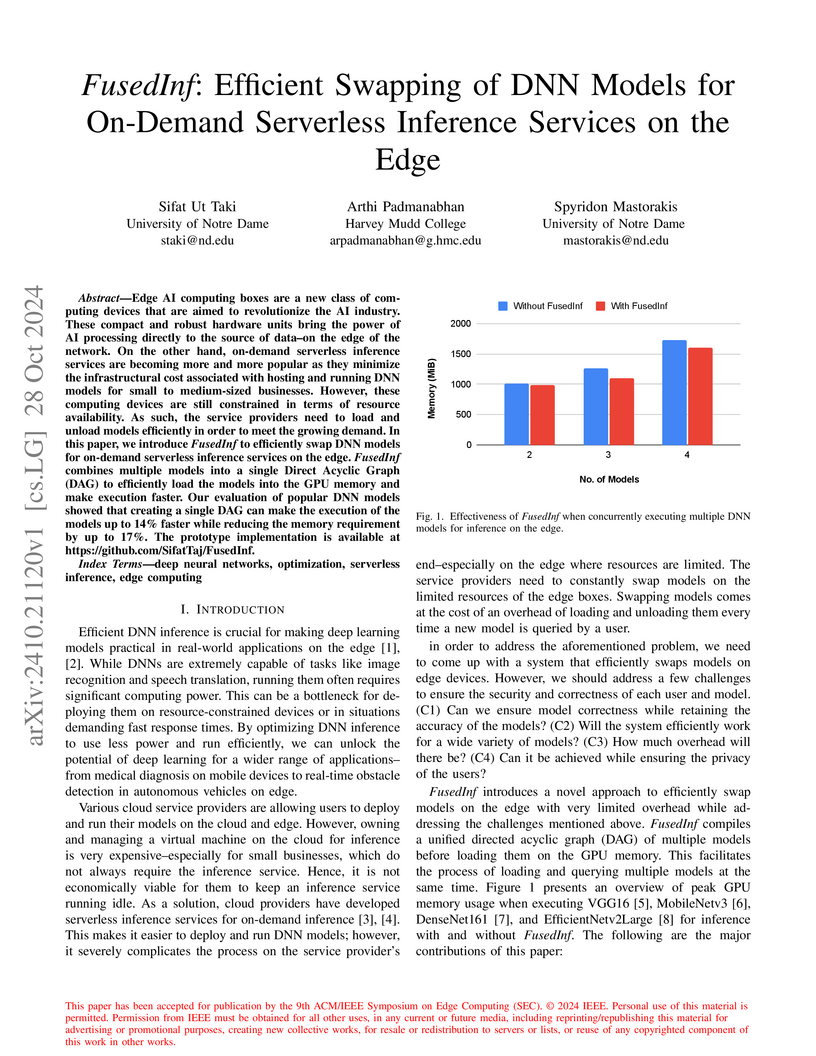

Edge AI computing boxes are a new class of computing devices that are aimed

to revolutionize the AI industry. These compact and robust hardware units bring

the power of AI processing directly to the source of data--on the edge of the

network. On the other hand, on-demand serverless inference services are

becoming more and more popular as they minimize the infrastructural cost

associated with hosting and running DNN models for small to medium-sized

businesses. However, these computing devices are still constrained in terms of

resource availability. As such, the service providers need to load and unload

models efficiently in order to meet the growing demand. In this paper, we

introduce FusedInf to efficiently swap DNN models for on-demand serverless

inference services on the edge. FusedInf combines multiple models into a single

Direct Acyclic Graph (DAG) to efficiently load the models into the GPU memory

and make execution faster. Our evaluation of popular DNN models showed that

creating a single DAG can make the execution of the models up to 14\% faster

while reducing the memory requirement by up to 17\%. The prototype

implementation is available at this https URL

There are no more papers matching your filters at the moment.