Honda Research Institute Europe GmbH

Honda Research Institute Europe GmbH, Hessian AI, Technische Universit

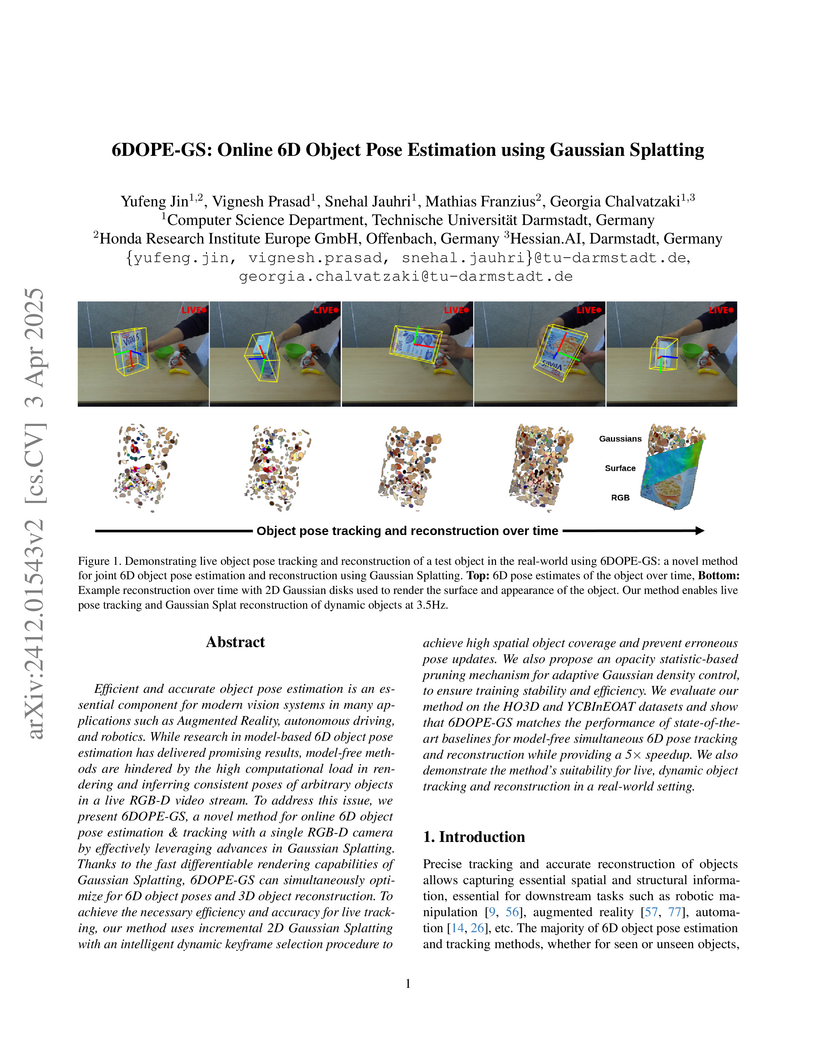

Efficient and accurate object pose estimation is an essential component for modern vision systems in many applications such as Augmented Reality, autonomous driving, and robotics. While research in model-based 6D object pose estimation has delivered promising results, model-free methods are hindered by the high computational load in rendering and inferring consistent poses of arbitrary objects in a live RGB-D video stream. To address this issue, we present 6DOPE-GS, a novel method for online 6D object pose estimation and tracking with a single RGB-D camera by effectively leveraging advances in Gaussian Splatting. Thanks to the fast differentiable rendering capabilities of Gaussian Splatting, 6DOPE-GS can simultaneously optimize for 6D object poses and 3D object reconstruction. To achieve the necessary efficiency and accuracy for live tracking, our method uses incremental 2D Gaussian Splatting with an intelligent dynamic keyframe selection procedure to achieve high spatial object coverage and prevent erroneous pose updates. We also propose an opacity statistic-based pruning mechanism for adaptive Gaussian density control, to ensure training stability and efficiency. We evaluate our method on the HO3D and YCBInEOAT datasets and show that 6DOPE-GS matches the performance of state-of-the-art baselines for model-free simultaneous 6D pose tracking and reconstruction while providing a 5× speedup. We also demonstrate the method's suitability for live, dynamic object tracking and reconstruction in a real-world setting.

Object pose estimation is a fundamental problem in robotics and computer vision, yet it remains challenging due to partial observability, occlusions, and object symmetries, which inevitably lead to pose ambiguity and multiple hypotheses consistent with the same observation. While deterministic deep networks achieve impressive performance under well-constrained conditions, they are often overconfident and fail to capture the multi-modality of the underlying pose distribution. To address these challenges, we propose a novel probabilistic framework that leverages flow matching on the SE(3) manifold for estimating 6D object pose distributions. Unlike existing methods that regress a single deterministic output, our approach models the full pose distribution with a sample-based estimate and enables reasoning about uncertainty in ambiguous cases such as symmetric objects or severe occlusions. We achieve state-of-the-art results on Real275, YCB-V, and LM-O, and demonstrate how our sample-based pose estimates can be leveraged in downstream robotic manipulation tasks such as active perception for disambiguating uncertain viewpoints or guiding grasp synthesis in an uncertainty-aware manner.

Monash University, Ghent University, Beihang University, University of Granada, University of Sydney, University of Queensland, Deakin University, Honda Research Institute Europe GmbH, University of Nottingham, University of Adelaide, Mines Paris, Centro de Investigación en Matemáticas, Universidad Politécnica de Madrid, Vrije Universiteit Brussel

Predict+Optimize frameworks integrate forecasting and optimization to address real-world challenges such as renewable energy scheduling, where variability and uncertainty are critical factors. This paper benchmarks solutions from the IEEE-CIS Technical Challenge on Predict+Optimize for Renewable Energy Scheduling, focusing on forecasting renewable production and demand and optimizing energy cost. The competition attracted 49 participants in total. The top-ranked method employed stochastic optimization using LightGBM ensembles, and achieved at least a 2% reduction in energy costs compared to deterministic approaches, demonstrating that the most accurate point forecast does not necessarily guarantee the best performance in downstream optimization. The published data and problem setting establish a benchmark for further research into integrated forecasting-optimization methods for energy systems, highlighting the importance of considering forecast uncertainty in optimization models to achieve cost-effective and reliable energy management. The novelty of this work lies in its comprehensive evaluation of Predict+Optimize methodologies applied to a real-world renewable energy scheduling problem, providing insights into the scalability, generalizability, and effectiveness of the proposed solutions. Potential applications extend beyond energy systems to any domain requiring integrated forecasting and optimization, such as supply chain management, transportation planning, and financial portfolio optimization.

We present a large real-world dataset obtained from monitoring a smart company facility over the course of six years, from 2018 to 2023. The dataset includes energy consumption data from various facility areas and components, energy production data from a photovoltaic system and a combined heat and power plant, operational data from heating and cooling systems, and weather data from an on-site weather station. The measurement sensors installed throughout the facility are organized in a hierarchical metering structure with multiple sub-metering levels, which is reflected in the dataset. The dataset contains measurement data from 72 energy meters, 9 heat meters, and a weather station. Both raw and processed data at different processing levels, including labeled issues, are available. In this paper, we describe the data acquisition and post-processing employed to create the dataset. The dataset enables the application of a wide range of methods in the domain of energy management, including optimization, modeling, and machine learning, to optimize building operations and reduce costs and carbon emissions.

Quantum optimization has emerged as a promising approach for tackling complicated classical optimization problems using quantum devices. However, the extent to which such algorithms harness genuine quantum resources and the role of these resources in their success remain open questions. In this work, we investigate the resource requirements of the Quantum Approximate Optimization Algorithm (QAOA) through the lens of the resource theory of nonstabilizerness. We demonstrate that the nonstabilizerness in QAOA increases with circuit depth before it reaches a maximum, to fall again during the approach to the final solution state -- creating a barrier that limits the algorithm's capability for shallow circuits. We find curves corresponding to different depths to collapse under a simple rescaling, and we reveal a nontrivial relationship between the final nonstabilizerness and the success probability. Finally, we identify a similar nonstabilizerness barrier also in adiabatic quantum annealing. Our results provide deeper insights into how quantum resources influence quantum optimization.

We present SRWToolkit, an open-source Wizard of Oz toolkit designed to facilitate the rapid prototyping of social robotic avatars powered by local large language models (LLMs). Our web-based toolkit enables multimodal interaction through text input, button-activated speech, and wake-word command. The toolkit offers real-time configuration of avatar appearance, behavior, language, and voice via an intuitive control panel. In contrast to prior works that rely on cloud-based LLM services, SRWToolkit emphasizes modularity and ensures on-device functionality through local LLM inference. In our small-scale user study (n=11), participants created and interacted with diverse robotic roles (hospital receptionist, mathematics teacher, and driving assistant), which demonstrated positive outcomes in the toolkit's usability, trust, and user experience. The toolkit enables rapid and efficient development of robot characters customized to researchers' needs, supporting scalable research in human-robot interaction.

19 Jun 2025

Intelligent devices for supporting persons with vision impairment are becoming more widespread, but they lag behind the advances in intelligent driver assistance systems. As a first step forward, this work discusses the integration of the risk model technology, previously used in autonomous driving and advanced driver assistance systems, into an assistance device for persons with vision impairment. The risk model computes a probabilistic collision risk from object trajectories, which has previously been shown to give better indications of an object's collision potential than distance or time-to-contact measures in vehicle scenarios. In this work, we show that the risk model is also superior in warning persons with vision impairment about dangerous objects. Our experiments demonstrate that the warning accuracy of the risk model is 67%, while both distance and time-to-contact measures reach only 51% accuracy on real-world data.
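For orientation, the two baseline measures named in this abstract can be sketched in a few lines. This is a minimal illustration that assumes 2D positions and constant relative velocity; the function names are hypothetical, and it does not reproduce the probabilistic risk model itself, whose details the abstract does not give.

```python
import math

def distance(rel_pos):
    """Euclidean distance to the object, given its position relative to the user."""
    return math.hypot(rel_pos[0], rel_pos[1])

def time_to_contact(rel_pos, rel_vel):
    """Time until the object reaches the user at the current closing speed.

    Returns math.inf when the object is not approaching (assumption: constant velocity).
    """
    d = distance(rel_pos)
    if d == 0.0:
        return 0.0
    # Closing speed: rate at which the distance shrinks (negative radial velocity).
    closing_speed = -(rel_pos[0] * rel_vel[0] + rel_pos[1] * rel_vel[1]) / d
    if closing_speed <= 0.0:
        return math.inf
    return d / closing_speed
```

A warning rule based on either measure would compare its value against a threshold. Both measures look only at the current state, whereas a trajectory-based risk estimate can also account for where the object is predicted to go.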

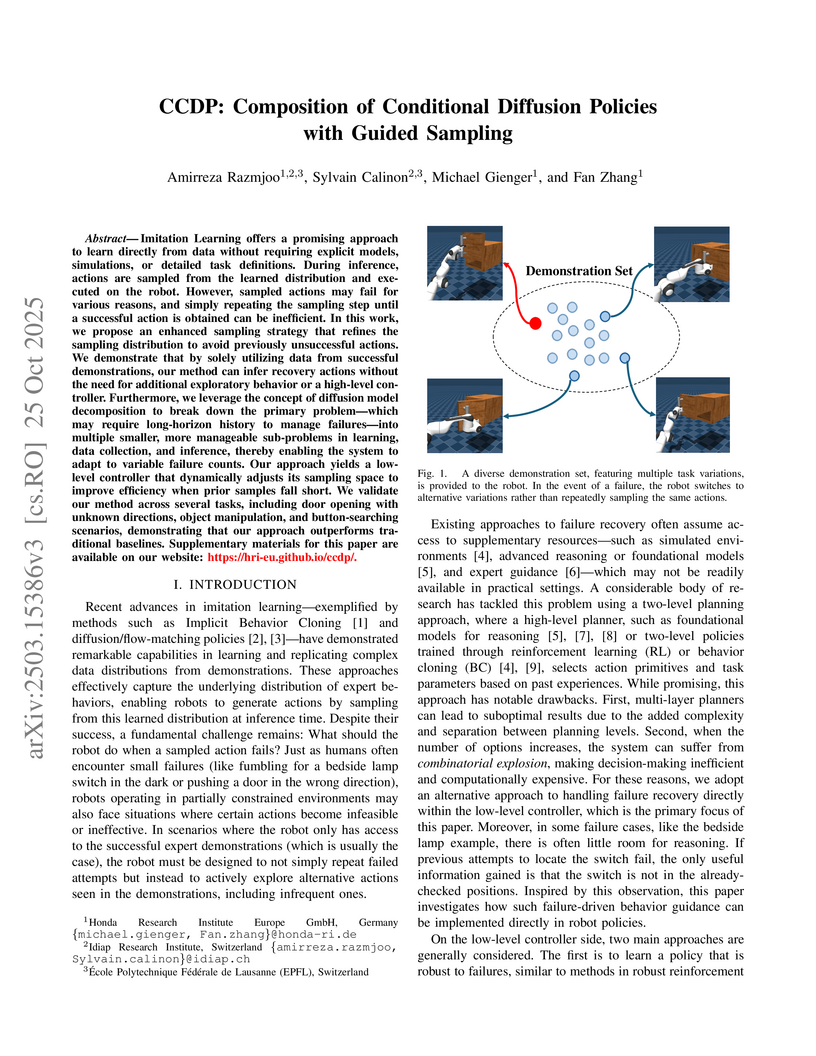

Imitation Learning offers a promising approach to learn directly from data without requiring explicit models, simulations, or detailed task definitions. During inference, actions are sampled from the learned distribution and executed on the robot. However, sampled actions may fail for various reasons, and simply repeating the sampling step until a successful action is obtained can be inefficient. In this work, we propose an enhanced sampling strategy that refines the sampling distribution to avoid previously unsuccessful actions. We demonstrate that by solely utilizing data from successful demonstrations, our method can infer recovery actions without the need for additional exploratory behavior or a high-level controller. Furthermore, we leverage the concept of diffusion model decomposition to break down the primary problem, which may require long-horizon history to manage failures, into multiple smaller, more manageable sub-problems in learning, data collection, and inference, thereby enabling the system to adapt to variable failure counts. Our approach yields a low-level controller that dynamically adjusts its sampling space to improve efficiency when prior samples fall short. We validate our method across several tasks, including door opening with unknown directions, object manipulation, and button-searching scenarios, demonstrating that our approach outperforms traditional baselines.

26 May 2023

A frequent starting point of quantum computation platforms is the two-state quantum system, i.e., the qubit. However, in the context of integer optimization problems, relevant to scheduling optimization and operations research, it is often more resource-efficient to employ quantum systems with more than two basis states, so-called qudits. Here, we discuss the quantum approximate optimization algorithm (QAOA) for qudit systems. We illustrate how the QAOA can be used to formulate a variety of integer optimization problems such as graph coloring problems or electric vehicle (EV) charging optimization. In addition, we comment on the implementation of constraints and describe three methods to include these in a QAOA quantum circuit: penalty contributions to the cost Hamiltonian, conditional gates using ancilla qubits, and a dynamical decoupling strategy. Finally, as a showcase of qudit-based QAOA, we present numerical results for a charging optimization problem mapped onto a max-k-graph coloring problem. Our work illustrates the flexibility of qudit systems to solve integer optimization problems.
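To make the max-k-graph coloring objective concrete, the sketch below evaluates the classical cost that such a formulation would minimize: each node takes one of k integer values (the role a k-level qudit would play), and the cost counts edges whose endpoints share a value. The function names and the brute-force search are illustrative assumptions, not the paper's circuit or its charging-problem mapping.

```python
from itertools import product

def conflicts(edges, colors):
    """Number of edges whose two endpoints share a color (lower is better)."""
    return sum(1 for u, v in edges if colors[u] == colors[v])

def best_coloring(num_nodes, edges, k):
    """Exhaustively search all k**num_nodes assignments; feasible only for tiny graphs."""
    return min(product(range(k), repeat=num_nodes),
               key=lambda c: conflicts(edges, c))

# A triangle cannot be properly colored with 2 colors, but can with 3.
triangle = [(0, 1), (1, 2), (0, 2)]
two_colors = best_coloring(3, triangle, k=2)
three_colors = best_coloring(3, triangle, k=3)
```

With integer-valued variables, one variable per node suffices, whereas a qubit encoding would typically need several binary variables per node plus a constraint that ties them together.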

Future intelligent systems will involve many types of artificial agents, such as mobile robots, smart home infrastructure, or personal devices, which share data and collaborate with each other to execute certain tasks. Designing an efficient human-machine interface that helps users express their needs to the system, supervise the collaboration progress of different entities, and evaluate the result will be challenging. This paper presents the design and implementation of the human-machine interface of the Intelligent Cyber-Physical System (ICPS), a multi-entity coordination system of robots and other smart devices in a working environment. ICPS gathers sensory data from entities, receives users' commands, and then optimizes plans to utilize the capabilities of different entities to serve people. Using multimodal interaction methods, e.g., graphical interfaces, speech interaction, gestures, and facial expressions, ICPS is able to receive inputs from users through different entities, keep users aware of the progress, and accomplish the task efficiently.

12 Nov 2024

Solving combinatorial optimization problems on near-term quantum devices has attracted considerable attention in recent years. Most works so far have focused on single-objective problems, whereas many real-world applications need to consider multiple, mostly conflicting objectives, such as cost and quality. We present a variational quantum optimization algorithm to solve discrete multi-objective optimization problems on quantum computers. The proposed quantum multi-objective optimization (QMOO) algorithm incorporates all cost Hamiltonians representing the classical objective functions in the quantum circuit and produces a quantum state consisting of Pareto-optimal solutions in superposition. From this state we retrieve a set of solutions and utilize the widely applied hypervolume indicator to determine its quality as an approximation to the Pareto front. The variational parameters of the QMOO circuit are tuned by maximizing the hypervolume indicator in a quantum-classical hybrid fashion. We show the effectiveness of the proposed algorithm on several benchmark problems with up to five objectives. We investigate the influence of the classical optimizer and the circuit depth, and compare to results from classical optimization algorithms. We find that the algorithm is robust to shot noise and produces good results with as few as 128 measurement shots in each iteration. These promising results open the perspective to run the algorithm on near-term quantum hardware.
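The hypervolume indicator used above as the tuning objective can be illustrated for the two-objective case. The sketch below assumes both objectives are minimized and that a reference point `ref` bounds the region of interest; the function names are hypothetical, and the paper's benchmarks go up to five objectives, which this simple sweep does not cover.

```python
def pareto_front(points):
    """Return the non-dominated points of a two-objective minimization problem."""
    front = []
    for p in points:
        dominated = any(
            q[0] <= p[0] and q[1] <= p[1] and q != p for q in points
        )
        if not dominated:
            front.append(p)
    return sorted(set(front))

def hypervolume_2d(points, ref):
    """Area dominated by the points and bounded by the reference point `ref`."""
    front = [p for p in pareto_front(points) if p[0] < ref[0] and p[1] < ref[1]]
    area = 0.0
    prev_f2 = ref[1]
    for f1, f2 in front:  # sorted by f1 ascending, so f2 is descending
        area += (ref[0] - f1) * (prev_f2 - f2)
        prev_f2 = f2
    return area

solutions = [(1, 3), (2, 2), (3, 1), (3, 3)]  # (3, 3) is dominated by (2, 2)
hv = hypervolume_2d(solutions, ref=(4, 4))
```

A larger value means the solution set covers more of the objective space below the reference point, which is why maximizing it pushes a sampled set toward the Pareto front.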

21 Jun 2021

Many robot manipulation tasks require the robot to make and break contact with objects and surfaces. The dynamics of such changing-contact robot manipulation tasks are discontinuous when contact is made or broken, and continuous elsewhere. These discontinuities make it difficult to construct and use a single dynamics model or control strategy for any such task. We present a framework for smooth dynamics and control of such changing-contact manipulation tasks. For any given target motion trajectory, the framework incrementally improves its prediction of when contacts will occur. This prediction and a model relating approach velocity to impact force modify the velocity profile of the motion sequence such that it is C∞ smooth, and help achieve a desired force on impact. We implement this framework by building on our hybrid force-motion variable impedance controller for continuous contact tasks. We experimentally evaluate our framework in the illustrative context of sliding tasks involving multiple contact changes with transitions between surfaces of different properties.

16 Sep 2025

Establishing standardized metrics for assessing the quality and social compliance of robot behavior around humans is essential for Social Robot Navigation (SRN) research. Currently, commonly used evaluation metrics cannot quantify how cooperatively an agent behaves in interaction with humans. Concretely, in a simple frontal approach scenario, no metric specifically captures whether both agents cooperate or whether one agent stays on a collision course and the other is forced to evade. To address this limitation, we propose two new metrics, a conflict intensity metric and a responsibility metric. Together, these metrics are capable of evaluating the quality of human-robot interactions by showing how much a given algorithm has contributed to reducing a conflict and which agent actually took responsibility for the resolution. This work aims to contribute to the development of a comprehensive and standardized evaluation methodology for SRN, ultimately enhancing the safety, efficiency, and social acceptance of robots in human-centric environments.

Detecting drifts in data is essential for machine learning applications, as changes in the statistics of processed data typically have a profound influence on the performance of trained models. Most of the available drift detection methods are either supervised and require access to the true labels at inference time, or they are completely unsupervised and look for changes in distributions without taking label information into account. We propose a novel task-sensitive semi-supervised drift detection scheme, which utilizes label information while training the initial model, but takes into account that supervised label information is no longer available when using the model during inference. It utilizes a constrained low-dimensional embedding representation of the input data, which makes it well suited for the classification task. It is able to detect real drift, where the drift affects the classification performance, while it properly ignores virtual drift, where the classification performance is not affected by the drift. In the proposed framework, the actual method to detect a change in the statistics of incoming data samples can be chosen freely. Experimental evaluation on nine benchmark datasets with different types of drift demonstrates that the proposed framework can reliably detect drifts and outperforms state-of-the-art unsupervised drift detection approaches.

Machine learning is increasingly applied in critical application areas like health and driver assistance. To minimize the risk of wrong decisions in such applications, it is necessary to consider the certainty of a classification in order to reject uncertain samples. Reject curves, which visualize the trade-off between the number of rejected samples and classification performance metrics, are an established tool for this. We argue that common reject curves are too abstract and hard for non-experts to interpret. We propose Stacked Confusion Reject Plots (SCORE) that offer a more intuitive understanding of the used data and the classifier's behavior. We present example plots on artificial Gaussian data to document the different options of SCORE and provide the code as a Python package.
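For readers unfamiliar with the conventional reject curve that SCORE is contrasted with, the sketch below computes an accuracy-reject curve from per-sample confidences. It is a generic illustration with hypothetical names, not the API of the SCORE package, and it does not produce the stacked confusion plots the abstract proposes.

```python
def reject_curve(confidences, correct):
    """Accuracy on the accepted samples as the least certain samples are rejected.

    Returns a list of (rejection_rate, accuracy) pairs, one per number of
    rejected samples, never rejecting all of them.
    """
    # Sort sample indices from least to most certain.
    order = sorted(range(len(confidences)), key=lambda i: confidences[i])
    n = len(order)
    curve = []
    for k in range(n):  # reject the k least certain samples
        accepted = order[k:]
        accuracy = sum(correct[i] for i in accepted) / len(accepted)
        curve.append((k / n, accuracy))
    return curve

curve = reject_curve(
    confidences=[0.9, 0.2, 0.6, 0.4],
    correct=[True, False, True, False],
)
```

Each point on the curve collapses the classifier's behavior into a single number, which hides which classes are being rejected or confused. That loss of detail is the interpretability gap the abstract points to.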

A preference-based multi-objective evolutionary algorithm is proposed for generating solutions in an automatically detected knee point region. It is named Automatic Preference based DI-MOEA (AP-DI-MOEA), where DI-MOEA stands for Diversity-Indicator based Multi-Objective Evolutionary Algorithm. AP-DI-MOEA has two main characteristics: firstly, it generates the preference region automatically during the optimization; secondly, it concentrates the solution set in this preference region. Moreover, the real-world vehicle fleet maintenance scheduling optimization (VFMSO) problem is formulated, and a customized multi-objective evolutionary algorithm (MOEA) is proposed to optimize maintenance schedules of vehicle fleets based on the predicted failure distribution of the components of cars. Furthermore, the customized MOEA for VFMSO is combined with AP-DI-MOEA to find maintenance schedules in the automatically generated preference region. Experimental results on multi-objective benchmark problems and our three-objective real-world application problems show that the newly proposed algorithm can generate the preference region accurately and that it can obtain better solutions in the preference region. In particular, in many cases and under the same budget, the Pareto optimal solutions obtained by AP-DI-MOEA dominate solutions obtained by MOEAs that pursue the entire Pareto front.

The visible orientation of human eyes creates some transparency about people's spatial attention and other mental states. This leads to a dual role for the eyes as a means of sensing and communication. Accordingly, artificial eye models are being explored as communication media in human-machine interaction scenarios. One challenge in the use of eye models for communication consists of resolving spatial reference ambiguities, especially for screen-based models. Here, we introduce an approach for overcoming this challenge through the introduction of reflection-like features that are contingent on artificial eye movements. We conducted a user study with 30 participants in which participants had to use spatial references provided by dynamic eye models to advance in a fast-paced group interaction task. Compared to a non-reflective eye model and a pure reflection mode, their combination in the new approach resulted in a higher identification accuracy and user experience, suggesting a synergistic benefit.

25 Apr 2025

Shared autonomy allows for combining the global planning capabilities of a human operator with the strengths of a robot such as repeatability and accurate control. In a real-time teleoperation setting, one possibility for shared autonomy is to let the human operator decide on the rough movement and to let the robot do fine adjustments, e.g., when the view of the operator is occluded. We present a learning-based concept for shared autonomy that aims at supporting the human operator in a real-time teleoperation setting. At every step, our system tracks the target pose set by the human operator as accurately as possible while at the same time satisfying a set of constraints which influence the robot's behavior. An important characteristic is that the constraints can be dynamically activated and deactivated, which allows the system to provide task-specific assistance. Since the system must generate robot commands in real time, solving an optimization problem in every iteration is not feasible. Instead, we sample potential target configurations and use neural networks for predicting the constraint costs for each configuration. By evaluating each configuration in parallel, our system is able to select, with minimal delay, the target configuration which satisfies the constraints and has the minimum distance to the operator's target pose. We evaluate the framework with a pick-and-place task on a bi-manual setup with two Franka Emika Panda robot arms with Robotiq grippers.
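The sample-then-select step described in this abstract can be sketched as follows. Everything here is an assumption for illustration: plain Python callables stand in for the learned constraint-cost predictors, candidates are drawn uniformly around the operator's target, configurations are compared by Euclidean distance, and a configuration counts as feasible when every predicted cost is at or below a threshold. The abstract does not specify any of these choices.

```python
import math
import random

def select_target(operator_target, constraint_costs, num_samples=256,
                  radius=0.1, threshold=0.0, rng=None):
    """Pick the sampled configuration closest to the operator's target
    among those for which every constraint cost is <= threshold.

    Returns None if no sampled configuration is feasible.
    """
    rng = rng or random.Random(0)
    # Always include the operator's own target as a candidate.
    candidates = [tuple(operator_target)]
    for _ in range(num_samples):
        candidates.append(
            tuple(x + rng.uniform(-radius, radius) for x in operator_target)
        )
    feasible = [c for c in candidates
                if all(cost(c) <= threshold for cost in constraint_costs)]
    if not feasible:
        return None
    return min(feasible, key=lambda c: math.dist(c, operator_target))

# Hypothetical constraint: keep the third coordinate at or above 0.05.
keep_height = lambda c: 0.05 - c[2]
chosen = select_target((0.3, 0.0, 0.0), [keep_height])
```

Because constraints are just entries in a list, activating or deactivating one amounts to adding or removing it between control steps. The candidates are evaluated independently, which is what makes a batched, parallel evaluation possible.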

When training automated systems, it has been shown to be beneficial to adapt the representation of data by learning a problem-specific metric. This metric is global. We extend this idea and, for the widely used family of k nearest neighbors algorithms, develop a method that allows learning locally adaptive metrics. These local metrics not only improve performance but are naturally interpretable. To demonstrate important aspects of how our approach works, we conduct a number of experiments on synthetic data sets, and we show its usefulness on real-world benchmark data sets.
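As background for the global case this abstract starts from, the sketch below runs k-nearest-neighbor classification under a linear metric d(a, b) = ||L(a - b)||. The matrix `L` is set by hand here rather than learned, and the function names are hypothetical. A locally adaptive variant would let the metric depend on the region of the input space, which this sketch does not attempt.

```python
import math
from collections import Counter

def transform(L, x):
    """Apply the linear map L (a list of rows) to the vector x."""
    return [sum(l_ij * x_j for l_ij, x_j in zip(row, x)) for row in L]

def knn_predict(query, X, y, L, k=3):
    """Majority vote among the k nearest neighbors under d(a, b) = ||L(a - b)||."""
    q = transform(L, query)
    dists = sorted(
        (math.dist(q, transform(L, x)), label) for x, label in zip(X, y)
    )
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]

# Feature 0 carries the class; feature 1 is a large-scale nuisance dimension.
X = [(0.0, 0.0), (1.0, 5.0)]
y = ["a", "b"]
identity = [[1.0, 0.0], [0.0, 1.0]]
ignore_second = [[1.0, 0.0], [0.0, 0.0]]  # metric that discards feature 1

plain = knn_predict((0.2, 4.0), X, y, identity, k=1)
adapted = knn_predict((0.2, 4.0), X, y, ignore_second, k=1)
```

The entries of `L` show directly which features the metric weighs and which it ignores, which is one sense in which a learned metric can be read off and interpreted.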

14 Oct 2024

Social robots have emerged as valuable contributors to individuals' well-being coaching. Notably, their integration into long-term human coaching trials shows particular promise, emphasizing a complementary role alongside human coaches rather than outright replacement. In this context, robots serve as supportive entities during coaching sessions, offering insights based on their knowledge about users' well-being and activity. Traditionally, such insights have been gathered through methods like written self-reports or wearable data visualizations. However, the disclosure of people's information by a robot raises concerns regarding privacy, appropriateness, and trust. To address this, we conducted an initial study with n = 22 participants to quantify their perceptions of privacy regarding disclosures made by a robot coaching assistant. The study was conducted online, presenting participants with six prerecorded scenarios illustrating various types of information disclosure and the robot's role, ranging from active on-demand to proactive communication conditions.
