IBM Quantum, IBM T.J. Watson Research Center

Researchers from Penn State, in collaboration with industry partners, provide the first comprehensive survey of Reinforcement Learning-based agentic search, systematically organizing its foundational concepts, functional roles, optimization strategies, and applications. This work clarifies the interplay between RL and agentic LLMs, delineating current capabilities, evaluation methods, and critical future research directions.

IBM Research and MIT-IBM Watson AI Lab researchers formalized an off-policy extension of Group Relative Policy Optimization (GRPO). They show that it provides theoretical guarantees of policy improvement and matches or exceeds on-policy GRPO on LLM alignment tasks, while significantly reducing computational overhead through less frequent model updates.
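GRPO's central idea is to score each sampled completion relative to the other completions for the same prompt, and that idea fits in a few lines. This is a minimal illustration, not the authors' algorithm. It assumes rewards are normalized by the group's mean and population standard deviation. It also assumes that, in the off-policy setting, the denominator of the importance ratio uses log-probabilities from the older policy that generated the samples. The function names, the clip value of 0.2, and the sequence-level (rather than token-level) ratio are illustrative assumptions, and KL regularization is omitted.

```python
import math
from statistics import mean, pstdev


def group_advantages(rewards, eps=1e-6):
    """Normalize each completion's reward against its own group (same prompt)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_surrogate(logp_new, logp_behavior, advantages, clip=0.2):
    """PPO-style clipped objective (to be maximized), averaged over the group.

    logp_behavior holds sequence log-probs under the policy that generated the
    samples; in an off-policy variant (assumed here) it may be several updates old.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_behavior, advantages):
        ratio = math.exp(lp_new - lp_old)  # importance weight
        clipped = min(max(ratio, 1.0 - clip), 1.0 + clip)
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Usage: four completions for one prompt, two judged correct (reward 1).
adv = group_advantages([1.0, 0.0, 1.0, 0.0])
objective = clipped_surrogate([-1.0, -2.0, -1.5, -2.5],
                              [-1.2, -1.9, -1.5, -2.4], adv)
```

Normalizing within the group removes the need for a learned value baseline. This is what makes GRPO cheap relative to PPO with a critic.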

22 Aug 2025

We introduce a new heuristic decoder, Relay-BP, targeting real-time quantum circuit decoding for large-scale quantum computers. Relay-BP achieves high accuracy across circuit-noise decoding problems. It significantly outperforms BP+OSD+CS-10 on bivariate-bicycle codes and performs comparably to minimum-weight matching on surface codes. As a lightweight message-passing decoder, Relay-BP is inherently parallel, enabling rapid, low-footprint real-time decoding on FPGAs or ASICs, similar to standard BP. At its core, our decoder enhances the standard BP algorithm by incorporating disordered memory strengths. This dampens oscillations and breaks the symmetries that trap traditional BP algorithms. By dynamically adjusting memory strengths in a relay approach, Relay-BP can encounter multiple valid corrections in succession, improving decoding accuracy. We observe that a problem-dependent distribution of memory strengths that includes negative values is indispensable for good performance.
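The following pure-Python sketch makes the memory mechanism concrete. It runs min-sum BP with per-variable memory strengths in relay legs. It is our illustration, not the authors' implementation. The following choices are all assumptions made for readability rather than tuned values:

- the memory rule, which blends the channel prior with the previous iteration's marginal;
- the first-leg strength `gamma0`;
- the disorder range `gamma_range`;
- resetting messages between legs while carrying marginals forward;
- the stopping rule `stop_after`.

```python
import math
import random


def syndrome_of(checks, e):
    """Parity of the error bits touched by each check."""
    return [sum(e[j] for j in check) % 2 for check in checks]


def memory_min_sum(checks, n, s, prior, gammas, max_iter, marg_init):
    """Min-sum BP where each variable's prior is blended with its last marginal.

    Assumed memory rule: lam_j = (1 - gamma_j) * prior_j + gamma_j * marg_j.
    Every check is assumed to touch at least two variables.
    """
    var_checks = [[] for _ in range(n)]
    for ci, check in enumerate(checks):
        for j in check:
            var_checks[j].append(ci)
    c2v = {(ci, j): 0.0 for ci, check in enumerate(checks) for j in check}
    marg = list(marg_init)
    for _ in range(max_iter):
        lam = [(1 - g) * p + g * m for g, p, m in zip(gammas, prior, marg)]
        # Variable-to-check: everything the variable knows except the target check.
        v2c = {}
        for j in range(n):
            total = lam[j] + sum(c2v[(ci, j)] for ci in var_checks[j])
            for ci in var_checks[j]:
                v2c[(ci, j)] = total - c2v[(ci, j)]
        # Check-to-variable: min-sum rule, sign flipped when the syndrome bit is 1.
        for ci, check in enumerate(checks):
            for j in check:
                others = [v2c[(ci, k)] for k in check if k != j]
                sign = -1.0 if s[ci] else 1.0
                for x in others:
                    sign *= 1.0 if x >= 0 else -1.0
                c2v[(ci, j)] = sign * min(abs(x) for x in others)
        marg = [lam[j] + sum(c2v[(ci, j)] for ci in var_checks[j]) for j in range(n)]
        e = [1 if m < 0 else 0 for m in marg]  # negative LLR => bit flipped
        if syndrome_of(checks, e) == list(s):
            return e, marg
    return None, marg


def relay_bp(checks, n, s, p, legs=10, iters=50, stop_after=3,
             gamma0=0.125, gamma_range=(-0.24, 0.66), seed=0):
    """Run BP legs in relay, keeping the lowest-weight valid correction found."""
    rng = random.Random(seed)
    prior = [math.log((1 - p) / p)] * n
    marg = list(prior)
    best, best_weight, found = None, float("inf"), 0
    for leg in range(legs):
        if leg == 0:
            gammas = [gamma0] * n  # uniform memory for the first leg
        else:  # disordered memory strengths, some of them negative
            gammas = [rng.uniform(*gamma_range) for _ in range(n)]
        # Each leg restarts messages but inherits the previous leg's marginals.
        e, marg = memory_min_sum(checks, n, s, prior, gammas, iters, marg)
        if e is not None:
            found += 1
            weight = sum(prior[j] for j in range(n) if e[j])
            if weight < best_weight:
                best, best_weight = e, weight
            if found >= stop_after:
                break
    return best


# Usage: 5-bit repetition code; a flip on bit 2 trips checks 1 and 2.
checks = [[0, 1], [1, 2], [2, 3], [3, 4]]
print(relay_bp(checks, 5, [0, 1, 1, 0], p=0.01))  # [0, 0, 1, 0, 0]
```

Keeping every valid correction found across legs, rather than stopping at the first one, lets the decoder return the most likely correction among them.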

A framework called GradeSQL enhances Text-to-SQL generation with Large Language Models (LLMs) by using Outcome Reward Models (ORMs) to select the most semantically correct query from a pool of candidates at test time. The approach consistently achieves higher execution accuracy. For example, it yields a +5.01% gain over the N=1 baseline on the BIRD dev set.
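Test-time selection with an outcome reward model amounts to best-of-N reranking. The sketch below assumes the ORM is exposed as a scoring function `orm_score(question, sql)`. That signature and `toy_orm` are both hypothetical and are not GradeSQL's API; `toy_orm` is a keyword heuristic standing in for the trained model.

```python
from typing import Callable, Sequence


def select_with_orm(question: str, candidates: Sequence[str],
                    orm_score: Callable[[str, str], float]) -> str:
    """Best-of-N: return the candidate SQL that the reward model scores highest."""
    if not candidates:
        raise ValueError("at least one candidate query is required")
    # max() keeps the earliest candidate on ties, so N=1 reduces to the baseline.
    return max(candidates, key=lambda sql: orm_score(question, sql))


def toy_orm(question: str, sql: str) -> float:
    """Hypothetical stand-in for a trained ORM: rewards queries that filter on
    the year mentioned in the question. A real ORM is a learned model."""
    return 1.0 if "2020" in question and "2020" in sql else 0.0


candidates = [
    "SELECT name FROM films",
    "SELECT name FROM films WHERE year = 2020",
]
best = select_with_orm("Which films came out in 2020?", candidates, toy_orm)
```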

18 Dec 2023

Researchers from MIT CSAIL and IBM Research demonstrate that GPT-4 can serve as a powerful generalized planner for PDDL domains, synthesizing executable Python programs from domain descriptions and a few training tasks. Their methodology, enhanced by automated debugging, yields highly efficient and scalable solutions that often surpass traditional generalized planners in performance and runtime efficiency.

IBM Quantum, University of Colorado, IBM Research Cambridge, IBM T.J. Watson Research Center, RIKEN Center for Emergent Matter Science (CEMS), RIKEN Interdisciplinary Theoretical and Mathematical Sciences Program (iTHEMS), RIKEN Center for Quantum Computing (RQC), RIKEN Center for Computational Science (R-CCS), IBM France Lab

Electronic structure calculations on molecular systems previously intractable for exact classical methods were performed using a quantum-centric supercomputer that integrates an IBM Heron processor with the Fugaku supercomputer. The approach used the Sample-Based Quantum Diagonalization (SQD) workflow, aided by a robust self-consistent configuration recovery technique. With it, the researchers calculated properties of systems with circuits of up to 77 qubits and 10,570 gates.

The prevalence of unhealthy eating habits has become an increasingly concerning issue in the United States. However, major food recommendation platforms (e.g., Yelp) continue to prioritize users' dietary preferences over the healthiness of their choices. Although efforts have been made to develop health-aware food recommendation systems, personalizing such systems to users' specific health conditions remains under-explored. In addition, little research has focused on the interpretability of these systems. This hinders users from assessing the reliability of recommendations and impedes practical deployment. To address this gap, we first establish two large-scale benchmarks for personalized health-aware food recommendation, the first of their kind. We then develop a novel framework, the Multi-Objective Personalized Interpretable Health-aware Food Recommendation System (MOPI-HFRS). MOPI-HFRS provides food recommendations by jointly optimizing three objectives: user preference, personalized healthiness, and nutritional diversity. It also includes a large language model (LLM)-enhanced reasoning module that promotes healthy dietary knowledge by interpreting the recommended results. Specifically, this holistic graph learning framework first uses two structure-learning modules and a structure-pooling module to leverage both descriptive features and health data. It then employs Pareto optimization to balance the multi-faceted objectives. Finally, to further promote healthy dietary knowledge and awareness, we apply knowledge infusion, prompting the LLM with knowledge obtained from the recommendation model to interpret the recommendations.
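The paper applies Pareto optimization while training the model. The sketch below illustrates only the underlying notion of Pareto dominance across the three objectives, using hypothetical scores for candidate recommendation lists. A list is kept if no other list is at least as good on every objective and strictly better on at least one. It is not the paper's training procedure.

```python
from typing import Dict, List, Tuple

Scores = Tuple[float, float, float]  # (preference, healthiness, diversity)


def dominates(a: Scores, b: Scores) -> bool:
    """True if a is no worse than b on every objective and better on one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(candidates: Dict[str, Scores]) -> List[str]:
    """Names of candidates not dominated by any other candidate."""
    return [
        name for name, s in candidates.items()
        if not any(dominates(t, s) for other, t in candidates.items() if other != name)
    ]


# Hypothetical scores for four candidate recommendation lists.
lists = {
    "A": (0.9, 0.2, 0.3),  # matches taste, unhealthy
    "B": (0.5, 0.1, 0.2),  # dominated by A (and by C)
    "C": (0.6, 0.9, 0.5),
    "D": (0.7, 0.8, 0.6),
}
front = pareto_front(lists)
```

Lists C and D both survive because each beats the other on some objective. Choosing between them is exactly the trade-off that a multi-objective method has to resolve.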

03 Jun 2025

Researchers at IBM Quantum introduce the 'bicycle architecture,' a fault-tolerant quantum computing design leveraging bivariate bicycle codes that achieves an order of magnitude lower physical qubit overhead compared to surface code architectures. This modular approach significantly reduces physical qubit requirements for complex computations and enhances manufacturability.

24 Oct 2025

A Field Programmable Gate Array (FPGA) implementation developed by IBM Quantum researchers decodes advanced quantum low-density parity-check (qLDPC) codes in real time. It achieves a belief propagation iteration time of 24 ns for the [[144, 12, 12]] gross code. Because this is significantly faster than typical syndrome collection rates, the decoder resolves the critical 'backlog problem' in quantum error correction. It also matches floating-point accuracy with only 4-6 bits of integer precision.
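Why a few integer bits can suffice is easier to see with the min-sum check-node update, which needs only comparisons, sign logic, and saturation. The sketch below is a generic fixed-point illustration, not the authors' FPGA design. The bit width, the LLR scale factor, and the two-minimum (min1/min2) formulation are assumptions, although the last is a common hardware simplification.

```python
def quantize_llr(x: float, bits: int, scale: float) -> int:
    """Map a real LLR to a saturating signed integer of the given bit width."""
    qmax = 2 ** (bits - 1) - 1  # symmetric range, e.g. [-7, 7] for 4 bits
    return max(-qmax, min(qmax, round(x * scale)))


def check_node_min_sum(msgs, syndrome_bit):
    """Integer min-sum check update using the two smallest magnitudes.

    Each outgoing message uses the minimum magnitude over the *other* inputs,
    which is min1 everywhere except at the position of min1 itself (min2).
    Requires at least two incoming messages.
    """
    mags = [abs(m) for m in msgs]
    i1 = min(range(len(mags)), key=mags.__getitem__)
    min1 = mags[i1]
    min2 = min(m for i, m in enumerate(mags) if i != i1)
    negatives = sum(1 for m in msgs if m < 0) + syndrome_bit
    out = []
    for i, m in enumerate(msgs):
        mag = min2 if i == i1 else min1
        parity = negatives - (1 if m < 0 else 0)  # exclude this edge's own sign
        out.append(-mag if parity % 2 else mag)
    return out


q = [quantize_llr(x, bits=4, scale=2.0) for x in (1.6, -0.4, 2.7)]
updated = check_node_min_sum(q, syndrome_bit=0)
```

Only two magnitudes and one parity bit need to be stored per check. This keeps the logic small enough to run many checks in parallel.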

10 Oct 2025

Entanglement is the quintessential quantum phenomenon and a key enabler of quantum algorithms. The ability to faithfully entangle many distinct particles is often used as a benchmark for the quality of hardware and control in a quantum computer. Greenberger-Horne-Zeilinger (GHZ) states, also known as Schrödinger cat states, are useful for this task: they are easy to verify but difficult to prepare because of their high sensitivity to noise. In this Letter, we report the largest GHZ state prepared to date, consisting of 120 superconducting qubits. We achieve this through a combination of optimized compilation, low-overhead error detection, and temporary uncomputation. We use an automated compiler to maximize error detection in state-preparation circuits subject to arbitrary qubit connectivity constraints and variations in error rates. We measure a GHZ fidelity of 0.56(3) with a post-selection rate of 28%. We certify the fidelity of our GHZ states using multiple methods and show that they are all equivalent, albeit with different practical considerations.
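The sketch below shows the state being prepared by simulating the textbook log-depth GHZ circuit on a small register with a pure-Python state vector. The circuit applies a Hadamard to one qubit, then layers of CNOTs that double the number of entangled qubits. It assumes all-to-all connectivity and noiseless gates. It therefore leaves out the connectivity-aware compilation, error detection, and temporary uncomputation that the Letter relies on.

```python
import math


def apply_h(state, q):
    """Hadamard on qubit q (bit q of the basis-state index)."""
    new = state[:]
    r = 1 / math.sqrt(2)
    for i in range(len(state)):
        if not (i >> q) & 1:
            j = i | (1 << q)
            new[i] = r * (state[i] + state[j])
            new[j] = r * (state[i] - state[j])
    return new


def apply_cnot(state, c, t):
    """CNOT: swap the target's 0/1 amplitudes wherever the control bit is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> c) & 1:
            new[i] = state[i ^ (1 << t)]
    return new


def ghz_log_depth(n):
    """Prepare (|0...0> + |1...1>)/sqrt(2); return the state and CNOT layer count."""
    state = [0.0] * (2 ** n)
    state[0] = 1.0
    state = apply_h(state, 0)
    entangled, layers = 1, 0
    while entangled < n:
        width = min(entangled, n - entangled)
        for c in range(width):  # these CNOTs act on disjoint qubits: one layer
            state = apply_cnot(state, c, entangled + c)
        entangled += width
        layers += 1
    return state, layers


state, layers = ghz_log_depth(5)
```

Only the two extreme basis states carry amplitude, so a single error that flips or dephases any qubit spoils the state. This is why GHZ preparation is such a sensitive hardware benchmark.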

04 Dec 2025

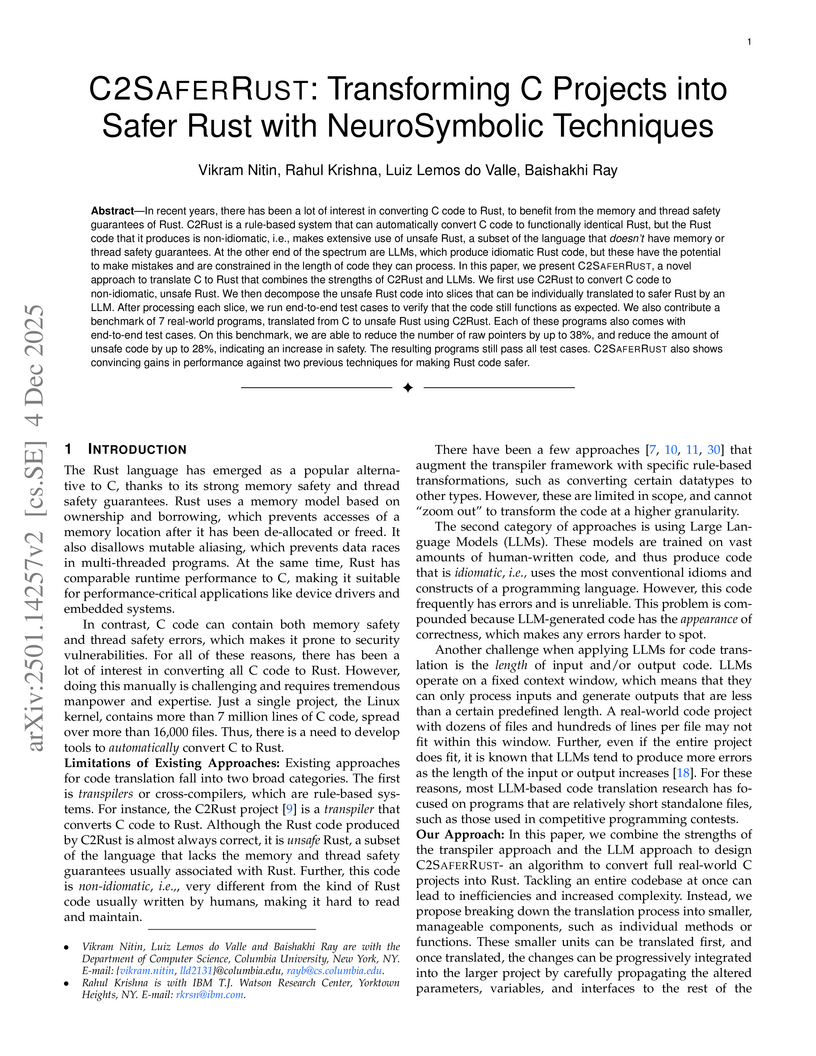

In recent years, there has been considerable interest in converting C code to Rust to benefit from Rust's memory and thread safety guarantees. C2Rust is a rule-based system that can automatically convert C code to functionally identical Rust. However, the Rust code it produces is non-idiomatic, i.e., it makes extensive use of unsafe Rust, a subset of the language without memory or thread safety guarantees. At the other end of the spectrum are LLMs, which produce idiomatic Rust code but can make mistakes and are limited in the length of code they can process. In this paper, we present C2SaferRust, a novel approach to translating C to Rust that combines the strengths of C2Rust and LLMs. We first use C2Rust to convert C code to non-idiomatic, unsafe Rust. We then decompose the unsafe Rust code into slices that an LLM can individually translate to safer Rust. After processing each slice, we run end-to-end test cases to verify that the code still functions as expected. We also contribute a benchmark of 7 real-world programs translated from C to unsafe Rust using C2Rust, each accompanied by end-to-end test cases. On this benchmark, we reduce the number of raw pointers by up to 38% and the amount of unsafe code by up to 28%, indicating an increase in safety. The resulting programs still pass all test cases. C2SaferRust also outperforms two previous techniques for making Rust code safer.
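The translate-then-verify loop described above can be sketched as follows. `translate` and `run_tests` are hypothetical stand-ins for the LLM call and the end-to-end test harness. The sketch assumes that a slice whose translation breaks the tests is simply left as unsafe Rust. The paper's handling of failures may be more elaborate, for example retrying with feedback.

```python
from typing import Callable, List


def refine_slices(slices: List[str],
                  translate: Callable[[str], str],
                  run_tests: Callable[[List[str]], bool]) -> List[str]:
    """Translate one slice at a time, keeping a translation only if tests pass."""
    program = list(slices)
    for i, original in enumerate(slices):
        candidate = program[:i] + [translate(original)] + program[i + 1:]
        if run_tests(candidate):
            program = candidate  # accept the safer slice
        # else: keep the original (unsafe) slice and move on
    return program


# Toy stand-ins: "translation" strips the unsafe marker; the tests reject
# any change to the second slice.
slices = ["unsafe fn a()", "unsafe fn b()", "unsafe fn c()"]
result = refine_slices(
    slices,
    translate=lambda s: s.replace("unsafe ", ""),
    run_tests=lambda prog: prog[1] == "unsafe fn b()",
)
```

Testing after every slice localizes any regression to the single change that caused it. The program therefore always stays in a passing state.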

25 Sep 2025

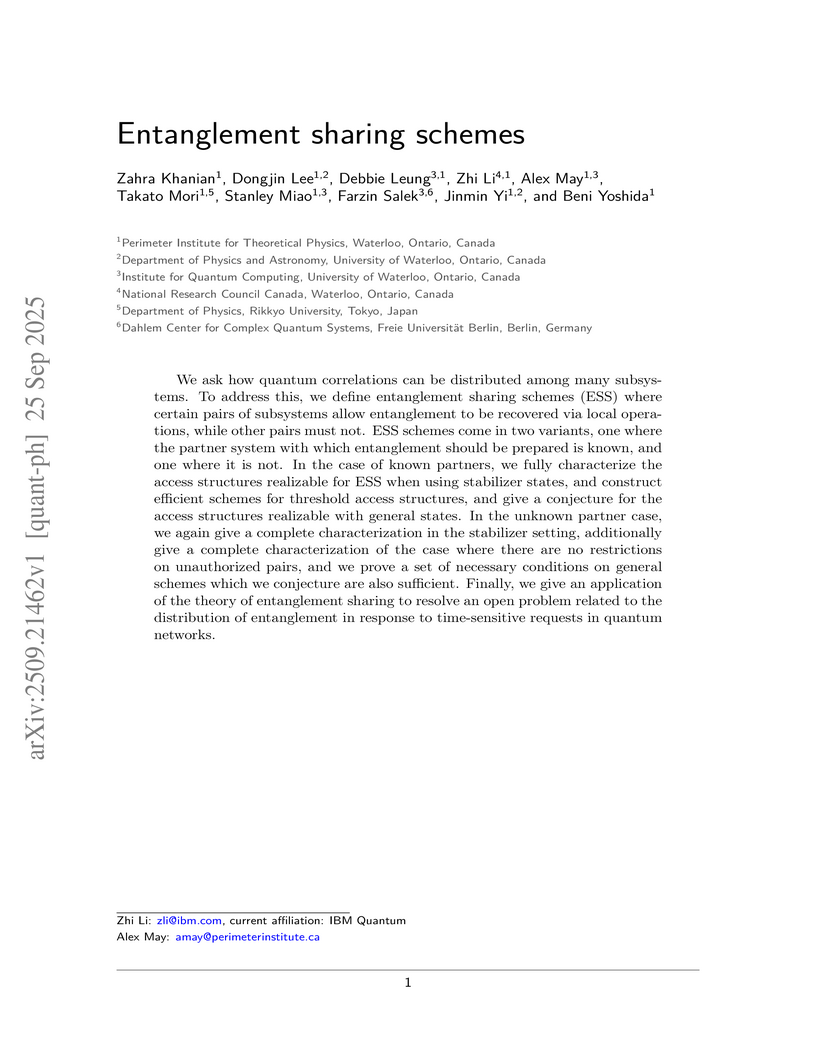

Researchers from Perimeter Institute, University of Waterloo, and collaborators introduced Entanglement Sharing Schemes (ESS), a theoretical framework for distributing quantum correlations among multiple parties. The work defines and characterizes both known and unknown partner ESS, providing constructions and establishing conditions for their realizability, and successfully applied the framework to resolve an open problem in entanglement summoning.

09 Oct 2025

Researchers from The University of Tokyo and IBM Quantum introduce a general theoretical framework for "weak transversal gates," which enable probabilistic, in-place multi-qubit Pauli rotations in fault-tolerant quantum computation. They integrate this approach to non-Clifford operations into a "Clifford+" architecture. For large-scale circuits, it reduces runtime by factors of tens to a hundred and circuit volume by factors of more than 100 compared with conventional methods.

19 Jun 2024

We describe Qiskit, a software development kit for quantum information science. We discuss the key design decisions that have shaped its development and examine its software architecture and core components. We then demonstrate an end-to-end workflow for solving a problem in condensed matter physics on a quantum computer. The workflow highlights several of Qiskit's capabilities, for example:

- the representation and optimization of circuits at various abstraction levels;
- its scalability and retargetability to new gates;
- the use of quantum-classical computations via dynamic circuits.

Lastly, we discuss part of the ecosystem of tools and plugins that extend Qiskit for various tasks, and the road ahead.

27 Oct 2025

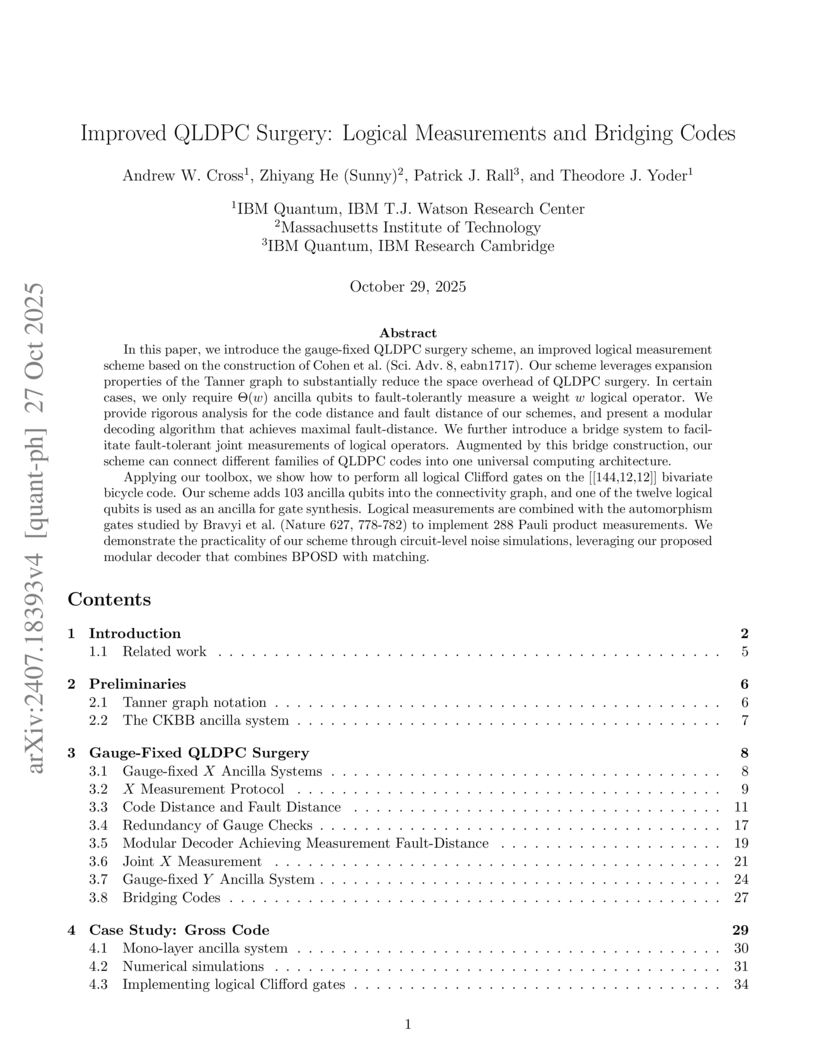

In this paper, we introduce the gauge-fixed QLDPC surgery scheme, an improved logical measurement scheme based on the construction of Cohen et al. (Sci. Adv. 8, eabn1717). Our scheme leverages expansion properties of the Tanner graph to substantially reduce the space overhead of QLDPC surgery. In certain cases, we only require Θ(w) ancilla qubits to fault-tolerantly measure a weight w logical operator. We provide rigorous analysis for the code distance and fault distance of our schemes, and present a modular decoding algorithm that achieves maximal fault-distance. We further introduce a bridge system to facilitate fault-tolerant joint measurements of logical operators. Augmented by this bridge construction, our scheme can be used to connect different families of QLDPC codes into one universal architecture.

Applying our toolbox, we show how to perform all logical Clifford gates on the [[144,12,12]] bivariate bicycle code. Our scheme adds 103 ancilla qubits to the connectivity graph, and one of the twelve logical qubits is used as an ancilla for gate synthesis. Logical measurements are combined with the automorphism gates studied by Bravyi et al. (Nature 627, 778-782) to implement 288 Pauli product measurements. We demonstrate the practicality of our scheme through circuit-level noise simulations, using our proposed modular decoder that combines BP-OSD with matching.

21 Feb 2024

Researchers at IBM Quantum introduced Bivariate Bicycle (BB) Low-Density Parity-Check (LDPC) codes. These codes achieve an error threshold of 0.8%, on par with the surface code, while reducing the physical qubit overhead by more than a factor of 10. They are designed with hardware-compatible connectivity and support fault-tolerant memory operations, making scalable quantum memory feasible on near-term processors.
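The construction can be checked numerically. We follow the published definition of the [[144,12,12]] gross code: l = 12, m = 6, A = x^3 + y + y^2, and B = y^3 + x + x^2, where x and y are commuting cyclic shifts. The sketch below builds H_X = [A | B] and H_Z = [B^T | A^T] as bitmasks. It then checks that every X check commutes with every Z check and counts logical qubits over GF(2).

The closing overhead comparison is a back-of-the-envelope count, not the paper's own accounting. It assumes a rotated surface-code patch of 2d^2 - 1 physical qubits per logical qubit at the same distance, d = 12.

```python
L_DIM, M_DIM = 12, 6                # l and m for the [[144,12,12]] code
A_TERMS = [(3, 0), (0, 1), (0, 2)]  # x^3 + y + y^2, as (x-power, y-power)
B_TERMS = [(0, 3), (1, 0), (2, 0)]  # y^3 + x + x^2
HALF = L_DIM * M_DIM                # 72 qubits per block


def block_rows(terms, transpose=False):
    """Rows of a sum of monomials x^a y^b as HALF-bit masks.

    Row (i, j) of x^a y^b has a single 1 in column (i + a mod l, j + b mod m);
    the transpose shifts in the opposite direction.
    """
    sign = -1 if transpose else 1
    rows = []
    for i in range(L_DIM):
        for j in range(M_DIM):
            mask = 0
            for a, b in terms:
                col = ((i + sign * a) % L_DIM) * M_DIM + (j + sign * b) % M_DIM
                mask ^= 1 << col  # XOR: GF(2) sum of monomials
            rows.append(mask)
    return rows


def gf2_rank(rows):
    """Rank over GF(2) via an XOR basis keyed by leading bit."""
    basis = {}
    for r in rows:
        while r:
            lead = r.bit_length() - 1
            if lead not in basis:
                basis[lead] = r
                break
            r ^= basis[lead]
    return len(basis)


A, B = block_rows(A_TERMS), block_rows(B_TERMS)
At, Bt = block_rows(A_TERMS, True), block_rows(B_TERMS, True)
HX = [a | (b << HALF) for a, b in zip(A, B)]        # [A | B]
HZ = [bt | (at << HALF) for bt, at in zip(Bt, At)]  # [B^T | A^T]

# X and Z checks commute iff every pair overlaps on an even number of qubits.
commute = all(bin(x & z).count("1") % 2 == 0 for x in HX for z in HZ)
n = 2 * HALF
k = n - gf2_rank(HX) - gf2_rank(HZ)

# Rough memory comparison at distance d = 12 (assumed rotated surface code).
d = 12
bb_total = 2 * n                  # 144 data qubits plus 144 check qubits
surface_total = k * (2 * d * d - 1)
ratio = surface_total / bb_total
```

Under these assumptions, the BB code stores its 12 logical qubits in 288 physical qubits, while 12 separate surface-code patches would need about 12 times as many.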

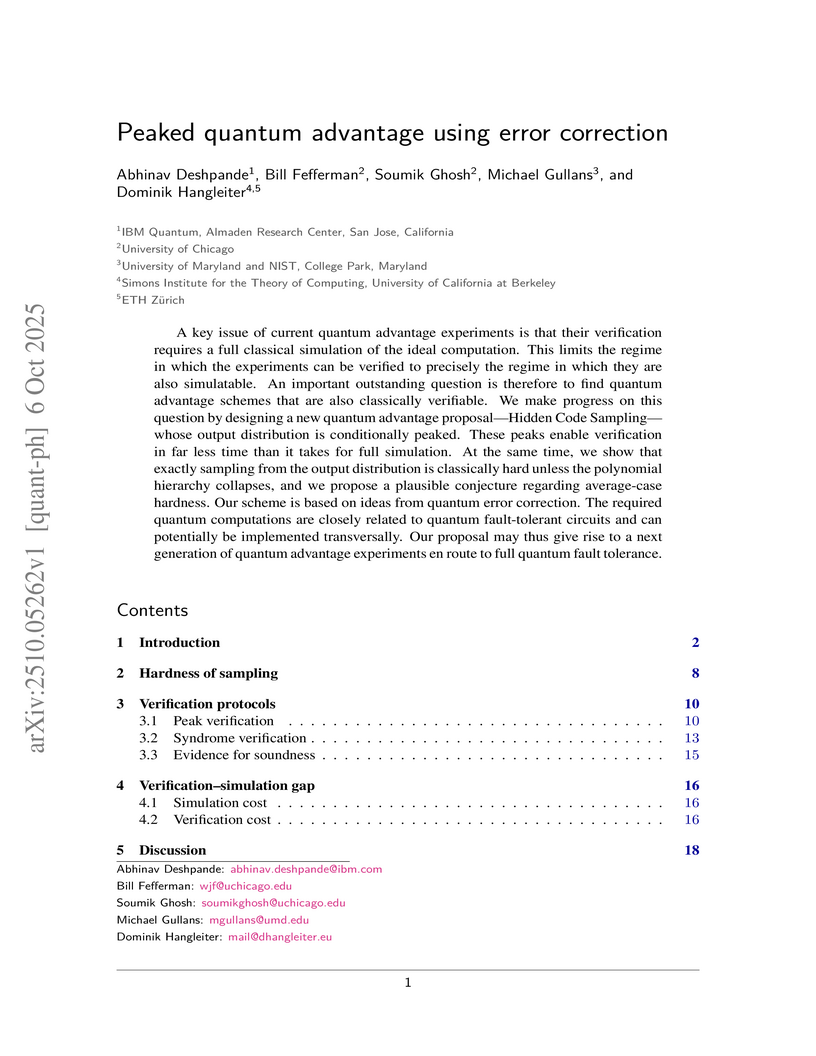

A key issue with current quantum advantage experiments is that their verification requires a full classical simulation of the ideal computation. This limits the regime in which the experiments can be verified to precisely the regime in which they are also simulatable. An important outstanding question is therefore to find quantum advantage schemes that are also classically verifiable. We make progress on this question by designing a new quantum advantage proposal, Hidden Code Sampling, whose output distribution is conditionally peaked. These peaks enable verification in far less time than full simulation requires. At the same time, we show that exactly sampling from the output distribution is classically hard unless the polynomial hierarchy collapses, and we propose a plausible conjecture regarding average-case hardness. Our scheme is based on ideas from quantum error correction. The required quantum computations are closely related to quantum fault-tolerant circuits and can potentially be implemented transversally. Our proposal may thus give rise to a next generation of quantum advantage experiments en route to full quantum fault tolerance.

IBM Quantum researchers developed a Reinforcement Learning framework for practical and efficient quantum circuit synthesis and routing, eliminating the need for large datasets and inherently incorporating device-specific constraints. This approach yields near-optimal circuits, achieving up to 60% reduction in CNOT layers for Cliffords and 40% shallower circuits for large quantum volume benchmarks, while being orders of magnitude faster than exact optimization methods.

Qiskit is an open-source quantum computing framework that allows users to design, simulate, and run quantum circuits on real quantum hardware. We explore post-training techniques for LLMs to assist in writing Qiskit code. We introduce quantum verification as an effective method for ensuring code quality and executability on quantum hardware. To support this, we developed a synthetic data pipeline that generates quantum problem-unit test pairs and used it to create preference data for aligning LLMs with DPO. Additionally, we trained models using GRPO, leveraging quantum-verifiable rewards provided by the quantum hardware. Our best-performing model, combining DPO and GRPO, surpasses the strongest open-source baselines on the challenging Qiskit-HumanEval-hard benchmark.
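The standard DPO objective on one preference pair is shown below. Here the "chosen" completion could be one that passes its quantum unit test and the "rejected" one a completion that fails. The sketch follows the original DPO formulation rather than any detail from this paper. The value `beta = 0.1` and the log-probability inputs are illustrative assumptions.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))


def dpo_loss(logp_chosen: float, logp_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """-log sigmoid(beta * margin), margin measured relative to a frozen reference."""
    margin = (logp_chosen - ref_chosen) - (logp_rejected - ref_rejected)
    return softplus(-beta * margin)  # -log(sigmoid(z)) == softplus(-z)


# Policy raised the passing completion's log-prob and lowered the failing one's.
loss = dpo_loss(-12.3, -15.0, -13.0, -14.2)
```

The loss falls below log 2 only when the policy shifts probability toward the verified completion faster than the reference model does.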

Quantum programs are typically developed using quantum Software Development Kits (SDKs). The rapid advancement of quantum computing calls for new tools to streamline this development process, and Generative Artificial Intelligence (GenAI) is one such candidate. In this study, we introduce and use the Qiskit HumanEval dataset. It is a hand-curated collection of tasks designed to benchmark the ability of Large Language Models (LLMs) to produce quantum code using Qiskit, a quantum SDK. The dataset consists of more than 100 quantum computing tasks, each accompanied by a prompt, a canonical solution, a comprehensive test case, and a difficulty rating, used to evaluate the correctness of generated solutions. We systematically assess the performance of a set of LLMs on the dataset's tasks, focusing on the models' ability to produce executable quantum code. Our findings demonstrate the feasibility of using LLMs to generate quantum code and establish a new benchmark for ongoing advancements in the field. We hope they encourage further exploration and development of GenAI-driven tools for quantum code generation.
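Benchmarks in the HumanEval family are commonly scored with pass@k. The abstract does not say which metric the study reports, so treat this as an assumption. Given n generated samples per task, of which c pass the test case, the unbiased estimator below gives the probability that at least one of k randomly chosen samples passes. Benchmark-level scores average this over tasks.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples with c correct (requires k <= n)."""
    if n - c < k:
        return 1.0  # every size-k subset contains a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Example: 10 samples for one task, 3 of which pass the test.
scores = {k: pass_at_k(10, 3, k) for k in (1, 5)}
```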
