Institute of SoftwareChina Academy of Sciences

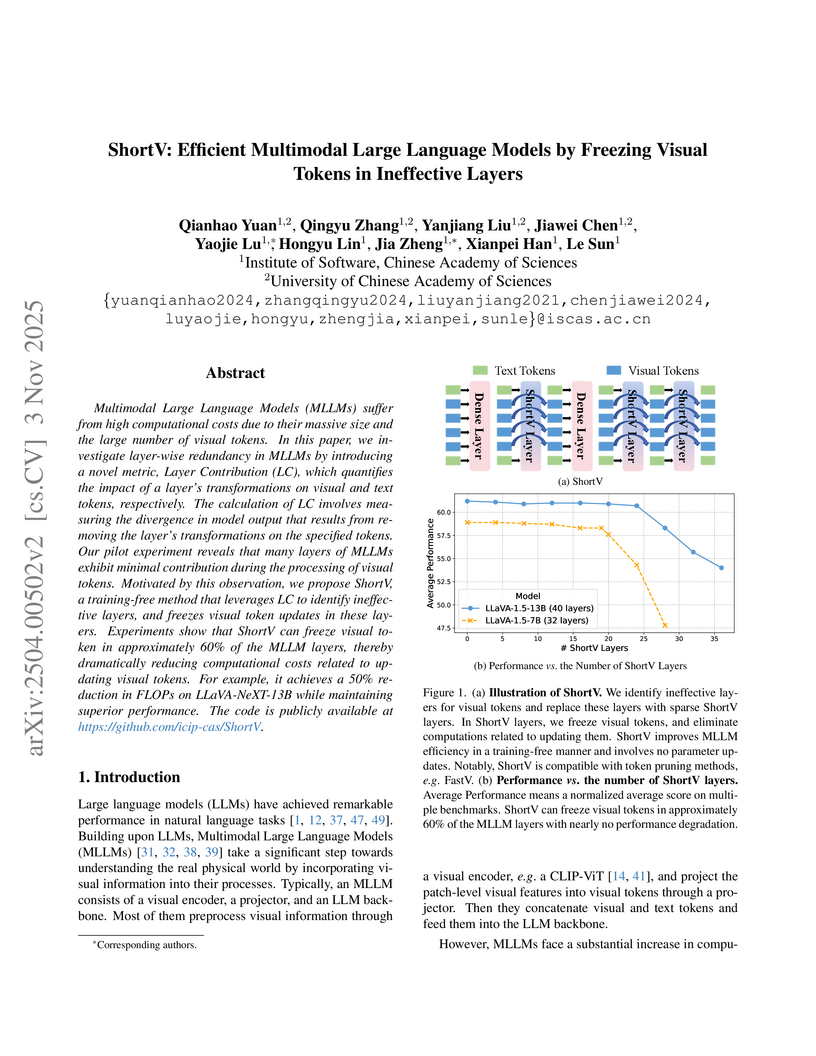

Multimodal Large Language Models (MLLMs) suffer from high computational costs due to their massive size and the large number of visual tokens. In this paper, we investigate layer-wise redundancy in MLLMs by introducing a novel metric, Layer Contribution (LC), which quantifies the impact of a layer's transformations on visual and text tokens, respectively. The calculation of LC involves measuring the divergence in model output that results from removing the layer's transformations on the specified tokens. Our pilot experiment reveals that many layers of MLLMs exhibit minimal contribution during the processing of visual tokens. Motivated by this observation, we propose ShortV, a training-free method that leverages LC to identify ineffective layers, and freezes visual token updates in these layers. Experiments show that ShortV can freeze visual token in approximately 60\% of the MLLM layers, thereby dramatically reducing computational costs related to updating visual tokens. For example, it achieves a 50\% reduction in FLOPs on LLaVA-NeXT-13B while maintaining superior performance. The code will be publicly available at this https URL

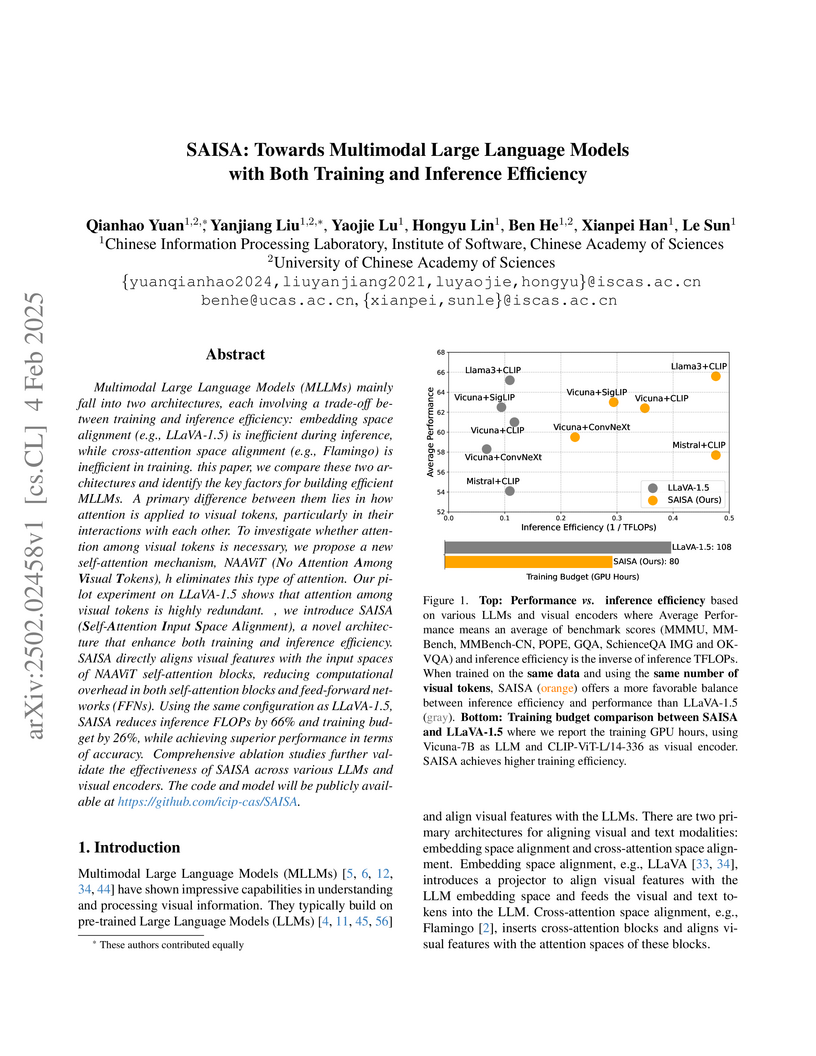

Multimodal Large Language Models (MLLMs) mainly fall into two architectures, each involving a trade-off between training and inference efficiency: embedding space alignment (e.g., LLaVA-1.5) is inefficient during inference, while cross-attention space alignment (e.g., Flamingo) is inefficient in training. In this paper, we compare these two architectures and identify the key factors for building efficient MLLMs. A primary difference between them lies in how attention is applied to visual tokens, particularly in their interactions with each other. To investigate whether attention among visual tokens is necessary, we propose a new self-attention mechanism, NAAViT (\textbf{N}o \textbf{A}ttention \textbf{A}mong \textbf{Vi}sual \textbf{T}okens), which eliminates this type of attention. Our pilot experiment on LLaVA-1.5 shows that attention among visual tokens is highly redundant. Based on these insights, we introduce SAISA (\textbf{S}elf-\textbf{A}ttention \textbf{I}nput \textbf{S}pace \textbf{A}lignment), a novel architecture that enhance both training and inference efficiency. SAISA directly aligns visual features with the input spaces of NAAViT self-attention blocks, reducing computational overhead in both self-attention blocks and feed-forward networks (FFNs). Using the same configuration as LLaVA-1.5, SAISA reduces inference FLOPs by 66\% and training budget by 26\%, while achieving superior performance in terms of accuracy. Comprehensive ablation studies further validate the effectiveness of SAISA across various LLMs and visual encoders. The code and model will be publicly available at this https URL.

27 Nov 2025

Single-pixel imaging(SPI),especially when integrated with deep neural networks like deep image prior networks (DIP-Net) or data-driven networks (DD-Net), has gained considerable attention for its capability to generate high-quality reconstructed images, even in the presence of sub-sampling conditions. However, DIP-Net often requires thousands of iterations to achieve high-quality image reconstruction, and DD-Net performs optimally only when the target closely resembles the features present in its training set. To overcome these limitations, we propose a dual-network iterative optimization (SPI-DNIO) framework that combines the strengths of both DD-Net and DIP-Net. It has been demonstrated that this approach can recover high-quality images with fewer iteration steps. Furthermore, to address the challenge of SPI inputs having less effective information at low sampling rates, we have designed a residual block enriched with gradient information, which can convey details to deeper layers, thereby enhancing the deep network's learning capabilities. We have applied these techniques to both indoor experiments with active lighting and outdoor long-range experiments with passive lighting. Our experimental results confirm the exceptional reconstruction capabilities and generalization performance of the SPI-DNIO framework.

29 Apr 2024

Parameterised quantum circuits (PQCs) hold great promise for demonstrating

quantum advantages in practical applications of quantum computation. Examples

of successful applications include the variational quantum eigensolver, the

quantum approximate optimisation algorithm, and quantum machine learning.

However, before executing PQCs on real quantum devices, they undergo

compilation and optimisation procedures. Given the inherent error-proneness of

these processes, it becomes crucial to verify the equivalence between the

original PQC and its compiled or optimised version. Unfortunately, most

existing quantum circuit verifiers cannot directly handle parameterised quantum

circuits; instead, they require parameter substitution to perform verification.

In this paper, we address the critical challenge of equivalence checking for

PQCs. We propose a novel compact representation for PQCs based on tensor

decision diagrams. Leveraging this representation, we present an algorithm for

verifying PQC equivalence without the need for instantiation. Our approach

ensures both effectiveness and efficiency, as confirmed by experimental

evaluations. The decision-diagram representations offer a powerful tool for

analysing and verifying parameterised quantum circuits, bridging the gap

between theoretical models and practical implementations.

Without loss of generality, existing machine learning techniques may learn spurious correlation dependent on the domain, which exacerbates the generalization of models in out-of-distribution (OOD) scenarios. To address this issue, recent works build a structural causal model (SCM) to describe the causality within data generation process, thereby motivating methods to avoid the learning of spurious correlation by models. However, from the machine learning viewpoint, such a theoretical analysis omits the nuanced difference between the data generation process and representation learning process, resulting in that the causal analysis based on the former cannot well adapt to the latter. To this end, we explore to build a SCM for representation learning process and further conduct a thorough analysis of the mechanisms underlying spurious correlation. We underscore that adjusting erroneous covariates introduces bias, thus necessitating the correct selection of spurious correlation mechanisms based on practical application scenarios. In this regard, we substantiate the correctness of the proposed SCM and further propose to control confounding bias in OOD generalization by introducing a propensity score weighted estimator, which can be integrated into any existing OOD method as a plug-and-play module. The empirical results comprehensively demonstrate the effectiveness of our method on synthetic and large-scale real OOD datasets.

Text-attributed graphs(TAGs) are pervasive in real-world systems,where each node carries its own textual features. In many cases these graphs are inherently heterogeneous, containing multiple node types and diverse edge types. Despite the ubiquity of such heterogeneous TAGs, there remains a lack of large-scale benchmark datasets. This shortage has become a critical bottleneck, hindering the development and fair comparison of representation learning methods on heterogeneous text-attributed graphs. In this paper, we introduce CITE - Catalytic Information Textual Entities Graph, the first and largest heterogeneous text-attributed citation graph benchmark for catalytic materials. CITE comprises over 438K nodes and 1.2M edges, spanning four relation types. In addition, we establish standardized evaluation procedures and conduct extensive benchmarking on the node classification task, as well as ablation experiments on the heterogeneous and textual properties of CITE. We compare four classes of learning paradigms, including homogeneous graph models, heterogeneous graph models, LLM(Large Language Model)-centric models, and LLM+Graph models. In a nutshell, we provide (i) an overview of the CITE dataset, (ii) standardized evaluation protocols, and (iii) baseline and ablation experiments across diverse modeling paradigms.

Event extraction (EE) is a crucial research task for promptly apprehending event information from massive textual data. With the rapid development of deep learning, EE based on deep learning technology has become a research hotspot. Numerous methods, datasets, and evaluation metrics have been proposed in the literature, raising the need for a comprehensive and updated survey. This article fills the research gap by reviewing the state-of-the-art approaches, especially focusing on the general domain EE based on deep learning models. We introduce a new literature classification of current general domain EE research according to the task definition. Afterward, we summarize the paradigm and models of EE approaches, and then discuss each of them in detail. As an important aspect, we summarize the benchmarks that support tests of predictions and evaluation metrics. A comprehensive comparison among different approaches is also provided in this survey. Finally, we conclude by summarizing future research directions facing the research area.

Data contamination presents a critical barrier preventing widespread industrial adoption of advanced software engineering techniques that leverage code language models (CLMs). This phenomenon occurs when evaluation data inadvertently overlaps with the public code repositories used to train CLMs, severely undermining the credibility of performance evaluations. For software companies considering the integration of CLM-based techniques into their development pipeline, this uncertainty about true performance metrics poses an unacceptable business risk. Code refactoring, which comprises code restructuring and variable renaming, has emerged as a promising measure to mitigate data contamination. It provides a practical alternative to the resource-intensive process of building contamination-free evaluation datasets, which would require companies to collect, clean, and label code created after the CLMs' training cutoff dates. However, the lack of automated code refactoring tools and scientifically validated refactoring techniques has hampered widespread industrial implementation. To bridge the gap, this paper presents the first systematic study to examine the efficacy of code refactoring operators at multiple scales (method-level, class-level, and cross-class level) and in different programming languages. In particular, we develop an open-sourced toolkit, CODECLEANER, which includes 11 operators for Python, with nine method-level, one class-level, and one cross-class-level operator. A drop of 65% overlap ratio is found when applying all operators in CODECLEANER, demonstrating their effectiveness in addressing data contamination. Additionally, we migrate four operators to Java, showing their generalizability to another language. We make CODECLEANER online available to facilitate further studies on mitigating CLM data contamination.

Grounded Multimodal Named Entity Recognition (GMNER) extends traditional NER by jointly detecting textual mentions and grounding them to visual regions. While existing supervised methods achieve strong performance, they rely on costly multimodal annotations and often underperform in low-resource domains. Multimodal Large Language Models (MLLMs) show strong generalization but suffer from Domain Knowledge Conflict, producing redundant or incorrect mentions for domain-specific entities. To address these challenges, we propose ReFineG, a three-stage collaborative framework that integrates small supervised models with frozen MLLMs for low-resource GMNER. In the Training Stage, a domain-aware NER data synthesis strategy transfers LLM knowledge to small models with supervised training while avoiding domain knowledge conflicts. In the Refinement Stage, an uncertainty-based mechanism retains confident predictions from supervised models and delegates uncertain ones to the MLLM. In the Grounding Stage, a multimodal context selection algorithm enhances visual grounding through analogical reasoning. In the CCKS2025 GMNER Shared Task, ReFineG ranked second with an F1 score of 0.6461 on the online leaderboard, demonstrating its effectiveness with limited annotations.

04 Dec 2013

The ultimate target of proteomics identification is to identify and quantify

the protein in the organism. Mass spectrometry (MS) based on label-free protein

quantitation has mainly focused on analysis of peptide spectral counts and ion

peak heights. Using several observed peptides (proteotypic) can identify the

origin protein. However, each peptide's possibility to be detected was severely

influenced by the peptide physicochemical properties, which confounded the

results of MS accounting. Using about a million peptide identification

generated by four different kinds of proteomic platforms, we successfully

identified >16,000 proteotypic peptides. We used machine learning

classification to derive peptide detection probabilities that are used to

predict the number of trypic peptides to be observed, which can serve to

estimate the absolutely abundance of protein with highly accuracy. We used the

data of peptides (provides by CAS lab) to derive the best model from different

kinds of methods. We first employed SVM and Random Forest classifier to

identify the proteotypic and unobserved peptides, and then searched the best

parameter for better prediction results. Considering the excellent performance

of our model, we can calculate the absolutely estimation of protein abundance.

The halting probability of a Turing machine,also known as Chaitin's Omega, is an algorithmically random number with many interesting properties. Since Chaitin's seminal work, many popular expositions have appeared, mainly focusing on the metamathematical or philosophical significance of Omega (or debating against it). At the same time, a rich mathematical theory exploring the properties of Chaitin's Omega has been brewing in various technical papers, which quietly reveals the significance of this number to many aspects of contemporary algorithmic information theory. The purpose of this survey is to expose these developments and tell a story about Omega, which outlines its multifaceted mathematical properties and roles in algorithmic randomness.

01 Sep 2014

Drawings of non-planar graphs always result in edge crossings. When there are many edges crossing at small angles, it is often difficult to follow these edges, because of the multiple visual paths resulted from the crossings that slow down eye movements. In this paper we propose an algorithm that disambiguates the edges with automatic selection of distinctive colors. Our proposed algorithm computes a near optimal color assignment of a dual collision graph, using a novel branch-and-bound procedure applied to a space decomposition of the color gamut. We give examples demonstrating the effectiveness of this approach in clarifying drawings of real world graphs and maps.

10 Oct 2012

We propose a protocol for Alice to implement a multiqubit quantum operation

from the restricted sets on distant qubits possessed by Bob, and then we

investigate the communication complexity of the task in different communication

scenarios. By comparing with the previous work, our protocol works without

prior sharing of entanglement, and requires less communication resources than

the previous protocol in the qubit-transmission scenario. Furthermore, we

generalize our protocol to d-dimensional operations.

Inner-approximate reachability analysis involves calculating subsets of reachable sets, known as inner-approximations. This analysis is crucial in the fields of dynamic systems analysis and control theory as it provides a reliable estimation of the set of states that a system can reach from given initial states at a specific time instant. In this paper, we study the inner-approximate reachability analysis problem based on the set-boundary reachability method for systems modelled by ordinary differential equations, in which the computed inner-approximations are represented with zonotopes. The set-boundary reachability method computes an inner-approximation by excluding states reached from the initial set's boundary. The effectiveness of this method is highly dependent on the efficient extraction of the exact boundary of the initial set. To address this, we propose methods leveraging boundary and tiling matrices that can efficiently extract and refine the exact boundary of the initial set represented by zonotopes. Additionally, we enhance the exclusion strategy by contracting the outer-approximations in a flexible way, which allows for the computation of less conservative inner-approximations. To evaluate the proposed method, we compare it with state-of-the-art methods against a series of benchmarks. The numerical results demonstrate that our method is not only efficient but also accurate in computing inner-approximations.

09 Mar 2025

In this paper, we examine necessary and sufficient barrier-like conditions

for infinite-horizon safety verification and reach-avoid verification of

stochastic discrete-time systems, derived through a relaxation of Bellman

equations. Unlike previous methods focused on barrier-like conditions that

primarily address sufficiency, our work rigorously integrates both necessity

and sufficiency for properties pertaining to infinite time. Safety verification

aims to certify the satisfaction of the safety property, which stipulates that

the probability of the system, starting from a specified initial state,

remaining within a safe set always is greater than or equal to a specified

lower bound. A necessary and sufficient barrier-like condition is formulated

for safety verification. In contrast, reach-avoid verification extends beyond

safety to include reachability, seeking to certify the satisfaction of the

reach-avoid property. It requires that the probability of the system, starting

from a specified initial state, reaching a target set eventually while

remaining within a safe set until the first hit of the target, is greater than

or equal to a specified lower bound. Two necessary and sufficient barrier-like

conditions are formulated under certain assumptions.

Owing to the merits of early safety and reliability guarantee, autonomous

driving virtual testing has recently gains increasing attention compared with

closed-loop testing in real scenarios. Although the availability and quality of

autonomous driving datasets and toolsets are the premise to diagnose the

autonomous driving system bottlenecks and improve the system performance, due

to the diversity and privacy of the datasets and toolsets, collecting and

featuring the perspective and quality of them become not only time-consuming

but also increasingly challenging. This paper first proposes a Systematic

Literature review approach for Autonomous driving tests (SLA), then presents an

overview of existing publicly available datasets and toolsets from 2000 to

2020. Quantitative findings with the scenarios concerned, perspectives and

trend inferences and suggestions with 35 automated driving test tool sets and

70 test data sets are also presented. To the best of our knowledge, we are the

first to perform such recent empirical survey on both the datasets and toolsets

using a SLA based survey approach. Our multifaceted analyses and new findings

not only reveal insights that we believe are useful for system designers,

practitioners and users, but also can promote more researches on a systematic

survey analysis in autonomous driving surveys on dataset and toolsets.

We present a study of the third star orbiting around known contact eclipsing

binary J04+25 using spectra from the LAMOST medium-resolution survey (MRS) and

publicly available photometry. This is a rare case of a hierarchical triple,

where the third star is significantly brighter than the inner contact

subsystem. We successfully extracted radial velocities for all three

components, using the binary spectral model in two steps. Third star radial

velocities have high precision and allow direct fitting of the orbit. The low

precision of radial velocity measurements in the contact system is compensated

by large number statistics. We employed a template matching technique for light

curves to find periodic variation due to the light time travel effect (LTTE)

using several photometric datasets. Joint fit of third star radial velocities

and LTTE allowed us to get a consistent orbital solution with

P3=941.40±0.03 day and e3=0.059±0.007. We made estimations of the

masses M12, 3sin3i3=1.05±0.02, 0.90±0.02 M⊙ in a wide

system and discussed possible determination of an astrometric orbit in the

future data release of Gaia. Additionally, we propose an empirical method for

measuring a period and minimal mass of contact systems, based on variation of

the projected rotational velocity (Vsini) from the spectra.

07 Jun 2025

This paper addresses the computation of controlled reach-avoid sets (CRASs)

for discrete-time polynomial systems subject to control inputs. A CRAS is a set

encompassing initial states from which there exist control inputs driving the

system into a target set while avoiding unsafe sets. However, efficiently

computing CRASs remains an open problem, especially for discrete-time systems.

In this paper, we propose a novel framework for computing CRASs which takes

advantage of a probabilistic perspective. This framework transforms the

fundamentally nonlinear problem of computing CRASs into a computationally

tractable convex optimization problem. By regarding control inputs as

disturbances obeying certain probability distributions, a CRAS can be

equivalently treated as a 0-reach-avoid set in the probabilistic sense, which

consists of initial states from which the probability of eventually entering

the target set while remaining within the safe set is greater than zero. Thus,

we can employ the convex optimization method of computing 0-reach-avoid sets to

estimate CRASs. Furthermore, inspired by the ϵ-greedy strategy widely

used in reinforcement learning, we propose an approach that iteratively updates

the aforementioned probability distributions imposed on control inputs to

compute larger CRASs. We demonstrate the effectiveness of the proposed method

on extensive examples.

08 Sep 2016

Hybrid Communicating Sequential Processes (HCSP) is a powerful formal modeling language for hybrid systems, which is an extension of CSP by introducing differential equations for modeling continuous evolution and interrupts for modeling interaction between continuous and discrete dynamics. In this paper, we investigate the semantic foundation for HCSP from an operational point of view by proposing notion of approximate bisimulation, which provides an appropriate criterion to characterize the equivalence between HCSP processes with continuous and discrete behaviour. We give an algorithm to determine whether two HCSP processes are approximately bisimilar. In addition, based on that, we propose an approach on how to discretize HCSP, i.e., given an HCSP process A, we construct another HCSP process B which does not contain any continuous dynamics such that A and B are approximately bisimilar with given precisions. This provides a rigorous way to transform a verified control model to a correct program model, which fills the gap in the design of embedded systems.

10 Dec 2018

Given a quantum gate U acting on a bipartite quantum system, its maximum (average, minimum) entangling power is the maximum (average, minimum) entanglement generation with respect to certain entanglement measure when the inputs are restricted to be product states. In this paper, we mainly focus on the 'weakest' one, i.e., the minimum entangling power, among all these entangling powers. We show that, by choosing von Neumann entropy of reduced density operator or Schmidt rank as entanglement measure, even the 'weakest' entangling power is generically very close to its maximal possible entanglement generation. In other words, maximum, average and minimum entangling powers are generically close. We then study minimum entangling power with respect to other Lipschitiz-continuous entanglement measures and generalize our results to multipartite quantum systems.

As a straightforward application, a random quantum gate will almost surely be an intrinsically fault-tolerant entangling device that will always transform every low-entangled state to near-maximally entangled state.

There are no more papers matching your filters at the moment.