This survey provides a comprehensive review of Optimal Transport (OT) theory, with a focus on computational methods and applications in data science. It highlights how entropic regularization, particularly when solved with the Sinkhorn-Knopp algorithm, has made OT computationally feasible for large-scale problems, and details various formulations and their use across machine learning, computer vision, and statistics.

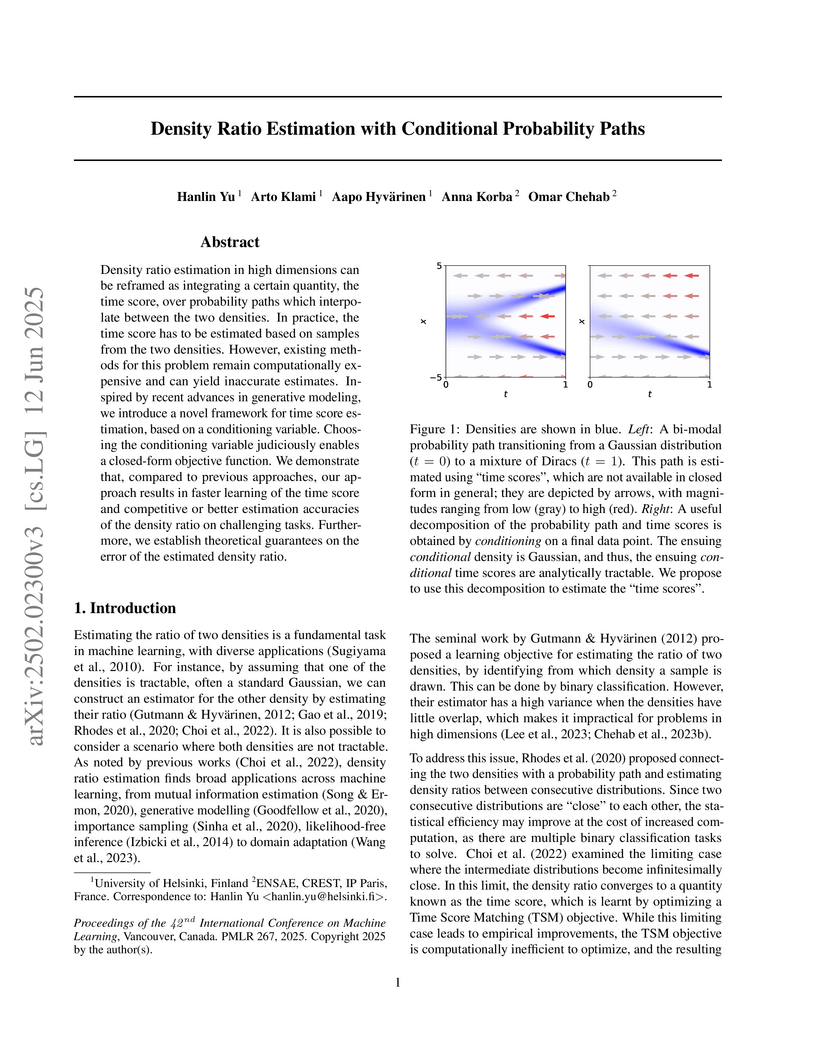

The paper provides a foundational theoretical analysis of Denoising Diffusion Probabilistic Models (DDPMs), proving that they achieve an optimal O(√D/ε) convergence rate in Wasserstein-2 distance. It also gives a rigorous explanation for their empirical robustness to noisy score-function evaluations, showing that the impact of random perturbations diminishes as the number of sampling steps grows.
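
As a hedged illustration of the setting (not of the paper's proof), the sketch below runs the standard ancestral DDPM update of Ho et al. (2020), written in terms of the score s: x_{t-1} = (x_t + β_t s(x_t, t))/√α_t + √β_t z. It uses a 1-D Gaussian target N(mu, sigma²), for which the score of every noised marginal is known in closed form, so no network is needed. The function name, the linear β schedule and the `score_noise` parameter (i.i.d. Gaussian perturbations added to each score evaluation) are assumptions chosen for illustration.

```python
import numpy as np


def ddpm_sample_gaussian(mu, sigma, n_samples=5000, T=1000, score_noise=0.0, seed=0):
    """Ancestral DDPM sampling for a 1-D Gaussian target N(mu, sigma^2) (sketch).

    The exact score is available in closed form for a Gaussian target.
    `score_noise` is a hypothetical knob adding i.i.d. N(0, score_noise^2)
    perturbations to every score evaluation.
    """
    rng = np.random.default_rng(seed)
    betas = np.linspace(1e-4, 0.02, T)      # linear noise schedule (assumed)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(n_samples)      # start from x_T ~ N(0, 1)
    for t in range(T - 1, -1, -1):
        # Noised marginal at step t: N(sqrt(abar) * mu, abar * sigma^2 + 1 - abar)
        m_t = np.sqrt(alpha_bars[t]) * mu
        v_t = alpha_bars[t] * sigma**2 + 1.0 - alpha_bars[t]
        score = -(x - m_t) / v_t
        score = score + score_noise * rng.standard_normal(n_samples)  # noisy evaluation
        x = (x + betas[t] * score) / np.sqrt(alphas[t])
        if t > 0:                           # no fresh noise on the final step
            x = x + np.sqrt(betas[t]) * rng.standard_normal(n_samples)
    return x


x = ddpm_sample_gaussian(2.0, 0.5)
x_noisy = ddpm_sample_gaussian(2.0, 0.5, score_noise=1.0)
print(x.mean(), x.std(), x_noisy.mean(), x_noisy.std())
```

In this toy case each score perturbation enters the update scaled by β_t, so its accumulated effect shrinks as the steps get finer. This is loosely in the spirit of the robustness result summarized above, but it does not reproduce the paper's O(√D/ε) bound.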

Researchers from Oxford and CREST developed a gradient-free stochastic optimization algorithm for additive functions and showed that it achieves a minimax optimal convergence rate of d·T^(-(β-1)/β). Their analysis reveals that, compared with general smooth functions, the additive structure offers no substantial accuracy gain in its dependence on the dimension or on the number of queries, a finding that challenges intuitions from nonparametric estimation.
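
For readers unfamiliar with gradient-free optimization, here is a generic two-point zeroth-order SGD sketch with noisy function queries. It uses the classical randomized finite-difference estimator g = (d/2h)(f(x + hζ) − f(x − hζ))ζ with ζ uniform on the unit sphere. This is not the Oxford/CREST algorithm, whose details the summary does not give. The function name `zo_sgd`, the step size, the smoothing radius `h`, and the additive test objective are all hypothetical.

```python
import numpy as np


def zo_sgd(f, x0, n_iters=2000, h=0.05, lr=0.05, noise_std=0.0, seed=0):
    """Two-point zeroth-order SGD with noisy function queries (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float).copy()
    d = x.size
    for _ in range(n_iters):
        zeta = rng.standard_normal(d)
        zeta /= np.linalg.norm(zeta)                     # uniform random direction on the unit sphere
        # Two noisy evaluations per iteration; no gradients are ever computed.
        y_plus = f(x + h * zeta) + noise_std * rng.standard_normal()
        y_minus = f(x - h * zeta) + noise_std * rng.standard_normal()
        g = (d / (2 * h)) * (y_plus - y_minus) * zeta   # randomized finite-difference gradient estimate
        x -= lr * g                                      # constant step size
    return x


# Hypothetical additive objective: a sum of univariate terms (x_i - c_i)^2.
c = np.linspace(-1.0, 1.0, 5)


def f(z):
    return np.sum((z - c) ** 2)


x_clean = zo_sgd(f, np.zeros(5))
x_noisy = zo_sgd(f, np.zeros(5), noise_std=0.01)
print(np.linalg.norm(x_clean - c), np.linalg.norm(x_noisy - c))
```

This estimator ignores the additive structure entirely. With noisy queries and a constant step size, the iterate hovers near the minimizer rather than converging. Attaining rates like the one above would require decaying step sizes and a smoothness-dependent choice of `h`, neither of which is modeled here.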

CNRS

Université Paris-Saclay

EPFL

Inria

University College London

University of Southern California

University of Tokyo

University of Oxford
