LAMSADEPSL

This paper presents a comprehensive overview and taxonomy of time-series anomaly detection methods from the past decade.

In this work, we investigate high-dimensional kernel ridge regression (KRR) on i.i.d. Gaussian data with anisotropic power-law covariance. This setting differs fundamentally from the classical source & capacity conditions for KRR, where power-law assumptions are typically imposed on the kernel eigen-spectrum itself. Our contributions are twofold. First, we derive an explicit characterization of the kernel spectrum for polynomial inner-product kernels, giving a precise description of how the kernel eigen-spectrum inherits the data decay. Second, we provide an asymptotic analysis of the excess risk in the high-dimensional regime for a particular kernel with this spectral behavior, showing that the sample complexity is governed by the effective dimension of the data rather than the ambient dimension. These results establish a fundamental advantage of learning with power-law anisotropic data over isotropic data. To our knowledge, this is the first rigorous treatment of non-linear KRR under power-law data.

13 Aug 2024

Researchers at Université Paris Dauphine - PSL and LAMSADE demonstrate that Large Language Models (LLMs) can effectively solve the Job Shop Scheduling Problem (JSSP), introducing the first end-to-end LLM application for this task. Their fine-tuned Phi-3 model, utilizing a novel natural language dataset and a sampling strategy, achieves performance competitive with specialized neural network approaches like L2D on small-scale instances.

We consider a mean-field control problem in which admissible controls are required to be adapted to the common noise filtration. The main objective is to show how the mean-field control problem can be approximates by time consistent centralized finite population problems in which the central planner has full information on all agents' states and gives an identical signal to all agents. We also aim at establishing the optimal convergence rate. In a first general path-dependent setting, we only prove convergence by using weak convergence techniques of probability measures on the canonical space. Next, when only the drift coefficient is controlled, we obtain a backward SDE characterization of the value process, based on which a convergence rate is established in terms of the Wasserstein distance between the original measure and the empirical one induced by the particles. It requires Lipschitz continuity conditions in the Wasserstein sense. The convergence rate is optimal. In a Markovian setting and under convexity conditions on the running reward function, we next prove uniqueness of the optimal control and provide regularity results on the value function, and then deduce the optimal weak convergence rate in terms of the number of particles. Finally, we apply these results to the study of a classical optimal control problem with partial observation, leading to an original approximation method by particle systems.

A central question in evolutionary biology is how to quantitatively understand the dynamics of genetically diverse populations. Modeling the genotype distribution is challenging, as it ultimately requires tracking all correlations (or cumulants) among alleles at different loci. The quasi-linkage equilibrium (QLE) approximation simplifies this by assuming that correlations between alleles at different loci are weak -- i.e., low linkage disequilibrium -- allowing their dynamics to be modeled perturbatively. However, QLE breaks down under strong selection, significant epistatic interactions, or weak recombination. We extend the multilocus QLE framework to allow cumulants up to order K to evolve dynamically, while higher-order cumulants (>K) are assumed to equilibrate rapidly. This extended QLE (exQLE) framework yields a general equation of motion for cumulants up to order K, which parallels the standard QLE dynamics (recovered when K=1). In this formulation, cumulant dynamics are driven by the gradient of average fitness, mediated by a geometrically interpretable matrix that stems from competition among genotypes. Our analysis shows that the exQLE with K=2 accurately captures cumulant dynamics even when the fitness function includes higher-order (e.g., third-- or fourth--order) epistatic interactions, capabilities that standard QLE lacks. We also applied the exQLE framework to infer fitness parameters from temporal sequence data. Overall, exQLE provides a systematic and interpretable approximation scheme, leveraging analytical cumulant dynamics and reducing complexity by progressively truncating higher-order cumulants.

Decision-focused learning (DFL) is an increasingly popular paradigm for training predictive models whose outputs are used in decision-making tasks. Instead of merely optimizing for predictive accuracy, DFL trains models to directly minimize the loss associated with downstream decisions. However, existing studies focus solely on scenarios where a fixed batch of data is available and the objective function does not change over time. We instead investigate DFL in dynamic environments where the objective function and data distribution evolve over time. This setting is challenging for online learning because the objective function has zero or undefined gradients -- which prevents the use of standard first-order optimization methods -- and is generally non-convex. To address these difficulties, we (i) regularize the objective to make it differentiable and (ii) use perturbation techniques along with a near-optimal oracle to overcome non-convexity. Combining those techniques yields two original online algorithms tailored for DFL, for which we establish respectively static and dynamic regret bounds. These are the first provable guarantees for the online decision-focused problem. Finally, we showcase the effectiveness of our algorithms on a knapsack experiment, where they outperform two standard benchmarks.

Researchers from Inria, ENS, CNRS, and PSL introduce WARI and SMS, two new evaluation measures for time series segmentation, alongside a formal typology of segmentation errors. These measures enhance the interpretability of segmentation quality by accounting for temporal error positions and specific error types, providing diagnostic insights into algorithm performance.

The paper rigorously characterizes the high-dimensional SGD dynamics in simplified one-layer attention networks on sequential data using Sequence Single-Index (SSI) models, deriving the population loss as a function of semantic and positional alignment. This analysis identifies a "Sequence Information Exponent" (SIE) that dictates sample complexity and quantifies how attention mechanisms and positional encodings can accelerate learning.

A new exploration method, von Mises-Fisher exploration (vMF-exp), is introduced to enable efficient and principled exploration in Reinforcement Learning environments with millions of actions represented by hyperspherical embeddings. Deployed at Deezer, vMF-exp increased liked recommended songs by 11% and improved playlist diversity by 35% compared to baselines, demonstrating scalability and unrestricted exploration.

Important research efforts have focused on the design and training of neural

networks with a controlled Lipschitz constant. The goal is to increase and

sometimes guarantee the robustness against adversarial attacks. Recent

promising techniques draw inspirations from different backgrounds to design

1-Lipschitz neural networks, just to name a few: convex potential layers derive

from the discretization of continuous dynamical systems,

Almost-Orthogonal-Layer proposes a tailored method for matrix rescaling.

However, it is today important to consider the recent and promising

contributions in the field under a common theoretical lens to better design new

and improved layers. This paper introduces a novel algebraic perspective

unifying various types of 1-Lipschitz neural networks, including the ones

previously mentioned, along with methods based on orthogonality and spectral

methods. Interestingly, we show that many existing techniques can be derived

and generalized via finding analytical solutions of a common semidefinite

programming (SDP) condition. We also prove that AOL biases the scaled weight to

the ones which are close to the set of orthogonal matrices in a certain

mathematical manner. Moreover, our algebraic condition, combined with the

Gershgorin circle theorem, readily leads to new and diverse parameterizations

for 1-Lipschitz network layers. Our approach, called SDP-based Lipschitz Layers

(SLL), allows us to design non-trivial yet efficient generalization of convex

potential layers. Finally, the comprehensive set of experiments on image

classification shows that SLLs outperform previous approaches on certified

robust accuracy. Code is available at

https://github.com/araujoalexandre/Lipschitz-SLL-Networks.

A prevalent practice in recommender systems consists in averaging item

embeddings to represent users or higher-level concepts in the same embedding

space. This paper investigates the relevance of such a practice. For this

purpose, we propose an expected precision score, designed to measure the

consistency of an average embedding relative to the items used for its

construction. We subsequently analyze the mathematical expression of this score

in a theoretical setting with specific assumptions, as well as its empirical

behavior on real-world data from music streaming services. Our results

emphasize that real-world averages are less consistent for recommendation,

which paves the way for future research to better align real-world embeddings

with assumptions from our theoretical setting.

Rejection sampling methods have recently been proposed to improve the performance of discriminator-based generative models. However, these methods are only optimal under an unlimited sampling budget, and are usually applied to a generator trained independently of the rejection procedure. We first propose an Optimal Budgeted Rejection Sampling (OBRS) scheme that is provably optimal with respect to \textit{any} f-divergence between the true distribution and the post-rejection distribution, for a given sampling budget. Second, we propose an end-to-end method that incorporates the sampling scheme into the training procedure to further enhance the model's overall performance. Through experiments and supporting theory, we show that the proposed methods are effective in significantly improving the quality and diversity of the samples.

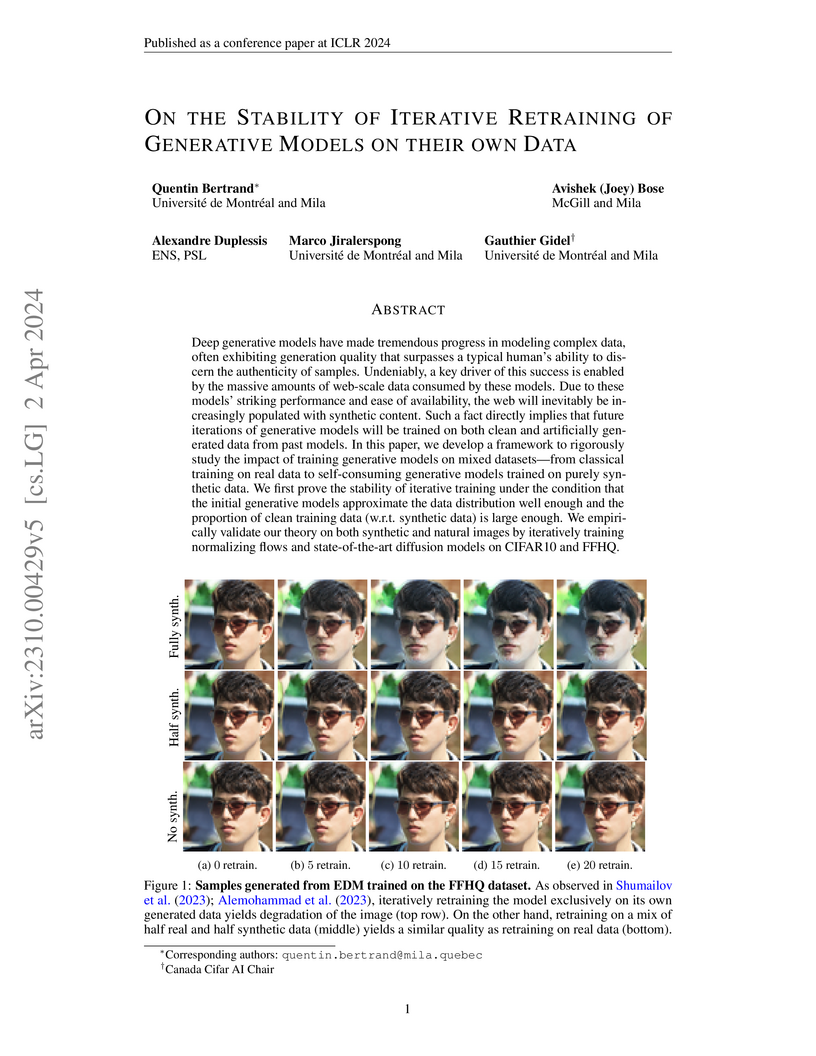

Deep generative models have made tremendous progress in modeling complex data, often exhibiting generation quality that surpasses a typical human's ability to discern the authenticity of samples. Undeniably, a key driver of this success is enabled by the massive amounts of web-scale data consumed by these models. Due to these models' striking performance and ease of availability, the web will inevitably be increasingly populated with synthetic content. Such a fact directly implies that future iterations of generative models will be trained on both clean and artificially generated data from past models. In this paper, we develop a framework to rigorously study the impact of training generative models on mixed datasets -- from classical training on real data to self-consuming generative models trained on purely synthetic data. We first prove the stability of iterative training under the condition that the initial generative models approximate the data distribution well enough and the proportion of clean training data (w.r.t. synthetic data) is large enough. We empirically validate our theory on both synthetic and natural images by iteratively training normalizing flows and state-of-the-art diffusion models on CIFAR10 and FFHQ.

Making inferences with a deep neural network on a batch of states is much

faster with a GPU than making inferences on one state after another. We build

on this property to propose Monte Carlo Tree Search algorithms using batched

inferences. Instead of using either a search tree or a transposition table we

propose to use both in the same algorithm. The transposition table contains the

results of the inferences while the search tree contains the statistics of

Monte Carlo Tree Search. We also propose to analyze multiple heuristics that

improve the search: the μ FPU, the Virtual Mean, the Last Iteration and the

Second Move heuristics. They are evaluated for the game of Go using a MobileNet

neural network.

09 Apr 2025

CNRS

CNRS California Institute of TechnologyWuhan University

California Institute of TechnologyWuhan University University of California, Santa Barbara

University of California, Santa Barbara Chinese Academy of Sciences

Chinese Academy of Sciences the University of Tokyo

the University of Tokyo The University of Texas at Austin

The University of Texas at Austin Space Telescope Science Institute

Space Telescope Science Institute Université Paris-SaclayRochester Institute of TechnologyUniversity of Helsinki

Université Paris-SaclayRochester Institute of TechnologyUniversity of Helsinki Sorbonne Université

Sorbonne Université Aalto University

Aalto University CEAPurple Mountain ObservatoryTechnical University of Denmark

CEAPurple Mountain ObservatoryTechnical University of Denmark Durham UniversityAix-Marseille UnivJet Propulsion LaboratoryInstituto de Astrofísica de CanariasCNESPSLUniversidad de La LagunaUniversity of Hawaii at Manoa

Durham UniversityAix-Marseille UnivJet Propulsion LaboratoryInstituto de Astrofísica de CanariasCNESPSLUniversidad de La LagunaUniversity of Hawaii at Manoa University of California, Santa CruzIPACLAMLERMA, Observatoire de ParisUniversit

Paris Cit

University of California, Santa CruzIPACLAMLERMA, Observatoire de ParisUniversit

Paris CitWe measure galaxy sizes from 2 < z < 10 using COSMOS-Web, the largest-area

JWST imaging survey to date, covering ∼0.54 deg2. We analyze the

rest-frame optical (~5000A) size evolution and its scaling relation with

stellar mass (Re∝M∗α) for star-forming and quiescent galaxies.

For star-forming galaxies, the slope α remains approximately 0.20 at $2

< z < 8$, showing no significant evolution over this redshift range. At higher

redshifts, the slopes are −0.13±0.15 and 0.37±0.36 for 8 < z < 9

and 9 < z < 10, respectively. At fixed galaxy mass, the size evolution for

star-forming galaxies follows Re∝(1+z)−β, with $\beta = 1.21

\pm 0.05.Forquiescentgalaxies,theslopeissteeper\alpha\sim 0.5−0.8$

at 2 < z < 5, and β=0.81±0.26. We find that the size-mass relation is

consistent between UV and optical at z < 8 for star-forming galaxies.

However, we observe a decrease in the slope from UV to optical at z > 8, with

a tentative negative slope in the optical at 8 < z < 9, suggesting a complex

interplay between intrinsic galaxy properties and observational effects such as

dust attenuation. We discuss the ratio between galaxies' half-light radius, and

underlying halos' virial radius, Rvir, and find the median value of

Re/Rvir=2.7%. The star formation rate surface density evolves as

logΣSFR=(0.20±0.08)z+(−0.65±0.51), and the

ΣSFR-M∗ relation remains flat at 23 provides new

insights into galaxy size and related properties in the rest-frame optical.

28 Feb 2025

Dissipative cat-qubits are a promising architecture for quantum processors due to their built-in quantum error correction. By leveraging two-photon stabilization, they achieve an exponentially suppressed bit-flip error rate as the distance in phase-space between their basis states increases, incurring only a linear increase in phase-flip rate. This property substantially reduces the number of qubits required for fault-tolerant quantum computation. Here, we implement a squeezing deformation of the cat qubit basis states, further extending the bit-flip time while minimally affecting the phase-flip rate. We demonstrate a steep reduction in the bit-flip error rate with increasing mean photon number, characterized by a scaling exponent γ=4.3, rising by a factor of 74 per added photon. Specifically, we measure bit-flip times of 22 seconds for a phase-flip time of 1.3 μs in a squeezed cat qubit with an average photon number nˉ=4.1, a 160-fold improvement in bit-flip time compared to a standard cat. Moreover, we demonstrate a two-fold reduction in Z-gate infidelity, with an estimated phase-flip probability of ϵX=0.085 and a bit-flip probability of ϵZ=2.65⋅10−9 which confirms the gate bias-preserving property. This simple yet effective technique enhances cat qubit performances without requiring design modification, moving multi-cat architectures closer to fault-tolerant quantum computation.

The syntactic structures of sentences can be readily read-out from the activations of large language models (LLMs). However, the ``structural probes'' that have been developed to reveal this phenomenon are typically evaluated on an indiscriminate set of sentences. Consequently, it remains unclear whether structural and/or statistical factors systematically affect these syntactic representations. To address this issue, we conduct an in-depth analysis of structural probes on three controlled benchmarks. Our results are three-fold. First, structural probes are biased by a superficial property: the closer two words are in a sentence, the more likely structural probes will consider them as syntactically linked. Second, structural probes are challenged by linguistic properties: they poorly represent deep syntactic structures, and get interfered by interacting nouns or ungrammatical verb forms. Third, structural probes do not appear to be affected by the predictability of individual words. Overall, this work sheds light on the current challenges faced by structural probes. Providing a benchmark made of controlled stimuli to better evaluate their performance.

Neural scaling laws underlie many of the recent advances in deep learning, yet their theoretical understanding remains largely confined to linear models. In this work, we present a systematic analysis of scaling laws for quadratic and diagonal neural networks in the feature learning regime. Leveraging connections with matrix compressed sensing and LASSO, we derive a detailed phase diagram for the scaling exponents of the excess risk as a function of sample complexity and weight decay. This analysis uncovers crossovers between distinct scaling regimes and plateau behaviors, mirroring phenomena widely reported in the empirical neural scaling literature. Furthermore, we establish a precise link between these regimes and the spectral properties of the trained network weights, which we characterize in detail. As a consequence, we provide a theoretical validation of recent empirical observations connecting the emergence of power-law tails in the weight spectrum with network generalization performance, yielding an interpretation from first principles.

Deep convolutional networks provide state of the art classifications and

regressions results over many high-dimensional problems. We review their

architecture, which scatters data with a cascade of linear filter weights and

non-linearities. A mathematical framework is introduced to analyze their

properties. Computations of invariants involve multiscale contractions, the

linearization of hierarchical symmetries, and sparse separations. Applications

are discussed.

03 Jun 2025

Nonlinear optimization methods are typically iterative and make use of gradient information to determine a direction of improvement and function information to effectively check for progress. When this information is corrupted by noise, designing a convergent and practical algorithmic process becomes challenging, as care must be taken to avoid taking bad steps due to erroneous information. For this reason, simple gradient-based schemes have been quite popular, despite being outperformed by more advanced techniques in the noiseless setting. In this paper, we propose a general algorithmic framework based on line search that is endowed with iteration and evaluation complexity guarantees even in a noisy setting. These guarantees are obtained as a result of a restarting condition, that monitors desirable properties for the steps taken at each iteration and can be checked even in the presence of noise. Experiments using a nonlinear conjugate gradient variant and a quasi-Newton variant illustrate that restarting can be performed without compromising practical efficiency and robustness.

There are no more papers matching your filters at the moment.