Medical University of Innsbruck

Liver vessel segmentation in magnetic resonance imaging data is important for the computational analysis of vascular remodelling, associated with a wide spectrum of diffuse liver diseases. Existing approaches rely on contrast enhanced imaging data, but the necessary dedicated imaging sequences are not uniformly acquired. Images without contrast enhancement are acquired more frequently, but vessel segmentation is challenging, and requires large-scale annotated data. We propose a multi-task learning framework to segment vessels in liver MRI without contrast. It exploits auxiliary contrast enhanced MRI data available only during training to reduce the need for annotated training examples. Our approach draws on paired native and contrast enhanced data with and without vessel annotations for model training. Results show that auxiliary data improves the accuracy of vessel segmentation, even if they are not available during inference. The advantage is most pronounced if only few annotations are available for training, since the feature representation benefits from the shared task structure. A validation of this approach to augment a model for brain tumor segmentation confirms its benefits across different domains. An auxiliary informative imaging modality can augment expert annotations even if it is only available during training.

Radiology reports contain rich clinical information that can be used to train imaging models without relying on costly manual annotation. However, existing approaches face critical limitations: rule-based methods struggle with linguistic variability, supervised models require large annotated datasets, and recent LLM-based systems depend on closed-source or resource-intensive models that are unsuitable for clinical use. Moreover, current solutions are largely restricted to English and single-modality, single-taxonomy datasets. We introduce MOSAIC, a multilingual, taxonomy-agnostic, and computationally efficient approach for radiological report classification. Built on a compact open-access language model (MedGemma-4B), MOSAIC supports both zero-/few-shot prompting and lightweight fine-tuning, enabling deployment on consumer-grade GPUs. We evaluate MOSAIC across seven datasets in English, Spanish, French, and Danish, spanning multiple imaging modalities and label taxonomies. The model achieves a mean macro F1 score of 88 across five chest X-ray datasets, approaching or exceeding expert-level performance, while requiring only 24 GB of GPU memory. With data augmentation, as few as 80 annotated samples are sufficient to reach a weighted F1 score of 82 on Danish reports, compared to 86 with the full 1600-sample training set. MOSAIC offers a practical alternative to large or proprietary LLMs in clinical settings. Code and models are open-source. We invite the community to evaluate and extend MOSAIC on new languages, taxonomies, and modalities.

Chinese Academy of Sciences

Chinese Academy of Sciences Imperial College LondonKorea University

Imperial College LondonKorea University Beihang University

Beihang University Shanghai Jiao Tong UniversityHarbin Institute of Technology

Shanghai Jiao Tong UniversityHarbin Institute of Technology University of FloridaShanghaiTech UniversityAarhus University

University of FloridaShanghaiTech UniversityAarhus University Huazhong University of Science and TechnologyUniversity Health NetworkUniversity of GenevaNovosibirsk State UniversityGerman Cancer Research Center (DKFZ)University of CaliforniaAGH University of KrakowYonsei University College of MedicineMedical University of InnsbruckUniversity of Applied Sciences Western Switzerland (HES-SO)Canon Medical Systems (China) Co., Ltd.

Huazhong University of Science and TechnologyUniversity Health NetworkUniversity of GenevaNovosibirsk State UniversityGerman Cancer Research Center (DKFZ)University of CaliforniaAGH University of KrakowYonsei University College of MedicineMedical University of InnsbruckUniversity of Applied Sciences Western Switzerland (HES-SO)Canon Medical Systems (China) Co., Ltd.Multi-class segmentation of the aorta in computed tomography angiography

(CTA) scans is essential for diagnosing and planning complex endovascular

treatments for patients with aortic dissections. However, existing methods

reduce aortic segmentation to a binary problem, limiting their ability to

measure diameters across different branches and zones. Furthermore, no

open-source dataset is currently available to support the development of

multi-class aortic segmentation methods. To address this gap, we organized the

AortaSeg24 MICCAI Challenge, introducing the first dataset of 100 CTA volumes

annotated for 23 clinically relevant aortic branches and zones. This dataset

was designed to facilitate both model development and validation. The challenge

attracted 121 teams worldwide, with participants leveraging state-of-the-art

frameworks such as nnU-Net and exploring novel techniques, including cascaded

models, data augmentation strategies, and custom loss functions. We evaluated

the submitted algorithms using the Dice Similarity Coefficient (DSC) and

Normalized Surface Distance (NSD), highlighting the approaches adopted by the

top five performing teams. This paper presents the challenge design, dataset

details, evaluation metrics, and an in-depth analysis of the top-performing

algorithms. The annotated dataset, evaluation code, and implementations of the

leading methods are publicly available to support further research. All

resources can be accessed at this https URL

Hepatocellular carcinoma (HCC) is the most common type of primary liver cancer in adults, and the most common cause of death of people suffering from cirrhosis. The segmentation of liver lesions in CT images allows assessment of tumor load, treatment planning, prognosis and monitoring of treatment response. Manual segmentation is a very time-consuming task and in many cases, prone to inaccuracies and automatic tools for tumor detection and segmentation are desirable. In this paper, we compare two network architectures, one that is composed of one neural network and manages the segmentation task in one step and one that consists of two consecutive fully convolutional neural networks. The first network segments the liver whereas the second network segments the actual tumor inside the liver. Our networks are trained on a subset of the LiTS (Liver Tumor Segmentation) Challenge and evaluated on data.

09 Jul 2021

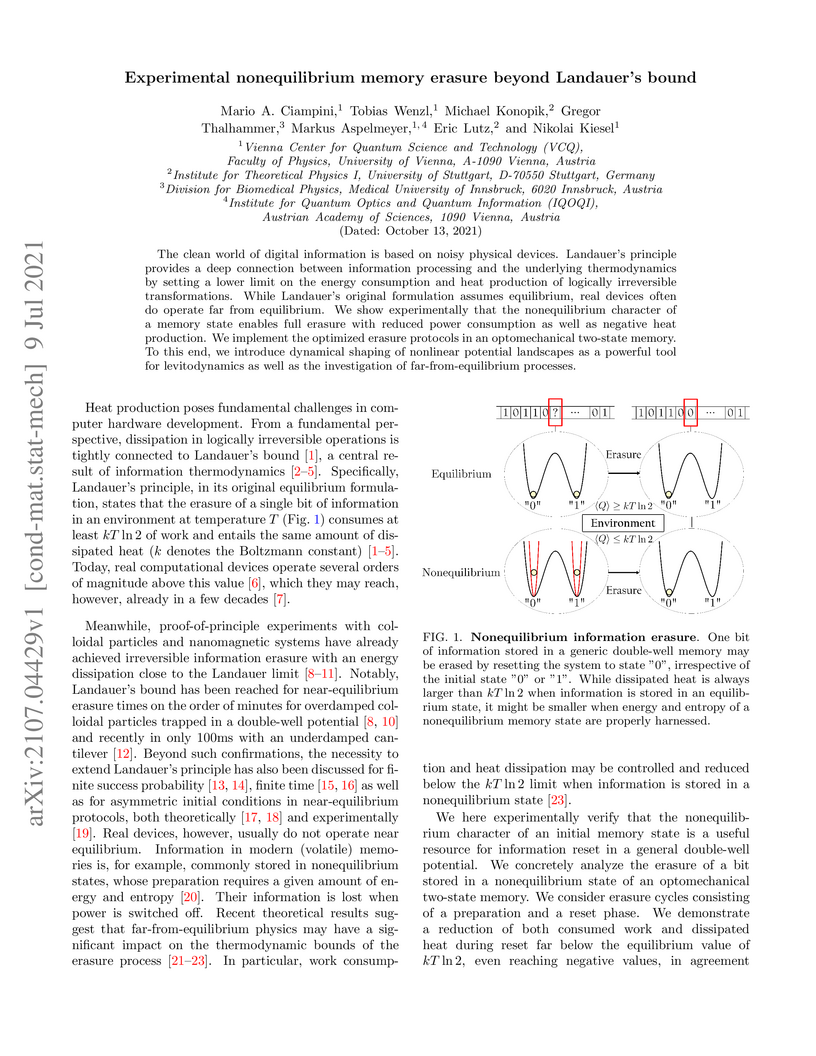

The clean world of digital information is based on noisy physical devices.

Landauer's principle provides a deep connection between information processing

and the underlying thermodynamics by setting a lower limit on the energy

consumption and heat production of logically irreversible transformations.

While Landauer's original formulation assumes equilibrium, real devices often

do operate far from equilibrium. We show experimentally that the nonequilibrium

character of a memory state enables full erasure with reduced power consumption

as well as negative heat production. We implement the optimized erasure

protocols in an optomechanical two-state memory. To this end, we introduce

dynamical shaping of nonlinear potential landscapes as a powerful tool for

levitodynamics as well as the investigation of far-from-equilibrium processes.

The application of machine learning to medical ultrasound videos of the

heart, i.e., echocardiography, has recently gained traction with the

availability of large public datasets. Traditional supervised tasks, such as

ejection fraction regression, are now making way for approaches focusing more

on the latent structure of data distributions, as well as generative methods.

We propose a model trained exclusively by knowledge distillation, either on

real or synthetical data, involving retrieving masks suggested by a teacher

model. We achieve state-of-the-art (SOTA) values on the task of identifying

end-diastolic and end-systolic frames. By training the model only on synthetic

data, it reaches segmentation capabilities close to the performance when

trained on real data with a significantly reduced number of weights. A

comparison with the 5 main existing methods shows that our method outperforms

the others in most cases. We also present a new evaluation method that does not

require human annotation and instead relies on a large auxiliary model. We show

that this method produces scores consistent with those obtained from human

annotations. Relying on the integrated knowledge from a vast amount of records,

this method overcomes certain inherent limitations of human annotator labeling.

Code: this https URL

Purpose High dimensional, multimodal data can nowadays be analyzed by huge deep neural networks with little effort. Several fusion methods for bringing together different modalities have been developed. Given the prevalence of high-dimensional, multimodal patient data in medicine, the development of multimodal models marks a significant advancement. However, how these models process information from individual sources in detail is still underexplored. Methods To this end, we implemented an occlusion-based modality contribution method that is both model- and performance-agnostic. This method quantitatively measures the importance of each modality in the dataset for the model to fulfill its task. We applied our method to three different multimodal medical problems for experimental purposes. Results Herein we found that some networks have modality preferences that tend to unimodal collapses, while some datasets are imbalanced from the ground up. Moreover, we provide fine-grained quantitative and visual attribute importance for each modality. Conclusion Our metric offers valuable insights that can support the advancement of multimodal model development and dataset creation. By introducing this method, we contribute to the growing field of interpretability in deep learning for multimodal research. This approach helps to facilitate the integration of multimodal AI into clinical practice. Our code is publicly available at this https URL.

Noisier2Inverse presents a self-supervised deep learning method for image reconstruction in general inverse problems, specifically designed to handle statistically correlated measurement noise. The approach operates in one step and avoids extrapolation during inference, consistently outperforming existing self-supervised techniques like Noisier2Noise and Noise2Inverse in 2D CT reconstruction tasks.

Most of the recent results in polynomial functional regression have been focused on an in-depth exploration of single-parameter regularization schemes. In contrast, in this study we go beyond that framework by introducing an algorithm for multiple parameter regularization and presenting a theoretically grounded method for dealing with the associated parameters. This method facilitates the aggregation of models with varying regularization parameters. The efficacy of the proposed approach is assessed through evaluations on both synthetic and some real-world medical data, revealing promising results.

Deformable medical image registration is a fundamental task in medical image analysis. While deep learning-based methods have demonstrated superior accuracy and computational efficiency compared to traditional techniques, they often overlook the critical role of regularization in ensuring robustness and anatomical plausibility. We propose DARE (Deformable Adaptive Regularization Estimator), a novel registration framework that dynamically adjusts elastic regularization based on the gradient norm of the deformation field. Our approach integrates strain and shear energy terms, which are adaptively modulated to balance stability and flexibility. To ensure physically realistic transformations, DARE includes a folding-prevention mechanism that penalizes regions with negative deformation Jacobian. This strategy mitigates non-physical artifacts such as folding, avoids over-smoothing, and improves both registration accuracy and anatomical plausibility

Late gadolinium enhancement (LGE) cardiac magnetic resonance (CMR) imaging is

considered the in vivo reference standard for assessing infarct size (IS) and

microvascular obstruction (MVO) in ST-elevation myocardial infarction (STEMI)

patients. However, the exact quantification of those markers of myocardial

infarct severity remains challenging and very time-consuming. As LGE

distribution patterns can be quite complex and hard to delineate from the blood

pool or epicardial fat, automatic segmentation of LGE CMR images is

challenging. In this work, we propose a cascaded framework of two-dimensional

and three-dimensional convolutional neural networks (CNNs) which enables to

calculate the extent of myocardial infarction in a fully automated way. By

artificially generating segmentation errors which are characteristic for 2D

CNNs during training of the cascaded framework we are enforcing the detection

and correction of 2D segmentation errors and hence improve the segmentation

accuracy of the entire method. The proposed method was trained and evaluated in

a five-fold cross validation using the training dataset from the EMIDEC

challenge. We perform comparative experiments where our framework outperforms

state-of-the-art methods of the EMIDEC challenge, as well as 2D and 3D nnU-Net.

Furthermore, in extensive ablation studies we show the advantages that come

with the proposed error correcting cascaded method.

04 Oct 2024

We propose a scalable encoding of combinatorial optimization problems with arbitrary connectivity, including higher-order terms, on arrays of trapped neutral atoms requiring only a global laser drive. Our approach relies on modular arrangements of a small number of problem-independent gadgets. These gadgets represent maximum-weight independent set (MWIS) problems on unit-disk graphs, which are native to such devices. Instead of programming MWIS weights with site-dependent laser detunings, the scheme relies on systematic placements of auxiliary atoms. We show, that these auxiliary atoms can be simultaneously used for both problem-specific programming and the mitigation of unwanted effects originating from the tails of long-range interactions.

The diagnosis of Alzheimer's disease (AD) in routine clinical practice is

most commonly based on subjective clinical interpretations. Quantitative

electroencephalography (QEEG) measures have been shown to reflect

neurodegenerative processes in AD and might qualify as affordable and thereby

widely available markers to facilitate the objectivization of AD assessment.

Here, we present a novel framework combining Riemannian tangent space mapping

and elastic net regression for the development of brain atrophy markers. While

most AD QEEG studies are based on small sample sizes and psychological test

scores as outcome measures, here we train and test our models using data of one

of the largest prospective EEG AD trials ever conducted, including MRI

biomarkers of brain atrophy.

Poor generalization performance caused by distribution shifts in unseen

domains often hinders the trustworthy deployment of deep neural networks. Many

domain generalization techniques address this problem by adding a domain

invariant regularization loss terms during training. However, there is a lack

of modular software that allows users to combine the advantages of different

methods with minimal effort for reproducibility. DomainLab is a modular Python

package for training user specified neural networks with composable

regularization loss terms. Its decoupled design allows the separation of neural

networks from regularization loss construction. Hierarchical combinations of

neural networks, different domain generalization methods, and associated

hyperparameters, can all be specified together with other experimental setup in

a single configuration file. Hierarchical combinations of neural networks,

different domain generalization methods, and associated hyperparameters, can

all be specified together with other experimental setup in a single

configuration file. In addition, DomainLab offers powerful benchmarking

functionality to evaluate the generalization performance of neural networks in

out-of-distribution data. The package supports running the specified benchmark

on an HPC cluster or on a standalone machine. The package is well tested with

over 95 percent coverage and well documented. From the user perspective, it is

closed to modification but open to extension. The package is under the MIT

license, and its source code, tutorial and documentation can be found at

this https URL

The analysis of carotid arteries, particularly plaques, in multi-sequence Magnetic Resonance Imaging (MRI) data is crucial for assessing the risk of atherosclerosis and ischemic stroke. In order to evaluate metrics and radiomic features, quantifying the state of atherosclerosis, accurate segmentation is important. However, the complex morphology of plaques and the scarcity of labeled data poses significant challenges. In this work, we address these problems and propose a semi-supervised deep learning-based approach designed to effectively integrate multi-sequence MRI data for the segmentation of carotid artery vessel wall and plaque. The proposed algorithm consists of two networks: a coarse localization model identifies the region of interest guided by some prior knowledge on the position and number of carotid arteries, followed by a fine segmentation model for precise delineation of vessel walls and plaques. To effectively integrate complementary information across different MRI sequences, we investigate different fusion strategies and introduce a multi-level multi-sequence version of U-Net architecture. To address the challenges of limited labeled data and the complexity of carotid artery MRI, we propose a semi-supervised approach that enforces consistency under various input transformations. Our approach is evaluated on 52 patients with arteriosclerosis, each with five MRI sequences. Comprehensive experiments demonstrate the effectiveness of our approach and emphasize the role of fusion point selection in U-Net-based architectures. To validate the accuracy of our results, we also include an expert-based assessment of model performance. Our findings highlight the potential of fusion strategies and semi-supervised learning for improving carotid artery segmentation in data-limited MRI applications.

Quantifiable image patterns associated with disease progression and treatment response are critical tools for guiding individual treatment, and for developing novel therapies. Here, we show that unsupervised machine learning can identify a pattern vocabulary of liver tissue in magnetic resonance images that quantifies treatment response in diffuse liver disease. Deep clustering networks simultaneously encode and cluster patches of medical images into a low-dimensional latent space to establish a tissue vocabulary. The resulting tissue types capture differential tissue change and its location in the liver associated with treatment response. We demonstrate the utility of the vocabulary on a randomized controlled trial cohort of non-alcoholic steatohepatitis patients. First, we use the vocabulary to compare longitudinal liver change in a placebo and a treatment cohort. Results show that the method identifies specific liver tissue change pathways associated with treatment, and enables a better separation between treatment groups than established non-imaging measures. Moreover, we show that the vocabulary can predict biopsy derived features from non-invasive imaging data. We validate the method on a separate replication cohort to demonstrate the applicability of the proposed method.

Coded wavefront sensing (Coded-WFS) is a snapshot quantitative phase imaging (QPI) technique that has been shown to successfully leverage the memory effect to retrieve the phase of biological specimens. In this paper, we perform QPI on static silica beads and dynamic HEK cells using Coded-WFS. The accuracy of the retrieved phase map is validated using digital holographic microscopy (DHM) for the same specimens. We report comparisons of simultaneous bright-field intensity and optical path delay.

11 Jan 2018

Objects at finite temperature emit thermal radiation with an outward energy-momentum flow, which exerts an outward radiation pressure. At room temperature, a cesium atom scatters on average less than one of these blackbody radiation photons every 10^8 years. Thus, it is generally assumed that any scattering force exerted on atoms by such radiation is negligible. However, atoms also interact coherently with the thermal electromagnetic field. In this work, we measure an attractive force induced by blackbody radiation between a cesium atom and a heated, centimeter-sized cylinder which is orders of magnitude stronger than the outward directed radiation pressure. Using atom interferometry, we find that this force scales with the fourth power of the cylinder`s temperature. The force is in good agreement with that predicted from an ac Stark shift gradient of the atomic ground state in the thermal radiation field. This observed force dominates over both gravity and radiation pressure, and does so for a large temperature range.

Accurate brain tumor classification is critical for intra-operative decision making in neuro-oncological surgery. However, existing approaches are restricted to a fixed set of predefined classes and are therefore unable to capture patterns of tumor types not available during training. Unsupervised learning can extract general-purpose features, but it lacks the ability to incorporate prior knowledge from labelled data, and semi-supervised methods often assume that all potential classes are represented in the labelled data. Generalized Category Discovery (GCD) aims to bridge this gap by categorizing both known and unknown classes within unlabelled data. To reflect the hierarchical structure of brain tumor taxonomies, in this work, we introduce Hierarchical Generalized Category Discovery for Brain Tumor Classification (HGCD-BT), a novel approach that integrates hierarchical clustering with contrastive learning. Our method extends contrastive learning based GCD by incorporating a novel semi-supervised hierarchical clustering loss. We evaluate HGCD-BT on OpenSRH, a dataset of stimulated Raman histology brain tumor images, achieving a +28% improvement in accuracy over state-of-the-art GCD methods for patch-level classification, particularly in identifying previously unseen tumor categories. Furthermore, we demonstrate the generalizability of HGCD-BT on slide-level classification of hematoxylin and eosin stained whole-slide images from the Digital Brain Tumor Atlas, confirming its utility across imaging modalities.

Liver vessel segmentation in magnetic resonance imaging data is important for the computational analysis of vascular remodelling, associated with a wide spectrum of diffuse liver diseases. Existing approaches rely on contrast enhanced imaging data, but the necessary dedicated imaging sequences are not uniformly acquired. Images without contrast enhancement are acquired more frequently, but vessel segmentation is challenging, and requires large-scale annotated data. We propose a multi-task learning framework to segment vessels in liver MRI without contrast. It exploits auxiliary contrast enhanced MRI data available only during training to reduce the need for annotated training examples. Our approach draws on paired native and contrast enhanced data with and without vessel annotations for model training. Results show that auxiliary data improves the accuracy of vessel segmentation, even if they are not available during inference. The advantage is most pronounced if only few annotations are available for training, since the feature representation benefits from the shared task structure. A validation of this approach to augment a model for brain tumor segmentation confirms its benefits across different domains. An auxiliary informative imaging modality can augment expert annotations even if it is only available during training.

There are no more papers matching your filters at the moment.