Mercedes-Benz AG

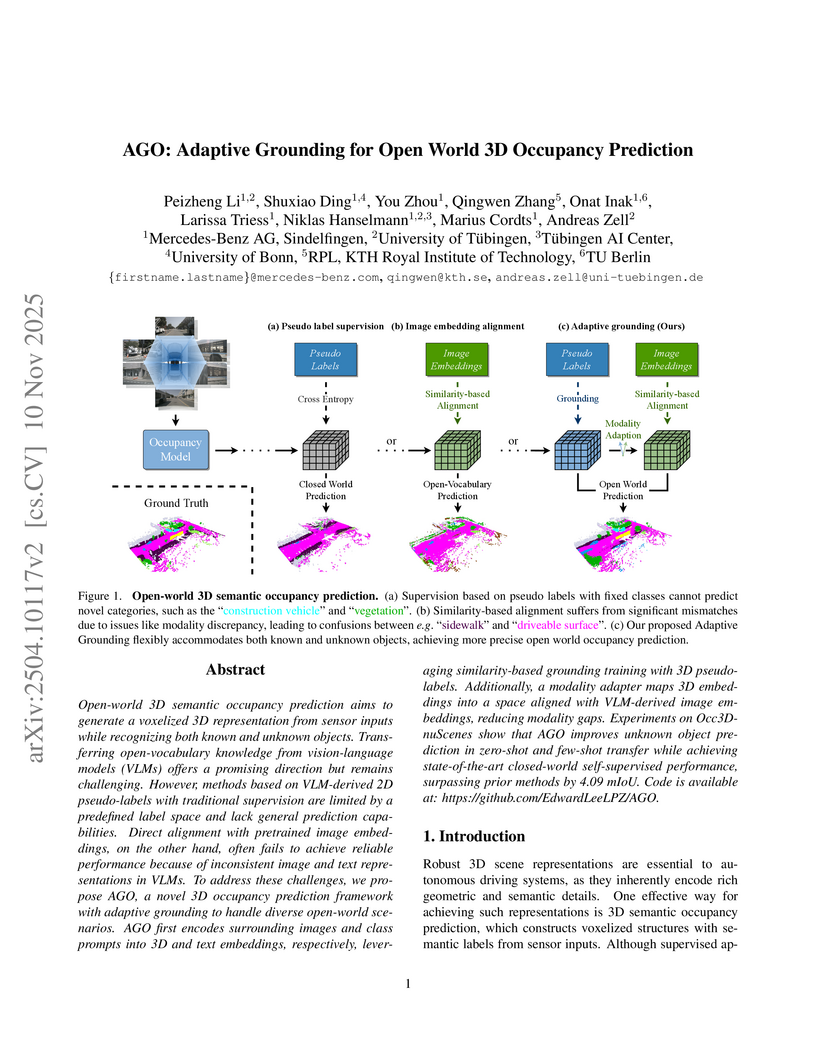

Mercedes-Benz AG and University of Tübingen researchers developed AGO, a framework for adaptive grounding in open-world 3D occupancy prediction, enabling recognition of both known and novel objects from multi-camera inputs. The model achieved a state-of-the-art 19.23 mIoU on the Occ3D-nuScenes benchmark, showing robust generalization to unknown categories and outperforming previous methods by 4.09 mIoU.
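For readers unfamiliar with the metric quoted above, mIoU averages the per-class intersection over union. The following is a minimal sketch on flat label lists. The function name `mean_iou` and the toy labels are illustrative assumptions and are not taken from AGO or from the Occ3D-nuScenes evaluation code, which may treat ignored or invisible voxels differently.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over the classes that occur in pred or target."""
    ious = []
    for c in range(num_classes):
        # Intersection: positions where both prediction and label are class c.
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        # Union: positions where either prediction or label is class c.
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both lists
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Hypothetical toy labels for six voxels and three classes.
pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 0]
score = mean_iou(pred, target, 3)  # per-class IoUs are 1/3, 2/3 and 1/2
```

Benchmarks usually report this value as a percentage, so a score of 0.1923 would correspond to 19.23 mIoU.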

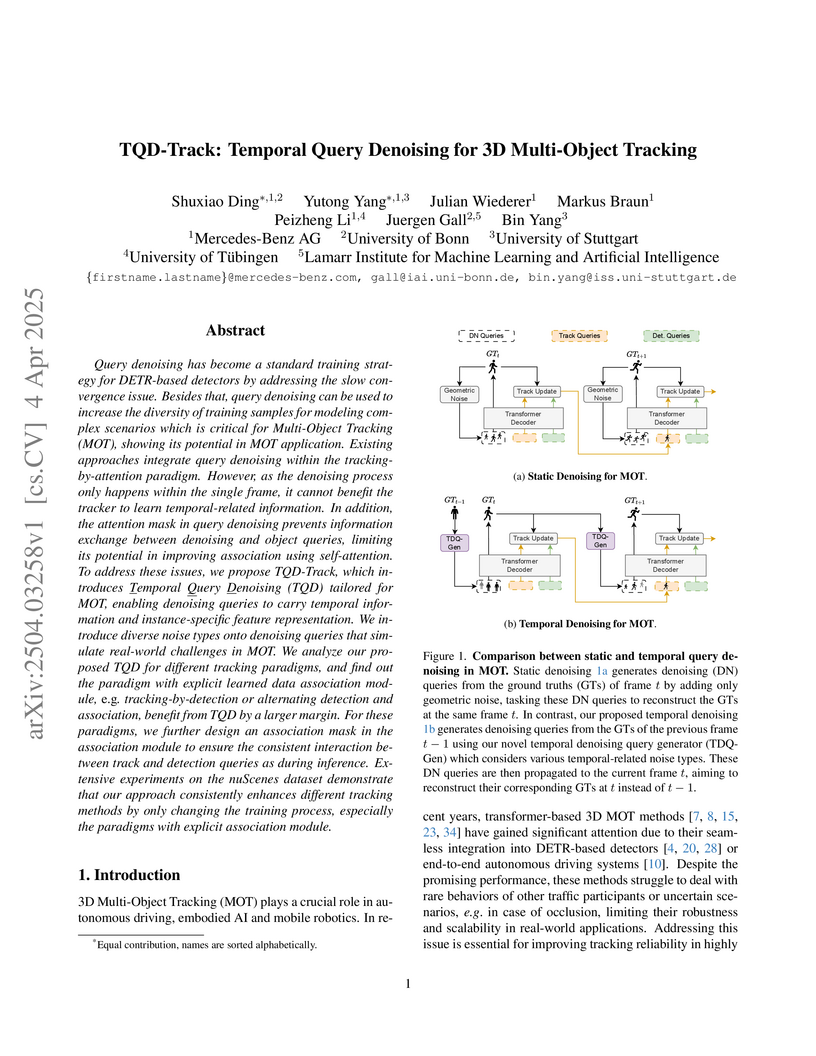

Query denoising has become a standard training strategy for DETR-based detectors because it addresses their slow convergence. In addition, query denoising can increase the diversity of training samples for modeling complex scenarios, which is critical for Multi-Object Tracking (MOT) and shows its potential for MOT applications. Existing approaches integrate query denoising within the tracking-by-attention paradigm. However, because the denoising process happens only within a single frame, it cannot help the tracker learn temporal information. In addition, the attention mask in query denoising prevents information exchange between denoising and object queries, limiting its potential to improve association through self-attention. To address these issues, we propose TQD-Track, which introduces Temporal Query Denoising (TQD) tailored for MOT, enabling denoising queries to carry temporal information and instance-specific feature representations. We apply diverse noise types to the denoising queries to simulate real-world challenges in MOT. We analyze the proposed TQD for different tracking paradigms and find that paradigms with an explicit learned data association module, e.g. tracking-by-detection or alternating detection and association, benefit from TQD by a larger margin. For these paradigms, we further design an association mask in the association module to ensure that track and detection queries interact in the same way as during inference. Extensive experiments on the nuScenes dataset demonstrate that our approach consistently enhances different tracking methods by changing only the training process, especially the paradigms with an explicit association module.
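The abstract does not specify which noise types TQD uses, so the following is only a rough sketch of the general idea of building denoising queries from the previous frame's ground truth. The two noise types shown (center jitter and random dropping), the function name `make_denoising_queries`, and all parameter values are assumptions for illustration, not the authors' implementation.

```python
import random


def make_denoising_queries(prev_boxes, shift_scale=0.5, drop_prob=0.2, seed=0):
    """Build noised queries from previous-frame ground-truth boxes.

    prev_boxes maps a track id to a box (x, y, width, length).
    Each surviving query keeps its track id, so a training loss could ask
    the model to recover the clean current-frame box of the same instance.
    """
    rng = random.Random(seed)
    queries = []
    for track_id, (x, y, w, l) in prev_boxes.items():
        # Assumed noise type 1: drop the query, imitating a lost track.
        if rng.random() < drop_prob:
            continue
        # Assumed noise type 2: jitter the center in proportion to box size,
        # imitating localization error or unmodeled motion.
        dx = rng.uniform(-shift_scale, shift_scale) * w
        dy = rng.uniform(-shift_scale, shift_scale) * l
        queries.append({"track_id": track_id, "box": (x + dx, y + dy, w, l)})
    return queries


# Hypothetical previous-frame ground truth with two tracked objects.
prev_boxes = {7: (10.0, 5.0, 2.0, 4.5), 9: (-3.0, 12.0, 1.8, 4.0)}
queries = make_denoising_queries(prev_boxes)
```

Because the queries originate in the previous frame and carry an instance identity, they differ from single-frame denoising, where noised boxes are matched only to targets in the same frame.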

SeFlow introduces a self-supervised method for 3D scene flow estimation in autonomous driving, addressing data imbalance and enforcing object-level motion consistency using external dynamic classification and novel loss functions. The approach achieves state-of-the-art self-supervised performance, often comparable to supervised methods, with real-time inference speed on Argoverse 2 and Waymo datasets.

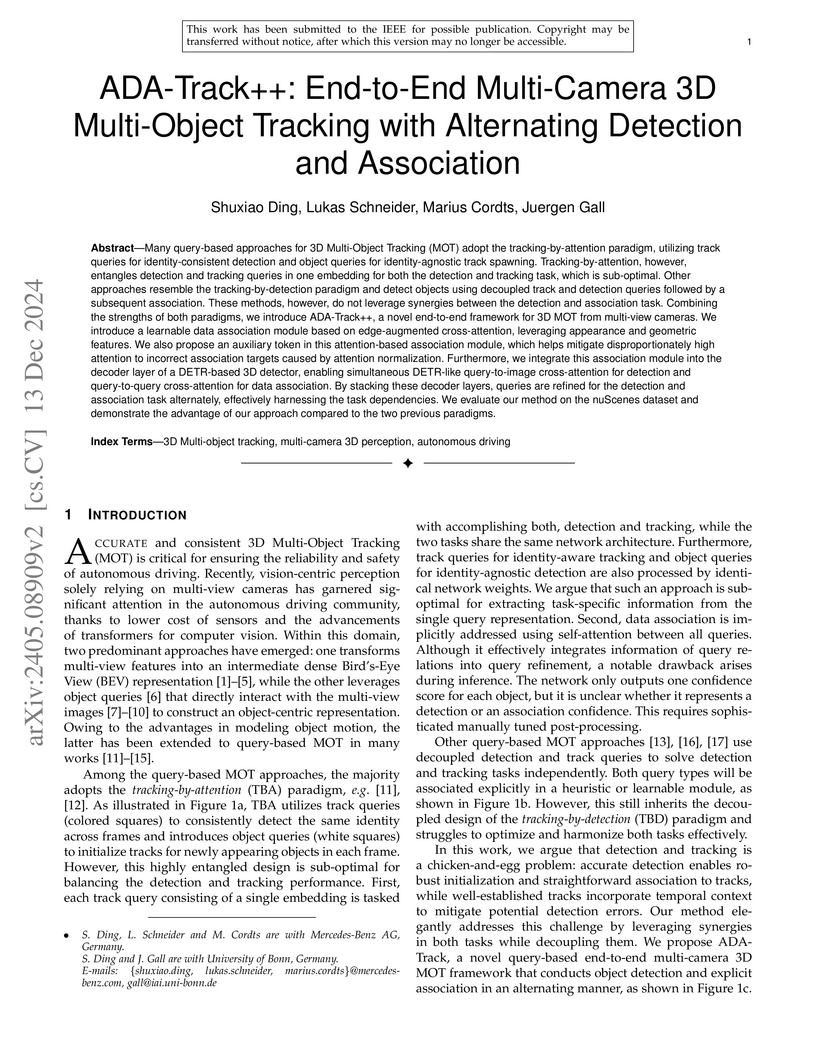

Many query-based approaches for 3D Multi-Object Tracking (MOT) adopt the tracking-by-attention paradigm, utilizing track queries for identity-consistent detection and object queries for identity-agnostic track spawning. Tracking-by-attention, however, entangles detection and tracking queries in one embedding for both the detection and tracking tasks, which is sub-optimal. Other approaches resemble the tracking-by-detection paradigm and detect objects using decoupled track and detection queries, followed by a subsequent association step. These methods, however, do not leverage synergies between the detection and association tasks. Combining the strengths of both paradigms, we introduce ADA-Track++, a novel end-to-end framework for 3D MOT from multi-view cameras. We introduce a learnable data association module based on edge-augmented cross-attention, leveraging appearance and geometric features. We also propose an auxiliary token in this attention-based association module, which helps mitigate disproportionately high attention to incorrect association targets caused by attention normalization. Furthermore, we integrate this association module into the decoder layer of a DETR-based 3D detector, enabling simultaneous DETR-like query-to-image cross-attention for detection and query-to-query cross-attention for data association. By stacking these decoder layers, queries are refined for the detection and association tasks alternately, effectively harnessing the task dependencies. We evaluate our method on the nuScenes dataset and demonstrate the advantage of our approach compared to the two previous paradigms.
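The normalization issue mentioned in the abstract can be illustrated with a small numeric sketch. Softmax forces attention weights to sum to one, so a track with no correct match still assigns all of its attention to wrong candidates. Adding one extra slot gives that attention somewhere else to go. The fixed auxiliary score of 0.0 and the function names below are assumptions for illustration; in ADA-Track++ the auxiliary token is part of a learned attention module whose details are not given here.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_with_aux(scores, aux_score=0.0):
    """Softmax over the candidates plus one auxiliary slot.

    Returns the weights on the real candidates and the weight absorbed
    by the auxiliary slot.
    """
    weights = softmax(scores + [aux_score])
    return weights[:-1], weights[-1]


# A track whose true match is missing: both candidate scores are low.
candidate_scores = [-4.0, -5.0]
plain = softmax(candidate_scores)
with_aux, aux_weight = attend_with_aux(candidate_scores)
```

In this toy case, plain softmax gives the better of two poor candidates most of the attention, while the version with the auxiliary slot leaves both candidates with only a small weight.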

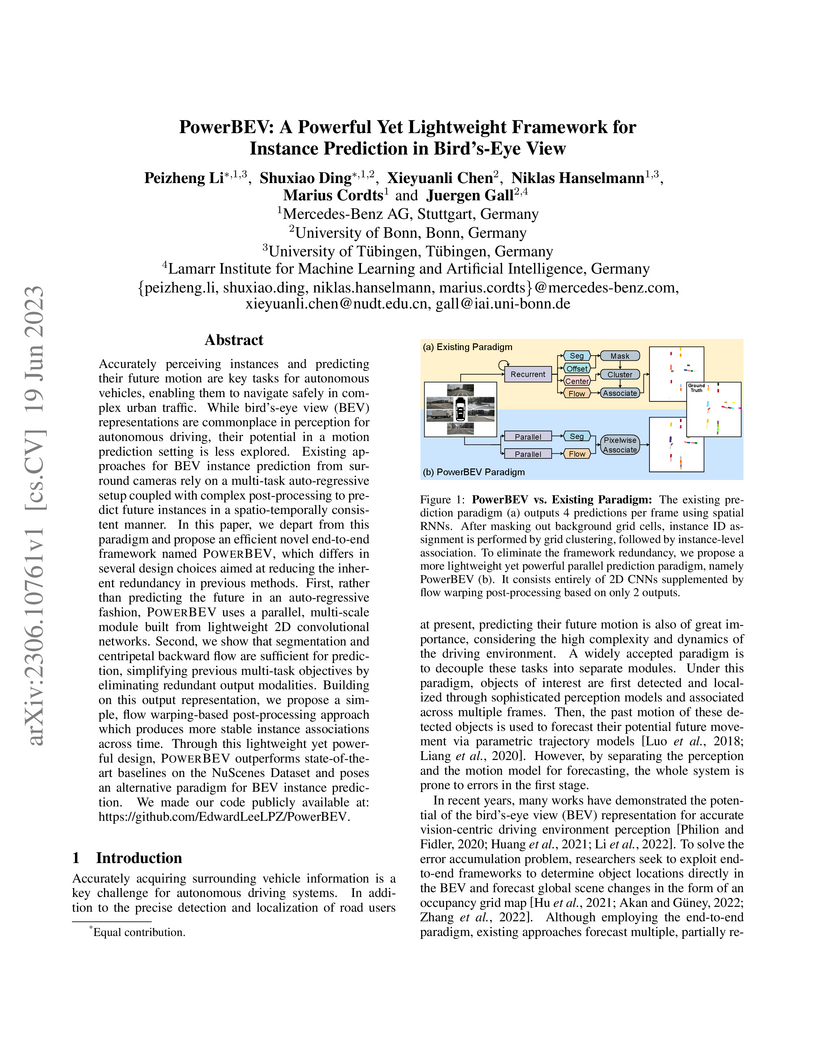

Accurately perceiving instances and predicting their future motion are key tasks for autonomous vehicles, enabling them to navigate safely in complex urban traffic. While bird's-eye view (BEV) representations are commonplace in perception for autonomous driving, their potential in a motion prediction setting is less explored. Existing approaches for BEV instance prediction from surround cameras rely on a multi-task auto-regressive setup coupled with complex post-processing to predict future instances in a spatio-temporally consistent manner. In this paper, we depart from this paradigm and propose an efficient novel end-to-end framework named POWERBEV, which differs in several design choices aimed at reducing the inherent redundancy in previous methods. First, rather than predicting the future in an auto-regressive fashion, POWERBEV uses a parallel, multi-scale module built from lightweight 2D convolutional networks. Second, we show that segmentation and centripetal backward flow are sufficient for prediction, simplifying previous multi-task objectives by eliminating redundant output modalities. Building on this output representation, we propose a simple, flow warping-based post-processing approach which produces more stable instance associations across time. Through this lightweight yet powerful design, POWERBEV outperforms state-of-the-art baselines on the NuScenes Dataset and poses an alternative paradigm for BEV instance prediction. We made our code publicly available at: this https URL.

24 Aug 2025

We present SEER-VAR, a novel framework for egocentric vehicle-based augmented reality (AR) that unifies semantic decomposition, Context-Aware SLAM Branches (CASB), and LLM-driven recommendation. Unlike existing systems that assume static or single-view settings, SEER-VAR dynamically separates cabin and road scenes via depth-guided vision-language grounding. Two SLAM branches track egocentric motion in each context, while a GPT-based module generates context-aware overlays such as dashboard cues and hazard alerts. To support evaluation, we introduce EgoSLAM-Drive, a real-world dataset featuring synchronized egocentric views, 6DoF ground-truth poses, and AR annotations across diverse driving scenarios. Experiments demonstrate that SEER-VAR achieves robust spatial alignment and perceptually coherent AR rendering across varied environments. As one of the first to explore LLM-based AR recommendation in egocentric driving, we address the lack of comparable systems through structured prompting and detailed user studies. Results show that SEER-VAR enhances perceived scene understanding, overlay relevance, and driver ease, providing an effective foundation for future research in this direction. Code and dataset will be made open source.

19 May 2025

Large language models (LLMs) have advanced the field of artificial intelligence (AI) and are a powerful enabler for interactive systems. However, they still face challenges in long-term interactions that require adaptation to the user as well as contextual knowledge and understanding of the ever-changing environment. To overcome these challenges, holistic memory modeling is required to efficiently retrieve and store relevant information across interaction sessions for suitable responses. Cognitive AI, which aims to simulate the human thought process in a computerized model, highlights interesting aspects, such as thoughts, memory mechanisms, and decision-making, that can contribute to improved memory modeling for LLMs. Inspired by these cognitive AI principles, we propose our memory framework CAIM. CAIM consists of three modules: (1) the Memory Controller, which acts as the central decision unit; (2) the Memory Retrieval, which filters relevant data for interaction upon request; and (3) the Post-Thinking, which maintains the memory storage. We compare CAIM against existing approaches, focusing on metrics such as retrieval accuracy, response correctness, contextual coherence, and memory storage. The results demonstrate that CAIM outperforms baseline frameworks across different metrics, highlighting its context-awareness and potential to improve long-term human-AI interactions.

A framework uses evidential deep learning to quantify both positional and mode probability uncertainty in multi-agent trajectory prediction for autonomous vehicles in a single forward pass. It demonstrates improved prediction accuracy and calibration while enabling uncertainty-driven data sampling that outperforms training on full datasets.

Safe and interpretable motion planning in complex urban environments needs to reason about bidirectional multi-agent interactions. This reasoning requires estimating the costs of potential ego driving maneuvers. Many existing planners generate initial trajectories with sampling-based methods and refine them by optimizing on learned predictions of future environment states, which requires a cost function that encodes the desired vehicle behavior. Designing such a cost function can be very challenging, especially if a wide range of complex urban scenarios has to be considered. We propose HYPE: HYbrid Planning with Ego proposal-conditioned predictions, a planner that integrates multimodal trajectory proposals from a learned proposal model as heuristic priors into a Monte Carlo Tree Search (MCTS) refinement. To model bidirectional interactions, we introduce an ego-conditioned occupancy prediction model, enabling consistent, scene-aware reasoning. Our design significantly simplifies cost function design in refinement by relying on proposal-driven guidance, requiring only minimalistic grid-based cost terms. Evaluations on the large-scale real-world benchmarks nuPlan and DeepUrban show that HYPE achieves state-of-the-art performance, especially in safety and adaptability.

Surrogate Gradient learning for ReLU (SUGAR) addresses the "dying ReLU" problem by preserving ReLU's forward pass while modifying gradients during backpropagation via a surrogate function. This approach improved VGG-16 accuracy on CIFAR-100 from 48.73% to 64.47% and ResNet-18 accuracy from 48.99% to 56.51% with the B-SiLU surrogate.
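The mechanism described above, an unchanged ReLU forward pass combined with a substituted gradient in the backward pass, can be sketched for a single scalar activation. The definition of B-SiLU is not given here, so this sketch uses the derivative of plain SiLU, x·σ(x), as a stand-in surrogate. That substitution and all function names are assumptions for illustration, not the SUGAR implementation.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def relu_forward(x):
    """Forward pass: ordinary ReLU, left unchanged."""
    return max(0.0, x)


def relu_grad(x):
    """True ReLU derivative: zero for every non-positive input."""
    return 1.0 if x > 0 else 0.0


def silu_grad(x):
    """Derivative of SiLU(x) = x * sigmoid(x), used as a stand-in surrogate."""
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


def surrogate_backward(x, upstream_grad):
    """Backward pass: scale the upstream gradient by the surrogate derivative."""
    return upstream_grad * silu_grad(x)


x = -1.0
out = relu_forward(x)                      # output stays exactly zero
true_grad = relu_grad(x)                   # zero: the unit receives no update
surr_grad = surrogate_backward(x, 1.0)     # non-zero: gradient still flows
```

In an autograd framework the same idea would typically be written as a custom function with separate forward and backward definitions, so that the network's outputs stay identical to a ReLU network while inactive units can still receive updates.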

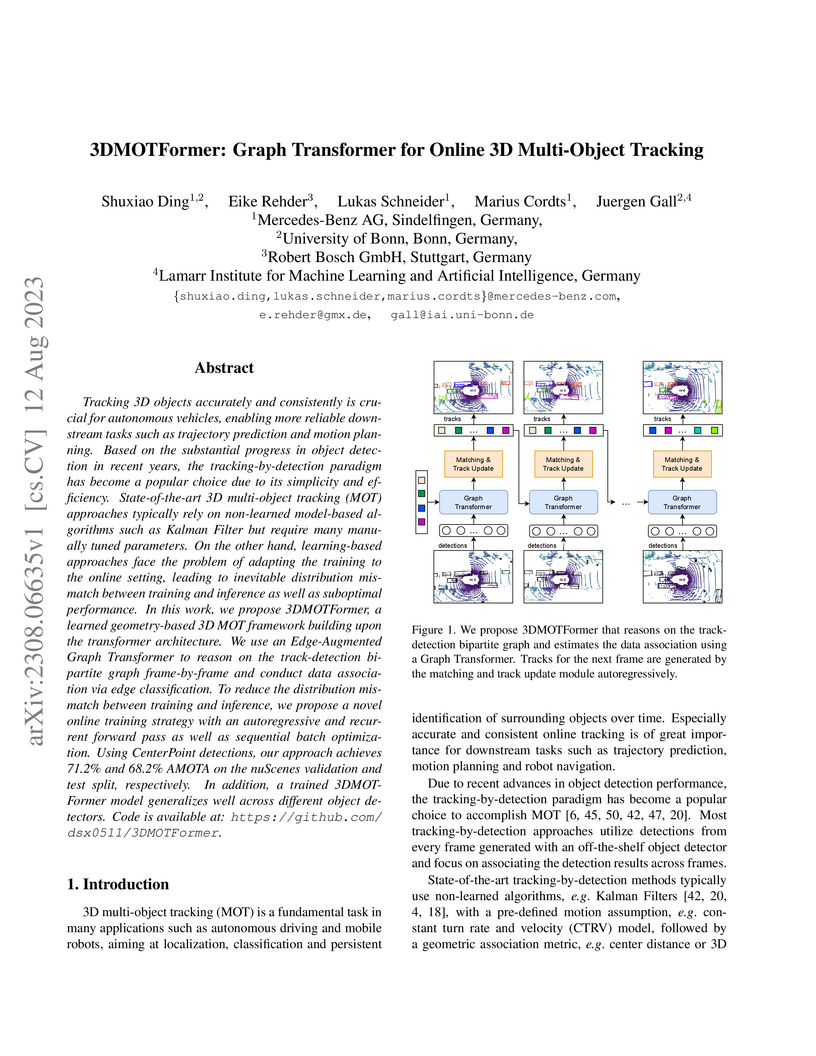

Researchers from Mercedes-Benz AG, Robert Bosch GmbH, and the University of Bonn developed 3DMOTFormer, a 3D Multi-Object Tracking framework that employs Edge-Augmented Graph Transformers and an online training strategy to learn object associations directly from data. This approach achieves superior tracking accuracy on the nuScenes dataset, operating at 54.7 Hz for real-time autonomous driving applications.

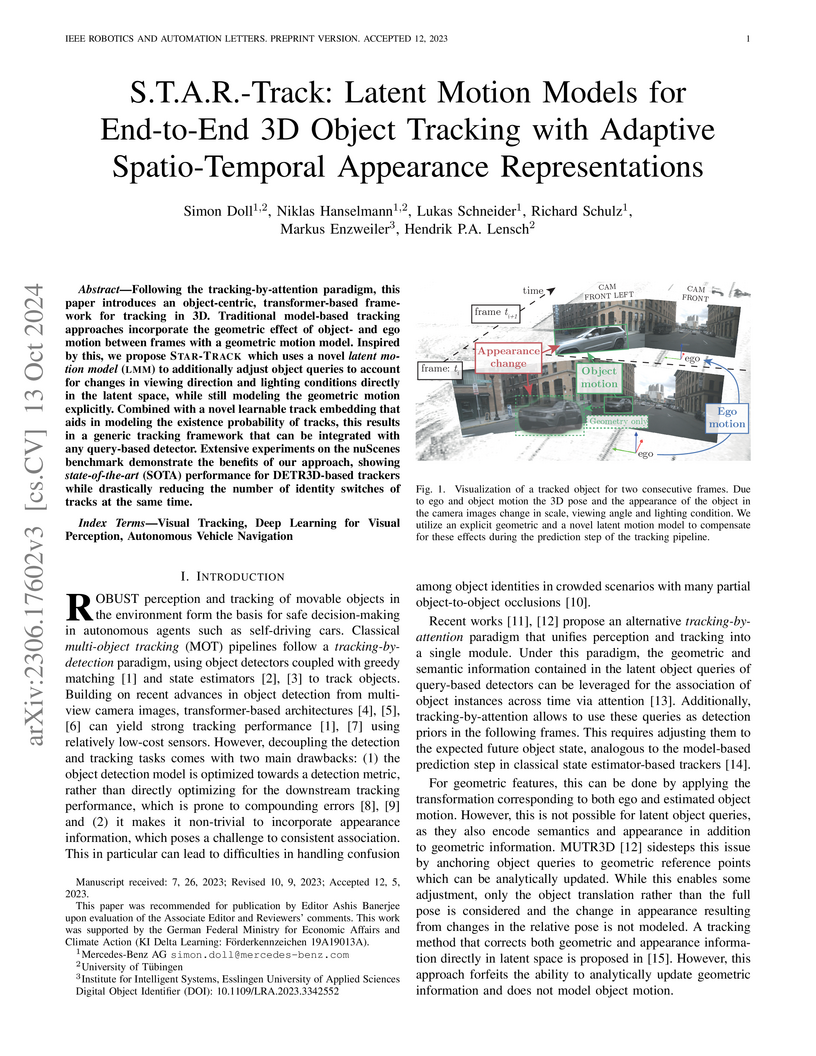

Following the tracking-by-attention paradigm, this paper introduces an object-centric, transformer-based framework for tracking in 3D. Traditional model-based tracking approaches incorporate the geometric effect of object and ego motion between frames with a geometric motion model. Inspired by this, we propose S.T.A.R.-Track, which uses a novel latent motion model (LMM) to additionally adjust object queries to account for changes in viewing direction and lighting conditions directly in the latent space, while still modeling the geometric motion explicitly. Combined with a novel learnable track embedding that aids in modeling the existence probability of tracks, this results in a generic tracking framework that can be integrated with any query-based detector. Extensive experiments on the nuScenes benchmark demonstrate the benefits of our approach, showing state-of-the-art performance for DETR3D-based trackers while drastically reducing the number of identity switches of tracks.

This work from the University of Göttingen proposes a three-stage, LLM-based, Retrieval Augmented Generation (RAG) pipeline for abstractive multi-source meeting summarization. It also introduces a method for personalizing summaries based on participant personas, demonstrating improved summary quality, factuality, and relevance while exploring the practical viability of various LLM families.

In the framework of the hybrid quantum-classical variational cluster approach (VCA) to strongly correlated electron systems, one of the goals of a quantum subroutine is to find single-particle correlation functions of lattice fermions in polynomial time. Previous works suggested using variants of the Hadamard test for this purpose, which requires an implementation of controlled single-particle fermionic operators. However, for a number of locality-preserving mappings that encode fermions into qubits, a direct construction of such operators is not possible. In this work, we propose a new quantum algorithm, which uses an analog of the Kubo formula adapted to a quantum circuit simulating the Hubbard model. It provides direct access to the Green's function of a cluster using only bilinears of fermionic operators and circumvents the use of the Hadamard test. We test our new algorithm in practice using open-access simulators of noisy IBM superconducting chips.

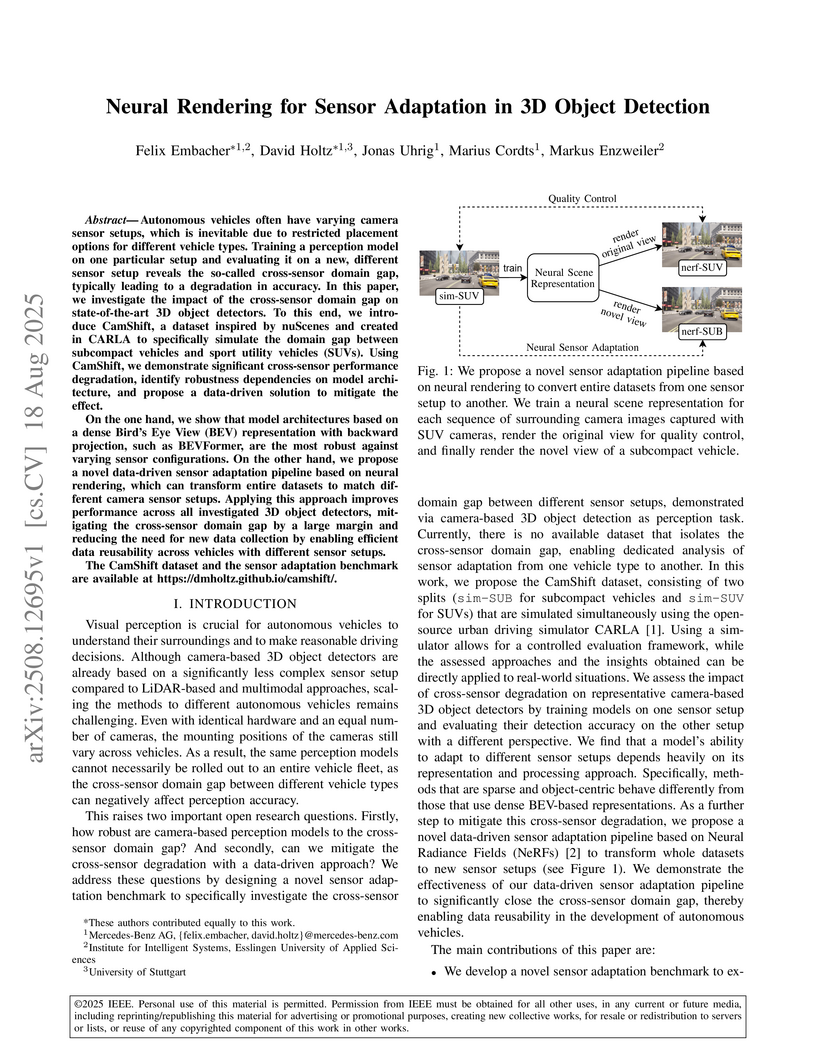

Autonomous vehicles often have varying camera sensor setups, which is inevitable due to restricted placement options for different vehicle types. Training a perception model on one particular setup and evaluating it on a new, different sensor setup reveals the so-called cross-sensor domain gap, typically leading to a degradation in accuracy. In this paper, we investigate the impact of the cross-sensor domain gap on state-of-the-art 3D object detectors. To this end, we introduce CamShift, a dataset inspired by nuScenes and created in CARLA to specifically simulate the domain gap between subcompact vehicles and sport utility vehicles (SUVs). Using CamShift, we demonstrate significant cross-sensor performance degradation, identify robustness dependencies on model architecture, and propose a data-driven solution to mitigate the effect. On the one hand, we show that model architectures based on a dense Bird's Eye View (BEV) representation with backward projection, such as BEVFormer, are the most robust against varying sensor configurations. On the other hand, we propose a novel data-driven sensor adaptation pipeline based on neural rendering, which can transform entire datasets to match different camera sensor setups. Applying this approach improves performance across all investigated 3D object detectors, mitigating the cross-sensor domain gap by a large margin and reducing the need for new data collection by enabling efficient data reusability across vehicles with different sensor setups. The CamShift dataset and the sensor adaptation benchmark are available at this https URL.

Knowledge Distillation (KD) is a well-known training paradigm in deep neural networks where knowledge acquired by a large teacher model is transferred to a small student. KD has proven to be an effective technique to significantly improve the student's performance for various tasks including object detection. KD techniques mostly rely on guidance at the intermediate feature level, which is typically implemented by minimizing an lp-norm distance between teacher and student activations during training. In this paper, we propose a replacement for the pixel-wise independent lp-norm based on the structural similarity (SSIM). By taking into account additional contrast and structural cues, the loss formulation considers feature importance, correlation, and spatial dependence in the feature space. Extensive experiments on MSCOCO demonstrate the effectiveness of our method across different training schemes and architectures. Our method adds little computational overhead, is straightforward to implement, and significantly outperforms the standard lp-norms. Moreover, it outperforms more complex state-of-the-art KD methods that use attention-based sampling mechanisms, with a +3.5 AP gain using a Faster R-CNN R-50 compared to a vanilla model.
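To make the contrast with an element-wise lp-norm concrete, the following sketch computes the standard SSIM index between two flattened feature vectors and turns it into a loss. Treating the whole vector as one window, the constants `c1` and `c2`, and the function names are simplifying assumptions; the paper's loss is defined on feature maps and its exact windowing and normalization are not given here.

```python
def ssim(x, y, c1=1e-4, c2=9e-4):
    """Global SSIM between two equally long lists of activations."""
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    # Luminance term compares means; contrast/structure term compares
    # variances and covariance, which an element-wise norm cannot see.
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def ssim_loss(student, teacher):
    """Distillation loss: zero when student and teacher features agree."""
    return 1.0 - ssim(student, teacher)


# Hypothetical flattened activations from one feature channel.
teacher = [0.1, 0.9, 0.1, 0.9]
same = ssim_loss(teacher, teacher)
flipped = ssim_loss([0.9, 0.1, 0.9, 0.1], teacher)
```

The flipped student has the same mean and variance as the teacher but the opposite spatial pattern, so the covariance term turns negative and the loss exceeds 1.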

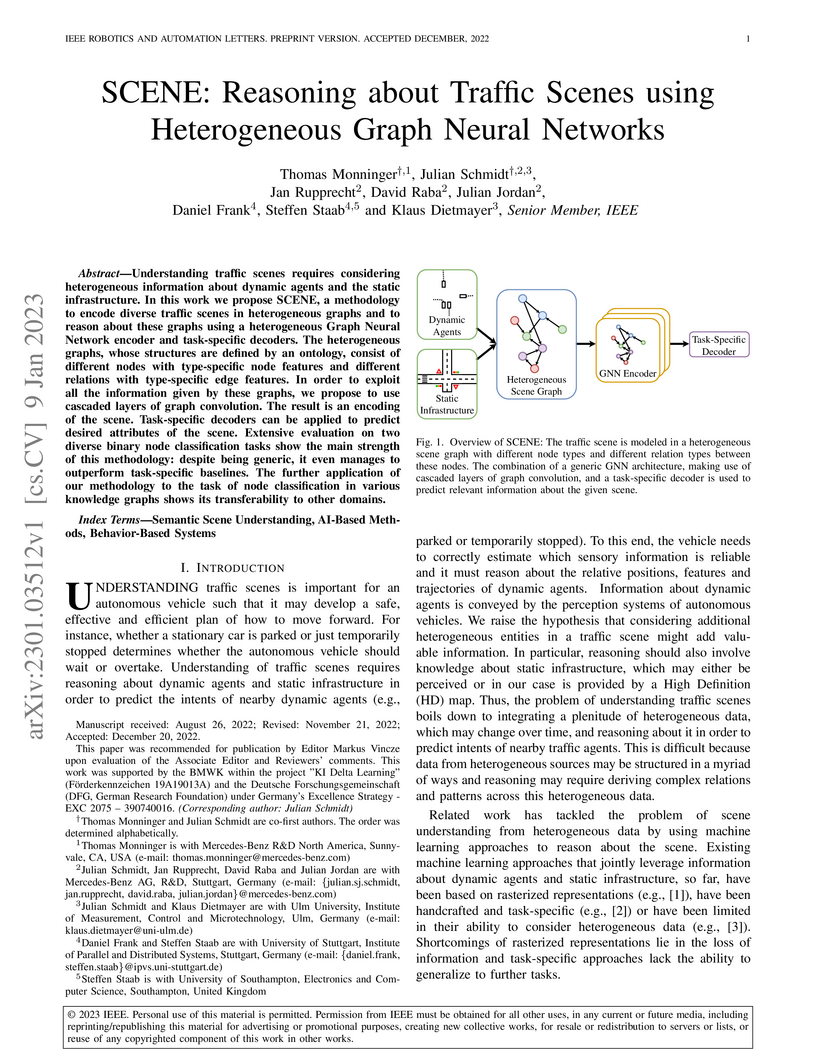

A heterogeneous graph neural network, SCENE, models traffic scenes by integrating dynamic agents and static infrastructure to reason about complex relationships. This approach achieves higher F1-scores on tasks such as classifying parked vehicles (91.17%) and identifying false positive agent detections (80.56%), while also offering an order of magnitude lower computational complexity than prior methods and demonstrating transferability to knowledge graph tasks.

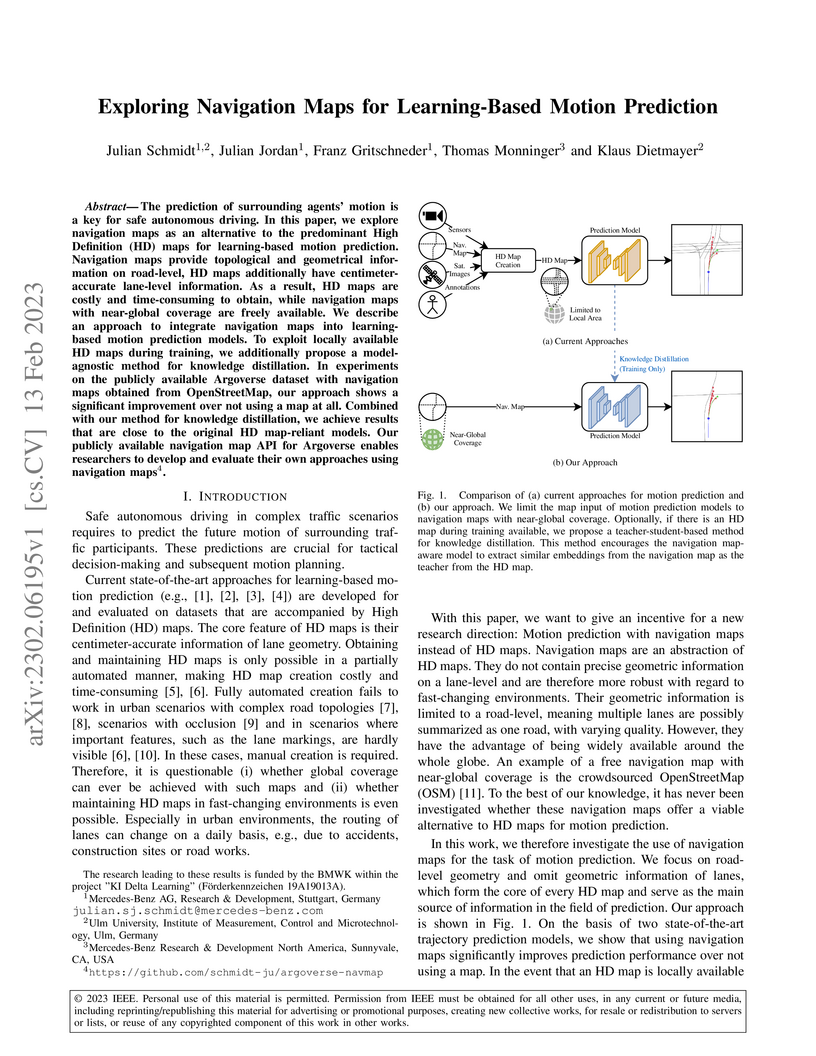

The prediction of surrounding agents' motion is key to safe autonomous driving. In this paper, we explore navigation maps as an alternative to the predominant High Definition (HD) maps for learning-based motion prediction. Navigation maps provide topological and geometrical information at road level, whereas HD maps additionally provide centimeter-accurate lane-level information. As a result, HD maps are costly and time-consuming to obtain, while navigation maps with near-global coverage are freely available. We describe an approach to integrate navigation maps into learning-based motion prediction models. To exploit locally available HD maps during training, we additionally propose a model-agnostic method for knowledge distillation. In experiments on the publicly available Argoverse dataset with navigation maps obtained from OpenStreetMap, our approach shows a significant improvement over not using a map at all. Combined with our method for knowledge distillation, we achieve results that are close to the original HD map-reliant models. Our publicly available navigation map API for Argoverse enables researchers to develop and evaluate their own approaches using navigation maps.

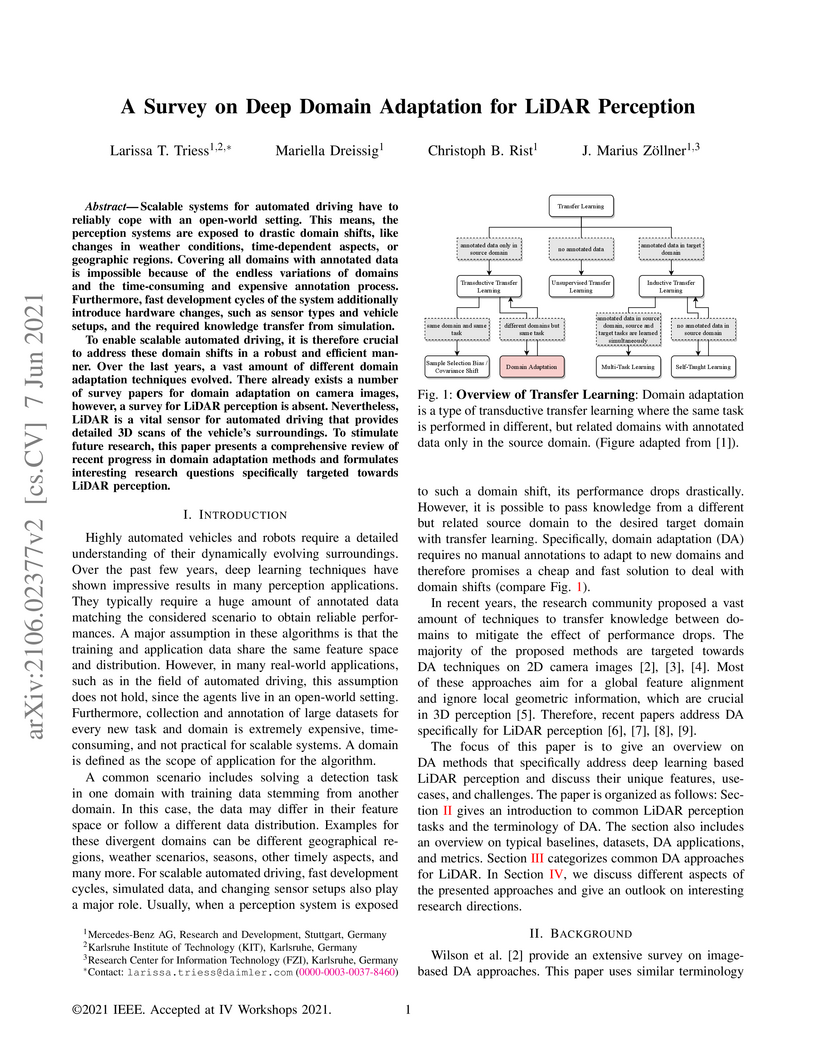

Scalable systems for automated driving have to reliably cope with an open-world setting. This means that perception systems are exposed to drastic domain shifts, such as changes in weather conditions, time-dependent aspects, or geographic regions. Covering all domains with annotated data is impossible because of the endless variations of domains and the time-consuming and expensive annotation process. Furthermore, fast development cycles of the system introduce hardware changes, such as sensor types and vehicle setups, and require knowledge transfer from simulation. To enable scalable automated driving, it is therefore crucial to address these domain shifts in a robust and efficient manner. In recent years, a vast number of domain adaptation techniques have evolved. A number of survey papers already exist for domain adaptation on camera images; however, a survey for LiDAR perception is absent. Nevertheless, LiDAR is a vital sensor for automated driving that provides detailed 3D scans of the vehicle's surroundings. To stimulate future research, this paper presents a comprehensive review of recent progress in domain adaptation methods and formulates interesting research questions specifically targeted towards LiDAR perception.

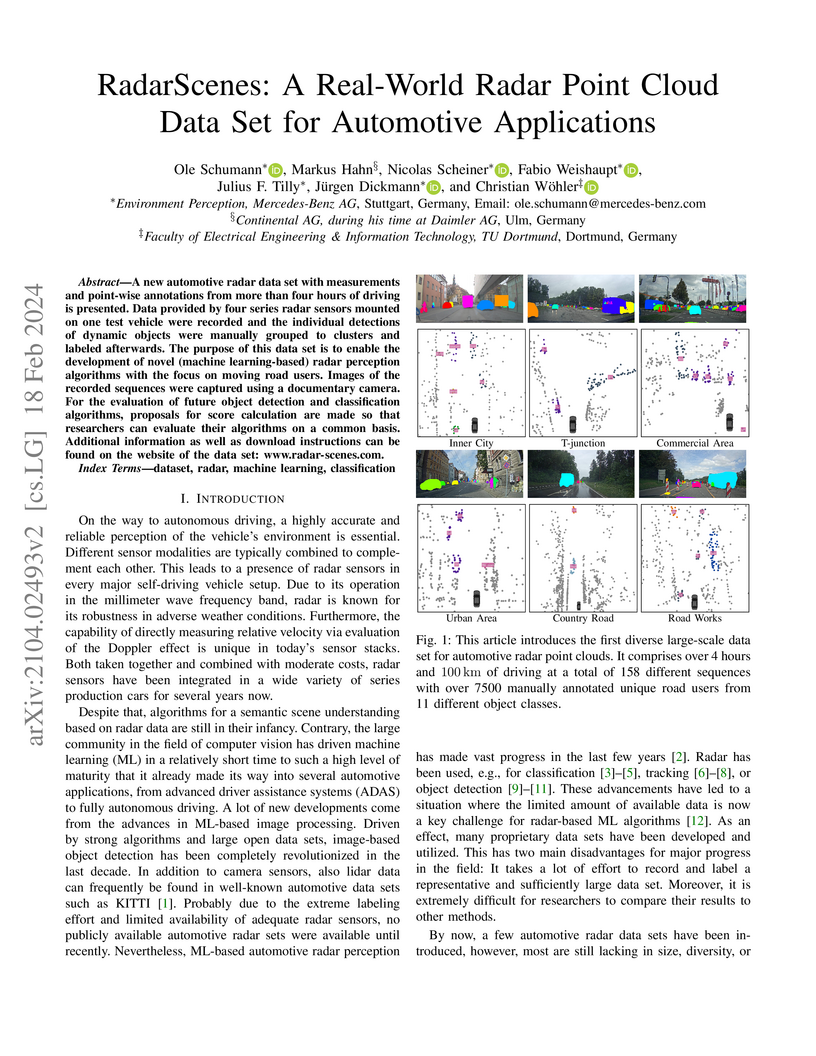

A new automotive radar data set with measurements and point-wise annotations from more than four hours of driving is presented. Data provided by four series radar sensors mounted on one test vehicle were recorded, and the individual detections of dynamic objects were then manually grouped into clusters and labeled. The purpose of this data set is to enable the development of novel (machine learning-based) radar perception algorithms with the focus on moving road users. Images of the recorded sequences were captured using a documentary camera. For the evaluation of future object detection and classification algorithms, proposals for score calculation are made so that researchers can evaluate their algorithms on a common basis. Additional information as well as download instructions can be found on the website of the data set: this http URL.
