NCSoft

We introduce VARCO-VISION-2.0, an open-weight bilingual vision-language model (VLM) for Korean and English with improved capabilities compared to the previous model VARCO-VISION-14B. The model supports multi-image understanding for complex inputs such as documents, charts, and tables, and delivers layoutaware OCR by predicting both textual content and its spatial location. Trained with a four-stage curriculum with memory-efficient techniques, the model achieves enhanced multimodal alignment, while preserving core language abilities and improving safety via preference optimization. Extensive benchmark evaluations demonstrate strong spatial grounding and competitive results for both languages, with the 14B model achieving 8th place on the OpenCompass VLM leaderboard among models of comparable scale. Alongside the 14B-scale model, we release a 1.7B version optimized for on-device deployment. We believe these models advance the development of bilingual VLMs and their practical applications. Two variants of VARCO-VISION-2.0 are available at Hugging Face: a full-scale 14B model and a lightweight 1.7B model.

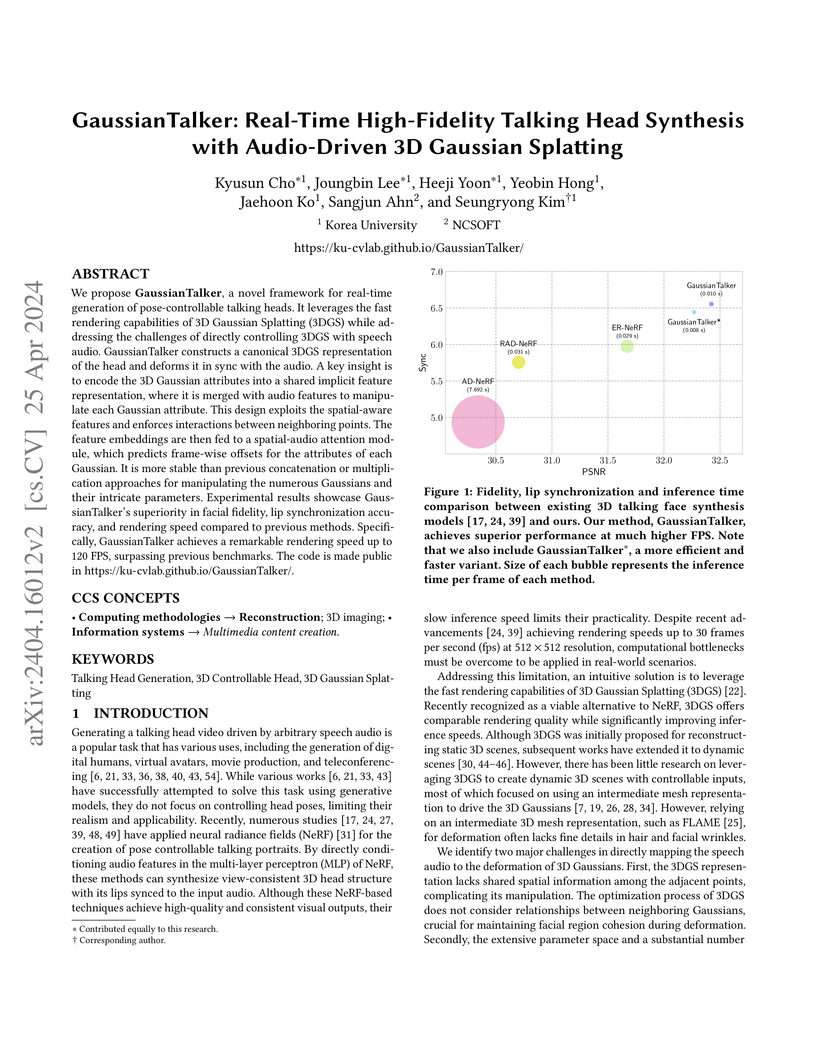

We propose GaussianTalker, a novel framework for real-time generation of pose-controllable talking heads. It leverages the fast rendering capabilities of 3D Gaussian Splatting (3DGS) while addressing the challenges of directly controlling 3DGS with speech audio. GaussianTalker constructs a canonical 3DGS representation of the head and deforms it in sync with the audio. A key insight is to encode the 3D Gaussian attributes into a shared implicit feature representation, where it is merged with audio features to manipulate each Gaussian attribute. This design exploits the spatial-aware features and enforces interactions between neighboring points. The feature embeddings are then fed to a spatial-audio attention module, which predicts frame-wise offsets for the attributes of each Gaussian. It is more stable than previous concatenation or multiplication approaches for manipulating the numerous Gaussians and their intricate parameters. Experimental results showcase GaussianTalker's superiority in facial fidelity, lip synchronization accuracy, and rendering speed compared to previous methods. Specifically, GaussianTalker achieves a remarkable rendering speed up to 120 FPS, surpassing previous benchmarks. Our code is made available at this https URL .

Recent advancements in Large Language Models (LLMs) have led to their

adaptation in various domains as conversational agents. We wonder: can

personality tests be applied to these agents to analyze their behavior, similar

to humans? We introduce TRAIT, a new benchmark consisting of 8K multi-choice

questions designed to assess the personality of LLMs. TRAIT is built on two

psychometrically validated small human questionnaires, Big Five Inventory (BFI)

and Short Dark Triad (SD-3), enhanced with the ATOMIC-10X knowledge graph to a

variety of real-world scenarios. TRAIT also outperforms existing personality

tests for LLMs in terms of reliability and validity, achieving the highest

scores across four key metrics: Content Validity, Internal Validity, Refusal

Rate, and Reliability. Using TRAIT, we reveal two notable insights into

personalities of LLMs: 1) LLMs exhibit distinct and consistent personality,

which is highly influenced by their training data (e.g., data used for

alignment tuning), and 2) current prompting techniques have limited

effectiveness in eliciting certain traits, such as high psychopathy or low

conscientiousness, suggesting the need for further research in this direction.

Reinforcement learning combined with deep neural networks has performed remarkably well in many genres of games recently. It has surpassed human-level performance in fixed game environments and turn-based two player board games. However, to the best of our knowledge, current research has yet to produce a result that has surpassed human-level performance in modern complex fighting games. This is due to the inherent difficulties with real-time fighting games, including: vast action spaces, action dependencies, and imperfect information. We overcame these challenges and made 1v1 battle AI agents for the commercial game "Blade & Soul". The trained agents competed against five professional gamers and achieved a win rate of 62%. This paper presents a practical reinforcement learning method that includes a novel self-play curriculum and data skipping techniques. Through the curriculum, three different styles of agents were created by reward shaping and were trained against each other. Additionally, this paper suggests data skipping techniques that could increase data efficiency and facilitate explorations in vast spaces. Since our method can be generally applied to all two-player competitive games with vast action spaces, we anticipate its application to game development including level design and automated balancing.

NC Research from NCSOFT introduced VARCO-VISION-14B, an open-source Korean-English Vision-Language Model, alongside five new Korean multimodal evaluation benchmarks. The model consistently outperforms other open-source VLMs of similar scale on both Korean and English tasks, showing competitive results against proprietary models and strong capabilities in text-only and OCR functionalities.

We propose a novel method for unsupervised image-to-image translation, which incorporates a new attention module and a new learnable normalization function in an end-to-end manner. The attention module guides our model to focus on more important regions distinguishing between source and target domains based on the attention map obtained by the auxiliary classifier. Unlike previous attention-based method which cannot handle the geometric changes between domains, our model can translate both images requiring holistic changes and images requiring large shape changes. Moreover, our new AdaLIN (Adaptive Layer-Instance Normalization) function helps our attention-guided model to flexibly control the amount of change in shape and texture by learned parameters depending on datasets. Experimental results show the superiority of the proposed method compared to the existing state-of-the-art models with a fixed network architecture and hyper-parameters. Our code and datasets are available at this https URL or this https URL.

The task of predicting future actions from a video is crucial for a

real-world agent interacting with others. When anticipating actions in the

distant future, we humans typically consider long-term relations over the whole

sequence of actions, i.e., not only observed actions in the past but also

potential actions in the future. In a similar spirit, we propose an end-to-end

attention model for action anticipation, dubbed Future Transformer (FUTR), that

leverages global attention over all input frames and output tokens to predict a

minutes-long sequence of future actions. Unlike the previous autoregressive

models, the proposed method learns to predict the whole sequence of future

actions in parallel decoding, enabling more accurate and fast inference for

long-term anticipation. We evaluate our method on two standard benchmarks for

long-term action anticipation, Breakfast and 50 Salads, achieving

state-of-the-art results.

13 Oct 2024

In the financial sector, a sophisticated financial time series simulator is essential for evaluating financial products and investment strategies. Traditional back-testing methods have mainly relied on historical data-driven approaches or mathematical model-driven approaches, such as various stochastic processes. However, in the current era of AI, data-driven approaches, where models learn the intrinsic characteristics of data directly, have emerged as promising techniques. Generative Adversarial Networks (GANs) have surfaced as promising generative models, capturing data distributions through adversarial learning. Financial time series, characterized 'stylized facts' such as random walks, mean-reverting patterns, unexpected jumps, and time-varying volatility, present significant challenges for deep neural networks to learn their intrinsic characteristics. This study examines the ability of GANs to learn diverse and complex temporal patterns (i.e., stylized facts) of both univariate and multivariate financial time series. Our extensive experiments revealed that GANs can capture various stylized facts of financial time series, but their performance varies significantly depending on the choice of generator architecture. This suggests that naively applying GANs might not effectively capture the intricate characteristics inherent in financial time series, highlighting the importance of carefully considering and validating the modeling choices.

While piano music has become a significant area of study in Music Information

Retrieval (MIR), there is a notable lack of datasets for piano solo music with

text labels. To address this gap, we present PIAST (PIano dataset with Audio,

Symbolic, and Text), a piano music dataset. Utilizing a piano-specific taxonomy

of semantic tags, we collected 9,673 tracks from YouTube and added human

annotations for 2,023 tracks by music experts, resulting in two subsets:

PIAST-YT and PIAST-AT. Both include audio, text, tag annotations, and

transcribed MIDI utilizing state-of-the-art piano transcription and beat

tracking models. Among many possible tasks with the multi-modal dataset, we

conduct music tagging and retrieval using both audio and MIDI data and report

baseline performances to demonstrate its potential as a valuable resource for

MIR research.

Text-to-texture generation has recently attracted increasing attention, but existing methods often suffer from the problems of view inconsistencies, apparent seams, and misalignment between textures and the underlying mesh. In this paper, we propose a robust text-to-texture method for generating consistent and seamless textures that are well aligned with the mesh. Our method leverages state-of-the-art 2D diffusion models, including SDXL and multiple ControlNets, to capture structural features and intricate details in the generated textures. The method also employs a symmetrical view synthesis strategy combined with regional prompts for enhancing view consistency. Additionally, it introduces novel texture blending and soft-inpainting techniques, which significantly reduce the seam regions. Extensive experiments demonstrate that our method outperforms existing state-of-the-art methods.

Domain specificity of embedding models is critical for effective performance.

However, existing benchmarks, such as FinMTEB, are primarily designed for

high-resource languages, leaving low-resource settings, such as Korean,

under-explored. Directly translating established English benchmarks often fails

to capture the linguistic and cultural nuances present in low-resource domains.

In this paper, titled TWICE: What Advantages Can Low-Resource Domain-Specific

Embedding Models Bring? A Case Study on Korea Financial Texts, we introduce

KorFinMTEB, a novel benchmark for the Korean financial domain, specifically

tailored to reflect its unique cultural characteristics in low-resource

languages. Our experimental results reveal that while the models perform

robustly on a translated version of FinMTEB, their performance on KorFinMTEB

uncovers subtle yet critical discrepancies, especially in tasks requiring

deeper semantic understanding, that underscore the limitations of direct

translation. This discrepancy highlights the necessity of benchmarks that

incorporate language-specific idiosyncrasies and cultural nuances. The insights

from our study advocate for the development of domain-specific evaluation

frameworks that can more accurately assess and drive the progress of embedding

models in low-resource settings.

This paper presents our participation in the FinNLP-2023 shared task on multi-lingual environmental, social, and corporate governance issue identification (ML-ESG). The task's objective is to classify news articles based on the 35 ESG key issues defined by the MSCI ESG rating guidelines. Our approach focuses on the English and French subtasks, employing the CerebrasGPT, OPT, and Pythia models, along with the zero-shot and GPT3Mix Augmentation techniques. We utilize various encoder models, such as RoBERTa, DeBERTa, and FinBERT, subjecting them to knowledge distillation and additional training.

Our approach yielded exceptional results, securing the first position in the English text subtask with F1-score 0.69 and the second position in the French text subtask with F1-score 0.78. These outcomes underscore the effectiveness of our methodology in identifying ESG issues in news articles across different languages. Our findings contribute to the exploration of ESG topics and highlight the potential of leveraging advanced language models for ESG issue identification.

The advent of scalable deep models and large datasets has improved the

performance of Neural Machine Translation. Knowledge Distillation (KD) enhances

efficiency by transferring knowledge from a teacher model to a more compact

student model. However, KD approaches to Transformer architecture often rely on

heuristics, particularly when deciding which teacher layers to distill from. In

this paper, we introduce the 'Align-to-Distill' (A2D) strategy, designed to

address the feature mapping problem by adaptively aligning student attention

heads with their teacher counterparts during training. The Attention Alignment

Module in A2D performs a dense head-by-head comparison between student and

teacher attention heads across layers, turning the combinatorial mapping

heuristics into a learning problem. Our experiments show the efficacy of A2D,

demonstrating gains of up to +3.61 and +0.63 BLEU points for WMT-2022 De->Dsb

and WMT-2014 En->De, respectively, compared to Transformer baselines.

Humans usually have conversations by making use of prior knowledge about a topic and background information of the people whom they are talking to. However, existing conversational agents and datasets do not consider such comprehensive information, and thus they have a limitation in generating the utterances where the knowledge and persona are fused properly. To address this issue, we introduce a call For Customized conversation (FoCus) dataset where the customized answers are built with the user's persona and Wikipedia knowledge. To evaluate the abilities to make informative and customized utterances of pre-trained language models, we utilize BART and GPT-2 as well as transformer-based models. We assess their generation abilities with automatic scores and conduct human evaluations for qualitative results. We examine whether the model reflects adequate persona and knowledge with our proposed two sub-tasks, persona grounding (PG) and knowledge grounding (KG). Moreover, we show that the utterances of our data are constructed with the proper knowledge and persona through grounding quality assessment.

Researchers at NCSOFT's Speech AI Lab and Handong Global University developed VocGAN, a neural vocoder that addresses quality limitations of fast vocoders like MelGAN by introducing a hierarchically-nested adversarial network and joint conditional/unconditional loss. It achieves a MOS of 4.202, outperforming MelGAN (3.898) and Parallel WaveGAN (4.098), while maintaining 3.24x real-time inference on CPU.

Current texture synthesis methods, which generate textures from fixed viewpoints, suffer from inconsistencies due to the lack of global context and geometric understanding. Meanwhile, recent advancements in video generation models have demonstrated remarkable success in achieving temporally consistent videos. In this paper, we introduce VideoTex, a novel framework for seamless texture synthesis that leverages video generation models to address both spatial and temporal inconsistencies in 3D textures. Our approach incorporates geometry-aware conditions, enabling precise utilization of 3D mesh structures. Additionally, we propose a structure-wise UV diffusion strategy, which enhances the generation of occluded areas by preserving semantic information, resulting in smoother and more coherent textures. VideoTex not only achieves smoother transitions across UV boundaries but also ensures high-quality, temporally stable textures across video frames. Extensive experiments demonstrate that VideoTex outperforms existing methods in texture fidelity, seam blending, and stability, paving the way for dynamic real-time applications that demand both visual quality and temporal coherence.

Understanding videos to localize moments with natural language often requires large expensive annotated video regions paired with language queries. To eliminate the annotation costs, we make a first attempt to train a natural language video localization model in zero-shot manner. Inspired by unsupervised image captioning setup, we merely require random text corpora, unlabeled video collections, and an off-the-shelf object detector to train a model. With the unpaired data, we propose to generate pseudo-supervision of candidate temporal regions and corresponding query sentences, and develop a simple NLVL model to train with the pseudo-supervision. Our empirical validations show that the proposed pseudo-supervised method outperforms several baseline approaches and a number of methods using stronger supervision on Charades-STA and ActivityNet-Captions.

The Learnable human Mesh Triangulation (LMT) method reconstructs 3D human meshes from multi-view images by first estimating dense mesh vertices and then fitting the SMPL model to them. This two-stage approach improves the accuracy of joint rotation and human shape estimation, leveraging per-vertex visibility information to enhance robustness against occlusions.

We present a novel generative model, called Bidirectional GaitNet, that learns the relationship between human anatomy and its gait. The simulation model of human anatomy is a comprehensive, full-body, simulation-ready, musculoskeletal model with 304 Hill-type musculotendon units. The Bidirectional GaitNet consists of forward and backward models. The forward model predicts a gait pattern of a person with specific physical conditions, while the backward model estimates the physical conditions of a person when his/her gait pattern is provided. Our simulation-based approach first learns the forward model by distilling the simulation data generated by a state-of-the-art predictive gait simulator and then constructs a Variational Autoencoder (VAE) with the learned forward model as its decoder. Once it is learned its encoder serves as the backward model. We demonstrate our model on a variety of healthy/impaired gaits and validate it in comparison with physical examination data of real patients.

Unsupervised learning objectives like autoregressive and masked language

modeling constitute a significant part in producing pre-trained representations

that perform various downstream applications from natural language

understanding to conversational tasks. However, despite impressive generative

capabilities of recent large language models, their abilities to capture

syntactic or semantic structure within text lag behind. We hypothesize that the

mismatch between linguistic performance and competence in machines is

attributable to insufficient learning of linguistic structure knowledge via

currently popular pre-training objectives. Working with English, we show that

punctuation restoration as a learning objective improves performance on

structure-related tasks like named entity recognition, open information

extraction, chunking, and part-of-speech tagging. Punctuation restoration

results in ▲≥2%p improvement in 16 out of 18 experiments,

across 6 out of 7 tasks. Our results show that punctuation restoration is an

effective learning objective that can improve structure understanding and yield

a more robust structure-aware representations of natural language in base-sized

models.

There are no more papers matching your filters at the moment.