National Institute of Technology Srinagar

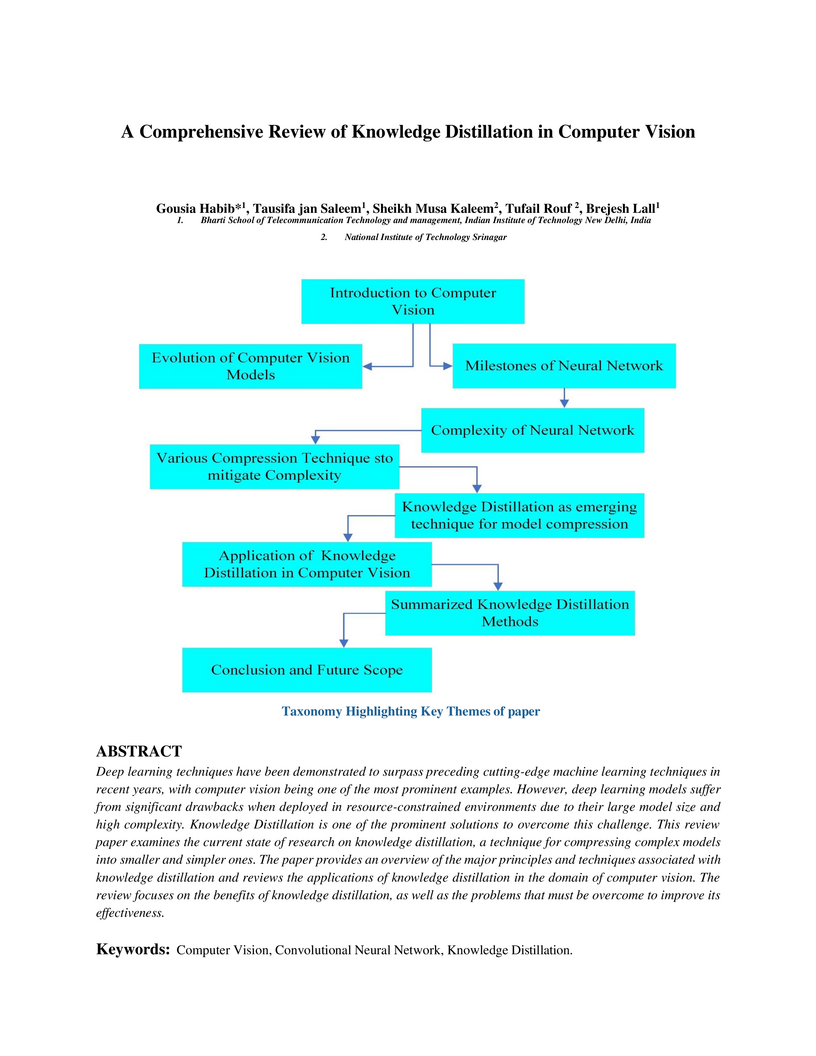

Deep learning techniques have been demonstrated to surpass preceding cutting-edge machine learning techniques in recent years, with computer vision being one of the most prominent examples. However, deep learning models suffer from significant drawbacks when deployed in resource-constrained environments due to their large model size and high complexity. Knowledge Distillation is one of the prominent solutions to overcome this challenge. This review paper examines the current state of research on knowledge distillation, a technique for compressing complex models into smaller and simpler ones. The paper provides an overview of the major principles and techniques associated with knowledge distillation and reviews the applications of knowledge distillation in the domain of computer vision. The review focuses on the benefits of knowledge distillation, as well as the problems that must be overcome to improve its effectiveness.
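
As a rough illustration of the compression idea described above, the sketch below computes a soft-target distillation loss for a single sample. It assumes the classic temperature-scaled formulation (a KL term between softened teacher and student outputs, plus a cross-entropy term on the true label); the review does not commit to this particular loss, and the names `distillation_loss`, `temperature`, and `alpha`, as well as the example logits, are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.5):
    """Weighted sum of a soft-target KL term and a hard-label cross-entropy term.

    Assumed formulation (not taken from the review): alpha weights the
    soft-target term and (1 - alpha) weights the hard-label term.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions
    kl = sum(t * (math.log(t) - math.log(s))
             for t, s in zip(p_teacher, p_student) if t > 0)
    # Cross-entropy of the student at temperature 1 against the true label
    ce = -math.log(softmax(student_logits)[label])
    # The T^2 factor keeps the soft-target term on a comparable scale
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce

# Hypothetical logits for a three-class problem
teacher = [5.0, 2.0, 0.5]
student = [2.0, 1.5, 0.2]
loss = distillation_loss(student, teacher, label=0)
```

A higher `temperature` flattens both distributions, so the student also learns from the teacher's relative preferences among the wrong classes; the soft-target term vanishes when the student reproduces the teacher's logits exactly.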

Non-Markovian dynamics is central to quantum information processing, as memory effects strongly influence coherence preservation, metrology, and communication. In this work, we investigate the role of stochastic system-bath couplings in shaping the non-Markovian behavior of open quantum systems, using the central spin model within a time-convolutionless master equation framework. We show that the character of the reduced dynamics depends jointly on the intrinsic memory of the environment and on the structure of the system-environment interaction. In certain regimes, the dynamics simplify to pure dephasing, while in general both amplitude damping and dephasing contribute to the evolution. By employing two complementary measures, the Quantum Fisher Information (QFI) flow and the Breuer-Laine-Piilo (BLP) measure, we demonstrate that QFI flow may fail to witness memory effects in weak-coupling and near-resonant regimes, whereas the BLP measure still detects information backflow. Furthermore, external modulation of the interaction kernel produces qualitatively richer behavior, including irregular and frequency-dependent revivals of non-Markovianity. These results clarify the physical origin of memory effects, highlight the limitations of single-witness approaches, and suggest that stochasticity and modulation can be harnessed to engineer robust, noise-resilient quantum technologies.

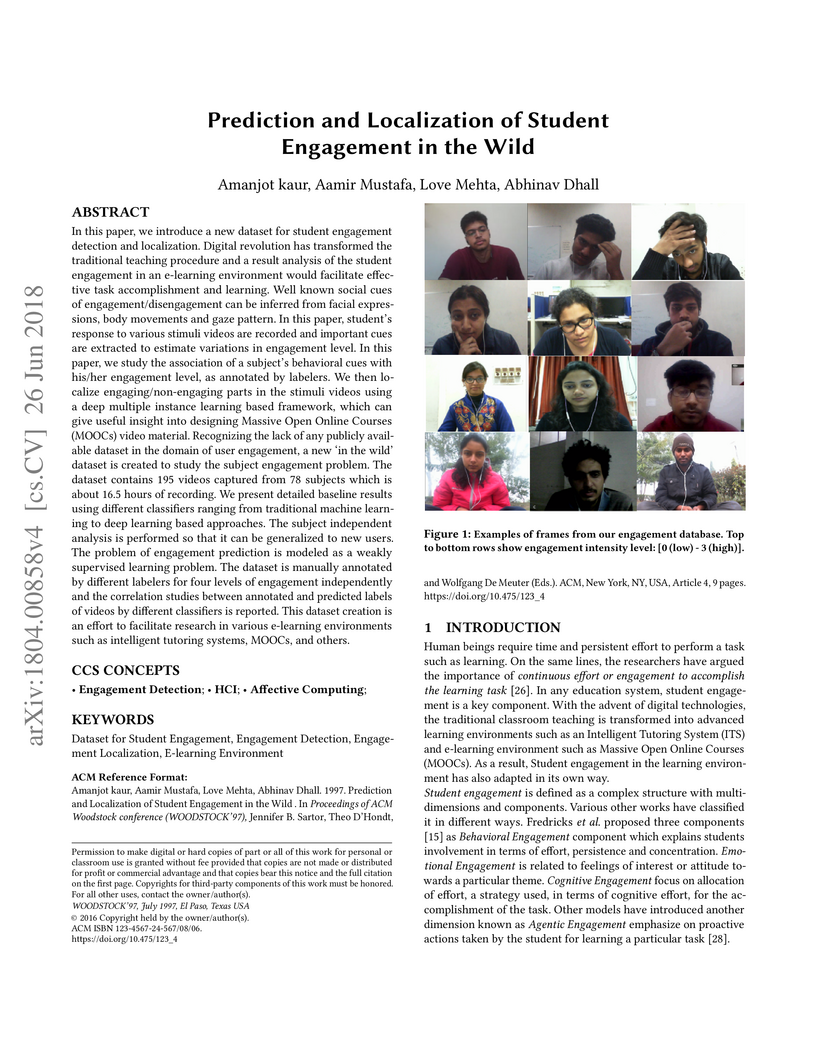

In this paper, we introduce a new dataset for student engagement detection and localization. The digital revolution has transformed traditional teaching procedures, and analyzing student engagement in an e-learning environment would facilitate effective task accomplishment and learning. Well-known social cues of engagement and disengagement can be inferred from facial expressions, body movements, and gaze patterns. Students' responses to various stimulus videos are recorded, and important cues are extracted to estimate variations in engagement level. We study the association of a subject's behavioral cues with his/her engagement level, as annotated by labelers. We then localize engaging and non-engaging parts of the stimulus videos using a deep multiple-instance-learning-based framework, which can give useful insight into designing video material for Massive Open Online Courses (MOOCs). Recognizing the lack of any publicly available dataset in the domain of user engagement, we create a new 'in the wild' dataset to study the subject engagement problem. The dataset contains 195 videos captured from 78 subjects, amounting to about 16.5 hours of recording. We present detailed baseline results using different classifiers, ranging from traditional machine learning to deep-learning-based approaches. The analysis is subject-independent so that it can generalize to new users. The problem of engagement prediction is modeled as a weakly supervised learning problem. The dataset is manually annotated by different labelers, independently, for four levels of engagement, and correlation studies between the annotated and predicted labels of videos by different classifiers are reported. This dataset is an effort to facilitate research in various e-learning environments such as intelligent tutoring systems, MOOCs, and others.

Biomedical image analysis is of paramount importance for the advancement of healthcare and medical research. Although conventional convolutional neural networks (CNNs) are frequently employed in this domain, they face limitations in capturing intricate spatial and temporal relationships at the pixel level due to their reliance on fixed-sized windows and filter weights that are immutable after training. These constraints impede their ability to adapt to input fluctuations and comprehend extensive long-range contextual information. To overcome these challenges, we propose a novel architecture based on self-attention mechanisms as an alternative to conventional CNNs. The proposed model utilizes attention-based mechanisms to surpass the limitations of CNNs. The key component of our strategy is the combination of non-overlapping (vanilla) patching and novel overlapped Shifted Patching Techniques (S.P.T.s), which enhances the model's capacity to capture local context and improves generalization. Additionally, we introduce the Lanczos5 interpolation technique, which adapts variable image sizes to higher resolutions, facilitating better analysis of high-resolution biomedical images. Our methods address critical challenges faced by attention-based vision models, including inductive bias, weight sharing, receptive field limitations, and efficient data handling. Experimental evidence shows the effectiveness of the proposed model in generalizing to various biomedical imaging tasks. The attention-based model, combined with advanced data augmentation methodologies, exhibits robust modeling capabilities and superior performance compared to existing approaches. The integration of S.P.T.s significantly enhances the model's ability to capture local context, while the Lanczos5 interpolation technique ensures efficient handling of high-resolution images.

This paper investigates the thermodynamic behavior of a black hole in a cloud of strings (CoS) and perfect fluid dark matter (PFDM), incorporating a minor correction to the anti-de Sitter (AdS) length scale. The study reveals that while the entropy remains unaffected by the AdS length correction, other thermodynamic quantities such as enthalpy, free energy, pressure, internal energy, Gibbs free energy, and heat capacity exhibit significant modifications. Positive corrections to the AdS length generally enhance these quantities, leading to faster growth or slower decay with increasing black hole size, while negative corrections often produce the opposite effect, sometimes resulting in divergent or negative values. These findings highlight the sensitivity of black hole thermodynamics to geometric corrections and exotic matter fields, providing deeper insight into the interplay between such modifications and thermodynamic stability. The results underscore the importance of considering minor corrections in theoretical models to better understand black hole behavior in the presence of dark matter and string-like matter distributions.

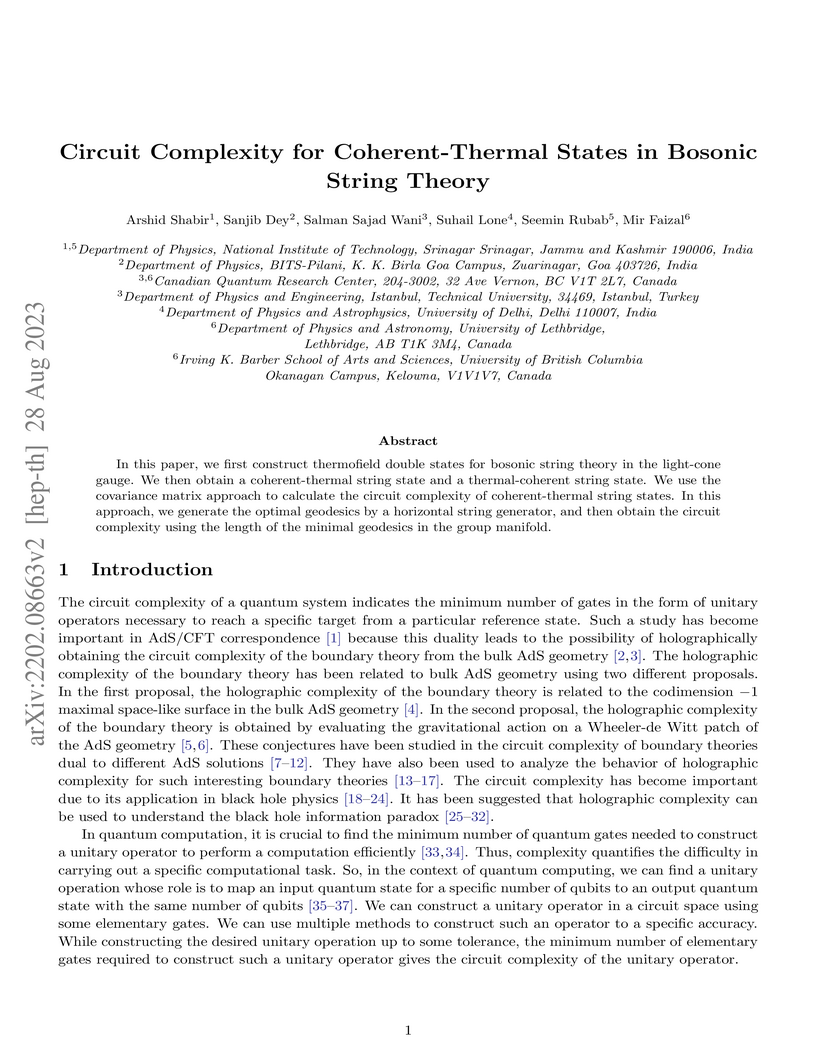

In this paper, we first construct thermofield double states for bosonic string theory in the light-cone gauge. We then obtain a coherent-thermal string state and a thermal-coherent string state. We use the covariance matrix approach to calculate the circuit complexity of coherent-thermal string states. In this approach, we generate the optimal geodesics by a horizontal string generator, and then obtain the circuit complexity using the length of the minimal geodesics in the group manifold.

10 Jul 2024

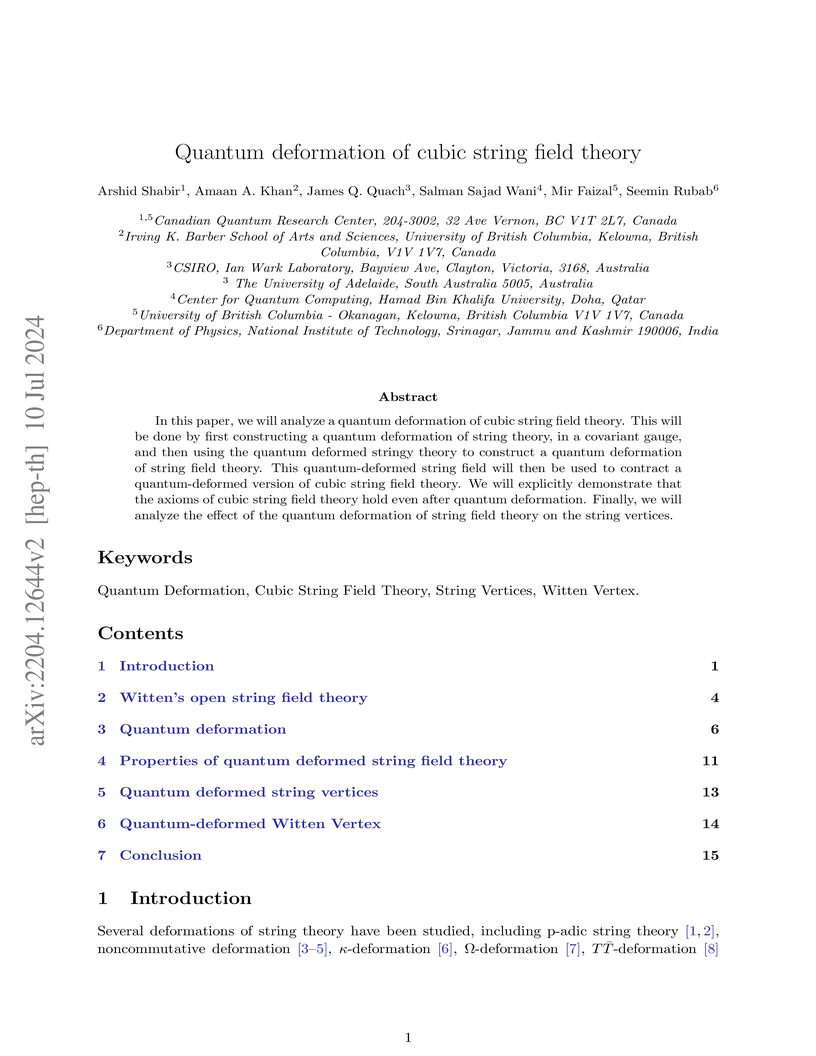

In this paper, we analyze a quantum deformation of cubic string field theory. We first construct a quantum deformation of string theory in a covariant gauge, and then use the quantum deformed string theory to construct a quantum deformation of string field theory. This quantum deformed string field is then used to construct a quantum deformed version of cubic string field theory. We explicitly demonstrate that the axioms of cubic string field theory hold even after quantum deformation. Finally, we analyze the effect of the quantum deformation of string field theory on the string vertices.

09 Jan 2023

A vehicular ad-hoc network (VANET) is a subclass of mobile ad-hoc network that provides a vehicle-to-vehicle and vehicle-to-infrastructure communication environment, where nodes act as servers and/or clients to exchange and share information. VANETs have some unique characteristics, such as highly dynamic topology, frequent disconnections, and restricted topology, so they need a special class of routing protocols. Simulating VANET scenarios requires two types of simulators: a traffic simulator for generating traffic and a network simulator. In this project, I created a sample VANET scenario for the AODV, DSDV, and DSR routing protocols. I used SUMO to generate traffic mobility files and NS-3 to test the performance of the routing protocols on those mobility files.

We study robust and efficient distributed algorithms for building and maintaining distributed data structures in dynamic Peer-to-Peer (P2P) networks. P2P networks are characterized by a high level of dynamicity with abrupt, heavy node churn (nodes joining and leaving the network continuously over time). We present a novel algorithm that builds and maintains, with high probability, a skip list for poly(n) rounds despite O(n/log n) churn per round (n is the stable network size). We assume that the churn is controlled by an oblivious adversary (one that has complete knowledge and control of which nodes join and leave and at what time, and has unlimited computational power, but is oblivious to the random choices made by the algorithm). Moreover, the maintenance overhead is proportional to the churn rate. Furthermore, the algorithm is scalable in that the messages are small (i.e., at most polylog(n) bits) and every node sends and receives at most polylog(n) messages per round.

Our algorithm crucially relies on novel distributed and parallel algorithms to merge two n-element skip lists and to delete a large subset of items, both in O(log n) rounds with high probability. These procedures may be of independent interest due to their elegance and potential applicability in other contexts in distributed data structures.

To the best of our knowledge, our work provides the first-known fully distributed data structure that provably works under highly dynamic settings (i.e., a high churn rate). Furthermore, the algorithms are localized (i.e., they do not require any global topological knowledge). Finally, we believe that our framework can be generalized to other distributed and dynamic data structures including graphs, potentially leading to stable distributed computation despite heavy churn.

Accurate classification of software bugs is essential for improving software quality. This paper presents a rule-based automated framework for classifying issues in quantum software repositories by bug type, category, severity, and impacted quality attributes, with additional focus on quantum-specific bug types. The framework applies keyword and heuristic-based techniques tailored to quantum computing. To assess its reliability, we manually classified a stratified sample of 4,984 issues from a dataset of 12,910 issues across 36 Qiskit repositories. Automated classifications were compared with ground truth using accuracy, precision, recall, and F1-score. The framework achieved up to 85.21% accuracy, with F1-scores ranging from 0.7075 (severity) to 0.8393 (quality attribute). Statistical validation via paired t-tests and Cohen's Kappa showed substantial to almost perfect agreement for bug type (k = 0.696), category (k = 0.826), quality attribute (k = 0.818), and quantum-specific bug type (k = 0.712). Severity classification showed slight agreement (k = 0.162), suggesting room for improvement. Large-scale analysis revealed that classical bugs dominate (67.2%), with quantum-specific bugs at 27.3%. Frequent bug categories included compatibility, functional, and quantum-specific defects, while usability, maintainability, and interoperability were the most impacted quality attributes. Most issues (93.7%) were low severity; only 4.3% were critical. A detailed review of 1,550 quantum-specific bugs showed that over half involved quantum circuit-level problems, followed by gate errors and hardware-related issues.
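
The agreement statistic reported above can be sketched as follows. This is a minimal illustration of Cohen's Kappa for two label sequences, assuming the standard definition (observed agreement corrected for chance agreement); the function name `cohens_kappa` and the toy labels are hypothetical and are not taken from the paper's dataset.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's Kappa between two equally long sequences of categorical labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(labels_a)
    # Fraction of items on which the two raters agree
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each rater's label frequencies
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)

# Hypothetical manual (ground truth) and automated labels for six issues
manual = ["classical", "quantum", "classical", "classical", "quantum", "classical"]
automated = ["classical", "quantum", "quantum", "classical", "quantum", "classical"]
kappa = cohens_kappa(manual, automated)
```

Because Kappa discounts chance agreement, a classifier can reach high raw accuracy on a heavily skewed label (such as severity, where most issues are low severity) and still obtain a low Kappa.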

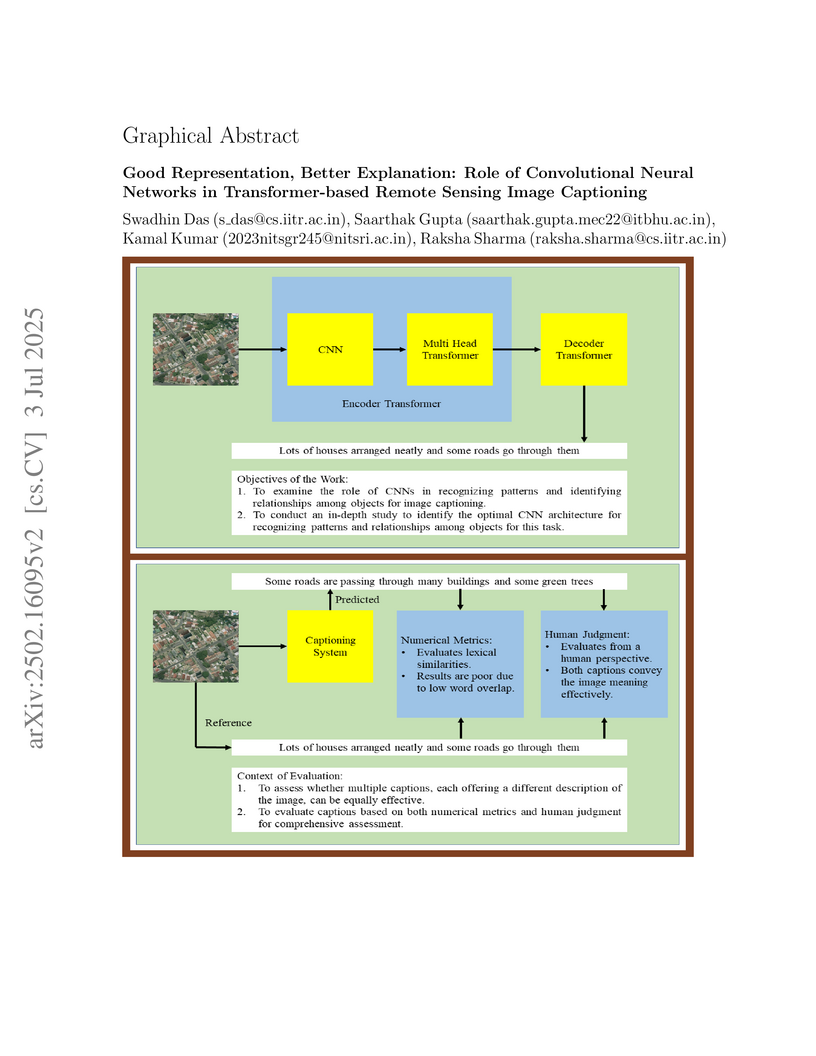

Remote Sensing Image Captioning (RSIC) is the process of generating meaningful descriptions from remote sensing images. Recently, it has gained significant attention, with encoder-decoder models serving as the backbone for generating meaningful captions. The encoder extracts essential visual features from the input image, transforming them into a compact representation, while the decoder utilizes this representation to generate coherent textual descriptions. Transformer-based models have become popular due to their ability to capture long-range dependencies and contextual information. The decoder has been well explored for text generation, whereas the encoder remains relatively unexplored. However, optimizing the encoder is crucial, as it directly influences the richness of the extracted features, which in turn affects the quality of the generated captions. To address this gap, we systematically evaluate twelve different convolutional neural network (CNN) architectures within a transformer-based encoder framework to assess their effectiveness in RSIC. The evaluation consists of two stages. First, a numerical analysis groups the CNNs into clusters based on their performance. The best-performing CNNs are then assessed by a human annotator. Additionally, we analyze the impact of different search strategies, namely greedy search and beam search, on selecting the best caption. The results highlight the critical role of encoder selection in improving captioning performance, demonstrating that specific CNN architectures significantly enhance the quality of generated descriptions for remote sensing images. By providing a detailed comparison of multiple encoders, this study offers valuable insights to guide advances in transformer-based image captioning models.

13 May 2019

A recent area of interest in thermal applications has been nanofluids, with extensive research conducted in the field and numerous publications reporting new results. The properties of such fluids depend on their components, and by using combined and complex nanoparticles with diverse characteristics, the desirable qualities of multiple nanoparticle suspensions can be obtained in a single mixture. The paper covers these hybrid nanofluids, the techniques for their synthesis, their properties and characteristics, and the areas of debate and outstanding questions.

One of the most widely used yet simple algorithms for cluster analysis is the k-means algorithm. k-means has been used successfully in artificial intelligence, market segmentation, fraud detection, data mining, and psychology, to name only a few areas. The k-means algorithm, however, does not always yield the best-quality results. Its performance depends heavily on the number of clusters supplied and on the proper initialization of the cluster centroids, or seeds. In this paper, we analyze the performance of k-means on image data by employing parametric entropies in an entropy-based centroid initialization method, and we propose the best-fitting entropy measures for general image datasets. We use several entropies, including Taneja entropy, Kapur entropy, Aczel Daroczy entropy, and Sharma Mittal entropy. We observe that for different datasets, different entropies provide better results than the conventional methods. We have applied our proposed algorithm to these datasets: Satellite, Toys, Fruits, Cars, Brain MRI, and Covid X-Ray.

24 Jan 2023

Measurement-based quantum computation provides an alternative model for quantum computation compared to the well-known gate-based model. It uses qubits prepared in a specific entangled state followed by single-qubit measurements. The stabilizers of cluster states are well defined because of their graph structure. We exploit this graph structure extensively to design non-CSS codes using measurement in a specific basis on the cluster state. We aim to construct an [[n,1]] non-CSS code from an (n+1)-qubit cluster state. The procedure is general and can be used specifically as an encoding technique to design any non-CSS code with one logical qubit. We show that there exists an (n+1)-qubit cluster state which, upon measurement, gives the desired [[n,1]] code.

This paper empirically analyzes the performance of different acquisition functions and optimizers for the inner optimization step in Bayesian Optimization. It found that L-BFGS consistently processes new sample proposals faster than TNC, and that Maximum Probability of Improvement can converge more rapidly than Expected Improvement when initial samples are strategically positioned near the global optimum.
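
For reference, the two acquisition functions compared above can be sketched in closed form. The code below assumes a Gaussian posterior with mean `mu` and standard deviation `sigma` at a candidate point, a maximization setting with incumbent value `best`, and an optional exploration margin `xi`; these names and conventions are assumptions for illustration and are not taken from the paper. In practice the inner optimization step would maximize such a function over the search space with an optimizer such as L-BFGS or TNC.

```python
import math

def _normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def _normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def probability_of_improvement(mu, sigma, best, xi=0.0):
    """Probability that the candidate exceeds the incumbent by more than xi."""
    gain = mu - best - xi
    if sigma <= 0.0:
        return 1.0 if gain > 0.0 else 0.0  # no posterior uncertainty
    return _normal_cdf(gain / sigma)

def expected_improvement(mu, sigma, best, xi=0.0):
    """Expected amount by which the candidate exceeds the incumbent."""
    gain = mu - best - xi
    if sigma <= 0.0:
        return max(gain, 0.0)  # no posterior uncertainty
    z = gain / sigma
    return gain * _normal_cdf(z) + sigma * _normal_pdf(z)

# Hypothetical posterior at one candidate point
pi_value = probability_of_improvement(mu=1.2, sigma=0.5, best=1.0)
ei_value = expected_improvement(mu=1.2, sigma=0.5, best=1.0)
```

Probability of Improvement only asks whether a candidate beats the incumbent, not by how much, so it tends to exploit the neighborhood of the current best point. That is consistent with the observation that it can converge quickly when the initial samples already lie near the global optimum.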

20 May 2025

In this paper, we study Hawking radiation in Jackiw-Teitelboim gravity for minimally coupled massless and massive scalar fields. We employ a holography-inspired technique to derive the Bogoliubov coefficients. We consider both black holes in equilibrium and black holes attached to a bath. In the latter case, we compute semiclassical deviations from the thermal spectrum.

Climate change stands as one of the most pressing global challenges of the twenty-first century, with far-reaching consequences such as rising sea levels, melting glaciers, and increasingly extreme weather patterns. Accurate forecasting is critical for monitoring these phenomena and supporting mitigation strategies. While recent data-driven models for time-series forecasting, including CNNs, RNNs, and attention-based transformers, have shown promise, they often struggle with sequential dependencies and limited parallelization, especially on long-horizon, multivariate meteorological datasets. In this work, we present the Focal Modulated Attention Encoder (FATE), a novel transformer architecture designed for reliable multivariate time-series forecasting. Unlike conventional models, FATE introduces a tensorized focal modulation mechanism that explicitly captures spatiotemporal correlations in time-series data. We further propose two modulation scores that offer interpretability by highlighting critical environmental features influencing predictions. We benchmark FATE across seven diverse real-world datasets (ETTh1, ETTm2, Traffic, Weather5k, USA-Canada, Europe, and LargeST) and show that it consistently outperforms all state-of-the-art methods, including on temperature datasets. Our ablation studies also demonstrate that FATE generalizes well to broader multivariate time-series forecasting tasks. For reproducible research, code is released at this https URL.

04 Sep 2024

Let $D$ be a digraph of order $n$ with adjacency matrix $A(D)$. For $\alpha\in[0,1)$, the $A_\alpha$ matrix of $D$ is defined as $A_\alpha(D)=\alpha\Delta^{+}(D)+(1-\alpha)A(D)$, where $\Delta^{+}(D)=\mathrm{diag}(d_1^{+},d_2^{+},\dots,d_n^{+})$ is the diagonal matrix of vertex outdegrees of $D$. Let $\sigma_{1\alpha}(D),\sigma_{2\alpha}(D),\dots,\sigma_{n\alpha}(D)$ be the singular values of $A_\alpha(D)$. Then the trace norm of $A_\alpha(D)$, which we call the $\alpha$ trace norm of $D$, is defined as $\|A_\alpha(D)\|_*=\sum_{i=1}^{n}\sigma_{i\alpha}(D)$. In this paper, we find the singular values of some basic digraphs and characterize the digraphs $D$ with $\mathrm{Rank}(A_\alpha(D))=1$. As an application of these results, we obtain a lower bound for the trace norm of the $A_\alpha$ matrix of digraphs and determine the extremal digraphs. In particular, we determine the oriented trees for which the trace norm of the $A_\alpha$ matrix attains its minimum. We obtain a lower bound for the $\alpha$ spectral norm $\sigma_{1\alpha}(D)$ of digraphs and characterize the extremal digraphs. As an application of this result, we obtain an upper bound for the $\alpha$ trace norm of digraphs and characterize the extremal digraphs.
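
To make the definitions above concrete, here is a small worked example that is not taken from the paper: the digraph $D$ on two vertices with a single arc from vertex 1 to vertex 2, under the assumed convention that the $(i,j)$ entry of $A(D)$ is 1 when there is an arc from $i$ to $j$. Vertex 1 has outdegree 1 and vertex 2 has outdegree 0, so

$$
A(D)=\begin{pmatrix}0 & 1\\ 0 & 0\end{pmatrix},\qquad
\Delta^{+}(D)=\begin{pmatrix}1 & 0\\ 0 & 0\end{pmatrix},\qquad
A_\alpha(D)=\alpha\Delta^{+}(D)+(1-\alpha)A(D)=\begin{pmatrix}\alpha & 1-\alpha\\ 0 & 0\end{pmatrix}.
$$

The singular values are the square roots of the eigenvalues of $A_\alpha(D)A_\alpha(D)^{T}$:

$$
A_\alpha(D)A_\alpha(D)^{T}=\begin{pmatrix}\alpha^{2}+(1-\alpha)^{2} & 0\\ 0 & 0\end{pmatrix}
\;\Longrightarrow\;
\sigma_{1\alpha}(D)=\sqrt{\alpha^{2}+(1-\alpha)^{2}},\quad \sigma_{2\alpha}(D)=0,
$$

so that

$$
\|A_\alpha(D)\|_*=\sigma_{1\alpha}(D)+\sigma_{2\alpha}(D)=\sqrt{2\alpha^{2}-2\alpha+1}.
$$

This matrix has rank 1 for every $\alpha\in[0,1)$, and at $\alpha=0$ the value reduces to 1, the trace norm of $A(D)$ itself.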

19 Mar 2021

Density functional theory (DFT) based ab initio calculations of the electronic, phononic, and superconducting properties of 1T MoS$_2$ are reported. The phonon dispersions are computed within density-functional perturbation theory (DFPT). We have also computed the Eliashberg function $\alpha^{2}F(\omega)$ and, from it, the electron-phonon coupling constant $\lambda$. The superconducting transition temperature ($T_c$) computed with the McMillan-Allen-Dynes formula is found to be in good agreement with the recent experimental report. We have also evaluated the effect of pressure on the superconducting behavior of this system. Our results show that 1T MoS$_2$ exhibits electron-phonon mediated superconductivity and that the superconducting transition temperature rises slightly with pressure and then decreases with further increase in pressure.
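
The transition-temperature estimate mentioned above can be sketched as follows, assuming the commonly quoted form of the McMillan-Allen-Dynes formula, Tc = (omega_log / 1.2) * exp(-1.04 (1 + lambda) / (lambda - mu* (1 + 0.62 lambda))). The function name, the Coulomb pseudopotential `mu_star`, and the numerical inputs are hypothetical illustrations and are not values from the paper.

```python
import math

def allen_dynes_tc(lam, omega_log_kelvin, mu_star=0.1):
    """Estimate Tc (in kelvin) from the McMillan-Allen-Dynes formula.

    lam              -- electron-phonon coupling constant (lambda)
    omega_log_kelvin -- logarithmic average phonon frequency, in kelvin
    mu_star          -- Coulomb pseudopotential (assumed value, often ~0.1)
    """
    denominator = lam - mu_star * (1.0 + 0.62 * lam)
    if denominator <= 0.0:
        return 0.0  # coupling too weak for the formula to give a finite Tc
    exponent = -1.04 * (1.0 + lam) / denominator
    return (omega_log_kelvin / 1.2) * math.exp(exponent)

# Hypothetical inputs, chosen only to exercise the formula
tc = allen_dynes_tc(lam=1.0, omega_log_kelvin=200.0, mu_star=0.1)
```

Both `lam` and `omega_log_kelvin` are obtained from the Eliashberg function in a calculation of this kind, so any pressure dependence of Tc enters through how those two quantities shift with pressure.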

06 Dec 2024

This paper explores the operational principles and monomiality principle that significantly shape the development of various special polynomial families. We argue that applying the monomiality principle yields novel results while remaining consistent with established findings. The primary focus of this study is the introduction of degenerate multidimensional Hermite-based Appell polynomials (DMHAP), denoted as ${}_{H}A_n^{[r]}(l_1,l_2,l_3,\dots,l_r;\vartheta)$. These DMHAP form essential families of orthogonal polynomials, demonstrating strong connections with classical Hermite and Appell polynomials. Additionally, we derive symmetric identities and examine the fundamental properties of these polynomials. Finally, we establish an operational framework to investigate and develop these polynomials further.
