ONERA

14 Nov 2024

Two of the many trends in neural network research of the past few years have been (i) the learning of dynamical systems, especially with recurrent neural networks such as long short-term memory networks (LSTMs), and (ii) the introduction of transformer neural networks for natural language processing (NLP) tasks. While some work has been performed on the intersection of these two trends, those efforts were largely limited to using the vanilla transformer directly without adjusting its architecture for the setting of a physical system. In this work we develop a transformer-inspired neural network and use it to learn a dynamical system. We (for the first time) change the activation function of the attention layer to imbue the transformer with structure-preserving properties to improve long-term stability. This is shown to be of great advantage when applying the neural network to learning the trajectory of a rigid body.

24 Apr 2025

The Loewner framework is an interpolatory approach for the approximation of linear and nonlinear systems. The purpose here is to extend this framework to linear parametric systems with an arbitrary number n of parameters. To achieve this, a new generalized multivariate rational function realization is proposed. Then, we introduce the n-dimensional multivariate Loewner matrices and show that they can be computed by solving a set of coupled Sylvester equations. The null space of these Loewner matrices allows the construction of the multivariate barycentric rational function. The principal result of this work is to show how the null space of the n-dimensional Loewner matrix can be computed using a sequence of 1-dimensional Loewner matrices, leading to a drastic reduction of the computational burden. Equally importantly, this burden is alleviated by avoiding the explicit construction of large-scale n-dimensional Loewner matrices of size N×N. Instead, the proposed methodology achieves decoupling of variables, leading to (i) a complexity reduction from O(N^3) to below O(N^1.5) when n>5 and (ii) memory storage bounded by the largest variable dimension rather than their product, thus taming the curse of dimensionality and making the solution scalable to very large data sets. This decoupling of the variables leads to a result similar to the Kolmogorov superposition theorem for rational functions. Thus, making use of barycentric representations, every multivariate rational function can be computed using the composition and superposition of single-variable functions. Finally, we suggest two algorithms (one direct and one iterative) to construct, directly from data, multivariate (or parametric) realizations ensuring (approximate) interpolation. Numerical examples highlight the effectiveness and scalability of the method.
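
To make the single-variable building block concrete, here is a minimal sketch of a 1-dimensional Loewner matrix and of the barycentric rational function obtained from its null space. It is only an illustration of the standard one-variable construction, not the multivariate algorithm of the paper; the function names, the test function `H`, and the sample points are assumptions chosen for the example.

```python
import numpy as np

def loewner_matrix(mu, v, lam, w):
    """1-D Loewner matrix with entries L[i, j] = (v[i] - w[j]) / (mu[i] - lam[j])."""
    return (v[:, None] - w[None, :]) / (mu[:, None] - lam[None, :])

def barycentric_weights(L):
    """Right singular vector for the smallest singular value of L.

    When the null space of L is one-dimensional, this vector spans it.
    """
    _, _, vh = np.linalg.svd(L)
    return vh[-1].conj()

def barycentric_eval(s, lam, w, c):
    """Evaluate r(s) = sum_j c_j w_j / (s - lam_j) / sum_j c_j / (s - lam_j)."""
    terms = c / (s - lam)
    return np.sum(terms * w) / np.sum(terms)

# Hypothetical data: samples of a degree-1 rational function.
H = lambda s: 1.0 / (s + 1.0)
lam = np.array([0.0, 2.0])        # column (interpolation) points
mu = np.array([1.0, 3.0, 5.0])    # row points
c = barycentric_weights(loewner_matrix(mu, H(mu), lam, H(lam)))
approx = barycentric_eval(4.0, lam, H(lam), c)  # evaluate away from the samples
```

In this toy case the Loewner matrix has rank 1, so its null space is one-dimensional and the barycentric form recovers `H` at points that were not sampled.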

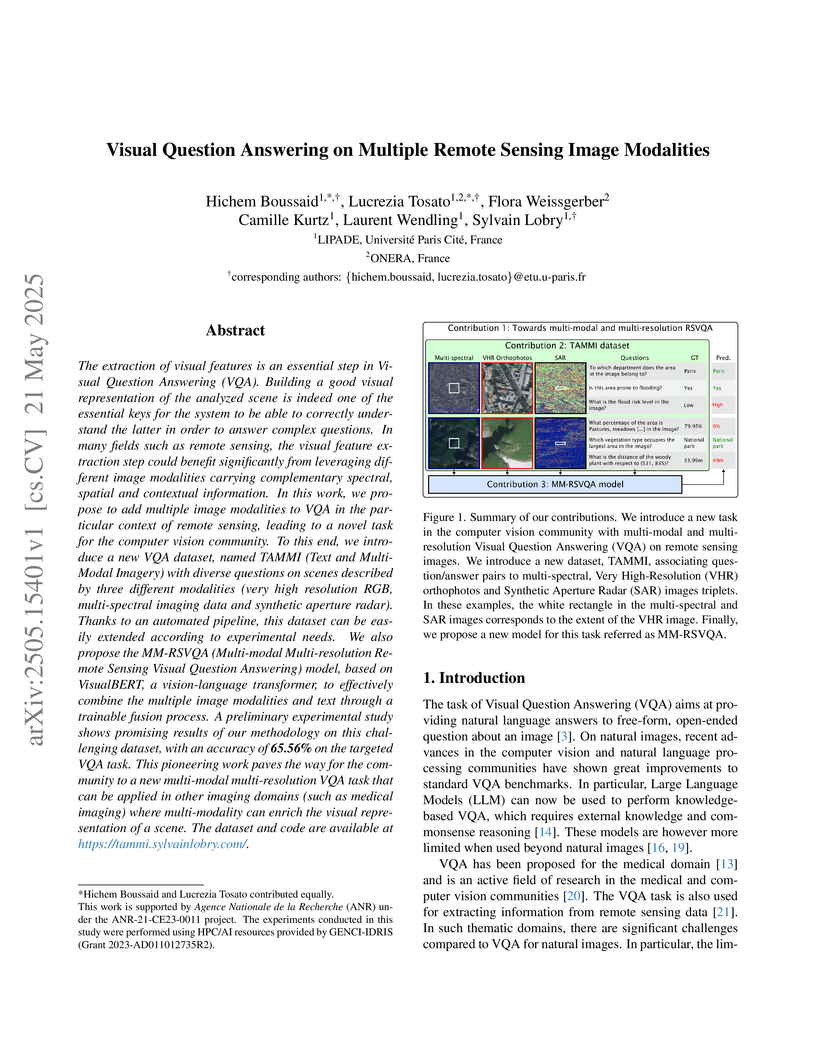

This paper addresses Remote Sensing Visual Question Answering (RSVQA) by introducing a comprehensive approach that leverages Very High Resolution (VHR) RGB orthophotos, multispectral imagery, and Synthetic Aperture Radar (SAR) data. The research also presents the TAMMI dataset, specifically designed for multimodal RSVQA, and demonstrates that integrating these three modalities improves question answering accuracy by 3-7% over single-modality baselines while maintaining robustness to missing data.

Remote Sensing Visual Question Answering (RSVQA) presents unique challenges in ensuring that model decisions are both understandable and grounded in visual content. Current models often suffer from a lack of interpretability and explainability, as well as from biases in dataset distributions that lead to shortcut learning. In this work, we tackle these issues by introducing a novel RSVQA dataset, Chessboard, designed to minimize biases through 3,123,253 questions and a balanced answer distribution. Each answer is linked to one or more cells within the image, enabling fine-grained visual reasoning.

Building on this dataset, we develop an explainable and interpretable model called Checkmate that identifies the image cells most relevant to its decisions. Through extensive experiments across multiple model architectures, we show that our approach improves transparency and supports more trustworthy decision-making in RSVQA systems.

24 Sep 2025

Machine Learning (ML) applications demand significant computational resources, posing challenges for safety-critical domains like aeronautics. The Versatile Tensor Accelerator (VTA) is a promising FPGA-based solution, but its adoption has been hindered by its dependency on the TVM compiler and by other code that does not comply with certification requirements. This paper presents an open-source, standalone Python compiler pipeline for the VTA, developed from scratch and designed with certification requirements, modularity, and extensibility in mind. The compiler's effectiveness is demonstrated by compiling and executing the LeNet-5 Convolutional Neural Network (CNN) using the VTA simulators, and preliminary results indicate a strong potential for scaling its capabilities to larger CNN architectures. All contributions are publicly available.

This work introduces a unified mathematical framework for modeling and optimizing complex systems with hierarchical, conditional, and mixed-variable input spaces, implemented in the open-source Surrogate Modeling Toolbox (SMT 2.0). The framework formalizes hierarchical dependencies using a generalized role graph, a novel distance function, and a Symmetric Positive Definite (SPD) kernel, demonstrating improved efficiency in design space processing and effective optimization of a green aircraft concept.

The Earth Observation Satellite Planning (EOSP) problem is a difficult optimization problem of considerable practical interest. A set of requested observations must be scheduled on an agile Earth observation satellite while respecting constraints on their visibility windows, as well as maneuver constraints that impose varying delays between successive observations. In addition, the problem is largely oversubscribed: there are many more candidate observations than can possibly be performed. Therefore, one must select the set of observations that will be performed while maximizing their weighted cumulative benefit, and propose a feasible schedule for these observations. As previous work mostly focused on heuristic and iterative search algorithms, this paper presents a new technique for selecting and scheduling observations based on Graph Neural Networks (GNNs) and Deep Reinforcement Learning (DRL). GNNs are used to extract relevant information from the graphs representing instances of the EOSP, and DRL drives the search for optimal schedules. Our simulations show that the approach is able to learn on small problem instances and generalize to larger real-world instances, with very competitive performance compared to traditional approaches.

The Koopman operator presents an attractive approach to achieve global linearization of nonlinear systems, making it a valuable method for simplifying the understanding of complex dynamics. While data-driven methodologies have exhibited promise in approximating finite Koopman operators, they grapple with various challenges, such as the judicious selection of observables, dimensionality reduction, and the ability to predict complex system behaviours accurately. This study presents a novel approach termed Mori-Zwanzig autoencoder (MZ-AE) to robustly approximate the Koopman operator in low-dimensional spaces. The proposed method leverages a nonlinear autoencoder to extract key observables for approximating a finite invariant Koopman subspace and integrates a non-Markovian correction mechanism using the Mori-Zwanzig formalism. Consequently, this approach yields an approximate closure of the dynamics within the latent manifold of the nonlinear autoencoder, thereby enhancing the accuracy and stability of the Koopman operator approximation. Demonstrations showcase the technique's improved predictive capability for flow around a cylinder. It also provides a low-dimensional approximation for Kuramoto-Sivashinsky (KS) with promising short-term predictability and robust long-term statistical performance. By bridging the gap between data-driven techniques and the mathematical foundations of Koopman theory, MZ-AE offers a promising avenue for improved understanding and prediction of complex nonlinear dynamics.

For classification models based on neural networks, the maximum predicted class probability is often used as a confidence score. This score rarely predicts well the probability of making a correct prediction and requires a post-processing calibration step. However, many confidence calibration methods fail for problems with many classes. To address this issue, we transform the problem of calibrating a multiclass classifier into calibrating a single surrogate binary classifier. This approach allows for more efficient use of standard calibration methods. We evaluate our approach on numerous neural networks used for image or text classification and show that it significantly enhances existing calibration methods.
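
As a rough illustration of the idea, the sketch below turns multiclass predictions into a binary problem ("was the top prediction correct?") and calibrates the resulting confidence score with histogram binning. This is one plausible reading of the reduction, not necessarily the authors' construction; histogram binning merely stands in for a standard calibration method, and all function names and data are assumptions made for the example.

```python
import numpy as np

def to_binary_problem(probs, labels):
    """Map multiclass outputs to (confidence, correctness) pairs."""
    conf = probs.max(axis=1)                                  # max predicted probability
    correct = (probs.argmax(axis=1) == labels).astype(float)  # 1.0 if top class is right
    return conf, correct

def fit_histogram_binning(conf, correct, n_bins=10):
    """Estimate the empirical accuracy inside each confidence bin."""
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    idx = np.digitize(conf, edges[1:-1])          # bin index in 0 .. n_bins - 1
    acc = 0.5 * (edges[:-1] + edges[1:])          # empty bins fall back to the bin centre
    for b in range(n_bins):
        mask = idx == b
        if mask.any():
            acc[b] = correct[mask].mean()
    return edges, acc

def calibrate(conf, edges, acc):
    """Replace each confidence by the accuracy of its bin."""
    return acc[np.digitize(conf, edges[1:-1])]

# Hypothetical validation data: 4 samples, 3 classes.
probs = np.array([[0.86, 0.07, 0.07],
                  [0.86, 0.07, 0.07],
                  [0.42, 0.30, 0.28],
                  [0.20, 0.55, 0.25]])
labels = np.array([0, 1, 0, 1])
conf, correct = to_binary_problem(probs, labels)
edges, acc = fit_histogram_binning(conf, correct)
calibrated = calibrate(conf, edges, acc)
```

Here the two samples with confidence 0.86 share a bin in which only one prediction is correct, so their calibrated confidence drops to 0.5.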

3D reconstruction of a scene from Synthetic Aperture Radar (SAR) images mainly relies on interferometric measurements, which involve strict constraints on the acquisition process. In recent years, progress in deep learning has significantly advanced 3D reconstruction from multiple views in optical imaging, mainly through reconstruction-by-synthesis approaches pioneered by Neural Radiance Fields. In this paper, we propose a new inverse rendering method for 3D reconstruction from unconstrained SAR images, drawing inspiration from optical approaches. First, we introduce a new simplified differentiable SAR rendering model, able to synthesize images from a digital elevation model and a radar backscattering coefficients map. Then, we introduce a coarse-to-fine strategy to train a Multi-Layer Perceptron (MLP) to fit the height and appearance of a given radar scene from a few SAR views. Finally, we demonstrate the surface reconstruction capabilities of our method on synthetic SAR images produced by ONERA's physically-based EMPRISE simulator. Our method showcases the potential of exploiting geometric disparities in SAR images and paves the way for multi-sensor data fusion.

As the interest in autonomous systems continues to grow, one of the major challenges is collecting sufficient and representative real-world data. Despite the strong practical and commercial interest in autonomous landing systems in the aerospace field, there is a lack of open-source datasets of aerial images. To address this issue, we present a dataset, LARD, of high-quality aerial images for the task of runway detection during approach and landing phases. Most of the dataset is composed of synthetic images, but we also provide manually labelled images from real landing footage, to extend the detection task to a more realistic setting. In addition, we offer the generator, which can produce such synthetic front-view images and enables automatic annotation of the runway corners through geometric transformations. This dataset paves the way for further research such as the analysis of dataset quality or the development of models to cope with the detection tasks. Find data, code and more up-to-date information at this https URL

The Resource-Constrained Project Scheduling Problem (RCPSP) is a classical scheduling problem that has received significant attention due to its numerous applications in industry. However, in practice, task durations are subject to uncertainty that must be considered in order to propose resilient schedules. In this paper, we address the RCPSP variant with uncertain task durations (modeled using known probabilities) and aim to minimize the overall expected project duration. Our objective is to produce a baseline schedule that can be reused multiple times in an industrial setting regardless of the actual duration scenario. We leverage Graph Neural Networks in conjunction with Deep Reinforcement Learning (DRL) to develop an effective policy for task scheduling. This policy operates similarly to a priority dispatch rule and is paired with a Serial Schedule Generation Scheme to produce a schedule. Our empirical evaluation on standard benchmarks demonstrates the approach's superiority in terms of performance and its ability to generalize. The developed framework, Wheatley, is made publicly available online to facilitate further research and reproducibility.
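
For readers unfamiliar with the Serial Schedule Generation Scheme, the following is a minimal textbook-style sketch for a single renewable resource and deterministic integer durations. It is not the Wheatley implementation: the fixed priority list stands in for the learned policy, and the function name and the small instance are assumptions made for illustration.

```python
def serial_sgs(durations, demands, capacity, preds, priority):
    """Schedule tasks one by one, each at its earliest feasible start time.

    durations, demands: dict task -> int (each demand must not exceed capacity)
    preds: dict task -> list of predecessor tasks
    priority: list of tasks, highest priority first
    """
    horizon = sum(durations.values())      # upper bound on the makespan
    usage = [0] * (horizon + 1)            # resource usage per time unit
    start = {}
    remaining = list(priority)
    while remaining:
        # Highest-priority task whose predecessors are all scheduled.
        task = next(t for t in remaining if all(p in start for p in preds[t]))
        remaining.remove(task)
        # Earliest start allowed by the precedence constraints.
        t0 = max((start[p] + durations[p] for p in preds[task]), default=0)
        # Shift right until the resource constraint holds over the whole task.
        while any(usage[t] + demands[task] > capacity
                  for t in range(t0, t0 + durations[task])):
            t0 += 1
        for t in range(t0, t0 + durations[task]):
            usage[t] += demands[task]
        start[task] = t0
    return start

# Hypothetical instance: three tasks, capacity 3, C must follow A.
durations = {"A": 2, "B": 3, "C": 2}
demands = {"A": 2, "B": 1, "C": 2}
preds = {"A": [], "B": [], "C": ["A"]}
schedule = serial_sgs(durations, demands, 3, preds, ["A", "B", "C"])
makespan = max(schedule[t] + durations[t] for t in schedule)
```

Changing the priority list changes the resulting schedule, which is why a learned policy that ranks tasks can be paired with this scheme.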

This paper presents a data-driven finite volume method for solving 1D and 2D hyperbolic partial differential equations. This work builds upon prior research incorporating a data-driven finite-difference approximation of smooth solutions of scalar conservation laws, where optimal coefficients of neural networks approximating space derivatives are learned based on accurate, but cumbersome solutions to these equations. We extend this approach to flux-limited finite volume schemes for scalar hyperbolic conservation laws and systems of conservation laws. We also train the discretization to efficiently capture discontinuous solutions with shock and contact waves, as well as to handle the application of boundary conditions. The learning procedure of the data-driven model is extended through the definition of a new loss, paddings, and an adequate database. These new ingredients guarantee computational stability, preserve the accuracy of fine-grid solutions, and enhance overall performance. Numerical experiments using test cases from the literature in both one- and two-dimensional spaces demonstrate that the learned model accurately reproduces fine-grid results on very coarse meshes.
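
As background, the sketch below shows a classical (not learned) flux-limited finite volume step for 1D linear advection with a minmod limiter and periodic boundaries. It only illustrates the kind of scheme the abstract refers to; in the paper the discretization is data-driven, and the function names, grid, and initial condition here are assumptions.

```python
import numpy as np

def minmod(a, b):
    """Return the argument of smaller magnitude when signs agree, else 0."""
    return 0.5 * (np.sign(a) + np.sign(b)) * np.minimum(np.abs(a), np.abs(b))

def step(u, c):
    """One step for u_t + a u_x = 0 with a > 0 and periodic boundaries.

    c = a * dt / dx is the CFL number, assumed to lie in (0, 1].
    """
    d_minus = u - np.roll(u, 1)       # u_i - u_{i-1}
    d_plus = np.roll(u, -1) - u       # u_{i+1} - u_i
    # Interface flux (divided by a) at i+1/2: upwind value plus a
    # limited second-order correction.
    flux = u + 0.5 * (1.0 - c) * minmod(d_minus, d_plus)
    return u - c * (flux - np.roll(flux, 1))

# Hypothetical test case: a square pulse advected on a coarse periodic grid.
x = np.linspace(0.0, 1.0, 100, endpoint=False)
u0 = np.where((x > 0.2) & (x < 0.4), 1.0, 0.0)
u = u0.copy()
for _ in range(50):
    u = step(u, c=0.5)
```

Because the update is written in flux form, the total of `u` is conserved, and the limiter keeps the discontinuous profile free of new overshoots.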

26 Jun 2024

In a recent study [1], we demonstrated a multi-heterodyne differential absorption lidar (DIAL) for greenhouse gas monitoring utilizing solid targets. The multi-frequency absorption measurement was achieved using electro-optic dual-comb spectroscopy (DCS) with a minimal number of comb teeth to maximize the optical power per tooth. In this work, we examine the lidar's working principle and architecture. Additionally, we present measurements of atmospheric CO2 at 1572 nm over a 1.4 km optical path conducted in summer 2023. Furthermore, we show that, due to the high power per comb tooth, multi-frequency Doppler wind speed measurements can be performed using the DCS signal from aerosol backscattering. This enables simultaneous radial wind speed and path-average gas concentration measurements, offering promising prospects for novel concepts of tunable multi-frequency lidar systems capable of simultaneously monitoring wind speed and gas concentrations.

[1] W. Patiño Rosas and N. Cézard, "Greenhouse gas monitoring using an IPDA lidar based on a dual-comb spectrometer," Opt. Express 32, 13614-13627 (2024).

02 Oct 2025

In binary alloys, domain walls play a central role not only in phase transitions but also in physical properties, and they were at the heart of metallurgy research in the 1970s. Whereas it can be predicted, with simple physics arguments, that such domain walls cannot exist at the nanometer scale due to the typical lengths of the statistical fluctuations of the order parameter, here we show, with both experimental and numerical approaches, how orientational domain walls are formed in CuAu nanoparticle (NP) binary model systems. We demonstrate that the formation of domains in larger NPs is driven by elastic strain relaxation, which is not needed in smaller NPs where surface effects dominate. Finally, we show, through a continuous model of elasticity, how multivariant NPs tend to form an isotropic material.

21 Jul 2023

We present and analyse an architecture for a European-scale quantum network using satellite links to connect Quantum Cities, which are metropolitan quantum networks with minimal hardware requirements for the end users. Using NetSquid, a quantum network simulation tool based on discrete events, we assess and benchmark the performance of such a network linking distant locations in Europe in terms of quantum key distribution rates, considering realistic parameters for currently available or near-term technology. Our results highlight the key parameters and the limits of current satellite quantum communication links and can be used to assist the design of future missions. We also discuss the possibility of using high-altitude balloons as an alternative to satellites.

The Surrogate Modeling Toolbox (SMT) is an open-source Python package that offers a collection of surrogate modeling methods, sampling techniques, and a set of sample problems. This paper presents SMT 2.0, a major new release of SMT that introduces significant upgrades and new features to the toolbox. This release adds the capability to handle mixed-variable surrogate models and hierarchical variables. These types of variables are becoming increasingly important in several surrogate modeling applications. SMT 2.0 also improves SMT by extending sampling methods, adding new surrogate models, and computing variance and kernel derivatives for Kriging. This release also includes new functions to handle noisy data and to use multifidelity data. To the best of our knowledge, SMT 2.0 is the first open-source surrogate library to propose surrogate models for hierarchical and mixed inputs. This open-source software is distributed under the New BSD license.

Due to its all-weather and day-and-night capabilities, Synthetic Aperture Radar imagery is essential for various applications such as disaster management, earth monitoring, change detection and target recognition. However, the scarcity of labeled SAR data limits the performance of most deep learning algorithms. To address this issue, we propose a novel self-supervised learning framework based on masked Siamese Vision Transformers to create a General SAR Feature Extractor coined SAFE. Our method leverages contrastive learning principles to train a model on unlabeled SAR data, extracting robust and generalizable features. SAFE is applicable across multiple SAR acquisition modes and resolutions. We introduce tailored data augmentation techniques specific to SAR imagery, such as sub-aperture decomposition and despeckling. Comprehensive evaluations on various downstream tasks, including few-shot classification, segmentation, visualization, and pattern detection, demonstrate the effectiveness and versatility of the proposed approach. Our network competes with or surpasses other state-of-the-art methods in few-shot classification and segmentation tasks, even without being trained on the sensors used for the evaluation.

The combination of machine learning models with physical models is a recent research path to learn robust data representations. In this paper, we introduce p3VAE, a variational autoencoder that integrates prior physical knowledge about the latent factors of variation that are related to the data acquisition conditions. p3VAE combines standard neural network layers with non-trainable physics layers in order to partially ground the latent space to physical variables. We introduce a semi-supervised learning algorithm that strikes a balance between the machine learning part and the physics part. Experiments on simulated and real data sets demonstrate the benefits of our framework against competing physics-informed and conventional machine learning models, in terms of extrapolation capabilities and interpretability. In particular, we show that p3VAE naturally has interesting disentanglement capabilities. Our code and data have been made publicly available at this https URL

13 Dec 2024

With the rise of simulation-based inference (SBI) methods, simulations need to be fast as well as realistic. UFig v1 is a public Python package that generates simulated astronomical images with exceptional speed - taking approximately the same time as source extraction. This makes it particularly well-suited for simulation-based inference (SBI) methods where computational efficiency is crucial. To render an image, UFig requires a galaxy catalog and a description of the point spread function (PSF). It can also add background noise, sample stars using the Besançon model of the Milky Way, and run SExtractor to extract sources from the rendered image. The extracted sources can be matched to the intrinsic catalog, flagged based on SExtractor output and survey masks, and emulators can be used to bypass the image simulation and extraction steps. A first version of UFig was presented in Bergé et al. (2013) and the software has since been used and further developed in a variety of forward modelling applications.
