RheinMain University of Applied Sciences

We introduce SpERT, an attention model for span-based joint entity and relation extraction. Our key contribution is a light-weight reasoning on BERT embeddings, which features entity recognition and filtering, as well as relation classification with a localized, marker-free context representation. The model is trained using strong within-sentence negative samples, which are efficiently extracted in a single BERT pass. These aspects facilitate a search over all spans in the sentence.

In ablation studies, we demonstrate the benefits of pre-training, strong negative sampling and localized context. Our model outperforms prior work by up to 2.6% F1 score on several datasets for joint entity and relation extraction.

AI-based Classification of Customer Support Tickets: State of the Art and Implementation with AutoML

AI-based Classification of Customer Support Tickets: State of the Art and Implementation with AutoML

This research from RheinMain University of Applied Sciences investigates the applicability of AutoML for classifying customer support tickets using Google Vertex AI. It demonstrates that AutoML can achieve good classification performance (F1 scores up to 0.886) even with relatively small, well-prepared datasets, emphasizing that data quality and class balance are more critical than data volume for successful model training.

While head-mounted displays (HMDs) for Virtual Reality (VR) have become widely available in the consumer market, they pose a considerable obstacle for a realistic face-to-face conversation in VR since HMDs hide a significant portion of the participants faces. Even with image streams from cameras directly attached to an HMD, stitching together a convincing image of an entire face remains a challenging task because of extreme capture angles and strong lens distortions due to a wide field of view. Compared to the long line of research in VR, reconstruction of faces hidden beneath an HMD is a very recent topic of research. While the current state-of-the-art solutions demonstrate photo-realistic 3D reconstruction results, they require high-cost laboratory equipment and large computational costs. We present an approach that focuses on low-cost hardware and can be used on a commodity gaming computer with a single GPU. We leverage the benefits of an end-to-end pipeline by means of Generative Adversarial Networks (GAN). Our GAN produces a frontal-facing 2.5D point cloud based on a training dataset captured with an RGBD camera. In our approach, the training process is offline, while the reconstruction runs in real-time. Our results show adequate reconstruction quality within the 'learned' expressions. Expressions not learned by the network produce artifacts and can trigger the Uncanny Valley effect.

Recent advances in training large language models (LLMs) using massive

amounts of solely textual data lead to strong generalization across many

domains and tasks, including document-specific tasks. Opposed to that there is

a trend to train multi-modal transformer architectures tailored for document

understanding that are designed specifically to fuse textual inputs with the

corresponding document layout. This involves a separate fine-tuning step for

which additional training data is required. At present, no document

transformers with comparable generalization to LLMs are available That raises

the question which type of model is to be preferred for document understanding

tasks. In this paper we investigate the possibility to use purely text-based

LLMs for document-specific tasks by using layout enrichment. We explore drop-in

modifications and rule-based methods to enrich purely textual LLM prompts with

layout information. In our experiments we investigate the effects on the

commercial ChatGPT model and the open-source LLM Solar. We demonstrate that

using our approach both LLMs show improved performance on various standard

document benchmarks. In addition, we study the impact of noisy OCR and layout

errors, as well as the limitations of LLMs when it comes to utilizing document

layout. Our results indicate that layout enrichment can improve the performance

of purely text-based LLMs for document understanding by up to 15% compared to

just using plain document text. In conclusion, this approach should be

considered for the best model choice between text-based LLM or multi-modal

document transformers.

19 Nov 2024

Due to technological development, Augmented Reality (AR) can be applied in

different domains. However, innovative technologies refer to new interaction

paradigms, thus creating a new experience for the user. This so-called User

Experience (UX) is essential for developing and designing interactive products.

Moreover, UX must be measured to get insights into the user's perception and,

thus, to improve innovative technologies. We conducted a Systematic Literature

Review (SLR) to provide an overview of the current research concerning UX

evaluation of AR. In particular, we aim to identify (1) research referring to

UX evaluation of AR and (2) articles containing AR-specific UX models or

frameworks concerning the theoretical foundation. The SLR is a five-step

approach including five scopes. From a total of 498 records based on eight

search terms referring to two databases, 30 relevant articles were identified

and further analyzed. Results show that most approaches concerning UX

evaluation of AR are quantitative. In summary, five UX models/frameworks were

identified. Concerning the UX evaluation results of AR in Training and

Education, the UX was consistently positive. Negative aspects refer to errors

and deficiencies concerning the AR system and its functionality. No specific

metric for UX evaluation of AR in the field of Training and Education exists.

Only three AR-specific standardized UX questionnaires could be found. However,

the questionnaires do not refer to the field of Training and Education. Thus,

there is a lack of research in the field of UX evaluation of AR in Training and

Education.

We address the detection of emission reduction goals in corporate reports, an important task for monitoring companies' progress in addressing climate change. Specifically, we focus on the issue of integrating expert feedback in the form of labeled example passages into LLM-based pipelines, and compare the two strategies of (1) a dynamic selection of few-shot examples and (2) the automatic optimization of the prompt by the LLM itself. Our findings on a public dataset of 769 climate-related passages from real-world business reports indicate that automatic prompt optimization is the superior approach, while combining both methods provides only limited benefit. Qualitative results indicate that optimized prompts do indeed capture many intricacies of the targeted emission goal extraction task.

03 Jun 2025

Automated Program Repair (APR) proposes bug fixes to aid developers in

maintaining software. The state of the art in this domain focuses on using

LLMs, leveraging their strong capabilities to comprehend specifications in

natural language and to generate program code. Recent works have shown that

LLMs can be used to generate repairs. However, despite the APR community's

research achievements and several industry deployments in the last decade, APR

still lacks the capabilities to generalize broadly. In this work, we present an

intensive empirical evaluation of LLMs for generating patches. We evaluate a

diverse set of 13 recent models, including open ones (e.g., Llama 3.3, Qwen 2.5

Coder, and DeepSeek R1 (dist.)) and closed ones (e.g., o3-mini, GPT-4o, Claude

3.7 Sonnet, Gemini 2.0 Flash). In particular, we explore language-agnostic

repairs by utilizing benchmarks for Java (e.g., Defects4J), JavaScript (e.g.,

BugsJS), Python (e.g., BugsInPy), and PHP (e.g., BugsPHP). Besides the

generalization between different languages and levels of patch complexity, we

also investigate the effects of fault localization (FL) as a preprocessing step

and compare the progress for open vs closed models. Our evaluation represents a

snapshot of the current repair capabilities of the latest LLMs. Key results

include: (1) Different LLMs tend to perform best for different languages, which

makes it hard to develop cross-platform repair techniques with single LLMs. (2)

The combinations of models add value with respect to uniquely fixed bugs, so a

committee of expert models should be considered. (3) Under realistic

assumptions of imperfect FL, we observe significant drops in accuracy from the

usual practice of using perfect FL. Our findings and insights will help both

researchers and practitioners develop reliable and generalizable APR techniques

and evaluate them in realistic and fair environments.

The surge of digital documents in various formats, including less

standardized documents such as business reports and environmental assessments,

underscores the growing importance of Document Understanding. While Large

Language Models (LLMs) have showcased prowess across diverse natural language

processing tasks, their direct application to Document Understanding remains a

challenge. Previous research has demonstrated the utility of LLMs in this

domain, yet their significant computational demands make them challenging to

deploy effectively. Additionally, proprietary Blackbox LLMs often outperform

their open-source counterparts, posing a barrier to widespread accessibility.

In this paper, we delve into the realm of document understanding, leveraging

distillation methods to harness the power of large LLMs while accommodating

computational limitations. Specifically, we present a novel approach wherein we

distill document understanding knowledge from the proprietary LLM ChatGPT into

FLAN-T5. Our methodology integrates labeling and curriculum-learning mechanisms

to facilitate efficient knowledge transfer. This work contributes to the

advancement of document understanding methodologies by offering a scalable

solution that bridges the gap between resource-intensive LLMs and practical

applications. Our findings underscore the potential of distillation techniques

in facilitating the deployment of sophisticated language models in real-world

scenarios, thereby fostering advancements in natural language processing and

document comprehension domains.

Innovative technologies, such as Augmented Reality (AR), introduce new interaction paradigms, demanding the identification of software requirements during the software development process. In general, design recommendations are related to this, supporting the design of applications positively and meeting stakeholder needs. However, current research lacks context-specific AR design recommendations. This study addresses this gap by identifying and analyzing practical AR design recommendations relevant to the evaluation phase of the User-Centered Design (UCD) process. We rely on an existing dataset of Mixed Reality (MR) design recommendations. We applied a multi-method approach by (1) extending the dataset with AR-specific recommendations published since 2020, (2) classifying the identified recommendations using a NLP classification approach based on a pre-trained Sentence Transformer model, (3) summarizing the content of all topics, and (4) evaluating their relevance concerning AR in Corporate Training (CT) both based on a qualitative Round Robin approach with five experts. As a result, an updated dataset of 597 practitioner design recommendations, classified into 84 topics, is provided with new insights into their applicability in the context of AR in CT. Based on this, 32 topics with a total of 284 statements were evaluated as relevant for AR in CT. This research directly contributes to the authors' work for extending their AR-specific User Experience (UX) measurement approach, supporting AR authors in targeting the improvement of AR applications for CT scenarios.

20 Nov 2024

Measuring User Experience (UX) with standardized questionnaires is a widely used method. A questionnaire is based on different scales that represent UX factors and items. However, the questionnaires have no common ground concerning naming different factors and the items used to measure them. This study aims to identify general UX factors based on the formulation of the measurement items. Items from a set of 40 established UX questionnaires were analyzed by Generative AI (GenAI) to identify semantically similar items and to cluster similar topics. We used the LLM ChatGPT-4 for this analysis. Results show that ChatGPT-4 can classify items into meaningful topics and thus help to create a deeper understanding of the structure of the UX research field. In addition, we show that ChatGPT-4 can filter items related to a predefined UX concept out of a pool of UX items.

07 Feb 2025

In this work, we study the solution of shortest vector problems (SVPs)

arising in terms of learning with error problems (LWEs). LWEs are linear

systems of equations over a modular ring, where a perturbation vector is added

to the right-hand side. This type of problem is of great interest, since LWEs

have to be solved in order to be able to break lattice-based cryptosystems as

the Module-Lattice-Based Key-Encapsulation Mechanism published by NIST in 2024.

Due to this fact, several classical and quantum-based algorithms have been

studied to solve SVPs. Two well-known algorithms that can be used to simplify a

given SVP are the Lenstra-Lenstra-Lov\'asz (LLL) algorithm and the Block

Korkine-Zolotarev (BKZ) algorithm. LLL and BKZ construct bases that can be used

to compute or approximate solutions of the SVP. We study the performance of

both algorithms for SVPs with different sizes and modular rings. Thereby,

application of LLL or BKZ to a given SVP is considered to be successful if they

produce bases containing a solution vector of the SVP.

12 Feb 2025

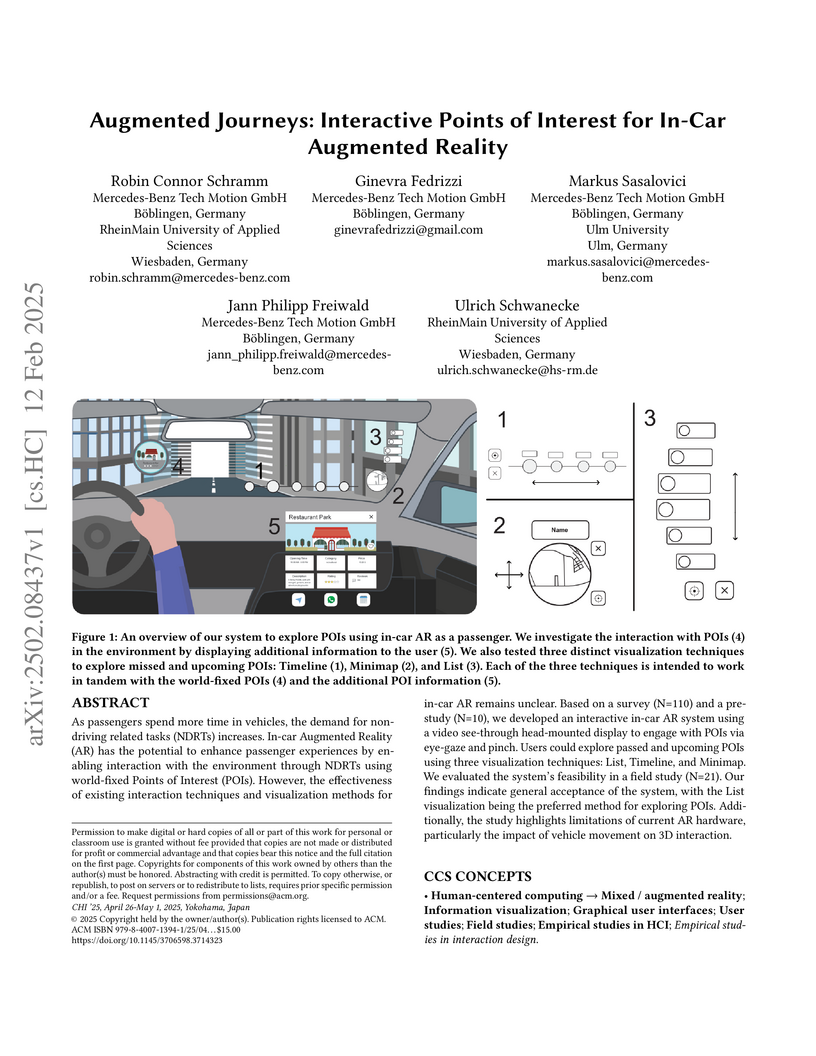

As passengers spend more time in vehicles, the demand for non-driving related

tasks (NDRTs) increases. In-car Augmented Reality (AR) has the potential to

enhance passenger experiences by enabling interaction with the environment

through NDRTs using world-fixed Points of Interest (POIs). However, the

effectiveness of existing interaction techniques and visualization methods for

in-car AR remains unclear. Based on a survey (N=110) and a pre-study (N=10), we

developed an interactive in-car AR system using a video see-through

head-mounted display to engage with POIs via eye-gaze and pinch. Users could

explore passed and upcoming POIs using three visualization techniques: List,

Timeline, and Minimap. We evaluated the system's feasibility in a field study

(N=21). Our findings indicate general acceptance of the system, with the List

visualization being the preferred method for exploring POIs. Additionally, the

study highlights limitations of current AR hardware, particularly the impact of

vehicle movement on 3D interaction.

12 Feb 2025

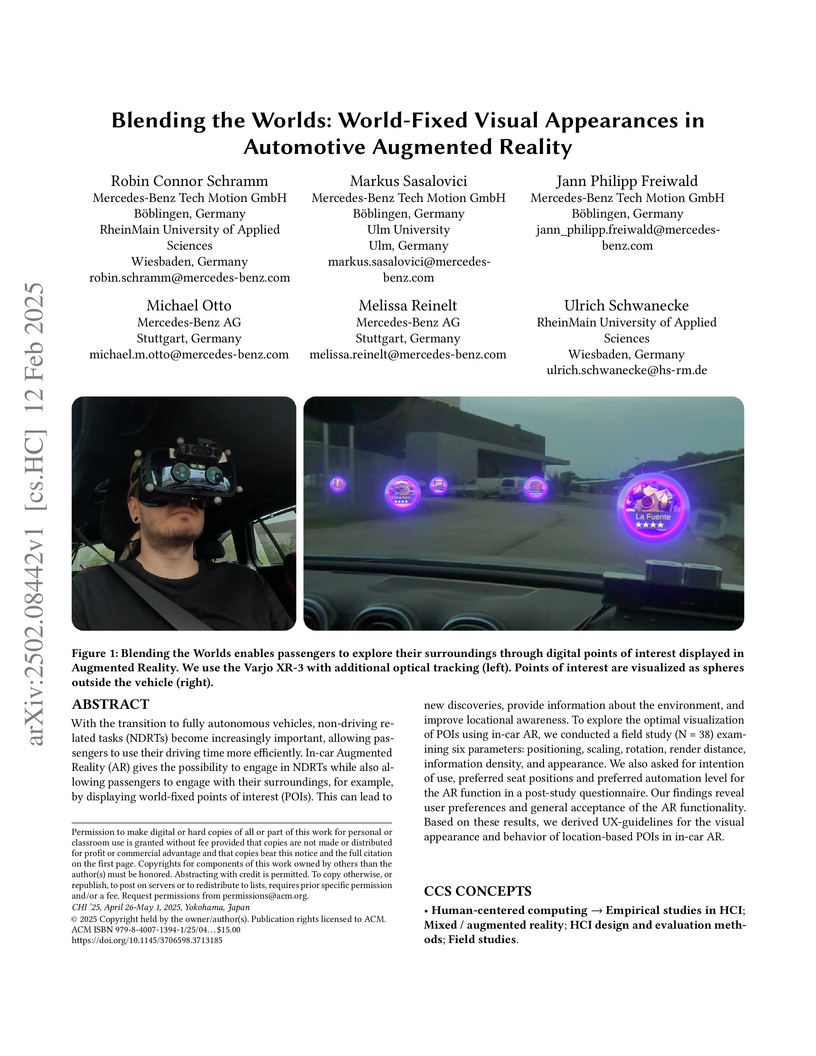

With the transition to fully autonomous vehicles, non-driving related tasks

(NDRTs) become increasingly important, allowing passengers to use their driving

time more efficiently. In-car Augmented Reality (AR) gives the possibility to

engage in NDRTs while also allowing passengers to engage with their

surroundings, for example, by displaying world-fixed points of interest (POIs).

This can lead to new discoveries, provide information about the environment,

and improve locational awareness. To explore the optimal visualization of POIs

using in-car AR, we conducted a field study (N = 38) examining six parameters:

positioning, scaling, rotation, render distance, information density, and

appearance. We also asked for intention of use, preferred seat positions and

preferred automation level for the AR function in a post-study questionnaire.

Our findings reveal user preferences and general acceptance of the AR

functionality. Based on these results, we derived UX-guidelines for the visual

appearance and behavior of location-based POIs in in-car AR.

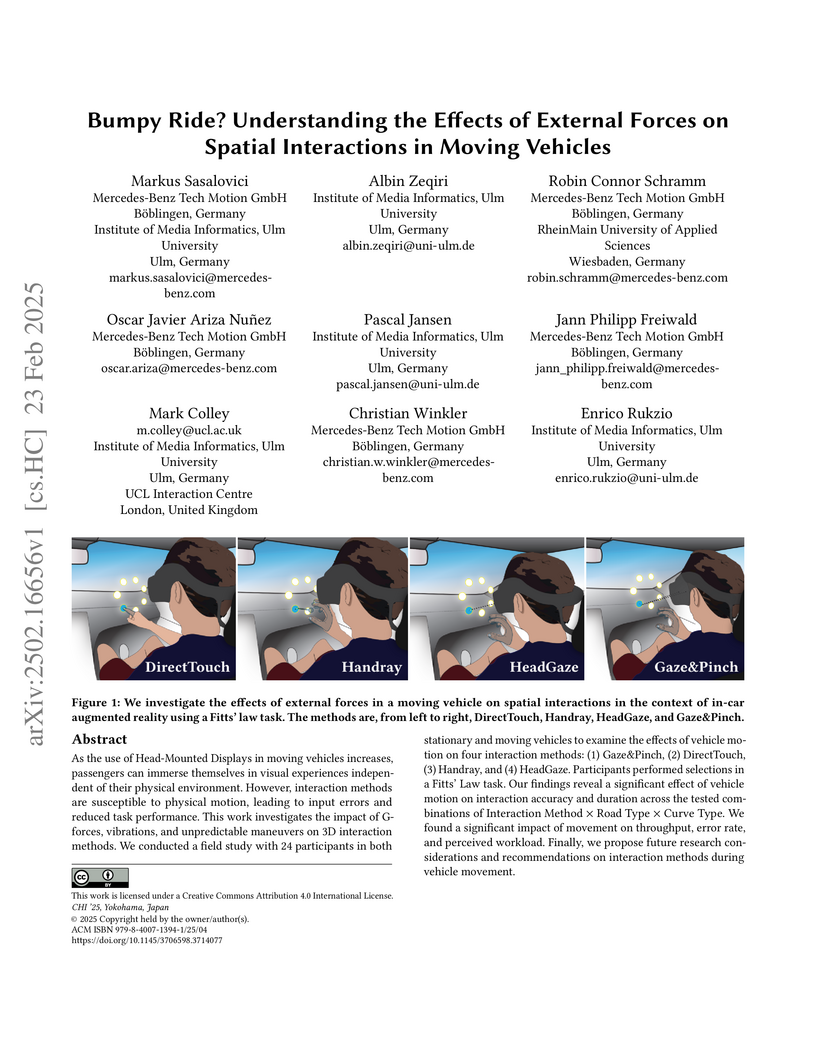

23 Feb 2025

As the use of Head-Mounted Displays in moving vehicles increases, passengers

can immerse themselves in visual experiences independent of their physical

environment. However, interaction methods are susceptible to physical motion,

leading to input errors and reduced task performance. This work investigates

the impact of G-forces, vibrations, and unpredictable maneuvers on 3D

interaction methods. We conducted a field study with 24 participants in both

stationary and moving vehicles to examine the effects of vehicle motion on four

interaction methods: (1) Gaze&Pinch, (2) DirectTouch, (3) Handray, and (4)

HeadGaze. Participants performed selections in a Fitts' Law task. Our findings

reveal a significant effect of vehicle motion on interaction accuracy and

duration across the tested combinations of Interaction Method x Road Type x

Curve Type. We found a significant impact of movement on throughput, error

rate, and perceived workload. Finally, we propose future research

considerations and recommendations on interaction methods during vehicle

movement.

In this paper we present the results of a user study on exploratory search

activities in a social science digital library. We conducted a user study with

32 participants with a social sciences background -- 16 postdoctoral

researchers and 16 students -- who were asked to solve a task on searching

related work to a given topic. The exploratory search task was performed in a

10-minutes time slot. The use of certain search activities is measured and

compared to gaze data recorded with an eye tracking device. We use a novel tree

graph representation to visualise the users' search patterns and introduce a

way to combine multiple search session trees. The tree graph representation is

capable to create one single tree for multiple users and to identify common

search patterns. In addition, the information behaviour of students and

postdoctoral researchers is being compared. The results show that search

activities on the stratagem level are frequently utilised by both user groups.

The most heavily used search activities were keyword search, followed by

browsing through references and citations, and author searching. The eye

tracking results showed an intense examination of documents metadata,

especially on the level of citations and references. When comparing the group

of students and postdoctoral researchers we found significant differences

regarding gaze data on the area of the journal name of the seed document. In

general, we found a tendency of the postdoctoral researchers to examine the

metadata records more intensively with regards to dwell time and the number of

fixations.

Entity linking, the task of mapping textual mentions to known entities, has recently been tackled using contextualized neural networks. We address the question whether these results -- reported for large, high-quality datasets such as Wikipedia -- transfer to practical business use cases, where labels are scarce, text is low-quality, and terminology is highly domain-specific. Using an entity linking model based on BERT, a popular transformer network in natural language processing, we show that a neural approach outperforms and complements hand-coded heuristics, with improvements of about 20% top-1 accuracy. Also, the benefits of transfer learning on a large corpus are demonstrated, while fine-tuning proves difficult. Finally, we compare different BERT-based architectures and show that a simple sentence-wise encoding (Bi-Encoder) offers a fast yet efficient search in practice.

Many recent approaches towards neural information retrieval mitigate their

computational costs by using a multi-stage ranking pipeline. In the first

stage, a number of potentially relevant candidates are retrieved using an

efficient retrieval model such as BM25. Although BM25 has proven decent

performance as a first-stage ranker, it tends to miss relevant passages. In

this context we propose CoRT, a simple neural first-stage ranking model that

leverages contextual representations from pretrained language models such as

BERT to complement term-based ranking functions while causing no significant

delay at query time. Using the MS MARCO dataset, we show that CoRT

significantly increases the candidate recall by complementing BM25 with missing

candidates. Consequently, we find subsequent re-rankers achieve superior

results with less candidates. We further demonstrate that passage retrieval

using CoRT can be realized with surprisingly low latencies.

We present a joint model for entity-level relation extraction from documents.

In contrast to other approaches - which focus on local intra-sentence mention

pairs and thus require annotations on mention level - our model operates on

entity level. To do so, a multi-task approach is followed that builds upon

coreference resolution and gathers relevant signals via multi-instance learning

with multi-level representations combining global entity and local mention

information. We achieve state-of-the-art relation extraction results on the

DocRED dataset and report the first entity-level end-to-end relation extraction

results for future reference. Finally, our experimental results suggest that a

joint approach is on par with task-specific learning, though more efficient due

to shared parameters and training steps.

We address contextualized code retrieval, the search for code snippets

helpful to fill gaps in a partial input program. Our approach facilitates a

large-scale self-supervised contrastive training by splitting source code

randomly into contexts and targets. To combat leakage between the two, we

suggest a novel approach based on mutual identifier masking, dedentation, and

the selection of syntax-aligned targets. Our second contribution is a new

dataset for direct evaluation of contextualized code retrieval, based on a

dataset of manually aligned subpassages of code clones. Our experiments

demonstrate that our approach improves retrieval substantially, and yields new

state-of-the-art results for code clone and defect detection.

We address the challenge of building domain-specific knowledge models for industrial use cases, where labelled data and taxonomic information is initially scarce. Our focus is on inductive link prediction models as a basis for practical tools that support knowledge engineers with exploring text collections and discovering and linking new (so-called open-world) entities to the knowledge graph. We argue that - though neural approaches to text mining have yielded impressive results in the past years - current benchmarks do not reflect the typical challenges encountered in the industrial wild properly. Therefore, our first contribution is an open benchmark coined IRT2 (inductive reasoning with text) that (1) covers knowledge graphs of varying sizes (including very small ones), (2) comes with incidental, low-quality text mentions, and (3) includes not only triple completion but also ranking, which is relevant for supporting experts with discovery tasks.

We investigate two neural models for inductive link prediction, one based on end-to-end learning and one that learns from the knowledge graph and text data in separate steps. These models compete with a strong bag-of-words baseline. The results show a significant advance in performance for the neural approaches as soon as the available graph data decreases for linking. For ranking, the results are promising, and the neural approaches outperform the sparse retriever by a wide margin.

There are no more papers matching your filters at the moment.