Semnan University

20 Sep 2025

We consider an extension to the Standard Model (SM) with four new fields including scalar(S), spinor(ψ1,2) and vector(Vμ) under new U(1) gauge group in the hidden sector. The scalar particle interacts with the SM Higgs particle and is an intermediary between the dark and the SM parts . Our dark matter(DM) candidate is the spinor particle. We show that the model successfully explains the relic density of the DM in the universe and evades the strong bounds from direct detection experiments while respecting the theoretical constraints and the vacuum stability conditions. In addition, we study the hierarchy problem within the Veltman approach by solving the renormalization group equations at one-loop. We demonstrate that the addition of the new fields contributes to the Veltman parameters which in turn results in satisfying the Veltman conditions much lower than the Planck scale. For the our DM model we find one representative point in the viable parameter space which satisfy also the Veltman conditions at Λ = 1 TeV. Therefore, the presence of the extra particle solves the fine-tuning problem of the Higgs mass.

Decentralized optimization strategies are helpful for various applications,

from networked estimation to distributed machine learning. This paper studies

finite-sum minimization problems described over a network of nodes and proposes

a computationally efficient algorithm that solves distributed convex problems

and optimally finds the solution to locally non-convex objective functions. In

contrast to batch gradient optimization in some literature, our algorithm is on

a single-time scale with no extra inner consensus loop. It evaluates one

gradient entry per node per time. Further, the algorithm addresses link-level

nonlinearity representing, for example, logarithmic quantization of the

exchanged data or clipping of the exchanged data bits. Leveraging

perturbation-based theory and algebraic Laplacian network analysis proves

optimal convergence and dynamics stability over time-varying and switching

networks. The time-varying network setup might be due to packet drops or link

failures. Despite the nonlinear nature of the dynamics, we prove exact

convergence in the face of odd sign-preserving sector-bound nonlinear data

transmission over the links. Illustrative numerical simulations further

highlight our contributions.

Distributed resource allocation (DRA) is fundamental to modern networked systems, spanning applications from economic dispatch in smart grids to CPU scheduling in data centers. Conventional DRA approaches require reliable communication, yet real-world networks frequently suffer from link failures, packet drops, and communication delays due to environmental conditions, network congestion, and security threats.

We introduce a novel resilient DRA algorithm that addresses these critical challenges, and our main contributions are as follows: (1) guaranteed constraint feasibility at all times, ensuring resource-demand balance even during algorithm termination or network disruption; (2) robust convergence despite sector-bound nonlinearities at nodes/links, accommodating practical constraints like quantization and saturation; and (3) optimal performance under merely uniformly-connected networks, eliminating the need for continuous connectivity.

Unlike existing approaches that require persistent network connectivity and provide only asymptotic feasibility, our graph-theoretic solution leverages network percolation theory to maintain performance during intermittent disconnections. This makes it particularly valuable for mobile multi-agent systems where nodes frequently move out of communication range. Theoretical analysis and simulations demonstrate that our algorithm converges to optimal solutions despite heterogeneous time delays and substantial link failures, significantly advancing the reliability of distributed resource allocation in practical network environments.

28 Nov 2021

According to AdS/DL (Anti de Sitter/ Deep Learning) correspondence given by \cite{Has}, in this paper with a data-driven approach and leveraging holography principle we have designed an artificial neural network architecture to produce metric field of planar BTZ and quintessence black holes. Data has been collected by choosing minimally coupled massive scalar field with quantum fluctuations and try to process two emergent and ground-truth metrics versus the holographic parameter which plays role of depth of the neural network. Loss or error function which shows rate of deviation of these two metrics in presence of penalty regularization term reaches to its minimum value when values of the learning rate approach to the observed steepest gradient point. Values of the regularization or penalty term of the quantum scalar field has critical role to matching this two mentioned metric.

Also we design an algorithm which helps us to find optimum value for learning parameter and at last we understand that loss function convergence heavily depends on the number of epochs and learning rate.

15 Sep 2024

We investigate the behavior of various measures of quantum coherence and

quantum correlation in the spin-1/2 Heisenberg XYZ model with added

Dzyaloshinsky-Moriya (DM) and Kaplan--Shekhtman--Entin-Wohlman--Aharony (KSEA)

interactions at a thermal regime described by a Gibbs density operator. We aim

to understand the restricted hierarchical classification of different quantum

resources, where Bell nonlocality ⊆ quantum steering ⊆

quantum entanglement ⊆ quantum discord ⊆ quantum coherence.

This hierarchy highlights the increasingly stringent conditions required as we

move from quantum coherence to more specific quantum phenomena. In order to

enhance quantum coherence, quantum correlation, and fidelity of teleportation,

our analysis encompasses the effects of independently provided sinusoidal

magnetic field control as well as DM and KSEA interactions on the considered

system. The results reveal that enhancing the entanglement or quantum

correlation of the channel does not always guarantee successful teleportation

or even an improvement in teleportation fidelity. Thus, the relationship

between teleportation fidelity and the channel's underlying quantum properties

is intricate. Our study provides valuable insights into the complex interplay

of quantum coherence and correlation hierarchy, offering potential applications

for quantum communication and information processing technologies.

Distributed optimization finds applications in large-scale machine learning, data processing and classification over multi-agent networks. In real-world scenarios, the communication network of agents may encounter latency that may affect the convergence of the optimization protocol. This paper addresses the case where the information exchange among the agents (computing nodes) over data-transmission channels (links) might be subject to communication time-delays, which is not well addressed in the existing literature. Our proposed algorithm improves the state-of-the-art by handling heterogeneous and arbitrary but bounded and fixed (time-invariant) delays over general strongly-connected directed networks. Arguments from matrix theory, algebraic graph theory, and augmented consensus formulation are applied to prove the convergence to the optimal value. Simulations are provided to verify the results and compare the performance with some existing delay-free algorithms.

Distributed allocation finds applications in many scenarios including CPU scheduling, distributed energy resource management, and networked coverage control. In this paper, we propose a fast convergent optimization algorithm with a tunable rate using the signum function. The convergence rate of the proposed algorithm can be managed by changing two parameters. We prove convergence over uniformly-connected multi-agent networks. Therefore, the solution converges even if the network loses connectivity at some finite time intervals. The proposed algorithm is all-time feasible, implying that at any termination time of the algorithm, the resource-demand feasibility holds. This is in contrast to asymptotic feasibility in many dual formulation solutions (e.g., ADMM) that meet resource-demand feasibility over time and asymptotically.

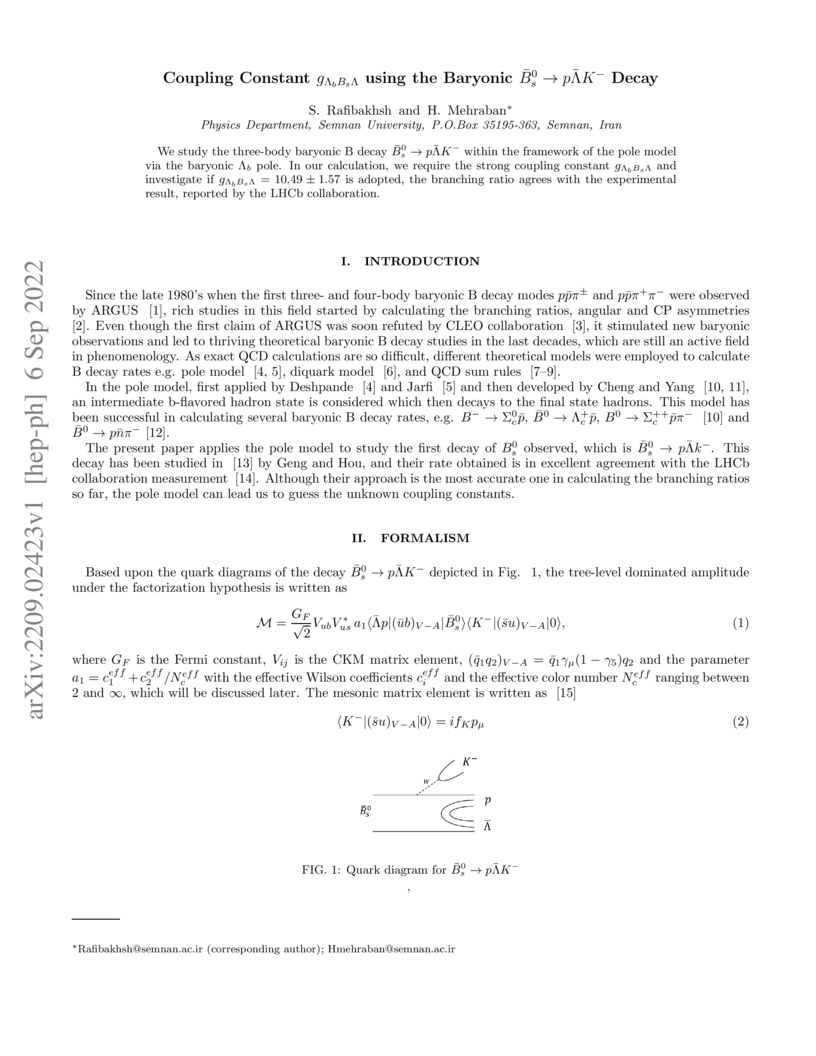

06 Sep 2022

We study the three-body baryonic B decay Bˉs0→pΛˉK− within the framework of the pole model via the baryonic Λb pole. In our calculation, we require the strong coupling constant gΛbBsΛ and investigate if gΛbBsΛ=10.49±1.57 is adopted, the branching ratio agrees with the experimental result, reported by the LHCb collaboration.

29 Sep 2025

In this paper, we introduce and explore new classes of S-acts over a monoid S, namely, strongly Hopfian and strongly co-Hopfian acts, as well as their weaker counterparts, Hopfian and co-Hopfian acts. We investigate the relationships between these newly defined structures and well-studied classes of S-acts, including Noetherian, Artinian, injective, projective, quasi-injective, and quasi-projective acts. A key result shows that, under certain conditions, a quasi-projective (respectively quasi-injective) S-act that is strongly co-Hopfian (respectively strongly Hopfian) is also strongly Hopfian (respectively strongly co-Hopfian). Moreover, we provide a variety of examples and structural results concerning the behavior of subacts and quotient acts of strongly Hopfian and strongly co-Hopfian S-acts, further elucidating the internal structure and interrelationships within this extended framework.

11 Aug 2020

The Λ cold dark matter (ΛCDM) scenario well describes the

Universe at large scales, but shows some serious difficulties at small scales:

the inner dark matter (DM) density profiles of spiral galaxies generally appear

to be cored, without the r−1 predicted by N-body simulations in the above

scenario.

In a more physical context, the baryons in the galaxy might backreact and

erase the original cusp through supernova explosions. Before that this effect

be investigated, it is important to determine how wide and frequent the

discrepancy between observed and N-body predicted profiles is and what its

features are. We used more than 3200 quite extended rotation curves (RCs) of

good quality and high resolution of disk systems. The curves cover all

magnitude ranges. These RCs were condensed into 26 coadded RCs, each of them

built with individual RCs of galaxies of similar luminosity and morphology. We

performed mass models of these 26 RCs using the Navarro-Frenk-White (NFW)

profile for the contribution of the DM halo to the circular velocity and the

exponential Freeman disk for that of the stellar disk. The fits are generally

poor in all the 26 cases: in several cases, we find χred2>2. Moreover,

the best-fitting values of three parameters of the model (c, MD, and

Mvir) combined with those of their 1σ uncertainty clearly

contradict well-known expectations of the ΛCDM scenario. We also tested

the scaling relations that exist in spirals with the fitting outcome: the

modeling does not account for these scaling relations.

Therefore, NFW halo density law cannot account for the kinematics of the

whole family of disk galaxies. It is therefore mandatory for the ΛCDM

scenario in any disk galaxy of any luminosity to transform initial cusps into

the observed cores.

05 Mar 2025

Meta-analysis employs statistical techniques to synthesize the results of individual studies, providing an estimate of the overall effect size for a specific outcome of interest. The direction and magnitude of this estimate, along with its confidence interval, offer valuable insights into the underlying phenomenon or relationship. As an extension of standard meta-analysis, meta-regression analysis incorporates multiple moderators -- capturing key study characteristics -- into the model to explain heterogeneity in true effect sizes across studies. This study provides a comprehensive overview of meta-analytic procedures tailored to economic research, addressing key challenges such as between-study heterogeneity, publication bias, and effect size dependence. It equips researchers with essential tools and insights to conduct rigorous and informative meta-analyses in economics and related fields.

01 Nov 2014

We define the concepts of weakly precious and precious rings which generalize the notions of weakly clean and nil-clean rings. We obtain some fundamental properties of these rings. We also obtain certain subclasses of such rings and then offer new kinds of weakly clean rings and nil-clean rings.

02 Jan 2024

Studying the relations between entanglement and coherence is essential in many quantum information applications. For this, we consider the concurrence, intrinsic concurrence and first-order coherence, and evaluate the proposed trade-off relations between them. In particular, we study the temporal evolution of a general two-qubit XYZ Heisenberg model with asymmetric spin-orbit interaction under decoherence and analyze the trade-off relations of quantum resource theory. For XYZ Heisenberg model, we confirm that the trade-off relation between intrinsic concurrence and first-order coherence holds. Furthermore, we show that the lower bound of intrinsic concurrence is universally valid, but the upper bound is generally not. These relations in Heisenberg models can provide a way to explore how quantum resources are distributed in spins, which may inspire future applications in quantum information processing.

21 Apr 2020

The dynamic behavior of the cylindrical shell can be predicted by more

simplified beam models for a wide range of applications. The present paper

deals with finding design conditions in which the cylindrical shell performs

like a beam.

Zero-Shot Learning (ZSL) has rapidly advanced in recent years. Towards

overcoming the annotation bottleneck in the Sign Language Recognition (SLR), we

explore the idea of Zero-Shot Sign Language Recognition (ZS-SLR) with no

annotated visual examples, by leveraging their textual descriptions. In this

way, we propose a multi-modal Zero-Shot Sign Language Recognition (ZS-SLR)

model harnessing from the complementary capabilities of deep features fused

with the skeleton-based ones. A Transformer-based model along with a C3D model

is used for hand detection and deep features extraction, respectively. To make

a trade-off between the dimensionality of the skeletonbased and deep features,

we use an Auto-Encoder (AE) on top of the Long Short Term Memory (LSTM)

network. Finally, a semantic space is used to map the visual features to the

lingual embedding of the class labels, achieved via the Bidirectional Encoder

Representations from Transformers (BERT) model. Results on four large-scale

datasets, RKS-PERSIANSIGN, First-Person, ASLVID, and isoGD, show the

superiority of the proposed model compared to state-of-the-art alternatives in

ZS-SLR.

26 Feb 2018

The paper "Hyper-rational choice theory" from Semnan University introduces a new concept of rationality that incorporates an individual's consideration for the profit or loss of other actors, alongside their own. This extended framework reinterprets classic game theory scenarios like the Prisoner's Dilemma, showing how cooperation or spiteful behaviors can emerge as hyper-rational outcomes, providing a more comprehensive model for human interactive decisions.

Federated Learning marks a turning point in the implementation of

decentralized machine learning (especially deep learning) for wireless devices

by protecting users' privacy and safeguarding raw data from third-party access.

It assigns the learning process independently to each client. First, clients

locally train a machine learning model based on local data. Next, clients

transfer local updates of model weights and biases (training data) to a server.

Then, the server aggregates updates (received from clients) to create a global

learning model. However, the continuous transfer between clients and the server

increases communication costs and is inefficient from a resource utilization

perspective due to the large number of parameters (weights and biases) used by

deep learning models. The cost of communication becomes a greater concern when

the number of contributing clients and communication rounds increases. In this

work, we propose a novel framework, FedZip, that significantly decreases the

size of updates while transferring weights from the deep learning model between

clients and their servers. FedZip implements Top-z sparsification, uses

quantization with clustering, and implements compression with three different

encoding methods. FedZip outperforms state-of-the-art compression frameworks

and reaches compression rates up to 1085x, and preserves up to 99% of bandwidth

and 99% of energy for clients during communication.

In this study, considering the importance of how to exploit renewable natural resources, we analyze a fishing model with nonlinear harvesting function in which the players at the equilibrium point do a static game with complete information that, according to the calculations, will cause a waste of energy for both players and so the selection of cooperative strategies along with the agreement between the players is the result of this research.

04 Dec 2024

Mobile target tracking is crucial in various applications such as surveillance and autonomous navigation. This study presents a decentralized tracking framework utilizing a Consensus-Based Estimation Filter (CBEF) integrated with the Nearly-Constant-Velocity (NCV) model to predict a moving target's state. The framework facilitates agents in a network to collaboratively estimate the target's position by sharing local observations and achieving consensus despite communication constraints and measurement noise. A saturation-based filtering technique is employed to enhance robustness by mitigating the impact of noisy sensor data. Simulation results demonstrate that the proposed method effectively reduces the Mean Squared Estimation Error (MSEE) over time, indicating improved estimation accuracy and reliability. The findings underscore the effectiveness of the CBEF in decentralized environments, highlighting its scalability and resilience in the presence of uncertainties.

Decentralized algorithms have gained substantial interest owing to

advancements in cloud computing, Internet of Things (IoT), intelligent

transportation networks, and parallel processing over sensor networks. The

convergence of such algorithms is directly related to specific properties of

the underlying network topology. Specifically, the clustering coefficient is

known to affect, for example, the controllability/observability and the

epidemic growth over networks. In this work, we study the effects of the

clustering coefficient on the convergence rate of networked optimization

approaches. In this regard, we model the structure of large-scale distributed

systems by random scale-free (SF) and clustered scale-free (CSF) networks and

compare the convergence rate by tuning the network clustering coefficient. This

is done by keeping other relevant network properties (such as power-law degree

distribution, number of links, and average degree) unchanged. Monte-Carlo-based

simulations are used to compare the convergence rate over many trials of SF

graph topologies. Furthermore, to study the convergence rate over real case

studies, we compare the clustering coefficient of some real-world networks with

the eigenspectrum of the underlying network (as a measure of convergence rate).

The results interestingly show higher convergence rate over low-clustered

networks. This is significant as one can improve the learning rate of many

existing decentralized machine-learning scenarios by tuning the network

clustering.

There are no more papers matching your filters at the moment.