Terra Quantum AG

11 May 2025

In the rapidly evolving field of quantum computing, tensor networks serve as an important tool due to their multifaceted utility. In this paper, we review the diverse applications of tensor networks and show that they are an important instrument for quantum computing. Specifically, we summarize the application of tensor networks in various domains of quantum computing, including simulation of quantum computation, quantum circuit synthesis, quantum error correction and mitigation, and quantum machine learning. Finally, we provide an outlook on the opportunities and the challenges of the tensor-network techniques.

Image classification, a pivotal task in multiple industries, faces computational challenges due to the burgeoning volume of visual data. This research addresses these challenges by introducing two quantum machine learning models that leverage the principles of quantum mechanics for effective computations. Our first model, a hybrid quantum neural network with parallel quantum circuits, enables the execution of computations even in the noisy intermediate-scale quantum era, where circuits with a large number of qubits are currently infeasible. This model demonstrated a record-breaking classification accuracy of 99.21% on the full MNIST dataset, surpassing the performance of known quantum-classical models, while having eight times fewer parameters than its classical counterpart. Also, the results of testing this hybrid model on Medical MNIST (classification accuracy over 99%) and on CIFAR-10 (classification accuracy over 82%) serve as evidence of the generalizability of the model and highlight the efficiency of quantum layers in distinguishing common features of input data. Our second model introduces a hybrid quantum neural network with a Quanvolutional layer, reducing image resolution via a convolution process. The model matches the performance of its classical counterpart, having four times fewer trainable parameters, and outperforms a classical model with equal weight parameters. These models represent advancements in quantum machine learning research and illuminate the path towards more accurate image classification systems.

30 Mar 2025

We present the Quantum Memory Matrix (QMM) hypothesis, which addresses the longstanding Black Hole Information Paradox rooted in the apparent conflict between Quantum Mechanics (QM) and General Relativity (GR). This paradox raises the question of how information is preserved during black hole formation and evaporation, given that Hawking radiation appears to result in information loss, challenging unitarity in quantum mechanics. The QMM hypothesis proposes that space-time itself acts as a dynamic quantum information reservoir, with quantum imprints encoding information about quantum states and interactions directly into the fabric of space-time at the Planck scale. By defining a quantized model of space-time and mechanisms for information encoding and retrieval, QMM aims to conserve information in a manner consistent with unitarity during black hole processes. We develop a mathematical framework that includes space-time quantization, definitions of quantum imprints, and interactions that modify quantum state evolution within this structure. Explicit expressions for the interaction Hamiltonians are provided, demonstrating unitarity preservation in the combined system of quantum fields and the QMM. This hypothesis is compared with existing theories, including the holographic principle, black hole complementarity, and loop quantum gravity, noting its distinctions and examining its limitations. Finally, we discuss observable implications of QMM, suggesting pathways for experimental evaluation, such as potential deviations from thermality in Hawking radiation and their effects on gravitational wave signals. The QMM hypothesis aims to provide a pathway towards resolving the Black Hole Information Paradox while contributing to broader discussions in quantum gravity and cosmology.

The Berezinskii-Kosterlitz-Thouless (BKT) transition is the prototype of a phase transition driven by the formation and interaction of topological defects in two-dimensional (2D) systems. In typical models these are vortices: above a transition temperature T_BKT vortices are free, while below it they are confined. In this work we extend the concept of the BKT transition to quantum systems in two dimensions. In particular, we demonstrate that a zero-temperature quantum BKT phase transition, driven by a coupling constant, can occur in 2D models governed by an effective gauge field theory with a diverging dielectric constant. One particular example is that of a compact U(1) gauge theory with a diverging dielectric constant, where the quantum BKT transition is induced by non-relativistic, purely 2D magnetic monopoles, which can also be viewed as electric vortices. These quantum BKT transitions have the same diverging exponent z as the quantum Griffiths transition but have nothing to do with disorder.

Predicting solar panel power output is crucial for advancing the transition to renewable energy but is complicated by the variable and non-linear nature of solar energy. This is influenced by numerous meteorological factors, geographical positioning, and photovoltaic cell properties, posing significant challenges to forecasting accuracy and grid stability. Our study introduces a suite of solutions centered around hybrid quantum neural networks designed to tackle these complexities. The first proposed model, the Hybrid Quantum Long Short-Term Memory, surpasses all tested models by achieving mean absolute errors and mean squared errors that are more than 40% lower. The second proposed model, the Hybrid Quantum Sequence-to-Sequence neural network, once trained, predicts photovoltaic power with 16% lower mean absolute error for arbitrary time intervals without the need for prior meteorological data, highlighting its versatility. Moreover, our hybrid models perform better even when trained on limited datasets, underlining their potential utility in data-scarce scenarios. These findings represent progress towards resolving time series prediction challenges in energy forecasting through hybrid quantum models, showcasing the transformative potential of quantum machine learning in catalyzing the renewable energy transition.

Image recognition is one of the primary applications of machine learning algorithms. Nevertheless, machine learning models used in modern image recognition systems consist of millions of parameters that usually require significant computational time to be adjusted. Moreover, adjustment of model hyperparameters leads to additional overhead. Because of this, new developments in machine learning models and hyperparameter optimization techniques are required. This paper presents a quantum-inspired hyperparameter optimization technique and a hybrid quantum-classical machine learning model for supervised learning. We benchmark our hyperparameter optimization method over standard black-box objective functions and observe performance improvements in the form of reduced expected run times and fitness in response to the growth in the size of the search space. We test our approaches in a car image classification task and demonstrate a full-scale implementation of the hybrid quantum ResNet model with the tensor train hyperparameter optimization. Our tests show a qualitative and quantitative advantage over the corresponding standard classical tabular grid search approach used with a deep neural network ResNet34. A classification accuracy of 0.97 was obtained by the hybrid model after 18 iterations, whereas the classical model achieved an accuracy of 0.92 after 75 iterations.

11 Nov 2025

University of Florence; Max Planck Institute for Chemical Physics of Solids; Leibniz Institute for Solid State and Materials Research Dresden (IFW Dresden); Istituto dei Sistemi Complessi, Consiglio Nazionale delle Ricerche; Terra Quantum AG; University of Rome "La Sapienza"; Technische Universität Dresden; University of Naples "Federico II"

Recent advances in the manipulation of complex oxide layers, particularly the fabrication of atomically thin cuprate superconducting films via molecular beam epitaxy, have revealed new ways in which nanoscale engineering can govern superconductivity and its interwoven electronic orders. In parallel, the creation of twisted cuprate heterostructures through cryogenic stacking techniques marks a pivotal step forward, exploiting cuprate superconductors to deepen our understanding of exotic quantum states and propel next-generation quantum technologies. This review explores over three decades of research in the emerging field of cuprate twistronics, examining both experimental breakthroughs and theoretical progress. It also highlights the methodologies poised to surmount the outstanding challenges in leveraging these complex quantum materials, underscoring their potential to expand the frontiers of quantum science and technology.

04 Apr 2025

We extend the Quantum Memory Matrix (QMM) framework, originally developed to reconcile quantum mechanics and general relativity by treating space-time as a dynamic information reservoir, to incorporate the full suite of Standard Model gauge interactions. In this discretized, Planck-scale formulation, each space-time cell possesses a finite-dimensional Hilbert space that acts as a local memory, or quantum imprint, for matter and gauge field configurations. We focus on embedding non-Abelian SU(3)_c (quantum chromodynamics) and SU(2)_L x U(1)_Y (electroweak interactions) into QMM by constructing gauge-invariant imprint operators for quarks, gluons, electroweak bosons, and the Higgs mechanism. This unified approach naturally enforces unitarity by allowing black hole horizons, or any high-curvature region, to store and later retrieve quantum information about color and electroweak charges, thereby preserving subtle non-thermal correlations in evaporation processes. Moreover, the discretized nature of QMM imposes a Planck-scale cutoff, potentially taming UV divergences and modifying running couplings at trans-Planckian energies. We outline major challenges, such as the precise formulation of non-Abelian imprint operators and the integration of QMM with loop quantum gravity, as well as possible observational strategies - ranging from rare decay channels to primordial black hole evaporation spectra - that could provide indirect probes of this discrete, memory-based view of quantum gravity and the Standard Model.

14 Jul 2024

Finding the distribution of the velocities and pressures of a fluid by solving the Navier-Stokes equations is a principal task in the chemical, energy, and pharmaceutical industries, as well as in mechanical engineering and the design of pipeline systems. With existing solvers, such as OpenFOAM and Ansys, simulations of fluid dynamics in intricate geometries are computationally expensive and require re-simulation whenever the geometric parameters or the initial and boundary conditions are altered. Physics-informed neural networks are a promising tool for simulating fluid flows in complex geometries, as they can adapt to changes in the geometry and mesh definitions, allowing for generalization across fluid parameters and transfer learning across different shapes. We present a hybrid quantum physics-informed neural network that simulates laminar fluid flows in 3D Y-shaped mixers. Our approach combines the expressive power of a quantum model with the flexibility of a physics-informed neural network, resulting in a 21% higher accuracy compared to a purely classical neural network. Our findings highlight the potential of machine learning approaches, and in particular hybrid quantum physics-informed neural networks, for complex shape optimization tasks in computational fluid dynamics. By improving the accuracy of fluid simulations in complex geometries, our research using hybrid quantum models contributes to the development of more efficient and reliable fluid dynamics solvers.

We propose a fundamental duality between the geometric properties of spacetime and the informational content of quantum fields. Specifically, we establish that the curvature of spacetime is directly related to the entanglement entropy of quantum states, with geometric invariants mapping to informational measures. This framework modifies Einstein's field equations by introducing an informational stress-energy tensor derived from quantum entanglement entropy. Our findings have implications for black hole thermodynamics, cosmology, and quantum gravity, suggesting that quantum information fundamentally shapes the structure of spacetime. We incorporate this informational stress-energy tensor into Einstein's field equations, leading to modified spacetime geometry, particularly in regimes of strong gravitational fields, such as near black holes. We compute corrections to Newton's constant (G) due to entanglement entropy contributions from various quantum fields and explore the consequences for black hole thermodynamics and cosmology. These corrections include explicit dependence on fundamental constants (h-bar, c, and k_B), ensuring dimensional consistency in our calculations. Our results indicate that quantum information plays a crucial role in gravitational dynamics, providing new insights into the nature of spacetime and potential solutions to long-standing challenges in quantum gravity.

Cancer is one of the leading causes of death worldwide. It is caused by a variety of genetic mutations, which makes every instance of the disease unique. Since chemotherapy can have extremely severe side effects, each patient requires a personalized treatment plan. Finding the dosages that maximize the beneficial effects of the drugs and minimize their adverse side effects is vital. Deep neural networks automate and improve drug selection. However, they require a lot of data to be trained on. Therefore, there is a need for machine-learning approaches that require less data. Hybrid quantum neural networks were shown to provide a potential advantage in problems where training data availability is limited. We propose a novel hybrid quantum neural network for drug response prediction, based on a combination of convolutional, graph convolutional, and deep quantum neural layers of 8 qubits with 363 layers. We test our model on the reduced Genomics of Drug Sensitivity in Cancer dataset and show that the hybrid quantum model outperforms its classical analog by 15% in predicting IC50 drug effectiveness values. The proposed hybrid quantum machine learning model is a step towards deep quantum data-efficient algorithms with thousands of quantum gates for solving problems in personalized medicine, where data collection is a challenge.

14 Jun 2025

Primordial black holes (PBHs) remain one of the most intriguing candidates for dark matter and a unique probe of physics at extreme curvatures. Here, we examine their formation in a bounce cosmology when the post-crunch universe inherits a highly inhomogeneous distribution of imprint entropy from the Quantum Memory Matrix (QMM). Within QMM, every Planck-scale cell stores quantum information about infalling matter; the surviving entropy field $S(x)$ contributes an effective dust component $T^{\mathrm{QMM}}_{\mu\nu} = \lambda \left[ (\nabla_\mu S)(\nabla_\nu S) - \tfrac{1}{2} g_{\mu\nu} (\nabla S)^2 + \dots \right]$ that deepens curvature wherever $S$ is large. We show that (i) reasonable bounce temperatures and a QMM coupling $\lambda \sim \mathcal{O}(1)$ naturally amplify these "information wells" until the density contrast exceeds the critical value $\delta_c \sim 0.3$; (ii) the resulting PBH mass spectrum spans $10^{-16}$ to $10^{3}$ solar masses, matching current microlensing and PTA windows; and (iii) the same mechanism links PBH abundance to earlier QMM explanations of dark matter and the cosmic matter-antimatter imbalance. Observable signatures include a mild blue tilt in small-scale power, characteristic $\mu$-distortions, and an enhanced integrated Sachs-Wolfe signal - all of which will be tested by upcoming CMB, PTA, and lensing surveys.

23 May 2023

The solution of computational fluid dynamics problems is one of the most computationally hard tasks, especially in the case of complex geometries and turbulent flow regimes. We propose to use Tensor Train (TT) methods, which possess logarithmic complexity in problem size and have great similarities with quantum algorithms in the structure of data representation. We develop the Tensor train Finite Element Method, TetraFEM, and the explicit numerical scheme for the solution of the incompressible Navier-Stokes equation via Tensor Trains. We test this approach on the simulation of liquids mixing in a T-shape mixer, which, to our knowledge, was done for the first time using tensor methods in such non-trivial geometries. As expected, we achieve exponential compression in memory of all FEM matrices and demonstrate an exponential speed-up compared to the conventional FEM implementation on dense meshes. In addition, we discuss the possibility of extending this method to a quantum computer to solve more complex problems. This paper is based on work we conducted for Evonik Industries AG.
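As a rough illustration of the kind of compression the abstract above refers to, the following NumPy sketch applies a generic TT-SVD to a smooth function sampled on 2^d grid points. It is not the TetraFEM scheme itself: the function names `tt_svd` and `tt_to_vector`, the truncation tolerance, and the test function are assumptions chosen for illustration.

```python
import numpy as np


def tt_svd(vec, d, tol=1e-10):
    """Split a length-2**d vector into d TT cores of shape (r_prev, 2, r_next).

    Generic TT-SVD sketch; `tol` is an illustrative relative cutoff
    on singular values, not a value taken from the paper.
    """
    cores = []
    r_prev = 1
    rest = np.asarray(vec, dtype=float).reshape(1, -1)
    for _ in range(d - 1):
        # Group the current rank index with the next binary index.
        rest = rest.reshape(r_prev * 2, -1)
        u, s, vt = np.linalg.svd(rest, full_matrices=False)
        rank = max(1, int(np.sum(s > tol * s[0])))
        cores.append(u[:, :rank].reshape(r_prev, 2, rank))
        # Carry the remaining factor on to the next step.
        rest = s[:rank, None] * vt[:rank]
        r_prev = rank
    cores.append(rest.reshape(r_prev, 2, 1))
    return cores


def tt_to_vector(cores):
    """Contract the TT cores back into a dense vector."""
    out = cores[0]
    for core in cores[1:]:
        out = np.tensordot(out, core, axes=([-1], [0]))
    return out.reshape(-1)


d = 10
grid = np.linspace(0.0, 1.0, 2**d)
values = np.exp(grid)  # smooth, separable test function (assumption)
cores = tt_svd(values, d)
n_params = sum(core.size for core in cores)
max_error = np.max(np.abs(tt_to_vector(cores) - values))
print(f"dense entries: {values.size}, TT parameters: {n_params}, max error: {max_error:.2e}")
```

For this separable test function the TT ranks stay small, so storage grows with d rather than with 2^d. Less structured data would generally need larger ranks and would compress less well.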

Accurate prediction and stabilization of blast furnace temperatures are crucial for optimizing the efficiency and productivity of steel production. Traditional methods often struggle with the complex and non-linear nature of the temperature fluctuations within blast furnaces. This paper proposes a novel approach that combines hybrid quantum machine learning with pulverized coal injection control to address these challenges. By integrating classical machine learning techniques with quantum computing algorithms, we aim to enhance predictive accuracy and achieve more stable temperature control. For this we utilized a unique prediction-based optimization method. Our method leverages quantum-enhanced feature space exploration and the robustness of classical regression models to forecast temperature variations and optimize pulverized coal injection values. Our results demonstrate a significant improvement in prediction accuracy of over 25 percent, and our solution improved temperature stability to within ±7.6 degrees of the target range, from the earlier variance of ±50 degrees, highlighting the potential of hybrid quantum machine learning models in industrial steel production applications.

04 Feb 2025

An essential component of many sophisticated metaheuristics for solving combinatorial optimization problems is some variation of a local search routine that iteratively searches for a better solution within a chosen set of immediate neighbors. The size l of this set is limited due to the computational costs required to run the method on classical processing units. We present a qubit-efficient variational quantum algorithm that implements a quantum version of local search with only ⌈log₂ l⌉ qubits and, therefore, can potentially work with classically intractable neighborhood sizes when realized on near-term quantum computers. Increasing the amount of quantum resources employed in the algorithm allows for a larger neighborhood size, improving the quality of obtained solutions. This trade-off is crucial for present and near-term quantum devices characterized by a limited number of logical qubits. Numerically simulating our algorithm, we successfully solved the largest graph coloring instance that was tackled by a quantum method. This achievement highlights the algorithm's potential for solving large-scale combinatorial optimization problems on near-term quantum devices.
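To make the qubit count quoted above concrete, here is a small sketch that evaluates the ceiling of log2(l) for a few neighborhood sizes. The helper name `qubits_for_neighborhood` and the example sizes are hypothetical; only the formula comes from the abstract.

```python
def qubits_for_neighborhood(size: int) -> int:
    """Return ceil(log2(size)) using exact integer arithmetic."""
    if size < 1:
        raise ValueError("neighborhood size must be at least 1")
    # (size - 1).bit_length() equals ceil(log2(size)) for size >= 1
    # and avoids floating-point rounding for large sizes.
    return (size - 1).bit_length()


for size in (8, 1_000, 1_000_000):
    print(size, qubits_for_neighborhood(size))
```

The logarithmic scaling is what allows the neighborhood to grow quickly with the register: under this formula each additional qubit doubles the neighborhood size that can be addressed.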

08 Jul 2024

The primary objective of this paper is to conduct a comparative analysis between two Machine Learning approaches: Tensor Networks (TN) and Variational Quantum Classifiers (VQC). While both approaches share similarities in their representation of the Hilbert space using a logarithmic number of parameters, they diverge in the manifolds they cover. Thus, the aim is to evaluate and compare the expressibility and trainability of these approaches. By conducting this comparison, we can gain insights into potential areas where quantum advantage may be found. Our findings indicate that VQC exhibits advantages in terms of speed and accuracy when dealing with data characterized by a small number of features. However, for high-dimensional data, TN surpasses VQC in overall classification accuracy. We believe that this disparity is primarily attributed to challenges encountered during the training of quantum circuits. We want to stress that in this article, we focus on only one particular task and do not conduct thorough averaging of the results. Consequently, we recommend considering the results of this article as a unique case without excessive generalization.

30 Jun 2021

State-of-the-art noisy intermediate-scale quantum (NISQ) devices, although imperfect, enable computational tasks that are manifestly beyond the capabilities of modern classical supercomputers. However, present quantum computations are restricted to exploring specific simplified protocols, whereas the implementation of full-scale quantum algorithms aimed at solving concrete large-scale problems arising in data analysis and numerical modelling remains a challenge. Here we introduce and implement a hybrid quantum algorithm for solving linear systems of equations with exponential speedup, utilizing quantum phase estimation, one of the exemplary core protocols for quantum computing. We theoretically introduce classes of linear systems that are suitable for current-generation quantum machines and experimentally solve a 2^17-dimensional problem on superconducting IBMQ devices, a record for linear system solution on quantum computers. The considered large-scale algorithm shows superiority over conventional solutions, demonstrates advantages of quantum data processing via phase estimation and holds high promise for meeting practically relevant challenges.

The question "What is real?" can be traced back to the shadows in Plato's cave. Two thousand years later, Rene Descartes lacked knowledge about arguing against an evil deceiver feeding us the illusion of sensation. Descartes' epistemological concept later led to various theories of sensory experiences. The concept of "illusionism", proposing that even the very conscious experience we have is an illusion, is not only a red-pill scenario found in the 1999 science fiction movie "The Matrix" but is also a philosophical concept promoted by modern thinkers, most prominently by Daniel Dennett. Reflection upon a possible simulation and our perceived reality was beautifully visualized in "The Matrix", bringing the old ideas of Descartes to coffee houses around the world. Irish philosopher Bishop Berkeley was the father of what was later coined as "subjective idealism", basically stating that "what you perceive is real". With the advent of quantum technologies based on the control of individual fundamental particles, the question of whether our universe is a simulation isn't just intriguing. Our ever-advancing understanding of fundamental physical processes will likely lead us to build quantum computers utilizing quantum effects for simulating nature quantum-mechanically in all complexity, as famously envisioned by Richard Feynman. In this article, we outline constraints on the limits of computability and predictability in/of the universe, which we then use to design experiments allowing for first conclusions as to whether we participate in a simulation chain. Eventually, in a simulation in which the computer simulating a universe is governed by the same physical laws as the simulation, the exhaustion of computational resources will halt all simulations down the simulation chain unless an external programmer intervenes, which we may be able to observe.

Quantum machine learning has become an area of growing interest but has certain theoretical and hardware-specific limitations. Notably, the problem of vanishing gradients, or barren plateaus, renders the training impossible for circuits with high qubit counts, imposing a limit on the number of qubits that data scientists can use for solving problems. Independently, angle-embedded supervised quantum neural networks were shown to produce truncated Fourier series with a degree directly dependent on two factors: the depth of the encoding and the number of parallel qubits the encoding is applied to. The degree of the Fourier series limits the model expressivity. This work introduces two new architectures whose Fourier degrees grow exponentially: the sequential and parallel exponential quantum machine learning architectures. This is done by efficiently using the available Hilbert space when encoding, increasing the expressivity of the quantum encoding. Therefore, the exponential growth allows staying at the low-qubit limit to create highly expressive circuits avoiding barren plateaus. Practically, the parallel exponential architecture was shown to outperform the existing linear architectures by reducing their final mean square error value by up to 44.7% in a one-dimensional test problem. Furthermore, the feasibility of this technique was also shown on a trapped ion quantum processing unit.
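The difference between linear and exponential growth of the Fourier degree described above can be sketched by enumerating which frequencies a set of encoding gates can reach. The sketch assumes the standard picture in which an encoding rotation with angle a·x contributes a frequency of -a, 0, or +a to the model output. The powers-of-three scaling and the helper name `accessible_frequencies` are assumptions made for illustration and are not taken from the abstract.

```python
from itertools import product


def accessible_frequencies(scales):
    """Enumerate integer frequencies reachable by encoding gates.

    Each gate with angle scale a contributes -a, 0, or +a, so the
    spectrum is the set of all signed sums over the scales.
    """
    return sorted(
        {
            sum(c * a for c, a in zip(coeffs, scales))
            for coeffs in product((-1, 0, 1), repeat=len(scales))
        }
    )


n = 4  # number of encoding gates (illustrative)
linear = accessible_frequencies([1] * n)
exponential = accessible_frequencies([3**k for k in range(n)])
print("linear degree:", max(linear), "distinct frequencies:", len(linear))
print("exponential degree:", max(exponential), "distinct frequencies:", len(exponential))
```

With identical scales, the highest frequency grows only as the number of encoding gates. With the assumed powers-of-three scales, every integer frequency up to (3^n - 1)/2 becomes reachable using the same number of gates.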

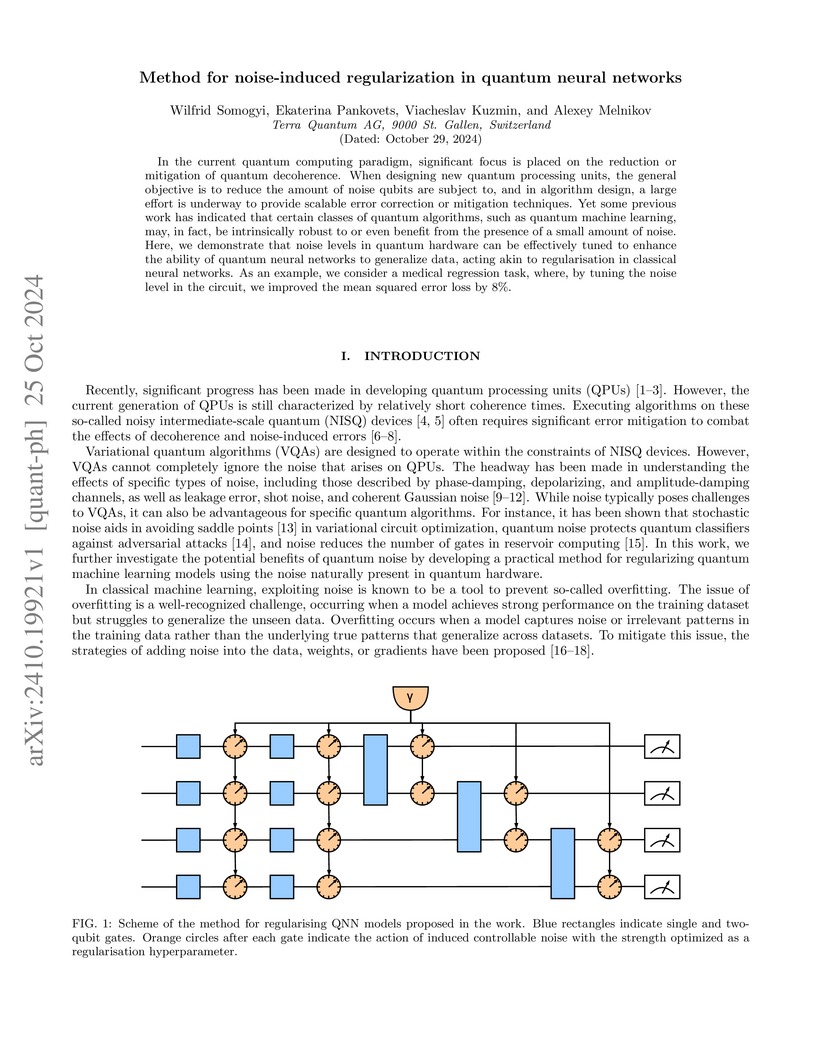

In the current quantum computing paradigm, significant focus is placed on the reduction or mitigation of quantum decoherence. When designing new quantum processing units, the general objective is to reduce the amount of noise qubits are subject to, and in algorithm design, a large effort is underway to provide scalable error correction or mitigation techniques. Yet some previous work has indicated that certain classes of quantum algorithms, such as quantum machine learning, may, in fact, be intrinsically robust to or even benefit from the presence of a small amount of noise. Here, we demonstrate that noise levels in quantum hardware can be effectively tuned to enhance the ability of quantum neural networks to generalize data, acting akin to regularisation in classical neural networks. As an example, we consider a medical regression task, where, by tuning the noise level in the circuit, we improved the mean squared error loss by 8%.
