Ask or search anything...

Harvard University

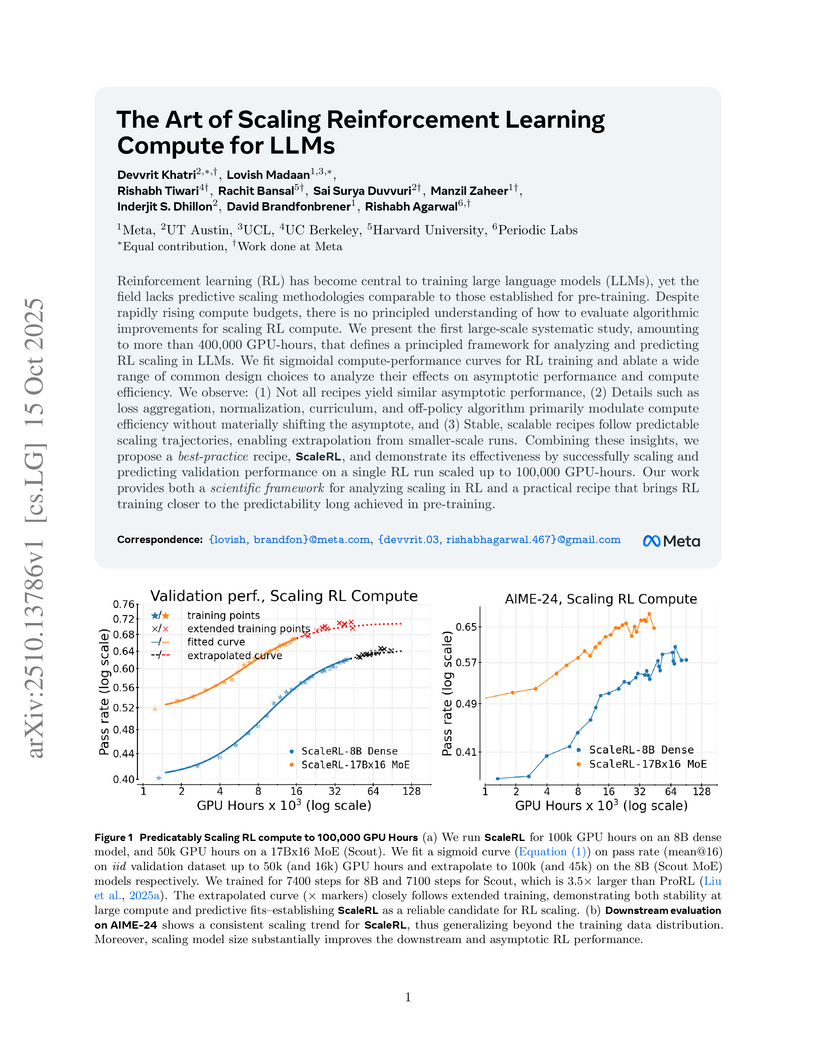

Harvard UniversityResearchers introduced a predictive framework for Reinforcement Learning (RL) in Large Language Models (LLMs) using a sigmoidal compute-performance curve, enabling performance extrapolation from smaller runs. Their ScaleRL recipe, demonstrated over 100,000 GPU-hours, achieves an asymptotic reward of 0.61 on verifiable math problems, outperforming established methods while exhibiting predictable scaling across model size, generation length, and multi-task settings.

View blogResearchers at Harvard University developed power sampling, a training-free method leveraging the Metropolis-Hastings algorithm to sample from a sharpened distribution of a base large language model. This technique unlocks latent reasoning capabilities, achieving single-shot performance comparable to or exceeding reinforcement learning post-training methods across various tasks, while also preserving generation diversity.

View blogResearchers from the University of Massachusetts Amherst and Harvard University developed BOTS, a method combining Batch Bayesian Optimization with Extended Thompson Sampling, to optimize adaptive interventions in severely episode-limited reinforcement learning environments. It effectively overcomes the myopic nature of standard contextual bandits and achieves superior performance in mobile health simulations, even with very few trials.

View blogA computational tool, AcrosticSleuth, identifies and probabilistically ranks acrostics in multilingual corpora, achieving F1 scores up to 0.66 on known Russian acrostics. This tool, developed by researchers from Tufts, UW-Madison, UT Austin, and Harvard, also led to the discovery of previously unrecognized acrostics in significant historical texts, including one in Thomas Hobbes' *The Elements of Law*.

View blogA comprehensive survey from an international research consortium led by Peking University examines Large Language Model (LLM) agents through a methodology-centered taxonomy, analyzing their construction, collaboration mechanisms, and evolution while providing a unified architectural framework for understanding agent systems across different application domains.

View blogResearchers at Harvard University, Google DeepMind, and collaborating institutions reverse-engineered successful Implicit Chain-of-Thought (ICoT) Transformers to understand why standard models fail at multi-digit multiplication. They discovered that ICoT models establish long-range dependencies through attention trees for partial product caching and represent digits using Fourier bases, findings that led to a simple auxiliary loss intervention enabling a standard Transformer to achieve 99% accuracy on 4x4 multiplication.

View blogEquilibrium Matching (EqM) introduces a generative modeling framework that learns a time-invariant equilibrium gradient of an implicit energy landscape, enabling high-fidelity image generation without explicit time-conditioning. The method achieves an FID of 1.90 on ImageNet 256x256, outperforming leading diffusion and flow-based models, and supports flexible optimization-based sampling and intrinsic capabilities like out-of-distribution detection and image composition.

View blogOpenAI researchers and collaborators evaluate GPT-5's utility in accelerating scientific research across diverse fields, demonstrating its capacity for contributing to known result rediscovery, literature search, collaborative problem-solving, and the generation of novel scientific findings. The model proved to compress research timelines from months to hours and provided verifiable new insights in mathematics, physics, and biology.

View blogThis paper introduces Energy-Based Transformers (EBTs), a new class of models that enable scalable System 2 thinking through unsupervised learning by reframing prediction as an optimization process over a learned energy function. EBTs demonstrate superior scaling rates compared to standard Transformers in language and video, improve performance by up to 29% with increased inference-time computation, and achieve better generalization on out-of-distribution data across diverse modalities.

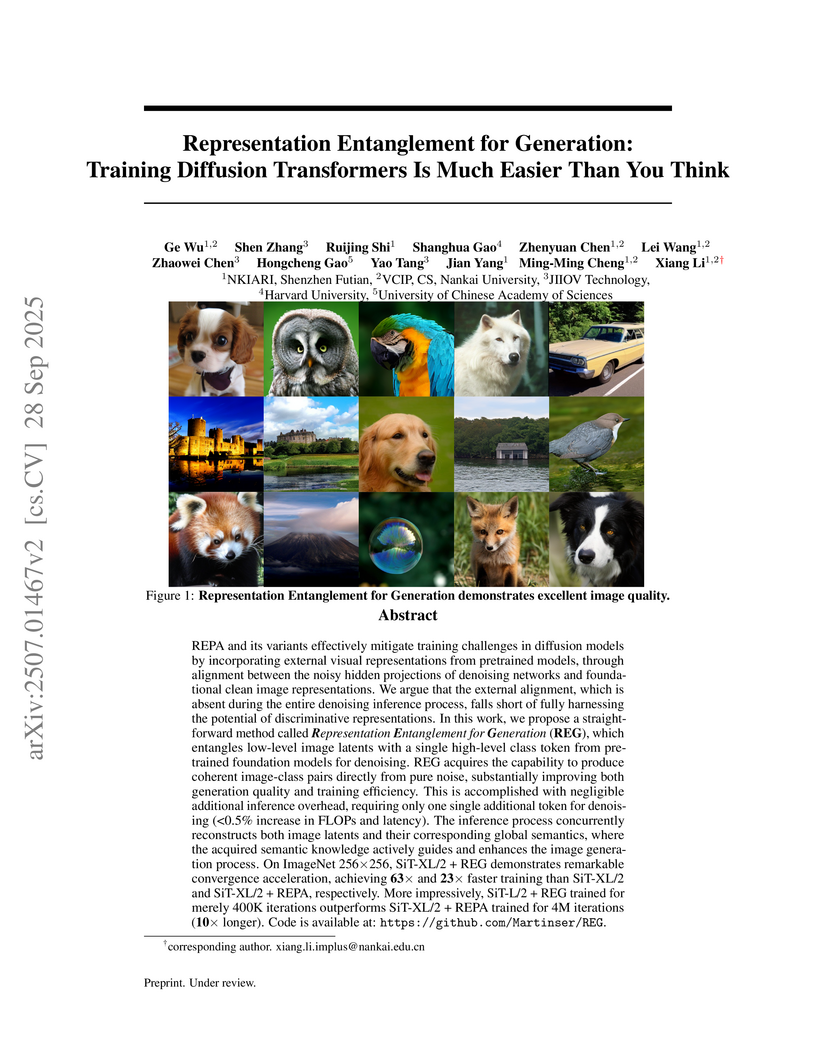

View blogRepresentation Entanglement for Generation (REG) introduces an image-class denoising paradigm, achieving up to 63x faster training for Diffusion Transformers while setting a new FID record of 1.8 on ImageNet 256x256 by structurally integrating a high-level class token with image latents.

View blogTOOLUNIVERSE establishes an open-source ecosystem that standardizes AI-tool interaction and empowers AI scientists to autonomously discover, create, optimize, and compose scientific tools. The platform successfully demonstrated its utility in a therapeutic discovery case study, identifying a novel drug candidate for hypercholesterolemia with validated properties.

View blogA theoretical framework, supported by empirical simulations, clarifies how reinforcement learning (RL) applied after next-token prediction facilitates reasoning in Large Language Models (LLMs). The work shows RL effectively up-samples rare, high-quality chain-of-thought demonstrations, leading to rapid generalization and a concurrent increase in response length.

View blogFlexMDMs enable discrete diffusion models to generate sequences of variable lengths and perform token insertions by extending the stochastic interpolant framework with a novel joint interpolant for continuous-time Markov chains. The approach more accurately models length distributions, boosts planning task success rates by nearly 60%, and improves performance on math and code infilling tasks by up to 13% after efficient retrofitting of existing large-scale MDMs.

View blogMatryoshka Representation Learning from researchers at the University of Washington, Google Research, and Harvard University develops a method to encode multi-fidelity information within a single embedding, allowing truncated prefixes to serve as progressively finer-grained representations. This approach enables substantial efficiency gains in large-scale classification and retrieval tasks, achieving comparable accuracy to full-dimensional models with significantly reduced computational cost and memory footprint.

View blog

University of Amsterdam

University of Amsterdam

University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign

Duke University

Duke University

University of Texas at Austin

University of Texas at Austin

Argonne National Laboratory

Argonne National Laboratory

University of Washington

University of Washington

Google DeepMind

Google DeepMind University of Waterloo

University of Waterloo

MIT

MIT

University of Cambridge

University of Cambridge

Chinese Academy of Sciences

Chinese Academy of Sciences

New York University

New York University