Tianjin University

30 Jan 2024

The transport of water and protons in the cathode catalyst layer (CCL) of proton exchange membrane (PEM) fuel cells is critical for cell performance, but the underlying mechanism is still unclear. Herein, the ionomer structure and the distribution/transport characteristics of water and protons in CCLs are investigated via all-atom molecular dynamics simulations. The results show that at low water contents, isolated water clusters form in ionomer pores, while proton transport is mainly via the charged sites of the ionomer side chains and the Grotthuss mechanism. Moreover, with increasing water content, water clusters are interconnected to form continuous water channels, which provide effective paths for proton transfer via the vehicular and Grotthuss mechanisms. Increasing the ionomer mass content can enhance the dense arrangement of the ionomer, which in turn increases the density of charge sites and improves the proton transport efficiency. When the ionomer mass content is high, the clustering effect reduces the space for water diffusion, increases the proton transport path, and finally decreases the proton transport efficiency. By providing physics insights into the proton transport mechanism, this study is helpful for the structural design and performance improvement of CCLs of PEM fuel cells.

Existing deep learning-based shadow removal methods still produce images with shadow remnants. These shadow remnants typically exist in homogeneous regions with low-intensity values, making them untraceable in the existing image-to-image mapping paradigm. We observe that shadows mainly degrade images at the image-structure level (in which humans perceive object shapes and continuous colors). Hence, in this paper, we propose to remove shadows at the image structure level. Based on this idea, we propose a novel structure-informed shadow removal network (StructNet) to leverage the image-structure information to address the shadow remnant problem. Specifically, StructNet first reconstructs the structure information of the input image without shadows and then uses the restored shadow-free structure prior to guiding the image-level shadow removal. StructNet contains two main novel modules: (1) a mask-guided shadow-free extraction (MSFE) module to extract image structural features in a non-shadow-to-shadow directional manner, and (2) a multi-scale feature & residual aggregation (MFRA) module to leverage the shadow-free structure information to regularize feature consistency. In addition, we also propose to extend StructNet to exploit multi-level structure information (MStructNet), to further boost the shadow removal performance with minimum computational overheads. Extensive experiments on three shadow removal benchmarks demonstrate that our method outperforms existing shadow removal methods, and our StructNet can be integrated with existing methods to improve them further.

18 May 2025

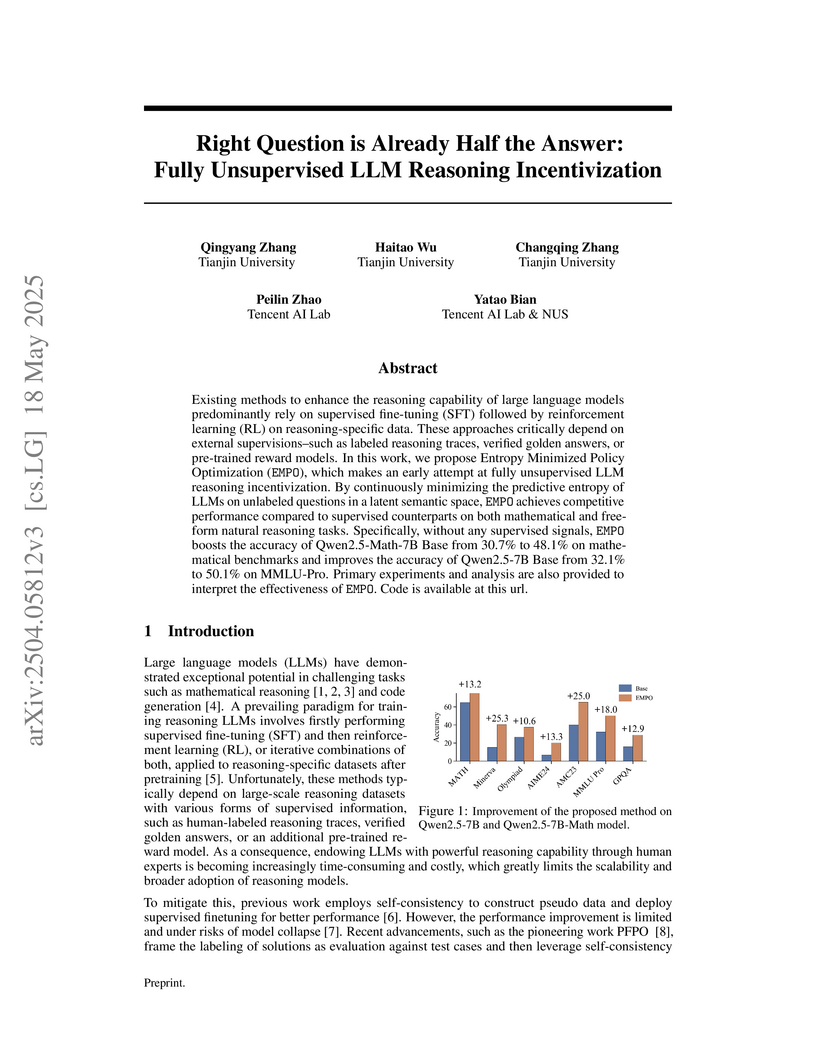

A fully unsupervised method called EMPO enhances Large Language Model reasoning by minimizing semantic entropy as an intrinsic reward signal. The approach improves mathematical reasoning accuracy on models like Qwen2.5-Math-7B from 30.7% to 48.1% and boosts natural reasoning on benchmarks like MMLU-Pro for Qwen2.5-7B from 32.1% to 50.1%, achieving performance comparable to or exceeding supervised methods.

Embodied-R1 introduces a unified 'pointing' representation for general robotic manipulation, leveraging a reinforced fine-tuning curriculum to bridge high-level vision-language understanding with low-level action primitives. The model achieves state-of-the-art results on 11 diverse spatial and pointing benchmarks and demonstrates robust zero-shot generalization with an 87.5% success rate on real-world robot tasks.

Researchers from Nanyang Technological University and collaborators developed HOUYI, a systematic black-box prompt injection attack framework for LLM-integrated applications, demonstrating 86.1% susceptibility across 36 real-world commercial applications. The study revealed severe consequences including intellectual property theft via prompt leaking and financial losses from prompt abusing, with a daily financial loss of $259.2 estimated for a single compromised application.

23 Sep 2025

Tianjin UniversityHuawei Noah’s Ark Lab Chinese Academy of Sciences

Chinese Academy of Sciences Imperial College London

Imperial College London Sun Yat-Sen University

Sun Yat-Sen University University of Manchester

University of Manchester University College LondonTongji University

University College LondonTongji University Shanghai Jiao Tong University

Shanghai Jiao Tong University Nanjing University

Nanjing University Tsinghua University

Tsinghua University Peking University

Peking University King’s College LondonTU DarmstadtPengcheng LaboratoryHong Kong University of Science and Technology (Guangzhou)

King’s College LondonTU DarmstadtPengcheng LaboratoryHong Kong University of Science and Technology (Guangzhou)

Chinese Academy of Sciences

Chinese Academy of Sciences Imperial College London

Imperial College London Sun Yat-Sen University

Sun Yat-Sen University University of Manchester

University of Manchester University College LondonTongji University

University College LondonTongji University Shanghai Jiao Tong University

Shanghai Jiao Tong University Nanjing University

Nanjing University Tsinghua University

Tsinghua University Peking University

Peking University King’s College LondonTU DarmstadtPengcheng LaboratoryHong Kong University of Science and Technology (Guangzhou)

King’s College LondonTU DarmstadtPengcheng LaboratoryHong Kong University of Science and Technology (Guangzhou)Researchers from a global consortium, including Tianjin University and Huawei Noah’s Ark Lab, developed Embodied Arena, a comprehensive platform for evaluating Embodied AI agents, featuring a systematic capability taxonomy and an automated, LLM-driven data generation pipeline. This platform integrates over 22 benchmarks and 30 models, revealing that specialized embodied models often outperform general models on targeted tasks and identifying object and spatial perception as key performance bottlenecks.

Autonomous agents have recently achieved remarkable progress across diverse domains, yet most evaluations focus on short-horizon, fully observable tasks. In contrast, many critical real-world tasks, such as large-scale software development, commercial investment, and scientific discovery, unfold in long-horizon and partially observable scenarios where success hinges on sustained reasoning, planning, memory management, and tool use. Existing benchmarks rarely capture these long-horizon challenges, leaving a gap in systematic evaluation. To bridge this gap, we introduce \textbf{UltraHorizon} a novel benchmark that measures the foundational capabilities essential for complex real-world challenges. We use exploration as a unifying task across three distinct environments to validate these core competencies. Agents are designed in long-horizon discovery tasks where they must iteratively uncover hidden rules through sustained reasoning, planning, memory and tools management, and interaction with environments. Under the heaviest scale setting, trajectories average \textbf{200k+} tokens and \textbf{400+} tool calls, whereas in standard configurations they still exceed \textbf{35k} tokens and involve more than \textbf{60} tool calls on average. Our extensive experiments reveal that LLM-agents consistently underperform in these settings, whereas human participants achieve higher scores, underscoring a persistent gap in agents' long-horizon abilities. We also observe that simple scaling fails in our task. To better illustrate the failure of agents, we conduct an in-depth analysis of collected trajectories. We identify eight types of errors and attribute them to two primary causes: in-context locking and functional fundamental capability gaps. \href{this https URL}{Our code will be available here.}

13 Aug 2025

GeoVLA introduces an end-to-end framework that integrates 3D point cloud information into Vision-Language-Action (VLA) models, allowing robots to better perceive their physical environment for manipulation tasks. This architecture consistently achieves state-of-the-art performance on manipulation benchmarks and exhibits robust generalization across various real-world spatial, scale, and viewpoint changes.

Human speech goes beyond the mere transfer of information; it is a profound exchange of emotions and a connection between individuals. While Text-to-Speech (TTS) models have made huge progress, they still face challenges in controlling the emotional expression in the generated speech. In this work, we propose EmoVoice, a novel emotion-controllable TTS model that exploits large language models (LLMs) to enable fine-grained freestyle natural language emotion control, and a phoneme boost variant design that makes the model output phoneme tokens and audio tokens in parallel to enhance content consistency, inspired by chain-of-thought (CoT) and chain-of-modality (CoM) techniques. Besides, we introduce EmoVoice-DB, a high-quality 40-hour English emotion dataset featuring expressive speech and fine-grained emotion labels with natural language descriptions. EmoVoice achieves state-of-the-art performance on the English EmoVoice-DB test set using only synthetic training data, and on the Chinese Secap test set using our in-house data. We further investigate the reliability of existing emotion evaluation metrics and their alignment with human perceptual preferences, and explore using SOTA multimodal LLMs GPT-4o-audio and Gemini to assess emotional speech. Dataset, code, checkpoints, and demo samples are available at this https URL.

This research introduces AUTOTRITON, the first large language model powered by reinforcement learning specifically for automatic Triton kernel generation. The 8B-parameter model demonstrates improved correctness on established benchmarks, outperforming larger, general-purpose LLMs and achieving comparable runtime performance.

Researchers from Tianjin University, Beijing University of Posts and Telecommunications, and A*STAR introduce Logits-induced Token Uncertainty (LogTokU), a framework that estimates LLM uncertainty by leveraging raw logits as evidence, providing a more accurate and efficient alternative to existing methods. LogTokU significantly improves dynamic decoding strategies by up to 11.4% and enhances response reliability estimation by up to 7.5% on various LLMs.

10 Sep 2025

This survey provides a comprehensive review of dexterous manipulation using imitation learning, categorizing algorithmic advancements, analyzing end-effector designs and data acquisition methods, and outlining key challenges and future research directions. It consolidates a rapidly evolving interdisciplinary field to guide researchers and practitioners toward more capable robotic systems.

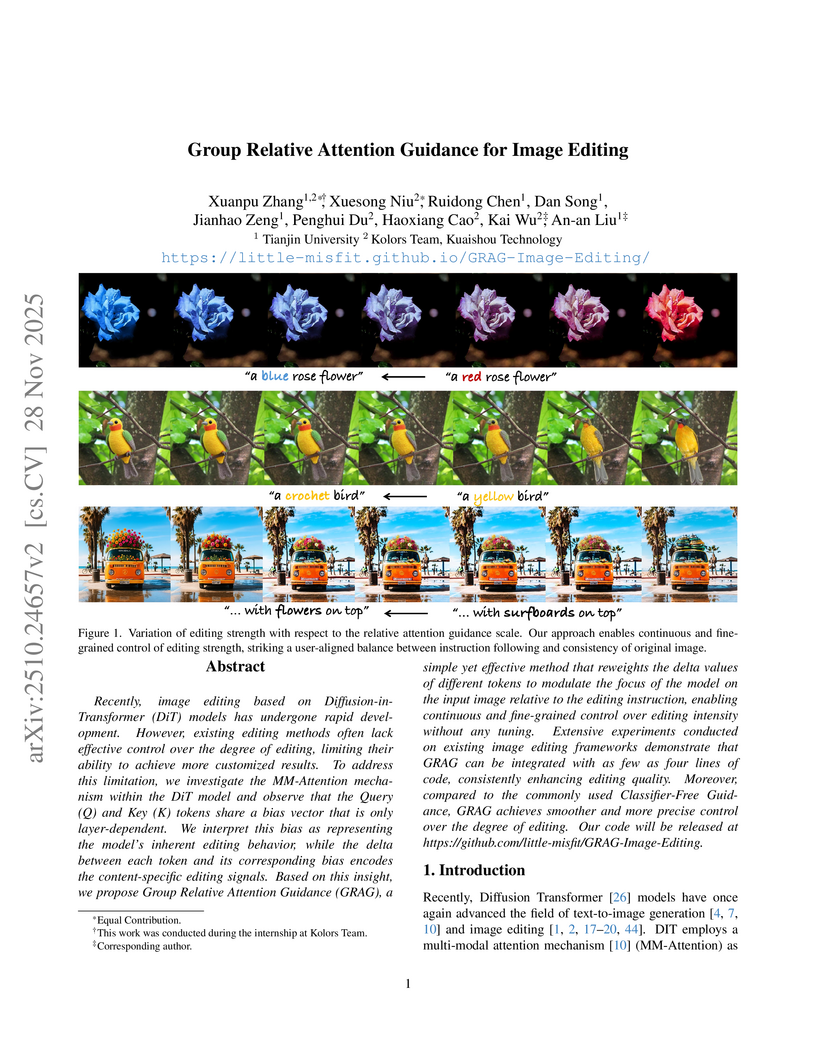

Recently, image editing based on Diffusion-in-Transformer models has undergone rapid development. However, existing editing methods often lack effective control over the degree of editing, limiting their ability to achieve more customized results. To address this limitation, we investigate the MM-Attention mechanism within the DiT model and observe that the Query and Key tokens share a bias vector that is only layer-dependent. We interpret this bias as representing the model's inherent editing behavior, while the delta between each token and its corresponding bias encodes the content-specific editing signals. Based on this insight, we propose Group Relative Attention Guidance, a simple yet effective method that reweights the delta values of different tokens to modulate the focus of the model on the input image relative to the editing instruction, enabling continuous and fine-grained control over editing intensity without any tuning. Extensive experiments conducted on existing image editing frameworks demonstrate that GRAG can be integrated with as few as four lines of code, consistently enhancing editing quality. Moreover, compared to the commonly used Classifier-Free Guidance, GRAG achieves smoother and more precise control over the degree of editing. Our code will be released at this https URL.

14 Feb 2025

The survey by Peng et al. presents the first comprehensive categorization of LLM-powered agents in recommender systems, classifying them into recommender-oriented, interaction-oriented, and simulation-oriented paradigms. It also proposes a unified four-module architecture (Profile, Memory, Planning, Action) to structure the understanding of these systems, addressing challenges like nuanced user understanding and transparency.

MC-LLaVA introduces the first multi-concept personalization paradigm for Vision-Language Models, enabling them to understand and generate responses involving multiple user-defined concepts simultaneously. The model achieves superior recognition accuracy (0.845 for multi-concept) and competitive VQA performance (BLEU 0.658) on a newly contributed dataset, outperforming prior single-concept approaches.

TRITONBENCH introduces the first comprehensive benchmark to evaluate large language models' capabilities in generating high-performance Triton operators for GPUs. The benchmark reveals that current LLMs generally struggle to produce efficient Triton code, though domain-specific fine-tuning and one-shot examples can improve execution accuracy for certain models and tasks.

GlimpsePrune introduces a dynamic visual token pruning framework for Large Vision-Language Models, enabling efficient processing of high-resolution visual inputs. The method achieves an average 92.6% visual token pruning rate while fully retaining baseline performance, and reduces peak GPU memory usage by 72.8% during generation, demonstrating robustness in free-form visual question answering.

A study systematically explores entropy dynamics in Reinforcement Learning with Verifiable Rewards (RLVR) for large language models, identifying that positive advantages drive entropy collapse and proposing the Progressive Advantage Reweighting method to regulate it. The findings show a strong correlation between entropy and response diversity, while also improving model calibration and achieving competitive performance.

Researchers from Tsinghua University and Huawei Noah’s Ark Lab developed iVideoGPT, a scalable autoregressive transformer that functions as an interactive world model for embodied AI. The model leverages a novel compressive video tokenization technique and large-scale pre-training on diverse manipulation datasets, demonstrating strong performance in video prediction, visual planning, and sample-efficient model-based reinforcement learning.

The TFPS framework addresses time series forecasting under patch-level distribution shifts by employing a dual-domain encoder and dynamically identified pattern-specific experts. It achieves top-1 performance in 57 out of 72 experimental configurations, significantly reducing MSE by an average of 9.5% over time-domain methods and 16.9% over frequency-domain methods.

There are no more papers matching your filters at the moment.