Universität der Bundeswehr München

Model inversion attacks (MIAs) aim to create synthetic images that reflect the class-wise characteristics from a target classifier's private training data by exploiting the model's learned knowledge. Previous research has developed generative MIAs that use generative adversarial networks (GANs) as image priors tailored to a specific target model. This makes the attacks time- and resource-consuming, inflexible, and susceptible to distributional shifts between datasets. To overcome these drawbacks, we present Plug & Play Attacks, which relax the dependency between the target model and image prior, and enable the use of a single GAN to attack a wide range of targets, requiring only minor adjustments to the attack. Moreover, we show that powerful MIAs are possible even with publicly available pre-trained GANs and under strong distributional shifts, for which previous approaches fail to produce meaningful results. Our extensive evaluation confirms the improved robustness and flexibility of Plug & Play Attacks and their ability to create high-quality images revealing sensitive class characteristics.

Researchers at Imperial College London and collaborators developed DermaSynth, a dataset of 92,020 synthetic image-text pairs from 45,205 dermatological images, addressing the scarcity of high-quality paired data for Vision Large Language Models in dermatology. They also fine-tuned DermatoLlama 1.0, a baseline model demonstrating enhanced medically informed descriptions.

Item Response Theory (IRT) has been widely used in educational psychometrics

to assess student ability, as well as the difficulty and discrimination of test

questions. In this context, discrimination specifically refers to how

effectively a question distinguishes between students of different ability

levels, and it does not carry any connotation related to fairness. In recent

years, IRT has been successfully used to evaluate the predictive performance of

Machine Learning (ML) models, but this paper marks its first application in

fairness evaluation. In this paper, we propose a novel Fair-IRT framework to

evaluate a set of predictive models on a set of individuals, while

simultaneously eliciting specific parameters, namely, the ability to make fair

predictions (a feature of predictive models), as well as the discrimination and

difficulty of individuals that affect the prediction results. Furthermore, we

conduct a series of experiments to comprehensively understand the implications

of these parameters for fairness evaluation. Detailed explanations for item

characteristic curves (ICCs) are provided for particular individuals. We

propose the flatness of ICCs to disentangle the unfairness between individuals

and predictive models. The experiments demonstrate the effectiveness of this

framework as a fairness evaluation tool. Two real-world case studies illustrate

its potential application in evaluating fairness in both classification and

regression tasks. Our paper aligns well with the Responsible Web track by

proposing a Fair-IRT framework to evaluate fairness in ML models, which

directly contributes to the development of a more inclusive, equitable, and

trustworthy AI.

In this article we call a sequence (an)n of elements of a metric space nearly computably Cauchy if for every strictly increasing computable function r:N→N the sequence (d(ar(n+1),ar(n)))n converges computably to 0. We show that there exists a strictly increasing sequence of rational numbers that is nearly computably Cauchy and unbounded. Then we call a real number α nearly computable if there exists a computable sequence (an)n of rational numbers that converges to α and is nearly computably Cauchy. It is clear that every computable real number is nearly computable, and it follows from a result by Downey and LaForte (2002) that there exists a nearly computable and left-computable number that is not computable. We observe that the set of nearly computable real numbers is a real closed field and closed under computable real functions with open domain, but not closed under arbitrary computable real functions. Among other things we strengthen results by Hoyrup (2017) and by Stephan and Wu (2005) by showing that any nearly computable real number that is not computable is weakly 1-generic (and, therefore, hyperimmune and not Martin-Löf random) and strongly Kurtz random (and, therefore, not K-trivial), and we strengthen a result by Downey and LaForte (2002) by showing that no promptly simple set can be Turing reducible to a nearly computable real number.

In the near term, programming quantum computers will remain severely limited

by low quantum volumes. Therefore, it is desirable to implement quantum

circuits with the fewest resources possible. For the common Clifford+T

circuits, most research is focused on reducing the number of T gates, since

they are an order of magnitude more expensive than Clifford gates in quantum

error corrected encoding schemes. However, this optimization sometimes leads to

more 2-qubit gates, which, even though they are less expensive in terms of

fault-tolerance, contribute significantly to the overall circuit cost.

Approaches based on the ZX-calculus have recently gained some popularity in the

field, but reduction of 2-qubit gates is not their focus. In this work, we

present an alternative for improving 2-qubit gate count of a quantum circuit

with the ZX-calculus by using heuristics in ZX-diagram simplification. Our

approach maintains the good reduction of the T gate count provided by other

strategies based on ZX-calculus, thus serving as an extension for other

optimization algorithms. Our results show that combining the available

ZX-calculus-based optimizations with our algorithms can reduce the number of

2-qubit gates by as much as 40% compared to current approaches using

ZX-calculus. Additionally, we improve the results of the best currently

available optimization technique of Nam et. al for some circuits by up to 15%.

23 Jul 2024

The usage of quick response (QR) codes was limited in the pre-era of the

COVID-19 pandemic. Due to the widespread and frequent application since then,

this opened up an attractive phishing opportunity for malicious actors. They

trick users into scanning the codes and redirecting them to malicious websites.

In order to explore whether phishing with QR codes is another successful attack

vector, we conducted a real-world phishing campaign with two different QR code

variants at a research campus. The first version was rather plain, whereas the

second version was more professionally designed and included the possibility to

win a voucher. After the study was completed, a qualitative survey on phishing

and QR codes was conducted to verify the results of the phishing campaign.

Both, the phishing campaign and the survey, show that a professional design

receives more attention. They also illustrate that QR codes are used more

frequently by curious users because of their easy functionality. Although the

results confirm that technical-savvy users are more aware of the risks, they

also underpin the malicious potential for non-technical-savvy users and suggest

further work regarding countermeasures.

10 Oct 2025

In computable analysis typically topological spaces with countable bases are considered. The Theorem of Kreitz-Weihrauch implies that the subbase representation of a second-countable T0 space is admissible with respect to the topology that the subbase generates. We consider generalizations of this setting to bases that are representable, but not necessarily countable. We introduce the notions of a computable presubbase and a computable prebase. We prove a generalization of the Theorem of Kreitz-Weihrauch for the presubbase representation that shows that any such representation is admissible with respect to the topology generated by compact intersections of the presubbase elements. For computable prebases we obtain representations that are admissible with respect to the topology that they generate. These concepts provide a natural way to investigate many topological spaces that have been studied in computable analysis. The benefit of this approach is that topologies can be described by their usual subbases and standard constructions for such subbases can be applied. Finally we discuss a Galois connection between presubbases and representations of T0 spaces that indicates that presubbases and representations offer particular views on the same mathematical structure from different perspectives.

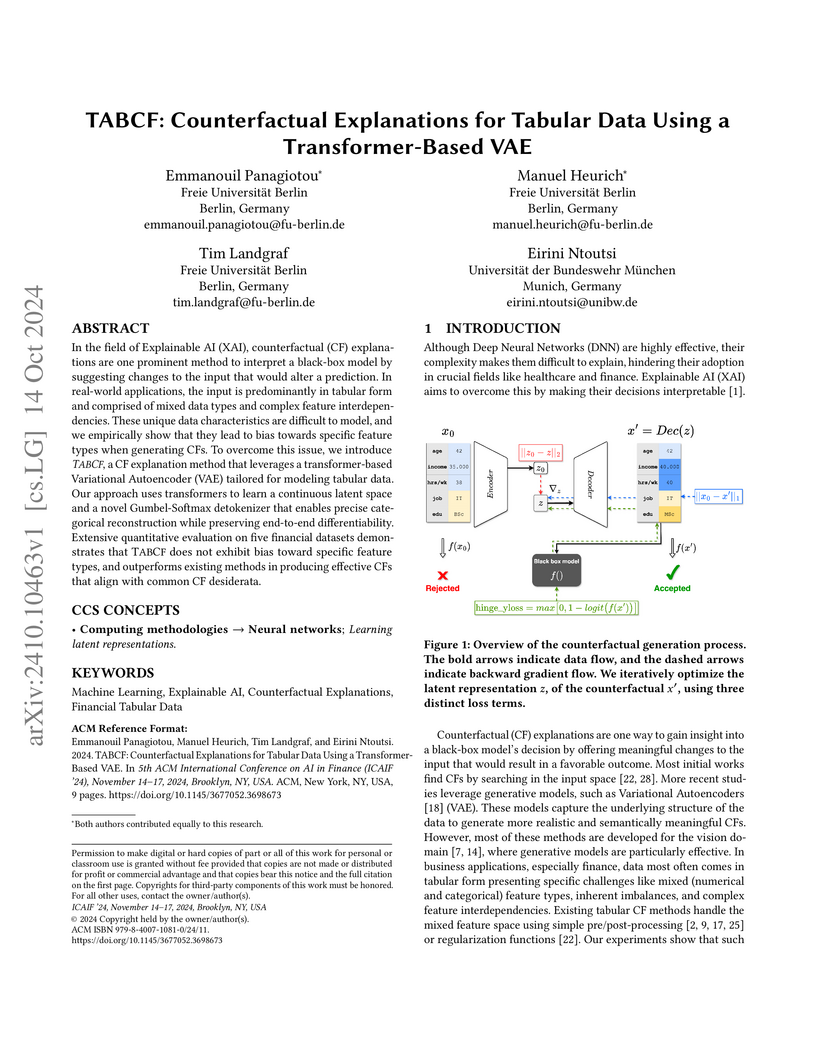

TABCF introduces a transformer-based variational autoencoder with a Gumbel-Softmax detokenizer to generate counterfactual explanations for tabular data. The method achieves 99% validity and addresses a pervasive feature-type bias, demonstrating balanced utilization of numerical and categorical features unlike prior approaches, leading to more actionable explanations.

In this paper, we describe {\sc quantitative graph theory} and argue it is a

new graph-theoretical branch in network science, however, with significant

different features compared to classical graph theory. The main goal of

quantitative graph theory is the structural quantification of information

contained in complex networks by employing a {\it measurement approach} based

on numerical invariants and comparisons. Furthermore, the methods as well as

the networks do not need to be deterministic but can be statistic. As such this

complements the field of classical graph theory, which is descriptive and

deterministic in nature. We provide examples of how quantitative graph theory

can be used for novel applications in the context of the overarching concept

network science.

The increase of Internet services has not only created several digital identities but also more information available about the persons behind them. The data can be collected and used for attacks on digital identities as well as on identity management systems, which manage digital identities. In order to identify possible attack vectors and take countermeasures at an early stage, it is important for individuals and organizations to systematically search for and analyze the data. This paper proposes a classification of data and open-source intelligence (OSINT) tools related to identities. This classification helps to systematically search for data. In the next step, the data can be analyzed and countermeasures can be taken. Last but not least, an OSINT framework approach applying this classification for searching and analyzing data is presented and discussed.

Hyperedge replacement (HR) grammars can generate NP-complete graph languages,

which makes parsing hard even for fixed HR languages. Therefore, we study

predictive shift-reduce (PSR) parsing that yields efficient parsers for a

subclass of HR grammars, by generalizing the concepts of SLR(1) string parsing

to graphs. We formalize the construction of PSR parsers and show that it is

correct. PSR parsers run in linear space and time, and are more efficient than

the predictive top-down (PTD) parsers recently developed by the authors.

Cyber attacks are ubiquitous and a constantly growing threat in the age of digitization. In order to protect important data, developers and system administrators must be trained and made aware of possible threats. Practical training can be used for students alike to introduce them to the topic. A constant threat to websites that require user authentication is so-called brute-force attacks, which attempt to crack a password by systematically trying every possible combination. As this is a typical threat, but comparably easy to detect, it is ideal for beginners. Therefore, three open-source blue team scenarios are designed and systematically described. They are contiguous to maximize the learning effect.

23 May 2025

In this article, we present a technology development of a superconducting

qubit device 3D-integrated by flip-chip-bonding and processed following CMOS

fabrication standards and contamination rules on 200 mm wafers. We present the

utilized proof-of-concept chip designs for qubit- and carrier chip, as well as

the respective front-end and back-end fabrication techniques. In

characterization of the newly developed microbump technology based on

metallized KOH-etched Si-islands, we observe a superconducting transition of

the used metal stacks and radio frequency (RF) signal transfer through the bump

connection with negligible attenuation. In time-domain spectroscopy of the

qubits we find high yield qubit excitation with energy relaxation times of up

to 15 us.

Due to their data-driven nature, Machine Learning (ML) models are susceptible to bias inherited from data, especially in classification problems where class and group imbalances are prevalent. Class imbalance (in the classification target) and group imbalance (in protected attributes like sex or race) can undermine both ML utility and fairness. Although class and group imbalances commonly coincide in real-world tabular datasets, limited methods address this scenario. While most methods use oversampling techniques, like interpolation, to mitigate imbalances, recent advancements in synthetic tabular data generation offer promise but have not been adequately explored for this purpose. To this end, this paper conducts a comparative analysis to address class and group imbalances using state-of-the-art models for synthetic tabular data generation and various sampling strategies. Experimental results on four datasets, demonstrate the effectiveness of generative models for bias mitigation, creating opportunities for further exploration in this direction.

The purpose of this paper is three-fold. Firstly we attack a nonlinear interface problem on an unbounded domain with nonmonotone set-valued transmission conditions. The investigated problem involves a nonlinear monotone partial differential equation in the interior domain and the Laplacian in the exterior domain. Such a scalar interface problem models nonmonotone frictional contact of elastic infinite media. The variational formulation of the interface problem leads to a hemivariational inequality (HVI), which however lives on the unbounded domain, and thus cannot analyzed in a reflexive Banach space setting. By boundary integral methods we obtain another HVI that is amenable to functional analytic methods using standard Sobolev spaces on the interior domain and Sobolev spaces of fractional order on the coupling boundary. Secondly broadening the scope of the paper, we consider extended real-valued HVIs augmented by convex extended real-valued functions. Under a smallness hypothesis, we provide existence and uniqueness results, also establish a stability result with respect to the extended real-valued function as parameter. Thirdly based on the latter stability result, we prove the existence of optimal controls for four kinds of optimal control problems: distributed control on the bounded domain, boundary control, simultaneous distributed-boundary control governed by the interface problem, as well as control of the obstacle driven by a related bilateral obstacle interface problem.

In this article we call a sequence (an)n of elements of a metric space nearly computably Cauchy if for every strictly increasing computable function r:N→N the sequence (d(ar(n+1),ar(n)))n converges computably to 0. We show that there exists a strictly increasing sequence of rational numbers that is nearly computably Cauchy and unbounded. Then we call a real number α nearly computable if there exists a computable sequence (an)n of rational numbers that converges to α and is nearly computably Cauchy. It is clear that every computable real number is nearly computable, and it follows from a result by Downey and LaForte (2002) that there exists a nearly computable and left-computable number that is not computable. We observe that the set of nearly computable real numbers is a real closed field and closed under computable real functions with open domain, but not closed under arbitrary computable real functions. Among other things we strengthen results by Hoyrup (2017) and by Stephan and Wu (2005) by showing that any nearly computable real number that is not computable is weakly 1-generic (and, therefore, hyperimmune and not Martin-Löf random) and strongly Kurtz random (and, therefore, not K-trivial), and we strengthen a result by Downey and LaForte (2002) by showing that no promptly simple set can be Turing reducible to a nearly computable real number.

16 Jun 2024

In this research, we introduce a novel methodology for assessing Emotional Mimicry Intensity (EMI) as part of the 6th Workshop and Competition on Affective Behavior Analysis in-the-wild. Our methodology utilises the Wav2Vec 2.0 architecture, which has been pre-trained on an extensive podcast dataset, to capture a wide array of audio features that include both linguistic and paralinguistic components. We refine our feature extraction process by employing a fusion technique that combines individual features with a global mean vector, thereby embedding a broader contextual understanding into our analysis. A key aspect of our approach is the multi-task fusion strategy that not only leverages these features but also incorporates a pre-trained Valence-Arousal-Dominance (VAD) model. This integration is designed to refine emotion intensity prediction by concurrently processing multiple emotional dimensions, thereby embedding a richer contextual understanding into our framework. For the temporal analysis of audio data, our feature fusion process utilises a Long Short-Term Memory (LSTM) network. This approach, which relies solely on the provided audio data, shows marked advancements over the existing baseline, offering a more comprehensive understanding of emotional mimicry in naturalistic settings, achieving the second place in the EMI challenge.

30 Jan 2023

We investigate finite-dimensional constrained structured optimization

problems, featuring composite objective functions and set-membership

constraints. Offering an expressive yet simple language, this problem class

provides a modeling framework for a variety of applications. We study

stationarity and regularity concepts, and propose a flexible augmented

Lagrangian scheme. We provide a theoretical characterization of the algorithm

and its asymptotic properties, deriving convergence results for fully nonconvex

problems. It is demonstrated how the inner subproblems can be solved by

off-the-shelf proximal methods, notwithstanding the possibility to adopt any

solvers, insofar as they return approximate stationary points. Finally, we

describe our matrix-free implementation of the proposed algorithm and test it

numerically. Illustrative examples show the versatility of constrained

composite programs as a modeling tool and expose difficulties arising in this

vast problem class.

IP Geolocation is a key enabler for the Future Internet to provide geographical location information for application services. For example, this data is used by Content Delivery Networks to assign users to mirror servers, which are close by, hence providing enhanced traffic management. It is still a challenging task to obtain precise and stable location information, whereas proper results are only achieved by the use of active latency measurements. This paper presents an advanced approach for an accurate and self-optimizing model for location determination, including identification of optimized Landmark positions, which are used for probing. Moreover, the selection of correlated data and the estimated target location requires a sophisticated strategy to identify the correct position. We present an improved approximation of network distances of usually unknown TIER infrastructures using the road network. Our concept is evaluated under real-world conditions focusing Europe.

04 Mar 2025

With the increasing use of online services, the protection of the privacy of

users becomes more and more important. This is particularly critical as

authentication and authorization as realized on the Internet nowadays,

typically relies on centralized identity management solutions. Although those

are very convenient from a user's perspective, they are quite intrusive from a

privacy perspective and are currently far from implementing the concept of data

minimization. Fortunately, cryptography offers exciting primitives such as

zero-knowledge proofs and advanced signature schemes to realize various forms

of so-called anonymous credentials. Such primitives allow to realize online

authentication and authorization with a high level of built-in privacy

protection (what we call privacy-preserving authentication). Though these

primitives have already been researched for various decades and are well

understood in the research community, unfortunately, they lack widespread

adoption. In this paper, we look at the problems, what cryptography can do,

some deployment examples, and barriers to widespread adoption. Latter using the

example of the EU Digital Identity Wallet (EUDIW) and the recent discussion and

feedback from cryptography experts around this topic. We also briefly comment

on the transition to post-quantum cryptography.

There are no more papers matching your filters at the moment.