University of Aveiro

01 Oct 2025

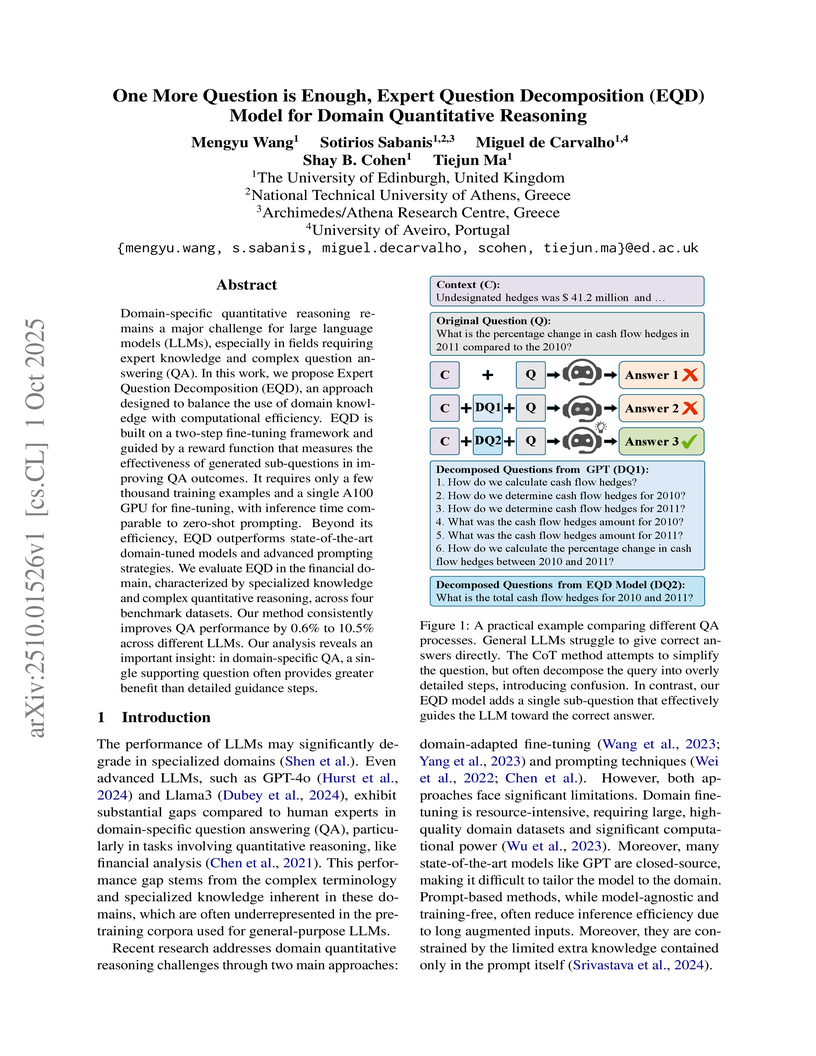

Domain-specific quantitative reasoning remains a major challenge for large language models (LLMs), especially in fields requiring expert knowledge and complex question answering (QA). In this work, we propose Expert Question Decomposition (EQD), an approach designed to balance the use of domain knowledge with computational efficiency. EQD is built on a two-step fine-tuning framework and guided by a reward function that measures the effectiveness of generated sub-questions in improving QA outcomes. It requires only a few thousand training examples and a single A100 GPU for fine-tuning, with inference time comparable to zero-shot prompting. Beyond its efficiency, EQD outperforms state-of-the-art domain-tuned models and advanced prompting strategies. We evaluate EQD in the financial domain, characterized by specialized knowledge and complex quantitative reasoning, across four benchmark datasets. Our method consistently improves QA performance by 0.6% to 10.5% across different LLMs. Our analysis reveals an important insight: in domain-specific QA, a single supporting question often provides greater benefit than detailed guidance steps.

Symmetry, a fundamental concept to understand our environment, often

oversimplifies reality from a mathematical perspective. Humans are a prime

example, deviating from perfect symmetry in terms of appearance and cognitive

biases (e.g. having a dominant hand). Nevertheless, our brain can easily

overcome these imperfections and efficiently adapt to symmetrical tasks. The

driving motivation behind this work lies in capturing this ability through

reinforcement learning. To this end, we introduce Adaptive Symmetry Learning

(ASL), a model-minimization actor-critic extension that addresses incomplete or

inexact symmetry descriptions by adapting itself during the learning process.

ASL consists of a symmetry fitting component and a modular loss function that

enforces a common symmetric relation across all states while adapting to the

learned policy. The performance of ASL is compared to existing

symmetry-enhanced methods in a case study involving a four-legged ant model for

multidirectional locomotion tasks. The results show that ASL can recover from

large perturbations and generalize knowledge to hidden symmetric states. It

achieves comparable or better performance than alternative methods in most

scenarios, making it a valuable approach for leveraging model symmetry while

compensating for inherent perturbations.

California Institute of TechnologySLAC National Accelerator LaboratoryUniversity of Zurich

California Institute of TechnologySLAC National Accelerator LaboratoryUniversity of Zurich Google

Google Stanford University

Stanford University University of MichiganUniversity of Ljubljana

University of MichiganUniversity of Ljubljana University of California, San Diego

University of California, San Diego Columbia University

Columbia University CERN

CERN Rutgers University

Rutgers University Lawrence Berkeley National LaboratoryUniversity of Heidelberg

Lawrence Berkeley National LaboratoryUniversity of Heidelberg MITUniversity of SussexUniversity of CaliforniaUniversität HamburgJozef Stefan InstituteThe Johns Hopkins UniversityUniversity of KansasUniversity of AveiroReed CollegeUniversity of GenovaUniversidad Aut

´

onoma de MadridUniversit

Clermont Auvergne

MITUniversity of SussexUniversity of CaliforniaUniversität HamburgJozef Stefan InstituteThe Johns Hopkins UniversityUniversity of KansasUniversity of AveiroReed CollegeUniversity of GenovaUniversidad Aut

´

onoma de MadridUniversit

Clermont AuvergneA new paradigm for data-driven, model-agnostic new physics searches at

colliders is emerging, and aims to leverage recent breakthroughs in anomaly

detection and machine learning. In order to develop and benchmark new anomaly

detection methods within this framework, it is essential to have standard

datasets. To this end, we have created the LHC Olympics 2020, a community

challenge accompanied by a set of simulated collider events. Participants in

these Olympics have developed their methods using an R&D dataset and then

tested them on black boxes: datasets with an unknown anomaly (or not). This

paper will review the LHC Olympics 2020 challenge, including an overview of the

competition, a description of methods deployed in the competition, lessons

learned from the experience, and implications for data analyses with future

datasets as well as future colliders.

Perception and prediction modules are critical components of autonomous driving systems, enabling vehicles to navigate safely through complex environments. The perception module is responsible for perceiving the environment, including static and dynamic objects, while the prediction module is responsible for predicting the future behavior of these objects. These modules are typically divided into three tasks: object detection, object tracking, and motion prediction. Traditionally, these tasks are developed and optimized independently, with outputs passed sequentially from one to the next. However, this approach has significant limitations: computational resources are not shared across tasks, the lack of joint optimization can amplify errors as they propagate throughout the pipeline, and uncertainty is rarely propagated between modules, resulting in significant information loss. To address these challenges, the joint perception and prediction paradigm has emerged, integrating perception and prediction into a unified model through multi-task learning. This strategy not only overcomes the limitations of previous methods, but also enables the three tasks to have direct access to raw sensor data, allowing richer and more nuanced environmental interpretations. This paper presents the first comprehensive survey of joint perception and prediction for autonomous driving. We propose a taxonomy that categorizes approaches based on input representation, scene context modeling, and output representation, highlighting their contributions and limitations. Additionally, we present a qualitative analysis and quantitative comparison of existing methods. Finally, we discuss future research directions based on identified gaps in the state-of-the-art.

A C++ library for sensitivity analysis of optimisation problems involving ordinary differential equations (ODEs) enabled by automatic differentiation (AD) and SIMD (Single Instruction, Multiple data) vectorization is presented. The discrete adjoint sensitivity analysis method is implemented for adaptive explicit Runge-Kutta (ERK) methods. Automatic adjoint differentiation (AAD) is employed for efficient evaluations of products of vectors and the Jacobian matrix of the right hand side of the ODE system. This approach avoids the low-level drawbacks of the black box approach of employing AAD on the entire ODE solver and opens the possibility to leverage parallelization. SIMD vectorization is employed to compute the vector-Jacobian products concurrently. We study the performance of other methods and implementations of sensitivity analysis and we find that our algorithm presents a small advantage compared to equivalent existing software.

27 Oct 2021

Humanoid robots are made to resemble humans but their locomotion abilities

are far from ours in terms of agility and versatility. When humans walk on

complex terrains, or face external disturbances, they combine a set of

strategies, unconsciously and efficiently, to regain stability. This paper

tackles the problem of developing a robust omnidirectional walking framework,

which is able to generate versatile and agile locomotion on complex terrains.

The Linear Inverted Pendulum Model and Central Pattern Generator concepts are

used to develop a closed-loop walk engine, which is then combined with a

reinforcement learning module. This module learns to regulate the walk engine

parameters adaptively, and generates residuals to adjust the robot's target

joint positions (residual physics). Additionally, we propose a proximal

symmetry loss function to increase the sample efficiency of the Proximal Policy

Optimization algorithm, by leveraging model symmetries and the trust region

concept. The effectiveness of the proposed framework was demonstrated and

evaluated across a set of challenging simulation scenarios. The robot was able

to generalize what it learned in unforeseen circumstances, displaying

human-like locomotion skills, even in the presence of noise and external

pushes.

30 Sep 2025

This paper investigates the extension of lattice-based logics into modal languages. We observe that such extensions admit multiple approaches, as the interpretation of the necessity operator is not uniquely determined by the underlying lattice structure. The most natural interpretation defines necessity as the meet of the truth values of a formula across all accessible worlds -- an approach we refer to as the \textitnormal interpretation. We examine the logical properties that emerge under this and other interpretations, including the conditions under which the resulting modal logic satisfies the axiom K and other common modal validities. Furthermore, we consider cases in which necessity is attributed exclusively to formulas that hold in all accessible worlds.

20 Aug 2025

In today's data-driven ecosystems, ensuring data integrity, traceability and accountability is important. Provenance polynomials constitute a powerful formalism for tracing the origin and the derivations made to produce database query results. Despite their theoretical expressiveness, current implementations have limitations in handling aggregations and nested queries, and some of them and tightly coupled to a single Database Management System (DBMS), hindering interoperability and broader applicability.

This paper presents a query rewriting-based approach for annotating Structured Query Language (SQL) queries with provenance polynomials. The proposed methods are DBMS-independent and support Select-Projection-Join-Union-Aggregation (SPJUA) operations and nested queries, through recursive propagation of provenance annotations. This constitutes the first full implementation of semiring-based theory for provenance polynomials extended with semimodule structures. It also presents an experimental evaluation to assess the validity of the proposed methods and compare the performance against state-of-the-art systems using benchmark data and queries. The results indicate that our solution delivers a comprehensive implementation of the theoretical formalisms proposed in the literature, and demonstrates improved performance and scalability, outperforming existing methods.

15 Sep 2025

We propose a new dynamic SIR model that, in contrast with the available model on time scales, is biological relevant. For the new SIR model we obtain an explicit solution, we prove the asymptotic stability of the extinction and disease-free equilibria, and deduce some necessary conditions for the monotonic behavior of the infected population. The new results are illustrated with several examples in the discrete, continuous, and quantum settings.

Charles et al. developed a comprehensive mathematical model for rabies transmission that accounts for humans, domestic dogs, free-ranging dogs, and environmental viral reservoirs, using statistical methods to estimate transmission parameters and their uncertainties. The study indicates that domestic dogs may play a more prominent role in human infections than previously assumed, informing evidence-based control strategies.

Maximum Distance Separable (MDS) convolutional codes are cha- racterized through the property that the free distance meets the generalized Singleton bound. The existence of free MDS convolutional codes over Z p r was recently discovered in [26] via the Hensel lift of a cyclic code. In this paper we further investigate this important class of convolutional codes over Z p r from a new perspective. We introduce the notions of p-standard form and r- optimal parameters to derive a novel upper bound of Singleton type on the free distance. Moreover, we present a constructive method for building general (non necessarily free) MDS convolutional codes over Z p r for any given set of parameters.

28 Mar 2024

Accurate dynamic models are crucial for many robotic applications.

Traditional approaches to deriving these models are based on the application of

Lagrangian or Newtonian mechanics. Although these methods provide a good

insight into the physical behaviour of the system, they rely on the exact

knowledge of parameters such as inertia, friction and joint flexibility. In

addition, the system is often affected by uncertain and nonlinear effects, such

as saturation and dead zones, which can be difficult to model. A popular

alternative is the application of Machine Learning (ML) techniques - e.g.,

Neural Networks (NNs) - in the context of a "black-box" methodology. This paper

reports on our experience with this approach for a real-life 6 degrees of

freedom (DoF) manipulator. Specifically, we considered several NN

architectures: single NN, multiple NNs, and cascade NN. We compared the

performance of the system by using different policies for selecting the NN

hyperparameters. Our experiments reveal that the best accuracy and performance

are obtained by a cascade NN, in which we encode our prior physical knowledge

about the dependencies between joints, complemented by an appropriate

optimisation of the hyperparameters.

21 Apr 2024

We propose a constraint-based algorithm, which automatically determines

causal relevance thresholds, to infer causal networks from data. We call these

topological thresholds. We present two methods for determining the threshold:

the first seeks a set of edges that leaves no disconnected nodes in the

network; the second seeks a causal large connected component in the data.

We tested these methods both for discrete synthetic and real data, and

compared the results with those obtained for the PC algorithm, which we took as

the benchmark. We show that this novel algorithm is generally faster and more

accurate than the PC algorithm.

The algorithm for determining the thresholds requires choosing a measure of

causality. We tested our methods for Fisher Correlations, commonly used in PC

algorithm (for instance in \cite{kalisch2005}), and further proposed a discrete

and asymmetric measure of causality, that we called Net Influence, which

provided very good results when inferring causal networks from discrete data.

This metric allows for inferring directionality of the edges in the process of

applying the thresholds, speeding up the inference of causal DAGs.

01 Jul 2022

Inductive Conformal Prediction (ICP) is a set of distribution-free and model

agnostic algorithms devised to predict with a user-defined confidence with

coverage guarantee. Instead of having point predictions, i.e., a real number in

the case of regression or a single class in multi class classification, models

calibrated using ICP output an interval or a set of classes, respectively. ICP

takes special importance in high-risk settings where we want the true output to

belong to the prediction set with high probability. As an example, a

classification model might output that given a magnetic resonance image a

patient has no latent diseases to report. However, this model output was based

on the most likely class, the second most likely class might tell that the

patient has a 15% chance of brain tumor or other severe disease and therefore

further exams should be conducted. Using ICP is therefore way more informative

and we believe that should be the standard way of producing forecasts. This

paper is a hands-on introduction, this means that we will provide examples as

we introduce the theory.

01 Oct 2025

As already mentioned by Lawvere in his 1973 paper, the characterisation of Cauchy completeness of metric spaces in terms of representability of adjoint distributors amounts to the idempotent-split property of an ordinary category when the governing symmetric monoidal-closed category is changed from the extended real half-line to the category of sets. In this paper, for any commutative quantale V, we extend these two characterisations of Lawvere-style completeness to V-normed categories, thus replacing [0,∞] and Set more generally by the category Set//V of V-normed sets. We also establish improvements of recent results regarding the normed convergence of Cauchy sequences in two important V-normed categories.

19 Nov 2014

The rapid depletion of fossil fuel resources and environmental concerns has

given awareness on generation of renewable energy resources. Among the various

renewable resources, hybrid solar and wind energy seems to be promising

solutions to provide reliable power supply with improved system efficiency and

reduced storage requirements for stand-alone applications. This paper presents

a feasibility assessment and optimum size of photovoltaic (PV) array, wind

turbine and battery bank for a standalone hybrid Solar/Wind Power system

(HSWPS) at remote telecom station of Nepal at Latitude (27{\deg}23'50") and

Longitude (86{\deg}44'23") consisting a telecommunication load of Very Small

Aperture Terminal (VSAT), Repeater station and Code Division Multiple Access

Base Transceiver Station (CDMA 2C10 BTS). In any RES based system, the

feasibility assessment is considered as the first step analysis. In this work,

feasibility analysis is carried through hybrid optimization model for electric

renewables (HOMER) and mathematical models were implemented in the MATLAB

environment to perform the optimal configuration for a given load and a desired

loss of power supply probability (LPSP) from a set of systems components with

the lowest value of cost function defined in terms of reliability and levelized

unit electricity cost (LUCE). The simulation results for the existing and the

proposed models are compared. The simulation results shows that existing

architecture consisting of 6.12 kW KC85T photovoltaic modules, 1kW H3.1 wind

turbine and 1600 Ah GFM-800 battery bank have a 36.6% of unmet load during a

year. On the other hand, the proposed system includes 1kW *2 H3.1 Wind turbine,

8.05 kW TSM-175DA01 photovoltaic modules and 1125 Ah T-105 battery bank with

system reliability of 99.99% with a significant cost reduction as well as

reliable energy production.

12 Aug 2019

As composites of constant, (co)product, identity, and powerset functors, Kripke polynomial functors form a relevant class of Set-functors in the theory of coalgebras. The main goal of this paper is to expand the theory of limits in categories of coalgebras of Kripke polynomial functors to the context of quantale-enriched categories. To assume the role of the powerset functor we consider "powerset-like" functors based on the Hausdorff V-category structure. As a starting point, we show that for a lifting of a SET-functor to a topological category X over Set that commutes with the forgetful functor, the corresponding category of coalgebras over X is topological over the category of coalgebras over Set and, therefore, it is "as complete" but cannot be "more complete". Secondly, based on a Cantor-like argument, we observe that Hausdorff functors on categories of quantale-enriched categories do not admit a terminal coalgebra. Finally, in order to overcome these "negative" results, we combine quantale-enriched categories and topology \emph{à la} Nachbin. Besides studying some basic properties of these categories, we investigate "powerset-like" functors which simultaneously encode the classical Hausdorff metric and Vietoris topology and show that the corresponding categories of coalgebras of "Kripke polynomial" functors are (co)complete.

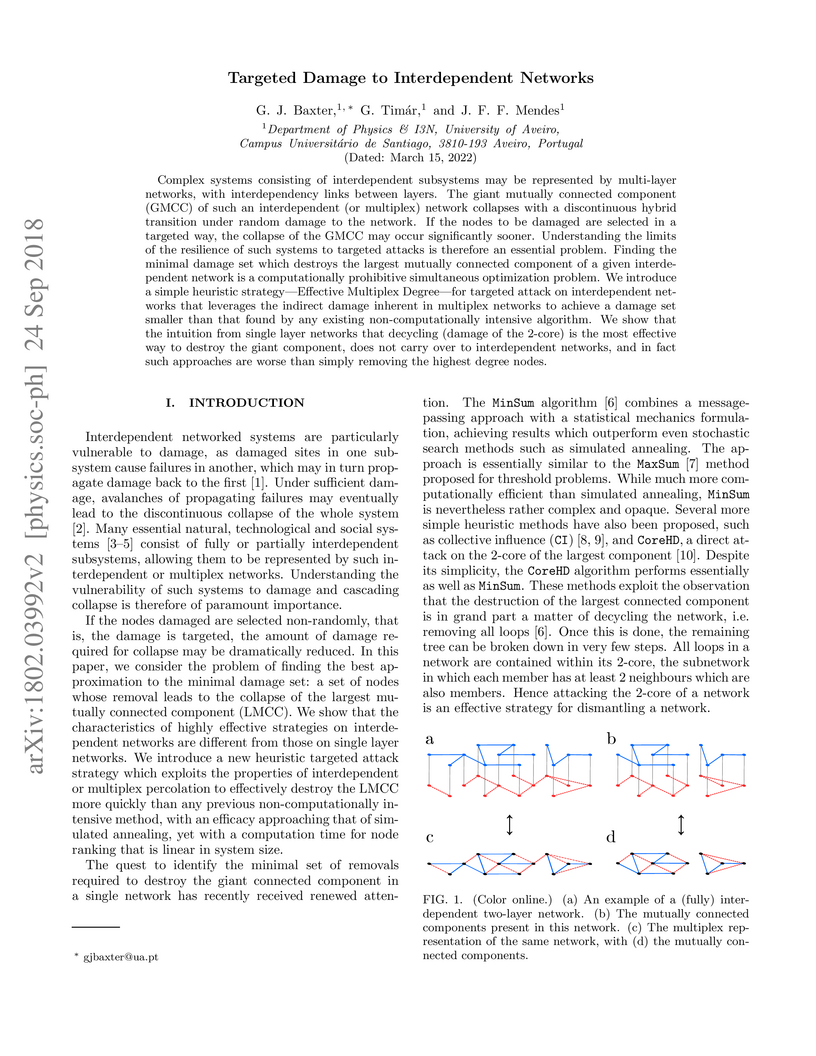

The giant mutually connected component (GMCC) of an interdependent or

multiplex network collapses with a discontinuous hybrid transition under random

damage to the network. If the nodes to be damaged are selected in a targeted

way, the collapse of the GMCC may occur significantly sooner. Finding the

minimal damage set which destroys the largest mutually connected component of a

given interdependent network is a computationally prohibitive simultaneous

optimization problem. We introduce a simple heuristic strategy -- Effective

Multiplex Degree -- for targeted attack on interdependent networks that

leverages the indirect damage inherent in multiplex networks to achieve a

damage set smaller than that found by any other non computationally intensive

algorithm. We show that the intuition from single layer networks that decycling

(damage of the 2-core) is the most effective way to destroy the giant

component, does not carry over to interdependent networks, and in fact such

approaches are worse than simply removing the highest degree nodes.

15 Feb 2024

We propose a novel dynamical model for blood alcohol concentration that

incorporates ψ-Caputo fractional derivatives. Using the generalized

Laplace transform technique, we successfully derive an analytic solution for

both the alcohol concentration in the stomach and the alcohol concentration in

the blood of an individual. These analytical formulas provide us a

straightforward numerical scheme, which demonstrates the efficacy of the

ψ-Caputo derivative operator in achieving a better fit to real

experimental data on blood alcohol levels available in the literature. In

comparison to existing classical and fractional models found in the literature,

our model outperforms them significantly. Indeed, by employing a simple yet

non-standard kernel function ψ(t), we are able to reduce the error by more

than half, resulting in an impressive gain improvement of 59 percent.

California Institute of Technology

California Institute of Technology NASA Goddard Space Flight CenterCONICET

NASA Goddard Space Flight CenterCONICET University of Florida

University of Florida Space Telescope Science Institute

Space Telescope Science Institute Brown University

Brown University European Southern ObservatoryUniversity of KansasSETI InstituteUniversity of AveiroUniversidad Nacional de CórdobaMeisei UniversityBarcelona Institute of Science and TechnologyOxford DynamicsLudwig-Maximilians-Universität MünchenUniversity of Naples

“Federico II”

European Southern ObservatoryUniversity of KansasSETI InstituteUniversity of AveiroUniversidad Nacional de CórdobaMeisei UniversityBarcelona Institute of Science and TechnologyOxford DynamicsLudwig-Maximilians-Universität MünchenUniversity of Naples

“Federico II”The Transiting Exoplanet Survey Satellite (TESS) has detected thousands of

exoplanet candidates since 2018, most of which have yet to be confirmed. A key

step in the confirmation process of these candidates is ruling out false

positives through vetting. Vetting also eases the burden on follow-up

observations, provides input for demographics studies, and facilitates training

machine learning algorithms. Here we present the TESS Triple-9 (TT9) catalog --

a uniformly-vetted catalog containing dispositions for 999 exoplanet candidates

listed on ExoFOP-TESS, known as TESS Objects of Interest (TOIs). The TT9 was

produced using the Discovery And Vetting of Exoplanets pipeline, DAVE, and

utilizing the power of citizen science as part of the Planet Patrol project.

More than 70% of the TOIs listed in the TT9 pass our diagnostic tests, and are

thus marked as true planetary candidates. We flagged 144 candidates as false

positives, and identified 146 as potential false positives. At the time of

writing, the TT9 catalog contains ~20% of the entire ExoFOP-TESS TOIs list,

demonstrates the synergy between automated tools and citizen science, and

represents the first stage of our efforts to vet all TOIs. The DAVE generated

results are publicly available on ExoFOP-TESS.

There are no more papers matching your filters at the moment.