University of California at San Diego

We demonstrate that our recently developed theory of electric field wave propagation in anisotropic and inhomogeneous brain tissues, which has been shown to explain a broad range of observed coherent synchronous brain electrical processes, also explains the spiking behavior of single neurons, thus bridging the gap between the fundamental element of brain electrical activity (the neuron) and large-scale coherent synchronous electrical activity.

Our analysis indicates that the membrane interface of the axonal cellular system can be mathematically described by a nonlinear system with several small parameters. This allows for the rigorous derivation of an accurate yet simpler nonlinear model following the formal small parameter expansion. The resulting action potential model exhibits a smooth, continuous transition from the linear wave oscillatory regime to the nonlinear spiking regime, as well as a critical transition to a non-oscillatory regime. These transitions occur with changes in the criticality parameter and include several different bifurcation types, representative of the various experimentally detected neuron types.

This new theory overcomes the limitations of the Hodgkin-Huxley model, such as the inability to explain extracellular spiking, efficient brain synchronization, saltatory conduction along myelinated axons, and a variety of other observed coherent macroscopic brain electrical phenomena. We also show that the standard cable axon theory can be recovered by our approach, using the very crude assumptions of piece-wise homogeneity and isotropy. However, the diffusion process described by the cable equation is not capable of supporting action potential propagation across a wide range of experimentally reported axon parameters.

27 Feb 2024

CNRS; University of Southern California; National University of Singapore; Georgia Institute of Technology; Beihang University; Osaka University; Zhejiang University; Cornell University; Northwestern University; University of Texas at Austin; Nanyang Technological University; Purdue University; University of Illinois at Chicago; University of Vienna; University of Texas at Dallas; Virginia Commonwealth University; University of California at Los Angeles; University of Virginia; University of Messina; Pontifical Catholic University of Rio de Janeiro; University of South Carolina; Kanazawa University; Indian Institute of Technology Roorkee; University of Gothenburg; Federal University of Rio de Janeiro; Thales; Politecnico di Bari; University of California at Santa Barbara; Technical University Delft; University of California at San Diego; Toshiba Corporation; A*STAR; University of Duisburg-Essen; Interuniversity Microelectronics Center (IMEC); Laboratoire d'Informatique, de Robotique et de Microélectronique de Montpellier

In the "Beyond Moore's Law" era, with increasing edge intelligence, domain-specific computing embracing unconventional approaches will become increasingly prevalent. At the same time, adopting a variety of nanotechnologies will offer benefits in energy cost, computational speed, reduced footprint, cyber resilience, and processing power. The time is ripe for a roadmap for unconventional computing with nanotechnologies to guide future research, and this collection aims to fill that need. The authors provide a comprehensive roadmap for neuromorphic computing using electron spins, memristive devices, two-dimensional nanomaterials, nanomagnets, and various dynamical systems. They also address other paradigms such as Ising machines, Bayesian inference engines, probabilistic computing with p-bits, processing in memory, quantum memories and algorithms, computing with skyrmions and spin waves, and brain-inspired computing for incremental learning and problem-solving in severely resource-constrained environments. These approaches have advantages over traditional Boolean computing based on von Neumann architecture. As the computational requirements for artificial intelligence grow 50 times faster than Moore's Law for electronics, more unconventional approaches to computing and signal processing will appear on the horizon, and this roadmap will help identify future needs and challenges.

Experts in this very fertile field present some of the dominant and most promising technologies for unconventional computing that will be around for some time to come. Within a holistic approach, the goal is to provide pathways for solidifying the field and guiding future impactful discoveries.

11 Mar 2005

We present an Effective Field Theory (EFT) formalism which describes the dynamics of non-relativistic extended objects coupled to gravity. The formalism is relevant to understanding the gravitational radiation power spectra emitted by binary star systems, an important class of candidate signals for gravitational wave observatories such as LIGO or VIRGO. The EFT allows for a clean separation of the three relevant scales: r_s, the size of the compact objects; r, the orbital radius; and r/v, the wavelength of the physical radiation (where the velocity v is the expansion parameter). In the EFT, radiation is systematically included in the v expansion without need to separate integrals into near zones and radiation zones. We show that the renormalization of ultraviolet divergences which arise at v^6 in post-Newtonian (PN) calculations requires the presence of two non-minimal worldline gravitational couplings linear in the Ricci curvature. However, these operators can be removed by a redefinition of the metric tensor, so that the divergences arising at v^6 have no physically observable effect. Because in the EFT finite size features are encoded in the coefficients of non-minimal couplings, this implies a simple proof of the decoupling of internal structure for spinless objects to at least order v^6. Neglecting absorptive effects, we find that the power counting rules of the EFT indicate that the next set of short distance operators, which are quadratic in the curvature and are associated with tidal deformations, do not play a role until order v^10. These operators, which encapsulate finite size properties of the sources, have coefficients that can be fixed by a matching calculation. By including the most general set of such operators, the EFT allows one to work within a point particle theory to arbitrary orders in v.
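
The hierarchy of the three scales can be written out explicitly. The following is a hedged aside that is not stated in the abstract: it assumes units with c = 1, takes r_s to be of order Gm, and uses the virial theorem for a bound orbit, so that all three scales are related through v alone.

```latex
\[
  r_s \;\ll\; r \;\ll\; \lambda \sim \frac{r}{v},
  \qquad
  v^2 \sim \frac{G m}{r} \sim \frac{r_s}{r}
  \;\;\Longrightarrow\;\;
  r_s : r : \lambda \;\sim\; v^2 : 1 : v^{-1}.
\]
```

Under these assumptions a single small parameter v controls both the finite-size corrections (powers of r_s/r) and the radiation corrections (powers of r/λ).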

08 Aug 2016

Low-mass "dwarf" galaxies represent the most significant challenges to the cold dark matter (CDM) model of cosmological structure formation. Because these faint galaxies are (best) observed within the Local Group (LG) of the Milky Way (MW) and Andromeda (M31), understanding their formation in such an environment is critical. We present first results from the Latte Project: the Milky Way on FIRE (Feedback in Realistic Environments). This simulation models the formation of a MW-mass galaxy to z = 0 within LCDM cosmology, including dark matter, gas, and stars at unprecedented resolution: baryon particle mass of 7070 Msun with gas kernel/softening that adapts down to 1 pc (with a median of 25 - 60 pc at z = 0). Latte was simulated using the GIZMO code with a mesh-free method for accurate hydrodynamics and the FIRE-2 model for star formation and explicit feedback within a multi-phase interstellar medium. For the first time, Latte self-consistently resolves the spatial scales corresponding to half-light radii of dwarf galaxies that form around a MW-mass host down to Mstar > 10^5 Msun. Latte's population of dwarf galaxies agrees with the LG across a broad range of properties: (1) distributions of stellar masses and stellar velocity dispersions (dynamical masses), including their joint relation; (2) the mass-metallicity relation; and (3) a diverse range of star-formation histories, including their mass dependence. Thus, Latte produces a realistic population of dwarf galaxies at Mstar > 10^5 Msun that does not suffer from the "missing satellites" or "too big to fail" problems of small-scale structure formation. We conclude that baryonic physics can reconcile observed dwarf galaxies with standard LCDM cosmology.

Rhombohedral multilayer graphene hosts a rich landscape of correlated symmetry-broken phases, driven by strong interactions from its flat band edges. Aligning to hexagonal boron nitride (hBN) creates a moiré pattern, leading to recent observations of exotic ground states such as integer and fractional quantum anomalous Hall effects. Here, we show that the moiré effects and resulting correlated phase diagrams are critically influenced by a previously underestimated structural choice: the hBN alignment orientation. This binary parameter distinguishes between configurations where the rhombohedral graphene and hBN lattices are aligned near 0° or 180°, a distinction that arises only because both materials break inversion symmetry. Although the two orientations produce the same moiré wavelength, we find their distinct local stacking configurations result in markedly different moiré potential strengths. Using low-temperature transport and scanning SQUID-on-tip magnetometry, we compare nearly identical devices that differ only in alignment orientation and observe sharply contrasting sequences of symmetry-broken states. Theoretical analysis reveals a simple mechanism based on lattice relaxation and the atomic-scale electronic structure of rhombohedral graphene, supported by detailed modeling. These findings establish hBN alignment orientation as a key control parameter in moiré-engineered graphene systems and provide a framework for interpreting both prior and future experiments.

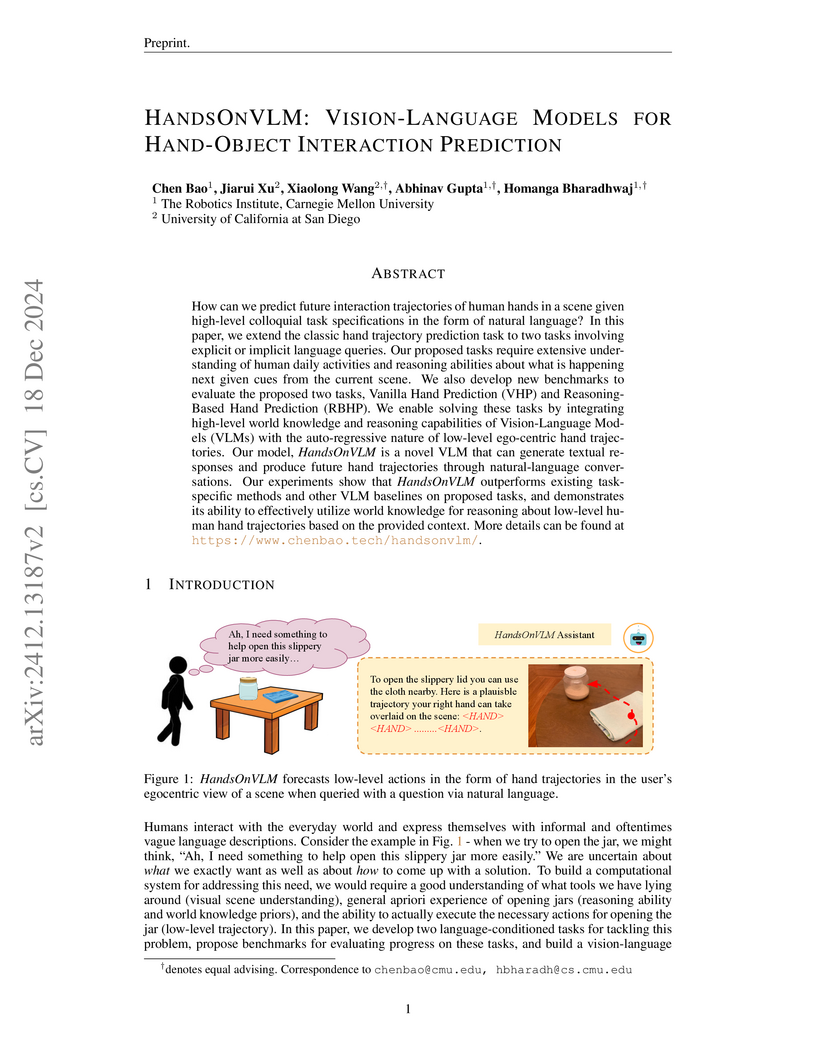

How can we predict future interaction trajectories of human hands in a scene given high-level colloquial task specifications in the form of natural language? In this paper, we extend the classic hand trajectory prediction task to two tasks involving explicit or implicit language queries. Our proposed tasks require extensive understanding of human daily activities and reasoning abilities about what should be happening next given cues from the current scene. We also develop new benchmarks to evaluate the proposed two tasks, Vanilla Hand Prediction (VHP) and Reasoning-Based Hand Prediction (RBHP). We enable solving these tasks by integrating high-level world knowledge and reasoning capabilities of Vision-Language Models (VLMs) with the auto-regressive nature of low-level ego-centric hand trajectories. Our model, HandsOnVLM, is a novel VLM that can generate textual responses and produce future hand trajectories through natural-language conversations. Our experiments show that HandsOnVLM outperforms existing task-specific methods and other VLM baselines on the proposed tasks, and demonstrates its ability to effectively utilize world knowledge for reasoning about low-level human hand trajectories based on the provided context. Our website contains code and detailed video results: this https URL

19 Nov 2024

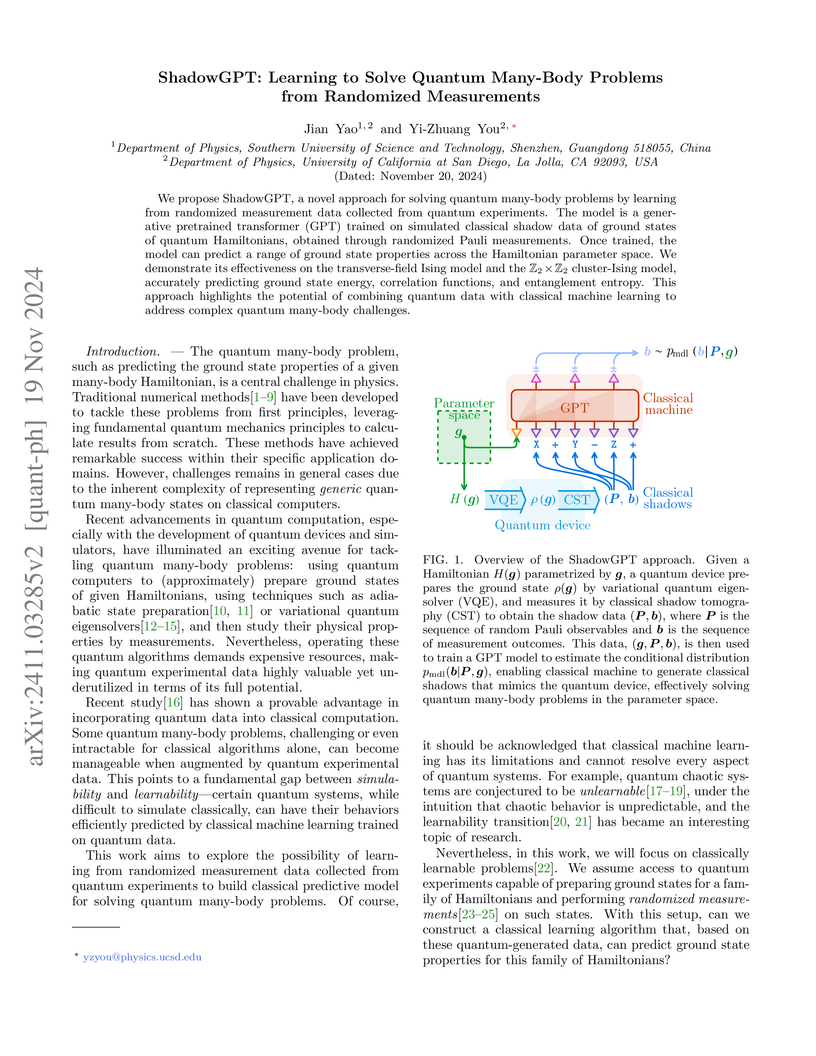

ShadowGPT, a generative pretrained transformer model, predicts quantum many-body ground state properties by learning from randomized measurement data, or classical shadows. The model accurately estimated ground state energies and local correlation functions for N=10 qubit transverse-field Ising and Z2xZ2 cluster-Ising models across continuous parameter spaces.
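
To make the term "classical shadows" concrete, here is a minimal sketch of the randomized-measurement estimator for a single qubit. It is not ShadowGPT's model or training pipeline; it only illustrates the kind of data the summary refers to. The function names, the choice of the state |0>, and the shot count are assumptions made for illustration.

```python
import random

def z_snapshot(basis, outcome):
    """Single-snapshot estimate of <Z> from one random Pauli measurement.

    For the snapshot rho_hat = 3 U^dag |b><b| U - I, Tr(Z rho_hat) equals
    3 * outcome when the Z basis was measured and 0 otherwise.
    """
    return 3.0 * outcome if basis == "Z" else 0.0

def measure_zero_state(basis, rng):
    """Simulate measuring |0> in a Pauli basis; returns eigenvalue +1 or -1."""
    if basis == "Z":
        return 1  # |0> is the +1 eigenstate of Z
    return rng.choice((1, -1))  # X and Y outcomes on |0> are unbiased coin flips

def estimate_z(num_shots, seed=0):
    """Average the snapshot estimates over uniformly random Pauli bases."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_shots):
        basis = rng.choice("XYZ")
        total += z_snapshot(basis, measure_zero_state(basis, rng))
    return total / num_shots
```

Calling `estimate_z(30000)` should return a value close to the exact expectation <Z> = 1 for |0>, with statistical fluctuations that shrink as the number of shots grows.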

How much current does a sliding electron crystal carry? The answer to this simple question has important implications for the dynamic properties of the crystal, such as the frequency of its cyclotron motion, and its phonon spectrum. In this work we introduce a precise definition of a sliding crystal and compute the corresponding current jc for topological electron crystals in the presence of magnetic field. Our result is fully non-perturbative, does not rely on Galilean invariance, and applies equally to Wigner crystals and (anomalous) Hall crystals. In terms of the electron density ρ and magnetic flux density ϕ, we find that jc=e(ρ−Cϕ)v. Surprisingly, the current receives a contribution from the many-body Chern number C of the crystal. When ρ=Cϕ, sliding crystals therefore carry zero current. The crystalline current fixes the Lorentz force felt by the sliding crystal and the dispersion of low-energy phonons of such crystals. This gives us a simple counting rule for the number of gapless phonons: if a sliding crystal carries nonzero current in a magnetic field, there is a single gapless mode, while otherwise there are two gapless modes. This result can also be understood from anomaly-matching of emanant discrete translation symmetries -- an idea that is also applicable to the dispersion of skyrmion crystals. Our results lead to novel experimental implications and invite further conceptual developments for electron crystals.
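
The current formula and the phonon counting rule stated above can be sketched in a few lines. This is only an illustration under stated assumptions: ϕ is taken in units where it is directly comparable to ρ (flux quanta per unit area), e defaults to 1, and the function names and tolerance are hypothetical.

```python
def crystal_current(rho, phi, chern, v, e=1.0):
    """Current of a sliding crystal, j_c = e * (rho - C * phi) * v."""
    return e * (rho - chern * phi) * v

def gapless_phonon_count(rho, phi, chern, tol=1e-12):
    """Counting rule from the abstract: one gapless mode if the sliding
    crystal carries nonzero current in a magnetic field, otherwise two."""
    in_field = abs(phi) > tol
    carries_current = abs(rho - chern * phi) > tol
    return 1 if (in_field and carries_current) else 2
```

For example, with ρ = 1, ϕ = 0.5 and C = 2 the factor ρ − Cϕ vanishes, so the crystal carries no current and the rule gives two gapless modes; with C = 1 the current is nonzero and the rule gives one.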

I define a set of wavefunctions for SU(N) lattice antiferromagnets, analogous to the valence bond solid states of Affleck, Kennedy, Lieb, and Tasaki (AKLT), in which the singlets are extended over N-site simplices. As with the valence bond solids, the new simplex solid (SS) states are extinguished by certain local projection operators, allowing us to construct Hamiltonians with local interactions which render the SS states exact ground states. Using a coherent state representation, we show that the quantum correlations in each SS state are calculable as the finite temperature correlations of an associated classical model, with N-spin interactions, on the same lattice. In three and higher dimensions, the SS states can spontaneously break SU(N) and exhibit N-sublattice long-ranged order, as a function of a discrete parameter which fixes the local representation of SU(N). I analyze this transition using a classical mean field approach. For N>2 the ordered state is selected via an "order by disorder" mechanism. As in the AKLT case, the bulk representations fractionalize at an edge, and the ground state entropy is proportional to the volume of the boundary.

25 Sep 2025

Multi-robot target tracking is a fundamental problem that requires coordinated monitoring of dynamic entities in applications such as precision agriculture, environmental monitoring, disaster response, and security surveillance. While Federated Learning (FL) has the potential to enhance learning across multiple robots without centralized data aggregation, its use in multi-Unmanned Aerial Vehicle (UAV) target tracking remains largely underexplored. Key challenges include limited onboard computational resources, significant data heterogeneity in FL due to varying targets and the fields of view, and the need for tight coupling between trajectory prediction and multi-robot planning. In this paper, we introduce DroneFL, the first federated learning framework specifically designed for efficient multi-UAV target tracking. We design a lightweight local model to predict target trajectories from sensor inputs, using a frozen YOLO backbone and a shallow transformer for efficient onboard training. The updated models are periodically aggregated in the cloud for global knowledge sharing. To alleviate the data heterogeneity that hinders FL convergence, DroneFL introduces a position-invariant model architecture with altitude-based adaptive instance normalization. Finally, we fuse predictions from multiple UAVs in the cloud and generate optimal trajectories that balance target prediction accuracy and overall tracking performance. Our results show that DroneFL reduces prediction error by 6%-83% and tracking distance by 0.4%-4.6% compared to a distributed non-FL framework. In terms of efficiency, DroneFL runs in real time on a Raspberry Pi 5 and has on average just 1.56 KBps data rate to the cloud.
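
The periodic cloud aggregation step can be illustrated with standard sample-weighted federated averaging (FedAvg). The abstract does not specify DroneFL's aggregation rule, so this is a generic sketch rather than the authors' method; the parameter layout (a dict of flat float lists per UAV) and the function name are assumptions.

```python
def fedavg(client_params, client_sizes):
    """Sample-weighted average of per-client parameters.

    client_params: list of dicts mapping a layer name to a flat list of floats.
    client_sizes:  number of local training samples held by each client.
    """
    total = sum(client_sizes)
    averaged = {}
    for name in client_params[0]:
        length = len(client_params[0][name])
        averaged[name] = [
            sum(p[name][i] * n for p, n in zip(client_params, client_sizes)) / total
            for i in range(length)
        ]
    return averaged
```

With two clients holding `{"w": [1.0, 2.0]}` and `{"w": [3.0, 4.0]}` and sample counts 1 and 3, the second client's weights count three times as much in the global model.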

20 Mar 2024

The gauge-invariant operators up to dimension six in the low-energy effective field theory below the electroweak scale are classified. There are 70 Hermitian dimension-five and 3631 Hermitian dimension-six operators that conserve baryon and lepton number, as well as ΔB=±ΔL=±1, ΔL=±2, and ΔL=±4 operators. The matching onto these operators from the Standard Model Effective Field Theory (SMEFT) up to order 1/Λ^2 is computed at tree level. SMEFT imposes constraints on the coefficients of the low-energy effective theory, which can be checked experimentally to determine whether the electroweak gauge symmetry is broken by a single fundamental scalar doublet as in SMEFT. Our results, when combined with the one-loop anomalous dimensions of the low-energy theory and the one-loop anomalous dimensions of SMEFT, allow one to compute the low-energy implications of new physics to leading-log accuracy, and combine them consistently with high-energy LHC constraints.

18 Nov 2022

We compute the one-loop matching between the Standard Model Effective Field Theory and the low-energy effective field theory below the electroweak scale, where the heavy gauge bosons, the Higgs particle, and the top quark are integrated out. The complete set of matching equations is derived including effects up to dimension six in the power counting of both theories. We present the results for general flavor structures and include both the CP-even and CP-odd sectors. The matching equations express the masses, gauge couplings, as well as the coefficients of dipole, three-gluon, and four-fermion operators in the low-energy theory in terms of the parameters of the Standard Model Effective Field Theory. Using momentum insertion, we also obtain the matching for the CP-violating theta angles. Our results provide an ingredient for a model-independent analysis of constraints on physics beyond the Standard Model. They can be used for fixed-order calculations at one-loop accuracy and represent a first step towards a systematic next-to-leading-log analysis.

29 Jun 2022

Researchers at UCSD developed a principled methodology for designing fair and efficient treatment allocation rules by integrating Pareto optimality with lexicographic preferences, first ensuring no group's welfare can be improved without harming another, and then minimizing unfairness among these efficient options. This approach offers theoretical guarantees for uniform convergence and optimal regret bounds for common fairness metrics, demonstrating superior performance in a calibrated educational policy targeting application.
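
The two-stage selection described above (efficiency first, fairness second) can be sketched as follows. This is a toy illustration over a finite list of candidate rules, not the paper's estimator; the candidate format, the function names, and the unfairness metric (gap between the best-off and worst-off group) are assumptions.

```python
def dominates(a, b):
    """True if welfare vector a is at least as good as b for every group
    and strictly better for at least one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(candidates):
    """Keep the rules whose group welfares are not dominated by any other rule."""
    return [
        c for c in candidates
        if not any(dominates(o["welfare"], c["welfare"]) for o in candidates)
    ]

def fairest_efficient(candidates):
    """Lexicographic choice: restrict to the Pareto front, then minimize unfairness."""
    front = pareto_front(candidates)
    return min(front, key=lambda c: max(c["welfare"]) - min(c["welfare"]))
```

The ordering matters: a rule that equalizes welfare at a low level for everyone is discarded in the first stage, even if its unfairness is zero.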

28 Jul 2023

Studying the impact of new-physics models on low-energy observables necessitates matching to effective field theories at the relevant mass thresholds. We introduce the first public version of Matchete, a computer tool for matching weakly-coupled models at one-loop order. It uses functional methods to directly compute all matching contributions in a manifestly gauge-covariant manner, while simplification methods eliminate redundant operators from the output. We sketch the workings of the program and provide examples of how to match simple Standard Model extensions. The package, documentation, and example notebooks are publicly available at this https URL

16 Sep 2025

Quantum circuits form a foundational framework in quantum science, enabling the description, analysis, and implementation of quantum computations. However, designing efficient circuits, typically constructed from single- and two-qubit gates, remains a major challenge for specific computational tasks. In this work, we introduce a novel artificial intelligence-driven protocol for quantum circuit design, benchmarked using shadow tomography for efficient quantum state readout. Inspired by techniques from natural language processing (NLP), our approach first selects a compact gate dictionary by optimizing the entangling power of two-qubit gates. We identify the iSWAP gate as a key element that significantly enhances sample efficiency, resulting in a minimal gate set of {I, SWAP, iSWAP}. Building on this, we implement a recurrent neural network trained via reinforcement learning to generate high-performing quantum circuits. The trained model demonstrates strong generalization ability, discovering efficient circuit architectures with low sample complexity beyond the training set. Our NLP-inspired framework offers broad potential for quantum computation, including extracting properties of logical qubits in quantum error correction.

15 Sep 2020

We present a model-independent anatomy of the ΔF=2 transitions K^0−K̄^0, B_{s,d}−B̄_{s,d} and D^0−D̄^0 in the context of the Standard Model Effective Field Theory (SMEFT). We present two master formulae for the mixing amplitude [M_12]_BSM: one in terms of the Wilson coefficients (WCs) of the Low-Energy Effective Theory (LEFT) operators evaluated at the electroweak scale μ_ew, and one in terms of the WCs of the SMEFT operators evaluated at the BSM scale Λ. The coefficients P_a^{ij} entering these formulae contain all the information below the scales μ_ew and Λ, respectively. Renormalization group effects from the top-quark Yukawa coupling play the most important role. The collection of the individual contributions of the SMEFT operators to [M_12]_BSM can be considered as the SMEFT ATLAS of ΔF=2 transitions and constitutes a travel guide to such transitions far beyond the scales explored by the LHC. We emphasize that this ATLAS depends on whether the down-basis or the up-basis for SMEFT operators is considered. We illustrate this technology with tree-level exchanges of heavy gauge bosons (Z′, G′) and corresponding heavy scalars.

We develop a theory of gapped domain walls between topologically ordered systems in two spatial dimensions. We find a new type of superselection sector -- referred to as the parton sector -- that subdivides the known superselection sectors localized on gapped domain walls. Moreover, we introduce and study the properties of composite superselection sectors that are made out of the parton sectors. We explain a systematic method to define these sectors, their fusion spaces, and their fusion rules, by deriving nontrivial identities relating their quantum dimensions and fusion multiplicities. We propose a set of axioms regarding the ground state entanglement entropy of systems that can host gapped domain walls, generalizing the bulk axioms proposed in [B. Shi, K. Kato, and I. H. Kim, Ann. Phys. 418, 168164 (2020)]. Similar to our analysis in the bulk, we derive our main results by examining the self-consistency relations of an object called the information convex set. As an application, we define an analog of topological entanglement entropy for gapped domain walls and derive its exact expression.

Replay is the reactivation of one or more neural patterns, which are similar to the activation patterns experienced during past waking experiences. Replay was first observed in biological neural networks during sleep, and it is now thought to play a critical role in memory formation, retrieval, and consolidation. Replay-like mechanisms have been incorporated into deep artificial neural networks that learn over time to avoid catastrophic forgetting of previous knowledge. Replay algorithms have been successfully used in a wide range of deep learning methods within supervised, unsupervised, and reinforcement learning paradigms. In this paper, we provide the first comprehensive comparison between replay in the mammalian brain and replay in artificial neural networks. We identify multiple aspects of biological replay that are missing in deep learning systems and hypothesize how they could be utilized to improve artificial neural networks.
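
As a concrete instance of the replay-like mechanisms mentioned above, here is a minimal sketch of a fixed-size replay buffer filled by reservoir sampling, one common way to keep a uniform sample of past examples for rehearsal. It is not taken from the paper; the class name, capacity, and seeding are assumptions made for illustration.

```python
import random

class ReplayBuffer:
    """Fixed-capacity store of past examples, filled by reservoir sampling
    so that every example seen so far is retained with equal probability."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, example):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Replace a stored example with probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = example

    def sample(self, k):
        """Draw up to k stored examples to interleave with new training data."""
        return self.rng.sample(self.items, min(k, len(self.items)))
```

During continual training, a learner would typically mix each new batch with a call to `sample(k)` so that earlier tasks keep contributing to the loss.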

The repulsive Hubbard model has been immensely useful in understanding strongly correlated electron systems, and serves as the paradigmatic model of the field. Despite its simplicity, it exhibits a strikingly rich phenomenology which is reminiscent of that observed in quantum materials. Nevertheless, much of its phase diagram remains controversial. Here, we review a subset of what is known about the Hubbard model, based on exact results or controlled approximate solutions in various limits, for which there is a suitable small parameter. Our primary focus is on the ground state properties of the system on various lattices in two spatial dimensions, although both lower and higher dimensions are discussed as well. Finally, we highlight some of the important outstanding open questions.

ETH Zurich; California Institute of Technology; University of Zurich; University of Chicago; Stanford University; Columbia University; University of Maryland; The Hebrew University; University of Arizona; University of Surrey; Fermi National Accelerator Laboratory; University of California at Berkeley; New Mexico State University; Universidad Autonoma de Madrid; University of California at San Diego; Max-Planck-Institut für Astrophysik

We introduce the AGORA project, a comprehensive numerical study of well-resolved galaxies within the LCDM cosmology. Cosmological hydrodynamic simulations with force resolutions of ~100 proper pc or better will be run with a variety of code platforms to follow the hierarchical growth, star formation history, morphological transformation, and the cycle of baryons in and out of 8 galaxies with halo masses M_vir ~= 1e10, 1e11, 1e12, and 1e13 Msun at z=0 and two different ("violent" and "quiescent") assembly histories. The numerical techniques and implementations used in this project include the smoothed particle hydrodynamics codes GADGET and GASOLINE, and the adaptive mesh refinement codes ART, ENZO, and RAMSES. The codes will share common initial conditions and common astrophysics packages including UV background, metal-dependent radiative cooling, metal and energy yields of supernovae, and stellar initial mass function. These are described in detail in the present paper. Subgrid star formation and feedback prescriptions will be tuned to provide a realistic interstellar and circumgalactic medium using a non-cosmological disk galaxy simulation. Cosmological runs will be systematically compared with each other using a common analysis toolkit, and validated against observations to verify that the solutions are robust - i.e., that the astrophysical assumptions are responsible for any success, rather than artifacts of particular implementations. The goals of the AGORA project are, broadly speaking, to raise the realism and predictive power of galaxy simulations and the understanding of the feedback processes that regulate galaxy "metabolism." The proof-of-concept dark matter-only test of the formation of a galactic halo with a z=0 mass of M_vir ~= 1.7e11 Msun by 9 different versions of the participating codes is also presented to validate the infrastructure of the project.
