University of Hildesheim

Real-world visual data exhibit intrinsic hierarchical structures that can be represented effectively in hyperbolic spaces. Hyperbolic neural networks (HNNs) are a promising approach for learning feature representations in such spaces. However, current HNNs in computer vision rely on Euclidean backbones and only project features to the hyperbolic space in the task heads, limiting their ability to fully leverage the benefits of hyperbolic geometry. To address this, we present HCNN, a fully hyperbolic convolutional neural network (CNN) designed for computer vision tasks. Based on the Lorentz model, we generalize fundamental components of CNNs and propose novel formulations of the convolutional layer, batch normalization, and multinomial logistic regression. {Experiments on standard vision tasks demonstrate the promising performance of our HCNN framework in both hybrid and fully hyperbolic settings.} Overall, we believe our contributions provide a foundation for developing more powerful HNNs that can better represent complex structures found in image data. Our code is publicly available at this https URL.

Hyperbolic deep learning has become a growing research direction in computer

vision due to the unique properties afforded by the alternate embedding space.

The negative curvature and exponentially growing distance metric provide a

natural framework for capturing hierarchical relationships between datapoints

and allowing for finer separability between their embeddings. However, current

hyperbolic learning approaches are still prone to overfitting, computationally

expensive, and prone to instability, especially when attempting to learn the

manifold curvature to adapt to tasks and different datasets. To address these

issues, our paper presents a derivation for Riemannian AdamW that helps

increase hyperbolic generalization ability. For improved stability, we

introduce a novel fine-tunable hyperbolic scaling approach to constrain

hyperbolic embeddings and reduce approximation errors. Using this along with

our curvature-aware learning schema for Lorentzian Optimizers enables the

combination of curvature and non-trivialized hyperbolic parameter learning. Our

approach demonstrates consistent performance improvements across Computer

Vision, EEG classification, and hierarchical metric learning tasks achieving

state-of-the-art results in two domains and drastically reducing runtime.

Time series forecasting is a crucial task in machine learning, as it has a wide range of applications including but not limited to forecasting electricity consumption, traffic, and air quality. Traditional forecasting models rely on rolling averages, vector auto-regression and auto-regressive integrated moving averages. On the other hand, deep learning and matrix factorization models have been recently proposed to tackle the same problem with more competitive performance. However, one major drawback of such models is that they tend to be overly complex in comparison to traditional techniques. In this paper, we report the results of prominent deep learning models with respect to a well-known machine learning baseline, a Gradient Boosting Regression Tree (GBRT) model. Similar to the deep neural network (DNN) models, we transform the time series forecasting task into a window-based regression problem. Furthermore, we feature-engineered the input and output structure of the GBRT model, such that, for each training window, the target values are concatenated with external features, and then flattened to form one input instance for a multi-output GBRT model. We conducted a comparative study on nine datasets for eight state-of-the-art deep-learning models that were presented at top-level conferences in the last years. The results demonstrate that the window-based input transformation boosts the performance of a simple GBRT model to levels that outperform all state-of-the-art DNN models evaluated in this paper.

Werner et al. introduce CDALBench, a cross-domain benchmark for Active Learning designed to provide statistically robust evaluations across diverse datasets (images, text, tabular) using 50 experimental repetitions. The benchmark reveals that no single AL method universally outperforms others and underscores the impact of evaluation rigor on reported performance.

Data augmentation is important for improving machine learning model

performance when faced with limited real-world data. In time series forecasting

(TSF), where accurate predictions are crucial in fields like finance,

healthcare, and manufacturing, traditional augmentation methods for

classification tasks are insufficient to maintain temporal coherence. This

research introduces two augmentation approaches using the discrete wavelet

transform (DWT) to adjust frequency elements while preserving temporal

dependencies in time series data. Our methods, Wavelet Masking (WaveMask) and

Wavelet Mixing (WaveMix), are evaluated against established baselines across

various forecasting horizons. To the best of our knowledge, this is the first

study to conduct extensive experiments on multivariate time series using

Discrete Wavelet Transform as an augmentation technique. Experimental results

demonstrate that our techniques achieve competitive results with previous

methods. We also explore cold-start forecasting using downsampled training

datasets, comparing outcomes to baseline methods.

Used car pricing is a critical aspect of the automotive industry, influenced by many economic factors and market dynamics. With the recent surge in online marketplaces and increased demand for used cars, accurate pricing would benefit both buyers and sellers by ensuring fair transactions. However, the transition towards automated pricing algorithms using machine learning necessitates the comprehension of model uncertainties, specifically the ability to flag predictions that the model is unsure about. Although recent literature proposes the use of boosting algorithms or nearest neighbor-based approaches for swift and precise price predictions, encapsulating model uncertainties with such algorithms presents a complex challenge. We introduce ProbSAINT, a model that offers a principled approach for uncertainty quantification of its price predictions, along with accurate point predictions that are comparable to state-of-the-art boosting techniques. Furthermore, acknowledging that the business prefers pricing used cars based on the number of days the vehicle was listed for sale, we show how ProbSAINT can be used as a dynamic forecasting model for predicting price probabilities for different expected offer duration. Our experiments further indicate that ProbSAINT is especially accurate on instances where it is highly certain. This proves the applicability of its probabilistic predictions in real-world scenarios where trustworthiness is crucial.

Modeling and calibrating the fidelity of synthetic data is paramount in

shaping the future of safe and reliable self-driving technology by offering a

cost-effective and scalable alternative to real-world data collection. We focus

on its role in safety-critical applications, introducing four types of

instance-level fidelity that go beyond mere visual input characteristics. The

aim is to ensure that applying testing on synthetic data can reveal real-world

safety issues, and the absence of safety-critical issues when testing under

synthetic data can provide a strong safety guarantee in real-world behavior. We

suggest an optimization method to refine the synthetic data generator, reducing

fidelity gaps identified by deep learning components. Experiments show this

tuning enhances the correlation between safety-critical errors in synthetic and

real data.

Given a new dataset D and a low compute budget, how should we choose a pre-trained model to fine-tune to D, and set the fine-tuning hyperparameters without risking overfitting, particularly if D is small? Here, we extend automated machine learning (AutoML) to best make these choices. Our domain-independent meta-learning approach learns a zero-shot surrogate model which, at test time, allows to select the right deep learning (DL) pipeline (including the pre-trained model and fine-tuning hyperparameters) for a new dataset D given only trivial meta-features describing D such as image resolution or the number of classes. To train this zero-shot model, we collect performance data for many DL pipelines on a large collection of datasets and meta-train on this data to minimize a pairwise ranking objective. We evaluate our approach under the strict time limit of the vision track of the ChaLearn AutoDL challenge benchmark, clearly outperforming all challenge contenders.

Researchers from the Information Systems and Machine Learning Lab (ISMLL) at the University of Hildesheim introduce CARCA, a next-item recommendation model that holistically integrates sequential user interactions, dynamic contextual features, and rich item attributes. The model leverages a cross-attention mechanism for scoring, which allows it to consider the entire user history, resulting in performance improvements of up to 53% in NDCG and Hit-Ratio compared to existing baselines.

Time-series forecasting research has converged to a small set of datasets and

a standardized collection of evaluation scenarios. Such a standardization is to

a specific extent needed for comparable research. However, the underlying

assumption is, that the considered setting is a representative for the problem

as a whole. In this paper, we challenge this assumption and show that the

current scenario gives a strongly biased perspective on the state of

time-series forecasting research. To be more detailed, we show that the current

evaluation scenario is heavily biased by the simplicity of the current

datasets. We furthermore emphasize, that when the lookback-window is properly

tuned, current models usually do not need any information flow across channels.

However, when using more complex benchmark data, the situation changes: Here,

modeling channel-interactions in a sophisticated manner indeed enhances

performances. Furthermore, in this complex evaluation scenario, Crossformer, a

method regularly neglected as an important baseline, is the SOTA method for

time series forecasting. Based on this, we present the Fast Channel-dependent

Transformer (FaCT), a simplified version of Crossformer which closes the

runtime gap between Crossformer and TimeMixer, leading to an efficient model

for complex forecasting datasets.

Time series data is ubiquitous in research as well as in a wide variety of industrial applications. Effectively analyzing the available historical data and providing insights into the far future allows us to make effective decisions. Recent research has witnessed the superior performance of transformer-based architectures, especially in the regime of far horizon time series forecasting. However, the current state of the art sparse Transformer architectures fail to couple down- and upsampling procedures to produce outputs in a similar resolution as the input. We propose the Yformer model, based on a novel Y-shaped encoder-decoder architecture that (1) uses direct connection from the downscaled encoder layer to the corresponding upsampled decoder layer in a U-Net inspired architecture, (2) Combines the downscaling/upsampling with sparse attention to capture long-range effects, and (3) stabilizes the encoder-decoder stacks with the addition of an auxiliary reconstruction loss. Extensive experiments have been conducted with relevant baselines on four benchmark datasets, demonstrating an average improvement of 19.82, 18.41 percentage MSE and 13.62, 11.85 percentage MAE in comparison to the current state of the art for the univariate and the multivariate settings respectively.

Tabular regression is a well-studied problem with numerous industrial applications, yet most existing approaches focus on point estimation, often leading to overconfident predictions. This issue is particularly critical in industrial automation, where trustworthy decision-making is essential. Probabilistic regression models address this challenge by modeling prediction uncertainty. However, many conventional methods assume a fixed-shape distribution (typically Gaussian), and resort to estimating distribution parameters. This assumption is often restrictive, as real-world target distributions can be highly complex. To overcome this limitation, we introduce TabResFlow, a Normalizing Spline Flow model designed specifically for univariate tabular regression, where commonly used simple flow networks like RealNVP and Masked Autoregressive Flow (MAF) are unsuitable. TabResFlow consists of three key components: (1) An MLP encoder for each numerical feature. (2) A fully connected ResNet backbone for expressive feature extraction. (3) A conditional spline-based normalizing flow for flexible and tractable density estimation. We evaluate TabResFlow on nine public benchmark datasets, demonstrating that it consistently surpasses existing probabilistic regression models on likelihood scores. Our results demonstrate 9.64% improvement compared to the strongest probabilistic regression model (TreeFlow), and on average 5.6 times speed-up in inference time compared to the strongest deep learning alternative (NodeFlow). Additionally, we validate the practical applicability of TabResFlow in a real-world used car price prediction task under selective regression. To measure performance in this setting, we introduce a novel Area Under Risk Coverage (AURC) metric and show that TabResFlow achieves superior results across this metric.

A new benchmark dataset for irregularly-sampled multivariate time series forecasting, Physiome-ODE, is presented by researchers from the University of Hildesheim and TU Berlin. This benchmark generates genuinely irregular and complex data based on biological ODE models, introducing a Joint Gradient Deviation score to quantify time series difficulty and better evaluate forecasting model performance.

19 Sep 2022

Solving the Traveling Salesperson Problem with Precedence Constraints by Deep Reinforcement Learning

Solving the Traveling Salesperson Problem with Precedence Constraints by Deep Reinforcement Learning

This work presents solutions to the Traveling Salesperson Problem with precedence constraints (TSPPC) using Deep Reinforcement Learning (DRL) by adapting recent approaches that work well for regular TSPs. Common to these approaches is the use of graph models based on multi-head attention (MHA) layers. One idea for solving the pickup and delivery problem (PDP) is using heterogeneous attentions to embed the different possible roles each node can take. In this work, we generalize this concept of heterogeneous attentions to the TSPPC. Furthermore, we adapt recent ideas to sparsify attentions for better scalability. Overall, we contribute to the research community through the application and evaluation of recent DRL methods in solving the TSPPC.

Probabilistic forecasting of irregularly sampled multivariate time series with missing values is an important problem in many fields, including health care, astronomy, and climate. State-of-the-art methods for the task estimate only marginal distributions of observations in single channels and at single timepoints, assuming a fixed-shape parametric distribution. In this work, we propose a novel model, ProFITi, for probabilistic forecasting of irregularly sampled time series with missing values using conditional normalizing flows. The model learns joint distributions over the future values of the time series conditioned on past observations and queried channels and times, without assuming any fixed shape of the underlying distribution. As model components, we introduce a novel invertible triangular attention layer and an invertible non-linear activation function on and onto the whole real line. We conduct extensive experiments on four datasets and demonstrate that the proposed model provides 4 times higher likelihood over the previously best model.

With the growth of social media, the spread of hate speech is also increasing rapidly. Social media are widely used in many countries. Also Hate Speech is spreading in these countries. This brings a need for multilingual Hate Speech detection algorithms. Much research in this area is dedicated to English at the moment. The HASOC track intends to provide a platform to develop and optimize Hate Speech detection algorithms for Hindi, German and English. The dataset is collected from a Twitter archive and pre-classified by a machine learning system. HASOC has two sub-task for all three languages: task A is a binary classification problem (Hate and Not Offensive) while task B is a fine-grained classification problem for three classes (HATE) Hate speech, OFFENSIVE and PROFANITY. Overall, 252 runs were submitted by 40 teams. The performance of the best classification algorithms for task A are F1 measures of 0.51, 0.53 and 0.52 for English, Hindi, and German, respectively. For task B, the best classification algorithms achieved F1 measures of 0.26, 0.33 and 0.29 for English, Hindi, and German, respectively. This article presents the tasks and the data development as well as the results. The best performing algorithms were mainly variants of the transformer architecture BERT. However, also other systems were applied with good success

Neural Combinatorial Optimization has been researched actively in the last

eight years. Even though many of the proposed Machine Learning based approaches

are compared on the same datasets, the evaluation protocol exhibits essential

flaws and the selection of baselines often neglects State-of-the-Art Operations

Research approaches. To improve on both of these shortcomings, we propose the

Routing Arena, a benchmark suite for Routing Problems that provides a seamless

integration of consistent evaluation and the provision of baselines and

benchmarks prevalent in the Machine Learning- and Operations Research field.

The proposed evaluation protocol considers the two most important evaluation

cases for different applications: First, the solution quality for an a priori

fixed time budget and secondly the anytime performance of the respective

methods. By setting the solution trajectory in perspective to a Best Known

Solution and a Base Solver's solutions trajectory, we furthermore propose the

Weighted Relative Average Performance (WRAP), a novel evaluation metric that

quantifies the often claimed runtime efficiency of Neural Routing Solvers. A

comprehensive first experimental evaluation demonstrates that the most recent

Operations Research solvers generate state-of-the-art results in terms of

solution quality and runtime efficiency when it comes to the vehicle routing

problem. Nevertheless, some findings highlight the advantages of neural

approaches and motivate a shift in how neural solvers should be conceptualized.

Amharic is the official language of the Federal Democratic Republic of

Ethiopia. There are lots of historic Amharic and Ethiopic handwritten documents

addressing various relevant issues including governance, science, religious,

social rules, cultures and art works which are very reach indigenous knowledge.

The Amharic language has its own alphabet derived from Ge'ez which is currently

the liturgical language in Ethiopia. Handwritten character recognition for non

Latin scripts like Amharic is not addressed especially using the advantages of

the state of the art techniques. This research work designs for the first time

a model for Amharic handwritten character recognition using a convolutional

neural network. The dataset was organized from collected sample handwritten

documents and data augmentation was applied for machine learning. The model was

further enhanced using multi-task learning from the relationships of the

characters. Promising results are observed from the later model which can

further be applied to word prediction.

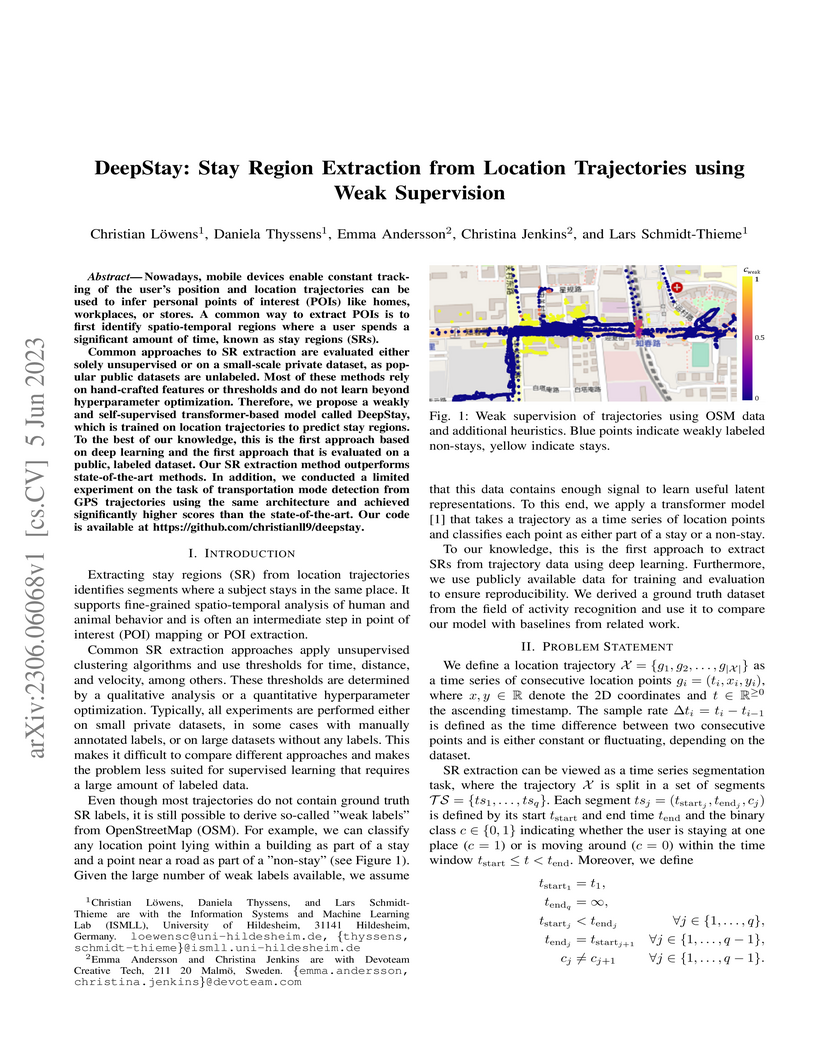

Nowadays, mobile devices enable constant tracking of the user's position and

location trajectories can be used to infer personal points of interest (POIs)

like homes, workplaces, or stores. A common way to extract POIs is to first

identify spatio-temporal regions where a user spends a significant amount of

time, known as stay regions (SRs).

Common approaches to SR extraction are evaluated either solely unsupervised

or on a small-scale private dataset, as popular public datasets are unlabeled.

Most of these methods rely on hand-crafted features or thresholds and do not

learn beyond hyperparameter optimization. Therefore, we propose a weakly and

self-supervised transformer-based model called DeepStay, which is trained on

location trajectories to predict stay regions. To the best of our knowledge,

this is the first approach based on deep learning and the first approach that

is evaluated on a public, labeled dataset. Our SR extraction method outperforms

state-of-the-art methods. In addition, we conducted a limited experiment on the

task of transportation mode detection from GPS trajectories using the same

architecture and achieved significantly higher scores than the

state-of-the-art. Our code is available at

this https URL

The minimization of loss functions is the heart and soul of Machine Learning. In this paper, we propose an off-the-shelf optimization approach that can minimize virtually any non-differentiable and non-decomposable loss function (e.g. Miss-classification Rate, AUC, F1, Jaccard Index, Mathew Correlation Coefficient, etc.) seamlessly. Our strategy learns smooth relaxation versions of the true losses by approximating them through a surrogate neural network. The proposed loss networks are set-wise models which are invariant to the order of mini-batch instances. Ultimately, the surrogate losses are learned jointly with the prediction model via bilevel optimization. Empirical results on multiple datasets with diverse real-life loss functions compared with state-of-the-art baselines demonstrate the efficiency of learning surrogate losses.

There are no more papers matching your filters at the moment.