VŠB – Technical University of Ostrava

15 Sep 2025

The accurate calculation of electronic potential energy surfaces for ground and excited states is crucial for understanding photochemical processes, particularly near conical intersections. While classical methods are limited by scaling and quantum algorithms by hardware, this thesis focuses on the State-Averaged Orbital-Optimized Variational Quantum Eigensolver (SA-OO-VQE). This hybrid quantum-classical algorithm provides a balanced description of multiple electronic states by combining quantum state preparation with classical state-averaged orbital optimization.

A key contribution is the implementation and evaluation of the Differential Evolution algorithm within the SA-OO-VQE framework, with a comparative study against classical optimizers like the Broyden-Fletcher-Goldfarb-Shanno (BFGS) and Sequential Least Squares Programming (SLSQP) algorithms. The performance of these optimizers is assessed by calculating ground and first excited state energies for H2, H4, and LiH.

The thesis also demonstrates SA-OO-VQE's capability to accurately model potential energy surfaces near conical intersections, using formaldimine as a case study. The results show that orbital optimization is essential for correctly capturing the potential energy surface topology, a task where standard methods with fixed orbitals fail. Our findings indicate that while Differential Evolution presents efficiency challenges, gradient-based methods like BFGS and SLSQP offer superior performance, confirming that the SA-OO-VQE approach is crucial for treating complex electronic structures.

The performance of the meta-heuristic algorithms often depends on their

parameter settings. Appropriate tuning of the underlying parameters can

drastically improve the performance of a meta-heuristic. The Ant Colony

Optimization (ACO), a population-based meta-heuristic algorithm inspired by the

foraging behavior of the ants, is no different. Fundamentally, the ACO depends

on the construction of new solutions on a variable-by-variable basis, using Gaussian

sampling of the selected variables from an archive of solutions. A

comprehensive performance analysis of the underlying parameters such as:

selection strategy, distance measure metric and pheromone evaporation rate of

the ACO suggests that the Roulette Wheel Selection strategy enhances the

performance of the ACO due to its ability to provide non-uniformity and

adequate diversity in the selection of a solution. On the other hand, the

Squared Euclidean distance-measure metric offers better performance than other

distance-measure metrics. It is observed from the analysis that the ACO is

sensitive towards the evaporation rate. Experimental comparison of the classical

ACO with other meta-heuristics suggests that the performance of the well-tuned

ACO surpasses that of its counterparts.
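
The Roulette Wheel Selection strategy mentioned above can be illustrated with a minimal sketch. It assumes each archived solution carries a non-negative weight and is picked with probability proportional to that weight; the function name `roulette_wheel_select` and the example weights are hypothetical and are not taken from the paper.

```python
import random


def roulette_wheel_select(weights, rng=random):
    """Return an index drawn with probability proportional to its weight."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    threshold = rng.random() * total
    cumulative = 0.0
    for index, weight in enumerate(weights):
        cumulative += weight
        if threshold < cumulative:
            return index
    return len(weights) - 1  # guard against floating-point round-off


# Hypothetical archive weights: better-ranked solutions get larger weights.
weights = [0.5, 0.3, 0.15, 0.05]
rng = random.Random(0)
counts = [0] * len(weights)
for _ in range(10_000):
    counts[roulette_wheel_select(weights, rng)] += 1
print(counts)
```

Every solution keeps a non-zero chance of being selected, which is one way to read the "non-uniformity and adequate diversity" attributed to this strategy.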

This study proposes a novel artificial protozoa optimizer (APO) that is

inspired by protozoa in nature. The APO mimics the survival mechanisms of

protozoa by simulating their foraging, dormancy, and reproductive behaviors.

The APO was mathematically modeled and implemented to perform the optimization

processes of metaheuristic algorithms. The performance of the APO was verified

via experimental simulations and compared with 32 state-of-the-art algorithms.

The Wilcoxon signed-rank test was performed for pairwise comparisons of the

proposed APO with the state-of-the-art algorithms, and the Friedman test was used

for multiple comparisons. First, the APO was tested using 12 functions of the

2022 IEEE Congress on Evolutionary Computation benchmark. Considering

practicality, the proposed APO was used to solve five popular engineering

design problems in a continuous space with constraints. Moreover, the APO was

applied to solve a multilevel image segmentation task in a discrete space with

constraints. The experiments confirmed that the APO could provide highly

competitive results for optimization problems. The source code of the Artificial

Protozoa Optimizer are publicly available at

this https URL and

this https URL

05 Nov 2025

Interatomic potentials are essential for molecular dynamics simulations of magnetic materials, yet incorporating magnetic features into potentials for complex antiferromagnets remains challenging. Nickel oxide (NiO), a prototypical cubic antiferromagnet, exemplifies this difficulty. Here we develop a methodology to integrate magnetic properties into interatomic potentials for cubic antiferromagnets by adding a magnetic Hamiltonian which includes both the Heisenberg exchange and Néel model. We apply this approach to NiO by constructing two potentials: one based on the Born model of ionic solids and another using a reference-free modified embedded atom method. Both potentials include magnetoelastic interactions and are validated against Density Functional Theory calculations, showing excellent agreement in mechanical and magnetic properties at zero temperature. These models enable large-scale simulations of magnetoelastic phenomena in antiferromagnets and open avenues for molecular dynamics studies involving coupled electric and magnetic fields in metal oxides.

30 Sep 2024

This paper presents the outcome of a study focused on the evolution of

internal damage in fresh cement mortar over 25 hours of hardening. An in situ

timelapse X-ray computed micro-tomography (μXCT) imaging method was used to

detect internal damage and capture its evolution in cement mortar hardening.

During μXCT scans, the temperature released during the cement hydration was

measured, which provided insight into the internal damage evolution with a link

to a hydration temperature rise. The measured temperature during cement mortar

hardening was compared with an analytical model, which showed a relatively good

agreement with the experimental data. Using 20 CT scans acquired throughout the

observed cement mortar hardening, it was possible to obtain a quantified

characterisation of the porous space. Additionally, the use of timelapse

μXCT imaging over 25 hours allowed for studying the crack growth inside the

meso-structure including its volume and surface characterisation. The results

provide valuable insights into cement mortar shrinkage and serve as a

proof-of-concept methodology for future material characterisation.

Recently, continual learning has received a lot of attention. One of the

significant problems is the occurrence of concept drift, which consists

of changing probabilistic characteristics of the incoming data. In the case of

the classification task, this phenomenon destabilizes the model's performance

and negatively affects the achieved prediction quality. Most current methods

apply statistical learning and similarity analysis over the raw data. However,

similarity analysis in streaming data remains a complex problem due to time

limitation, non-precise values, fast decision speed, scalability, etc. This

article introduces a novel method for monitoring changes in the probabilistic

distribution of multi-dimensional data streams. As a measure of the rapidity of

changes, we analyze the popular Kullback-Leibler divergence. During the

experimental study, we show how to use this metric to predict the concept drift

occurrence and understand its nature. The obtained results encourage further

work on the proposed method and its application in real tasks where the

prediction of the future appearance of concept drift plays a crucial role, such

as predictive maintenance.
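
As a rough illustration of the idea, the sketch below compares a reference window of a stream with later windows using the Kullback-Leibler divergence of their histograms. It is a simplified one-dimensional example under stated assumptions: the binning, the add-one smoothing, and the synthetic Gaussian data are illustrative choices, not the method of the article.

```python
import math
import random


def histogram(values, low, high, bins):
    """Smoothed relative frequencies over equal-width bins on [low, high]."""
    counts = [1.0] * bins  # add-one smoothing avoids log(0) for empty bins
    width = (high - low) / bins
    for v in values:
        index = min(max(int((v - low) / width), 0), bins - 1)  # clamp outliers
        counts[index] += 1.0
    total = sum(counts)
    return [c / total for c in counts]


def kl_divergence(p, q):
    """D_KL(p || q) for two discrete distributions on the same bins."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


rng = random.Random(1)
reference = [rng.gauss(0.0, 1.0) for _ in range(2000)]
same = [rng.gauss(0.0, 1.0) for _ in range(2000)]     # no drift
drifted = [rng.gauss(2.0, 1.0) for _ in range(2000)]  # mean has shifted

p_ref = histogram(reference, -6.0, 8.0, 14)
kl_same = kl_divergence(p_ref, histogram(same, -6.0, 8.0, 14))
kl_drift = kl_divergence(p_ref, histogram(drifted, -6.0, 8.0, 14))
print(kl_same, kl_drift)
```

A divergence that stays near zero suggests a stable distribution, while a sustained rise between consecutive windows is the kind of signal that could be monitored for drift.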

14 Jan 2025

OstravaJ is a Python package for high-throughput calculation of exchange interaction terms in the Heisenberg model for magnetic materials. It uses the total energy difference method, where calculations are based on the total energy of the system in different magnetic configurations, calculated by means of density functional theory. OstravaJ can propose a suitable set of magnetic configurations, generate VASP configuration files in cooperation with the user, and read VASP calculation results, which minimizes necessary human interaction. It can also calculate other relevant properties (e.g., MFA and RPA critical temperature, spin-wave stiffness) and provide input for various atomistic spin dynamics codes.

We present results for a number of materials from various classes (metals, transition metal oxides), compared to other methods. They show that the total energy difference method is a useful method for exchange interaction calculation from first principles.
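
The total energy difference method can be sketched as a small linear fit. The example below is not OstravaJ's API. It assumes a collinear Heisenberg model in which the energy per atom of configuration k is E_k = E0 + c1_k J1 + c2_k J2, with hypothetical configuration coefficients and synthetic energies standing in for DFT results.

```python
import numpy as np

# One row per magnetic configuration, columns [1, c1, c2].
# The coefficients are hypothetical; in practice they follow from the
# spin arrangement of each configuration and the chosen sign convention.
design = np.array([
    [1.0, -4.0, -3.0],  # ferromagnetic
    [1.0,  4.0, -3.0],  # antiferromagnetic, variant A
    [1.0,  0.0,  3.0],  # antiferromagnetic, variant B
])

# Synthetic "total energies" (eV per atom) generated from known parameters,
# so that the fit can be checked against them.
true_params = np.array([-8.20, 0.015, -0.004])  # E0, J1, J2
energies = design @ true_params

# Least-squares solution; with more configurations than unknowns the same
# call gives an overdetermined fit.
fitted, *_ = np.linalg.lstsq(design, energies, rcond=None)
e0, j1, j2 = fitted
print(f"E0 = {e0:.3f} eV, J1 = {j1 * 1e3:.1f} meV, J2 = {j2 * 1e3:.1f} meV")
```

Using more configurations than unknown exchange terms is a common way to check whether the chosen set of interactions is sufficient, since a poor fit shows up as large residuals.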

22 Aug 2019

Applications often communicate data that is non-contiguous in the send- or

the receive-buffer, e.g., when exchanging a column of a matrix stored in

row-major order. While non-contiguous transfers are well supported in HPC

(e.g., MPI derived datatypes), they can still be up to 5x slower than

contiguous transfers of the same size. As we enter the era of network

acceleration, we need to investigate which tasks to offload to the NIC: In this

work we argue that non-contiguous memory transfers can be transparently

network-accelerated, truly achieving zero-copy communications. We implement and

extend sPIN, a packet streaming processor, within a Portals 4 NIC SST model,

and evaluate strategies for NIC-offloaded processing of MPI datatypes, ranging

from datatype-specific handlers to general solutions for any MPI datatype. We

demonstrate up to 10x speedup in the unpack throughput of real applications,

showing that non-contiguous memory transfers are a first-class candidate

for network acceleration.
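
The matrix-column case mentioned above can be made concrete with a short sketch. It mimics the (count, blocklength, stride) description used by MPI's `MPI_Type_vector` in plain Python; the helper names `vector_offsets`, `pack`, and `unpack` are hypothetical, and the code only illustrates the access pattern, not the NIC-offloaded implementation.

```python
def vector_offsets(count, blocklength, stride, start=0):
    """Element offsets of `count` blocks of `blocklength`, `stride` apart."""
    return [start + i * stride + j
            for i in range(count)
            for j in range(blocklength)]


def pack(buffer, offsets):
    """Gather scattered elements into a contiguous send buffer."""
    return [buffer[o] for o in offsets]


def unpack(packed, buffer, offsets):
    """Scatter a contiguous receive buffer back to its strided locations."""
    for offset, value in zip(offsets, packed):
        buffer[offset] = value


rows, cols = 3, 4
matrix = list(range(rows * cols))  # row-major 3x4 matrix: 0..11

# Column 1 of a row-major matrix: one element per row, `cols` elements apart.
offsets = vector_offsets(count=rows, blocklength=1, stride=cols, start=1)
column = pack(matrix, offsets)

receiver = [0] * (rows * cols)
unpack(column, receiver, offsets)
print(offsets, column)
```

The `pack` and `unpack` steps are the extra copies that a zero-copy scheme aims to avoid by letting the network hardware follow the offsets directly.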

27 Mar 2025

Graph Neural Networks have been extensively applied in the field of machine

learning to find features of graphs, and recommendation systems are no

exception. The ratings of users on considered items can be represented by

graphs which are input for many efficient models to find out the

characteristics of the users and the items. From these insights, relevant items

are recommended to users. However, users' decisions on the items have varying

degrees of effects on different users, and this information should be learned

so as not to be lost in the process of information mining.

In this publication, we propose to build an additional graph showing the

recommended weight of an item to a target user to improve the accuracy of GNN

models. Although the users' friendships were not recorded, their correlation

was still evident through the commonalities in consumption behavior. We build a

model, WiGCN (Weighted input GCN), and evaluate it on well-known

datasets. We draw conclusions by comparing our results with

state-of-the-art models such as GCMC, NGCF, and LightGCN. The source code is

available at this https URL

This article introduces a robust hybrid method for solving supervised learning tasks, which uses the Echo State Network (ESN) model and the Particle Swarm Optimization (PSO) algorithm. An ESN is a Recurrent Neural Network with the hidden-hidden weights fixed in the learning process. The recurrent part of the network stores the input information in internal states of the network. Another structure forms a free-memory method used as a supervised learning tool. The procedure for initializing the recurrent structure of the ESN model can affect the model's performance. On the other hand, the PSO has been shown to be a successful technique for finding optimal points in complex spaces. Here, we present an approach that uses the PSO to find some initial hidden-hidden weights of the ESN model. We present empirical results that compare the canonical ESN model with this hybrid method on a wide range of benchmark problems.

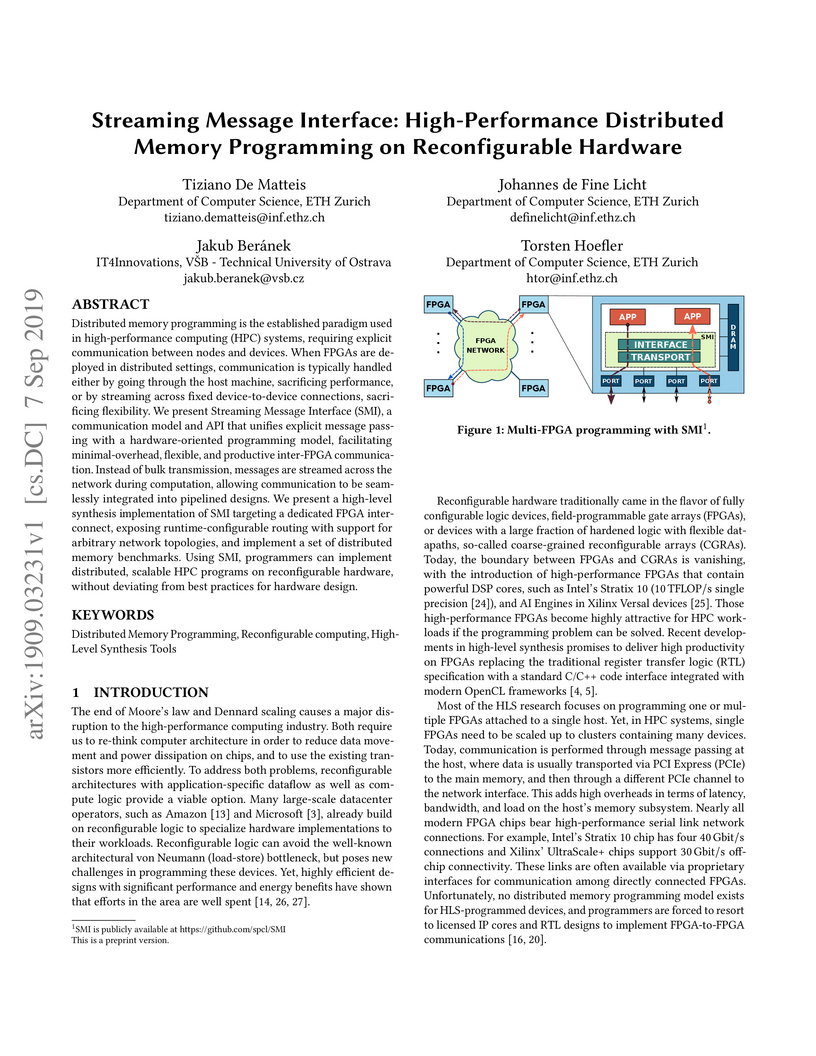

Distributed memory programming is the established paradigm used in

high-performance computing (HPC) systems, requiring explicit communication

between nodes and devices. When FPGAs are deployed in distributed settings,

communication is typically handled either by going through the host machine,

sacrificing performance, or by streaming across fixed device-to-device

connections, sacrificing flexibility. We present Streaming Message Interface

(SMI), a communication model and API that unifies explicit message passing with

a hardware-oriented programming model, facilitating minimal-overhead, flexible,

and productive inter-FPGA communication. Instead of bulk transmission, messages

are streamed across the network during computation, allowing communication to

be seamlessly integrated into pipelined designs. We present a high-level

synthesis implementation of SMI targeting a dedicated FPGA interconnect,

exposing runtime-configurable routing with support for arbitrary network

topologies, and implement a set of distributed memory benchmarks. Using SMI,

programmers can implement distributed, scalable HPC programs on reconfigurable

hardware, without deviating from best practices for hardware design.

30 Jul 2021

Inhibition of steel corrosion with imidazolium-based compounds -- experimental and theoretical study

This work aims to investigate the corrosion inhibition of mild steel in

the 1 M HCl solution by 1-octyl-3-methylimidazolium hydrogen sulphate,

1-butyl-3-methylimidazolium hydrogen sulphate, and 1-octyl-3-methylimidazolium

chloride, using electrochemical, weight loss, and surface analysis methods as

well as the full quantum-mechanical treatment. Polarization measurements prove

that studied compounds are mixed-type inhibitors with a predominantly anodic

reaction. The inhibition efficiency obtained from the polarization curves is

about 80-92% for all of the 1-octyl-3-methylimidazolium salts with a

concentration higher than 0.005 mol/l, while it is much lower for

1-butyl-3-methylimidazolium hydrogen sulphate. The values measured in the

weight loss experiments (after seven days) are to some extent higher (reaching

up to 98% efficiency). Furthermore, we have shown that the influence of the

alkyl chain length on the inhibition efficiency is much larger than that of the

anion type. In addition, we obtain a realistic model of a single molecule on

the Fe(110) iron surface by applying Density Functional Theory calculations. We

use the state-of-the-art computational approach, including the meta-GGA

strongly-constrained and appropriately normed semilocal density functional to

model the electronic structure properties of both free and surface-bound

molecules of 1-butyl-, 1-hexyl-, and 1-octyl-3-methylimidazolium bromide,

chloride, and hydrogen sulphate. From the calculations, we extract the

HOMO/LUMO gap, hardness, electronegativity, and charge transfer of electrons

from/to the molecules in question. This supports the experimental findings and

explains the influence of the alkyl chain length and the functional group on

the inhibition process.

NiBr2, similar to NiI2, exhibits the onset of collinear antiferromagnetism at

a subroom temperature and, with further cooling, undergoes a transition to a

helimagnetic ordering associated with multiferroic behavior. This work

investigates the hydrostatic pressure effects on magnetic phase transitions in

NiBr2. We measured isobaric temperature dependencies of AC magnetic

susceptibility at various pressures up to 3 GPa. The experimental data are

interpreted in conjunction with the results of theoretical calculations focused

on pressure influence on the hierarchy of exchange interactions. Contrary to

the NiI2 case, the phase transition to helimagnetism rapidly shifts to lower

temperatures with increasing pressure. Similar to the NiI2 case, the Néel

temperature increases with pressure. The rate of increase accelerates when the

helimagnetic phase is suppressed by pressure. The ab initio calculations link

these contrasting trends to pressure-enhanced magnetic exchange interactions.

Similarly to the NiI2 case, the stabilization of the collinear AFM phase is

driven primarily by the second-nearest interlayer coupling (j2'). Furthermore,

the ratio of in-plane interactions makes helimagnetic order in NiBr2 much more

volatile, which permits its suppression by even small pressures. These

findings highlight the principal role of interlayer interactions in the

distinct response of NiBr2 and NiI2 magnetic phases to external pressure.

The article presents an application of Hidden Markov Models (HMMs) for pattern recognition on genome sequences. We apply HMMs to identify genes encoding the Variant Surface Glycoprotein (VSG) in the genomes of Trypanosoma brucei (T. brucei) and other African trypanosomes. These parasitic protozoa are the causative agents of sleeping sickness and several diseases in domestic and wild animals. These parasites have a peculiar strategy to evade the host's immune system that consists in periodically changing their predominant cellular surface protein (VSG). The motivation for using pattern recognition methods to identify these genes, instead of traditional homology-based ones, is that the levels of sequence identity (amino acid and DNA sequence) amongst these genes are often below what is considered reliable in these methods. Among pattern recognition approaches, HMMs are particularly suitable to tackle this problem because they can handle the determination of gene edges more naturally. We evaluate the performance of the model using different numbers of states in the Markov model, as well as several performance metrics. The model is applied using public genomic data. Our empirical results show that the VSG genes of T. brucei can be safely identified (high sensitivity and low rate of false positives) using HMMs.

23 Apr 2008

Today there are many universal compression algorithms, but in most cases it is better to use an algorithm specific to the data: JPEG for images, MPEG for movies, etc. For textual documents there are special methods based on the PPM algorithm or methods with non-character access, e.g. word-based compression. In the past, several papers describing variants of word-based compression using Huffman encoding or the LZW method were published. The subject of this paper is the description of a word-based compression variant based on the LZ77 algorithm. The LZ77 algorithm and its modifications are described in this paper. Moreover, various ways of implementing the sliding window and various possibilities of output encoding are described as well. This paper also includes the implementation of an experimental application, testing of its efficiency, and finding the best combination of all parts of the LZ77 coder in order to achieve the best compression ratio. In conclusion, the implemented application is compared with other word-based compression programs and with other commonly used compression programs.
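
To make the word-based idea concrete, here is a minimal sketch of LZ77 operating on word tokens instead of characters. It is an illustration under stated assumptions, not the paper's coder: the tokenizer regex, the brute-force window search, the window size of 64 tokens, and the plain (offset, length, literal) output triples are all choices made for brevity.

```python
import re


def tokenize(text):
    """Split text into alternating word and non-word tokens (lossless)."""
    return re.findall(r"\w+|\W+", text)


def lz77_encode(tokens, window=64):
    """Encode tokens as (offset, length, literal) triples."""
    triples = []
    i = 0
    while i < len(tokens):
        best_length, best_offset = 0, 0
        for j in range(max(0, i - window), i):
            length = 0
            # Stop one token early so a literal always remains to be emitted.
            while (i + length < len(tokens) - 1
                   and tokens[j + length] == tokens[i + length]):
                length += 1
            if length > best_length:
                best_length, best_offset = length, i - j
        triples.append((best_offset, best_length, tokens[i + best_length]))
        i += best_length + 1
    return triples


def lz77_decode(triples):
    """Rebuild the token list by copying from the already decoded output."""
    tokens = []
    for offset, length, literal in triples:
        start = len(tokens) - offset
        for k in range(length):
            tokens.append(tokens[start + k])
        tokens.append(literal)
    return tokens


text = "to be or not to be, that is the question: to be or not to be"
tokens = tokenize(text)
triples = lz77_encode(tokens)
restored = "".join(lz77_decode(triples))
print(len(tokens), len(triples))
```

A practical coder would replace the brute-force search with an indexed sliding window and would entropy-code the triples, which is where the choices of window implementation and output encoding come in.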

MAELAS is a computer program for the calculation of magnetocrystalline anisotropy energy, anisotropic magnetostrictive coefficients and magnetoelastic constants in an automated way. The method originally implemented in version 1.0 of MAELAS was based on the length optimization of the unit cell, proposed by Wu and Freeman, to calculate the anisotropic magnetostrictive coefficients. We present here a revised and updated version (v2.0) of MAELAS, where we added a new methodology to compute anisotropic magnetoelastic constants from a linear fitting of the energy versus applied strain. We analyze and compare the accuracy of both methods showing that the new approach is more reliable and robust than the one implemented in version 1.0, especially for non-cubic crystal symmetries. This analysis also helps us to find that the accuracy of the method implemented in version 1.0 could be improved by using deformation gradients derived from the equilibrium magnetoelastic strain tensor, as well as potential future alternative methods like the strain optimization method. Additionally, we clarify the role of the demagnetized state in the fractional change in length, and derive the expression for saturation magnetostriction for polycrystals with trigonal, tetragonal and orthorhombic crystal symmetry. In this new version, we also fix some issues related to trigonal crystal symmetry found in version 1.0.

24 Jun 2021

An anisotropic charge distribution on individual atoms, such as the

σ-hole, may strongly affect material and structural properties of

systems. Nevertheless, subatomic resolution of such anisotropic charge

distributions represents a long-standing experimental challenge. In particular,

the existence of the σ-hole on halogen atoms has been demonstrated only

indirectly through determination of crystal structures of organic molecules

containing halogens or via theoretical calculations. Nevertheless, its direct

experimental visualization has not been reported yet. Here we demonstrate that

Kelvin probe force microscopy, with a properly functionalized probe, can reach

subatomic resolution, imaging the σ-hole or the quadrupolar charge of a

carbon monoxide molecule. This achievement opens a new way to characterize

biological and chemical systems where anisotropic atomic charges play a decisive

role.

The Echo State Network (ESN) is a class of Recurrent Neural Network with a large number of hidden-hidden weights (in the so-called reservoir). Canonical ESN and its variations have recently received significant attention due to their remarkable success in the modeling of non-linear dynamical systems. The reservoir is randomly connected with fixed weights that don't change in the learning process. Only the weights from reservoir to output are trained. Since the reservoir is fixed during the training procedure, we may wonder if the computational power of the recurrent structure is fully harnessed. In this article, we propose a new computational model of the ESN type that represents the reservoir weights in the Fourier space and fine-tunes these weights by applying genetic algorithms in the frequency domain. The main interest is that this procedure works in a much smaller space than the classical ESN, thus providing a dimensionality reduction transformation of the initial method. The proposed technique allows us to exploit the benefits of the large recurrent structure while avoiding the training problems of gradient-based methods. We provide a detailed experimental study that demonstrates the good performance of our approach with well-known chaotic systems and real-world data.
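
The canonical ESN described above, with a fixed random reservoir and a trained linear readout, can be sketched in a few lines of NumPy. This is a baseline illustration only and does not include the Fourier-space representation or the genetic algorithm proposed in the article. The reservoir size, spectral radius of 0.9, ridge parameter, and the sine-wave task are assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
n_reservoir, washout, ridge = 100, 100, 1e-4

# Fixed random weights: neither W_in nor W is changed by training.
W_in = rng.uniform(-0.5, 0.5, size=n_reservoir)
W = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_reservoir))
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))  # rescale spectral radius


def run_reservoir(inputs):
    """Drive the reservoir with a scalar input sequence, collect states."""
    state = np.zeros(n_reservoir)
    states = []
    for u in inputs:
        state = np.tanh(W_in * u + W @ state)
        states.append(state.copy())
    return np.array(states)


# Toy task: one-step-ahead prediction of a sine wave.
signal = np.sin(0.2 * np.arange(1201))
inputs, targets = signal[:-1], signal[1:]
states = run_reservoir(inputs)

# Train only the readout, by ridge regression on states after the washout.
X, y = states[washout:1000], targets[washout:1000]
W_out = np.linalg.solve(X.T @ X + ridge * np.eye(n_reservoir), X.T @ y)

# Evaluate on the held-out tail of the sequence.
predictions = states[1000:] @ W_out
test_mse = float(np.mean((predictions - targets[1000:]) ** 2))
print(test_mse)
```

Because only `W_out` is fitted, training reduces to a single linear solve, which is the property that makes the fixed reservoir attractive and also motivates the question of whether its weights could be tuned more cheaply.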

For many years, Evolutionary Algorithms (EAs) have been applied to improve

Neural Networks (NNs) architectures. They have been used for solving different

problems, such as training the networks (adjusting the weights), designing

network topology, optimizing global parameters, and selecting features. Here,

we provide a systematic brief survey about applications of the EAs on the

specific domain of the recurrent NNs named Reservoir Computing (RC). At the

beginning of the 2000s, the RC paradigm appeared as a good option for employing

recurrent NNs without dealing with the inconveniences of the training

algorithms. RC models use a nonlinear dynamic system, with a fixed recurrent

neural network named the reservoir, and the learning process is restricted

to adjusting a linear parametric function. However, an RC model has several

hyper-parameters, so

EAs are helpful tools to figure out optimal RC architectures. We provide an

overview of the results on the area, discuss novel advances, and we present our

vision regarding the new trends and still open questions.
