Zhejiang University of Science and Technology

Graphical User Interface (GUI) grounding, the task of mapping natural language instructions to precise screen coordinates, is fundamental to autonomous GUI agents. While existing methods achieve strong performance through extensive supervised training or reinforcement learning with labeled rewards, they remain constrained by the cost and availability of pixel-level annotations. We observe that when models generate multiple predictions for the same GUI element, the spatial overlap patterns reveal implicit confidence signals that can guide more accurate localization. Leveraging this insight, we propose GUI-RC (Region Consistency), a test-time scaling method that constructs spatial voting grids from multiple sampled predictions to identify consensus regions where models show highest agreement. Without any training, GUI-RC improves accuracy by 2-3% across various architectures on ScreenSpot benchmarks. We further introduce GUI-RCPO (Region Consistency Policy Optimization), transforming these consistency patterns into rewards for test-time reinforcement learning. By computing how well each prediction aligns with the collective consensus, GUI-RCPO enables models to iteratively refine their outputs on unlabeled data during inference. Extensive experiments demonstrate the generality of our approach: using only 1,272 unlabeled data, GUI-RCPO achieves 3-6% accuracy improvements across various architectures on ScreenSpot benchmarks. Our approach reveals the untapped potential of test-time scaling and test-time reinforcement learning for GUI grounding, offering a promising path toward more data-efficient GUI agents.

GVMGen is introduced as a general model for generating multi-style, multi-track waveform music from video, employing hierarchical attention mechanisms for implicit visual-musical feature alignment. The model achieves superior music-video correspondence and generative diversity compared to existing methods, supported by a novel objective evaluation framework and a new large-scale dataset including Chinese traditional music.

15 Oct 2025

Nonlinear spin systems exhibit rich and exotic dynamical phenomena, offering promising applications ranging from spin masers and time crystals to precision measurement. Recent theoretical work [T. Wang et al., Commun. Phys. 8, 41 (2025)] predicted intriguing nonlinear dynamical phases arising from inhomogeneous magnetic fields and feedback interactions. However, experimental exploration of these predictions remains lacking. Here, we report the observation of nonlinear spin dynamics in dual-bias magnetic fields with dual-cell alkali-metal atomic gases and present three representative stable dynamical behaviors of limit cycles, quasi-periodic orbits, and chaos. Additionally, we probe the nonlinear phase transitions between these phases by varying the feedback gain and the difference of dual-bias magnetic fields. Furthermore, we demonstrate the robustness of the limit cycle and quasi-periodic orbit against the noise of magnetic fields. Our findings establish a versatile platform for exploring complex spin dynamics and open new avenues for the realization of multimode spin masers, time crystals and quasi-crystals, and high-precision magnetometers.

13 Oct 2025

Tuning Layer Orbital Hall Effect via Spin Rotation in Ferromagnetic Transition Metal Dichalcogenides

Tuning Layer Orbital Hall Effect via Spin Rotation in Ferromagnetic Transition Metal Dichalcogenides

Orbitronics, which leverages the angular momentum of atomic orbitals for information transmission, provides a novel strategy to overcome the limitations of electronic devices. Unlike electron spin, orbital angular momentum (OAM) is strongly influenced by crystal field effects and band topology, making its orientation difficult to manipulate with external fields. In this work, by using first principle calculations, we investigate quantum anomalous Hall insulators (QAHIs) as a model system to study the layer orbital Hall effect (OHE). Due to band inversion, only one valley remains orbital polarization, and thus the OHE originates from a single valley. Based on stacking symmetry analysis, we investigated both AA and AB stacking configurations, which possess mirror and inversion symmetries, respectively. The excitation of OAM exhibits valley selectivity, determined jointly by valley polarization and orbital polarization. In AA stacking, the absence of inversion center gives rise to intrinsic orbital polarization, leading to OAM excitations from different valleys in the two layers. In contrast, AB stacking is protected by inversion symmetry, which enforces valley polarization and

26 Apr 2024

In this paper, we define the rarefaction and compression characters for the supersonic expanding wave of the compressible Euler equations with radial symmetry. Under this new definition, we show that solutions with rarefaction initial data will not form shock in finite time, i.e. exist global-in-time as classical solutions. On the other hand, singularity forms in finite time when the initial data include strong compression somewhere. Several useful invariant domains will be also given.

Zhejiang UniversityChongqing UniversityUniversity of MacauHainan UniversityZhejiang University of TechnologyChina Academy of Information and Communications TechnologyFuzhou UniversityZhejiang University of Science and TechnologyChina Three Gorges UniversityGuangdong University of Science and TechnologyChiYU Intelligence Technology (Suzhou) Ltd

Zhejiang UniversityChongqing UniversityUniversity of MacauHainan UniversityZhejiang University of TechnologyChina Academy of Information and Communications TechnologyFuzhou UniversityZhejiang University of Science and TechnologyChina Three Gorges UniversityGuangdong University of Science and TechnologyChiYU Intelligence Technology (Suzhou) LtdMicroalgae, vital for ecological balance and economic sectors, present

challenges in detection due to their diverse sizes and conditions. This paper

summarizes the second "Vision Meets Algae" (VisAlgae 2023) Challenge, aiming to

enhance high-throughput microalgae cell detection. The challenge, which

attracted 369 participating teams, includes a dataset of 1000 images across six

classes, featuring microalgae of varying sizes and distinct features.

Participants faced tasks such as detecting small targets, handling motion blur,

and complex backgrounds. The top 10 methods, outlined here, offer insights into

overcoming these challenges and maximizing detection accuracy. This

intersection of algae research and computer vision offers promise for

ecological understanding and technological advancement. The dataset can be

accessed at: this https URL

Depth completion is a key task in autonomous driving, aiming to complete sparse LiDAR depth measurements into high-quality dense depth maps through image guidance. However, existing methods usually treat depth maps as an additional channel of color images, or directly perform convolution on sparse data, failing to fully exploit the 3D geometric information in depth maps, especially with limited performance in complex boundaries and sparse areas. To address these issues, this paper proposes a depth completion network combining channel attention mechanism and 3D global feature perception (CGA-Net). The main innovations include: 1) Utilizing PointNet++ to extract global 3D geometric features from sparse depth maps, enhancing the scene perception ability of low-line LiDAR data; 2) Designing a channel-attention-based multimodal feature fusion module to efficiently integrate sparse depth, RGB images, and 3D geometric features; 3) Combining residual learning with CSPN++ to optimize the depth refinement stage, further improving the completion quality in edge areas and complex scenes. Experiments on the KITTI depth completion dataset show that CGA-Net can significantly improve the prediction accuracy of dense depth maps, achieving a new state-of-the-art (SOTA), and demonstrating strong robustness to sparse and complex scenes.

Accurate abdominal multi-organ segmentation is critical for clinical applications. Although numerous deep learning-based automatic segmentation methods have been developed, they still struggle to segment small, irregular, or anatomically complex organs. Moreover, most current methods focus on spatial-domain analysis, often overlooking the synergistic potential of frequency-domain representations. To address these limitations, we propose a novel framework named FMD-TransUNet for precise abdominal multi-organ segmentation. It innovatively integrates the Multi-axis External Weight Block (MEWB) and the improved dual attention module (DA+) into the TransUNet framework. The MEWB extracts multi-axis frequency-domain features to capture both global anatomical structures and local boundary details, providing complementary information to spatial-domain representations. The DA+ block utilizes depthwise separable convolutions and incorporates spatial and channel attention mechanisms to enhance feature fusion, reduce redundant information, and narrow the semantic gap between the encoder and decoder. Experimental validation on the Synapse dataset shows that FMD-TransUNet outperforms other recent state-of-the-art methods, achieving an average DSC of 81.32\% and a HD of 16.35 mm across eight abdominal organs. Compared to the baseline model, the average DSC increased by 3.84\%, and the average HD decreased by 15.34 mm. These results demonstrate the effectiveness of FMD-TransUNet in improving the accuracy of abdominal multi-organ segmentation.

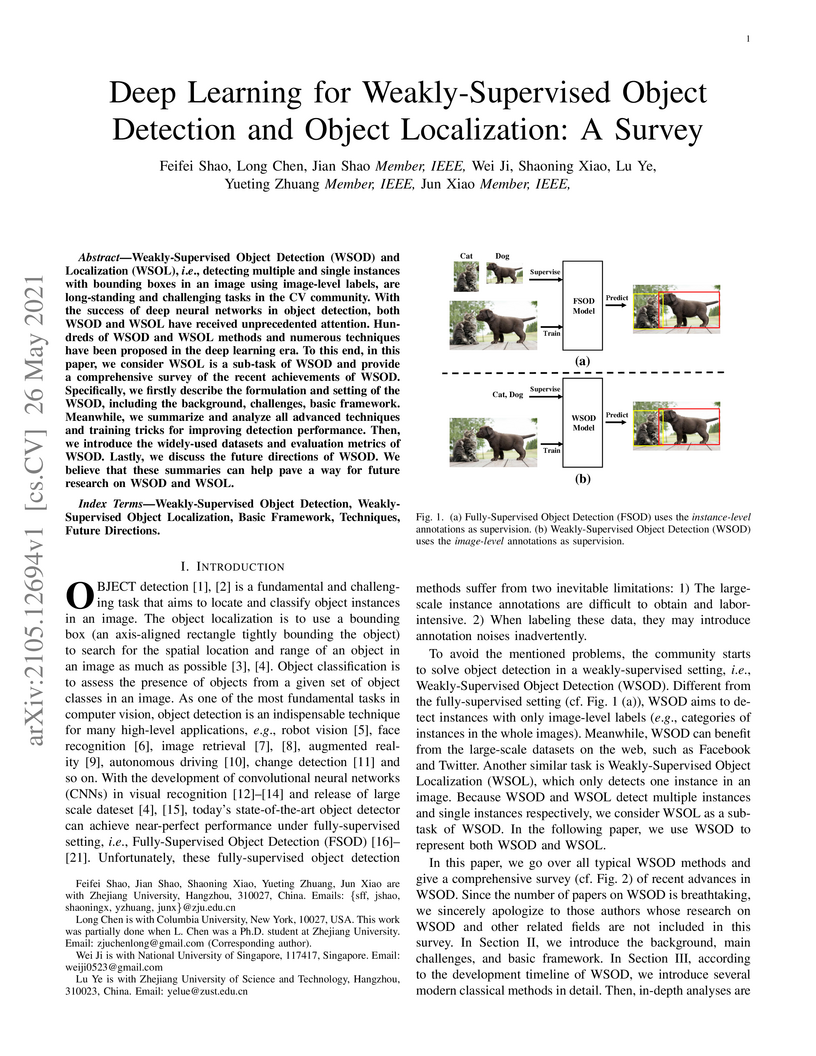

Weakly-Supervised Object Detection (WSOD) and Localization (WSOL), i.e., detecting multiple and single instances with bounding boxes in an image using image-level labels, are long-standing and challenging tasks in the CV community. With the success of deep neural networks in object detection, both WSOD and WSOL have received unprecedented attention. Hundreds of WSOD and WSOL methods and numerous techniques have been proposed in the deep learning era. To this end, in this paper, we consider WSOL is a sub-task of WSOD and provide a comprehensive survey of the recent achievements of WSOD. Specifically, we firstly describe the formulation and setting of the WSOD, including the background, challenges, basic framework. Meanwhile, we summarize and analyze all advanced techniques and training tricks for improving detection performance. Then, we introduce the widely-used datasets and evaluation metrics of WSOD. Lastly, we discuss the future directions of WSOD. We believe that these summaries can help pave a way for future research on WSOD and WSOL.

10 Feb 2025

In 2015, Phulara established a generalization of the famous central set

theorem by an original idea. Roughly speaking, this idea extends a

combinatorial result from one large subset of the given semigroup to countably

many. In this paper, we apply this idea to other combinatorial results to

obtain corresponding generalizations, and do some further investigation.

Moreover, we find that Phulara's generalization can be generalized further that

can deal with uncountably many C-sets.

13 Oct 2025

It is known that every (single-qudit) Clifford operator maps the full set of generalized Pauli matrices (GPMs) to itself under unitary conjugation, which is an important quantum operation and plays a crucial role in quantum computation and information. However, in many quantum information processing tasks, it is required that a specific set of GPMs be mapped to another such set under conjugation, instead of the entire set. We formalize this by introducing local Clifford operator, which maps a given n-GPM set to another such set under unitary conjugation. We establish necessary and sufficient conditions for such an operator to transform a pair of GPMs, showing that these local Clifford operators admit a classical matrix representation, analogous to the classical (or symplectic) representation of standard (single-qudit) Clifford operators. Furthermore, we demonstrate that any local Clifford operator acting on an n-GPM (n≥2) set can be decomposed into a product of standard Clifford operators and a local Clifford operator acting on a pair of GPMs. This decomposition provides a complete classical characterization of unitary conjugation mappings between n-GPM sets. As a key application, we use this framework to address the local unitary equivalence (LU-equivalence) of sets of generalized Bell states (GBSs). We prove that the 31 equivalence classes of 4-GBS sets in bipartite system C6⊗C6 previously identified via Clifford operators are indeed distinct under LU-equivalence, confirming that this classification is complete.

We aim to address the problem of Natural Language Video Localization (NLVL)-localizing the video segment corresponding to a natural language description in a long and untrimmed video. State-of-the-art NLVL methods are almost in one-stage fashion, which can be typically grouped into two categories: 1) anchor-based approach: it first pre-defines a series of video segment candidates (e.g., by sliding window), and then does classification for each candidate; 2) anchor-free approach: it directly predicts the probabilities for each video frame as a boundary or intermediate frame inside the positive segment. However, both kinds of one-stage approaches have inherent drawbacks: the anchor-based approach is susceptible to the heuristic rules, further limiting the capability of handling videos with variant length. While the anchor-free approach fails to exploit the segment-level interaction thus achieving inferior results. In this paper, we propose a novel Boundary Proposal Network (BPNet), a universal two-stage framework that gets rid of the issues mentioned above. Specifically, in the first stage, BPNet utilizes an anchor-free model to generate a group of high-quality candidate video segments with their boundaries. In the second stage, a visual-language fusion layer is proposed to jointly model the multi-modal interaction between the candidate and the language query, followed by a matching score rating layer that outputs the alignment score for each candidate. We evaluate our BPNet on three challenging NLVL benchmarks (i.e., Charades-STA, TACoS and ActivityNet-Captions). Extensive experiments and ablative studies on these datasets demonstrate that the BPNet outperforms the state-of-the-art methods.

To maintain the company's talent pool, recruiters need to continuously search for resumes from third-party websites (e.g., LinkedIn, Indeed). However, fetched resumes are often incomplete and inaccurate. To improve the quality of third-party resumes and enrich the company's talent pool, it is essential to conduct duplication detection between the fetched resumes and those already in the company's talent pool. Such duplication detection is challenging due to the semantic complexity, structural heterogeneity, and information incompleteness of resume texts. To this end, we propose MHSNet, an multi-level identity verification framework that fine-tunes BGE-M3 using contrastive learning. With the fine-tuned , Mixture-of-Experts (MoE) generates multi-level sparse and dense representations for resumes, enabling the computation of corresponding multi-level semantic similarities. Moreover, the state-aware Mixture-of-Experts (MoE) is employed in MHSNet to handle diverse incomplete resumes. Experimental results verify the effectiveness of MHSNet

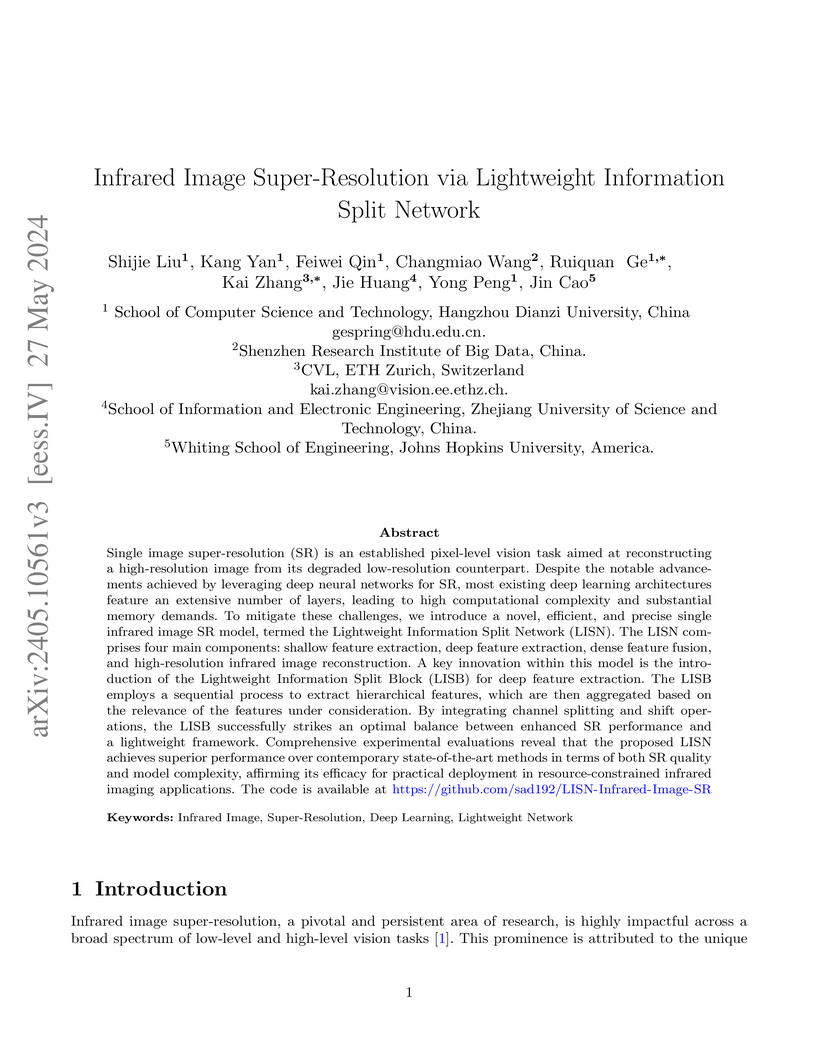

Single image super-resolution (SR) is an established pixel-level vision task aimed at reconstructing a high-resolution image from its degraded low-resolution counterpart. Despite the notable advancements achieved by leveraging deep neural networks for SR, most existing deep learning architectures feature an extensive number of layers, leading to high computational complexity and substantial memory demands. These issues become particularly pronounced in the context of infrared image SR, where infrared devices often have stringent storage and computational constraints. To mitigate these challenges, we introduce a novel, efficient, and precise single infrared image SR model, termed the Lightweight Information Split Network (LISN). The LISN comprises four main components: shallow feature extraction, deep feature extraction, dense feature fusion, and high-resolution infrared image reconstruction. A key innovation within this model is the introduction of the Lightweight Information Split Block (LISB) for deep feature extraction. The LISB employs a sequential process to extract hierarchical features, which are then aggregated based on the relevance of the features under consideration. By integrating channel splitting and shift operations, the LISB successfully strikes an optimal balance between enhanced SR performance and a lightweight framework. Comprehensive experimental evaluations reveal that the proposed LISN achieves superior performance over contemporary state-of-the-art methods in terms of both SR quality and model complexity, affirming its efficacy for practical deployment in resource-constrained infrared imaging applications.

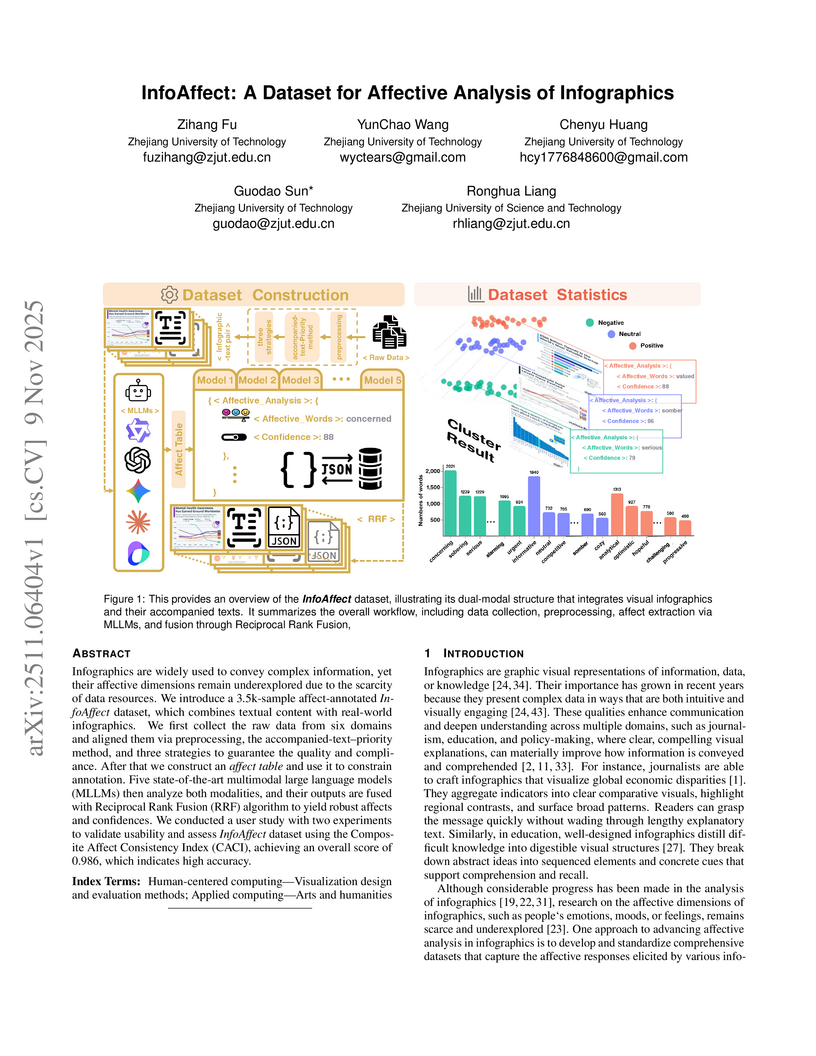

Infographics are widely used to convey complex information, yet their affective dimensions remain underexplored due to the scarcity of data resources. We introduce a 3.5k-sample affect-annotated InfoAffect dataset, which combines textual content with real-world infographics. We first collect the raw data from six domains and aligned them via preprocessing, the accompanied-text-priority method, and three strategies to guarantee the quality and compliance. After that we construct an affect table and use it to constrain annotation. Five state-of-the-art multimodal large language models (MLLMs) then analyze both modalities, and their outputs are fused with Reciprocal Rank Fusion (RRF) algorithm to yield robust affects and confidences. We conducted a user study with two experiments to validate usability and assess InfoAffect dataset using the Composite Affect Consistency Index (CACI), achieving an overall score of 0.986, which indicates high accuracy.

21 Jul 2020

In this paper, we consider the 3D Navier-Stokes equations in the whole space. We investigate some new inequalities and \textit{a priori} estimates to provide the critical regularity criteria in terms of one directional derivative of the velocity field, namely $\partial_3 \mathbf{u} \in L^p((0,T); L^q(\mathbb{R}^3)), ~\frac{2}{p} + \frac{3}{q} = 2, ~\frac{3}{2}

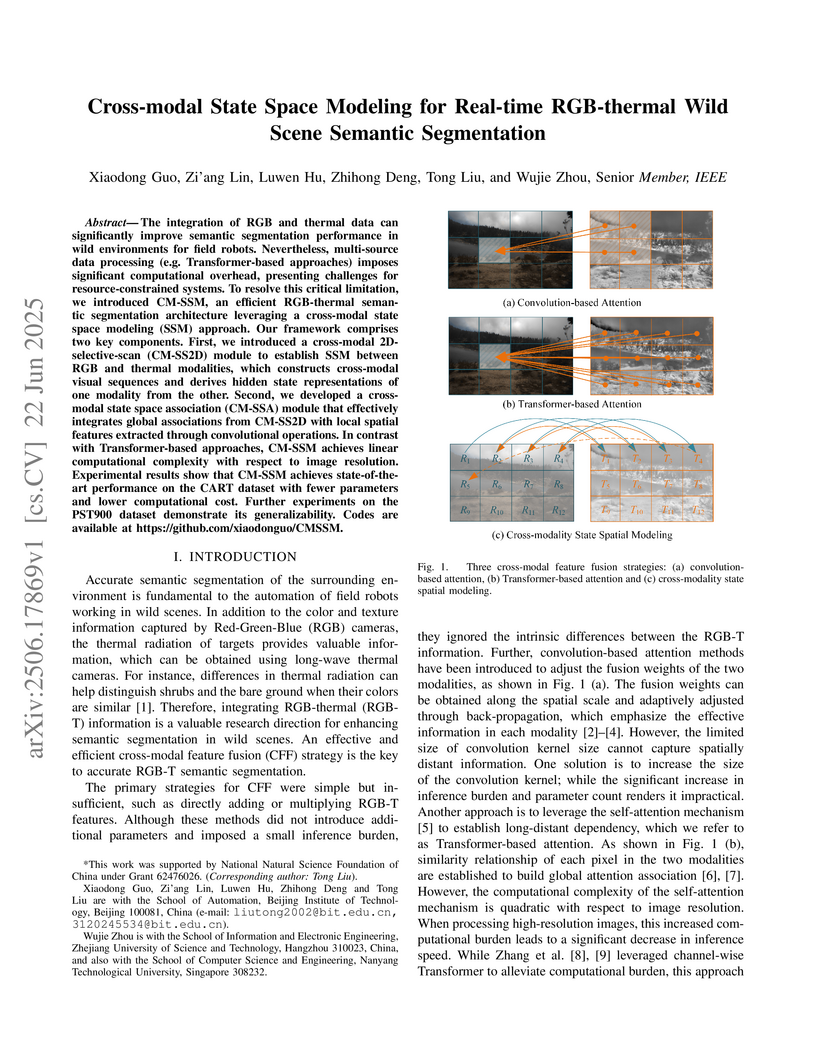

The integration of RGB and thermal data can significantly improve semantic segmentation performance in wild environments for field robots. Nevertheless, multi-source data processing (e.g. Transformer-based approaches) imposes significant computational overhead, presenting challenges for resource-constrained systems. To resolve this critical limitation, we introduced CM-SSM, an efficient RGB-thermal semantic segmentation architecture leveraging a cross-modal state space modeling (SSM) approach. Our framework comprises two key components. First, we introduced a cross-modal 2D-selective-scan (CM-SS2D) module to establish SSM between RGB and thermal modalities, which constructs cross-modal visual sequences and derives hidden state representations of one modality from the other. Second, we developed a cross-modal state space association (CM-SSA) module that effectively integrates global associations from CM-SS2D with local spatial features extracted through convolutional operations. In contrast with Transformer-based approaches, CM-SSM achieves linear computational complexity with respect to image resolution. Experimental results show that CM-SSM achieves state-of-the-art performance on the CART dataset with fewer parameters and lower computational cost. Further experiments on the PST900 dataset demonstrate its generalizability. Codes are available at this https URL.

09 Mar 2023

Most current LiDAR simultaneous localization and mapping (SLAM) systems build maps in point clouds, which are sparse when zoomed in, even though they seem dense to human eyes. Dense maps are essential for robotic applications, such as map-based navigation. Due to the low memory cost, mesh has become an attractive dense model for mapping in recent years. However, existing methods usually produce mesh maps by using an offline post-processing step to generate mesh maps. This two-step pipeline does not allow these methods to use the built mesh maps online and to enable localization and meshing to benefit each other. To solve this problem, we propose the first CPU-only real-time LiDAR SLAM system that can simultaneously build a mesh map and perform localization against the mesh map. A novel and direct meshing strategy with Gaussian process reconstruction realizes the fast building, registration, and updating of mesh maps. We perform experiments on several public datasets. The results show that our SLAM system can run at around 40Hz. The localization and meshing accuracy also outperforms the state-of-the-art methods, including the TSDF map and Poisson reconstruction. Our code and video demos are available at: this https URL.

This paper introduces EnsembleBERT, an ensemble learning approach utilizing single-layer BERT models, to classify sentiment in middle school students' social media text. The model achieved performance comparable to a three-layer BERT model, demonstrating the feasibility of using NLP for understanding student emotional well-being while highlighting trade-offs in computational efficiency.

Recent studies have shown convolution neural networks (CNNs) for image recognition are vulnerable to evasion attacks with carefully manipulated adversarial examples. Previous work primarily focused on how to generate adversarial examples closed to source images, by introducing pixel-level perturbations into the whole or specific part of images. In this paper, we propose an evasion attack on CNN classifiers in the context of License Plate Recognition (LPR), which adds predetermined perturbations to specific regions of license plate images, simulating some sort of naturally formed spots (such as sludge, etc.). Therefore, the problem is modeled as an optimization process searching for optimal perturbation positions, which is different from previous work that consider pixel values as decision variables. Notice that this is a complex nonlinear optimization problem, and we use a genetic-algorithm based approach to obtain optimal perturbation positions. In experiments, we use the proposed algorithm to generate various adversarial examples in the form of rectangle, circle, ellipse and spots cluster. Experimental results show that these adversarial examples are almost ignored by human eyes, but can fool HyperLPR with high attack success rate over 93%. Therefore, we believe that this kind of spot evasion attacks would pose a great threat to current LPR systems, and needs to be investigated further by the security community.

There are no more papers matching your filters at the moment.