Ask or search anything...

Aalborg University

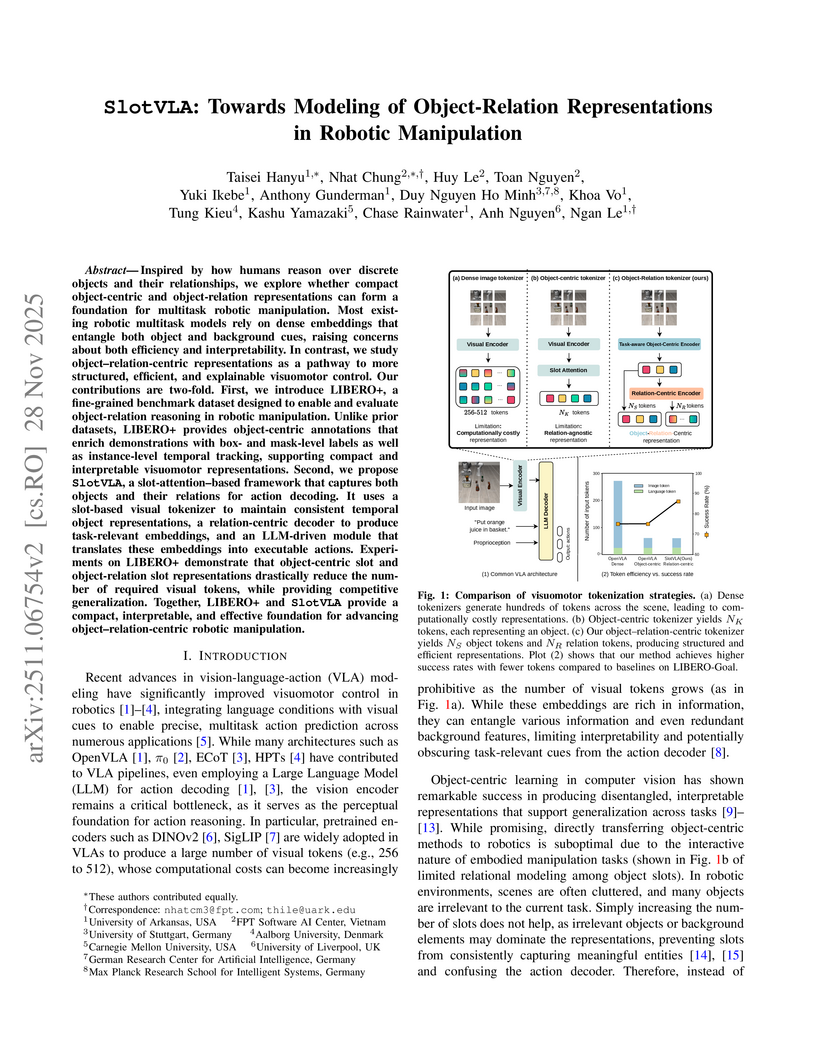

Aalborg UniversityA comprehensive survey proposes a novel two-dimensional taxonomy to organize research on integrating Large Language Models and Knowledge Graphs for Question Answering, detailing methods by complex QA categories and the specific functions KGs serve. The work reviews state-of-the-art techniques, highlights their utility in mitigating LLM weaknesses such as factual inaccuracy and limited reasoning, and identifies critical future research directions.

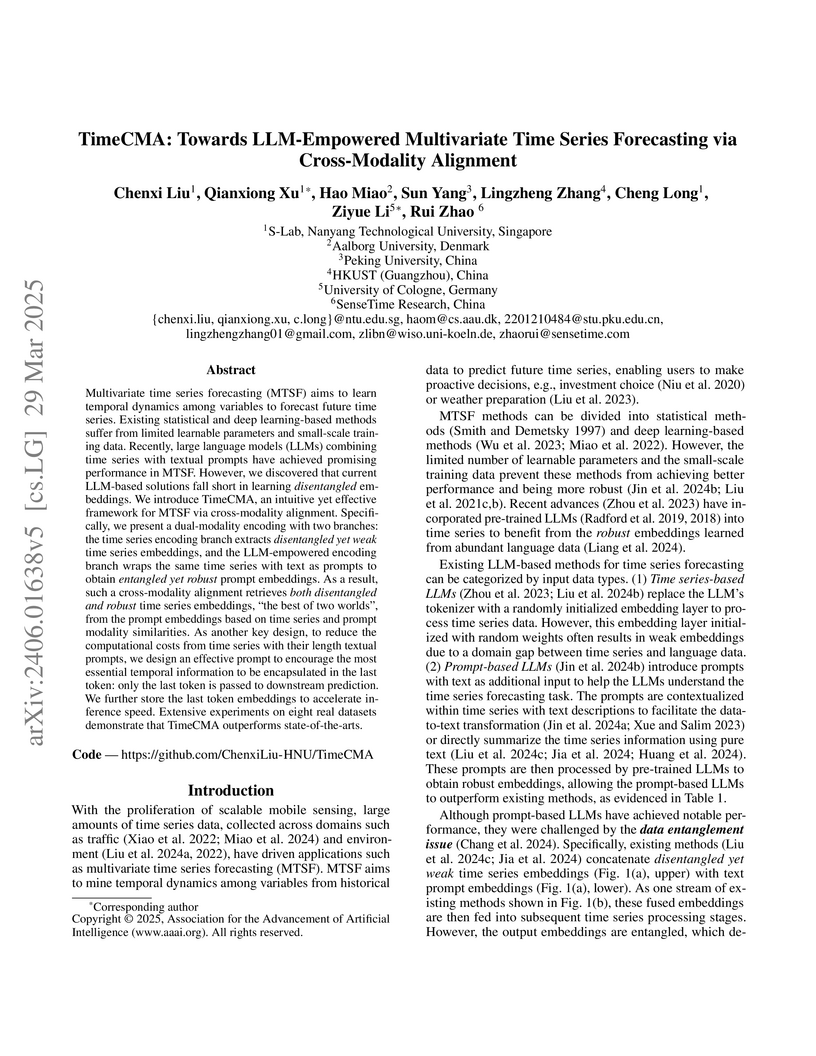

View blogTimeCMA introduces a framework for multivariate time series forecasting that leverages large language models (LLMs) through a novel cross-modality alignment module to generate disentangled yet robust time series embeddings. This approach, combined with efficient last token embedding storage, consistently outperforms state-of-the-art baselines across eight datasets while significantly reducing computational overhead.

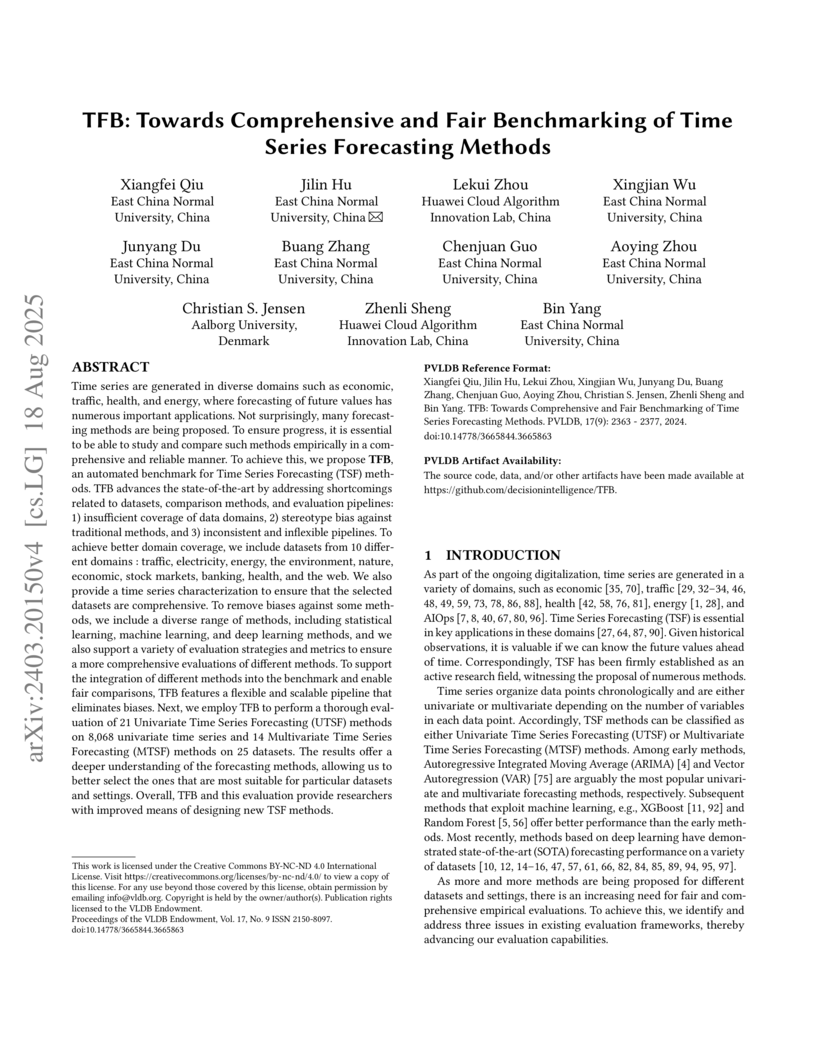

View blogAn automated benchmark framework, TFB, addresses critical shortcomings in time series forecasting evaluation by offering comprehensive data coverage, fair comparisons across diverse method paradigms, and consistent experimental pipelines. Its extensive evaluation challenges existing assumptions about method efficacy and provides guidance for selecting appropriate models based on time series characteristics.

View blogA comprehensive survey of over 200 Video Foundation Models (ViFMs) is presented, categorizing them by architecture and analyzing various training strategies and their performance across tasks. The survey reveals that image-based models show competitive performance, multimodal approaches consistently outperform unimodal ones on complex tasks, and increased model scale generally yields superior results.

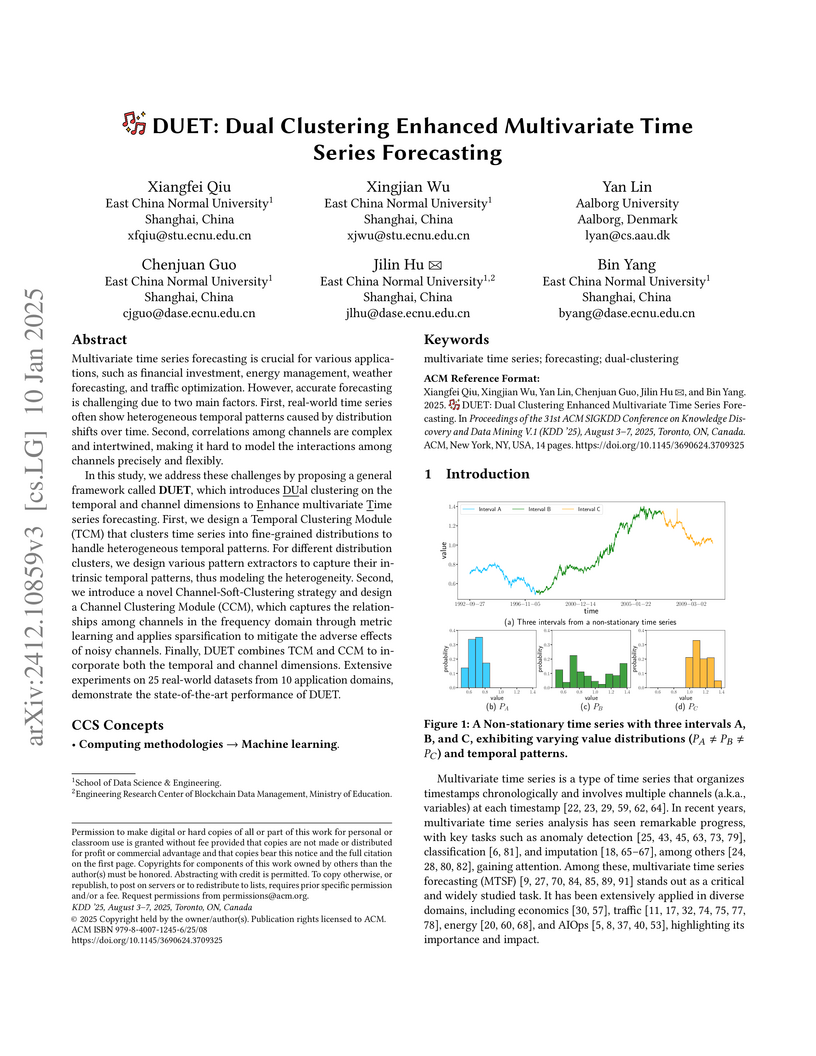

View blogDUET is a framework for multivariate time series forecasting that enhances accuracy by employing dual clustering on both temporal and channel dimensions. It achieves state-of-the-art performance, demonstrating an average reduction of 7.1% in Mean Squared Error (MSE) and 6.5% in Mean Absolute Error (MAE) compared to the next best baseline.

View blogAn international team of researchers analyzes the convergence of Large Language Models (LLMs) and Knowledge Graphs (KGs), proposing a hybrid approach that leverages their complementary strengths to advance Knowledge Computing, aiming for more robust and accurate knowledge systems.

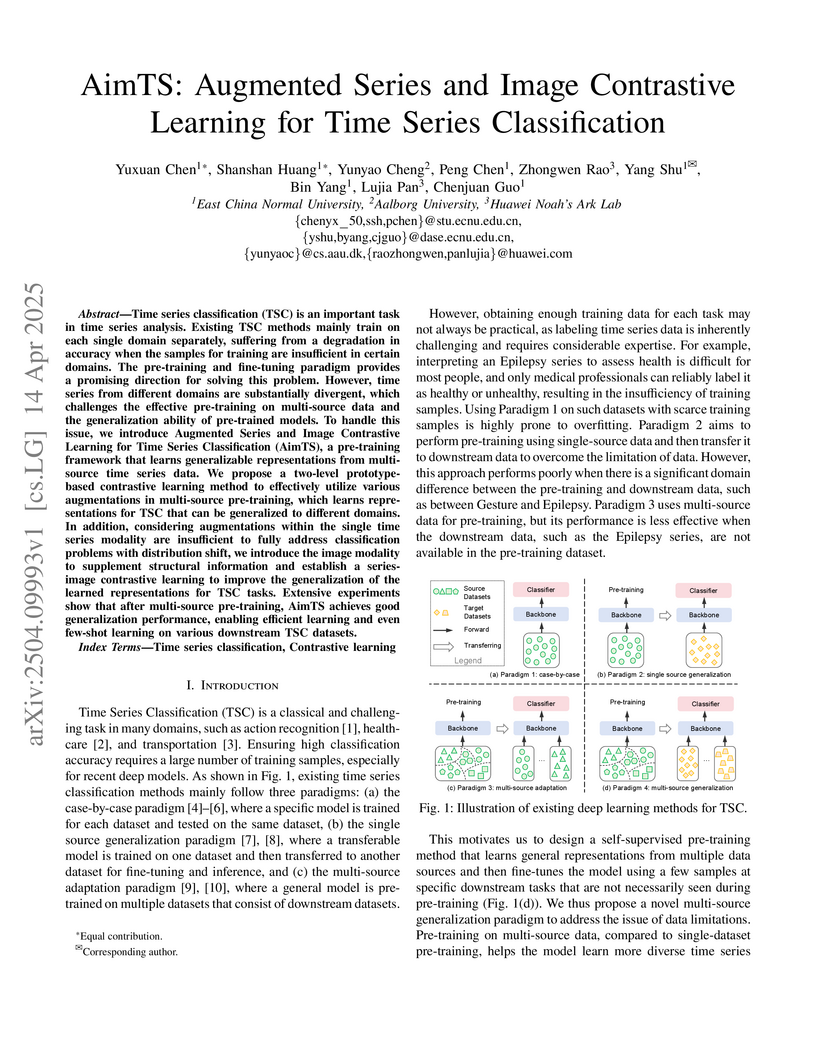

View blogAimTS provides a pre-training framework for time series classification, learning generalizable representations from multi-source data through prototype-based and series-image contrastive learning. The method achieved superior accuracy against state-of-the-art techniques across 125 UCR and 30 UEA datasets and demonstrated improved computational efficiency.

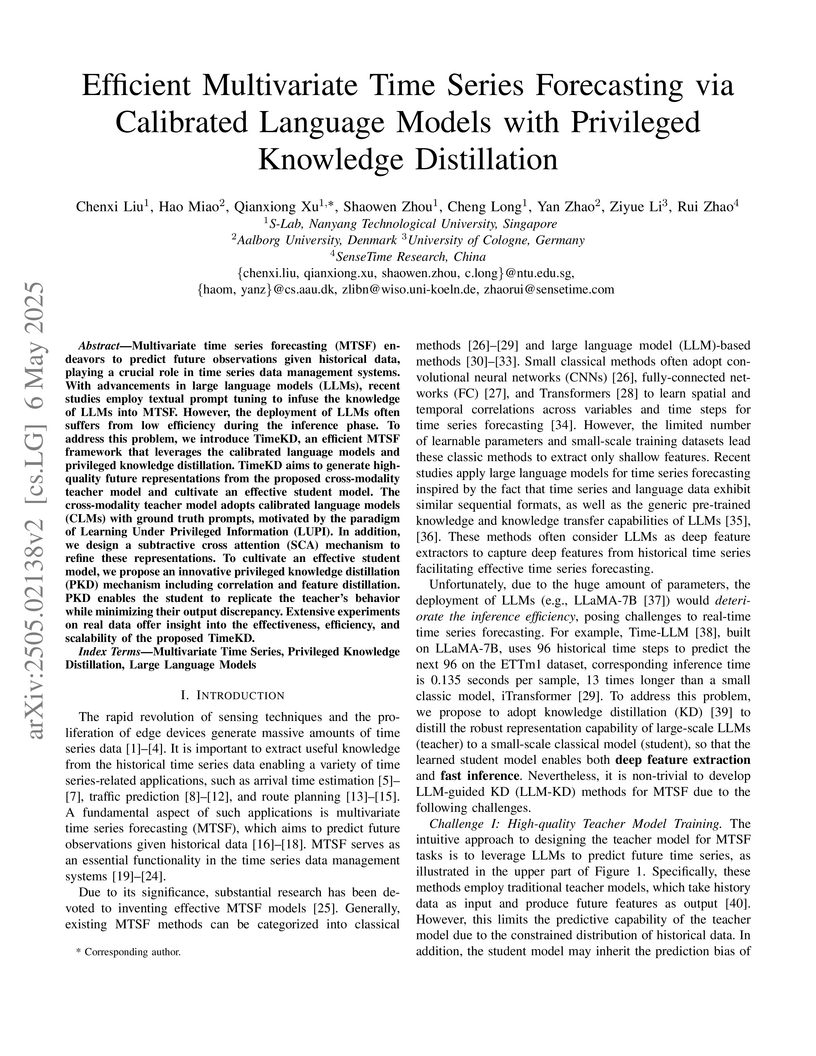

View blogTimeKD introduces an efficient framework for multivariate time series forecasting that leverages the robust representation capabilities of Large Language Models while mitigating their high inference costs. This is achieved through a novel privileged knowledge distillation method, which enables a lightweight student model to effectively learn from a calibrated LLM teacher that processes privileged information (future ground truth data) during training, leading to improved forecasting accuracy and superior computational efficiency.

View blog

Peking University

Peking University Nanyang Technological University

Nanyang Technological University

University of Central Florida

University of Central Florida

Alibaba Group

Alibaba Group

Northeastern University

Northeastern University

MIT

MIT

University of Oxford

University of Oxford Zhejiang University

Zhejiang University

University of California, Santa Barbara

University of California, Santa Barbara University of Copenhagen

University of Copenhagen

Carnegie Mellon University

Carnegie Mellon University