Algoma University

12 Sep 2025

There is a growing concern about the environmental impact of large language models (LLMs) in software development, particularly due to their high energy use and carbon footprint. Small Language Models (SLMs) offer a more sustainable alternative, requiring fewer computational resources while remaining effective for fundamental programming tasks. In this study, we investigate whether prompt engineering can improve the energy efficiency of SLMs in code generation. We evaluate four open-source SLMs, StableCode-Instruct-3B, Qwen2.5-Coder-3B-Instruct, CodeLlama-7B-Instruct, and Phi-3-Mini-4K-Instruct, across 150 Python problems from LeetCode, evenly distributed into easy, medium, and hard categories. Each model is tested under four prompting strategies: role prompting, zero-shot, few-shot, and chain-of-thought (CoT). For every generated solution, we measure runtime, memory usage, and energy consumption, comparing the results with a human-written baseline. Our findings show that CoT prompting provides consistent energy savings for Qwen2.5-Coder and StableCode-3B, while CodeLlama-7B and Phi-3-Mini-4K fail to outperform the baseline under any prompting strategy. These results highlight that the benefits of prompting are model-dependent and that carefully designed prompts can guide SLMs toward greener software development.

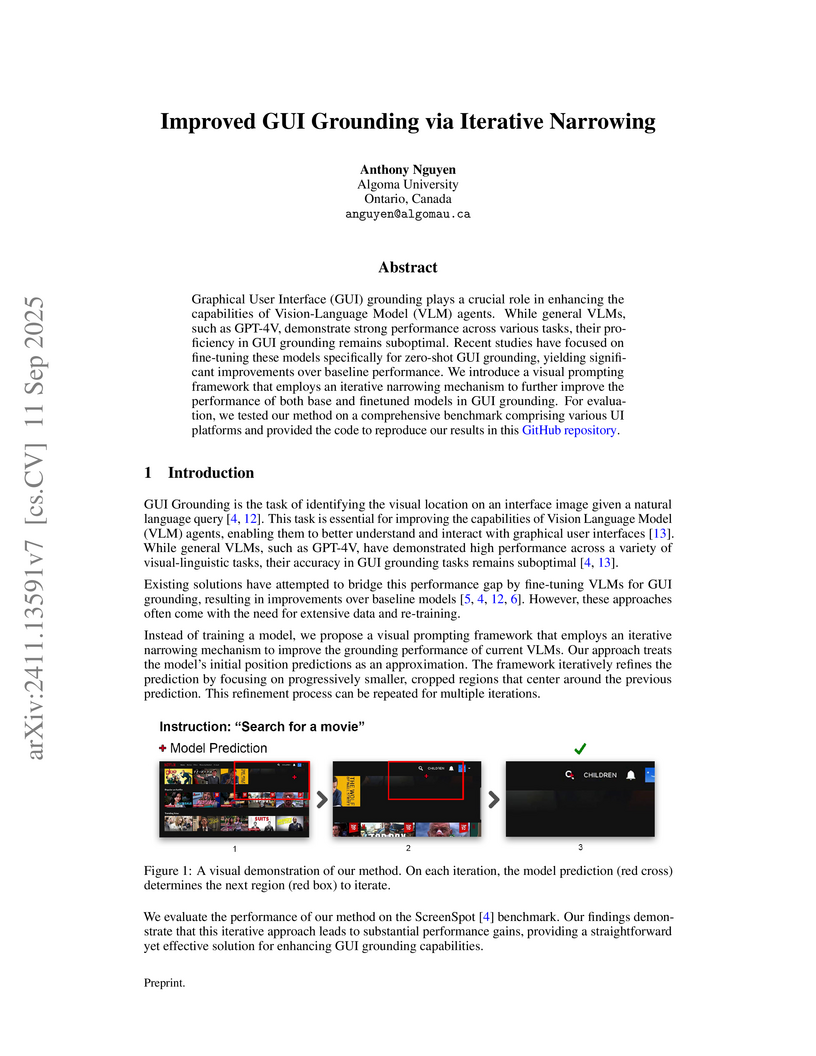

Graphical User Interface (GUI) grounding plays a crucial role in enhancing the capabilities of Vision-Language Model (VLM) agents. While general VLMs, such as GPT-4V, demonstrate strong performance across various tasks, their proficiency in GUI grounding remains suboptimal. Recent studies have focused on fine-tuning these models specifically for zero-shot GUI grounding, yielding significant improvements over baseline performance. We introduce a visual prompting framework that employs an iterative narrowing mechanism to further improve the performance of both general and fine-tuned models in GUI grounding. For evaluation, we tested our method on a comprehensive benchmark comprising various UI platforms and provided the code to reproduce our results.

12 Sep 2025

To optimize the reasoning and problem-solving capabilities of Large Language Models (LLMs), we propose a novel cloud-edge collaborative architecture that enables a structured, multi-agent prompting framework. This framework comprises three specialized components: GuideLLM, a lightweight model deployed at the edge to provide methodological guidance; SolverLLM, a more powerful model hosted in the cloud responsible for generating code solutions; and JudgeLLM, an automated evaluator for assessing solution correctness and quality. To evaluate and demonstrate the effectiveness of this architecture in realistic settings, we introduce RefactorCoderQA, a comprehensive benchmark designed to evaluate and enhance the performance of Large Language Models (LLMs) across multi-domain coding tasks. Motivated by the limitations of existing benchmarks, RefactorCoderQA systematically covers various technical domains, including Software Engineering, Data Science, Machine Learning, and Natural Language Processing, using authentic coding challenges from Stack Overflow. Extensive experiments reveal that our fine-tuned model, RefactorCoder-MoE, achieves state-of-the-art performance, significantly outperforming leading open-source and commercial baselines with an overall accuracy of 76.84%. Human evaluations further validate the interpretability, accuracy, and practical relevance of the generated solutions. In addition, we evaluate system-level metrics, such as throughput and latency, to gain deeper insights into the performance characteristics and trade-offs of the proposed architecture.

University of Toronto

University of Toronto University of Cambridge

University of Cambridge New York UniversityUniversity of EdinburghUniversity Health NetworkVector Institute for AILeeds Teaching Hospitals NHS TrustBasque Center for Applied Mathematics (BCAM)Basque Foundation for Science (IKERBASQUE)Algoma UniversityCancer Research UK Cambridge InstituteJohns Hopkins Medical InstitutionsSynthesizeUniversity of Iowa Hospitals & ClinicsQueens

’ University

New York UniversityUniversity of EdinburghUniversity Health NetworkVector Institute for AILeeds Teaching Hospitals NHS TrustBasque Center for Applied Mathematics (BCAM)Basque Foundation for Science (IKERBASQUE)Algoma UniversityCancer Research UK Cambridge InstituteJohns Hopkins Medical InstitutionsSynthesizeUniversity of Iowa Hospitals & ClinicsQueens

’ UniversityResearchers from the University of Toronto, Vector Institute, and University Health Network conducted a red-teaming workshop with healthcare professionals to identify vulnerabilities in Large Language Models for clinical use. The study categorized 32 unique prompts that elicited medically vulnerable responses, highlighting issues like hallucination and anchoring, and a replication study showed these vulnerabilities often persisted but also demonstrated model evolution.

The proliferation of connected vehicles within the Internet of Vehicles (IoV)

ecosystem presents critical challenges in ensuring scalable, real-time, and

privacy-preserving traffic management. Existing centralized IoV solutions often

suffer from high latency, limited scalability, and reliance on proprietary

Artificial Intelligence (AI) models, creating significant barriers to

widespread deployment, particularly in dynamic and privacy-sensitive

environments. Meanwhile, integrating Large Language Models (LLMs) in vehicular

systems remains underexplored, especially concerning prompt optimization and

effective utilization in federated contexts. To address these challenges, we

propose the Federated Prompt-Optimized Traffic Transformer (FPoTT), a novel

framework that leverages open-source LLMs for predictive IoV management. FPoTT

introduces a dynamic prompt optimization mechanism that iteratively refines

textual prompts to enhance trajectory prediction. The architecture employs a

dual-layer federated learning paradigm, combining lightweight edge models for

real-time inference with cloud-based LLMs to retain global intelligence. A

Transformer-driven synthetic data generator is incorporated to augment training

with diverse, high-fidelity traffic scenarios in the Next Generation Simulation

(NGSIM) format. Extensive evaluations demonstrate that FPoTT, utilizing

EleutherAI Pythia-1B, achieves 99.86% prediction accuracy on real-world data

while maintaining high performance on synthetic datasets. These results

underscore the potential of open-source LLMs in enabling secure, adaptive, and

scalable IoV management, offering a promising alternative to proprietary

solutions in smart mobility ecosystems.

Autonomous AI-based Cybersecurity Framework for Critical Infrastructure: Real-Time Threat Mitigation

Autonomous AI-based Cybersecurity Framework for Critical Infrastructure: Real-Time Threat Mitigation

Critical infrastructure systems, including energy grids, healthcare facilities, transportation networks, and water distribution systems, are pivotal to societal stability and economic resilience. However, the increasing interconnectivity of these systems exposes them to various cyber threats, including ransomware, Denial-of-Service (DoS) attacks, and Advanced Persistent Threats (APTs). This paper examines cybersecurity vulnerabilities in critical infrastructure, highlighting the threat landscape, attack vectors, and the role of Artificial Intelligence (AI) in mitigating these risks. We propose a hybrid AI-driven cybersecurity framework to enhance real-time vulnerability detection, threat modelling, and automated remediation. This study also addresses the complexities of adversarial AI, regulatory compliance, and integration. Our findings provide actionable insights to strengthen the security and resilience of critical infrastructure systems against emerging cyber threats.

The increasing complexity and scale of the Internet of Things (IoT) have made security a critical concern. This paper presents a novel Large Language Model (LLM)-based framework for comprehensive threat detection and prevention in IoT environments. The system integrates lightweight LLMs fine-tuned on IoT-specific datasets (IoT-23, TON_IoT) for real-time anomaly detection and automated, context-aware mitigation strategies optimized for resource-constrained devices. A modular Docker-based deployment enables scalable and reproducible evaluation across diverse network conditions. Experimental results in simulated IoT environments demonstrate significant improvements in detection accuracy, response latency, and resource efficiency over traditional security methods. The proposed framework highlights the potential of LLM-driven, autonomous security solutions for future IoT ecosystems.

This paper presents a novel approach to intrusion detection by integrating traditional signature-based methods with the contextual understanding capabilities of the GPT-2 Large Language Model (LLM). As cyber threats become increasingly sophisticated, particularly in distributed, heterogeneous, and resource-constrained environments such as those enabled by the Internet of Things (IoT), the need for dynamic and adaptive Intrusion Detection Systems (IDSs) becomes increasingly urgent. While traditional methods remain effective for detecting known threats, they often fail to recognize new and evolving attack patterns. In contrast, GPT-2 excels at processing unstructured data and identifying complex semantic relationships, making it well-suited to uncovering subtle, zero-day attack vectors. We propose a hybrid IDS framework that merges the robustness of signature-based techniques with the adaptability of GPT-2-driven semantic analysis. Experimental evaluations on a representative intrusion dataset demonstrate that our model enhances detection accuracy by 6.3%, reduces false positives by 9.0%, and maintains near real-time responsiveness. These results affirm the potential of language model integration to build intelligent, scalable, and resilient cybersecurity defences suited for modern connected environments.

Transformer models have established new benchmarks in natural language processing; however, their increasing depth results in substantial growth in parameter counts. While existing recurrent transformer methods address this issue by reprocessing layers multiple times, they often apply recurrence indiscriminately across entire blocks of layers. In this work, we investigate Intra-Layer Recurrence (ILR), a more targeted approach that applies recurrence selectively to individual layers within a single forward pass. Our experiments show that allocating more iterations to earlier layers yields optimal results. These findings suggest that ILR offers a promising direction for optimizing recurrent structures in transformer architectures.

07 Oct 2025

In the digital era, the exponential growth of scientific publications has made it increasingly difficult for researchers to efficiently identify and access relevant work. This paper presents an automated framework for research article classification and recommendation that leverages Natural Language Processing (NLP) techniques and machine learning. Using a large-scale arXiv.org dataset spanning more than three decades, we evaluate multiple feature extraction approaches (TF--IDF, Count Vectorizer, Sentence-BERT, USE, Mirror-BERT) in combination with diverse machine learning classifiers (Logistic Regression, SVM, Naïve Bayes, Random Forest, Gradient Boosted Trees, and k-Nearest Neighbour). Our experiments show that Logistic Regression with TF--IDF consistently yields the best classification performance, achieving an accuracy of 69\%. To complement classification, we incorporate a recommendation module based on the cosine similarity of vectorized articles, enabling efficient retrieval of related research papers. The proposed system directly addresses the challenge of information overload in digital libraries and demonstrates a scalable, data-driven solution to support literature discovery.

Phishing attacks are becoming increasingly sophisticated, underscoring the need for detection systems that strike a balance between high accuracy and computational efficiency. This paper presents a comparative evaluation of traditional Machine Learning (ML), Deep Learning (DL), and quantized small-parameter Large Language Models (LLMs) for phishing detection. Through experiments on a curated dataset, we show that while LLMs currently underperform compared to ML and DL methods in terms of raw accuracy, they exhibit strong potential for identifying subtle, context-based phishing cues. We also investigate the impact of zero-shot and few-shot prompting strategies, revealing that LLM-rephrased emails can significantly degrade the performance of both ML and LLM-based detectors. Our benchmarking highlights that models like DeepSeek R1 Distill Qwen 14B (Q8_0) achieve competitive accuracy, above 80%, using only 17GB of VRAM, supporting their viability for cost-efficient deployment. We further assess the models' adversarial robustness and cost-performance tradeoffs, and demonstrate how lightweight LLMs can provide concise, interpretable explanations to support real-time decision-making. These findings position optimized LLMs as promising components in phishing defence systems and offer a path forward for integrating explainable, efficient AI into modern cybersecurity frameworks.

16 Sep 2024

We examine the Einstein-Cartan (EC) theory in first-order form, which has a diffeomorphism as well as a local Lorentz invariance. We study the renormalizability of this theory in the framework of the Batalin-Vilkovisky formalism, which allows for a gauge invariant renormalization. Using the background field method, we discuss the gauge invariance of the background effective action and analyze the Ward identities which reflect the symmetries of the EC theory. As an application, we compute, in a general background gauge, the self-energy of the tetrad field at one-loop order.

This paper introduces a collaborative, human-centred taxonomy of AI,

algorithmic and automation harms. We argue that existing taxonomies, while

valuable, can be narrow, unclear, typically cater to practitioners and

government, and often overlook the needs of the wider public. Drawing on

existing taxonomies and a large repository of documented incidents, we propose

a taxonomy that is clear and understandable to a broad set of audiences, as

well as being flexible, extensible, and interoperable. Through iterative

refinement with topic experts and crowdsourced annotation testing, we propose a

taxonomy that can serve as a powerful tool for civil society organisations,

educators, policymakers, product teams and the general public. By fostering a

greater understanding of the real-world harms of AI and related technologies,

we aim to increase understanding, empower NGOs and individuals to identify and

report violations, inform policy discussions, and encourage responsible

technology development and deployment.

The rapid expansion of IoT ecosystems introduces severe challenges in

scalability, security, and real-time decision-making. Traditional centralized

architectures struggle with latency, privacy concerns, and excessive resource

consumption, making them unsuitable for modern large-scale IoT deployments.

This paper presents a novel Federated Learning-driven Large Language Model

(FL-LLM) framework, designed to enhance IoT system intelligence while ensuring

data privacy and computational efficiency. The framework integrates Generative

IoT (GIoT) models with a Gradient Sensing Federated Strategy (GSFS),

dynamically optimizing model updates based on real-time network conditions. By

leveraging a hybrid edge-cloud processing architecture, our approach balances

intelligence, scalability, and security in distributed IoT environments.

Evaluations on the IoT-23 dataset demonstrate that our framework improves model

accuracy, reduces response latency, and enhances energy efficiency,

outperforming traditional FL techniques (i.e., FedAvg, FedOpt). These findings

highlight the potential of integrating LLM-powered federated learning into

large-scale IoT ecosystems, paving the way for more secure, scalable, and

adaptive IoT management solutions.

03 Dec 2025

California Institute of Technology

California Institute of Technology University of California, San Diego

University of California, San Diego Space Telescope Science InstituteUniversiteit Gent

Space Telescope Science InstituteUniversiteit Gent Princeton University

Princeton University The Ohio State UniversityJet Propulsion Laboratory, California Institute of TechnologyJet Propulsion LaboratoryEuropean Space AgencyIPACIPAC, California Institute of TechnologyUniversity of ToledoAlgoma UniversityAURA

The Ohio State UniversityJet Propulsion Laboratory, California Institute of TechnologyJet Propulsion LaboratoryEuropean Space AgencyIPACIPAC, California Institute of TechnologyUniversity of ToledoAlgoma UniversityAURAThe mid-infrared spectrum of star-forming, high metallicity galaxies is dominated by emission features from aromatic and aliphatic bonds in small carbonaceous dust grains, often referred to as polycyclic aromatic hydrocarbons (PAHs). In metal-poor galaxies, the abundance of PAHs relative to the total dust sharply declines, but the origin of this deficit is unknown. We present JWST observations that detect and resolve emission from PAHs in the 7% Solar metallicity galaxy Sextans A, representing the lowest metallicity detection of PAH emission to date. In contrast to higher metallicity galaxies, the clumps of PAH emission are compact (0.5-1.5'' or 3-10 pc), which explains why PAH emission evaded detection by lower resolution instruments like Spitzer. Ratios between the 3.3, 7.7, and 11.3 μm PAH features indicate that the PAH grains in Sextans A are small and neutral, with no evidence of significant processing from the hard radiation fields within the galaxy. These results favor inhibited grain growth over enhanced destruction as the origin of the low PAH abundance in Sextans A. The compact clumps of PAH emission are likely active sites of in-situ PAH growth within a dense, well-shielded phase of the interstellar medium. Our results show that PAHs can form and survive in extremely metal-poor environments common early in the evolution of the Universe.

16 Jul 2015

We consider all radiative corrections to the total electron-positron cross section showing how the renormalization group equation can be used to sum the logarithmic contributions in two ways. First of all, one can sum leading-log etc. contributions. A second summation shows how all logarithmic corrections can be expressed in terms of log-independent contributions. Next, using Stevenson's characterization of renormalization scheme, we examine scheme dependence when using the second way of summing logarithms. The renormalization scheme invariants that arise are then related to those of Stevenson. We consider two choices of renormalization scheme, one resulting in two powers of a running coupling, the second in an infinite series in the two loop running constant. We then establish how the coupling constant arising in one renormalization scheme can be expressed as a power series of the coupling in any other scheme. Next we establish how by using different mass scale at each order of perturbation theory, all renormalization scheme dependence can be absorbed into these mass scales when one uses the second way of summing logarithmic corrections. We then employ this approach to renormalization scheme dependency to the effective potential in a scalar model, showing the result that it is independent of the background field is scheme independent. The way in which the "principle of minimal sensitivity" can be applied after summation is then discussed.

02 Jun 2017

The renormalization that relates a coupling "a" associated with a distinct

renormalization group beta function in a given theory is considered.

Dimensional regularization and mass independent renormalization schemes are

used in this discussion. It is shown how the renormalization a∗=a+x2a2 is

related to a change in the mass scale μ that is induced by renormalization.

It is argued that the infrared fixed point is to be a determined in a

renormalization scheme in which the series expansion for a physical quantity

R terminates.

09 Jun 2025

A Lagrange multiplier field can be used to restrict radiative corrections to

the Einstein-Hilbert action to one-loop order. This result is employed to show

that it is possible to couple a scalar field to the metric (graviton) field in

such a way that the model is both renormalizable and unitary. The usual

Einstein equations of motion for the gravitational field are recovered,

perturbatively, in the classical limit. By evaluating the generating functional

of proper Green's functions in closed form, one obtains a novel analytic

contribution to the effective action.

27 Aug 2016

The zero to four loop contribution to the cross section Re+e− for

e+e−⟶ hadrons, when combined with the renormalization

group equation, allows for summation of all leading-log (LL),

next-to-leading-log (NLL)…N3LL perturbative contributions. It is also

shown how all logarithmic contributions to Re+e− can be summed and

that Re+e− can be expressed in terms of the log independent

contributions, and once this is done the running coupling a is evaluated at a

point independent of the renormalization scale μ. All explicit dependence

of Re+e− on μ cancels against its implicit dependence on μ

through the running coupling a so that the ambiguity associated with the

value of μ is shown to disappear. The renormalization scheme dependency of

the "summed" cross section Re+e− is examined in three distinct

renormalization schemes. In each case, Re+e− is expressible in terms

of renormalization scheme independent parameters τi and is explicitly and

implicitly independent of the renormalization scale μ. Two of the forms are

then compared graphically both with each other and with the purely perturbative

results and the RG-summed N3LL results.

28 Apr 2023

We calculate in a general background gauge, to one-loop order, the leading

logarithmic contribution from the graviton self-energy at finite temperature

T, extending a previous analysis done at T=0. The result, which has a

transverse structure, is applied to evaluate the leading quantum correction of

the gravitational vacuum polarization to the Newtonian potential. An analytic

expression valid at all temperatures is obtained, which generalizes the result

obtained earlier at T=0. One finds that the magnitude of this quantum

correction decreases as the temperature rises.

There are no more papers matching your filters at the moment.