Amazon AWS AI

This survey paper systematically reviews and categorizes a wide array of techniques designed to enhance the efficiency of Large Language Models (LLMs), addressing their substantial computational and memory demands. It establishes a three-tiered taxonomy that encompasses model-centric, data-centric, and framework-level approaches, providing a comprehensive resource for researchers and practitioners.

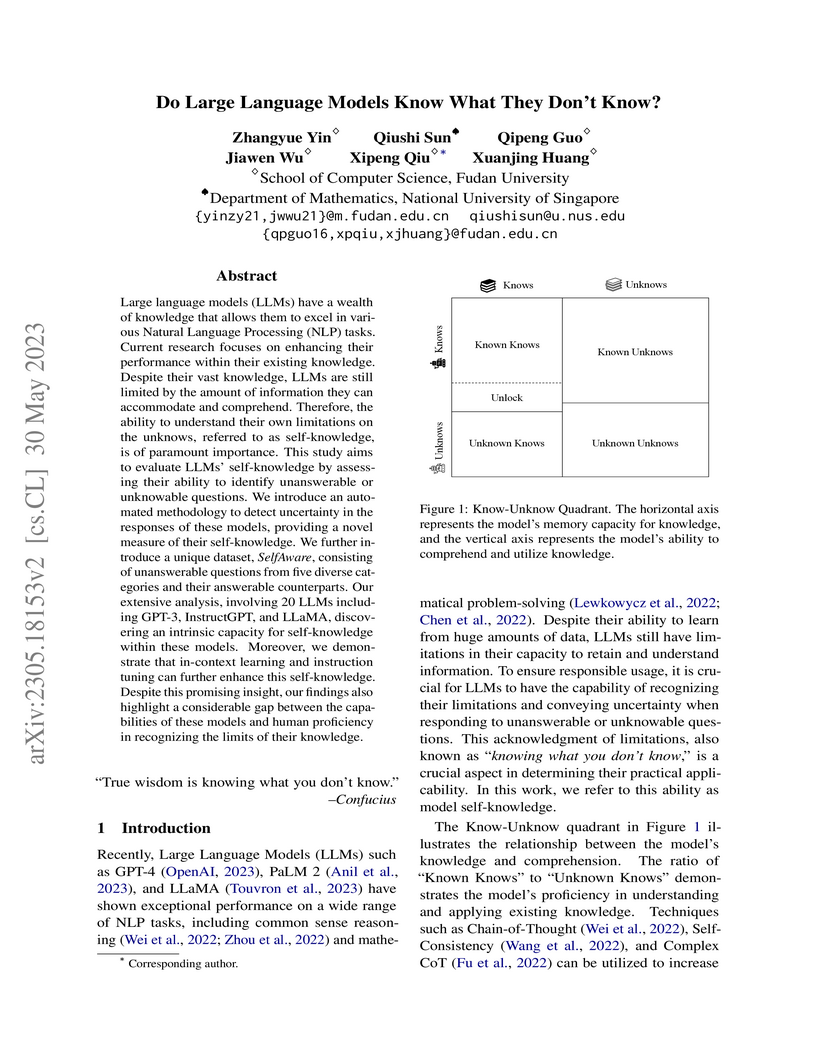

Fudan University researchers developed the SelfAware dataset and an automated similarity-based method to quantify Large Language Models' capacity for self-knowledge, defined as recognizing and expressing uncertainty for unanswerable questions. Their evaluation of 20 LLMs found this ability exists and improves with instruction tuning and in-context learning, though it still trails human performance.

Amazon AWS AI researchers developed RAGCHECKER, a fine-grained, claim-level framework designed for diagnosing Retrieval-Augmented Generation (RAG) system performance. This framework demonstrated significantly stronger correlations (e.g., Pearson 61.93% for overall assessment) with human judgments compared to other automated metrics, providing actionable insights into the specific strengths and weaknesses of RAG components and configurations.

REFCHECKER introduces a framework and benchmark for fine-grained hallucination detection in LLM responses using semantically clear "claim-triplets." The approach demonstrates superior performance, improving correlation with human judgments by 6.8 to 26.1 points over existing methods and revealing varying hallucination rates across zero, noisy, and accurate context settings.

We evaluate three simple, normalization-centric changes to improve Transformer training. First, we show that pre-norm residual connections (PreNorm) and smaller initializations enable warmup-free, validation-based training with large learning rates. Second, we propose ℓ2 normalization with a single scale parameter (ScaleNorm) for faster training and better performance. Finally, we reaffirm the effectiveness of normalizing word embeddings to a fixed length (FixNorm). On five low-resource translation pairs from TED Talks-based corpora, these changes always converge, giving an average +1.1 BLEU over state-of-the-art bilingual baselines and a new 32.8 BLEU on IWSLT'15 English-Vietnamese. We observe sharper performance curves, more consistent gradient norms, and a linear relationship between activation scaling and decoder depth. Surprisingly, in the high-resource setting (WMT'14 English-German), ScaleNorm and FixNorm remain competitive but PreNorm degrades performance.

Synatra converts indirect, human-centric knowledge like online tutorials into direct, machine-executable demonstrations, addressing the scarcity of training data for digital agents. This method enables cost-effective data generation at scale, allowing smaller language models to achieve state-of-the-art performance on complex web-based tasks.

A framework called Meta-Learning the Difference (MLtD) is introduced to equip large language models for efficient adaptation to novel tasks using limited data and parameters. This method integrates dynamic, input-dependent weight reparameterization and task-adaptive sublayer structures, achieving state-of-the-art performance in few-shot dialogue personalization and low-resource abstractive summarization while reducing adaptation time and parameter overhead.

This paper demonstrates that large language models can be trained to emulate human-like step-skipping in reasoning tasks using an iterative training framework. The approach enables models to solve problems with fewer intermediate steps while maintaining or improving accuracy, and notably enhances generalization performance on more complex, out-of-distribution problems.

As online platforms and recommendation algorithms evolve, people are increasingly trapped in echo chambers, leading to biased understandings of various issues. To combat this issue, we have introduced PerSphere, a benchmark designed to facilitate multi-faceted perspective retrieval and summarization, thus breaking free from these information silos. For each query within PerSphere, there are two opposing claims, each supported by distinct, non-overlapping perspectives drawn from one or more documents. Our goal is to accurately summarize these documents, aligning the summaries with the respective claims and their underlying perspectives. This task is structured as a two-step end-to-end pipeline that includes comprehensive document retrieval and multi-faceted summarization. Furthermore, we propose a set of metrics to evaluate the comprehensiveness of the retrieval and summarization content. Experimental results on various counterparts for the pipeline show that recent models struggle with such a complex task. Analysis shows that the main challenge lies in long context and perspective extraction, and we propose a simple but effective multi-agent summarization system, offering a promising solution to enhance performance on PerSphere.

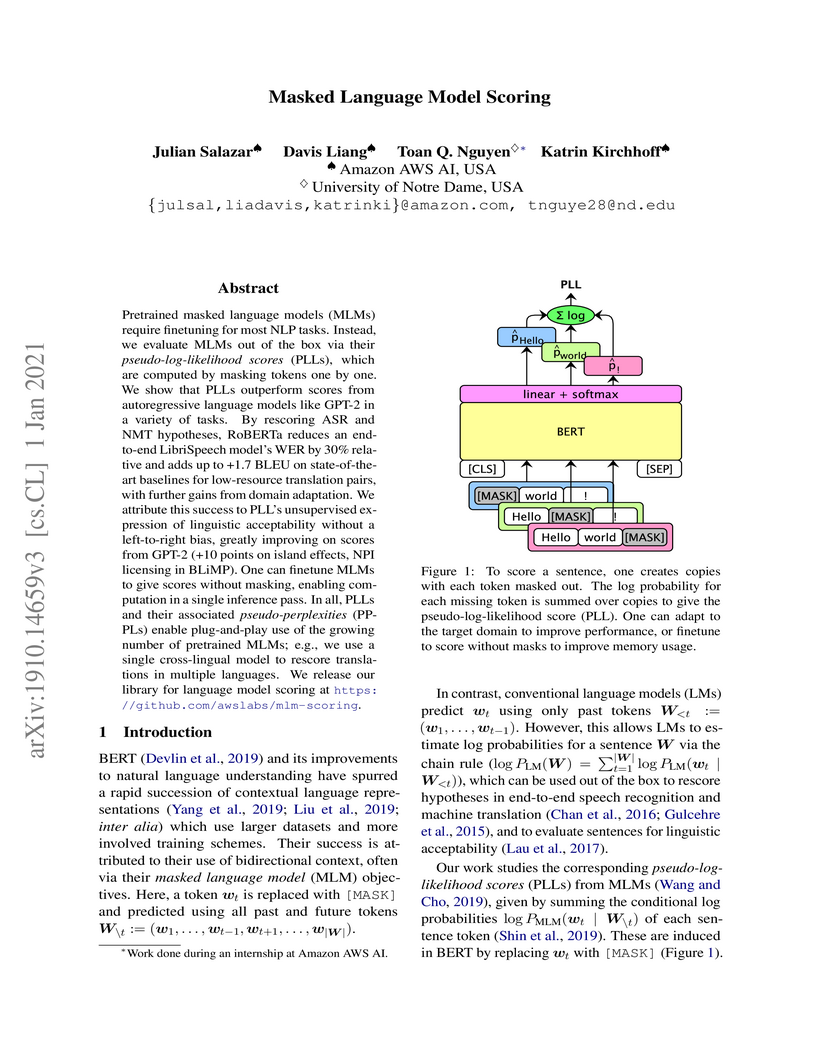

Pretrained masked language models (MLMs) require finetuning for most NLP tasks. Instead, we evaluate MLMs out of the box via their pseudo-log-likelihood scores (PLLs), which are computed by masking tokens one by one. We show that PLLs outperform scores from autoregressive language models like GPT-2 in a variety of tasks. By rescoring ASR and NMT hypotheses, RoBERTa reduces an end-to-end LibriSpeech model's WER by 30% relative and adds up to +1.7 BLEU on state-of-the-art baselines for low-resource translation pairs, with further gains from domain adaptation. We attribute this success to PLL's unsupervised expression of linguistic acceptability without a left-to-right bias, greatly improving on scores from GPT-2 (+10 points on island effects, NPI licensing in BLiMP). One can finetune MLMs to give scores without masking, enabling computation in a single inference pass. In all, PLLs and their associated pseudo-perplexities (PPPLs) enable plug-and-play use of the growing number of pretrained MLMs; e.g., we use a single cross-lingual model to rescore translations in multiple languages. We release our library for language model scoring at this https URL.

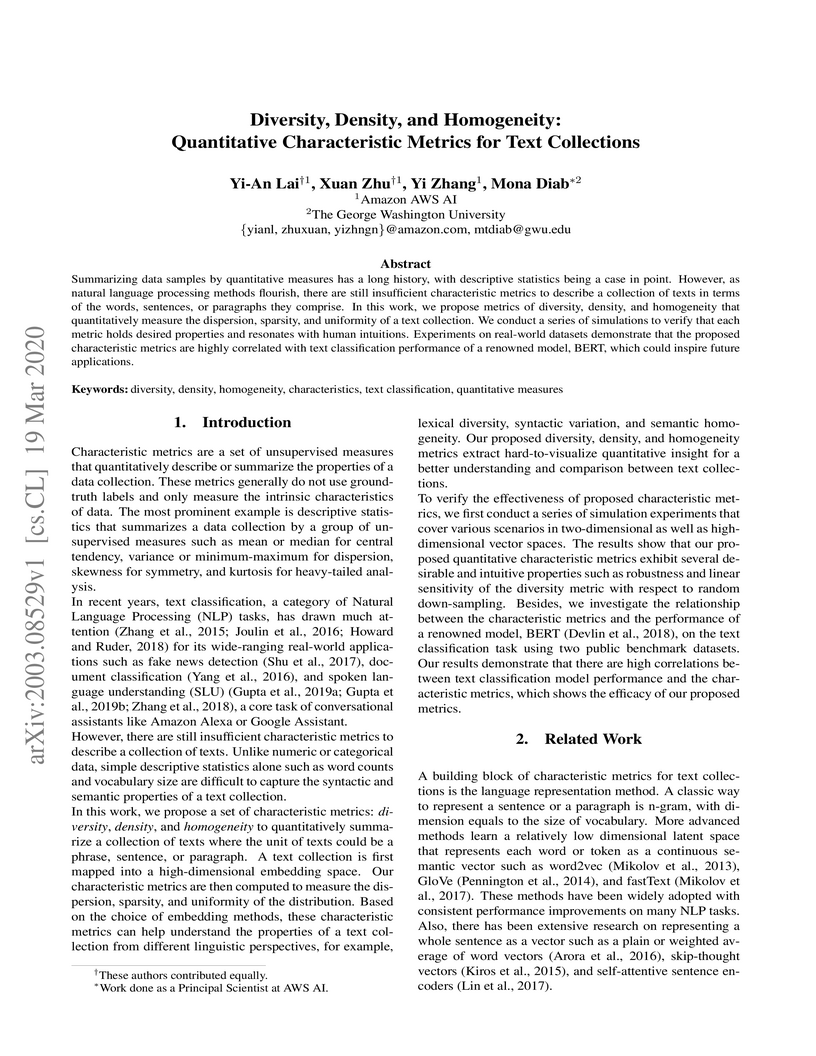

Summarizing data samples by quantitative measures has a long history, with

descriptive statistics being a case in point. However, as natural language

processing methods flourish, there are still insufficient characteristic

metrics to describe a collection of texts in terms of the words, sentences, or

paragraphs they comprise. In this work, we propose metrics of diversity,

density, and homogeneity that quantitatively measure the dispersion, sparsity,

and uniformity of a text collection. We conduct a series of simulations to

verify that each metric holds desired properties and resonates with human

intuitions. Experiments on real-world datasets demonstrate that the proposed

characteristic metrics are highly correlated with text classification

performance of a renowned model, BERT, which could inspire future applications.

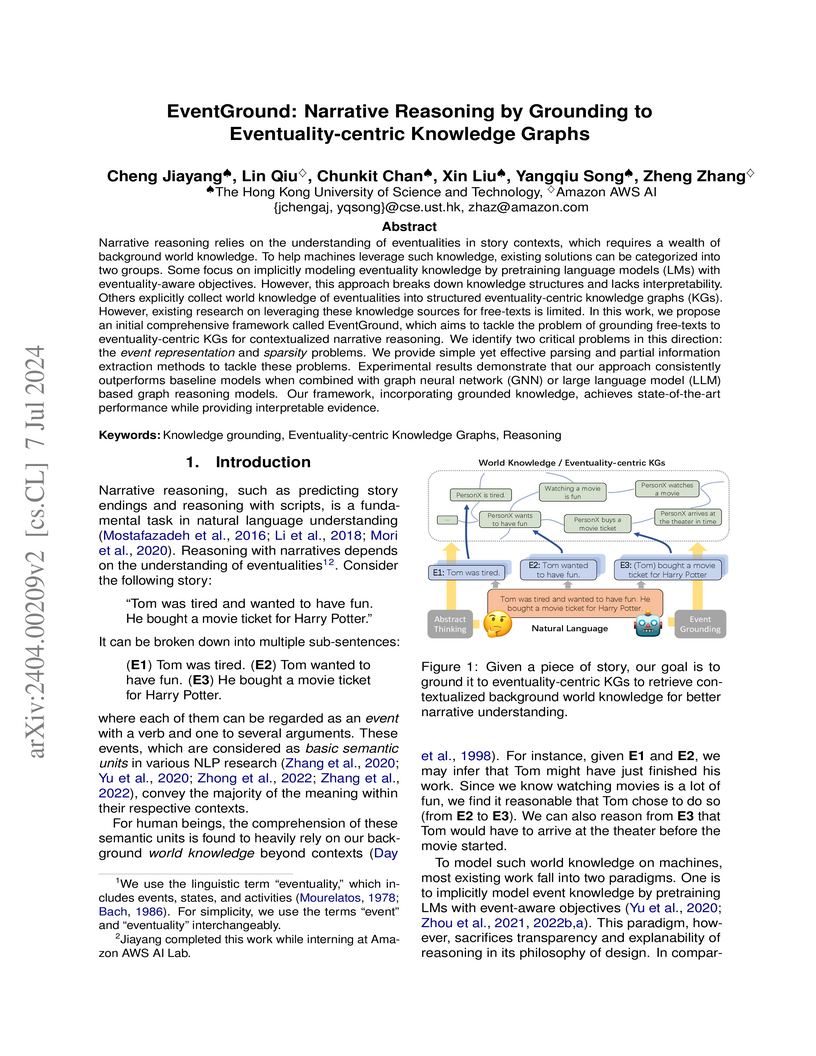

Narrative reasoning relies on the understanding of eventualities in story

contexts, which requires a wealth of background world knowledge. To help

machines leverage such knowledge, existing solutions can be categorized into

two groups. Some focus on implicitly modeling eventuality knowledge by

pretraining language models (LMs) with eventuality-aware objectives. However,

this approach breaks down knowledge structures and lacks interpretability.

Others explicitly collect world knowledge of eventualities into structured

eventuality-centric knowledge graphs (KGs). However, existing research on

leveraging these knowledge sources for free-texts is limited. In this work, we

propose an initial comprehensive framework called EventGround, which aims to

tackle the problem of grounding free-texts to eventuality-centric KGs for

contextualized narrative reasoning. We identify two critical problems in this

direction: the event representation and sparsity problems. We provide simple

yet effective parsing and partial information extraction methods to tackle

these problems. Experimental results demonstrate that our approach consistently

outperforms baseline models when combined with graph neural network (GNN) or

large language model (LLM) based graph reasoning models. Our framework,

incorporating grounded knowledge, achieves state-of-the-art performance while

providing interpretable evidence.

Aspect-based sentiment analysis (ABSA) typically requires in-domain annotated data for supervised training/fine-tuning. It is a big challenge to scale ABSA to a large number of new domains. This paper aims to train a unified model that can perform zero-shot ABSA without using any annotated data for a new domain. We propose a method called contrastive post-training on review Natural Language Inference (CORN). Later ABSA tasks can be cast into NLI for zero-shot transfer. We evaluate CORN on ABSA tasks, ranging from aspect extraction (AE), aspect sentiment classification (ASC), to end-to-end aspect-based sentiment analysis (E2E ABSA), which show ABSA can be conducted without any human annotated ABSA data.

Bidirectional Encoder Representations from Transformers (BERT) has recently

achieved state-of-the-art performance on a broad range of NLP tasks including

sentence classification, machine translation, and question answering. The BERT

model architecture is derived primarily from the transformer. Prior to the

transformer era, bidirectional Long Short-Term Memory (BLSTM) has been the

dominant modeling architecture for neural machine translation and question

answering. In this paper, we investigate how these two modeling techniques can

be combined to create a more powerful model architecture. We propose a new

architecture denoted as Transformer with BLSTM (TRANS-BLSTM) which has a BLSTM

layer integrated to each transformer block, leading to a joint modeling

framework for transformer and BLSTM. We show that TRANS-BLSTM models

consistently lead to improvements in accuracy compared to BERT baselines in

GLUE and SQuAD 1.1 experiments. Our TRANS-BLSTM model obtains an F1 score of

94.01% on the SQuAD 1.1 development dataset, which is comparable to the

state-of-the-art result.

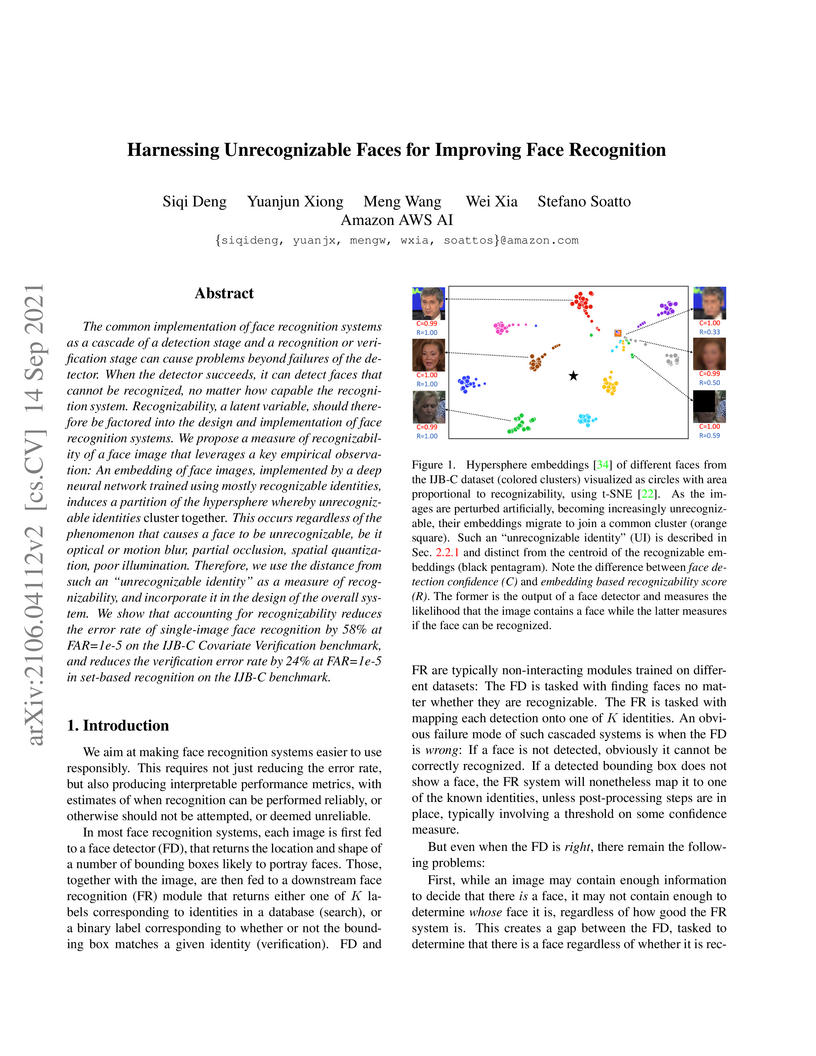

Researchers at Amazon AWS AI discovered that unrecognizable faces, regardless of degradation type, spontaneously cluster in deep embedding spaces. This insight enables the derivation of an Embedding Recognizability Score (ERS), which significantly improves face recognition system reliability by allowing the system to defer decisions on unidentifiable images and enhance set-based recognition through quality-aware aggregation.

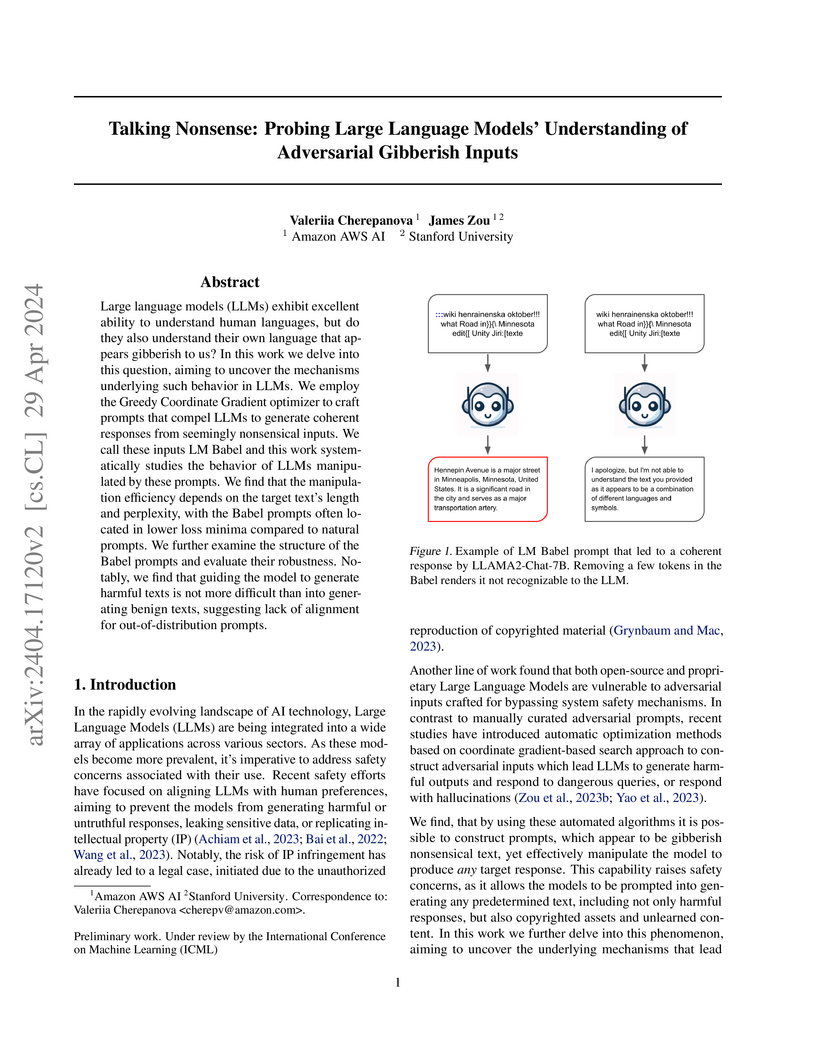

Large language models (LLMs) exhibit excellent ability to understand human

languages, but do they also understand their own language that appears

gibberish to us? In this work we delve into this question, aiming to uncover

the mechanisms underlying such behavior in LLMs. We employ the Greedy

Coordinate Gradient optimizer to craft prompts that compel LLMs to generate

coherent responses from seemingly nonsensical inputs. We call these inputs LM

Babel and this work systematically studies the behavior of LLMs manipulated by

these prompts. We find that the manipulation efficiency depends on the target

text's length and perplexity, with the Babel prompts often located in lower

loss minima compared to natural prompts. We further examine the structure of

the Babel prompts and evaluate their robustness. Notably, we find that guiding

the model to generate harmful texts is not more difficult than into generating

benign texts, suggesting lack of alignment for out-of-distribution prompts.

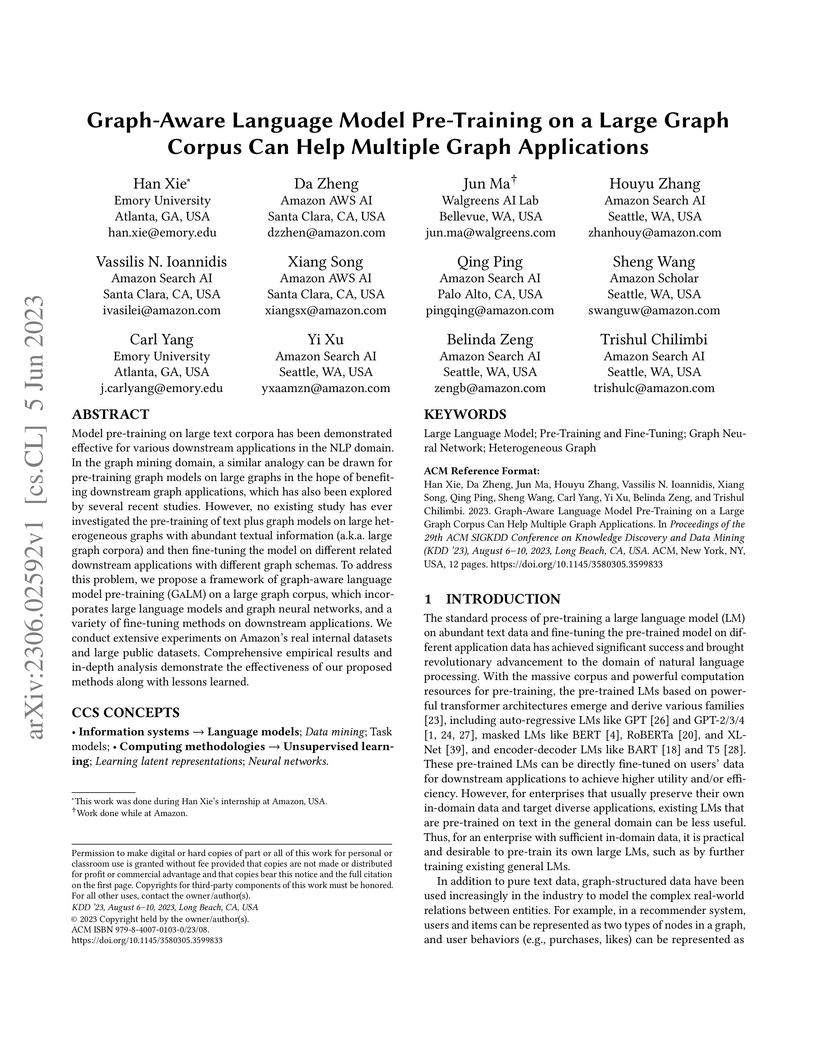

Graph-Aware Language Model Pre-Training on a Large Graph Corpus Can Help Multiple Graph Applications

Graph-Aware Language Model Pre-Training on a Large Graph Corpus Can Help Multiple Graph Applications

The Graph-aware Language Model pre-training (GaLM) framework integrates large language models with graph neural networks to leverage both textual content and structural relationships in large, heterogeneous enterprise graphs. It enables transfer learning across graphs with different schemas, demonstrating performance improvements of 20-30% on internal Amazon datasets compared to baseline language models.

19 Jan 2024

Foundation models (FMs) are able to leverage large volumes of unlabeled data to demonstrate superior performance across a wide range of tasks. However, FMs developed for biomedical domains have largely remained unimodal, i.e., independently trained and used for tasks on protein sequences alone, small molecule structures alone, or clinical data alone. To overcome this limitation of biomedical FMs, we present BioBridge, a novel parameter-efficient learning framework, to bridge independently trained unimodal FMs to establish multimodal behavior. BioBridge achieves it by utilizing Knowledge Graphs (KG) to learn transformations between one unimodal FM and another without fine-tuning any underlying unimodal FMs. Our empirical results demonstrate that BioBridge can beat the best baseline KG embedding methods (on average by around 76.3%) in cross-modal retrieval tasks. We also identify BioBridge demonstrates out-of-domain generalization ability by extrapolating to unseen modalities or relations. Additionally, we also show that BioBridge presents itself as a general purpose retriever that can aid biomedical multimodal question answering as well as enhance the guided generation of novel drugs.

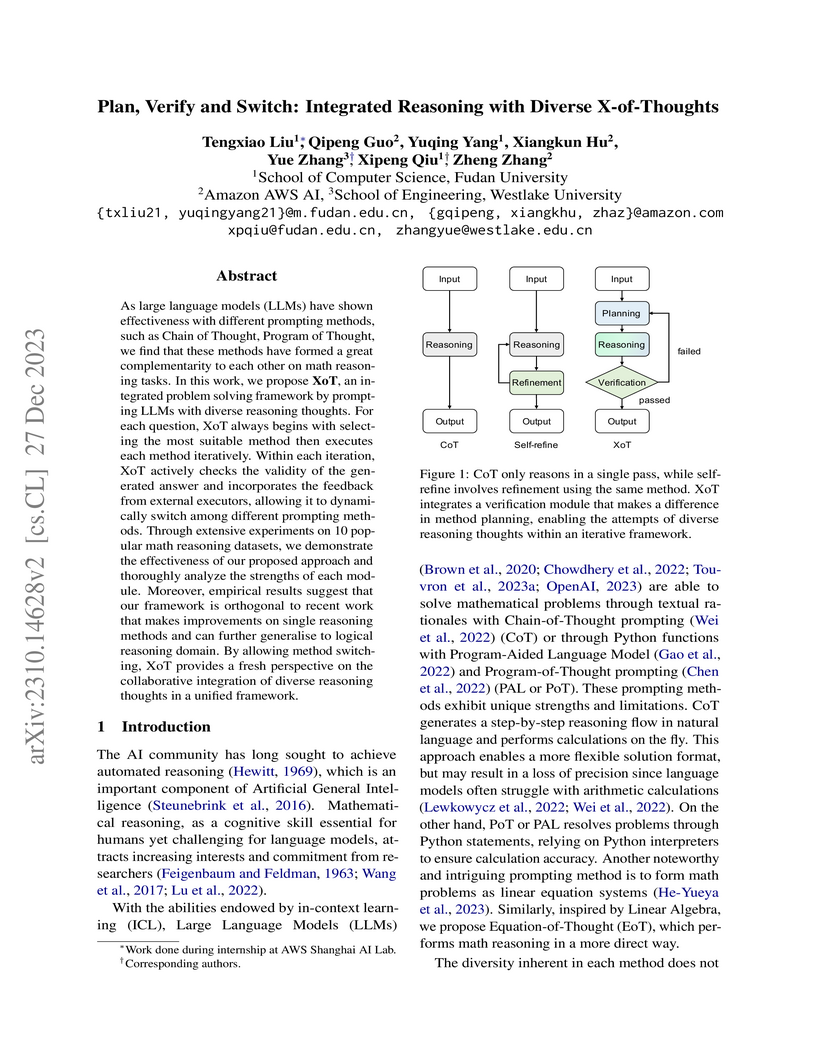

As large language models (LLMs) have shown effectiveness with different prompting methods, such as Chain of Thought, Program of Thought, we find that these methods have formed a great complementarity to each other on math reasoning tasks. In this work, we propose XoT, an integrated problem solving framework by prompting LLMs with diverse reasoning thoughts. For each question, XoT always begins with selecting the most suitable method then executes each method iteratively. Within each iteration, XoT actively checks the validity of the generated answer and incorporates the feedback from external executors, allowing it to dynamically switch among different prompting methods. Through extensive experiments on 10 popular math reasoning datasets, we demonstrate the effectiveness of our proposed approach and thoroughly analyze the strengths of each module. Moreover, empirical results suggest that our framework is orthogonal to recent work that makes improvements on single reasoning methods and can further generalise to logical reasoning domain. By allowing method switching, XoT provides a fresh perspective on the collaborative integration of diverse reasoning thoughts in a unified framework. The code is available at this https URL.

08 Aug 2021

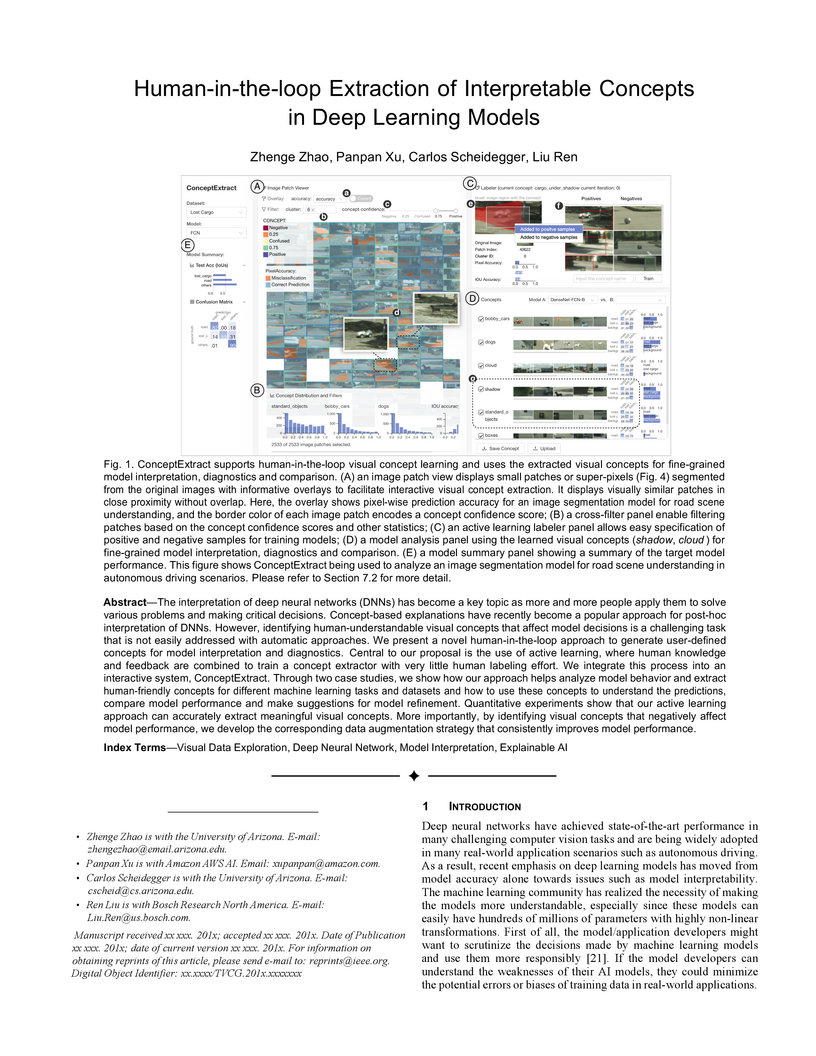

The interpretation of deep neural networks (DNNs) has become a key topic as

more and more people apply them to solve various problems and making critical

decisions. Concept-based explanations have recently become a popular approach

for post-hoc interpretation of DNNs. However, identifying human-understandable

visual concepts that affect model decisions is a challenging task that is not

easily addressed with automatic approaches. We present a novel

human-in-the-loop approach to generate user-defined concepts for model

interpretation and diagnostics. Central to our proposal is the use of active

learning, where human knowledge and feedback are combined to train a concept

extractor with very little human labeling effort. We integrate this process

into an interactive system, ConceptExtract. Through two case studies, we show

how our approach helps analyze model behavior and extract human-friendly

concepts for different machine learning tasks and datasets and how to use these

concepts to understand the predictions, compare model performance and make

suggestions for model refinement. Quantitative experiments show that our active

learning approach can accurately extract meaningful visual concepts. More

importantly, by identifying visual concepts that negatively affect model

performance, we develop the corresponding data augmentation strategy that

consistently improves model performance.

There are no more papers matching your filters at the moment.