
MapNav introduces Annotated Semantic Maps (ASMs) as a memory representation for Vision-and-Language Navigation (VLN), enabling Vision-Language Models (VLMs) to interpret structured spatial information through explicit textual annotations. On R2R, this approach achieves state-of-the-art performance, improving Success Rate (SR) by 23.5% and Success weighted by Path Length (SPL) by 26.5% over prior methods, while keeping memory consumption constant at 0.17 MB and reducing inference time by 79.5%.
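To make "explicit textual annotations" concrete, here is a minimal sketch that assumes an ASM is a fixed-size top-down grid of semantic class ids. It places one text label at the centroid of each class and serializes the labels into lines that could go into a VLM prompt. The label table, the grid, and the function names `annotate_semantic_map` and `to_prompt` are hypothetical illustrations, not MapNav's implementation.

```python
from collections import defaultdict

# Hypothetical class-id -> name table; any id not listed (e.g. 0) is unlabeled.
LABELS = {1: "chair", 2: "table", 3: "sofa"}

def annotate_semantic_map(grid, labels):
    """Return sorted (name, row, col) anchors, one per semantic class.

    Each anchor sits at the rounded centroid of all cells with that class id.
    A real system would likely split a class into connected instances; this
    sketch merges all cells of a class for brevity.
    """
    cells = defaultdict(list)
    for r, row in enumerate(grid):
        for c, cls in enumerate(row):
            if cls in labels:
                cells[cls].append((r, c))
    anchors = []
    for cls, pts in cells.items():
        mean_r = sum(p[0] for p in pts) / len(pts)
        mean_c = sum(p[1] for p in pts) / len(pts)
        anchors.append((labels[cls], round(mean_r), round(mean_c)))
    return sorted(anchors)

def to_prompt(anchors):
    """Serialize anchors as plain-text lines a VLM prompt could include."""
    return "\n".join(f"{name} at (row={r}, col={c})" for name, r, c in anchors)

# The grid has a fixed size, so memory does not grow with episode length.
grid = [
    [1, 1, 1, 0, 0],
    [1, 1, 1, 0, 0],
    [1, 1, 1, 0, 3],
    [0, 0, 0, 0, 3],
    [2, 2, 2, 0, 3],
]
print(to_prompt(annotate_semantic_map(grid, LABELS)))
```

Because the map is a fixed-size grid, its memory footprint stays constant however long the agent explores, which is the property the summary highlights.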

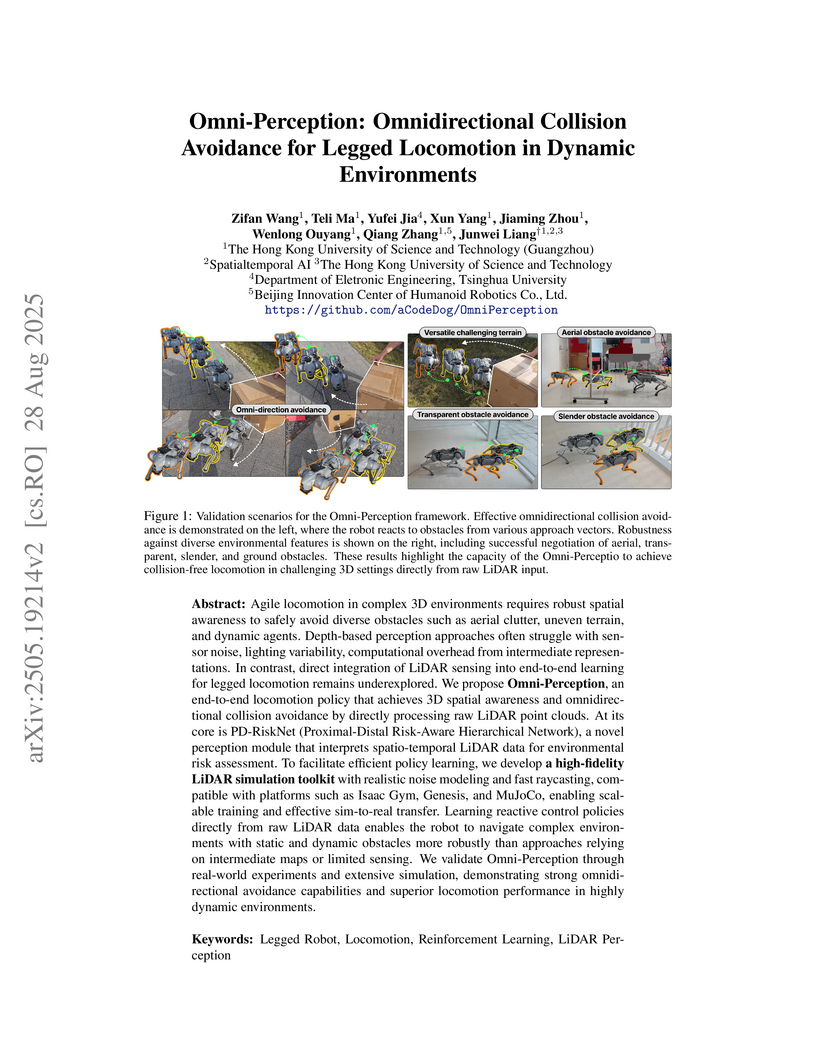

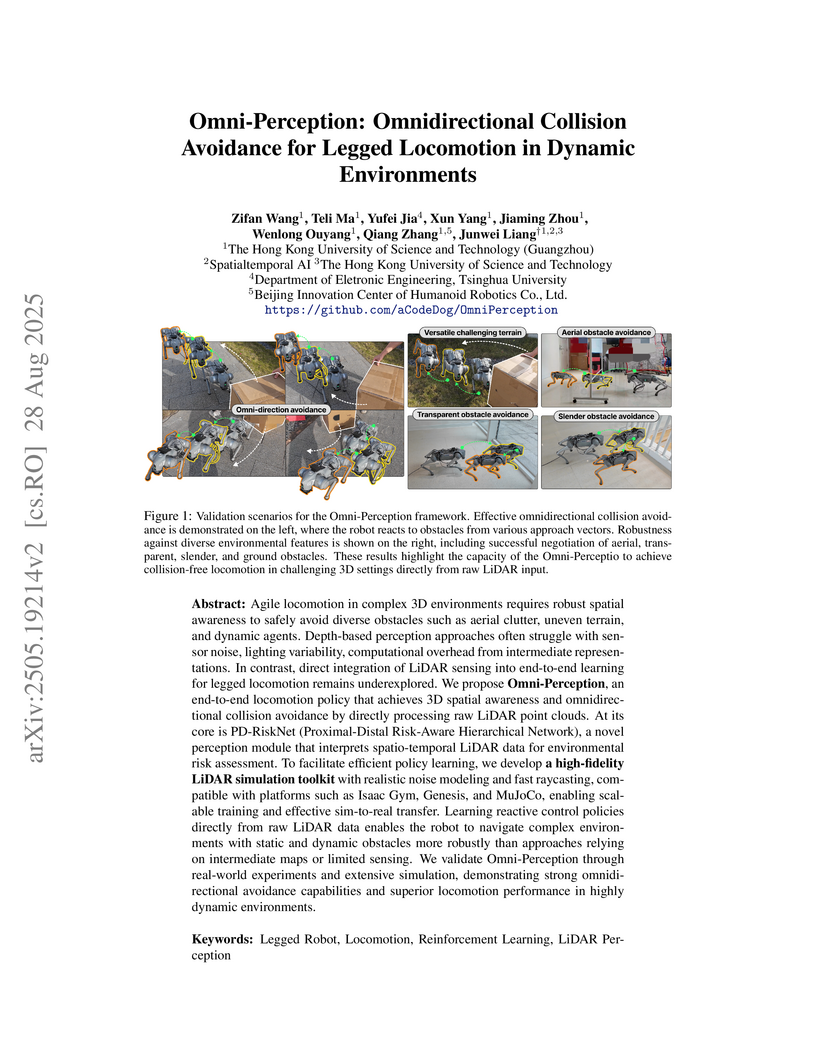

Researchers at The Hong Kong University of Science and Technology (Guangzhou) developed Omni-Perception, an end-to-end reinforcement learning framework that enables legged robots to perform omnidirectional collision avoidance by directly processing raw spatio-temporal LiDAR data. In real-world tests, the system achieved a 70% success rate in avoiding aerial obstacles and 90% against moving humans, significantly outperforming the robot's native system. The framework also incorporates a custom high-fidelity LiDAR simulator and a novel hierarchical perception network.

Researchers developed a system for depth-only perceptive locomotion in humanoid robots across challenging terrain, integrating realistic depth synthesis with a cross-attention transformer for terrain reconstruction. The approach accurately infers occluded regions and achieves robust, adaptive locomotion with a perception latency of approximately 20 ms in real-world deployment.
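The cross-attention step in such a terrain-reconstruction transformer can be illustrated with generic single-head scaled dot-product attention, softmax(QKᵀ/√d)V. The mapping below (one query per terrain-map cell, keys and values from depth-image patch features) is an assumption for illustration; the summary does not specify the paper's heads, dimensions, or embeddings.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Single-head scaled dot-product cross-attention in pure Python.

    queries: list of d-dim vectors (assumed: one per terrain-map cell)
    keys, values: lists of vectors (assumed: one per depth-image patch)
    Returns one output vector per query: a softmax-weighted mix of values.
    """
    d = len(keys[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for q in queries:
        # Similarity of this query to every key, scaled by 1/sqrt(d).
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Weighted sum of value vectors, component by component.
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out

# Hypothetical toy inputs: one terrain-cell query, two depth-patch tokens.
queries = [[1.0, 1.0]]
keys = [[1.0, 0.0], [1.0, 0.0]]    # identical keys give equal attention weights
values = [[2.0, 0.0], [4.0, 2.0]]
print(cross_attention(queries, keys, values))  # [[3.0, 1.0]]
```

With identical keys the weights are uniform, so the output is simply the mean of the values. In a learned model the queries for occluded cells can still attend to visible patches, which is one plausible way such an architecture fills in hidden terrain.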

TopoNav introduces a framework for object navigation that uses dynamic, evolving topological graphs as a spatial memory mechanism, achieving state-of-the-art zero-shot performance on the HM3D and MP3D datasets. The approach improves an agent's ability to retain and reason about environmental structure, reaching a Success Rate (SR) of 0.601 on HM3D, and has been deployed on a quadruped robot.
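As a rough illustration of a topological graph used as evolving spatial memory, the sketch below adds a node when the agent moves farther than a threshold from every existing node, records which objects were seen at each node, links consecutively visited nodes, and answers "how do I get back to something I saw?" with a breadth-first search. The `TopoMemory` class, the distance threshold, and the update rule are hypothetical choices, not TopoNav's published design.

```python
import math
from collections import deque

class TopoMemory:
    """Hypothetical evolving topological map: nodes are visited places."""

    def __init__(self, new_node_dist=1.0):
        self.new_node_dist = new_node_dist  # assumed node-spacing threshold (meters)
        self.pos = []        # node id -> (x, y)
        self.objects = []    # node id -> set of object labels seen there
        self.adj = []        # node id -> set of neighbouring node ids
        self.current = None  # node the agent is currently at

    def _nearest(self, xy):
        best, best_d = None, float("inf")
        for i, p in enumerate(self.pos):
            d = math.dist(p, xy)
            if d < best_d:
                best, best_d = i, d
        return best, best_d

    def update(self, xy, seen_objects):
        """Merge an observation into the graph, adding a node if needed."""
        node, d = self._nearest(xy)
        if node is None or d > self.new_node_dist:
            node = len(self.pos)
            self.pos.append(xy)
            self.objects.append(set())
            self.adj.append(set())
        self.objects[node].update(seen_objects)
        if self.current is not None and self.current != node:
            # Moving between two places creates an undirected edge.
            self.adj[self.current].add(node)
            self.adj[node].add(self.current)
        self.current = node
        return node

    def path_to(self, label):
        """Fewest-hop path (BFS) from the current node to a node that saw `label`."""
        if self.current is None:
            return None
        parent = {self.current: None}
        queue = deque([self.current])
        while queue:
            n = queue.popleft()
            if label in self.objects[n]:
                path = []
                while n is not None:
                    path.append(n)
                    n = parent[n]
                return path[::-1]
            for m in sorted(self.adj[n]):
                if m not in parent:
                    parent[m] = n
                    queue.append(m)
        return None

mem = TopoMemory(new_node_dist=1.0)
mem.update((0.0, 0.0), {"sofa"})  # node 0
mem.update((2.0, 0.0), {"tv"})    # node 1
mem.update((2.0, 2.0), {"bed"})   # node 2
mem.update((0.2, 0.1), set())     # close to node 0, so no new node; adds edge 2-0
print(mem.path_to("bed"))         # [0, 2]
```

Keeping only places and their observed objects, rather than a dense map, is one reason topological memories stay compact while still supporting route planning back to previously seen targets.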

LiPS integrates detailed multi-rigid-body dynamics of the parallel ankle mechanisms of humanoid robots directly into large-scale, GPU-accelerated reinforcement learning simulation. This lets policies learn parallel-aware control strategies, significantly reducing the sim-to-real gap and enabling direct, robust deployment on physical humanoid robots such as the Tien Kung.


Tsinghua University

Northeastern University