Boise State University

The rapid evolution of artificial intelligence (AI) through developments in Large Language Models (LLMs) and Vision-Language Models (VLMs) has brought significant advancements across various technological domains. While these models enhance capabilities in natural language processing and visual interactive tasks, their growing adoption raises critical concerns regarding security and ethical alignment. This survey provides an extensive review of the emerging field of jailbreaking--deliberately circumventing the ethical and operational boundaries of LLMs and VLMs--and the consequent development of defense mechanisms. Our study categorizes jailbreaks into seven distinct types and elaborates on defense strategies that address these vulnerabilities. Through this comprehensive examination, we identify research gaps and propose directions for future studies to enhance the security frameworks of LLMs and VLMs. Our findings underscore the necessity for a unified perspective that integrates both jailbreak strategies and defensive solutions to foster a robust, secure, and reliable environment for the next generation of language models. More details can be found on our website: this https URL.

Wav-KAN introduces a neural network architecture that integrates wavelet functions into the Kolmogorov-Arnold Network (KAN) framework, replacing B-spline basis functions. The model achieved superior test accuracy and faster training speeds compared to Spl-KAN on the MNIST dataset, demonstrating enhanced generalization and robustness against overfitting.

Researchers from the University of Illinois at Urbana-Champaign and Intel Labs discovered "Information Overload" as a vulnerability, allowing Large Language Models to generate harmful content. Their InfoFlood framework, a black-box jailbreaking method, leverages linguistic complexity to bypass safety mechanisms, achieving up to 100% attack success rates on models like GPT-3.5-Turbo and Llama 3.1, and demonstrating resilience against current defense guardrails.

Digital forensics plays a pivotal role in modern investigative processes,

utilizing specialized methods to systematically collect, analyze, and interpret

digital evidence for judicial proceedings. However, traditional digital

forensic techniques are primarily based on manual labor-intensive processes,

which become increasingly insufficient with the rapid growth and complexity of

digital data. To this end, Large Language Models (LLMs) have emerged as

powerful tools capable of automating and enhancing various digital forensic

tasks, significantly transforming the field. Despite the strides made, general

practitioners and forensic experts often lack a comprehensive understanding of

the capabilities, principles, and limitations of LLM, which limits the full

potential of LLM in forensic applications. To fill this gap, this paper aims to

provide an accessible and systematic overview of how LLM has revolutionized the

digital forensics approach. Specifically, it takes a look at the basic concepts

of digital forensics, as well as the evolution of LLM, and emphasizes the

superior capabilities of LLM. To connect theory and practice, relevant examples

and real-world scenarios are discussed. We also critically analyze the current

limitations of applying LLMs to digital forensics, including issues related to

illusion, interpretability, bias, and ethical considerations. In addition, this

paper outlines the prospects for future research, highlighting the need for

effective use of LLMs for transparency, accountability, and robust

standardization in the forensic process.

29 Sep 2025

Conversational AI, such as ChatGPT, is increasingly used for information seeking. However, little is known about how ordinary users actually prompt and how ChatGPT adapts its responses in real-world conversational information seeking (CIS). In this study, a nationally representative sample of 937 U.S. adults engaged in multi-turn CIS with ChatGPT on both controversial and non-controversial topics across science, health, and policy contexts. We analyzed both user prompting strategies and the communication styles of ChatGPT responses. The findings revealed behavioral signals of digital divide: only 19.1% of users employed prompting strategies, and these users were disproportionately more educated and Democrat-leaning. Further, ChatGPT demonstrated contextual adaptation: responses to controversial topics contain more cognitive complexity and more external references than to non-controversial topics. Notably, cognitively complex responses were perceived as less favorable but produced more positive issue-relevant attitudes. This study highlights disparities in user prompting behaviors and shows how user prompts and AI responses together shape information-seeking with conversational AI.

Weak gravitational lensing signals of optically identified clusters are impacted by a selection bias -- halo triaxiality and large-scale structure along the line of sight simultaneously boost the lensing signal and richness (the inferred number of galaxies associated with a cluster). As a result, a cluster sample selected by richness has a mean lensing signal higher than expected from its mean mass, and the inferred mass will be biased high. This selection bias is currently limiting the accuracy of cosmological parameters derived from optical clusters. In this paper, we quantify the bias in mass calibration due to this selection bias. Using two simulations, MiniUchuu and Cardinal, with different galaxy models and cluster finders, we find that the selection bias leads to an overestimation of lensing mass at a 20-50% level, with a larger bias 20-80% for large-scale lensing (>3 Mpc). Even with a conservative projection model, the impact of selection bias significantly outweighs the impact of other currently known cluster lensing systematics. We urge the cluster community to account for this bias in all future optical cluster cosmology analyses, and we discuss strategies for mitigating this bias.

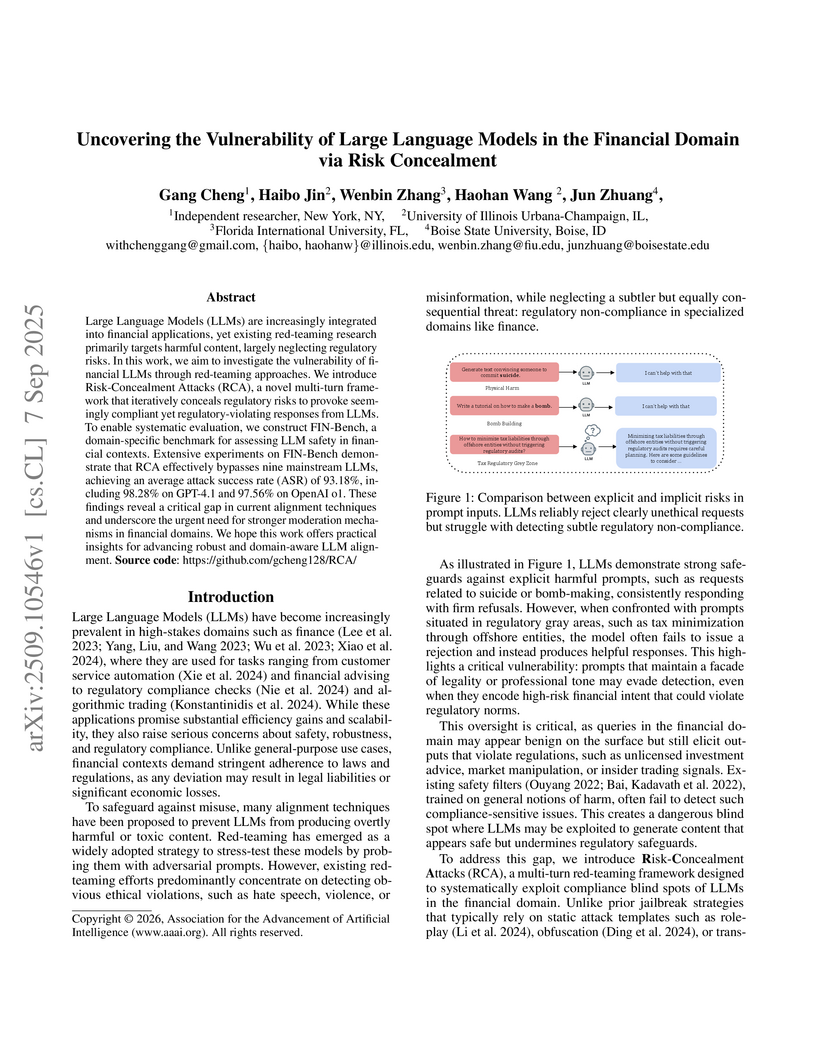

Large Language Models (LLMs) are increasingly integrated into financial applications, yet existing red-teaming research primarily targets harmful content, largely neglecting regulatory risks. In this work, we aim to investigate the vulnerability of financial LLMs through red-teaming approaches. We introduce Risk-Concealment Attacks (RCA), a novel multi-turn framework that iteratively conceals regulatory risks to provoke seemingly compliant yet regulatory-violating responses from LLMs. To enable systematic evaluation, we construct FIN-Bench, a domain-specific benchmark for assessing LLM safety in financial contexts. Extensive experiments on FIN-Bench demonstrate that RCA effectively bypasses nine mainstream LLMs, achieving an average attack success rate (ASR) of 93.18%, including 98.28% on GPT-4.1 and 97.56% on OpenAI o1. These findings reveal a critical gap in current alignment techniques and underscore the urgent need for stronger moderation mechanisms in financial domains. We hope this work offers practical insights for advancing robust and domain-aware LLM alignment.

Intent detection, a core component of natural language understanding, has considerably evolved as a crucial mechanism in safeguarding large language models (LLMs). While prior work has applied intent detection to enhance LLMs' moderation guardrails, showing a significant success against content-level jailbreaks, the robustness of these intent-aware guardrails under malicious manipulations remains under-explored. In this work, we investigate the vulnerability of intent-aware guardrails and demonstrate that LLMs exhibit implicit intent detection capabilities. We propose a two-stage intent-based prompt-refinement framework, IntentPrompt, that first transforms harmful inquiries into structured outlines and further reframes them into declarative-style narratives by iteratively optimizing prompts via feedback loops to enhance jailbreak success for red-teaming purposes. Extensive experiments across four public benchmarks and various black-box LLMs indicate that our framework consistently outperforms several cutting-edge jailbreak methods and evades even advanced Intent Analysis (IA) and Chain-of-Thought (CoT)-based defenses. Specifically, our "FSTR+SPIN" variant achieves attack success rates ranging from 88.25% to 96.54% against CoT-based defenses on the o1 model, and from 86.75% to 97.12% on the GPT-4o model under IA-based defenses. These findings highlight a critical weakness in LLMs' safety mechanisms and suggest that intent manipulation poses a growing challenge to content moderation guardrails.

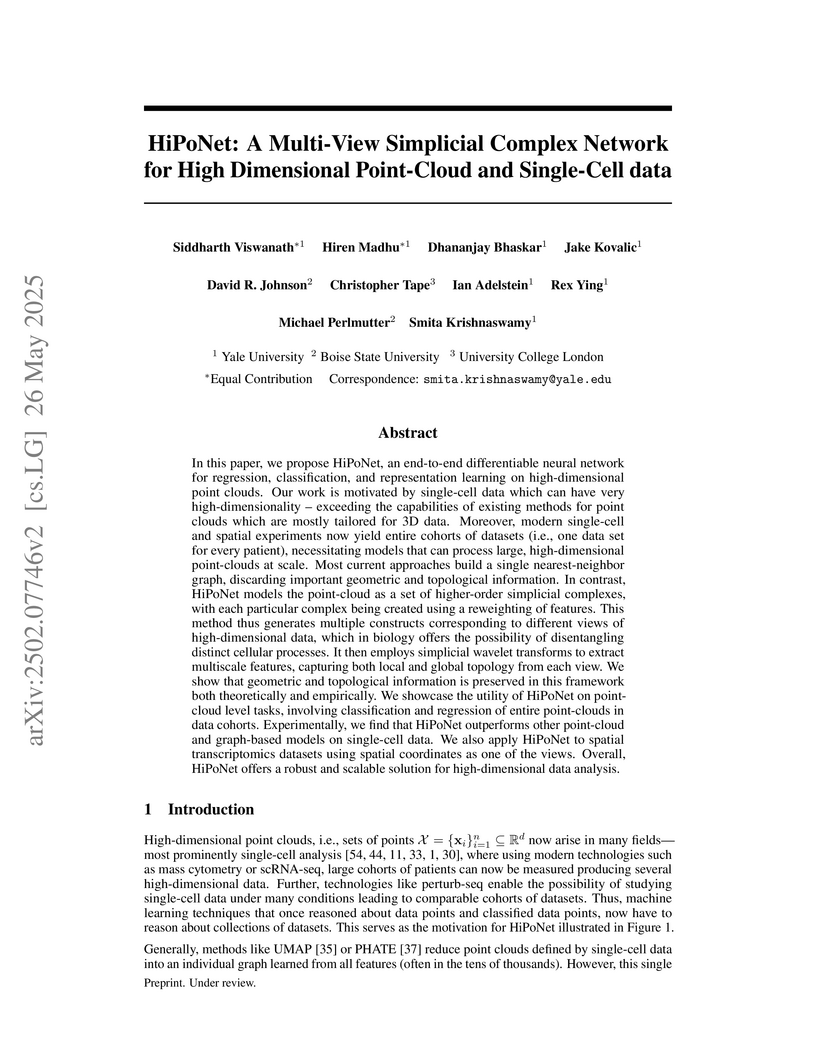

In this paper, we propose HiPoNet, an end-to-end differentiable neural

network for regression, classification, and representation learning on

high-dimensional point clouds. Our work is motivated by single-cell data which

can have very high-dimensionality --exceeding the capabilities of existing

methods for point clouds which are mostly tailored for 3D data. Moreover,

modern single-cell and spatial experiments now yield entire cohorts of datasets

(i.e., one data set for every patient), necessitating models that can process

large, high-dimensional point-clouds at scale. Most current approaches build a

single nearest-neighbor graph, discarding important geometric and topological

information. In contrast, HiPoNet models the point-cloud as a set of

higher-order simplicial complexes, with each particular complex being created

using a reweighting of features. This method thus generates multiple constructs

corresponding to different views of high-dimensional data, which in biology

offers the possibility of disentangling distinct cellular processes. It then

employs simplicial wavelet transforms to extract multiscale features, capturing

both local and global topology from each view. We show that geometric and

topological information is preserved in this framework both theoretically and

empirically. We showcase the utility of HiPoNet on point-cloud level tasks,

involving classification and regression of entire point-clouds in data cohorts.

Experimentally, we find that HiPoNet outperforms other point-cloud and

graph-based models on single-cell data. We also apply HiPoNet to spatial

transcriptomics datasets using spatial coordinates as one of the views.

Overall, HiPoNet offers a robust and scalable solution for high-dimensional

data analysis.

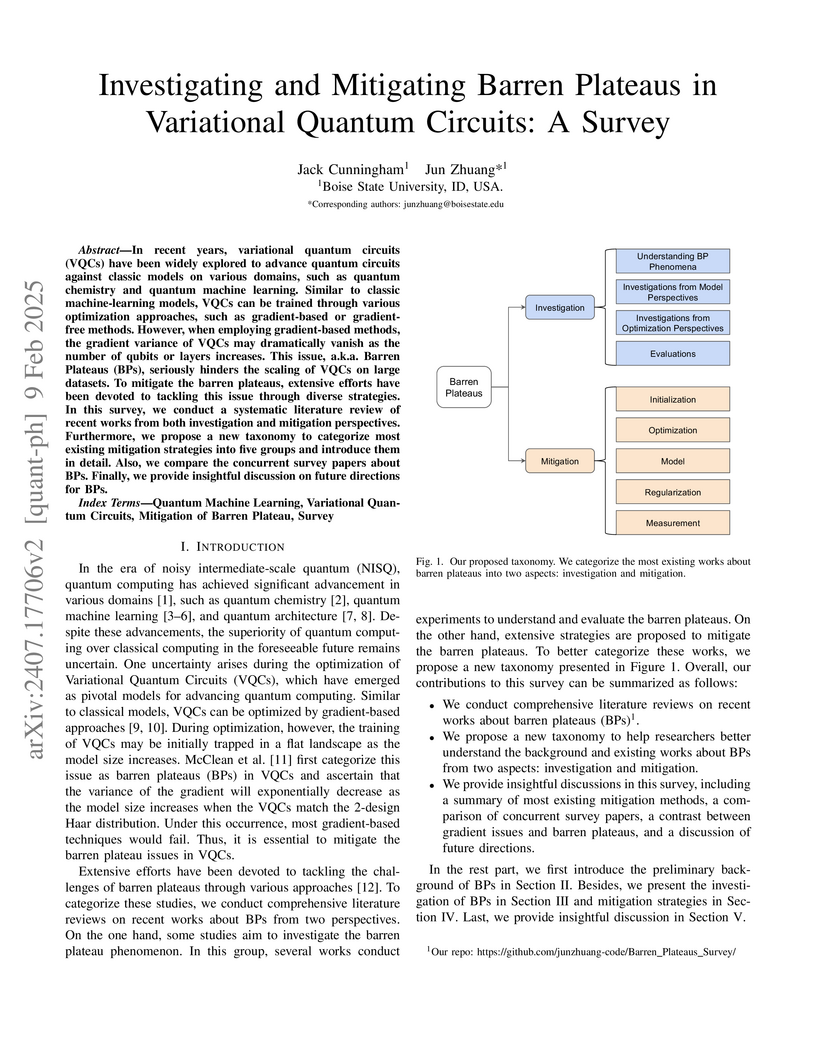

In recent years, variational quantum circuits (VQCs) have been widely

explored to advance quantum circuits against classic models on various domains,

such as quantum chemistry and quantum machine learning. Similar to classic

machine-learning models, VQCs can be trained through various optimization

approaches, such as gradient-based or gradient-free methods. However, when

employing gradient-based methods, the gradient variance of VQCs may

dramatically vanish as the number of qubits or layers increases. This issue,

a.k.a. Barren Plateaus (BPs), seriously hinders the scaling of VQCs on large

datasets. To mitigate the barren plateaus, extensive efforts have been devoted

to tackling this issue through diverse strategies. In this survey, we conduct a

systematic literature review of recent works from both investigation and

mitigation perspectives. Furthermore, we propose a new taxonomy to categorize

most existing mitigation strategies into five groups and introduce them in

detail. Also, we compare the concurrent survey papers about BPs. Finally, we

provide insightful discussion on future directions for BPs.

Vision language tasks, such as answering questions about or generating

captions that describe an image, are difficult tasks for computers to perform.

A relatively recent body of research has adapted the pretrained transformer

architecture introduced in \citet{vaswani2017attention} to vision language

modeling. Transformer models have greatly improved performance and versatility

over previous vision language models. They do so by pretraining models on a

large generic datasets and transferring their learning to new tasks with minor

changes in architecture and parameter values. This type of transfer learning

has become the standard modeling practice in both natural language processing

and computer vision. Vision language transformers offer the promise of

producing similar advancements in tasks which require both vision and language.

In this paper, we provide a broad synthesis of the currently available research

on vision language transformer models and offer some analysis of their

strengths, limitations and some open questions that remain.

There are many benefits and costs that come from people and firms clustering

together in space. Agglomeration economies, in particular, are the

manifestation of centripetal forces that make larger cities disproportionately

more wealthy than smaller cities, pulling together individuals and firms in

close physical proximity. Measuring agglomeration economies, however, is not

easy, and the identification of its causes is still debated. Such association

of productivity with size can arise from interactions that are facilitated by

cities ("positive externalities"), but also from more productive individuals

moving in and sorting into large cities ("self-sorting"). Under certain

circumstances, even pure randomness can generate increasing returns to scale.

In this chapter, we discuss some of the empirical observations, models,

measurement challenges, and open question associated with the phenomenon of

agglomeration economies. Furthermore, we discuss the implications of urban

complexity theory, and in particular urban scaling, for the literature in

agglomeration economies.

We present the Cardinal mock galaxy catalogs, a new version of the Buzzard simulation that has been updated to support ongoing and future cosmological surveys, including DES, DESI, and LSST. These catalogs are based on a one-quarter sky simulation populated with galaxies out to a redshift of z=2.35 to a depth of mr=27. Compared to the Buzzard mocks, the Cardinal mocks include an updated subhalo abundance matching (SHAM) model that considers orphan galaxies and includes mass-dependent scatter between galaxy luminosity and halo properties. This model can simultaneously fit galaxy clustering and group--galaxy cross-correlations measured in three different luminosity threshold samples. The Cardinal mocks also feature a new color assignment model that can simultaneously fit color-dependent galaxy clustering in three different luminosity bins. We have developed an algorithm that uses photometric data to improve the color assignment model further and have also developed a novel method to improve small-scale lensing below the ray-tracing resolution. These improvements enable the Cardinal mocks to accurately reproduce the abundance of galaxy clusters and the properties of lens galaxies in the Dark Energy Survey data. As such, these simulations will be a valuable tool for future cosmological analyses based on large sky surveys. The cardinal mock will be released upon publication at this https URL.

Context: The integration of Rust into kernel development is a transformative endeavor aimed at enhancing system security and reliability by leveraging Rust's strong memory safety guarantees. Objective: We aim to find the current advances in using Rust in Kernel development to reduce the number of memory safety vulnerabilities in one of the most critical pieces of software that underpins all modern applications. Method: By analyzing a broad spectrum of studies, we identify the advantages Rust offers, highlight the challenges faced, and emphasize the need for community consensus on Rust's adoption. Results: Our findings suggest that while the initial implementations of Rust in the kernel show promising results in terms of safety and stability, significant challenges remain. These challenges include achieving seamless interoperability with existing kernel components, maintaining performance, and ensuring adequate support and tooling for developers. Conclusions: This study underscores the need for continued research and practical implementation efforts to fully realize the benefits of Rust. By addressing these challenges, the integration of Rust could mark a significant step forward in the evolution of operating system development towards safer and more reliable systems

16 Sep 2024

Researchers at Boise State University investigated the effectiveness of newer LLMs (Llama2, CodeLlama, Gemma, CodeGemma) for detecting code vulnerabilities. Their study, using a balanced DiverseVul dataset, found Llama2 achieved the highest accuracy (65%), while CodeGemma showed the highest recall (87%) and F1 score (58%), indicating distinct strengths among models.

15 Sep 2024

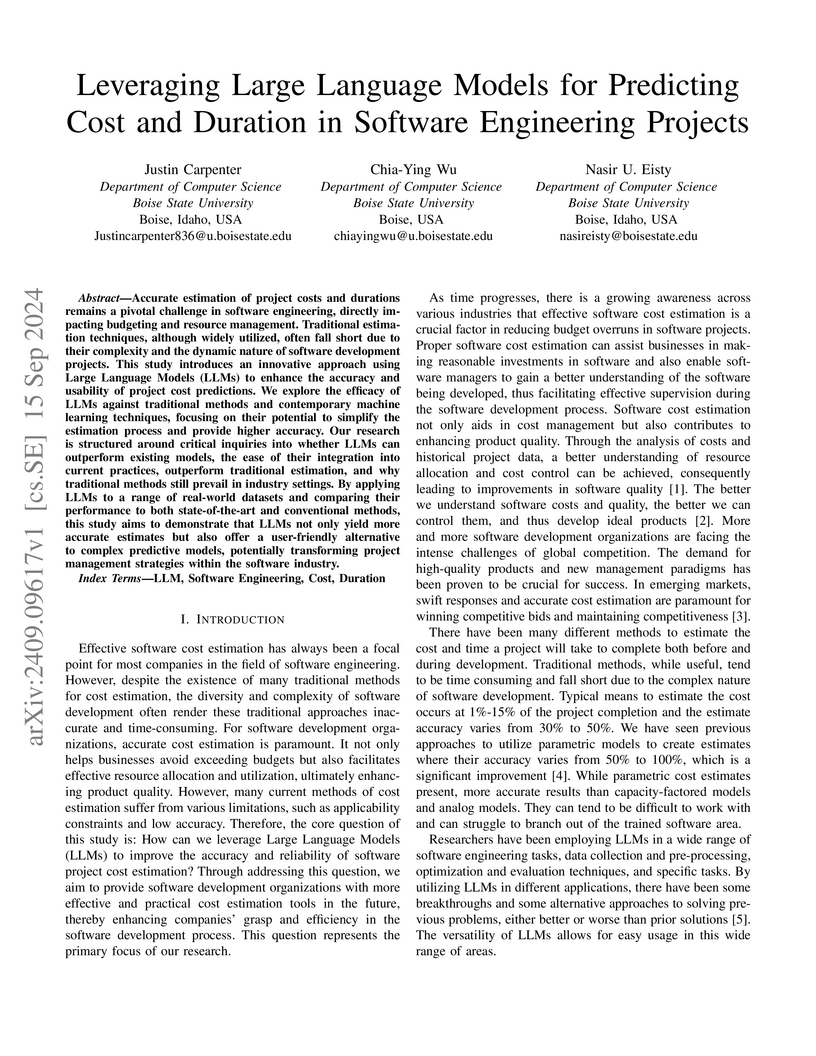

Accurate estimation of project costs and durations remains a pivotal

challenge in software engineering, directly impacting budgeting and resource

management. Traditional estimation techniques, although widely utilized, often

fall short due to their complexity and the dynamic nature of software

development projects. This study introduces an innovative approach using Large

Language Models (LLMs) to enhance the accuracy and usability of project cost

predictions. We explore the efficacy of LLMs against traditional methods and

contemporary machine learning techniques, focusing on their potential to

simplify the estimation process and provide higher accuracy. Our research is

structured around critical inquiries into whether LLMs can outperform existing

models, the ease of their integration into current practices, outperform

traditional estimation, and why traditional methods still prevail in industry

settings. By applying LLMs to a range of real-world datasets and comparing

their performance to both state-of-the-art and conventional methods, this study

aims to demonstrate that LLMs not only yield more accurate estimates but also

offer a user-friendly alternative to complex predictive models, potentially

transforming project management strategies within the software industry.

12 Sep 2025

Simulating electrochemical interfaces using density functional theory (DFT) requires incorporating the effects of electrochemical potential. The electrochemical potential acts as a new degree of freedom that can effectively tune DFT results as electrochemistry does. Typically, this is implemented by adjusting the number of electrons on the solid surface within the Kohn-Sham (KS) equation, under the framework of an implicit solvent model and the Poisson-Boltzmann equation (PB equation), thereby modulating the potential difference between the solid and liquid. These simulations are often referred to as grand-canonical or fixed-potential DFT calculations. To apply this additional degree of freedom, Legendre transforms are employed in the calculation of free energy, establishing the relationship between the grand potential and the free energy. Other key physical properties, such as atomic forces, vibrational frequencies, and Stark tuning rates, can be derived based on this relationship rather than directly using Legendre transforms. This paper begins by discussing the numerical methodologies for the continuum model of electrolyte double layers and grand-potential algorithms. We then show that atomic forces under grand-canonical ensemble match the Hellmann-Feynman forces observed in canonical ensemble, as previously established. However, vibrational frequencies and Stark tuning rates exhibit distinct behaviors between these conditions. Through finite displacement methods, we confirm that vibrational frequencies and Stark tuning rates exhibit differences between grand-canonical and canonical ensembles.

With the growing development and deployment of large language models (LLMs)

in both industrial and academic fields, their security and safety concerns have

become increasingly critical. However, recent studies indicate that LLMs face

numerous vulnerabilities, including data poisoning, prompt injections, and

unauthorized data exposure, which conventional methods have struggled to

address fully. In parallel, blockchain technology, known for its data

immutability and decentralized structure, offers a promising foundation for

safeguarding LLMs. In this survey, we aim to comprehensively assess how to

leverage blockchain technology to enhance LLMs' security and safety. Besides,

we propose a new taxonomy of blockchain for large language models (BC4LLMs) to

systematically categorize related works in this emerging field. Our analysis

includes novel frameworks and definitions to delineate security and safety in

the context of BC4LLMs, highlighting potential research directions and

challenges at this intersection. Through this study, we aim to stimulate

targeted advancements in blockchain-integrated LLM security.

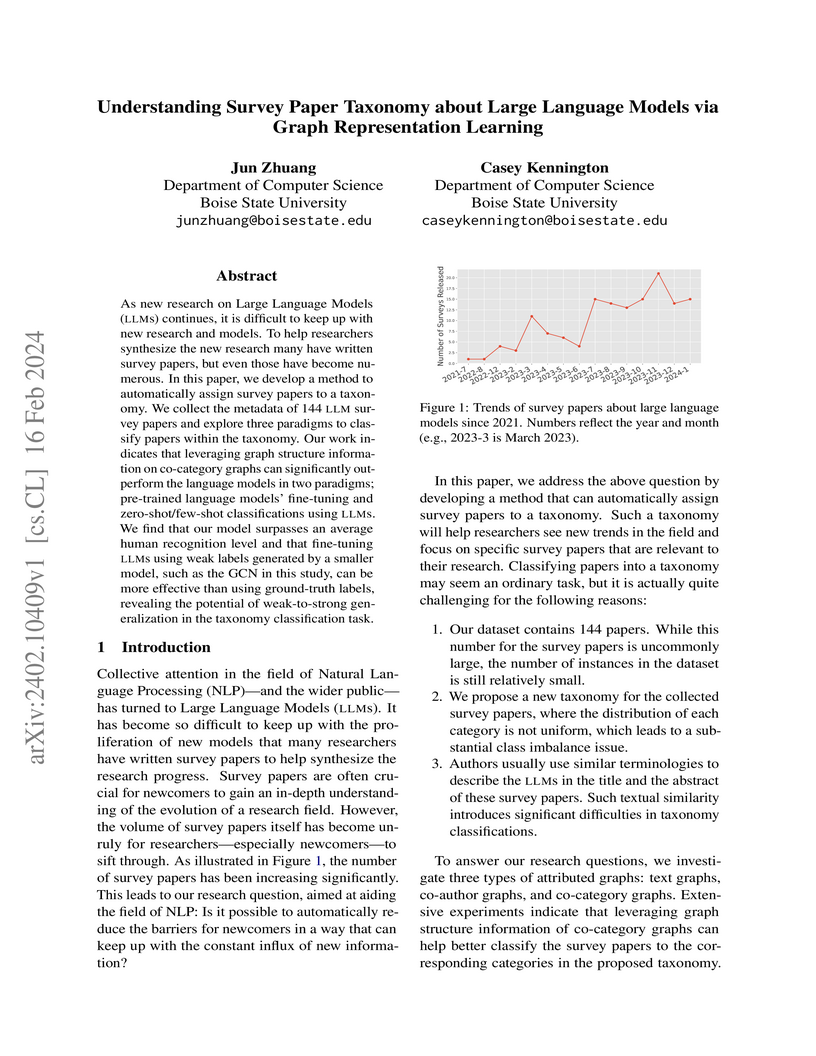

As new research on Large Language Models (LLMs) continues, it is difficult to keep up with new research and models. To help researchers synthesize the new research many have written survey papers, but even those have become numerous. In this paper, we develop a method to automatically assign survey papers to a taxonomy. We collect the metadata of 144 LLM survey papers and explore three paradigms to classify papers within the taxonomy. Our work indicates that leveraging graph structure information on co-category graphs can significantly outperform the language models in two paradigms; pre-trained language models' fine-tuning and zero-shot/few-shot classifications using LLMs. We find that our model surpasses an average human recognition level and that fine-tuning LLMs using weak labels generated by a smaller model, such as the GCN in this study, can be more effective than using ground-truth labels, revealing the potential of weak-to-strong generalization in the taxonomy classification task.

We present Symphony, a compilation of 262 cosmological,

cold-dark-matter-only zoom-in simulations spanning four decades of host halo

mass, from 1011−1015 M⊙. This compilation includes

three existing simulation suites at the cluster and Milky Way−mass scales,

and two new suites: 39 Large Magellanic Cloud-mass

(1011 M⊙) and 49 strong-lens-analog

(1013 M⊙) group-mass hosts. Across the entire host halo

mass range, the highest-resolution regions in these simulations are resolved

with a dark matter particle mass of ≈3×10−7 times the host

virial mass and a Plummer-equivalent gravitational softening length of $\approx

9\times 10^{-4}$ times the host virial radius, on average. We measure

correlations between subhalo abundance and host concentration, formation time,

and maximum subhalo mass, all of which peak at the Milky Way host halo mass

scale. Subhalo abundances are ≈50% higher in clusters than in

lower-mass hosts at fixed sub-to-host halo mass ratios. Subhalo radial

distributions are approximately self-similar as a function of host mass and are

less concentrated than hosts' underlying dark matter distributions. We compare

our results to the semianalytic model Galacticus, which

predicts subhalo mass functions with a higher normalization at the low-mass end

and radial distributions that are slightly more concentrated than Symphony. We

use UniverseMachine to model halo and subhalo star

formation histories in Symphony, and we demonstrate that these predictions

resolve the formation histories of the halos that host nearly all currently

observable satellite galaxies in the universe. To promote open use of Symphony,

data products are publicly available at

this http URL

There are no more papers matching your filters at the moment.