Brock University

01 Sep 2025

Researchers developed TAAROFBENCH, the first open-ended benchmark for Persian *taarof*, revealing that current LLMs exhibit a strong bias towards directness and perform poorly on culturally nuanced interactions. Targeted fine-tuning, especially using Direct Preference Optimization, significantly improved LLM accuracy on *taarof*-expected scenarios, bringing performance close to native human levels.

04 Oct 2025

This paper refines Integrated Information Theory (IIT) 4.0 by formalizing the concept of intrinsic cause-effect power, which requires a system to both provide a repertoire of alternatives and specify a particular state. Researchers from the University of Wisconsin–Madison and Brock University demonstrate that true intrinsic information (ii), and consequently integrated information (φs), emerges from a delicate balance between this intrinsic differentiation and specification, peaking at an intermediate level of determinism.

This research introduces the Zero-shot Importance of Perturbation (ZIP) score, a model-agnostic method to quantify the impact of individual words in instructional prompts for Large Language Models. The technique, which utilizes context-aware word perturbations, achieved 90% accuracy in identifying key words on a custom validation benchmark and revealed a consistent inverse correlation between word importance and model performance.

Sphalerons in nonlinear Klein-Gordon models are unstable lump-like solutions that arise from a saddle point between true and false vacua in the energy functional. Numerical simulations are presented which show the sphaleron evolving into an accelerating kink-antikink pair whose separation approaches the speed of light asymptotically at large times. Utilizing a nonlinear collective coordinate method, an approximate analytical solution is derived for this evolution. These results indicate that an exact solution is expected to exhibit a gradient blow-up for large times,caused by energy concentrating at the flanks of the kink-antikink pair.

29 Aug 2025

As Large Language Models (LLMs) increasingly integrate into everyday workflows, where users shape outcomes through multi-turn collaboration, a critical question emerges: do users with different personality traits systematically prefer certain LLMs over others? We conducted a study with 32 participants evenly distributed across four Keirsey personality types, evaluating their interactions with GPT-4 and Claude 3.5 across four collaborative tasks: data analysis, creative writing, information retrieval, and writing assistance. Results revealed significant personality-driven preferences: Rationals strongly preferred GPT-4, particularly for goal-oriented tasks, while idealists favored Claude 3.5, especially for creative and analytical tasks. Other personality types showed task-dependent preferences. Sentiment analysis of qualitative feedback confirmed these patterns. Notably, aggregate helpfulness ratings were similar across models, showing how personality-based analysis reveals LLM differences that traditional evaluations miss.

This study explores the effectiveness of Large Language Models (LLMs) in creating personalized "mirror stories" that reflect and resonate with individual readers' identities, addressing the significant lack of diversity in literature. We present MirrorStories, a corpus of 1,500 personalized short stories generated by integrating elements such as name, gender, age, ethnicity, reader interest, and story moral. We demonstrate that LLMs can effectively incorporate diverse identity elements into narratives, with human evaluators identifying personalized elements in the stories with high accuracy. Through a comprehensive evaluation involving 26 diverse human judges, we compare the effectiveness of MirrorStories against generic narratives. We find that personalized LLM-generated stories not only outscore generic human-written and LLM-generated ones across all metrics of engagement (with average ratings of 4.22 versus 3.37 on a 5-point scale), but also achieve higher textual diversity while preserving the intended moral. We also provide analyses that include bias assessments and a study on the potential for integrating images into personalized stories.

14 Oct 2025

A spin-1 chain model integrating Kitaev-like and AKLT interactions is introduced, revealing exact solvability at specific parameter points. The model exhibits an extensive ground state degeneracy of 2^N+1 and provides Matrix Product State descriptions that accurately approximate the ground and excited states of the pure spin-1 Kitaev chain.

A new attention mechanism, Tri-Attention, is proposed to explicitly model three-way interactions between query, key, and context. This approach consistently outperforms standard Bi-Attention and contextual Bi-Attention models on retrieval-based dialogue, Chinese sentence semantic matching, and machine reading comprehension tasks.

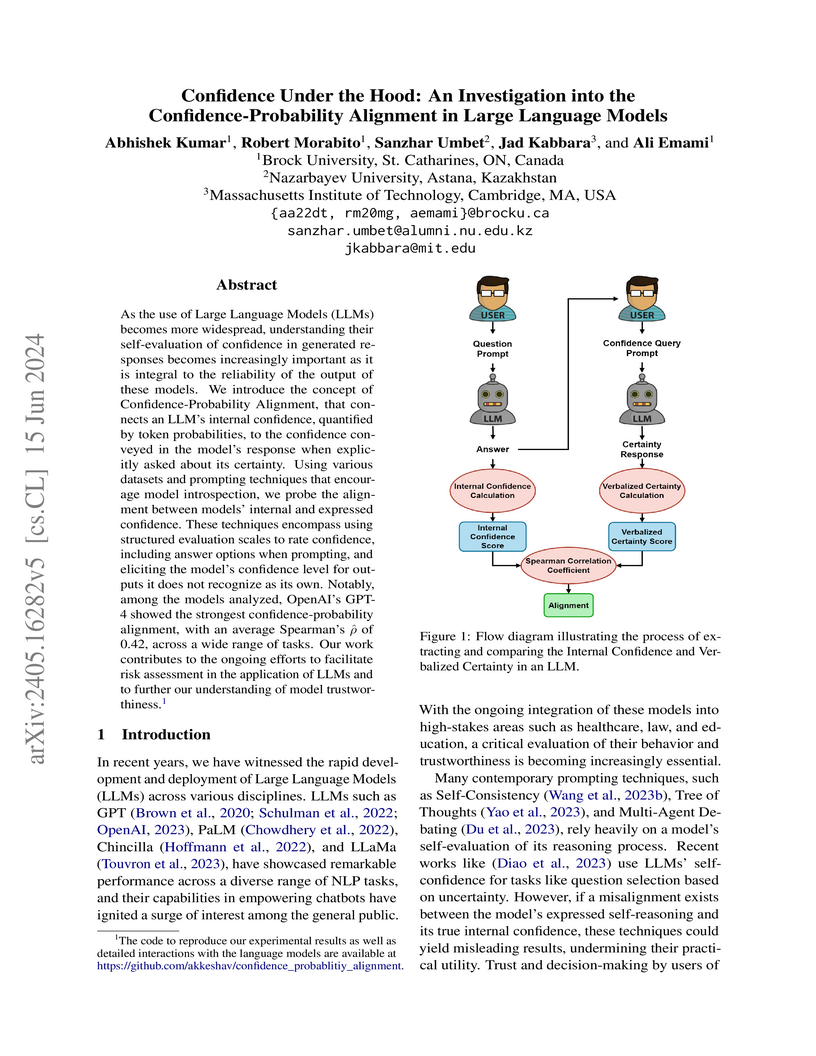

As the use of Large Language Models (LLMs) becomes more widespread, understanding their self-evaluation of confidence in generated responses becomes increasingly important as it is integral to the reliability of the output of these models. We introduce the concept of Confidence-Probability Alignment, that connects an LLM's internal confidence, quantified by token probabilities, to the confidence conveyed in the model's response when explicitly asked about its certainty. Using various datasets and prompting techniques that encourage model introspection, we probe the alignment between models' internal and expressed confidence. These techniques encompass using structured evaluation scales to rate confidence, including answer options when prompting, and eliciting the model's confidence level for outputs it does not recognize as its own. Notably, among the models analyzed, OpenAI's GPT-4 showed the strongest confidence-probability alignment, with an average Spearman's ρ^ of 0.42, across a wide range of tasks. Our work contributes to the ongoing efforts to facilitate risk assessment in the application of LLMs and to further our understanding of model trustworthiness.

This research provides a theory-driven investigation into artificial consciousness, demonstrating that functional equivalence in artificial intelligence does not imply phenomenal equivalence. Using Integrated Information Theory (IIT), the study shows that a digital computer perfectly simulating another system's behavior does not replicate its consciousness; instead, the computer's intrinsic causal structure typically results in fragmented or trivial conscious states.

30 Dec 2022

Integrated Information Theory (IIT) 4.0 presents a refined and expanded mathematical framework that objectively defines and quantifies subjective experience, translating phenomenal properties into physical cause-effect structures.

Weak ergodicity breaking in interacting quantum systems may occur due to the existence of a subspace dynamically decoupled from the rest of the Hilbert space. In two-orbital spinful lattice systems, we construct such subspaces that are in addition distinguished by strongest inter-orbital and spin-singlet or spin-triplet, long-range superconducting pairing correlations. All unconventional pairing types we consider are local in space and unitary. Alternatively to orbitals, the additional degree of freedom could originate from the presence of two layers or through any other mechanism. Required Hamiltonians are rather non-exotic and include chemical potential, Hubbard, and spin-orbit interactions typically used for two-orbital superconducting materials. Each subspace is spanned by a family of group-invariant quantum many-body scars combining both 2e and 4e pairing/clustering contributions. One of the basis states has the form of a BCS wavefunction and can always be made the ground state by adding a mean-field pairing potential. Analytical results in this work are lattice-, dimension- and (mostly) system size-independent. We confirm them by exact numerical diagonalization in small systems.

This research introduces "Trace-of-Thought Prompting," a prompt-based knowledge distillation method that enables low-resource language models to achieve substantial accuracy gains on arithmetic reasoning tasks by leveraging problem decomposition from a teacher model. For instance, Llama 2 Chat 7B showed a 113% relative accuracy gain on the GSM8K dataset with this approach.

20 Jun 2025

As Large Language Models (LLMs) become increasingly integrated into real-world, autonomous applications, relying on static, pre-annotated references for evaluation poses significant challenges in cost, scalability, and completeness. We propose Tool-Augmented LLM Evaluation (TALE), a framework to assess LLM outputs without predetermined ground-truth answers. Unlike conventional metrics that compare to fixed references or depend solely on LLM-as-a-judge knowledge, TALE employs an agent with tool-access capabilities that actively retrieves and synthesizes external evidence. It iteratively generates web queries, collects information, summarizes findings, and refines subsequent searches through reflection. By shifting away from static references, TALE aligns with free-form question-answering tasks common in real-world scenarios. Experimental results on multiple free-form QA benchmarks show that TALE not only outperforms standard reference-based metrics for measuring response accuracy but also achieves substantial to near-perfect agreement with human evaluations. TALE enhances the reliability of LLM evaluations in real-world, dynamic scenarios without relying on static references.

In traffic prediction, the goal is to estimate traffic speed or flow in specific regions or road segments using historical data collected by devices deployed in each area. Each region or road segment can be viewed as an individual client that measures local traffic flow, making Federated Learning (FL) a suitable approach for collaboratively training models without sharing raw data. In centralized FL, a central server collects and aggregates model updates from multiple clients to build a shared model while preserving each client's data privacy. Standard FL methods, such as Federated Averaging (FedAvg), assume that clients are independent, which can limit performance in traffic prediction tasks where spatial relationships between clients are important. Federated Graph Learning methods can capture these dependencies during server-side aggregation, but they often introduce significant computational overhead. In this paper, we propose a lightweight graph-aware FL approach that blends the simplicity of FedAvg with key ideas from graph learning. Rather than training full models, our method applies basic neighbourhood aggregation principles to guide parameter updates, weighting client models based on graph connectivity. This approach captures spatial relationships effectively while remaining computationally efficient. We evaluate our method on two benchmark traffic datasets, METR-LA and PEMS-BAY, and show that it achieves competitive performance compared to standard baselines and recent graph-based federated learning techniques.

Large Language Models (LLMs) have shown impressive performance on various

benchmarks, yet their ability to engage in deliberate reasoning remains

questionable. We present NYT-Connections, a collection of 358 simple word

classification puzzles derived from the New York Times Connections game. This

benchmark is designed to penalize quick, intuitive "System 1" thinking,

isolating fundamental reasoning skills. We evaluated six recent LLMs, a simple

machine learning heuristic, and humans across three configurations:

single-attempt, multiple attempts without hints, and multiple attempts with

contextual hints. Our findings reveal a significant performance gap: even

top-performing LLMs like GPT-4 fall short of human performance by nearly 30%.

Notably, advanced prompting techniques such as Chain-of-Thought and

Self-Consistency show diminishing returns as task difficulty increases.

NYT-Connections uniquely combines linguistic isolation, resistance to intuitive

shortcuts, and regular updates to mitigate data leakage, offering a novel tool

for assessing LLM reasoning capabilities.

19 Mar 2025

Athletic training is characterized by physiological systems responding to

repeated exercise-induced stress, resulting in gradual alterations in the

functional properties of these systems. The adaptive response leading to

improved performance follows a remarkably predictable pattern that may be

described by a systems model provided that training load can be accurately

quantified and that the constants defining the training-performance

relationship are known. While various impulse-response models have been

proposed, they are inherently limited in reducing training stress (the impulse)

into a single metric, assuming that the adaptive responses are independent of

the type of training performed. This is despite ample evidence of markedly

diverse acute and chronic responses to exercise of different intensities and

durations. Herein, we propose an alternative, three-dimensional

impulse-response model that uses three training load metrics as inputs and

three performance metrics as outputs. These metrics, represented by a

three-parameter critical power model, reflect the stress imposed on each of the

three energy systems: the alactic (phosphocreatine/immediate) system; the

lactic (glycolytic) system; and the aerobic (oxidative) system. The purpose of

this article is to outline the scientific rationale and the practical

implementation of the three-dimensional impulse-response model.

This report offers a comprehensive analysis of the evolving landscape of quantum algorithm software specifically tailored for condensed matter physics. It examines fundamental quantum algorithms such as Variational Quantum Eigensolver (VQE), Quantum Phase Estimation (QPE), Quantum Annealing (QA), Quantum Approximate Optimization Algorithm (QAOA), and Quantum Machine Learning (QML) as applied to key condensed matter problems including strongly correlated systems, topological phases, and quantum magnetism. This review details leading software development kits (SDKs) like Qiskit, Cirq, PennyLane, and Q\#, and profiles key academic, commercial, and governmental initiatives driving innovation in this domain. Furthermore, it assesses current challenges, including hardware limitations, algorithmic scalability, and error mitigation, and explores future trajectories, anticipating new algorithmic breakthroughs, software enhancements, and the impact of next-generation quantum hardware. The central theme emphasizes the critical role of a co-design approach, where algorithms, software, and hardware evolve in tandem, and highlights the necessity of standardized benchmarks to accelerate progress towards leveraging quantum computation for transformative discoveries in condensed matter physics.

Healthcare fraud detection remains a critical challenge due to limited availability of labeled data, constantly evolving fraud tactics, and the high dimensionality of medical records. Traditional supervised methods are challenged by extreme label scarcity, while purely unsupervised approaches often fail to capture clinically meaningful anomalies. In this work, we introduce CleverCatch, a knowledge-guided weak supervision model designed to detect fraudulent prescription behaviors with improved accuracy and interpretability. Our approach integrates structured domain expertise into a neural architecture that aligns rules and data samples within a shared embedding space. By training encoders jointly on synthetic data representing both compliance and violation, CleverCatch learns soft rule embeddings that generalize to complex, real-world datasets. This hybrid design enables data-driven learning to be enhanced by domain-informed constraints, bridging the gap between expert heuristics and machine learning. Experiments on the large-scale real-world dataset demonstrate that CleverCatch outperforms four state-of-the-art anomaly detection baselines, yielding average improvements of 1.3\% in AUC and 3.4\% in recall. Our ablation study further highlights the complementary role of expert rules, confirming the adaptability of the framework. The results suggest that embedding expert rules into the learning process not only improves detection accuracy but also increases transparency, offering an interpretable approach for high-stakes domains such as healthcare fraud detection.

Feature selection is a crucial step in machine learning, especially for high-dimensional datasets, where irrelevant and redundant features can degrade model performance and increase computational costs. This paper proposes a novel large-scale multi-objective evolutionary algorithm based on the search space shrinking, termed LMSSS, to tackle the challenges of feature selection particularly as a sparse optimization problem. The method includes a shrinking scheme to reduce dimensionality of the search space by eliminating irrelevant features before the main evolutionary process. This is achieved through a ranking-based filtering method that evaluates features based on their correlation with class labels and frequency in an initial, cost-effective evolutionary process. Additionally, a smart crossover scheme based on voting between parent solutions is introduced, giving higher weight to the parent with better classification accuracy. An intelligent mutation process is also designed to target features prematurely excluded from the population, ensuring they are evaluated in combination with other features. These integrated techniques allow the evolutionary process to explore the search space more efficiently and effectively, addressing the sparse and high-dimensional nature of large-scale feature selection problems. The effectiveness of the proposed algorithm is demonstrated through comprehensive experiments on 15 large-scale datasets, showcasing its potential to identify more accurate feature subsets compared to state-of-the-art large-scale feature selection algorithms. These results highlight LMSSS's capability to improve model performance and computational efficiency, setting a new benchmark in the field.

There are no more papers matching your filters at the moment.