Centre National de la Recherche Scientifique (CNRS)

University of Washington; University of Illinois Chicago; Radboud University; Stony Brook University; University of California, Davis; Washington University in St. Louis; North Carolina State University; University of Wisconsin–Madison; Centre National de la Recherche Scientifique (CNRS); Wesleyan University; Inria centre at Université Côte d’Azur

Web tracking is a pervasive and opaque practice that enables personalized advertising, retargeting, and conversion tracking. Over time, it has evolved into a sophisticated and invasive ecosystem, employing increasingly complex techniques to monitor and profile users across the web. The research community has a long track record of analyzing new web tracking techniques, designing and evaluating the effectiveness of countermeasures, and assessing compliance with privacy regulations. Despite a substantial body of work on web tracking, the literature remains fragmented across distinctly scoped studies, making it difficult to identify overarching trends, connect new but related techniques, and identify research gaps in the field. Today, web tracking is undergoing a once-in-a-generation transformation, driven by fundamental shifts in the advertising industry, the adoption of anti-tracking countermeasures by browsers, and the growing enforcement of emerging privacy regulations. This Systematization of Knowledge (SoK) aims to consolidate and synthesize this wide-ranging research, offering a comprehensive overview of the technical mechanisms, countermeasures, and regulations that shape the modern and rapidly evolving web tracking landscape. This SoK also highlights open challenges and outlines directions for future research, aiming to serve as a unified reference and introductory material for researchers, practitioners, and policymakers alike.

We study the $(w_0, w_a)$ parametrization of the dark energy (DE) equation of state, with and without the effective field theory of dark energy (EFTofDE) framework to describe the DE perturbations, parametrized here by the braiding parameter $\alpha_B$ and the running of the Planck mass $\alpha_M$. We combine the EFTofLSS full-shape analysis of the power spectrum and bispectrum of BOSS data with the tomographic angular power spectra $C_\ell^{gg}$, $C_\ell^{\kappa g}$, $C_\ell^{Tg}$ and $C_\ell^{T\kappa}$, where $g$, $\kappa$ and $T$ stand for the DESI luminous red galaxy map, the Planck PR4 lensing map and the Planck PR4 temperature map, respectively. To analyze these angular power spectra, we go beyond the Limber approximation, which allows us to include large-scale data in $C_\ell^{gg}$. Combining all these probes with Planck PR4, DESI DR2 BAO and DES Y5 improves the constraint on the 2D posterior distribution of $\{w_0, w_a\}$ by ∼50% and increases the preference for evolving dark energy over Λ from 3.8σ to 4.6σ. When we remove the BAO and supernova data, we obtain a 2.3σ hint of evolving dark energy. Regarding the EFTofDE parameters, we improve the constraints on $\alpha_B$ and $\alpha_M$ by ∼40% and ∼50%, respectively, finding results compatible with general relativity at ∼2σ. We show that these constraints do not depend on the choice of BAO and supernova likelihoods.
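A minimal sketch of the $(w_0, w_a)$ parametrization, assuming the standard Chevallier–Polarski–Linder form $w(a) = w_0 + w_a(1-a)$ with $a = 1/(1+z)$. Integrating the continuity equation then gives $\rho_{\rm DE}(a)/\rho_{\rm DE,0} = a^{-3(1+w_0+w_a)}\exp[-3w_a(1-a)]$. The function names and the parameter values in the demo are illustrative only and are not the constraints reported above.

```python
import math


def w_cpl(a: float, w0: float, wa: float) -> float:
    """CPL equation of state w(a) = w0 + wa * (1 - a), with scale factor a = 1 / (1 + z)."""
    return w0 + wa * (1.0 - a)


def rho_de_ratio(a: float, w0: float, wa: float) -> float:
    """rho_DE(a) / rho_DE(today), from integrating the DE continuity equation for CPL."""
    return a ** (-3.0 * (1.0 + w0 + wa)) * math.exp(-3.0 * wa * (1.0 - a))


if __name__ == "__main__":
    # Illustrative parameter values only, not the constraints quoted in the abstract.
    w0, wa = -0.8, -0.7
    for z in (0.0, 0.5, 1.0, 2.0):
        a = 1.0 / (1.0 + z)
        print(f"z={z:.1f}  w={w_cpl(a, w0, wa):+.3f}  rho/rho0={rho_de_ratio(a, w0, wa):.3f}")
```

Setting $w_0 = -1$, $w_a = 0$ recovers a cosmological constant, for which the density ratio stays at 1.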

Artificial intelligence (AI) has rapidly evolved into a critical technology; however, electrical hardware struggles to keep pace with the exponential growth of AI models. Free-space optical hardware offers alternative approaches to large-scale optical processing and in-memory computing, with applications across diverse machine learning tasks. Here, we explore broadband light scattering in free-space optical components, specifically complex media, which generate uncorrelated optical features at each wavelength. By treating individual wavelengths as independent predictors, we demonstrate improved classification accuracy through in-silico majority voting, along with the ability to estimate uncertainty without access to the model's probability outputs. We further demonstrate that linearly combining multiwavelength features, akin to spectral shaping, lets us tune the output features to improve performance on classification tasks, potentially eliminating the need for multiple digital post-processing steps. These findings illustrate the spectral-multiplexing, or broadband, advantage for free-space optical computing.
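Majority voting over wavelengths can be sketched as follows, assuming each wavelength channel already yields a hard class label (for instance from its own readout, which is not shown). The disagreement fraction serves as an uncertainty estimate that needs no probability outputs; it is one simple choice and not necessarily the measure used by the authors.

```python
from collections import Counter
from typing import Sequence, Tuple


def majority_vote(predictions: Sequence[int]) -> Tuple[int, float]:
    """Combine per-wavelength class predictions by majority vote.

    Returns the winning label and a disagreement score: the fraction of
    wavelengths that voted for a different label (0.0 means unanimous).
    Ties go to the smallest label, an arbitrary convention.
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    counts = Counter(predictions)
    top = max(counts.values())
    label = min(k for k, v in counts.items() if v == top)
    return label, 1.0 - top / len(predictions)


if __name__ == "__main__":
    # Hypothetical predictions from five wavelength channels for one test image.
    print(majority_vote([3, 3, 7, 3, 1]))
```

Here three of five channels agree on class 3, so the vote returns 3 with a disagreement of 0.4; a unanimous vote would report 0.0.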

This paper presents Ψ-GNN, a novel Graph Neural Network (GNN) approach for solving the ubiquitous Poisson PDE problems with mixed boundary conditions. By leveraging Implicit Layer Theory, Ψ-GNN models an "infinitely" deep network, thus avoiding empirical tuning of the number of Message Passing layers required to attain the solution. Its original architecture explicitly takes into account the boundary conditions, a critical prerequisite for physical applications, and can adapt to any initially provided solution. Ψ-GNN is trained using a "physics-informed" loss, and the training process is stable by design and insensitive to initialization. Furthermore, the consistency of the approach is proven theoretically, and its flexibility and generalization efficiency are demonstrated experimentally: the same learned model can accurately handle unstructured meshes of various sizes as well as different boundary conditions. To the best of our knowledge, Ψ-GNN is the first physics-informed GNN-based method that can handle various unstructured domains, boundary conditions and initial solutions while also providing convergence guarantees.
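The idea of an "infinitely deep" network can be illustrated by iterating one message-passing update until it reaches a fixed point, instead of stacking a preset number of layers. In this sketch the update is a hand-written Jacobi step for the graph-Laplacian Poisson problem with Dirichlet nodes, standing in for Ψ-GNN's learned update; the function names and the toy chain graph are hypothetical.

```python
import numpy as np


def fixed_point_poisson(adj, f, is_dirichlet, g, h0=None, tol=1e-10, max_iter=100_000):
    """Iterate a message-passing update until it reaches a fixed point.

    Solves the graph-Laplacian problem (D - A) h = f at free nodes, with h = g
    imposed at Dirichlet nodes. The number of iterations is not fixed in advance:
    the loop stops when the update no longer changes h.
    """
    adj = np.asarray(adj, dtype=float)
    f = np.asarray(f, dtype=float)
    g = np.asarray(g, dtype=float)
    is_dirichlet = np.asarray(is_dirichlet, dtype=bool)
    deg = adj.sum(axis=1)  # assumes no isolated nodes
    h = np.zeros_like(f) if h0 is None else np.array(h0, dtype=float)
    h[is_dirichlet] = g[is_dirichlet]
    for it in range(1, max_iter + 1):
        h_new = (f + adj @ h) / deg                # each node combines its neighbours' messages
        h_new[is_dirichlet] = g[is_dirichlet]      # boundary condition enforced at every step
        if np.max(np.abs(h_new - h)) < tol:
            return h_new, it
        h = h_new
    raise RuntimeError("fixed-point iteration did not converge")


def path_graph(n):
    """Adjacency matrix of a 1D chain of n nodes (a toy stand-in for a mesh)."""
    adj = np.zeros((n, n))
    for i in range(n - 1):
        adj[i, i + 1] = adj[i + 1, i] = 1.0
    return adj


if __name__ == "__main__":
    mask = np.array([True, False, False, False, True])
    g = np.array([0.0, 0.0, 0.0, 0.0, 1.0])
    h, iters = fixed_point_poisson(path_graph(5), np.zeros(5), mask, g)
    print(h, iters)
```

Starting from a different initial guess `h0` reaches the same solution, which mirrors, in this toy setting, the ability to start from any initially provided solution.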

In our digital and connected societies, the development of social networks, online shopping, and reputation systems raises the question of how individuals use social information and how it affects their decisions. We report experiments performed in France and Japan, in which subjects could update their estimates after receiving information from other subjects. We measure and model the impact of this social information at individual and collective scales. We observe and justify that when individuals have little prior knowledge about a quantity, the distribution of the logarithm of their estimates is close to a Cauchy distribution. We find that social influence helps the group improve its properly defined collective accuracy. We quantify the improvement of the group estimate when additional controlled and reliable information is provided, unbeknownst to the subjects. We show that subjects' sensitivity to social influence allows us to define five robust behavioral traits, and that it increases with the difference between personal and group estimates. We then use our data to build and calibrate a model of collective estimation, to analyze how the quantity and quality of information received by individuals affect group performance. The model quantitatively reproduces the distributions of estimates and the improvement of collective performance and accuracy observed in our experiments. Finally, our model predicts that providing a moderate amount of incorrect information to individuals can counterbalance the human cognitive bias to systematically underestimate quantities, and thereby improve collective performance.
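A toy sketch of the individual update and of why the median is the natural group summary here. It assumes a linear update of log-estimates, x' = (1 − S)x + S·M, with sensitivity S and social information M; this is a common modelling choice, not necessarily the calibrated model of the paper. Because a Cauchy distribution has no mean, the group estimate is taken as the median. All numbers are hypothetical.

```python
import math
import random
import statistics


def social_update(x: float, m: float, s: float) -> float:
    """Linear social-influence update of a log-estimate.

    x: individual's log-estimate, m: log of the social information received,
    s: sensitivity to social influence (0 = ignore it, 1 = adopt it fully).
    """
    if not 0.0 <= s <= 1.0:
        raise ValueError("sensitivity must lie in [0, 1]")
    return (1.0 - s) * x + s * m


def sample_cauchy(n: int, center: float, scale: float, rng: random.Random) -> list:
    """Draw n Cauchy variates by inverse-CDF sampling."""
    return [center + scale * math.tan(math.pi * (rng.random() - 0.5)) for _ in range(n)]


if __name__ == "__main__":
    rng = random.Random(42)
    truth = 6.0                                        # hypothetical log10 of the true quantity
    logs = sample_cauchy(1001, truth - 0.5, 0.4, rng)  # centred below truth: underestimation
    hint = truth + 0.3                                 # deliberately overestimated information
    updated = [social_update(x, hint, 0.3) for x in logs]
    print("median error before:", abs(statistics.median(logs) - truth))
    print("median error after: ", abs(statistics.median(updated) - truth))
```

Since the update is an increasing affine map, the group median moves exactly to (1 − S)·median + S·M, so information that overshoots the truth can offset a group that underestimates, qualitatively in line with the prediction above.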

National University of Singapore; Nanyang Technological University; Université Paris-Saclay; Sorbonne Université; Université Côte d’Azur; Centre for Quantum Technologies; University of Liège; Centre National de la Recherche Scientifique (CNRS); Northumbria University; MajuLab; Universidad Nacional de Mar del Plata; Instituto de Investigaciones Físicas de Mar del Plata (IFIMAR); Consejo Nacional de Investigaciones Científicas y Tecnológicas (CONICET); Université de Toulouse

The Anderson transition in random graphs has attracted great interest, partly because of its analogy with the many-body localization (MBL) transition. Unlike the latter, many results for random graphs are now well established, in particular the existence and precise value of a critical disorder separating a localized phase from an ergodic delocalized phase. However, the renormalization group flow and the nature of the transition are not well understood. Meanwhile, recent works on the MBL transition have made the remarkable prediction that the flow is of Kosterlitz–Thouless type. Here we show that the Anderson transition on graphs displays the same type of flow. Our work attests to the importance of rare branches along which wave functions have a much larger localization length $\xi_\parallel$ than in the transverse direction, $\xi_\perp$. Importantly, these two lengths have different critical behaviors: $\xi_\parallel$ diverges with a critical exponent $\nu_\parallel = 1$, while $\xi_\perp$ reaches a finite universal value $\xi_\perp^c$ at the transition point $W_c$. Indeed, $\xi_\perp^{-1} \approx (\xi_\perp^c)^{-1} + \xi^{-1}$, with $\xi \sim (W - W_c)^{-\nu_\perp}$ associated with a new critical exponent $\nu_\perp = 1/2$, where $\exp(\xi)$ controls finite-size effects. The delocalized phase inherits the strongly non-ergodic properties of the critical regime at short scales, but is ergodic at large scales, with a unique critical exponent $\nu = 1/2$. This shows a very strong analogy with the MBL transition: the behavior of $\xi_\perp$ is identical to that recently predicted for the typical localization length of MBL in a phenomenological renormalization group flow. We demonstrate these properties for a small-world complex network model and show the universality of our results by considering different network parameters and different key observables of Anderson localization.

21 Jan 2014

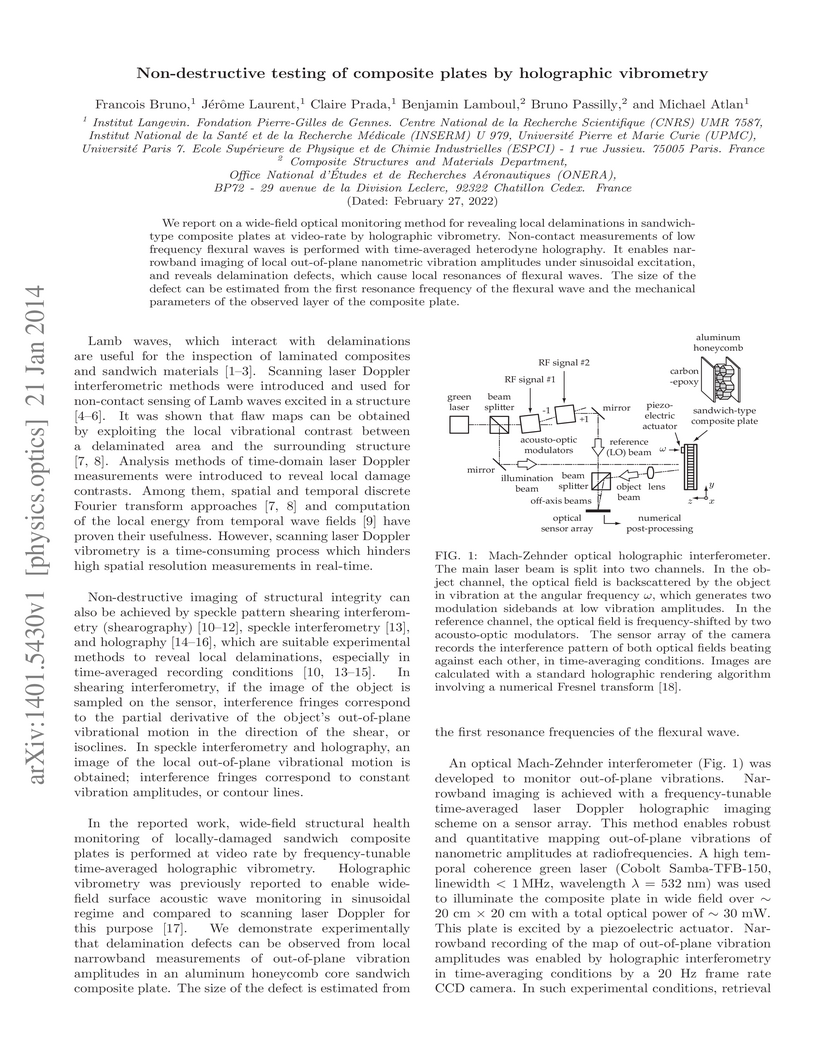

We report on a wide-field optical monitoring method for revealing local delaminations in sandwich-type composite plates at video rate by holographic vibrometry. Non-contact measurements of low-frequency flexural waves are performed with time-averaged heterodyne holography. This enables narrowband imaging of local out-of-plane nanometric vibration amplitudes under sinusoidal excitation and reveals delamination defects, which cause local resonances of flexural waves. The size of a defect can be estimated from the first resonance frequency of the flexural wave and the mechanical parameters of the observed layer of the composite plate.
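The size estimate can be sketched by modelling a delaminated region as a clamped circular plate made of the observed layer, an assumption made here for illustration (real composite skins are usually anisotropic, and the paper's exact model is not given above). The fundamental frequency is then f₁ = λ²/(2πa²)·√(D/(ρh)) with λ² ≈ 10.2158 and D = Eh³/(12(1 − ν²)), which can be inverted for the radius a. Material values in the demo are placeholders.

```python
import math

# Eigenvalue lambda^2 of the fundamental (0,1) mode of a clamped circular plate.
LAMBDA_01_SQ = 10.2158


def flexural_rigidity(E: float, h: float, nu: float) -> float:
    """Bending stiffness D = E h^3 / (12 (1 - nu^2)) of an isotropic plate layer."""
    return E * h ** 3 / (12.0 * (1.0 - nu ** 2))


def first_resonance(radius: float, E: float, h: float, nu: float, rho: float) -> float:
    """First resonance frequency (Hz) of a clamped circular plate of the given radius (m)."""
    D = flexural_rigidity(E, h, nu)
    return LAMBDA_01_SQ / (2.0 * math.pi * radius ** 2) * math.sqrt(D / (rho * h))


def defect_radius(f1: float, E: float, h: float, nu: float, rho: float) -> float:
    """Invert first_resonance: radius (m) of a delamination resonating at f1 (Hz)."""
    D = flexural_rigidity(E, h, nu)
    return math.sqrt(LAMBDA_01_SQ / (2.0 * math.pi * f1) * math.sqrt(D / (rho * h)))


if __name__ == "__main__":
    # Hypothetical skin properties (illustrative values, not from the paper).
    E, h, nu, rho = 50e9, 1e-3, 0.3, 1600.0
    f1 = 5_000.0
    print(f"estimated defect radius: {defect_radius(f1, E, h, nu, rho) * 1e3:.1f} mm")
```

Because f₁ scales as 1/a², a defect twice as wide resonates four times lower, which is why the first resonance is a sensitive size indicator.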

While the use of social robots for language teaching has been explored, there remains limited work on task-specific synthesized voices for language-teaching robots. Given that language is a verbal task, this gap may have severe consequences for the effectiveness of robots in language-teaching tasks. We address this lack of L2 teaching robot voices through three contributions: 1. We address the need for a lightweight and expressive robot voice. Using a fine-tuned version of Matcha-TTS, we use emoji prompting to create an expressive voice that shows a range of expressivity over time. The voice can run in real time with limited compute resources. Through case studies, we found this voice to be more expressive, more socially appropriate, and better suited to long periods of expressive speech, such as storytelling. 2. We explore how to adapt a robot's voice to physical and social ambient environments in order to deploy our voices in various locations. We found that increasing pitch and pitch rate in noisy and high-energy environments makes the robot's voice appear more appropriate and makes the robot seem more aware of its current environment. 3. We create an English TTS system with improved clarity for L2 listeners using known linguistic properties of vowels that are difficult for these listeners. We used a data-driven, perception-based approach to understand how L2 speakers use duration cues to interpret challenging minimal pairs of English words with tense (long) and lax (short) vowels. We found that vowel duration strongly influences perception by L2 listeners, and created an "L2 clarity mode" for Matcha-TTS that lengthens tense vowels while leaving lax vowels unchanged. Our clarity mode was found to be more respectful, intelligible, and encouraging than base Matcha-TTS, while reducing transcription errors on these challenging tense/lax minimal pairs.
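The "L2 clarity mode" can be sketched as a post-processing step on predicted phone durations, assuming access to a (phone, duration-in-frames) sequence such as a TTS duration predictor produces. This does not use Matcha-TTS's actual API; the ARPAbet tense-vowel set and the lengthening factor are hypothetical placeholders.

```python
from typing import List, Tuple

# Hypothetical ARPAbet tense-vowel set; lax partners such as IH, UH and EH are left untouched.
TENSE_VOWELS = {"IY", "UW", "EY", "OW"}


def l2_clarity_durations(phones: List[Tuple[str, int]], factor: float = 1.3) -> List[Tuple[str, int]]:
    """Lengthen tense vowels in a (phone, duration-in-frames) sequence.

    The factor 1.3 is a placeholder, not the value used by the clarity mode described above.
    """
    out = []
    for phone, frames in phones:
        base = phone.rstrip("012")  # strip ARPAbet stress digits, e.g. "IY1" -> "IY"
        if base in TENSE_VOWELS:
            frames = max(frames, round(frames * factor))
        out.append((phone, frames))
    return out


if __name__ == "__main__":
    beat = [("B", 4), ("IY1", 10), ("T", 5)]   # tense vowel
    bit = [("B", 4), ("IH1", 10), ("T", 5)]    # lax vowel
    print(l2_clarity_durations(beat))
    print(l2_clarity_durations(bit))
```

Only the tense vowel in "beat" is stretched, which widens the duration contrast with "bit" that L2 listeners were found to rely on.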

We report on local superficial blood flow monitoring in biological tissue by laser Doppler holographic imaging. Under time-averaged recording conditions, holography acts as a narrowband bandpass filter which, combined with a frequency-shifted reference beam, permits frequency-selective imaging in the radiofrequency range. These Doppler images are acquired with an off-axis Mach–Zehnder interferometer. Microvascular hemodynamic components are mapped in the cerebral cortex of the mouse and the eye fundus of the rat with near-infrared laser light, without any exogenous marker. These measurements rely on a basic inverse-method analysis of local first-order optical fluctuation spectra at low radiofrequencies, from 0 Hz to 100 kHz. The local quadratic velocity is derived from Doppler broadenings induced by fluid flows, using the elementary diffusing wave spectroscopy formalism in backscattering configuration. We demonstrate quadratic mean velocity assessment in the 0.1 to 10 millimeters per second range in vitro, and imaging of superficial blood perfusion with a spatial resolution of about 10 micrometers in rodent models of cortical and retinal blood flow.
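Frequency-selective imaging can be sketched in its digital form, assuming a stack of reconstructed holograms sampled in time: the per-pixel temporal power spectrum is integrated over a chosen band (zeroth moment, often called power Doppler), and the first moment gives a power-weighted mean frequency that increases with Doppler broadening. Band limits and sampling rate are placeholders, and the diffusing-wave-spectroscopy conversion to velocity described above is not implemented.

```python
import numpy as np


def power_doppler(frames: np.ndarray, fs: float, f_lo: float, f_hi: float):
    """Frequency-selective maps from a stack of reconstructed images.

    frames: array (T, H, W) sampled at fs Hz (real intensities or complex fields).
    Returns (m0, mean_freq): the temporal power integrated over f_lo <= |f| <= f_hi,
    and the power-weighted mean |f| in that band.
    """
    frames = np.asarray(frames)
    fluct = frames - frames.mean(axis=0)                 # remove the static (DC) component
    spec = np.abs(np.fft.fft(fluct, axis=0)) ** 2        # per-pixel power spectrum
    freqs = np.abs(np.fft.fftfreq(frames.shape[0], d=1.0 / fs))
    band = (freqs >= f_lo) & (freqs <= f_hi)
    m0 = spec[band].sum(axis=0)
    m1 = (freqs[band][:, None, None] * spec[band]).sum(axis=0)
    mean_freq = np.divide(m1, m0, out=np.zeros_like(m0), where=m0 > 0)
    return m0, mean_freq


if __name__ == "__main__":
    fs = 100e3                                   # hypothetical frame rate (Hz)
    t = np.arange(1000) / fs
    frames = np.zeros((1000, 1, 2))
    frames[:, 0, 0] = np.sin(2 * np.pi * 5e3 * t)    # pixel fluctuating inside the band
    frames[:, 0, 1] = np.sin(2 * np.pi * 30e3 * t)   # pixel fluctuating outside the band
    m0, mean_freq = power_doppler(frames, fs, 1e3, 10e3)
    print(m0, mean_freq)
```

Only the pixel whose fluctuations fall inside the selected band lights up in the power map, which is the digital counterpart of the narrowband filtering described above.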

Much effort has been devoted over the last two decades to characterizing the situations in which a reservoir computing system exhibits the so-called echo state (ESP) and fading memory (FMP) properties. In mathematical terms, these important features amount to the existence and continuity of global reservoir system solutions. This paper complements that research by characterizing the differentiability of reservoir filters for very general classes of discrete-time deterministic inputs. This constitutes a novel contribution to the long line of research on the ESP and the FMP and, in particular, links to existing research on the input dependence of the ESP. Differentiability has been shown in the literature to be a key feature in learning attractors of chaotic dynamical systems. A Volterra-type series representation for reservoir filters with semi-infinite discrete-time inputs is constructed in the analytic case using Taylor's theorem, and corresponding approximation bounds are provided. Finally, it is shown as a corollary of these results that any fading memory filter can be uniformly approximated by a finite Volterra series with finite memory.
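A standard sufficient condition for the echo state property can be checked numerically: with a tanh reservoir, whose activation is 1-Lipschitz, an operator 2-norm ‖W‖₂ < 1 makes the state update a contraction for every input, so two different initial states driven by the same input converge. The sketch below is a generic echo state network, not the filters analysed in the paper; sizes, seeds and the norm 0.9 are arbitrary.

```python
import numpy as np


def make_reservoir(n: int, n_in: int, norm: float = 0.9, seed: int = 0):
    """Random reservoir whose recurrent matrix has operator 2-norm equal to `norm`."""
    rng = np.random.default_rng(seed)
    W = rng.standard_normal((n, n))
    W *= norm / np.linalg.norm(W, 2)        # largest singular value becomes `norm`
    W_in = rng.standard_normal((n, n_in))
    return W, W_in


def run_reservoir(W, W_in, inputs, x0):
    """Drive x_{t+1} = tanh(W x_t + W_in u_t) and return the final state."""
    x = np.asarray(x0, dtype=float)
    for u in inputs:
        x = np.tanh(W @ x + W_in @ np.atleast_1d(u))
    return x


if __name__ == "__main__":
    W, W_in = make_reservoir(50, 1)
    u = np.random.default_rng(1).uniform(-1, 1, size=200)
    xa = run_reservoir(W, W_in, u, np.zeros(50))
    xb = run_reservoir(W, W_in, u, np.ones(50))
    print(np.linalg.norm(xa - xb))   # bounded by 0.9**200 times the initial distance
```

The condition is sufficient, not necessary: reservoirs with larger norms can still have the ESP for restricted input classes, which is where input-dependent analyses such as the one above come in.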

29 Sep 2025

Direct numerical simulations of interfacial flows with surfactant-induced complexities involving surface viscous stresses are performed within the framework of the Level Contour Reconstruction Method (LCRM), a hybrid front-tracking/level-set approach that leverages the advantages of both methods. In addition to interface-confined surfactant transport, which gives rise to surface diffusion and Marangoni stresses, the interface is endowed with shear and dilatational viscosities; these resist, respectively, deformation arising from velocity gradients in the plane of the two-dimensional interfacial manifold and interfacial compressibility effects. Adopting the Boussinesq–Scriven constitutive model, we provide a mathematical formulation of these effects that accurately captures the interfacial mechanics, and implement it within the LCRM-based code by exploiting the benefits inherent to the underlying front-tracking/level-set hybrid approach. We validate our numerical predictions against a number of benchmark cases involving drops that deform when subjected to a flow field or when rising under the action of buoyancy. The results of these validation studies highlight the importance of a rigorous approach to modelling interfacial dynamics. We also present results that demonstrate the effects of surface viscous stresses on interfacial deformation in unsteady parametric surface waves and atomisation events.
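For reference, the Boussinesq–Scriven surface stress tensor is commonly written in the form below, where σ is the (surfactant-dependent) surface tension, μ_s and κ_s are the surface shear and dilatational viscosities, u_s is the interfacial velocity, n is the unit normal, P_s is the surface projection tensor and ∇_s the surface gradient. The notation is ours and may differ from the paper's; the force exerted by the interface is the surface divergence ∇_s·τ_s, whose tangential part includes the Marangoni stress ∇_sσ.

$$
\boldsymbol{\tau}_s = \left[\sigma + (\kappa_s - \mu_s)\,\nabla_s\cdot\mathbf{u}_s\right]\mathbf{P}_s
+ \mu_s\left[(\nabla_s\mathbf{u}_s)\cdot\mathbf{P}_s + \mathbf{P}_s\cdot(\nabla_s\mathbf{u}_s)^{\mathsf{T}}\right],
\qquad \mathbf{P}_s = \mathbf{I} - \mathbf{n}\mathbf{n}, \quad \nabla_s = \mathbf{P}_s\cdot\nabla .
$$

Setting μ_s = κ_s = 0 recovers a purely tension-driven interface with τ_s = σP_s.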

05 Jun 2025

This research proposes a novel shelled morphing structure inspired by the movement of pillbugs. In place of the pillbug body, a loop-coupled mechanism based on slider-crank mechanisms is used to achieve the rolling-up and spreading motions. This mechanism precisely imitates three distinct curves that reproduce the shape morphing of a pillbug. Scissor mechanisms are added to reduce the mechanism's degrees of freedom (DOF) to one. 3D curved shells are then attached to the tracer points of the morphing mechanism to protect it from attacks while allowing it to roll. Through type and dimensional synthesis, a complete system comprising the shells and the underlying morphing mechanism is developed. A 3D model is created and tested to demonstrate the shape-changing capability of the proposed system. Lastly, a two-mode robot based on the proposed mechanism is developed; it can curl up to roll down hills and spread out to move in a straight line on wheels.
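Mobility counting of the kind needed to bring a mechanism down to one DOF can be sketched with the planar Grübler–Kutzbach formula M = 3(n − 1) − 2j₁ − j₂, together with the closed-form position of an in-line slider-crank. This is generic mechanism kinematics, not the paper's synthesis; the count ignores special geometry, so it can misjudge overconstrained linkages.

```python
import math


def planar_mobility(n_links: int, lower_pairs: int, higher_pairs: int = 0) -> int:
    """Grübler–Kutzbach mobility of a planar linkage (the ground counts as a link)."""
    return 3 * (n_links - 1) - 2 * lower_pairs - higher_pairs


def slider_position(theta: float, crank: float, coupler: float) -> float:
    """Slider displacement of an in-line slider-crank for crank angle theta (rad)."""
    if coupler < crank:
        raise ValueError("coupler must be at least as long as the crank")
    return crank * math.cos(theta) + math.sqrt(coupler ** 2 - (crank * math.sin(theta)) ** 2)


if __name__ == "__main__":
    # One slider-crank: ground, crank, coupler, slider; three revolute joints + one prismatic.
    print(planar_mobility(4, 4))   # 1
    # Two independent slider-cranks sharing the ground: 7 links, 8 lower pairs.
    print(planar_mobility(7, 8))   # 2
```

Two slider-cranks on a common ground have mobility 2, so at least one additional independent coupling constraint is needed to reach the single DOF targeted above.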

Digital hologram rendering can be performed by a convolutional neural network trained with image pairs calculated by numerical wave propagation from sparse generating images. Digital Gabor magnitude holograms of 512-by-512 pixels are successfully estimated from experimental interferograms by a standard UNet trained with 50,000 synthetic image pairs over 70 epochs.
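Synthetic image pairs of the kind described above can be sketched with angular-spectrum propagation of a sparse image of point sources. The wavelength, pixel pitch and propagation distance are placeholders, and which image serves as network input or target (and whether a reference wave is added, as in an in-line Gabor setup) is left open, since those details are not given here.

```python
import numpy as np


def angular_spectrum(field: np.ndarray, wavelength: float, dx: float, z: float) -> np.ndarray:
    """Propagate a sampled complex field by distance z with the angular spectrum method."""
    ny, nx = field.shape
    FX, FY = np.meshgrid(np.fft.fftfreq(nx, d=dx), np.fft.fftfreq(ny, d=dx))
    arg = 1.0 / wavelength ** 2 - FX ** 2 - FY ** 2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * z), 0.0)   # evanescent components dropped
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def synthetic_pair(n=128, n_points=30, wavelength=532e-9, dx=5e-6, z=5e-3, seed=0):
    """Sparse generating image and the magnitude of its numerically propagated field."""
    rng = np.random.default_rng(seed)
    obj = np.zeros((n, n))
    rows, cols = rng.integers(0, n, size=(2, n_points))
    obj[rows, cols] = 1.0
    propagated = angular_spectrum(obj.astype(complex), wavelength, dx, z)
    return obj, np.abs(propagated)


if __name__ == "__main__":
    obj, mag = synthetic_pair()
    print(obj.shape, mag.shape, mag.max())
```

Repeating this with different seeds yields as many pairs as needed; the abstract's 512-by-512, 50,000-pair training set would follow the same pattern at larger scale.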

Tohoku University; University of Toronto; Academia Sinica; University of Amsterdam; California Institute of Technology; University of Illinois at Urbana-Champaign; University of Waterloo; Harvard University; National Astronomical Observatory of Japan; Chinese Academy of Sciences; University of Chicago; UC Berkeley; Shanghai Jiao Tong University; Nanjing University; Cornell University; INFN; Peking University; McGill University; CSIC; Northwestern University; Università di Napoli Federico II; Korea Astronomy and Space Science Institute; National Taiwan Normal University; Institute for Advanced Study; University of Southern Queensland; University of Arizona; Perimeter Institute for Theoretical Physics; University of Massachusetts Amherst; Fermi National Accelerator Laboratory; MIT; Princeton University; European Southern Observatory (ESO); Purple Mountain Observatory; Chalmers University of Technology; Lomonosov Moscow State University; Yunnan Observatories; National Radio Astronomy Observatory; INAF; National Sun Yat-sen University; Princeton Plasma Physics Laboratory; National Tsing-Hua University; National Center for Theoretical Sciences; University of Malaya; Harvard-Smithsonian Center for Astrophysics; Max Planck Institute for Gravitational Physics (Albert Einstein Institute); Joint Institute for VLBI ERIC; University of Calgary; Centre National de la Recherche Scientifique (CNRS); East Asian Observatory; Shanghai Astronomical Observatory; The Graduate University for Advanced Studies; University of Science and Technology; Delta Institute for Theoretical Physics; Institut de Radioastronomie Millimetrique; South African Radio Astronomy Observatory; Universitat de Valencia; Max Planck Institute for Radio Astronomy; Max Planck Institute for Astronomy; Instituto de Astrofísica de Andalucía; Radboud University Nijmegen; Kagoshima University; UNAM; Eberhard Karls University of Tübingen; Canadian Institute for Theoretical Astrophysics; Joint ALMA Observatory; Villanova University; Leibniz Institute for Astrophysics Potsdam; Dunlap Institute for Astronomy and Astrophysics; European Space Agency (ESA); CONACyT; University of Witwatersrand; Leiden Observatory; Frankfurt Institute for Advanced Studies (FIAS); Laboratoire de Physique et Chimie de l’Environnement et de l’Espace; National Optical-Infrared Astronomy Research Laboratory (NOIRLab); Omnisys Instruments AB; Instituto Nacional de Astrofísica, Óptica y Electrónica; Goethe-University, Frankfurt; Universidad de Concepción

Recent developments in very long baseline interferometry (VLBI) have made it possible for the Event Horizon Telescope (EHT) to resolve the innermost accretion flows of the largest supermassive black holes on the sky. The sparse nature of the EHT's (u,v)-coverage presents a challenge when attempting to resolve highly time-variable sources. We demonstrate that the changing (u,v)-coverage of the EHT can contain time windows, within a single observation, that facilitate dynamical imaging. These optimal time windows typically have projected baseline distributions that are approximately angularly isotropic and radially homogeneous. We derive a metric of coverage quality based on baseline isotropy and density that can rank array configurations by their ability to produce accurate dynamical reconstructions. We compare this metric to existing metrics in the literature and investigate their utility by performing dynamical reconstructions on synthetic data from simulated EHT observations of sources with simple orbital variability. We then use these results to make recommendations for imaging the 2017 EHT Sgr A* data set.
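The idea of ranking snapshots by how evenly their baselines fill the (u,v)-plane can be sketched with a hypothetical score: the normalized entropy of baseline position angles times the normalized entropy of baseline lengths, each equal to 1 for a perfectly even histogram. This only illustrates the stated criteria (angular isotropy, radial homogeneity); it is not the metric derived in the paper, and the bin counts are arbitrary.

```python
import numpy as np


def _normalized_entropy(counts: np.ndarray) -> float:
    """Shannon entropy of a histogram divided by its maximum, log(number of bins)."""
    p = counts / counts.sum()
    p = p[p > 0]
    return float(-(p * np.log(p)).sum() / np.log(len(counts)))


def coverage_score(u, v, n_angle_bins: int = 12, n_radial_bins: int = 8) -> float:
    """Hypothetical (u,v)-coverage score in [0, 1]: angular evenness times radial evenness."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    theta = np.mod(np.arctan2(v, u), np.pi)   # (u,v) and (-u,-v) sample the same Fourier component
    r = np.hypot(u, v)
    ang, _ = np.histogram(theta, bins=n_angle_bins, range=(0.0, np.pi))
    rad, _ = np.histogram(r, bins=n_radial_bins, range=(0.0, r.max()))
    return _normalized_entropy(ang) * _normalized_entropy(rad)


if __name__ == "__main__":
    # An east-west-only snapshot versus an evenly filled (u,v)-plane.
    print(coverage_score([1.0, 2.0, -3.0], [0.0, 0.0, 0.0]))
    th, rr = np.meshgrid((np.arange(120) + 0.5) * np.pi / 120, (np.arange(80) + 0.5) / 80)
    print(coverage_score((rr * np.cos(th)).ravel(), (rr * np.sin(th)).ravel()))
```

Evaluated on a sliding time window over an observation, such a score would pick out the intervals where the coverage is closest to isotropic and homogeneous.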

06 Oct 2025

Structural biology has made significant progress in determining membrane protein structures, leading to a remarkable increase in the number of structures available in dedicated databases. The inherent complexity of membrane protein structures, coupled with challenges such as missing data, inconsistencies, and computational barriers arising from disparate sources, underscores the need for better database integration. To address this gap, we present MetaMP, a framework that unifies membrane-protein databases within a web application and uses machine learning for classification. MetaMP improves data quality by enriching metadata, offers a user-friendly interface, and provides eight interactive views for streamlined exploration. According to user evaluations, MetaMP was effective across tasks of varying difficulty without compromising speed or accuracy. MetaMP also supports essential functions such as structure classification and outlier detection.

We present three practical applications of Artificial Intelligence (AI) in membrane protein research: predicting transmembrane segments, reconciling legacy databases, and classifying structures with explainable AI support. In a statistics-focused validation, MetaMP resolved 77% of data discrepancies, correctly predicted the class of newly identified membrane proteins 98% of the time, and outperformed expert curation. Altogether, MetaMP is a much-needed resource that harmonizes current knowledge and empowers AI-driven exploration of membrane-protein architecture.

Grain boundaries (GBs) are ubiquitous in large-scale graphene samples and play a crucial role in their overall performance. Because of their complexity, they are usually investigated as model structures, under the assumption of a fully relaxed interface. Here, we present cantilever-based non-contact atomic force microscopy (ncAFM) as a suitable technique to resolve, atom by atom, the complete structure of these linear defects. Our experimental findings reveal a richer scenario than expected, with the coexistence of energetically stable and metastable graphene GBs. Although both types of GB are composed of pentagon- and heptagon-like rings, they can be distinguished by the irregular geometric shapes present in the metastable boundaries. Theoretical modeling and simulated ncAFM images, which account for the experimental data, show that metastable GBs form under compressive uniaxial strain and exhibit vertical corrugation, whereas stable GBs remain in a fully relaxed, flat configuration. By locally introducing energy with the AFM tip, we show that metastable GBs can be manipulated and driven toward their minimum-energy configuration. Notably, our high-resolution ncAFM images reveal a clear dichotomy: while the structural distortions of metastable grain boundaries are confined to just a few atoms, their impact on graphene's properties extends over significantly larger length scales.

It is generally accepted that, when moving in groups, animals process information to coordinate their motion. Recent studies have begun to apply rigorous methods based on Information Theory to quantify such distributed computation. Following this perspective, we use transfer entropy to quantify dynamic information flows locally in space and time across a school of fish during directional changes around a circular tank, i.e. U-turns. This analysis reveals peaks in information flows during collective U-turns and identifies two different flows: an informative flow (positive transfer entropy) from fish that have already turned to fish that are turning, and a misinformative flow (negative transfer entropy) from fish that have not yet turned to fish that are turning. We also show that the information flows are related to the relative position and alignment of fish, and identify spatial patterns of information and misinformation cascades. This study offers several methodological contributions, and we expect further application of these methodologies to reveal intricacies of self-organisation in other animal groups and in active matter in general.
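Local transfer entropy can be sketched for discrete time series with history length 1 and plug-in probabilities: the local value at time t is log₂[p(y_{t+1} | y_t, x_t) / p(y_{t+1} | y_t)], and its average is the transfer entropy from X to Y. Local values are negative when the source makes the observed outcome look less likely, which is the sense of "misinformative" above. Analyses of real fish trajectories use continuous variables and longer histories, so this is only a minimal illustration with toy binary data.

```python
import math
import random
from collections import Counter


def local_transfer_entropy(source, target):
    """Local TE (bits) from source X to target Y, history length 1, plug-in estimates."""
    if len(source) != len(target) or len(source) < 2:
        raise ValueError("series must have equal length >= 2")
    triples = list(zip(target[1:], target[:-1], source[:-1]))   # (y_next, y_now, x_now)
    c_full = Counter(triples)
    c_yx = Counter((y, x) for _, y, x in triples)
    c_next_y = Counter((yn, y) for yn, y, _ in triples)
    c_y = Counter(y for _, y, _ in triples)
    local = []
    for yn, y, x in triples:
        p_with_source = c_full[(yn, y, x)] / c_yx[(y, x)]
        p_without_source = c_next_y[(yn, y)] / c_y[y]
        local.append(math.log2(p_with_source / p_without_source))
    return local


def transfer_entropy(source, target):
    """Average of the local values."""
    values = local_transfer_entropy(source, target)
    return sum(values) / len(values)


if __name__ == "__main__":
    rng = random.Random(1)
    x = [rng.randint(0, 1) for _ in range(2000)]
    y = [0] + x[:-1]                         # y copies x with a one-step lag
    print("TE x->y:", transfer_entropy(x, y))
    print("TE y->x:", transfer_entropy(y, x))
```

Because y simply copies x one step later, information flows from x to y (close to 1 bit) and essentially not in the reverse direction.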

SLAC National Accelerator Laboratory; University College London; Stanford University; NASA Goddard Space Flight Center; University of Tokyo; Los Alamos National Laboratory; University of Colorado Boulder; NASA Marshall Space Flight Center; Centre National de la Recherche Scientifique (CNRS); JILA; INAF – Osservatorio Astronomico di Roma; INAF – Osservatorio Astronomico di Cagliari; INAF-IASF Milano; Universitat d’Alacant; University of Zielona Gora; Mullard Space Science Laboratory; Université Paris Diderot; Institute of Physical and Chemical Research (RIKEN); Institut de Ciències de l’Espai (CSIC-IEEC); IUSS – Istituto Universitario di Studi Superiori; Commissariat à l'énergie atomique et aux énergies alternatives (CEA); Sabancı University; Università di Padova; INAF – Osservatorio Astronomico di Brera

We report on the long-term X-ray monitoring with Swift, RXTE, Suzaku, Chandra and XMM-Newton of the outburst of the newly discovered magnetar Swift J1822.3-1606 (SGR 1822-1606), from the first observations soon after the detection of the short X-ray bursts that led to its discovery, through the first stages of its outburst decay (covering the time span from July 2011 to the end of April 2012). We also report on archival ROSAT observations, which caught the source during its likely quiescent state, and on upper limits on Swift J1822.3-1606's pulsed radio and optical emission during the outburst, obtained with the Green Bank Telescope (GBT) and the Gran Telescopio Canarias (GTC), respectively. Our X-ray timing analysis finds the source rotating with a period of $P = 8.43772016(2)$ s and a period derivative $\dot{P} = 8.3(2)\times10^{-14}$ s s$^{-1}$, which implies an inferred dipolar surface magnetic field of $B \sim 2.7\times10^{13}$ G at the equator. This measurement makes Swift J1822.3-1606 the magnetar with the second-lowest magnetic field (after SGR 0418+5729; Rea et al. 2010). Following the flux and spectral evolution from the beginning of the outburst, we find that the flux decreased by about an order of magnitude, with a subtle softening of the spectrum, both typical of the outburst decay of magnetars. By modeling the secular thermal evolution of Swift J1822.3-1606, we find that the observed timing properties of the source, as well as its quiescent X-ray luminosity, can be reproduced if it was born with poloidal and crustal toroidal fields of $B_p \sim 1.5\times10^{14}$ G and $B_{\rm tor} \sim 7\times10^{14}$ G, respectively, and if its current age is ∼550 kyr.
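The quoted field can be reproduced with the usual vacuum magnetic-dipole spin-down estimate B ≈ 3.2 × 10¹⁹ (P Ṗ)^{1/2} G, which assumes a canonical neutron-star radius and moment of inertia. With the measured P and Ṗ this gives about 2.7 × 10¹³ G. The characteristic age τ_c = P/(2Ṗ) comes out near 1.6 Myr, well above the ∼550 kyr from the thermal modelling; that is not surprising, since τ_c assumes a constant field and a negligible birth period. The helper names below are illustrative.

```python
import math

SECONDS_PER_YEAR = 3.156e7


def dipole_field_gauss(P: float, Pdot: float) -> float:
    """Equatorial dipole field (G) from the vacuum magnetic-dipole spin-down estimate."""
    return 3.2e19 * math.sqrt(P * Pdot)


def characteristic_age_years(P: float, Pdot: float) -> float:
    """Characteristic age tau_c = P / (2 Pdot), in years."""
    return P / (2.0 * Pdot) / SECONDS_PER_YEAR


if __name__ == "__main__":
    P, Pdot = 8.43772016, 8.3e-14   # timing solution quoted in the abstract
    print(f"B     ~ {dipole_field_gauss(P, Pdot):.2e} G")
    print(f"tau_c ~ {characteristic_age_years(P, Pdot):.2e} yr")
```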

01 Jun 2021

Laser Doppler holography was introduced as a full-field imaging technique to measure blood flow in the retina and choroid with an as yet unrivaled temporal resolution. Here we investigate separating the different contributions to the power Doppler signal in order to isolate the flow waveforms of vessels in the posterior pole of the human eye. Distinct flow behaviors are found in retinal arteries and veins, with seemingly interrelated waveforms. We demonstrate full-field mapping of the local resistivity index and the possibility of unambiguously identifying retinal arteries and veins on the basis of their systolodiastolic variations. Finally, we investigate the arterial flow waveforms in the retina and choroid and find synchronous and similar waveforms, although with lower pulsatility in choroidal vessels. This work demonstrates the potential of laser Doppler holography to study ocular hemodynamics in healthy and diseased eyes.
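Full-field resistivity mapping can be sketched by applying Pourcelot's resistivity index, RI = (S − D)/S, pixel-wise to a power Doppler time series. Taking S and D as the maximum and minimum over the recording is a simplification assumed here (a real pipeline would use peak-systolic and end-diastolic values per cardiac cycle), and the arterial and venous waveforms in the demo are synthetic.

```python
import numpy as np


def resistivity_index(waveform, axis: int = 0) -> np.ndarray:
    """Pourcelot resistivity index RI = (S - D) / S along the time axis.

    S and D are taken as the maximum and minimum of the waveform, a simplification
    of using peak-systolic and end-diastolic values from segmented cardiac cycles.
    """
    w = np.asarray(waveform, dtype=float)
    s = w.max(axis=axis)
    d = w.min(axis=axis)
    return (s - d) / s


if __name__ == "__main__":
    t = np.linspace(0.0, 1.0, 200, endpoint=False)       # one hypothetical cardiac cycle
    pulse = np.clip(np.sin(2 * np.pi * t), 0.0, None)
    artery_like = 1.0 + 2.0 * pulse                       # strongly pulsatile
    vein_like = 1.0 + 0.2 * pulse                         # weakly pulsatile
    movie = np.stack([artery_like, vein_like], axis=1)[:, None, :]   # shape (T, H=1, W=2)
    print(resistivity_index(movie))
```

The pulsatile, artery-like pixel gets a markedly higher RI than the weakly pulsatile, vein-like one, which is the contrast that makes artery/vein identification possible.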

11 Nov 2020

Biosensors and wearable sensor systems with transmitting capabilities are currently being developed and used to monitor health data, exercise activities, and other performance data. Unlike conventional approaches, these devices enable convenient, continuous, and/or unobtrusive monitoring of user behavioral signals in real time. Examples include signals related to body motion, body temperature, blood flow parameters and a variety of biological or biochemical markers and, as will be shown in this chapter, individual grip force data that translate directly into spatiotemporal grip force profiles for different locations on the fingers and palm of the hand. Wearable sensor systems combine innovation in sensor design, electronics, data transmission, power management, and signal processing for statistical analysis, as will be further shown herein. The first section of this chapter will provide an overview of the current state of the art in grip force profiling to highlight important functional aspects to be considered. The next section will describe, on the basis of recent examples, the contribution of wearable sensor technology in the form of sensor glove systems to the real-time monitoring of surgical task skill evolution in novices training on a simulator task. In the discussion, advantages and limitations will be weighed against each other.
