DARPA

This survey provides a comprehensive overview of sim-to-real methods in Reinforcement Learning, introducing a novel taxonomy based on Markov Decision Process (MDP) elements (Observation, Action, Transition, Reward). It integrates discussions on how large foundation models can bridge the sim-to-real gap, offering a structured framework for understanding and addressing discrepancies between simulated and real-world environments.

This paper summarizes some of the technical background, research ideas, and possible development strategies for achieving machine common sense. Machine common sense has long been a critical-but-missing component of Artificial Intelligence (AI). Recent advances in machine learning have resulted in new AI capabilities, but in all of these applications, machine reasoning is narrow and highly specialized. Developers must carefully train or program systems for every situation. General commonsense reasoning remains elusive. The absence of common sense prevents intelligent systems from understanding their world, behaving reasonably in unforeseen situations, communicating naturally with people, and learning from new experiences. Its absence is perhaps the most significant barrier between the narrowly focused AI applications we have today and the more general, human-like AI systems we would like to build in the future. Machine common sense remains a broad, potentially unbounded problem in AI. There is a wide range of strategies that could be employed to make progress on this difficult challenge. This paper discusses two diverse strategies for focusing development on two different machine commonsense services: (1) a service that learns from experience, like a child, to construct computational models that mimic the core domains of child cognition for objects (intuitive physics), agents (intentional actors), and places (spatial navigation); and (2) a service that learns from reading the Web, like a research librarian, to construct a commonsense knowledge repository capable of answering natural language and image-based questions about commonsense phenomena.

16 Oct 2025

DARPA, Google DeepMind, Stanford University, OpenAI, Google Research, Microsoft

University of Hawai’i, Ludwig-Maximilians Universität München


A VLT/MUSE population synthesis study of metallicities in the nuclear star-forming rings of four disk galaxies (NGC 613, NGC 1097, NGC 3351, NGC 7552) is presented. Disentangling the spectral contributions of young and old stellar populations, we find a large spread of ages and metallicities of the old stars in the nuclear rings. This indicates a persistent infall of metal-poor gas and ongoing episodic star formation over many gigayears. The young stars have metallicities a factor of two to three higher than solar in all galaxies except NGC 3351, where the range is from half to twice solar. Previously reported detections of extremely metal-poor regions at young stellar age on the rings of these four galaxies are a methodological artifact of averaging over all stars, young and old. In addition, it is important to include contributions of very young stars (<6 Myr) in this environment. For each of the four galaxies, the extinction maps generated through our population synthesis analysis provide support for the infall scenario. They reveal dust lanes along the leading edges of the stellar bars, indicating the flow of interstellar material towards the circumnuclear zone. Prominent stellar clusters show little extinction, most likely because of the onset of stellar winds. Inside and on the nuclear rings, regions that are largely free of extinction are detected.

In a sampling problem, we are given an input x and asked to sample approximately from a probability distribution D_x. In a search problem, we are given an input x and asked to find a member of a nonempty set A_x with high probability. (An example is finding a Nash equilibrium.) In this paper, we use tools from Kolmogorov complexity and algorithmic information theory to show that sampling and search problems are essentially equivalent. More precisely, for any sampling problem S, there exists a search problem R_S such that, if C is any "reasonable" complexity class, then R_S is in the search version of C if and only if S is in the sampling version. As one application, we show that SampP=SampBQP if and only if FBPP=FBQP: in other words, classical computers can efficiently sample the output distribution of every quantum circuit if and only if they can efficiently solve every search problem that quantum computers can solve. A second application is that, assuming a plausible conjecture, there exists a search problem R that can be solved using a simple linear-optics experiment, but that cannot be solved efficiently by a classical computer unless the polynomial hierarchy collapses. That application will be described in a forthcoming paper with Alex Arkhipov on the computational complexity of linear optics.
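For readers who want the sampling and search classes above pinned down, the usual formalization is given below. It is stated here as an assumption about this paper's exact conventions, with n = |x| and ‖·‖ denoting total variation distance.

```latex
\begin{aligned}
&\textbf{Sampling: } S=(\mathcal{D}_x)_x \in \mathsf{SampP} \iff \exists\, B \text{ probabilistic, time } \mathrm{poly}(n,1/\varepsilon), \text{ on input } \langle x, 0^{1/\varepsilon}\rangle \\
&\qquad \text{outputs a sample from some } \mathcal{C}_x \text{ with } \lVert \mathcal{C}_x - \mathcal{D}_x \rVert \le \varepsilon; \\
&\textbf{Search: } R=(A_x)_x \in \mathsf{FBPP} \iff \exists\, B \text{ probabilistic, time } \mathrm{poly}(n,1/\varepsilon), \text{ on input } \langle x, 0^{1/\varepsilon}\rangle \\
&\qquad \text{outputs } y \text{ with } \Pr[\,y \in A_x\,] \ge 1-\varepsilon.
\end{aligned}
```

SampBQP and FBQP are defined in the same way, with a polynomial-time quantum algorithm in place of B.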

02 Aug 2012

We present an introduction to the equivariant slice filtration. After reviewing the definitions and basic properties, we determine the slice dimension of various families of naturally arising spectra. This leads to an analysis of pullbacks of slices defined on quotient groups, producing new collections of slices. Building on this, we determine the slice tower for the Eilenberg-Mac Lane spectrum associated to a Mackey functor for a cyclic p-group. We then relate the Postnikov tower to the slice tower for various spectra. Finally, we pose a few conjectures about the nature of slices and pullbacks.

We consider sequences of absolute and relative homology and cohomology groups that arise naturally for a filtered cell complex. We establish algebraic relationships between their persistence modules and show that they contain equivalent information. We explain how one can use the existing algorithm for persistent homology to process any of the four modules, and relate it to a recently introduced persistent cohomology algorithm. We present experimental evidence for the practical efficiency of the latter algorithm.
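The "existing algorithm for persistent homology" referred to above is, in its textbook form, a left-to-right column reduction of the filtration's boundary matrix over Z/2. The sketch below shows only that homology reduction and does not cover the paper's cohomology variants. The input format (a list of face-index sets in filtration order) and the function name are assumptions made for illustration.

```python
def reduce_boundary(boundaries):
    """Standard column reduction of a filtered boundary matrix over Z/2.

    boundaries[j] is the set of indices of the codimension-1 faces of cell j;
    cells are listed in filtration order, so every face index is smaller than j.
    Returns (pairs, essential): a pair (i, j) means a class born at cell i dies
    at cell j; `essential` lists births that never die.
    """
    reduced = [set(col) for col in boundaries]
    owner_of_low = {}  # pivot row ("low") -> index of the column that owns it
    pairs = []
    for j, col in enumerate(reduced):
        # Add earlier columns (mod 2, i.e. symmetric difference) until the
        # pivot is unclaimed or the column becomes zero.
        while col and max(col) in owner_of_low:
            col ^= reduced[owner_of_low[max(col)]]
        if col:
            owner_of_low[max(col)] = j
            pairs.append((max(col), j))
    paired = {i for pair in pairs for i in pair}
    # Zero columns are births; those never used as a pivot never die.
    essential = [j for j, col in enumerate(reduced) if not col and j not in paired]
    return pairs, essential


# Filled triangle: vertices 0-2, edges 3=(0,1), 4=(1,2), 5=(0,2), face 6.
pairs, essential = reduce_boundary(
    [set(), set(), set(), {0, 1}, {1, 2}, {0, 2}, {3, 4, 5}]
)
```

In the triangle example, two vertex components die when edges 3 and 4 merge them. The loop created by edge 5 is filled by face 6. Vertex 0 remains as the single essential connected component.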

03 Nov 2018

Ropelength and embedding thickness are related measures of the geometric complexity of classical knots and links in Euclidean space. In their recent work, Freedman and Krushkal posed a question regarding lower bounds for the embedding thickness of n-component links in terms of the Milnor linking numbers. The main goal of the current paper is to provide such estimates, thus generalizing the known linking number bound. In the process, we collect several facts about finite type invariants and the ropelength/crossing number of knots. We give examples of families of knots for which such estimates behave better than the well-known knot-genus estimate.

The Langlands Program was launched in the late 1960s with the goal of relating Galois representations and automorphic forms. In recent years a geometric version has been developed, which leads to a mysterious duality between certain categories of sheaves on moduli spaces of (flat) bundles on algebraic curves. Three years ago, in a groundbreaking advance, Kapustin and Witten linked the geometric Langlands correspondence to the S-duality of 4D supersymmetric gauge theories. This and subsequent works have already led to striking new insights into the geometric Langlands Program, which in particular involve the Homological Mirror Symmetry of the Hitchin moduli spaces of Higgs bundles on algebraic curves associated to two Langlands dual Lie groups.

24 Oct 2012

We make explicit a construction of Serre giving a definition of an algebraic Sato-Tate group associated to an abelian variety over a number field, which is conjecturally linked to the distribution of normalized L-factors as in the usual Sato-Tate conjecture for elliptic curves. The connected part of the algebraic Sato-Tate group is closely related to the Mumford-Tate group, but the group of components carries additional arithmetic information. We then check that in many cases where the Mumford-Tate group is completely determined by the endomorphisms of the abelian variety, the algebraic Sato-Tate group can also be described explicitly in terms of endomorphisms. In particular, we cover all abelian varieties (not necessarily absolutely simple) of dimension at most 3; this result figures prominently in the analysis of Sato-Tate groups for abelian surfaces given recently by Fité, Kedlaya, Rotger, and Sutherland.

10 May 2019

Many of the input-parameter-to-output-quantity-of-interest maps that arise in computational science admit a surprising low-dimensional structure, where the outputs vary primarily along a handful of directions in the high-dimensional input space. This type of structure is well modeled by a ridge function, which is a composition of a low-dimensional linear transformation with a nonlinear function. If the goal is to compute statistics of the output (e.g., as in uncertainty quantification or robust design), then one should exploit this low-dimensional structure, when present, to accelerate computations. We develop Gaussian quadrature and the associated polynomial approximation for one-dimensional ridge functions. The key elements of our method are (i) approximating the univariate density of the given linear combination of inputs by repeated convolutions and (ii) a Lanczos-Stieltjes method for constructing orthogonal polynomials and Gaussian quadrature.
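Step (ii) can be sketched in plain Python for a density that has already been discretized into points and weights (for instance, by step (i)). That discretized input and all function names are assumptions for illustration, not the authors' code. The discrete Stieltjes procedure builds the three-term recurrence of the orthonormal polynomials. The Gauss nodes are the eigenvalues of the resulting Jacobi matrix, found here by Sturm-sequence bisection so that the sketch stays dependency-free. The weights are the Christoffel numbers.

```python
import math


def stieltjes(points, weights, n):
    """Discrete Stieltjes procedure (equivalent to Lanczos on a diagonal matrix).

    Returns recurrence coefficients a[0..n-1] and b[0..n-1] (with b[0] = 0) of the
    polynomials orthonormal w.r.t. sum_j weights[j] * delta(points[j]).
    Requires n <= number of distinct points.
    """
    mass = sum(weights)
    p_prev = [0.0] * len(points)
    p_cur = [1.0 / math.sqrt(mass)] * len(points)
    a, b = [], [0.0]
    for k in range(n):
        a.append(sum(w * x * p * p for w, x, p in zip(weights, points, p_cur)))
        if k == n - 1:
            break
        # b[k+1] p_{k+1} = (x - a[k]) p_k - b[k] p_{k-1}, evaluated at every point
        q = [(x - a[k]) * p - b[k] * pp for x, p, pp in zip(points, p_cur, p_prev)]
        b.append(math.sqrt(sum(w * v * v for w, v in zip(weights, q))))
        p_prev, p_cur = p_cur, [v / b[k + 1] for v in q]
    return a, b


def count_below(a, b, x):
    """Sturm count: number of eigenvalues of the Jacobi matrix smaller than x."""
    count, d = 0, 1.0
    for k in range(len(a)):
        d = a[k] - x - (b[k] ** 2 / d if k > 0 else 0.0)
        if d == 0.0:
            d = 1e-300  # nudge off an exact zero pivot
        count += d < 0
    return count


def gauss_rule(points, weights, n, iters=200):
    """n-point Gauss rule (nodes, weights) for the discrete measure."""
    a, b = stieltjes(points, weights, n)
    pad = max(points) - min(points) + 1.0
    nodes = []
    for k in range(n):  # k-th smallest eigenvalue by bisection
        lo, hi = min(points) - pad, max(points) + pad
        for _ in range(iters):
            mid = 0.5 * (lo + hi)
            if count_below(a, b, mid) > k:
                hi = mid
            else:
                lo = mid
        nodes.append(0.5 * (lo + hi))
    mass = sum(weights)
    gw = []
    for x in nodes:  # Christoffel weights: 1 / sum_k p_k(x)^2
        p_prev, p_cur = 0.0, 1.0 / math.sqrt(mass)
        total = p_cur ** 2
        for k in range(n - 1):
            p_prev, p_cur = p_cur, ((x - a[k]) * p_cur - b[k] * p_prev) / b[k + 1]
            total += p_cur ** 2
        gw.append(1.0 / total)
    return nodes, gw


# Uniform discrete measure on 201 points in [-1, 1]; a 4-point rule is exact up to degree 7.
pts = [-1.0 + 2.0 * j / 200 for j in range(201)]
wts = [1.0 / 201] * 201
nodes, gw = gauss_rule(pts, wts, 4)
```

For a ridge function f(x) = g(uᵀx), if (points, weights) discretize the density of uᵀx, then E[f] is approximated by Σᵢ gwᵢ g(nodesᵢ). Only one-dimensional quadrature is needed, however many inputs there are.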

We describe a construction of the cyclotomic structure on topological Hochschild homology (THH) of a ring spectrum using the Hill-Hopkins-Ravenel multiplicative norm. Our analysis takes place entirely in the category of equivariant orthogonal spectra, avoiding use of the Bökstedt coherence machinery. We are able to define versions of topological cyclic homology (TC) and TR-theory relative to a cyclotomic commutative ring spectrum A. We describe spectral sequences computing this relative theory ATR in terms of TR over the sphere spectrum and vice versa. Furthermore, our construction permits a straightforward definition of the Adams operations on TR and TC.

05 Nov 2018

Recent studies have shown that Convolutional Neural Networks (CNNs) are relatively easy to attack through the generation of so-called adversarial examples. This vulnerability also affects CNN-based image forensic tools. Research in deep learning has shown that adversarial examples exhibit a certain degree of transferability, i.e., they retain part of their effectiveness even against CNN models other than the one targeted by the attack. This is a very strong property that undermines the usability of CNNs in security-oriented applications. In this paper, we investigate whether attack transferability also holds in image forensics applications. With specific reference to the case of manipulation detection, we analyse the results of several experiments considering different sources of mismatch between the CNN used to build the adversarial examples and the one adopted by the forensic analyst. The analysis ranges from cases in which the mismatch involves only the training dataset to cases in which the attacker and the forensic analyst adopt different architectures. The results of our experiments show that, in the majority of cases, the attacks are not transferable, thus easing the design of proper countermeasures, at least when the attacker does not have perfect knowledge of the target detector.
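The ideas of "generating an adversarial example" and "transferability" can be made concrete with a minimal sketch of the fast gradient sign method (FGSM), one standard attack. The abstract does not say which attacks were used, so FGSM here is a stand-in. A two-feature logistic-regression "detector" (hypothetical) replaces the CNN so that the input gradient has a closed form. `transfers` checks whether an example crafted on a source model also flips a target model's decision, mirroring the attacker/analyst mismatch studied above.

```python
import math


def score(w, b, x):
    """Linear score of a logistic-regression detector."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w, b, x, y, eps):
    """One FGSM step: move each input feature by eps in the sign of d(loss)/dx.

    For logistic regression with cross-entropy loss and label y in {0, 1},
    d(loss)/dx = (sigmoid(score) - y) * w.
    """
    g = sigmoid(score(w, b, x)) - y

    def sign(v):
        return (v > 0) - (v < 0)

    return [xi + eps * sign(g * wi) for xi, wi in zip(x, w)]


def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0


def transfers(w_src, w_tgt, b, x, y, eps):
    """True if an example crafted on the source model also fools the target model."""
    x_adv = fgsm(w_src, b, x, y, eps)
    return predict(w_tgt, b, x) == y and predict(w_tgt, b, x_adv) != y


# Attacker's surrogate vs. two hypothetical analyst models.
similar_target = transfers([2.0, -1.0], [1.5, -1.2], 0.0, [0.5, 0.2], 1, 0.3)
different_target = transfers([2.0, -1.0], [3.0, 1.0], 0.0, [0.5, 0.2], 1, 0.3)
```

With these toy weights, the perturbation fools a target whose weights resemble the surrogate's. It fails against a target whose second weight has the opposite sign. This is a miniature version of the mismatch effect the experiments measure.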

In this paper, we describe the development of symbolic representations annotated on human-robot dialogue data to make dimensions of meaning accessible to autonomous systems participating in collaborative, natural language dialogue, and to enable common ground with human partners. A particular challenge for establishing common ground arises in remote dialogue (occurring in disaster relief or search-and-rescue tasks), where a human and robot are engaged in a joint navigation and exploration task of an unfamiliar environment, but where the robot cannot immediately share high-quality visual information due to communication constraints. Engaging in dialogue provides an effective way to communicate, while on-demand or lower-quality visual information can supplement it for establishing common ground. Within this paradigm, we capture propositional semantics and the illocutionary force of a single utterance within the dialogue through our Dialogue-AMR annotation, an augmentation of Abstract Meaning Representation. We then capture patterns in how different utterances within and across speaker floors relate to one another in our development of a multi-floor Dialogue Structure annotation schema. Finally, we begin to annotate and analyze the ways in which the visual modalities provide contextual information to the dialogue for overcoming disparities in the collaborators' understanding of the environment. We conclude by discussing the use cases, architectures, and systems we have implemented from our annotations that enable physical robots to autonomously engage with humans in bi-directional dialogue and navigation.

A new multi-modal human-robot dialogue corpus, SCOUT, provides 278 dialogues and 89,056 utterances, along with synchronized images and detailed linguistic annotations, to specifically capture how humans interact with robots in collaborative exploration tasks. This resource, developed by DEVCOM Army Research Laboratory and collaborators, aims to accelerate the development of autonomous situated dialogue systems by providing data reflecting unique human-robot communication dynamics.

The deployment of pre-trained perception models in novel environments often leads to performance degradation due to distributional shifts. Although recent artificial intelligence approaches for metacognition use logical rules to characterize and filter model errors, improving precision often comes at the cost of reduced recall. This paper addresses the hypothesis that leveraging multiple pre-trained models can mitigate this recall reduction. We formulate the challenge of identifying and managing conflicting predictions from various models as a consistency-based abduction problem, building on the idea of abductive learning (ABL) but applying it at test time rather than during training. The input predictions and the learned error detection rules derived from each model are encoded in a logic program. We then seek an abductive explanation (a subset of model predictions) that maximizes prediction coverage while ensuring that the rate of logical inconsistencies (derived from domain constraints) remains below a specified threshold. We propose two algorithms for this knowledge representation task: an exact method based on Integer Programming (IP) and an efficient Heuristic Search (HS). Through extensive experiments on a simulated aerial imagery dataset featuring controlled, complex distributional shifts, we demonstrate that our abduction-based framework outperforms individual models and standard ensemble baselines, achieving, for instance, average relative improvements of approximately 13.6% in F1-score and 16.6% in accuracy across 15 diverse test datasets when compared to the best individual model. Our results validate the use of consistency-based abduction as an effective mechanism to robustly integrate knowledge from multiple imperfect models in challenging, novel scenarios.
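The selection problem described above can be illustrated with a deliberately simplified greedy heuristic. This is not the paper's Integer Programming or Heuristic Search algorithm. It makes several hypothetical simplifications:

- each prediction carries a single `flagged` bit from the error-detection rules;
- the inconsistency rate is the fraction of flagged predictions among those selected;
- at most one prediction is kept per item;
- coverage is the number of distinct items labeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    model: str     # which pre-trained model produced it (hypothetical field)
    item: int      # which test input/object it labels
    label: str
    flagged: bool  # True if a learned error-detection rule fires on it


def greedy_abduction(preds, max_inconsistency):
    """Greedily choose predictions to cover as many items as possible while the
    fraction of flagged predictions among the chosen ones stays <= max_inconsistency.

    Unflagged predictions are tried first (sorting on a bool is stable, False < True),
    and at most one prediction is kept per item.
    """
    selected, covered, n_flagged = [], set(), 0
    for p in sorted(preds, key=lambda q: q.flagged):
        if p.item in covered:
            continue
        new_flagged = n_flagged + p.flagged
        if new_flagged / (len(selected) + 1) <= max_inconsistency:
            selected.append(p)
            covered.add(p.item)
            n_flagged = new_flagged
    return selected


preds = [
    Prediction("A", 0, "car", False),
    Prediction("B", 0, "truck", False),
    Prediction("A", 1, "car", True),
    Prediction("B", 1, "car", False),
    Prediction("A", 2, "bus", True),
    Prediction("B", 3, "car", True),
]
strict = greedy_abduction(preds, 0.25)   # covers items 0 and 1
lenient = greedy_abduction(preds, 0.4)   # also admits one flagged prediction (item 2)
```

Loosening the threshold trades consistency for coverage, which is the precision/recall tension the abstract describes. An exact method would search over subsets instead of committing greedily.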

29 May 2014

We construct splitting varieties for triple Massey products. For $a,b,c \in F^*$, the triple Massey product $\langle a,b,c\rangle$ of the corresponding elements of $H^1(F,\mu_2)$ contains $0$ if and only if there exist $x \in F^*$ and $y \in F[\sqrt{a},\sqrt{c}]^*$ such that $b x^2 = N_{F[\sqrt{a},\sqrt{c}]/F}(y)$, where $N_{F[\sqrt{a},\sqrt{c}]/F}$ denotes the norm and $F$ is a field of characteristic different from $2$. These varieties satisfy the Hasse principle by a result of D. B. Lee and A. R. Wadsworth. This shows that triple Massey products for global fields of characteristic different from $2$ always contain $0$.

13 Nov 2024


Airborne insects generate a leading edge vortex when they flap their wings. This coherent vortex is a low-pressure region that enhances the lift of flapping wings compared to fixed wings. Insect wings are thin membranes strengthened by a system of veins that does not allow large wing deformations. Bat wings are thin, compliant skin membranes stretched between their limbs, hands, and body that show larger deformations during flapping wing flight. This study examines the role of the leading edge vortex on highly deformable membrane wings that passively change shape under fluid dynamic loading, maintaining a positive camber throughout the hover cycle. Our experiments reveal that unsteady wing deformations suppress the formation of a coherent leading edge vortex as flexibility increases.
At lift- and energy-optimal aeroelastic conditions, no leading edge vortex remains. Instead, vorticity accumulates in a bound shear layer covering the wing's upper surface from the leading to the trailing edge. Despite the absence of a leading edge vortex, the optimal deformable membrane wings demonstrate enhanced lift and energy efficiency compared to their rigid counterparts. It is possible that small bats rely on this mechanism for efficient hovering. We relate the force production on the wings to their deformation through scaling analyses. Additionally, we identify the geometric angles at the leading and trailing edges as observable indicators of the flow state and use them to map out the transitions of the flow topology and their aerodynamic performance for a wide range of aeroelastic conditions.

Deep Reinforcement Learning (RL) has been explored and verified to be effective in solving decision-making tasks in various domains, such as robotics, transportation, and recommender systems. It learns from interaction with environments and updates the policy using the collected experience. However, because real-world data are limited and taking detrimental actions can have severe consequences, RL policies are mainly trained in simulators. This practice guarantees safety during learning but introduces an inevitable sim-to-real gap at deployment, causing degraded performance and risks in execution. There have been attempts to solve sim-to-real problems in different domains with various techniques, especially in the era of emerging techniques such as large foundation or language models that have shed light on sim-to-real transfer. To the best of our knowledge, this survey presents the first taxonomy that formally frames sim-to-real techniques in terms of the key elements of the Markov Decision Process (State, Action, Transition, and Reward). Based on this framework, we cover comprehensive literature from classic to the most advanced methods, including sim-to-real techniques empowered by foundation models, and we also discuss the specialties that deserve attention in different domains of sim-to-real problems. We then summarize the formal evaluation process of sim-to-real performance with accessible code or benchmarks. The challenges and opportunities are also presented to encourage future exploration of this direction. We are actively maintaining a repository that includes the most up-to-date sim-to-real research to help domain researchers.

01 Apr 2024

The ability to interact with machines using natural human language is becoming not just commonplace, but expected. The next step is not just text interfaces, but speech interfaces and not just with computers, but with all machines including robots. In this paper, we chronicle the recent history of this growing field of spoken dialogue with robots and offer the community three proposals, the first focused on education, the second on benchmarks, and the third on the modeling of language when it comes to spoken interaction with robots. The three proposals should act as white papers for any researcher to take and build upon.

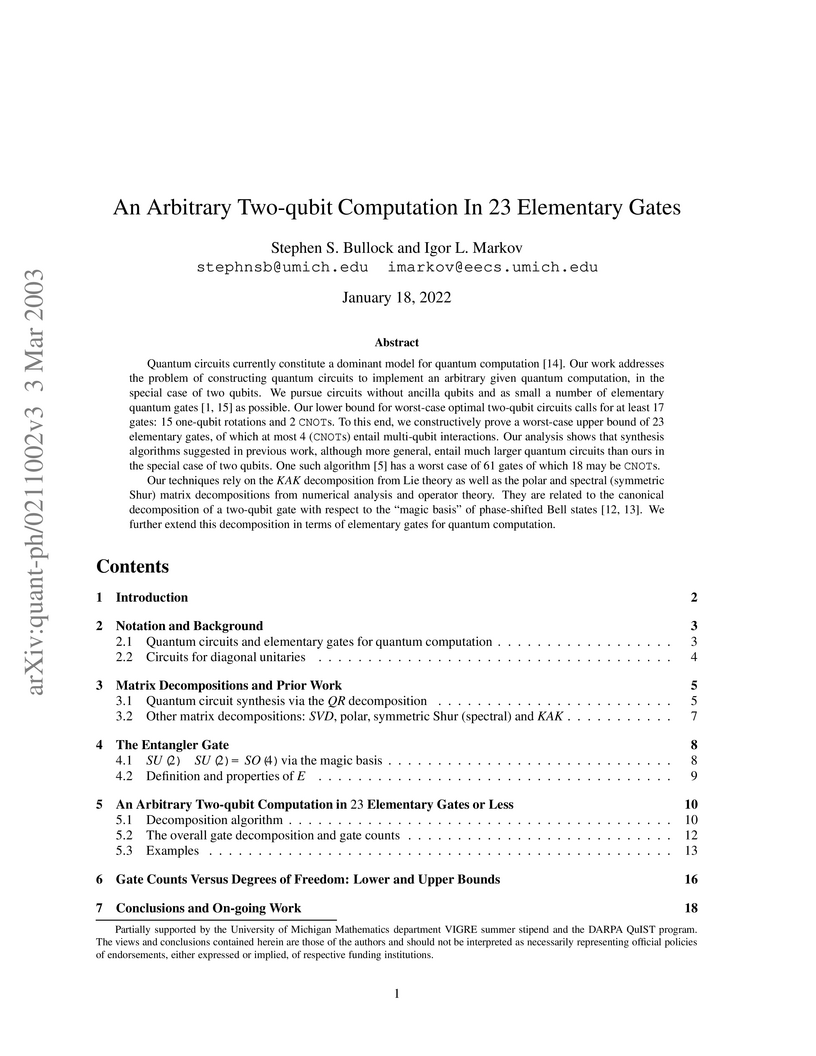

03 Mar 2003

Quantum circuits currently constitute a dominant model for quantum computation. Our work addresses the problem of constructing quantum circuits to implement an arbitrary given quantum computation, in the special case of two qubits. We pursue circuits without ancilla qubits and with as few elementary quantum gates as possible. Our lower bound for worst-case optimal two-qubit circuits calls for at least 17 gates: 15 one-qubit rotations and 2 CNOTs. We also constructively prove a worst-case upper bound of 23 elementary gates, of which at most 4 (CNOT) entail multi-qubit interactions. Our analysis shows that synthesis algorithms suggested in previous work, although more general, entail much larger quantum circuits than ours in the special case of two qubits. One such algorithm has a worst case of 61 gates, of which 18 may be CNOTs. Our techniques rely on the KAK decomposition from Lie theory as well as the polar and spectral (symmetric Schur) matrix decompositions from numerical analysis and operator theory. They are related to the canonical decomposition of a two-qubit gate with respect to the "magic basis" of phase-shifted Bell states, published previously. We further extend this decomposition in terms of elementary gates for quantum computation.
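The canonical decomposition mentioned above rests on a property of the magic basis that is easy to check numerically. Expressed in that basis, every local gate A ⊗ B with A, B in SU(2) becomes a real orthogonal matrix. The sketch below verifies this in plain Python. The particular phase convention for the magic basis (the matrix `MAGIC`, whose columns are phase-shifted Bell states) and all helper names are assumptions for illustration, not the paper's notation.

```python
import cmath
import math


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def dagger(A):
    """Conjugate transpose."""
    return [[A[j][i].conjugate() for j in range(len(A))] for i in range(len(A[0]))]


def kron(A, B):
    """Kronecker (tensor) product of two matrices."""
    r, s = len(B), len(B[0])
    return [[A[i // r][j // s] * B[i % r][j % s] for j in range(len(A[0]) * s)]
            for i in range(len(A) * r)]


def rz(t):
    return [[cmath.exp(-0.5j * t), 0], [0, cmath.exp(0.5j * t)]]


def ry(t):
    c, s = math.cos(t / 2), math.sin(t / 2)
    return [[c, -s], [s, c]]


def su2(alpha, beta, gamma):
    """Arbitrary SU(2) element via a ZYZ Euler decomposition."""
    return matmul(rz(alpha), matmul(ry(beta), rz(gamma)))


_s = 1 / math.sqrt(2)
# Columns: (|00>+|11>)/√2, i(|00>-|11>)/√2, i(|01>+|10>)/√2, (|01>-|10>)/√2
MAGIC = [[_s, 1j * _s, 0, 0],
         [0, 0, 1j * _s, _s],
         [0, 0, 1j * _s, -_s],
         [_s, -1j * _s, 0, 0]]


def in_magic_basis(U):
    """Change of basis M† U M for a 4x4 two-qubit gate U."""
    return matmul(dagger(MAGIC), matmul(U, MAGIC))


# A generic local gate A ⊗ B, expressed in the magic basis.
V = in_magic_basis(kron(su2(0.3, 1.1, -0.7), su2(1.9, -0.4, 2.5)))
```

Checking that the imaginary parts of `V` vanish (up to rounding) and that Vᵀ V = I confirms the SU(2) ⊗ SU(2) to SO(4) correspondence on this example.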
