European Centre for Living Technology

This paper presents a comprehensive survey of Agents for Computer Use (ACUs), introducing a novel, unifying taxonomy to structure the rapidly evolving field. It classifies 87 ACUs and 33 datasets, analyzing current trends and identifying six critical research gaps, while also proposing strategic directions for future research to foster general-purpose, robust, and scalable agents.

A hybrid retrieval pipeline effectively links informal social media claims to formal scientific papers, securing 3rd place with an MRR@5 of 66.43% in the CLEF CheckThat! 2025 competition (subtask 4b). Using only open-source models, the system combines lexical and semantic search with an LLM-based re-ranker and improves upon the baselines.
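MRR@5, the metric quoted above, averages the reciprocal rank of the first relevant paper within the top five retrieved candidates, counting zero when the paper is missing from the top five. The following is a minimal sketch that assumes exactly one gold paper per claim. The function and argument names are hypothetical.

```python
def mrr_at_k(rankings, gold, k=5):
    """Mean Reciprocal Rank truncated at k.

    rankings: list of ranked candidate-ID lists, one per query (claim).
    gold: the single relevant ID for each query (assumed one per query).
    """
    if len(rankings) != len(gold):
        raise ValueError("need one gold ID per ranking")
    total = 0.0
    for ranked, target in zip(rankings, gold):
        top_k = ranked[:k]
        if target in top_k:
            # ranks are 1-based, so list index 0 contributes 1/1
            total += 1.0 / (top_k.index(target) + 1)
    return total / len(rankings)


# Three claims: gold paper at rank 1, at rank 2, and outside the top 5 (rank 6).
rankings = [["p1", "p2"], ["p9", "p3", "p4"], ["p5", "p6", "p7", "p8", "p0", "p3"]]
gold = ["p1", "p3", "p3"]
print(mrr_at_k(rankings, gold))  # 0.5
```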

Researchers from Ca' Foscari University and UNC Chapel Hill developed a thermodynamic framework for biological systems that explicitly incorporates information. This framework introduces a "Biological Free Energy" and a generalized First Law, demonstrating how molecular switches achieve stable Nonequilibrium Steady States (NESS) in which minimizing this free energy corresponds to maximizing mesoscopic information, driven by external energy fluxes such as ATP hydrolysis.

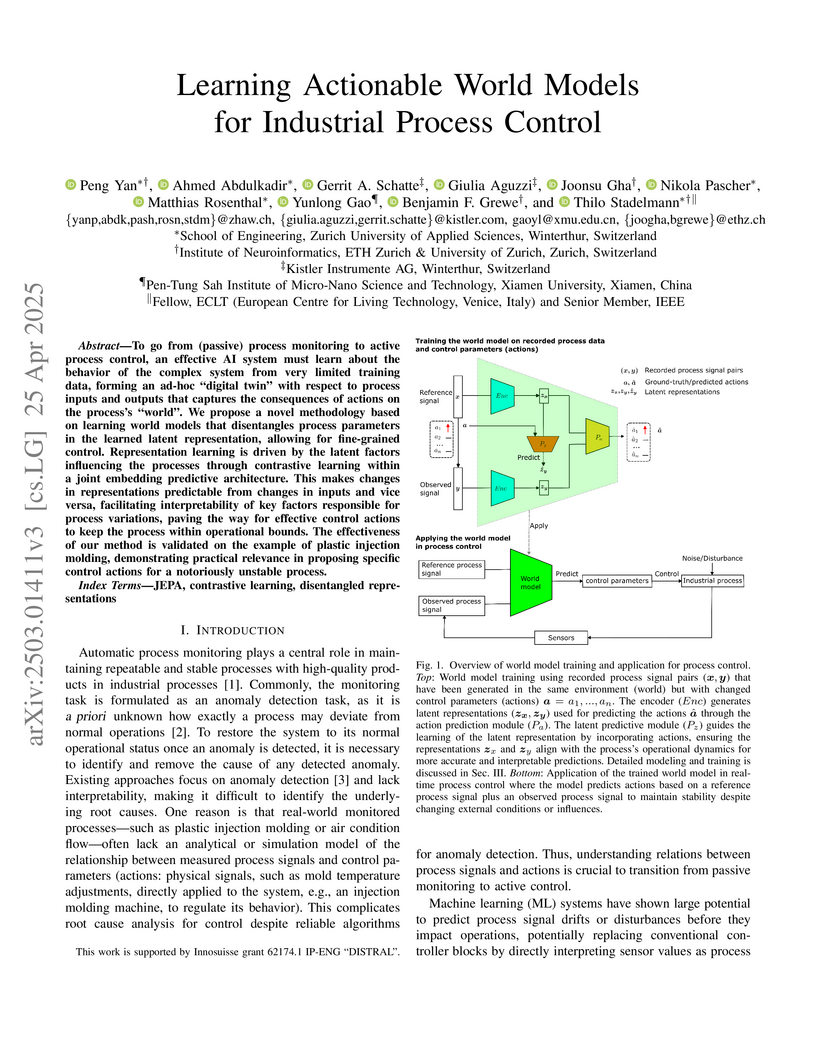

Researchers from ZHAW, ETH Zurich, and Kistler Instrumente AG developed an actionable world model that learns disentangled representations of industrial process signals in relation to control parameters. This model enables diagnosis of root causes and suggests specific corrective actions, achieving average 2D action direction errors as low as 8.53° on a plastic injection molding dataset when using transfer learning.
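One plausible reading of the "2D action direction error" is the angle between a predicted action vector and a ground-truth action vector in a two-dimensional control-parameter plane. The sketch below computes that angle and its average under this interpretation. The interpretation and all names are assumptions, not the authors' evaluation code.

```python
import math


def direction_error_deg(pred, true):
    """Unsigned angle in degrees (0 to 180) between two 2D action vectors."""
    # atan2(|cross|, dot) is numerically more stable than acos(cos_theta)
    cross = pred[0] * true[1] - pred[1] * true[0]
    dot = pred[0] * true[0] + pred[1] * true[1]
    return math.degrees(math.atan2(abs(cross), dot))


def mean_direction_error_deg(preds, trues):
    """Average direction error over paired predicted/true 2D vectors."""
    errors = [direction_error_deg(p, t) for p, t in zip(preds, trues)]
    return sum(errors) / len(errors)


# Hypothetical example: one perfect direction, one orthogonal direction.
print(mean_direction_error_deg([(1.0, 1.0), (1.0, 0.0)], [(2.0, 2.0), (0.0, 3.0)]))
```

Only the direction matters here: vectors of different magnitude pointing the same way score zero error.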

In this work, we propose an end-to-end constrained clustering scheme to tackle the person re-identification (re-id) problem. Deep neural networks (DNNs) have recently proven effective on the person re-identification task. In particular, rather than relying solely on a probe-gallery similarity, diffusing the similarities among the gallery images in an end-to-end manner has proven effective in yielding a robust probe-gallery affinity. However, existing methods do not use the probe image as a constraint and are prone to noise propagation during the similarity diffusion process. To overcome this, we propose a scheme that treats the person-image retrieval problem as a constrained clustering optimization problem, called deep constrained dominant sets (DCDS). Given a probe image and a set of gallery images, we reformulate person re-id as finding a constrained cluster, where the probe image is taken as a constraint (seed) and each cluster corresponds to a set of images of the same person. By optimizing the constrained clustering in an end-to-end manner, we naturally leverage the contextual knowledge of the set of images corresponding to the given person. We further enhance performance by integrating an auxiliary network alongside DCDS, which employs a multi-scale ResNet. To validate the effectiveness of our method, we present experiments on several benchmark datasets and show that the proposed method can outperform state-of-the-art methods.
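DCDS learns this step end-to-end inside a network. The underlying combinatorial idea can be illustrated with the classical, non-deep constrained dominant-set formulation: maximize x^T (A - alpha * I_hat) x over the probability simplex, where I_hat is diagonal with ones for nodes outside the seed set. This problem is then solved with discrete replicator dynamics. The sketch below uses that swapped-in classical technique, not the authors' implementation. The toy affinity matrix, the value of alpha, and all function names are illustrative assumptions.

```python
def constrained_dominant_set(affinity, seeds, alpha, max_iter=10000, tol=1e-12):
    """Classical constrained dominant set via discrete replicator dynamics.

    Maximizes x^T (A - alpha * I_hat) x on the simplex, where I_hat is diagonal
    with 1 for every node NOT in `seeds`. In the standard formulation, choosing
    alpha above the largest eigenvalue of A restricted to the non-seed nodes
    forces the solution's support to contain a seed (here: the probe).
    """
    n = len(affinity)
    # Penalized payoff matrix B = A - alpha * I_hat
    b = [[affinity[i][j] - (alpha if (i == j and i not in seeds) else 0.0)
          for j in range(n)] for i in range(n)]
    # On the simplex x^T (B + c) x = x^T B x + c, so shifting all entries to be
    # non-negative keeps the maximizers and keeps replicator dynamics valid.
    shift = max(0.0, -min(min(row) for row in b))
    b = [[v + shift for v in row] for row in b]
    x = [1.0 / n] * n  # start from the barycenter of the simplex
    for _ in range(max_iter):
        bx = [sum(b[i][j] * x[j] for j in range(n)) for i in range(n)]
        fitness = sum(x[i] * bx[i] for i in range(n))
        if fitness <= 0.0:
            break  # degenerate all-zero payoffs: nothing to optimize
        new_x = [x[i] * bx[i] / fitness for i in range(n)]
        change = sum(abs(new_x[i] - x[i]) for i in range(n))
        x = new_x
        if change < tol:
            break
    return x


def support(x, threshold=1e-4):
    """Indices whose weight survives; these form the retrieved cluster."""
    return [i for i, v in enumerate(x) if v > threshold]


# Toy example: node 0 is the probe, nodes 1-4 are gallery images.
# Nodes {0, 1, 2} show one identity, nodes {3, 4} another.
groups = [0, 0, 0, 1, 1]
A = [[0.0 if i == j else (0.9 if groups[i] == groups[j] else 0.1)
      for j in range(5)] for i in range(5)]
x = constrained_dominant_set(A, seeds={0}, alpha=2.0)
print(support(x))  # [0, 1, 2]
```

Here alpha = 2.0 exceeds the largest eigenvalue (1.1) of the gallery-only block, so the extracted cluster is anchored on the probe.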

04 Jun 2024

The renormalization group (RG) constitutes a fundamental framework in modern theoretical physics. It allows the study of many systems showing states with large-scale correlations and their classification into a relatively small set of universality classes. RG is the most powerful tool for investigating organizational scales within dynamic systems. However, the application of RG techniques to complex networks has presented significant challenges, primarily due to the intricate interplay of correlations on multiple scales. Existing approaches have relied on hypotheses involving hidden geometries, based on embedding complex networks into hidden metric spaces. Here, we present a practical overview of the recently introduced Laplacian Renormalization Group (LRG) for heterogeneous networks. First, we present a brief overview that justifies the use of the Laplacian as a natural extension of well-known field theories to analyze spatial disorder. We then draw an analogy to traditional real-space renormalization group procedures, explaining how the LRG generalizes the concept of "Kadanoff supernodes" as block nodes that span multiple scales. These supernodes help mitigate the effects of cross-scale correlations due to small-world properties. Additionally, we rigorously define the LRG procedure in momentum space in the spirit of Wilson RG. Finally, we present several analyses of how network properties evolve along the LRG flow, following the structural changes as the network is properly reduced.
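For orientation, the basic objects usually associated with the Laplacian Renormalization Group are sketched below: the graph Laplacian, the heat-kernel network density matrix at diffusion time τ, its normalized entropy, and the entropic susceptibility. This notation (τ, ρ̂, S, C) is our assumption and may differ from the paper's.

```latex
\begin{align}
L &= D - A, \qquad D_{ij} = \delta_{ij} \textstyle\sum_k A_{ik}, \\
\hat{\rho}(\tau) &= \frac{e^{-\tau L}}{\operatorname{Tr} e^{-\tau L}}, \\
S(\tau) &= -\frac{1}{\ln N} \operatorname{Tr}\!\left[\hat{\rho}(\tau) \ln \hat{\rho}(\tau)\right], \\
C(\tau) &= -\frac{dS}{d \ln \tau}.
\end{align}
```

As we understand the construction, a Wilson-like step at scale τ integrates out the Laplacian modes with eigenvalues λ > 1/τ, which are the fast, small-scale diffusion modes. Nodes that diffusion has already mixed by time τ are then merged into supernodes. Peaks of C(τ) signal characteristic scales at which the network's diffusion properties change.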

We introduce the Cooperative Network Architecture (CNA), a model that represents sensory signals using structured, recurrently connected networks of neurons, termed "nets." Nets are dynamically assembled from overlapping net fragments, which are learned based on statistical regularities in sensory input. This architecture offers robustness to noise, deformation, and out-of-distribution data, addressing challenges in current vision systems from a novel perspective. We demonstrate that net fragments can be learned without supervision and flexibly recombined to encode novel patterns, enabling figure completion and resilience to noise. Our findings establish CNA as a promising paradigm for developing neural representations that integrate local feature processing with global structure formation, providing a foundation for future research on invariant object recognition.

We analyze about two hundred naturally occurring networks with distinct dynamical origins to formally test whether the commonly assumed hypothesis of an underlying scale-free structure is generally viable. This hypothesis has recently been questioned on the basis of statistical tests of the validity of power-law degree distributions against real data. Specifically, we apply finite-size scaling analysis to the datasets of real networks to check whether purported departures from power-law behavior are due to the finiteness of the sample size. If so, power laws would be recovered as progressively larger samples induce progressively larger cutoffs. We find that a large number of the networks studied follow a finite-size scaling hypothesis without any self-tuning. This is the case for biological protein interaction networks, technological computer and hyperlink networks, and informational networks in general. Marked deviations appear in other cases, especially infrastructure and transportation networks but also social networks. We conclude that the underlying scale-invariance properties of many naturally occurring networks are extant features, often clouded by finite-size effects due to the nature of the sample data.
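A finite-size scaling test of this kind typically assumes a form such as P(k; N) = k^(-γ) F(k / N^β). If the ansatz holds, rescaled degree distributions from samples of different size N collapse onto one curve F. The sketch below computes those rescaled points for a single degree sequence. The exact ansatz, γ and β are assumptions here. In practice γ and β would be fitted by minimizing the spread of the collapse across sizes.

```python
from collections import Counter


def rescaled_degree_distribution(degrees, gamma, beta):
    """Finite-size scaling coordinates for one network of size N = len(degrees).

    Assumes the ansatz P(k; N) = k^(-gamma) * F(k / N^beta), so the returned
    points (k / N^beta, k^gamma * P(k; N)) from networks of different sizes
    should fall on a single curve F if the ansatz holds.
    """
    n = len(degrees)
    # isolated nodes (k = 0) are skipped: the power law is undefined there
    counts = Counter(k for k in degrees if k > 0)
    points = []
    for k in sorted(counts):
        p_k = counts[k] / n  # empirical P(k), normalized over all N nodes
        points.append((k / n ** beta, k ** gamma * p_k))
    return points


print(rescaled_degree_distribution([1, 1, 2, 4], gamma=2, beta=0.5))
# [(0.5, 0.5), (1.0, 1.0), (2.0, 4.0)]
```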

Introduction: In contrast to current AI technology, natural intelligence (the kind of autonomous intelligence that is realized in the brains of animals and humans to attain, in their natural environment, goals defined by a repertoire of innate behavioral schemata) is far superior in terms of learning speed, generalization capabilities, autonomy and creativity. How are these strengths achieved, and by what means are ideas and imagination produced in natural neural networks?

Methods: Reviewing the literature, we put forward the argument that both our natural environment and the brain are of low complexity, that is, they require very little information for their generation and are consequently both highly structured. We further argue that the structures of the brain and of the natural environment are closely related.

Results: We propose that the structural regularity of the brain takes the form of net fragments (self-organized network patterns) and that these serve as the powerful inductive bias that enables the brain to learn quickly, generalize from few examples and bridge the gap between abstractly defined general goals and concrete situations.

Conclusions: Our results have important bearing on open problems in artificial neural network research.

03 Dec 2024

Modular microrobotics can potentially address many information-intensive microtasks in medicine, manufacturing and the environment. However, limited surface area has constrained the natural powering, communication, functional integration, and self-assembly of smart mass-fabricated modular robotic devices at small scales. We demonstrate the integrated self-folding and self-rolling of functionalized, patterned interior and exterior membrane surfaces, resulting in programmable, self-assembling, inter-communicating and self-locomoting micromodules (smartlets < 1 mm³) with interior chambers for on-board buoyancy control. The microrobotic divers, with 360° solar-harvesting rolls, function with sufficient ambient power for communication and for programmed locomotion in water via electrolysis. The folding faces carry rigid microcomponents, including silicon chiplets as microprocessors and micro-LEDs for communication. This brings modular microrobotics closer to the surface-rich modular autonomy of biological cells and provides an economical platform for microscopic applications.

16 Jun 2024

Lablets are autonomous microscopic particles with programmable CMOS electronics that can control electrokinetic phenomena and electrochemical reactions in solution via actuator and sensor microelectrodes. In this paper, we describe the design and fabrication of optimized singulated lablets (CMOS3) with dimensions of 140 × 140 × 50 µm, carrying an integrated coplanar encapsulated supercapacitor as a rechargeable power supply. The lablets are designed to allow docking to one another or to a smart surface for the interchange of energy, electronic information, and chemicals. The paper focuses on the digital and analog design of the lablets to allow significant programmable functionality in a microscopic footprint, including the control of autonomous actuation and sensing up to the level of supporting a complete lablet self-reproduction life cycle, although this remains to be proven experimentally. The potential of lablets for autonomous sensing and control and for evolutionary experimentation is discussed.

12 Jul 2022

Since Newton, all classical and quantum physics depends upon the "Newtonian Paradigm". Here, the relevant variables of the system are identified. The boundary conditions creating the phase space of all possible values of the variables are defined. Then, given any initial condition, the differential equations of motion are integrated to yield an entailed trajectory in the phase space. It is fundamental to the Newtonian Paradigm that the set of possibilities constituting the phase space is always definable and fixed ahead of time. All of this fails for the diachronic evolution of ever-new adaptations in any biosphere. The central reason is that living cells achieve Constraint Closure and construct themselves. Living cells, evolving via heritable variation and Natural Selection, adaptively construct possibilities that are new in the universe. These new possibilities are opportunities for new adaptations, thereafter seized by heritable variation and Natural Selection. Surprisingly, we can neither define nor deduce the evolving phase spaces ahead of time. We can use no mathematics based on Set Theory to do so. These ever-new adaptations, with ever-new relevant variables, constitute the ever-changing phase space of evolving biospheres. Because of this, evolving biospheres are entirely outside the Newtonian Paradigm. One consequence is that for any universe such as ours there can be no Final Theory that entails all that comes to exist. The implications are large. We face a third major transition in science beyond the Pythagorean dream that "All is Number". We must give up deducing the diachronic evolution of the biosphere. All of physics, classical and quantum, however, applies to the analysis of existing life, a synchronic analysis. We begin to better understand the emergent creativity of an evolving biosphere. Thus, we are on the edge of inventing a new, physics-like statistical mechanics of emergence.

30 Jul 2024

We report on extensive atomistic molecular dynamics simulations of a meta-substituted poly-phenylacetylene (pPA) foldamer dispersed in three solvents, water (H2O), cyclohexane (cC6H12), and n-hexane (nC6H14), and for three oligomer lengths: 12mer, 16mer and 20mer. At room temperature, we find a tendency of the pPA foldamer to collapse into a helical structure in all three solvents, but with rather different stability: stable in water, marginally stable in n-hexane, and unstable in cyclohexane. In the case of water, the initial and final numbers of hydrogen bonds between the foldamer and water molecules are found to be unchanged, with no formation of intrachain hydrogen bonds, indicating that hydrogen bonding plays no role in the folding process. In all three solvents, folding is found to be driven mainly by electrostatics, which are nearly identical in the three cases and largely dominant compared with van der Waals interactions, which differ among the three cases.

This scenario is also supported by the analysis of the distributions of bond and dihedral angles and by a direct calculation of the solvation and transfer free energies via thermodynamic integration. The different stability in cyclohexane and n-hexane, notwithstanding their rather similar chemical composition, can be traced back to the different entropy-enthalpy compensation, which is found to be similar for water and n-hexane and very different for cyclohexane.

A comparison with the same properties for poly-phenylalanine oligomers underscores the crucial differences between pPA and peptides.

To highlight how these findings can hardly be interpreted in terms of a simple "good" and "poor" solvent picture, a molecular dynamics study of a bead-spring polymer chain in a Lennard-Jones fluid is also included.
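Thermodynamic integration, mentioned above for the solvation and transfer free energies, obtains ΔF = ∫₀¹ ⟨∂U/∂λ⟩_λ dλ from ensemble averages collected along a coupling parameter λ that gradually switches the solute-solvent interactions on. A transfer free energy can then be taken as the difference of the solvation free energies in the two solvents. The following is a minimal sketch of the final quadrature step. It assumes the averages have already been measured in simulations at each λ window. The λ schedule and the values in the example are made up for illustration.

```python
def thermodynamic_integration(lambdas, mean_dudl):
    """Free-energy difference Delta F = integral_0^1 <dU/dlambda>_lambda dlambda.

    lambdas: increasing coupling values, typically from 0 to 1.
    mean_dudl: ensemble averages <dU/dlambda> measured at each lambda.
    Uses the trapezoidal rule, which also handles non-uniform lambda grids.
    """
    if len(lambdas) != len(mean_dudl) or len(lambdas) < 2:
        raise ValueError("need matching sequences with at least two points")
    delta_f = 0.0
    for i in range(1, len(lambdas)):
        width = lambdas[i] - lambdas[i - 1]
        delta_f += 0.5 * width * (mean_dudl[i] + mean_dudl[i - 1])
    return delta_f


# Illustrative data with <dU/dlambda> = 2 + 4*lambda (exact integral: 4).
print(thermodynamic_integration([0.0, 0.25, 0.5, 1.0], [2.0, 3.0, 4.0, 6.0]))  # 4.0
```

Windows are often placed more densely where ⟨∂U/∂λ⟩ varies sharply, which is why the sketch accepts a non-uniform grid.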

Open-vocabulary 3D object detection (OV3D) allows precise and extensible object recognition, which is crucial for adapting to the diverse environments encountered in assistive robotics. This paper presents OpenNav, a zero-shot 3D object detection pipeline based on RGB-D images for smart wheelchairs. Our pipeline integrates an open-vocabulary 2D object detector with a mask generator for semantic segmentation, followed by depth isolation and point cloud construction to create 3D bounding boxes. The smart wheelchair exploits these 3D bounding boxes to identify potential targets and navigate safely. We demonstrate OpenNav's performance through experiments on the Replica dataset and report preliminary results with a real wheelchair. OpenNav significantly improves on the state of the art on the Replica dataset at mAP25 (+9 pts) and mAP50 (+5 pts), with a marginal improvement at mAP. The code is publicly available at https://github.com/EasyWalk-PRIN/OpenNav.
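The mask-to-box stage described above can be illustrated in three steps. First, masked depth pixels are back-projected through a pinhole camera model. Second, points far from the object's median depth are discarded. Third, an axis-aligned 3D box is fitted. The IoU function shows what thresholds such as mAP25 (IoU ≥ 0.25) compare. The median-band form of "depth isolation", the intrinsics, and all names are assumptions rather than OpenNav's actual code.

```python
import statistics


def mask_to_points(depth, mask, fx, fy, cx, cy):
    """Back-project masked depth pixels to 3D camera coordinates (pinhole model).

    depth: 2D list of depths in metres (0 means no reading).
    mask: 2D list of booleans from the 2D segmentation mask.
    """
    points = []
    for v, (depth_row, mask_row) in enumerate(zip(depth, mask)):
        for u, (z, inside) in enumerate(zip(depth_row, mask_row)):
            if inside and z > 0:
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def isolate_depth(points, band=0.3):
    """Hypothetical depth isolation: keep points within `band` m of the median depth."""
    med = statistics.median(p[2] for p in points)
    return [p for p in points if abs(p[2] - med) <= band]


def aabb(points):
    """Axis-aligned 3D bounding box as (min_corner, max_corner)."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def _volume(lo, hi):
    return (hi[0] - lo[0]) * (hi[1] - lo[1]) * (hi[2] - lo[2])


def iou_3d(box_a, box_b):
    """Intersection over union of two axis-aligned 3D boxes."""
    (a_min, a_max), (b_min, b_max) = box_a, box_b
    inter = 1.0
    for lo_a, hi_a, lo_b, hi_b in zip(a_min, a_max, b_min, b_max):
        overlap = min(hi_a, hi_b) - max(lo_a, lo_b)
        if overlap <= 0:
            return 0.0
        inter *= overlap
    return inter / (_volume(a_min, a_max) + _volume(b_min, b_max) - inter)


# Toy 2x2 depth image: one masked pixel belongs to the background (5 m away).
pts = mask_to_points([[1.0, 1.0], [1.0, 5.0]], [[True, True], [True, True]],
                     fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(aabb(isolate_depth(pts)))
```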

Understanding the origins of complexity is a fundamental challenge with implications for biological and technological systems. Network theory has emerged as a powerful tool to model complex systems. Networks are an intuitive framework to represent inter-dependencies among many system components, facilitating the study of both local and global properties. However, it is unclear whether we can define a universal theoretical framework for evolving networks. While basic growth mechanisms, such as preferential attachment, recapitulate common properties like the power-law degree distribution, they fall short in capturing other system-specific properties. Tinkering, on the other hand, has been shown to be very successful in generating modular or nested structures "for free", highlighting the role of internal, non-adaptive mechanisms in the evolution of complexity. Network extensions such as hypergraphs have recently been developed to integrate exogenous factors into evolutionary models, since pairwise interactions are insufficient to capture environmentally mediated species associations. As we confront global societal and climatic challenges, the study of networks and hypergraphs provides valuable insights, emphasizing the importance of scientific exploration in understanding and managing complexity.
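Preferential attachment, cited above as a basic growth mechanism, can be sketched in a few lines. The example below is the textbook Barabasi-Albert-style rule and is shown only to make the mechanism concrete. The clique seed, the parameter names, and the stub-list sampling trick are our choices.

```python
import random


def preferential_attachment(n, m, seed=None):
    """Grow a network by linear preferential attachment (Barabasi-Albert style).

    Starts from a clique of m + 1 nodes; every new node links to m distinct
    existing nodes chosen with probability proportional to their degree.
    Returns the edge list.
    """
    if m < 1 or n <= m:
        raise ValueError("need n > m >= 1")
    rng = random.Random(seed)
    edges = [(i, j) for i in range(m + 1) for j in range(i + 1, m + 1)]
    # Each node appears in `stubs` once per incident edge, so sampling
    # uniformly from this list is degree-proportional sampling.
    stubs = [v for edge in edges for v in edge]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(stubs))
        for t in targets:
            edges.append((new, t))
            stubs.extend((new, t))
    return edges


edges = preferential_attachment(100, 2, seed=1)
print(len(edges))  # 197 = 3 clique edges + 2 * 97 new edges
```

For large n, the degree distribution of this model approaches a power law P(k) ∝ k^-3. As the abstract notes, this mechanism alone does not reproduce system-specific features such as modularity.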

We present a lattice model for polymer solutions that explicitly incorporates interactions with a bath of solvent and cosolvent molecules. By exploiting the well-known analogy between polymer systems and the O(n)-vector spin model in the limit n→0, we derive an exact field-theoretic expression for the partition function of the system. The latter is then evaluated at the saddle point, providing a mean-field estimate of the free energy. The resulting expression, which is of the Flory-Huggins type, is then used to analyze the system's stability with respect to phase separation, complemented by a numerical approach based on convex hull evaluation. We demonstrate that this simple lattice model can effectively explain the behavior of a variety of seemingly unrelated polymer systems, which in the past have been investigated predominantly through numerical simulations. This includes both single-chain and multi-chain solutions. Our findings emphasize the fundamental, mutually competing roles of solvent and cosolvent in polymer systems.
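The paper's free energy involves polymer, solvent and cosolvent. A simpler illustration of the convex-hull stability test it mentions uses the textbook binary Flory-Huggins free energy of mixing per lattice site (in units of k_BT). Compositions lying above its lower convex hull are unstable or metastable toward phase separation. The binary reduction, the chain length N and the χ values are assumptions for illustration, not the paper's model.

```python
import math


def flory_huggins(phi, n_chain, chi):
    """Binary Flory-Huggins free energy of mixing per site, in units of kT."""
    return (phi / n_chain * math.log(phi)
            + (1.0 - phi) * math.log(1.0 - phi)
            + chi * phi * (1.0 - phi))


def lower_hull(points):
    """Lower convex hull of points already sorted by x (Andrew's monotone chain)."""
    hull = []
    for p in points:
        # pop the last hull point while it does not make a strict left turn
        while len(hull) >= 2:
            (ox, oy), (ax, ay) = hull[-2], hull[-1]
            if (ax - ox) * (p[1] - oy) - (ay - oy) * (p[0] - ox) > 0:
                break
            hull.pop()
        hull.append(p)
    return hull


def unstable_compositions(n_chain, chi, grid=200):
    """Grid compositions phi whose free energy lies above the lower convex hull."""
    phis = [i / grid for i in range(1, grid)]  # skip 0 and 1 (log singularities)
    points = [(phi, flory_huggins(phi, n_chain, chi)) for phi in phis]
    on_hull = {x for x, _ in lower_hull(points)}
    return [phi for phi in phis if phi not in on_hull]


print(unstable_compositions(1, 0.5))          # []: fully miscible
print(0.5 in unstable_compositions(1, 3.0))   # True: two-phase region
```

For N = 1 the mean-field critical point is χ_c = 2, so χ = 0.5 gives a convex free energy and χ = 3 gives a double well. With a cosolvent, the same hull construction would be carried out over a two-dimensional composition triangle.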

Complex networks usually exhibit a rich architecture organized over multiple intertwined scales. Information pathways are expected to pervade these scales, reflecting structural insights that are not manifest from analyses of the network topology. Moreover, small-world effects correlate with the different network hierarchies, complicating the identification of coexisting mesoscopic structures and functional cores. To shed further light on these issues, we present a communicability analysis of effective information pathways throughout complex networks based on information diffusion. We employ a variety of new theoretical techniques that allow us to: (i) establish a theoretical framework to quantify the probability of information diffusion among nodes, (ii) identify critical scales and structures of complex networks regardless of their intrinsic properties, and (iii) demonstrate their dynamical relevance in synchronization phenomena. By combining these ideas, we show how the information flow on complex networks unravels different resolution scales. Using computational techniques, we focus on entropic transitions, uncovering a generic mesoscale object, the information core, which controls information processing in complex networks. Altogether, this study introduces new theoretical techniques that pave the way for future renormalization group approaches based on diffusion distances.
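Information diffusion on a network is commonly modelled by the heat equation dx/dt = -Lx on the graph Laplacian L. Its solution x(τ) = exp(-τL)x(0) underlies diffusion-distance approaches of the kind mentioned above. The following is a minimal pure-Python sketch that integrates this equation with explicit Euler steps. The modelling choice, the step count and the function names are assumptions. For large graphs, an eigendecomposition or a dedicated matrix-exponential routine would be preferable.

```python
def laplacian(n, edges):
    """Combinatorial graph Laplacian L = D - A as a dense list of lists."""
    lap = [[0.0] * n for _ in range(n)]
    for a, b in edges:
        lap[a][b] -= 1.0
        lap[b][a] -= 1.0
        lap[a][a] += 1.0
        lap[b][b] += 1.0
    return lap


def diffuse(lap, x0, tau, steps=1000):
    """Approximate x(tau) = exp(-tau L) x0 with explicit Euler steps of dx/dt = -L x."""
    n = len(x0)
    dt = tau / steps
    max_degree = max(lap[i][i] for i in range(n))
    # Euler is stable for dt < 2 / lambda_max, and lambda_max <= 2 * max_degree
    if dt * max_degree >= 1.0:
        raise ValueError("time step too large; increase steps")
    x = [float(v) for v in x0]
    for _ in range(steps):
        lx = [sum(lap[i][j] * x[j] for j in range(n)) for i in range(n)]
        x = [x[i] - dt * lx[i] for i in range(n)]
    return x


# Information injected at one end of a 4-node path graph.
path = laplacian(4, [(0, 1), (1, 2), (2, 3)])
print(diffuse(path, [1.0, 0.0, 0.0, 0.0], tau=0.5))   # still concentrated near node 0
print(diffuse(path, [1.0, 0.0, 0.0, 0.0], tau=50.0))  # close to uniform 0.25
```

Because the rows and columns of L sum to zero, the total amount of "information" is conserved while it spreads. The time τ at which a region becomes well mixed gives the notion of scale.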

26 Feb 2025

Charged colloids can form ordered structures such as Wigner crystals or glasses at very low concentrations due to long-range electrostatic repulsion. Here, we combine small-angle X-ray scattering (SAXS) and optical experiments with simulations to investigate the phase behavior of charged rodlike colloids across a wide range of salt concentrations and packing fractions. At ultralow ionic strength and packing fractions, we reveal, both experimentally and numerically, a direct transition from a nematic to a crystalline smectic-B phase, previously identified as a glass state. This transition, which bypasses the intermediate smectic-A phase, results from minimizing Coulomb repulsion and maximizing entropic gains due to fluctuations in the crystalline structure. This demonstrates how long-range electrostatic repulsion significantly alters the phase behavior of rod-shaped particles and highlights its key role in driving the self-organization of anisotropic particles.
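The range of the electrostatic repulsion is set by the Debye screening length, which grows as the salt concentration drops. This is why ultralow ionic strength produces the long-range effects described above. The sketch uses the standard approximation λ_D ≈ 0.304/√I nm for a 1:1 electrolyte in water at 25 °C (I in mol/L). It also uses a point-particle screened-Coulomb (Yukawa) pair energy. Treating the interaction between rods this way is a deliberate simplification, the prefactor is left as a free parameter, and this is not the paper's simulation model.

```python
import math


def debye_length_nm(ionic_strength_molar):
    """Debye length in nm for a 1:1 electrolyte in water at 25 degC (0.304 / sqrt(I))."""
    if ionic_strength_molar <= 0:
        raise ValueError("ionic strength must be positive")
    return 0.304 / math.sqrt(ionic_strength_molar)


def yukawa_energy(r, prefactor, debye_length):
    """Screened-Coulomb (Yukawa) pair energy U(r) = A * exp(-r / lambda_D) / r."""
    return prefactor * math.exp(-r / debye_length) / r


# Screening length from physiological-like salt down to near-deionized conditions.
for ionic_strength in (0.1, 1e-3, 1e-5):
    print(ionic_strength, round(debye_length_nm(ionic_strength), 2))
```

Going from 0.1 M to 10 µM salt increases the screening length by a factor of 100, from about 1 nm to about 96 nm. The repulsion then extends over distances comparable to the interparticle spacing at low packing fractions.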

Quantum computing promises to solve difficult optimization problems in chemistry, physics and mathematics more efficiently than classical computers, but this requires fault-tolerant quantum computers with millions of qubits. To overcome the errors introduced by today's quantum computers, hybrid algorithms combining classical and quantum computers are used. In this paper we tackle the multiple query optimization (MQO) problem, an important NP-hard problem in the area of data-intensive applications. We propose a novel hybrid classical-quantum algorithm to solve MQO on a gate-based quantum computer. We perform a detailed experimental evaluation of our algorithm and compare its performance against a competing approach that employs a quantum annealer, another type of quantum computer. Our experimental results show that our algorithm can currently handle only small problem sizes, because gate-based quantum computers offer fewer qubits than quantum annealers. However, our algorithm achieves a qubit efficiency of close to 99%, almost a factor of 2 higher than the state-of-the-art implementation. Finally, we analyze how our algorithm scales with larger problem sizes and conclude that our approach shows promising results for near-term quantum computers.
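To make the problem statement concrete: MQO selects one execution plan per query so that the total cost is minimal. The total cost is the sum of the selected plan costs minus the savings earned when selected plans share work. The exhaustive classical search below only illustrates the objective that hybrid and annealing approaches encode, usually in a quadratic binary form. It is not the paper's algorithm, and the data layout (plan-cost lists plus a savings dictionary keyed by plan pairs) is an assumption.

```python
from itertools import product


def mqo_cost(selection, plan_costs, savings):
    """Total cost of choosing plan selection[q] for every query q.

    plan_costs[q][p]: execution cost of plan p for query q.
    savings[((q1, p1), (q2, p2))]: cost saved when both plans are selected,
    e.g. because they share an intermediate result; keys use q1 < q2.
    """
    total = sum(plan_costs[q][p] for q, p in enumerate(selection))
    chosen = list(enumerate(selection))
    for i in range(len(chosen)):
        for j in range(i + 1, len(chosen)):
            total -= savings.get((chosen[i], chosen[j]), 0.0)
    return total


def solve_mqo_brute_force(plan_costs, savings):
    """Exhaustive search over all plan combinations (exponential in #queries)."""
    options = [range(len(plans)) for plans in plan_costs]
    best = min(product(*options), key=lambda sel: mqo_cost(sel, plan_costs, savings))
    return list(best), mqo_cost(best, plan_costs, savings)


# Two queries with two plans each; plans (0, 1) and (1, 1) share work worth 6.
plan_costs = [[10, 12], [8, 9]]
savings = {((0, 1), (1, 1)): 6}
print(solve_mqo_brute_force(plan_costs, savings))  # ([1, 1], 15)
```

The individually cheapest plans cost 18 in total, while the jointly optimal choice costs 15. With P plans per query and Q queries there are P^Q selections, which is what makes larger instances hard.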

22 Jun 2021

The evolution of the biosphere unfolds as a luxuriant generative process of new living forms and functions. Organisms adapt to their environment and exploit the novel opportunities created in this continuous blooming dynamics. Affordances play a fundamental role in the evolution of the biosphere, for organisms can exploit them for new morphological and behavioral adaptations achieved by heritable variations and selection. In this way, the opportunities offered by affordances are actualized as ever-novel adaptations. In this paper we maintain that affordances elude a formalization that relies on set theory: we argue that it is not possible to apply set theory to affordances, and therefore we cannot devise a set-based mathematical theory of the diachronic evolution of the biosphere.
