Forschungszentrum Jülich GmbH

01 Oct 2025

University of Michigan; RIKEN; Technical University of Munich; Chalmers University of Technology; Forschungszentrum Jülich GmbH; National Institute of Advanced Industrial Science and Technology (AIST); IBM Research Europe; Unitary Fund; Plaksha University; Aberystwyth University; Zurich Instruments; Rakuten; University of Gdańsk; IBM, T.J. Watson Research Center; Université de Sherbrooke

QuTiP, the Quantum Toolbox in Python, has been at the forefront of open-source quantum software for the past 13 years. It is used as a research, teaching, and industrial tool, and has been downloaded millions of times by users around the world. Here we introduce the latest developments in QuTiP v5, which are set to have a large impact on the future of QuTiP and enable it to be a modern, continuously developed and popular tool for another decade and more. We summarize the code design and fundamental data layer changes as well as efficiency improvements, new solvers, applications to quantum circuits with QuTiP-QIP, and new quantum control tools with QuTiP-QOC. Additional flexibility in the data layer underlying all "quantum objects" in QuTiP allows us to harness the power of state-of-the-art data formats and packages like JAX, CuPy, and more. We explain these new features with a series of both well-known and new examples. The code for these examples is available in a static form on GitHub and as continuously updated and documented notebooks in the qutip-tutorials package.

28 Apr 2025

ETH Zurich; Harvard University; National University of Singapore; University of Oxford; Indiana University; Scuola Normale Superiore; University of British Columbia; Austrian Academy of Sciences; University of Exeter; University of Rochester; University of Luxembourg; University of Vienna; University of Potsdam; TU Wien; University College Dublin; Universidad de La Laguna; University of York; University of Maryland Baltimore County; Forschungszentrum Jülich GmbH; Queen's University Belfast; Università di Firenze; The University of Campinas; OIST Graduate University; Istituto Nanoscienze CNR; Université de Lorraine; Università degli Studi di Palermo

The last two decades have seen quantum thermodynamics become a well-established field of research in its own right. In that time, it has demonstrated a remarkably broad applicability, ranging from providing foundational advances in the understanding of how thermodynamic principles apply at the nano-scale and in the presence of quantum coherence, to providing a guiding framework for the development of efficient quantum devices. Exquisite levels of control have allowed state-of-the-art experimental platforms to explore energetics and thermodynamics at the smallest scales, which has in turn helped to drive theoretical advances. This Roadmap provides an overview of the recent developments across many of the field's sub-disciplines, assessing the key challenges and future prospects, and providing a guide for its near-term progress.

Researchers from TU Darmstadt, ETH Zurich, and Forschungszentrum Jülich developed a methodology to denoise empirical performance models by integrating noise-resilient effort metrics as dynamic priors, significantly enhancing model accuracy and robustness while reducing measurement overhead. The approach was implemented within the Extra-P framework, demonstrating improved predictions on synthetic benchmarks and real-world mini-applications.

09 Oct 2025

In this paper, we provide an analytical study of the transmission eigenvalue problem in the context of biharmonic scattering with a penetrable obstacle. We will assume that the underlying physical model is given by an infinite elastic two-dimensional Kirchhoff-Love plate in $\mathbb{R}^2$, where the plate's thickness is small relative to the wavelength of the incident wave. In previous studies, transmission eigenvalues have been studied for acoustic scattering, whereas in this case, we consider biharmonic scattering. We prove the existence and discreteness of the transmission eigenvalues as well as study the dependence on the refractive index. We are able to prove the monotonicity of the first transmission eigenvalue with respect to the refractive index. Lastly, we provide numerical experiments to validate the theoretical work.

18 Dec 2024

Rooftop photovoltaic (RTPV) systems are essential for building a decarbonized energy system that, thanks to its decentralized structure, is also more resilient. They are particularly important for Ukraine, where recent conflicts have damaged more than half of its electricity and heat supply capacity. Favorable solar irradiation conditions make Ukraine a strong candidate for large-scale PV deployment, but effective policy requires detailed data on spatial and temporal generation potential. This study fills the data gap by using open-source satellite building footprint data corrected with high-resolution data from eastern Germany. This approach allowed accurate estimates of rooftop area and PV capacity and generation across Ukraine, with simulations revealing a capacity potential of 238.8 GW and a generation potential of 290 TWh/a excluding north-facing roofs. The majority of this potential is located in oblasts (provinces) across the country with large cities such as Donetsk, Dnipro or Kyiv and surroundings. These results, validated against previous studies and available as open data, confirm Ukraine's significant potential for RTPV, supporting both energy resilience and climate goals.
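As a quick plausibility check on the two headline figures, the quoted capacity and generation potentials can be converted into equivalent full-load hours and an average capacity factor. This is a back-of-the-envelope sketch, not part of the study's method; the 8760-hour year and the function names are assumptions made for illustration.

```python
# Figures quoted in the abstract above.
CAPACITY_GW = 238.8       # rooftop PV capacity potential
GENERATION_TWH = 290.0    # annual generation potential
HOURS_PER_YEAR = 8760     # assumption: non-leap year


def full_load_hours(generation_twh: float, capacity_gw: float) -> float:
    """Equivalent full-load hours per year (annual energy / rated power)."""
    # TWh -> GWh, then GWh / GW = h
    return generation_twh * 1e3 / capacity_gw


def capacity_factor(generation_twh: float, capacity_gw: float,
                    hours: float = HOURS_PER_YEAR) -> float:
    """Average output as a fraction of rated capacity."""
    return full_load_hours(generation_twh, capacity_gw) / hours


if __name__ == "__main__":
    flh = full_load_hours(GENERATION_TWH, CAPACITY_GW)
    cf = capacity_factor(GENERATION_TWH, CAPACITY_GW)
    print(f"{flh:.0f} full-load hours per year, capacity factor {cf:.1%}")
```

The two figures imply roughly 1200 full-load hours per year, that is, an average capacity factor of about 14 percent.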

In the acquisition of Magnetic Resonance (MR) images, shorter scan times lead to higher image noise. Therefore, automatic image denoising using deep learning methods is of high interest. MR images containing line-like structures such as roots or vessels have special characteristics, as they display connected structures and yield sparse information. For this kind of data, it is important to consider voxel neighborhoods when training a denoising network. In this paper, we translate the Perceptual Loss to 3D data by comparing feature maps of untrained networks in the loss function, as done previously for 2D data. We tested the performance of untrained Perceptual Loss (uPL) on 3D image denoising of MR images displaying brain vessels (MR angiograms, MRA) and images of plant roots in soil. We investigate the impact of various uPL characteristics such as weight initialization, network depth, kernel size, and pooling operations on the results. We tested the performance of the uPL on four Rician noise levels using evaluation metrics such as the Structural Similarity Index Metric (SSIM). We observe that our uPL outperforms conventional loss functions such as the L1 loss or a loss based on the SSIM. The uPL network's initialization is not important, while network depth and pooling operations impact denoising performance. For example, for both datasets a network with five convolutional layers led to the best performance, while a network with more layers led to a performance drop. We also find that small uPL networks led to results better than or comparable to those of large networks such as VGG. We observe superior performance of our loss for both datasets, all noise levels, and three network architectures. In conclusion, for images containing line-like structures, uPL is an alternative to other loss functions for 3D image denoising.
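For readers unfamiliar with the Rician noise mentioned above, the following minimal sketch shows how such noise is commonly simulated on magnitude data: independent Gaussian noise is added to the real and imaginary channels and the magnitude is taken. The function name and the flat list of voxel values are assumptions for illustration; the abstract does not describe the authors' noise pipeline.

```python
import math
import random


def add_rician_noise(magnitudes, sigma, rng=None):
    """Return noisy magnitudes following a Rician distribution.

    magnitudes: iterable of noise-free, non-negative signal values
    sigma:      standard deviation of the Gaussian noise in each channel
    rng:        optional random.Random instance for reproducibility
    """
    rng = rng or random.Random()
    noisy = []
    for s in magnitudes:
        real = s + rng.gauss(0.0, sigma)   # noisy real channel
        imag = rng.gauss(0.0, sigma)       # noisy imaginary channel
        noisy.append(math.hypot(real, imag))
    return noisy


if __name__ == "__main__":
    clean = [0.0, 0.2, 1.0]
    print(add_rician_noise(clean, sigma=0.1, rng=random.Random(0)))
```

Unlike additive Gaussian noise, the result is never negative, and background voxels with zero signal acquire a positive mean of about 1.25 times sigma.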

Large-scale land cover maps generated using deep learning play a critical role across a wide range of Earth science applications. Open in-situ datasets from principled land cover surveys offer a scalable alternative to manual annotation for training such models. However, their sparse spatial coverage often leads to fragmented and noisy predictions when used with existing deep learning-based land cover mapping approaches. A promising direction to address this issue is object-based classification, which assigns labels to semantically coherent image regions rather than individual pixels, thereby imposing a minimum mapping unit. Despite this potential, object-based methods remain underexplored in deep learning-based land cover mapping pipelines, especially in the context of medium-resolution imagery and sparse supervision. To address this gap, we propose LC-SLab, the first deep learning framework for systematically exploring object-based deep learning methods for large-scale land cover classification under sparse supervision. LC-SLab supports both input-level aggregation via graph neural networks, and output-level aggregation by postprocessing results from established semantic segmentation models. Additionally, we incorporate features from a large pre-trained network to improve performance on small datasets. We evaluate the framework on annual Sentinel-2 composites with sparse LUCAS labels, focusing on the tradeoff between accuracy and fragmentation, as well as sensitivity to dataset size. Our results show that object-based methods can match or exceed the accuracy of common pixel-wise models while producing substantially more coherent maps. Input-level aggregation proves more robust on smaller datasets, whereas output-level aggregation performs best with more data. Several configurations of LC-SLab also outperform existing land cover products, highlighting the framework's practical utility.
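Output-level aggregation, as described above, can be illustrated with a simple majority vote: each image segment receives the label predicted most often among its pixels. This is a minimal sketch under stated assumptions, not LC-SLab's implementation; the function name and the flat-list inputs are hypothetical.

```python
from collections import Counter, defaultdict


def aggregate_by_segment(pixel_labels, segment_ids):
    """Assign each pixel the majority label of the segment it belongs to.

    pixel_labels: per-pixel class predictions from a segmentation model
    segment_ids:  per-pixel id of the image region containing the pixel
    """
    if len(pixel_labels) != len(segment_ids):
        raise ValueError("pixel_labels and segment_ids must have equal length")

    # Count the predicted labels inside every segment.
    votes = defaultdict(Counter)
    for label, seg in zip(pixel_labels, segment_ids):
        votes[seg][label] += 1

    # Pick the most frequent label per segment and broadcast it back.
    winner = {seg: counts.most_common(1)[0][0] for seg, counts in votes.items()}
    return [winner[seg] for seg in segment_ids]


if __name__ == "__main__":
    labels = ["forest", "forest", "crop", "water", "water", "water"]
    segments = [0, 0, 0, 1, 1, 1]
    print(aggregate_by_segment(labels, segments))
```

Because every pixel in a segment ends up with the same label, isolated misclassified pixels are removed, which is the source of the reduced fragmentation.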

23 Oct 2024

Many biological processes occur on time scales longer than those accessible to molecular dynamics simulations. Identifying collective variables (CVs) and introducing an external potential to accelerate them is a popular approach to address this problem. In particular, PLUMED is a community-developed library that implements several methods for CV-based enhanced sampling. This chapter discusses two recent developments that have gained popularity in recent years. The first is the On-the-fly Probability Enhanced Sampling (OPES) method as a biasing scheme. This provides a unified approach to enhanced sampling able to cover many different scenarios: from free energy convergence to the discovery of metastable states, from rate calculation to generalized ensemble simulation. The second development concerns the use of machine learning (ML) approaches to determine CVs by learning the relevant variables directly from simulation data. The construction of these variables is facilitated by the mlcolvar library, which allows them to be optimized in Python and then used to enhance sampling thanks to a native interface inside PLUMED. For each of these methods, in addition to a brief introduction, we provide guidelines, practical suggestions and point to examples from the literature to facilitate their use in the study of the process of interest.

14 Sep 2025

Luminescence imaging is invaluable for studying biological and material systems, particularly when advanced protocols that exploit temporal dynamics are employed. However, implementing such protocols often requires custom instrumentation, either modified commercial systems or fully bespoke setups, which poses a barrier for researchers without expertise in optics, electronics, or software. To address this, we present a versatile macroscopic fluorescence imaging system capable of supporting a wide range of protocols, and provide detailed build instructions along with open-source software to enable replication with minimal prior experience. We demonstrate its broad utility through applications to plants, reversibly photoswitchable fluorescent proteins, and optoelectronic devices.

Spin qubit shuttling via moving conveyor-mode quantum dots in Si/SiGe offers a promising route to scalable miniaturized quantum computing. Recent modeling of dephasing via valley degrees of freedom and well disorder dictates a slow shuttling speed, which seems to limit errors to above correction thresholds if not mitigated. We increase the precision of this prediction, showing that 10 μm shuttling at constant speed typically results in O(1) error, using fast, automatically differentiable numerics and including improved disorder modeling and potential noise ranges. However, remarkably, we show that these errors can be brought to well below fault-tolerant thresholds using trajectory shaping with a very simple parametrization of as few as 4 Fourier components, well within the means for experimental in-situ realization, and without the need for targeting or knowing the location of valley near-degeneracies.

Twisted bilayer graphene (tBLG) near the magic angle is a unique platform where the combination of topology and strong correlations gives rise to exotic electronic phases. These phases are gate-tunable and related to the presence of flat electronic bands, isolated by single-particle band gaps. This enables gate-controlled charge confinement, essential for the operation of single-electron transistors (SETs), and allows exploration of the interplay of confinement, electron interactions, band renormalisation and the moiré superlattice, potentially revealing key paradigms of strong correlations. Here, we present gate-defined SETs in near-magic-angle tBLG with well-tunable Coulomb blockade resonances. These SETs allow the study of magnetic field-induced quantum oscillations in the density of states of the source-drain reservoirs, providing insight into gate-tunable Fermi surfaces of tBLG. Comparison with tight-binding calculations highlights the importance of displacement-field-induced band renormalisation, which is crucial for future advanced gate-tunable quantum devices and circuits in tBLG, including quantum dots and Josephson junction arrays.

28 Aug 2025

In this paper, we study the so-called clamped transmission eigenvalue problem. This is a new transmission eigenvalue problem that is derived from the scattering of an impenetrable clamped obstacle in a thin elastic plate. The scattering problem is modeled by a biharmonic wave operator given by the Kirchhoff-Love infinite plate problem in the frequency domain. These scattering problems have not been studied to the extent of other models. Unlike other transmission eigenvalue problems, the problem studied here is a system of homogeneous PDEs defined in all of $\mathbb{R}^2$. This provides unique analytical and computational difficulties when studying the clamped transmission eigenvalue problem. We are able to prove that there exist infinitely many real clamped transmission eigenvalues. This is done by studying the equivalent variational formulation. We also investigate the relationship of the clamped transmission eigenvalues to the Dirichlet and Neumann eigenvalues of the negative Laplacian for the bounded scattering obstacle.

We present a perspective on molecular machine learning (ML) in the field of chemical process engineering. Recently, molecular ML has demonstrated great potential in (i) providing highly accurate predictions for properties of pure components and their mixtures, and (ii) exploring the chemical space for new molecular structures. We review current state-of-the-art molecular ML models and discuss research directions that promise further advancements. This includes ML methods, such as graph neural networks and transformers, which can be further advanced through the incorporation of physicochemical knowledge in a hybrid or physics-informed fashion. Then, we consider leveraging molecular ML at the chemical process scale, which is highly desirable yet rather unexplored. We discuss how molecular ML can be integrated into process design and optimization formulations, promising to accelerate the identification of novel molecules and processes. To this end, it will be essential to create molecule and process design benchmarks and practically validate proposed candidates, possibly in collaboration with the chemical industry.

11 Jul 2025

The Quantum Approximate Optimization Algorithm (QAOA) has been proposed as a method to obtain approximate solutions for combinatorial optimization tasks. In this work, we study the underlying algebraic properties of three QAOA ansätze for the maximum-cut (maxcut) problem on connected graphs, while focusing on the generated Lie algebras as well as their invariant subspaces. Specifically, we analyze the standard QAOA ansatz as well as the orbit and the multi-angle ansätze. We are able to fully characterize the Lie algebras of the multi-angle ansatz across arbitrary connected graphs, finding that they only fall into one of just six families. Besides the cycle and the path graphs, the Lie dimensions for every graph are exponentially large in the system size, meaning that multi-angle ansätze are extremely prone to exhibiting barren plateaus. Then, a similar quasi-graph-independent Lie-algebraic characterization beyond the multi-angle ansatz is impeded as the circuit exhibits additional "hidden" symmetries besides those naturally arising from a certain parity-superselection operator and all automorphisms of the considered graph. Disregarding the "hidden" symmetries, we can upper bound the dimensions of the orbit and the standard Lie algebras, and the dimensions of the associated invariant subspaces are determined via explicit character formulas. To finish, we conjecture that (for most graphs) the standard Lie algebras have only components that are either exponential or that grow, at most, polynomially with the system size. This would imply that the QAOA is either prone to barren plateaus, or classically simulable. More generally, our work provides a symmetry framework and tools to analyze any desired variational quantum algorithm.

04 Oct 2025

Silicon quantum chips offer a promising path toward scalable, fault-tolerant quantum computing, with the potential to host millions of qubits. However, scaling up dense quantum-dot arrays and enabling qubit interconnections through shuttling are hindered by uncontrolled lateral variations of the valley splitting energy $E_{VS}$. We map $E_{VS}$ across a 40 nm × 400 nm region of a $^{28}$Si/Si$_{0.7}$Ge$_{0.3}$ shuttle device and analyze the spin coherence of a single electron spin transported by conveyor-belt shuttling. We observe that $E_{VS}$ varies over a wide range from 1.5 μeV to 200 μeV and is dominated by SiGe alloy disorder. In regions of low $E_{VS}$ and at spin-valley resonances, spin coherence is reduced and its dependence on shuttle velocity matches predictions. Rapid and frequent traversal of low-$E_{VS}$ regions induces a regime of enhanced spin coherence explained by motional narrowing. By selecting shuttle trajectories that avoid problematic areas on the $E_{VS}$ map, we achieve transport over tens of microns with coherence limited only by the coupling to a static electron spin entangled with the mobile qubit. Our results provide experimental confirmation of the theory of spin-decoherence of mobile electron spin-qubits and present practical strategies to integrate conveyor-mode qubit shuttling into silicon quantum chips.

02 Oct 2024

We present one of the first comprehensive field datasets capturing dense pedestrian dynamics across multiple scales, ranging from macroscopic crowd flows over distances of several hundred meters to microscopic individual trajectories, including approximately 7,000 recorded trajectories. The dataset also includes a sample of GPS traces, statistics on contact and push interactions, as well as a catalog of non-standard crowd phenomena observed in video recordings. Data were collected during the 2022 Festival of Lights in Lyon, France, within the framework of the French-German MADRAS project, covering pedestrian densities up to 4 individuals per square meter.

05 May 2025

Quantum circuit cutting refers to a series of techniques that allow one to partition a quantum computation on a large quantum computer into several quantum computations on smaller devices. This usually comes at the price of a sampling overhead, that is quantified by the 1-norm of the associated decomposition. The applicability of these techniques relies on the possibility of finding decompositions of the ideal, global unitaries into quantum operations that can be simulated onto each sub-register, which should ideally minimize the 1-norm. In this work, we show how these decompositions can be obtained diagrammatically using ZX-calculus expanding on the work of Ufrecht et al. [arXiv:2302.00387]. The central idea of our work is that since in ZX-calculus only connectivity matters, it should be possible to cut wires in ZX-diagrams by inserting known decompositions of the identity in standard quantum circuits. We show how, using this basic idea, many of the gate decompositions known in the literature can be re-interpreted as an instance of wire cuts in ZX-diagrams. Furthermore, we obtain improved decompositions for multi-qubit controlled-Z (MCZ) gates with 1-norm equal to 3 for any number of qubits and any partition, which we argue to be optimal. Our work gives new ways of thinking about circuit cutting that can be particularly valuable for finding decompositions of large unitary gates. Besides, it sheds light on the question of why exploiting classical communication decreases the 1-norm of a wire cut but does not do so for certain gate decompositions. In particular, using wire cuts with classical communication, we obtain gate decompositions that do not require classical communication.
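The "decomposition of the identity" idea behind wire cuts can be checked numerically for a single qubit. The sketch below verifies the textbook Pauli-basis identity rho = (1/2) * sum over P in {I, X, Y, Z} of Tr(P rho) P, and computes the 1-norm of a coefficient list together with the commonly quoted rule of thumb that the number of samples grows with the square of that norm per cut. It does not reproduce the ZX-calculus construction of the paper; all function names are assumptions for illustration, and the eight coefficients of magnitude 1/2 correspond to the standard measure-and-prepare form of this identity.

```python
# Single-qubit Pauli matrices as nested lists.
PAULIS = {
    "I": [[1, 0], [0, 1]],
    "X": [[0, 1], [1, 0]],
    "Y": [[0, -1j], [1j, 0]],
    "Z": [[1, 0], [0, -1]],
}


def trace_product(a, b):
    """Tr(a @ b) for 2x2 matrices."""
    return sum(a[i][k] * b[k][i] for i in range(2) for k in range(2))


def reconstruct(rho):
    """Rebuild rho from its Pauli expectation values."""
    out = [[0j, 0j], [0j, 0j]]
    for p in PAULIS.values():
        coeff = trace_product(p, rho) / 2
        for i in range(2):
            for j in range(2):
                out[i][j] += coeff * p[i][j]
    return out


def one_norm(coefficients):
    """1-norm of a quasi-probability decomposition."""
    return sum(abs(c) for c in coefficients)


def sampling_overhead(kappa, n_cuts=1):
    """Rule-of-thumb multiplicative shot overhead: kappa squared per cut."""
    return kappa ** (2 * n_cuts)


if __name__ == "__main__":
    rho = [[0.75, 0.25 - 0.1j], [0.25 + 0.1j, 0.25]]
    print(reconstruct(rho))
    # Measure-and-prepare form: eight terms with coefficients +/- 1/2.
    kappa = one_norm([0.5, 0.5, 0.5, -0.5, 0.5, -0.5, 0.5, -0.5])
    print(kappa, sampling_overhead(kappa))
```

Under the same rule of thumb, a decomposition with 1-norm 3, as reported above for the MCZ gates, would correspond to an overhead factor of 9 per cut.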

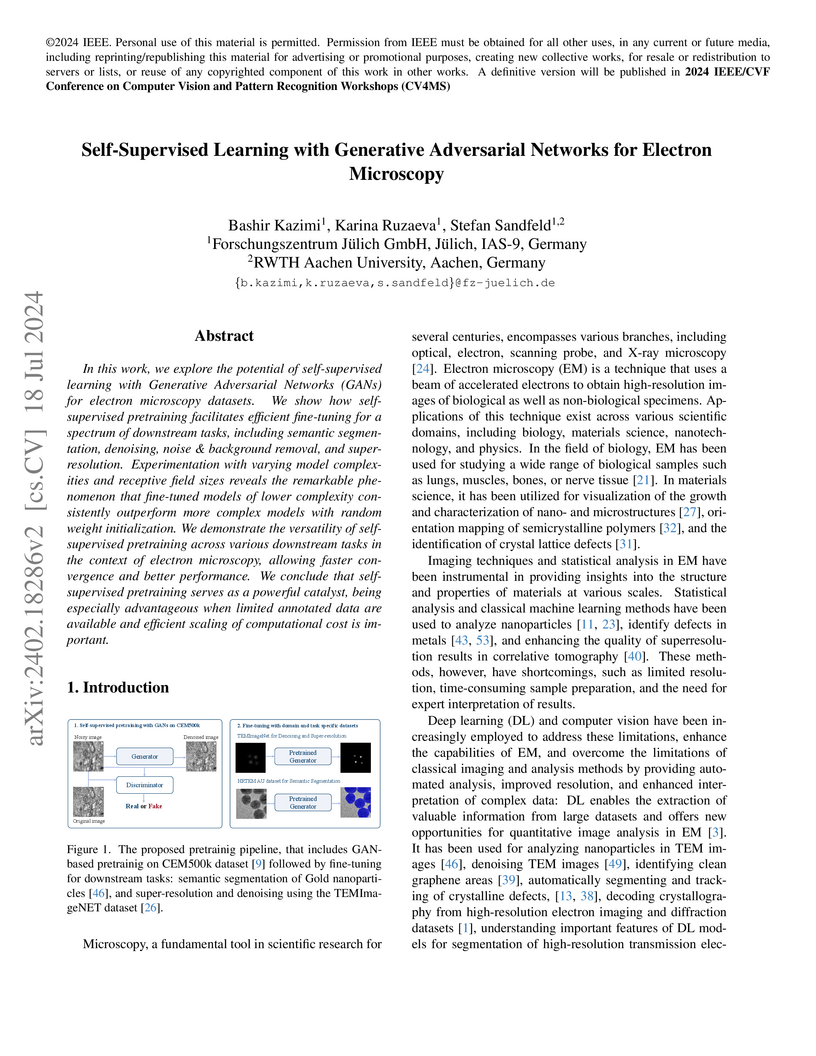

In this work, we explore the potential of self-supervised learning with Generative Adversarial Networks (GANs) for electron microscopy datasets. We show how self-supervised pretraining facilitates efficient fine-tuning for a spectrum of downstream tasks, including semantic segmentation, denoising, noise & background removal, and super-resolution. Experimentation with varying model complexities and receptive field sizes reveals the remarkable phenomenon that fine-tuned models of lower complexity consistently outperform more complex models with random weight initialization. We demonstrate the versatility of self-supervised pretraining across various downstream tasks in the context of electron microscopy, allowing faster convergence and better performance. We conclude that self-supervised pretraining serves as a powerful catalyst, being especially advantageous when limited annotated data are available and efficient scaling of computational cost is important.

06 Jul 2023

Meta-learning of numerical algorithms for a given task consists of the data-driven identification and adaptation of an algorithmic structure and the associated hyperparameters. To limit the complexity of the meta-learning problem, neural architectures with a certain inductive bias towards favorable algorithmic structures can, and should, be used. We generalize our previously introduced Runge-Kutta neural network to a recursively recurrent neural network (R2N2) superstructure for the design of customized iterative algorithms. In contrast to off-the-shelf deep learning approaches, it features a distinct division into modules for generation of information and for the subsequent assembly of this information towards a solution. Local information in the form of a subspace is generated by subordinate, inner, iterations of recurrent function evaluations starting at the current outer iterate. The update to the next outer iterate is computed as a linear combination of these evaluations, reducing the residual in this space, and constitutes the output of the network. We demonstrate that regular training of the weight parameters inside the proposed superstructure on input/output data of various computational problem classes yields iterations similar to Krylov solvers for linear equation systems, Newton-Krylov solvers for nonlinear equation systems, and Runge-Kutta integrators for ordinary differential equations. Due to its modularity, the superstructure can be readily extended with functionalities needed to represent more general classes of iterative algorithms traditionally based on Taylor series expansions.
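The structure described above, inner recurrent function evaluations followed by a linear combination that forms the next outer iterate, is the same pattern found in a classical Runge-Kutta step. The sketch below shows the familiar fourth-order scheme with its fixed textbook weights; in the R2N2 setting such weights would be trained rather than prescribed. This is an illustration of the pattern only, not the authors' network, and the function names are assumptions.

```python
import math


def rk4_step(f, t, y, h):
    """One classical Runge-Kutta step for dy/dt = f(t, y)."""
    # Inner iterations: each evaluation starts from the current outer
    # iterate y and reuses the previous evaluation.
    k1 = f(t, y)
    k2 = f(t + h / 2, y + h / 2 * k1)
    k3 = f(t + h / 2, y + h / 2 * k2)
    k4 = f(t + h, y + h * k3)
    # Outer update: a fixed linear combination of the evaluations.
    return y + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6


def integrate(f, t0, y0, t1, n_steps):
    """Apply n_steps equal RK4 steps from t0 to t1."""
    h = (t1 - t0) / n_steps
    t, y = t0, y0
    for _ in range(n_steps):
        y = rk4_step(f, t, y, h)
        t += h
    return y


if __name__ == "__main__":
    # Test problem: dy/dt = -y with y(0) = 1, exact solution exp(-t).
    approx = integrate(lambda t, y: -y, 0.0, 1.0, 1.0, 100)
    print(approx, math.exp(-1.0))
```

Replacing the hard-coded coefficients with learnable parameters is what turns this fixed scheme into a trainable superstructure of the kind the abstract describes.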

In chemical engineering, process data are expensive to acquire, and complex phenomena are difficult to fully model. We explore the use of physics-informed neural networks (PINNs) for modeling dynamic processes with incomplete mechanistic semi-explicit differential-algebraic equation systems and scarce process data. In particular, we focus on estimating states for which neither direct observational data nor constitutive equations are available. We propose an easy-to-apply heuristic to assess whether estimation of such states may be possible. As numerical examples, we consider a continuously stirred tank reactor and a liquid-liquid separator. We find that PINNs can infer immeasurable states with reasonable accuracy, even if respective constitutive equations are unknown. We thus show that PINNs are capable of modeling processes when relatively few experimental data and only partially known mechanistic descriptions are available, and conclude that they constitute a promising avenue that warrants further investigation.
