German Cancer Research Center

nnInteractive introduces a comprehensive framework for interactive 3D medical image segmentation, designed to overcome limitations of 2D-focused approaches and enhance real-world applicability. It achieves true volumetric consistency, supports diverse 3D interaction types across multiple axes, generalizes across 120+ modalities, and enables radiologists to complete segmentations 72% faster while maintaining expert-level quality.

University College London

University College London University of Oxford

University of Oxford University of Science and Technology of China

University of Science and Technology of China Northwestern University

Northwestern University Rice UniversityUniversity of Colorado

Rice UniversityUniversity of Colorado King’s College LondonUniversity of IowaMayo ClinicKitware Inc.German Cancer Research CenterHeidelberg University HospitalFrederick National Laboratory for Cancer ResearchNational Cancer InstituteInstaDeep LtdMars IncorporatedGuy’s & St. Thomas’ NHS Foundation Trust

King’s College LondonUniversity of IowaMayo ClinicKitware Inc.German Cancer Research CenterHeidelberg University HospitalFrederick National Laboratory for Cancer ResearchNational Cancer InstituteInstaDeep LtdMars IncorporatedGuy’s & St. Thomas’ NHS Foundation TrustArtificial Intelligence (AI) is having a tremendous impact across most areas of science. Applications of AI in healthcare have the potential to improve our ability to detect, diagnose, prognose, and intervene on human disease. For AI models to be used clinically, they need to be made safe, reproducible and robust, and the underlying software framework must be aware of the particularities (e.g. geometry, physiology, physics) of medical data being processed. This work introduces MONAI, a freely available, community-supported, and consortium-led PyTorch-based framework for deep learning in healthcare. MONAI extends PyTorch to support medical data, with a particular focus on imaging, and provide purpose-specific AI model architectures, transformations and utilities that streamline the development and deployment of medical AI models. MONAI follows best practices for software-development, providing an easy-to-use, robust, well-documented, and well-tested software framework. MONAI preserves the simple, additive, and compositional approach of its underlying PyTorch libraries. MONAI is being used by and receiving contributions from research, clinical and industrial teams from around the world, who are pursuing applications spanning nearly every aspect of healthcare.

Researchers from Microsoft and leading medical institutions developed COLIPRI, a 3D vision-language pre-training framework that integrates contrastive learning, radiology report generation, and masked autoencoding to enhance understanding of 3D medical images. The framework significantly improved the clinical accuracy of generated radiology reports and achieved strong performance in global classification and retrieval tasks.

University of Washington

University of Washington Imperial College LondonUniversity of BernTUD Dresden University of TechnologyMayo ClinicUniversity Hospital RWTH AachenMedical University of ViennaUniversity of MilanUniversity Medical Center MainzGerman Cancer Research CenterFred Hutchinson Cancer CenterUniversity of LinzUniversity Hospital HeidelbergKepler University HospitalInternational Agency for Research on CancerUniversity Hospital Schleswig-HolsteinWorld Health OrganizationEuropean Institute of Oncology (IRCCS)

Imperial College LondonUniversity of BernTUD Dresden University of TechnologyMayo ClinicUniversity Hospital RWTH AachenMedical University of ViennaUniversity of MilanUniversity Medical Center MainzGerman Cancer Research CenterFred Hutchinson Cancer CenterUniversity of LinzUniversity Hospital HeidelbergKepler University HospitalInternational Agency for Research on CancerUniversity Hospital Schleswig-HolsteinWorld Health OrganizationEuropean Institute of Oncology (IRCCS)A deep learning framework, EAGLE, was developed to efficiently analyze whole slide pathology images, achieving an average AUROC of 0.742 across 31 tasks while processing images over 99% faster than previous methods by selectively focusing on critical regions.

Since the introduction of TotalSegmentator CT, there is demand for a similar

robust automated MRI segmentation tool that can be applied across all MRI

sequences and anatomic structures. In this retrospective study, a nnU-Net model

(TotalSegmentator) was trained on MRI and CT examinations to segment 80

anatomic structures relevant for use cases such as organ volumetry, disease

characterization, surgical planning and opportunistic screening. Examinations

were randomly sampled from routine clinical studies to represent real-world

examples. Dice scores were calculated between the predicted segmentations and

expert radiologist reference standard segmentations to evaluate model

performance on an internal test set, two external test sets and against two

publicly available models, and TotalSegmentator CT. The model was applied to an

internal dataset containing abdominal MRIs to investigate age-dependent volume

changes. A total of 1143 examinations (616 MRIs, 527 CTs) (median age 61 years,

IQR 50-72) were split into training (n=1088, CT and MRI) and an internal test

set (n=55; only MRI), two external test sets (AMOS, n=20; CHAOS, n=20; only

MRI), and an internal aging-study dataset of 8672 abdominal MRIs (median age 59

years, IQR 45-70) were included. The model showed a Dice Score of 0.839 on the

internal test set and outperformed two other models (Dice Score, 0.862 versus

0.759; and 0.838 versus 0.560; p<.001 for both). The proposed open-source,

easy-to-use model allows for automatic, robust segmentation of 80 structures,

extending the capabilities of TotalSegmentator to MRIs of any sequence. The

ready-to-use online tool is available at this https URL, the

model at this https URL, and the dataset at

this https URL

MedNeXt, developed at the German Cancer Research Center (DKFZ), presents a modernized 3D convolutional network for medical image segmentation that integrates Transformer-inspired design principles. The architecture achieves state-of-the-art performance across diverse medical tasks by introducing techniques like UpKern for effective large kernel initialization and compound scaling of network dimensions.

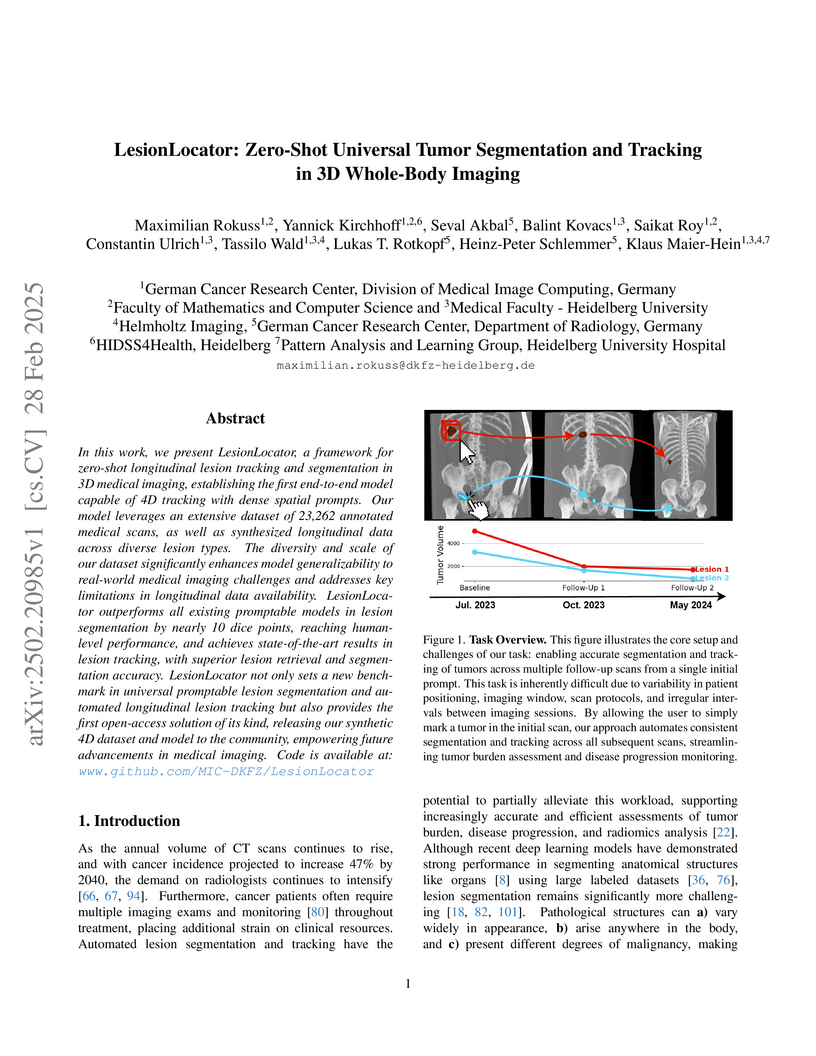

In this work, we present LesionLocator, a framework for zero-shot

longitudinal lesion tracking and segmentation in 3D medical imaging,

establishing the first end-to-end model capable of 4D tracking with dense

spatial prompts. Our model leverages an extensive dataset of 23,262 annotated

medical scans, as well as synthesized longitudinal data across diverse lesion

types. The diversity and scale of our dataset significantly enhances model

generalizability to real-world medical imaging challenges and addresses key

limitations in longitudinal data availability. LesionLocator outperforms all

existing promptable models in lesion segmentation by nearly 10 dice points,

reaching human-level performance, and achieves state-of-the-art results in

lesion tracking, with superior lesion retrieval and segmentation accuracy.

LesionLocator not only sets a new benchmark in universal promptable lesion

segmentation and automated longitudinal lesion tracking but also provides the

first open-access solution of its kind, releasing our synthetic 4D dataset and

model to the community, empowering future advancements in medical imaging. Code

is available at: www.github.com/MIC-DKFZ/LesionLocator

Understanding temporal dynamics in medical imaging is crucial for applications such as disease progression modeling, treatment planning and anatomical development tracking. However, most deep learning methods either consider only single temporal contexts, or focus on tasks like classification or regression, limiting their ability for fine-grained spatial predictions. While some approaches have been explored, they are often limited to single timepoints, specific diseases or have other technical restrictions. To address this fundamental gap, we introduce Temporal Flow Matching (TFM), a unified generative trajectory method that (i) aims to learn the underlying temporal distribution, (ii) by design can fall back to a nearest image predictor, i.e. predicting the last context image (LCI), as a special case, and (iii) supports 3D volumes, multiple prior scans, and irregular sampling. Extensive benchmarks on three public longitudinal datasets show that TFM consistently surpasses spatio-temporal methods from natural imaging, establishing a new state-of-the-art and robust baseline for 4D medical image prediction.

This paper presents Invertible Neural Networks (INNs) as a robust method for solving ambiguous inverse problems across scientific disciplines, enabling the efficient estimation of full posterior parameter distributions. The approach accurately captures complex, multi-modal uncertainties and correlations in hidden parameters, outperforming traditional and deep learning methods in calibration and re-simulation accuracy on both synthetic and real-world medical and astrophysical data.

Many real-world vision problems suffer from inherent ambiguities. In clinical

applications for example, it might not be clear from a CT scan alone which

particular region is cancer tissue. Therefore a group of graders typically

produces a set of diverse but plausible segmentations. We consider the task of

learning a distribution over segmentations given an input. To this end we

propose a generative segmentation model based on a combination of a U-Net with

a conditional variational autoencoder that is capable of efficiently producing

an unlimited number of plausible hypotheses. We show on a lung abnormalities

segmentation task and on a Cityscapes segmentation task that our model

reproduces the possible segmentation variants as well as the frequencies with

which they occur, doing so significantly better than published approaches.

These models could have a high impact in real-world applications, such as being

used as clinical decision-making algorithms accounting for multiple plausible

semantic segmentation hypotheses to provide possible diagnoses and recommend

further actions to resolve the present ambiguities.

University of Washington

University of Washington New York University

New York University The Chinese University of Hong KongThe University of Edinburgh

The Chinese University of Hong KongThe University of Edinburgh Cornell University

Cornell University McGill University

McGill University Northwestern UniversityUniversity of MissouriMayo ClinicUniversity Hospital EssenWashington UniversityGerman Cancer Research CenterUniversity of UlmYale University School of MedicineUniversity of LeipzigNorthShore University HealthSystemChildren’s National HospitalLoyola University Medical CenterChildren’s Healthcare of AtlantaSage BionetworksMercy Catholic Medical CenterUniversity of Arkansas for Medical SciencesUniversity of Pennsylvania School of MedicineLudwig Maximillian UniversityUniversity of DusseldorfVisage Imaging, GmbHVisage Imaging, IncQueens

’ University

Northwestern UniversityUniversity of MissouriMayo ClinicUniversity Hospital EssenWashington UniversityGerman Cancer Research CenterUniversity of UlmYale University School of MedicineUniversity of LeipzigNorthShore University HealthSystemChildren’s National HospitalLoyola University Medical CenterChildren’s Healthcare of AtlantaSage BionetworksMercy Catholic Medical CenterUniversity of Arkansas for Medical SciencesUniversity of Pennsylvania School of MedicineLudwig Maximillian UniversityUniversity of DusseldorfVisage Imaging, GmbHVisage Imaging, IncQueens

’ UniversityThe translation of AI-generated brain metastases (BM) segmentation into clinical practice relies heavily on diverse, high-quality annotated medical imaging datasets. The BraTS-METS 2023 challenge has gained momentum for testing and benchmarking algorithms using rigorously annotated internationally compiled real-world datasets. This study presents the results of the segmentation challenge and characterizes the challenging cases that impacted the performance of the winning algorithms. Untreated brain metastases on standard anatomic MRI sequences (T1, T2, FLAIR, T1PG) from eight contributed international datasets were annotated in stepwise method: published UNET algorithms, student, neuroradiologist, final approver neuroradiologist. Segmentations were ranked based on lesion-wise Dice and Hausdorff distance (HD95) scores. False positives (FP) and false negatives (FN) were rigorously penalized, receiving a score of 0 for Dice and a fixed penalty of 374 for HD95. Eight datasets comprising 1303 studies were annotated, with 402 studies (3076 lesions) released on Synapse as publicly available datasets to challenge competitors. Additionally, 31 studies (139 lesions) were held out for validation, and 59 studies (218 lesions) were used for testing. Segmentation accuracy was measured as rank across subjects, with the winning team achieving a LesionWise mean score of 7.9. Common errors among the leading teams included false negatives for small lesions and misregistration of masks in this http URL BraTS-METS 2023 challenge successfully curated well-annotated, diverse datasets and identified common errors, facilitating the translation of BM segmentation across varied clinical environments and providing personalized volumetric reports to patients undergoing BM treatment.

Automated segmentation of Pancreatic Ductal Adenocarcinoma (PDAC) from MRI is critical for clinical workflows but is hindered by poor tumor-tissue contrast and a scarcity of annotated data. This paper details our submission to the PANTHER challenge, addressing both diagnostic T1-weighted (Task 1) and therapeutic T2-weighted (Task 2) segmentation. Our approach is built upon the nnU-Net framework and leverages a deep, multi-stage cascaded pre-training strategy, starting from a general anatomical foundation model and sequentially fine-tuning on CT pancreatic lesion datasets and the target MRI modalities. Through extensive five-fold cross-validation, we systematically evaluated data augmentation schemes and training schedules. Our analysis revealed a critical trade-off, where aggressive data augmentation produced the highest volumetric accuracy, while default augmentations yielded superior boundary precision (achieving a state-of-the-art MASD of 5.46 mm and HD95 of 17.33 mm for Task 1). For our final submission, we exploited this finding by constructing custom, heterogeneous ensembles of specialist models, essentially creating a mix of experts. This metric-aware ensembling strategy proved highly effective, achieving a top cross-validation Tumor Dice score of 0.661 for Task 1 and 0.523 for Task 2. Our work presents a robust methodology for developing specialized, high-performance models in the context of limited data and complex medical imaging tasks (Team MIC-DKFZ).

University of WashingtonGeorge Washington UniversityIndiana University

University of WashingtonGeorge Washington UniversityIndiana University Cornell University

Cornell University University of California, San Diego

University of California, San Diego Northwestern University

Northwestern University University of PennsylvaniaMassachusetts General Hospital

University of PennsylvaniaMassachusetts General Hospital King’s College London

King’s College London Duke UniversityHelmholtz MunichIntelMayo ClinicUniversity Hospital RWTH AachenUniversity of ZürichTechnical University MunichGerman Cancer Research CenterUniversity of California San FranciscoUniversity Hospital BonnDuke University Medical CenterChildren’s National HospitalKing’s College HospitalChildren’s Hospital of PhiladelphiaSUNY Upstate Medical UniversityGuy’s and St. Thomas’ NHS Foundation TrustSage BionetworksCrestview RadiologyMLCommonsResearch Center JuelichFactored AICMH Lahore Medical CollegeCenter for AI and Data ScienceUniversit

Laval

Duke UniversityHelmholtz MunichIntelMayo ClinicUniversity Hospital RWTH AachenUniversity of ZürichTechnical University MunichGerman Cancer Research CenterUniversity of California San FranciscoUniversity Hospital BonnDuke University Medical CenterChildren’s National HospitalKing’s College HospitalChildren’s Hospital of PhiladelphiaSUNY Upstate Medical UniversityGuy’s and St. Thomas’ NHS Foundation TrustSage BionetworksCrestview RadiologyMLCommonsResearch Center JuelichFactored AICMH Lahore Medical CollegeCenter for AI and Data ScienceUniversit

LavalThe 2024 Brain Tumor Segmentation Meningioma Radiotherapy (BraTS-MEN-RT) challenge aimed to advance automated segmentation algorithms using the largest known multi-institutional dataset of 750 radiotherapy planning brain MRIs with expert-annotated target labels for patients with intact or postoperative meningioma that underwent either conventional external beam radiotherapy or stereotactic radiosurgery. Each case included a defaced 3D post-contrast T1-weighted radiotherapy planning MRI in its native acquisition space, accompanied by a single-label "target volume" representing the gross tumor volume (GTV) and any at-risk post-operative site. Target volume annotations adhered to established radiotherapy planning protocols, ensuring consistency across cases and institutions, and were approved by expert neuroradiologists and radiation oncologists. Six participating teams developed, containerized, and evaluated automated segmentation models using this comprehensive dataset. Team rankings were assessed using a modified lesion-wise Dice Similarity Coefficient (DSC) and 95% Hausdorff Distance (95HD). The best reported average lesion-wise DSC and 95HD was 0.815 and 26.92 mm, respectively. BraTS-MEN-RT is expected to significantly advance automated radiotherapy planning by enabling precise tumor segmentation and facilitating tailored treatment, ultimately improving patient outcomes. We describe the design and results from the BraTS-MEN-RT challenge.

CNRS

CNRS University of CambridgeHeidelberg University

University of CambridgeHeidelberg University Imperial College LondonUniversity of Zurich

Imperial College LondonUniversity of Zurich University College London

University College London The Chinese University of Hong Kong

The Chinese University of Hong Kong University of PennsylvaniaTUD Dresden University of Technology

University of PennsylvaniaTUD Dresden University of Technology Sorbonne Université

Sorbonne Université InriaHelmholtz-Zentrum Dresden-Rossendorf (HZDR)INSERMHelmholtz-Zentrum Dresden-RossendorfUniversity Hospital CologneWestern UniversityGerman Cancer Research CenterHeidelberg University HospitalUniversity of StrasbourgNational Center for Tumor Diseases (NCT)Lawson Health Research InstituteHelmholtz ImagingAP-HPInstitut du Cerveau – Paris Brain Institute - ICMBalgrist University HospitalChildren’s National HospitalReutlingen UniversityGerman Cancer Research Center (DKFZ) HeidelbergUCL Hawkes InstituteUniversity College London HospitalNational Center for Tumor DiseasesEnAcuity Ltd.University Hospital LeipzigHIDSS4Health - Helmholtz Information and Data Science School for HealthHôpital de la Pitié SalpêtrièreHI Helmholtz ImagingElse Kröner Fresenius Center for Digital HealthFaculty of Medicine and University Hospital Carl Gustav CarusAI Health Innovation ClusterUniversity Medical Center HeidelbergAP-HP, Hôpital de la Pitié-SalpêtrièreScialyticsDZHK Partnersite Heidelberg-MannheimVerb Surgical Inc.Children

DANQueens

’ University

InriaHelmholtz-Zentrum Dresden-Rossendorf (HZDR)INSERMHelmholtz-Zentrum Dresden-RossendorfUniversity Hospital CologneWestern UniversityGerman Cancer Research CenterHeidelberg University HospitalUniversity of StrasbourgNational Center for Tumor Diseases (NCT)Lawson Health Research InstituteHelmholtz ImagingAP-HPInstitut du Cerveau – Paris Brain Institute - ICMBalgrist University HospitalChildren’s National HospitalReutlingen UniversityGerman Cancer Research Center (DKFZ) HeidelbergUCL Hawkes InstituteUniversity College London HospitalNational Center for Tumor DiseasesEnAcuity Ltd.University Hospital LeipzigHIDSS4Health - Helmholtz Information and Data Science School for HealthHôpital de la Pitié SalpêtrièreHI Helmholtz ImagingElse Kröner Fresenius Center for Digital HealthFaculty of Medicine and University Hospital Carl Gustav CarusAI Health Innovation ClusterUniversity Medical Center HeidelbergAP-HP, Hôpital de la Pitié-SalpêtrièreScialyticsDZHK Partnersite Heidelberg-MannheimVerb Surgical Inc.Children

DANQueens

’ UniversitySurgical data science (SDS) is rapidly advancing, yet clinical adoption of artificial intelligence (AI) in surgery remains severely limited, with inadequate validation emerging as a key obstacle. In fact, existing validation practices often neglect the temporal and hierarchical structure of intraoperative videos, producing misleading, unstable, or clinically irrelevant results. In a pioneering, consensus-driven effort, we introduce the first comprehensive catalog of validation pitfalls in AI-based surgical video analysis that was derived from a multi-stage Delphi process with 91 international experts. The collected pitfalls span three categories: (1) data (e.g., incomplete annotation, spurious correlations), (2) metric selection and configuration (e.g., neglect of temporal stability, mismatch with clinical needs), and (3) aggregation and reporting (e.g., clinically uninformative aggregation, failure to account for frame dependencies in hierarchical data structures). A systematic review of surgical AI papers reveals that these pitfalls are widespread in current practice, with the majority of studies failing to account for temporal dynamics or hierarchical data structure, or relying on clinically uninformative metrics. Experiments on real surgical video datasets provide the first empirical evidence that ignoring temporal and hierarchical data structures can lead to drastic understatement of uncertainty, obscure critical failure modes, and even alter algorithm rankings. This work establishes a framework for the rigorous validation of surgical video analysis algorithms, providing a foundation for safe clinical translation, benchmarking, regulatory review, and future reporting standards in the field.

06 Mar 2023

The Cox model is an indispensable tool for time-to-event analysis,

particularly in biomedical research. However, medicine is undergoing a profound

transformation, generating data at an unprecedented scale, which opens new

frontiers to study and understand diseases. With the wealth of data collected,

new challenges for statistical inference arise, as datasets are often high

dimensional, exhibit an increasing number of measurements at irregularly spaced

time points, and are simply too large to fit in memory. Many current

implementations for time-to-event analysis are ill-suited for these problems as

inference is computationally demanding and requires access to the full data at

once. Here we propose a Bayesian version for the counting process

representation of Cox's partial likelihood for efficient inference on

large-scale datasets with millions of data points and thousands of

time-dependent covariates. Through the combination of stochastic variational

inference and a reweighting of the log-likelihood, we obtain an approximation

for the posterior distribution that factorizes over subsamples of the data,

enabling the analysis in big data settings. Crucially, the method produces

viable uncertainty estimates for large-scale and high-dimensional datasets. We

show the utility of our method through a simulation study and an application to

myocardial infarction in the UK Biobank.

Classification of heterogeneous diseases is challenging due to their

complexity, variability of symptoms and imaging findings. Chronic Obstructive

Pulmonary Disease (COPD) is a prime example, being underdiagnosed despite being

the third leading cause of death. Its sparse, diffuse and heterogeneous

appearance on computed tomography challenges supervised binary classification.

We reformulate COPD binary classification as an anomaly detection task,

proposing cOOpD: heterogeneous pathological regions are detected as

Out-of-Distribution (OOD) from normal homogeneous lung regions. To this end, we

learn representations of unlabeled lung regions employing a self-supervised

contrastive pretext model, potentially capturing specific characteristics of

diseased and healthy unlabeled regions. A generative model then learns the

distribution of healthy representations and identifies abnormalities (stemming

from COPD) as deviations. Patient-level scores are obtained by aggregating

region OOD scores. We show that cOOpD achieves the best performance on two

public datasets, with an increase of 8.2% and 7.7% in terms of AUROC compared

to the previous supervised state-of-the-art. Additionally, cOOpD yields

well-interpretable spatial anomaly maps and patient-level scores which we show

to be of additional value in identifying individuals in the early stage of

progression. Experiments in artificially designed real-world prevalence

settings further support that anomaly detection is a powerful way of tackling

COPD classification.

Researchers at Massachusetts General Hospital and collaborating institutions develop a region-adaptive MRI super-resolution system combining mixture-of-experts with diffusion models, achieving superior image quality metrics while enabling specialized processing of distinct anatomical regions through three expert networks that dynamically adapt to tissue characteristics.

Researchers at the German Cancer Research Center and Heidelberg University performed the most extensive single-cell and spatial analysis of colorectal adenocarcinoma tumor microenvironments (TME) to date, identifying 10 coordinated multicellular programs and specific metabolic and spatial reorganizations that track disease progression. This work, utilizing MIBI-TOF and the open-source MuVIcell framework, provides a holistic understanding of how TME trajectories evolve across tumor stages.

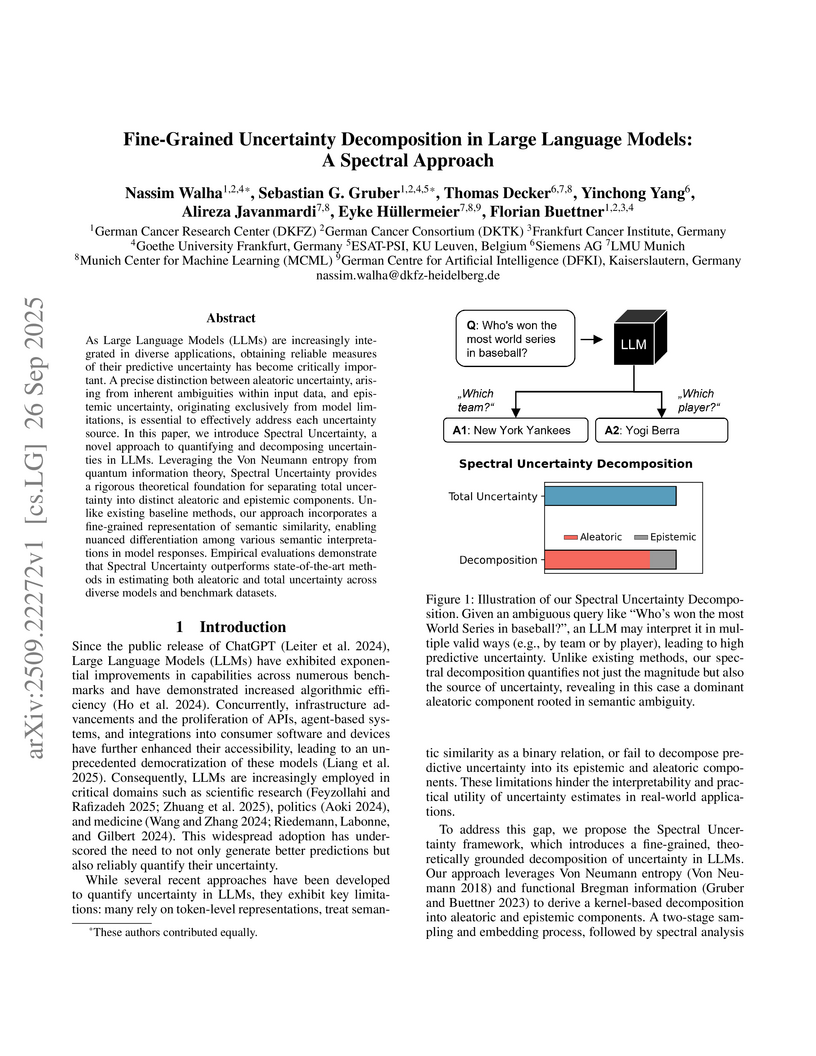

As Large Language Models (LLMs) are increasingly integrated in diverse applications, obtaining reliable measures of their predictive uncertainty has become critically important. A precise distinction between aleatoric uncertainty, arising from inherent ambiguities within input data, and epistemic uncertainty, originating exclusively from model limitations, is essential to effectively address each uncertainty source. In this paper, we introduce Spectral Uncertainty, a novel approach to quantifying and decomposing uncertainties in LLMs. Leveraging the Von Neumann entropy from quantum information theory, Spectral Uncertainty provides a rigorous theoretical foundation for separating total uncertainty into distinct aleatoric and epistemic components. Unlike existing baseline methods, our approach incorporates a fine-grained representation of semantic similarity, enabling nuanced differentiation among various semantic interpretations in model responses. Empirical evaluations demonstrate that Spectral Uncertainty outperforms state-of-the-art methods in estimating both aleatoric and total uncertainty across diverse models and benchmark datasets.

Medical imaging only indirectly measures the molecular identity of the tissue

within each voxel, which often produces only ambiguous image evidence for

target measures of interest, like semantic segmentation. This diversity and the

variations of plausible interpretations are often specific to given image

regions and may thus manifest on various scales, spanning all the way from the

pixel to the image level. In order to learn a flexible distribution that can

account for multiple scales of variations, we propose the Hierarchical

Probabilistic U-Net, a segmentation network with a conditional variational

auto-encoder (cVAE) that uses a hierarchical latent space decomposition. We

show that this model formulation enables sampling and reconstruction of

segmenations with high fidelity, i.e. with finely resolved detail, while

providing the flexibility to learn complex structured distributions across

scales. We demonstrate these abilities on the task of segmenting ambiguous

medical scans as well as on instance segmentation of neurobiological and

natural images. Our model automatically separates independent factors across

scales, an inductive bias that we deem beneficial in structured output

prediction tasks beyond segmentation.

There are no more papers matching your filters at the moment.