Griffith University

TIME-LLM introduces a reprogramming framework that adapts large language models for general time series forecasting by keeping the LLM backbone frozen. The approach achieves state-of-the-art performance across various benchmarks, excelling particularly in data-scarce few-shot and zero-shot settings.

University of WashingtonWuhan University

University of WashingtonWuhan University University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign UCLA

UCLA Chinese Academy of SciencesShanghai AI Laboratory

Chinese Academy of SciencesShanghai AI Laboratory New York University

New York University National University of Singapore

National University of Singapore Fudan University

Fudan University Georgia Institute of Technology

Georgia Institute of Technology University of Science and Technology of China

University of Science and Technology of China Zhejiang UniversityUniversity of Electronic Science and Technology of China

Zhejiang UniversityUniversity of Electronic Science and Technology of China Renmin University of China

Renmin University of China The Hong Kong Polytechnic University

The Hong Kong Polytechnic University Peking UniversityGriffith University

Peking UniversityGriffith University Nanyang Technological University

Nanyang Technological University Johns Hopkins University

Johns Hopkins University The University of Hong Kong

The University of Hong Kong The Pennsylvania State UniversityA*STARShanghai UniversityUniversity of Illinois at ChicagoSingapore Management University

The Pennsylvania State UniversityA*STARShanghai UniversityUniversity of Illinois at ChicagoSingapore Management University Southern University of Science and Technology

Southern University of Science and Technology HKUST

HKUST TencentTeleAISquirrel Ai LearningHong Kong University of Science and Technology (Guangzhou)The University of North Carolina at Chapel HillBen Gurion UniversityCenter for Applied Scientific Computing

TencentTeleAISquirrel Ai LearningHong Kong University of Science and Technology (Guangzhou)The University of North Carolina at Chapel HillBen Gurion UniversityCenter for Applied Scientific ComputingThis survey paper defines and applies a 'full-stack' safety concept for Large Language Models (LLMs), systematically analyzing safety concerns across their entire lifecycle from data to deployment and commercialization. The collaboration synthesizes findings from over 900 papers, providing a unified taxonomy of attacks and defenses while identifying key insights and future research directions for LLM and LLM-agent safety.

GFM-RAG introduces the first graph foundation model specifically designed for Retrieval Augmented Generation (RAG), leveraging a query-dependent Graph Neural Network to capture complex, multi-hop knowledge relationships. This model achieves state-of-the-art retrieval and question answering performance on diverse datasets and generalizes to unseen domains without fine-tuning, significantly enhancing LLM reasoning capabilities.

TIME-MOE introduces a billion-scale time series foundation model leveraging a sparse Mixture-of-Experts architecture to achieve state-of-the-art zero-shot and fine-tuned forecasting performance. The model validates scaling laws for time series, achieving over 20% average MSE reduction and significantly improving computational efficiency.

27 Aug 2025

A survey charts the recent trajectory of Compositional Visual Reasoning (CVR) from 2023 to 2025, introducing a five-stage taxonomy to explain its evolution and distinct advantages over monolithic approaches. The work systematically reviews over 260 papers, identifying key benefits such as enhanced interpretability and robustness, while also outlining persistent open challenges and future research directions for the field.

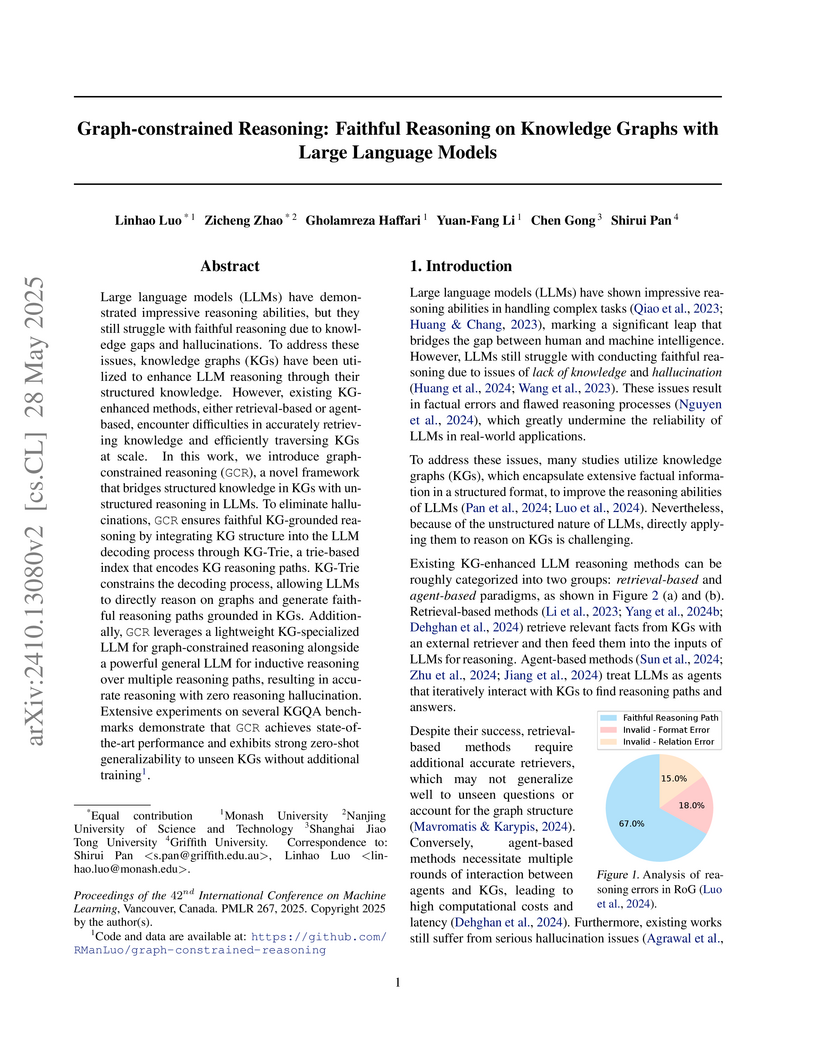

The Graph-constrained Reasoning (GCR) framework integrates Knowledge Graph (KG) structure directly into Large Language Model (LLM) decoding, achieving 100% faithful reasoning without hallucinations on KGQA tasks. This approach consistently outperforms state-of-the-art methods on benchmarks like WebQuestionSP and Complex WebQuestions by up to 9.1% while being significantly more efficient than agent-based approaches.

TIMEMIXER++, developed by researchers from Griffith University, Zhejiang University, and MIT, presents a general-purpose time series pattern machine capable of dynamically capturing patterns across multiple temporal scales and frequency resolutions. The model consistently achieves state-of-the-art performance across 8 diverse time series tasks, including long-term forecasting (reducing MSE on Electricity by 7.3%), imputation (outperforming TimesNet by 25.7% in MSE), and zero-shot forecasting (reducing MSE by 13.1%).

The Reasoning on Graphs (RoG) framework enhances Large Language Model (LLM) reasoning by integrating Knowledge Graph (KG) structural information as explicit reasoning plans. It achieves state-of-the-art performance on KGQA benchmarks, improving Hits@1 by 22.3% and F1 by 14.4% on CWQ, while providing faithful and interpretable explanations grounded in KG paths.

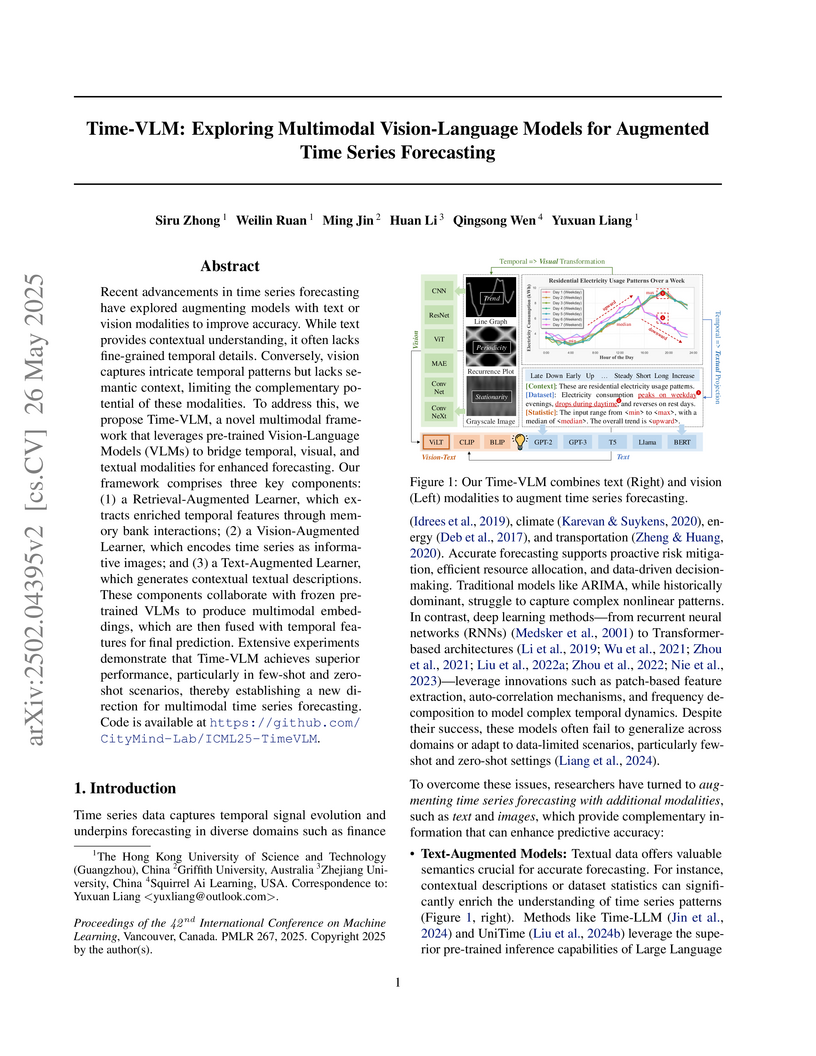

Time-VLM, developed by researchers at The Hong Kong University of Science and Technology (Guangzhou) and collaborators, proposes a unified framework that integrates temporal, visual, and textual modalities using pre-trained Vision-Language Models for time series forecasting. The model demonstrates enhanced generalization in data-scarce settings, outperforming baselines in few-shot and zero-shot scenarios, while maintaining significantly higher computational efficiency compared to existing large language model-based approaches.

Researchers introduce Diversity-Preserving Hybrid Reinforcement Learning (DPH-RL), a framework that leverages mass-covering f-divergences to counter diversity collapse and catastrophic forgetting in large language models fine-tuned with verifiable rewards. DPH-RL improves multi-attempt performance (Pass@k) and out-of-domain generalization, surpassing baselines by up to 8.35% in average out-of-domain performance on mathematical tasks.

30 Aug 2025

This survey paper, authored by researchers from Griffith University and collaborators, provides a comprehensive overview and taxonomy of Graph-Augmented Large Language Model Agents (GLA), synthesizing current advancements and outlining future research directions. It systematically categorizes how graph structures enhance LLM agents in planning, memory, tool use, and multi-agent system coordination and trustworthiness.

University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign University of Oxford

University of Oxford Fudan University

Fudan University Shanghai Jiao Tong University

Shanghai Jiao Tong University the University of Tokyo

the University of Tokyo Tsinghua University

Tsinghua University City University of Hong KongThe University of Melbourne

City University of Hong KongThe University of Melbourne ByteDance

ByteDance RIKENGriffith University

RIKENGriffith University Nanyang Technological University

Nanyang Technological University University of Wisconsin-Madison

University of Wisconsin-Madison Purdue UniversityThe University of SydneyUniversity of Massachusetts Amherst

Purdue UniversityThe University of SydneyUniversity of Massachusetts Amherst Duke University

Duke University Virginia TechSingapore Management UniversitySea AI LabUniversity of Auckland

Virginia TechSingapore Management UniversitySea AI LabUniversity of Auckland HKUSTCISPA – Helmholtz Center for Information SecurityChinese University of Hong Kong, Shenzhen

HKUSTCISPA – Helmholtz Center for Information SecurityChinese University of Hong Kong, Shenzhen University of California, Santa Cruz

University of California, Santa CruzThis comprehensive survey systematically reviews current safety research across six major large AI model paradigms and autonomous agents, presenting a detailed taxonomy of 10 attack types and corresponding defense strategies. The review identifies a predominant focus on attack methodologies (60% of papers) over defenses and outlines key open challenges for advancing AI safety.

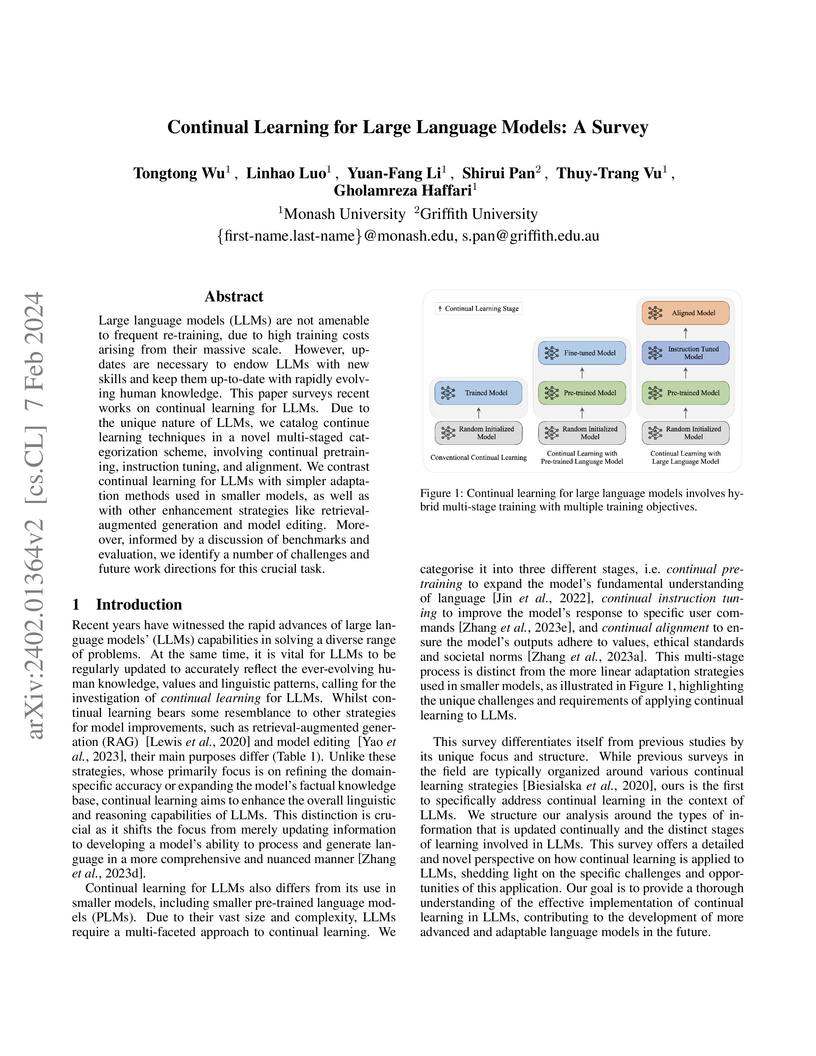

Researchers from Monash University and Griffith University present the first comprehensive survey of continual learning techniques tailored for large language models, proposing a novel multi-staged categorization scheme that aligns with the distinct phases of LLM training. The survey identifies specific challenges like "cross-stage forgetting" and outlines key areas for future research to enable LLMs to adapt continuously and sustainably to evolving information, tasks, and human values.

19 Nov 2025

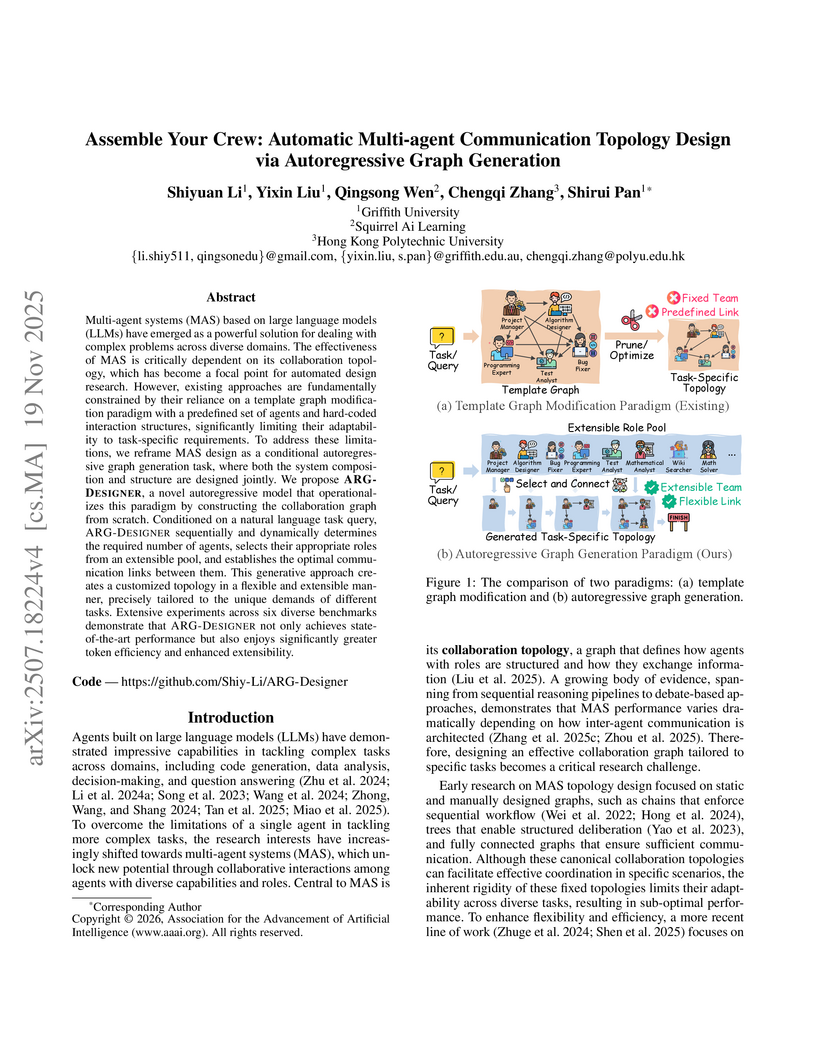

Researchers from Griffith University and collaborators introduce ARG-DESIGNER, an autoregressive graph generation model that designs customized multi-agent system communication topologies from scratch. The model achieves state-of-the-art performance across six benchmarks, including MMLU and HumanEval, while simultaneously reducing token consumption by approximately 50% compared to previous learning-based methods.

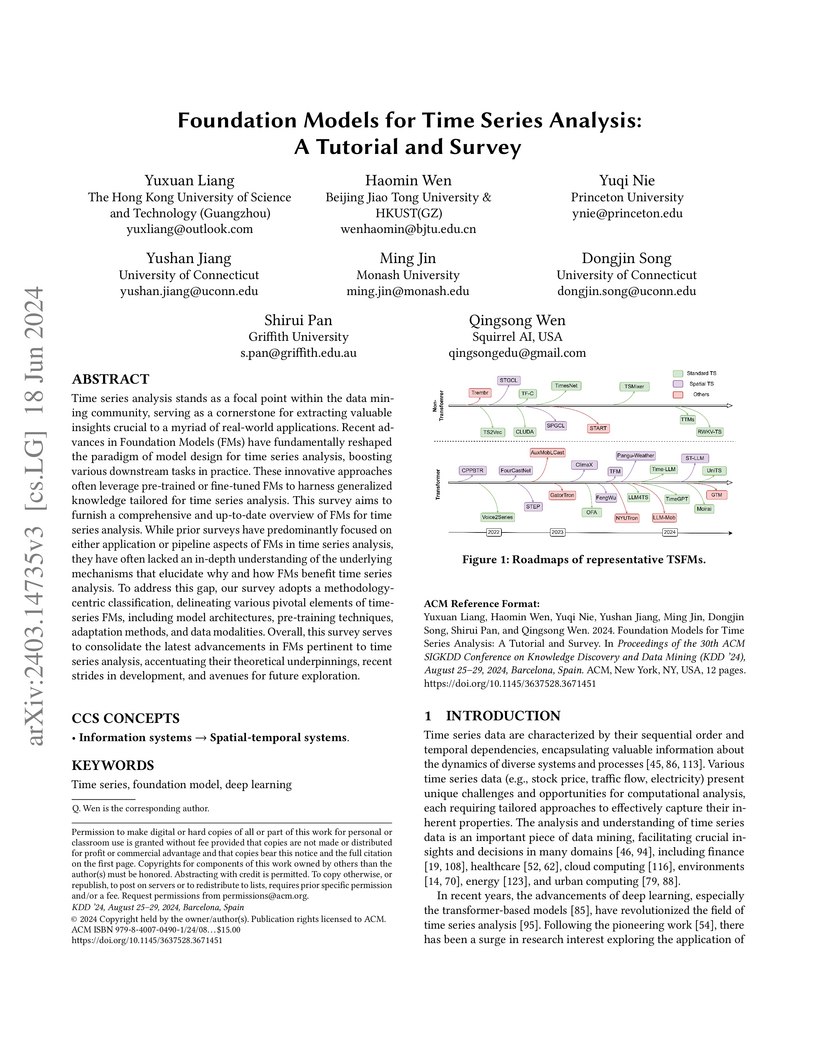

A tutorial and survey categorizes Foundation Models for Time Series (TSFMs) by their underlying mechanisms across diverse time series data types, offering a methodology-centric taxonomy of architectures, pre-training, and adaptation methods. It synthesizes current advancements and identifies future research avenues.

04 Jul 2025

This survey paper from a collaborative team including researchers from Zhejiang University, University of Illinois Chicago, and MBZUAI, offers a systematic review and taxonomy for understanding how graph techniques enhance various functionalities of AI agents. It demonstrates that graphs effectively structure complex information, leading to more capable agents in planning, execution, memory management, and multi-agent coordination, and also highlights how AI agents can advance graph learning tasks.

Diffusion models have been widely used in time series and spatio-temporal data, enhancing generative, inferential, and downstream capabilities. These models are applied across diverse fields such as healthcare, recommendation, climate, energy, audio, and traffic. By separating applications for time series and spatio-temporal data, we offer a structured perspective on model category, task type, data modality, and practical application domain. This study aims to provide a solid foundation for researchers and practitioners, inspiring future innovations that tackle traditional challenges and foster novel solutions in diffusion model-based data mining tasks and applications. For more detailed information, we have open-sourced a repository at this https URL.

RemoteCLIP introduces the first vision-language foundation model specifically designed for remote sensing, adapting the CLIP paradigm through an innovative data scaling strategy that unifies heterogeneous annotations. The model achieves State-of-the-Art performance across various remote sensing tasks, including cross-modal retrieval, zero-shot and few-shot classification, and object counting, demonstrating enhanced semantic understanding and generalization capabilities.

Researchers from Rensselaer Polytechnic Institute and collaborators audit existing Knowledge Graph Question Answering (KGQA) datasets, revealing an average factual correctness of only 57%. They introduce KGQAGen, an LLM-guided framework for creating high-quality, verifiable benchmarks, and use it to construct KGQAGen-10k, which achieves 96.3% factual accuracy and highlights retrieval as a primary bottleneck for state-of-the-art KG-RAG models.

TableDART presents a framework for multimodal table understanding that dynamically routes each query-table pair to optimal processing paths (text-only, image-only, or fusion), achieving state-of-the-art performance among open-source models. It outperforms existing multimodal baselines by an average of +4.02% accuracy and reduces inference latency by 24.5% while using nearly 10 times fewer trainable parameters.

There are no more papers matching your filters at the moment.