Hunan University of Technology and Business

28 May 2025

This paper offers a comprehensive tutorial detailing the systematic integration of Large AI Models (LAMs) and Agentic AI into future 6G communication networks. It outlines core concepts, a five-component Agentic AI system architecture, and specific design methodologies, demonstrating how these advanced AI paradigms can enable autonomous and self-optimizing communication systems.

An approach to multimodal semantic communication (LAM-MSC) leverages large AI models for unifying diverse data types, personalizing semantic interpretation, and robustly estimating wireless channels. Simulations indicate the framework achieves high compression rates and maintains robust performance.

Semantic communication (SC) is an emerging intelligent paradigm, offering solutions for various future applications like metaverse, mixed reality, and the Internet of Everything. However, in current SC systems, the construction of the knowledge base (KB) faces several issues, including limited knowledge representation, frequent knowledge updates, and insecure knowledge sharing. Fortunately, the development of the large AI model (LAM) provides new solutions to overcome the above issues. Here, we propose a LAM-based SC framework (LAM-SC) specifically designed for image data, where we first apply the segment anything model (SAM)-based KB (SKB) that can split the original image into different semantic segments by universal semantic knowledge. Then, we present an attention-based semantic integration (ASI) to weigh the semantic segments generated by SKB without human participation and integrate them as the semantic aware image. Additionally, we propose an adaptive semantic compression (ASC) encoding to remove redundant information in semantic features, thereby reducing communication overhead. Finally, through simulations, we demonstrate the effectiveness of the LAM-SC framework and the possibility of applying the LAM-based KB in future SC paradigms.

The probabilistic diffusion model (DM), generating content by inferencing through a recursive chain structure, has emerged as a powerful framework for visual generation. After pre-training on enormous data, the model needs to be properly aligned to meet requirements for downstream applications. How to efficiently align the foundation DM is a crucial task. Contemporary methods are either based on Reinforcement Learning (RL) or truncated Backpropagation (BP). However, RL and truncated BP suffer from low sample efficiency and biased gradient estimation, respectively, resulting in limited improvement or, even worse, complete training failure. To overcome the challenges, we propose the Recursive Likelihood Ratio (RLR) optimizer, a Half-Order (HO) fine-tuning paradigm for DM. The HO gradient estimator enables the computation graph rearrangement within the recursive diffusive chain, making the RLR's gradient estimator an unbiased one with lower variance than other methods. We theoretically investigate the bias, variance, and convergence of our method. Extensive experiments are conducted on image and video generation to validate the superiority of the RLR. Furthermore, we propose a novel prompt technique that is natural for the RLR to achieve a synergistic effect.

06 May 2025

The 6G wireless communications aim to establish an intelligent world of

ubiquitous connectivity, providing an unprecedented communication experience.

Large artificial intelligence models (LAMs) are characterized by significantly

larger scales (e.g., billions or trillions of parameters) compared to typical

artificial intelligence (AI) models. LAMs exhibit outstanding cognitive

abilities, including strong generalization capabilities for fine-tuning to

downstream tasks, and emergent capabilities to handle tasks unseen during

training. Therefore, LAMs efficiently provide AI services for diverse

communication applications, making them crucial tools for addressing complex

challenges in future wireless communication systems. This study provides a

comprehensive review of the foundations, applications, and challenges of LAMs

in communication. First, we introduce the current state of AI-based

communication systems, emphasizing the motivation behind integrating LAMs into

communications and summarizing the key contributions. We then present an

overview of the essential concepts of LAMs in communication. This includes an

introduction to the main architectures of LAMs, such as transformer, diffusion

models, and mamba. We also explore the classification of LAMs, including large

language models (LLMs), large vision models (LVMs), large multimodal models

(LMMs), and world models, and examine their potential applications in

communication. Additionally, we cover the training methods and evaluation

techniques for LAMs in communication systems. Lastly, we introduce optimization

strategies such as chain of thought (CoT), retrieval augmented generation

(RAG), and agentic systems. Following this, we discuss the research

advancements of LAMs across various communication scenarios. Finally, we

analyze the challenges in the current research and provide insights into

potential future research directions.

FaaSMT: Lightweight Serverless Framework for Intrusion Detection Using Merkle Tree and Task Inlining

FaaSMT: Lightweight Serverless Framework for Intrusion Detection Using Merkle Tree and Task Inlining

The serverless platform aims to facilitate cloud applications'

straightforward deployment, scaling, and management. Unfortunately, the

distributed nature of serverless computing makes it difficult to port

traditional security tools directly. The existing serverless solutions

primarily identify potential threats or performance bottlenecks through

post-analysis of modified operating system audit logs, detection of encrypted

traffic offloading, or the collection of runtime metrics. However, these

methods often prove inadequate for comprehensively detecting communication

violations across functions. This limitation restricts the real-time log

monitoring and validation capabilities in distributed environments while

impeding the maintenance of minimal communication overhead. Therefore, this

paper presents FaaSMT, which aims to fill this gap by addressing research

questions related to security checks and the optimization of performance and

costs in serverless applications. This framework employs parallel processing

for the collection of distributed data logs, incorporating Merkle Tree

algorithms and heuristic optimisation methods to achieve adaptive inline

security task execution. The results of experimental trials demonstrate that

FaaSMT is capable of effectively identifying major attack types (e.g., Denial

of Wallet (DoW) and Business Logic attacks), thereby providing comprehensive

monitoring and validation of function executions while significantly reducing

performance overhead.

22 Jan 2024

Many existing coverless steganography methods establish a mapping relationship between cover images and hidden data. There exists an issue that the number of images stored in the database grows exponentially as the steganographic capacity rises. The need for a high steganographic capacity makes it challenging to build an image database. To improve the image library utilization and anti-attack capability of the steganography system, we present an efficient coverless scheme based on dynamically matched substrings. YOLO is employed for selecting optimal objects, and a mapping dictionary is established between these objects and scrambling factors. With the aid of this dictionary, each image is effectively assigned to a specific scrambling factor, which is used to scramble the receiver's sequence key. To achieve sufficient steganography capability based on a limited image library, all substrings of the scrambled sequences hold the potential to hide data. After completing the secret information matching, the ideal number of stego images will be obtained from the database. According to experimental results, this technology outperforms most previous works on data load, transmission security, and hiding capacity. Under typical geometric attacks, it can recover 79.85\% of secret information on average. Furthermore, only approximately 200 random images are needed to meet a capacity of 19 bits per image.

Semantic Communication (SC) has emerged as a novel communication paradigm in recent years, successfully transcending the Shannon physical capacity limits through innovative semantic transmission concepts. Nevertheless, extant Image Semantic Communication (ISC) systems face several challenges in dynamic environments, including low semantic density, catastrophic forgetting, and uncertain Signal-to-Noise Ratio (SNR). To address these challenges, we propose a novel Vision-Language Model-based Cross-modal Semantic Communication (VLM-CSC) system. The VLM-CSC comprises three novel components: (1) Cross-modal Knowledge Base (CKB) is used to extract high-density textual semantics from the semantically sparse image at the transmitter and reconstruct the original image based on textual semantics at the receiver. The transmission of high-density semantics contributes to alleviating bandwidth pressure. (2) Memory-assisted Encoder and Decoder (MED) employ a hybrid long/short-term memory mechanism, enabling the semantic encoder and decoder to overcome catastrophic forgetting in dynamic environments when there is a drift in the distribution of semantic features. (3) Noise Attention Module (NAM) employs attention mechanisms to adaptively adjust the semantic coding and the channel coding based on SNR, ensuring the robustness of the CSC system. The experimental simulations validate the effectiveness, adaptability, and robustness of the CSC system.

The rapid development of generative Artificial Intelligence (AI) continually unveils the potential of Semantic Communication (SemCom). However, current talking-face SemCom systems still encounter challenges such as low bandwidth utilization, semantic ambiguity, and diminished Quality of Experience (QoE). This study introduces a Large Generative Model-assisted Talking-face Semantic Communication (LGM-TSC) System tailored for the talking-face video communication. Firstly, we introduce a Generative Semantic Extractor (GSE) at the transmitter based on the FunASR model to convert semantically sparse talking-face videos into texts with high information density. Secondly, we establish a private Knowledge Base (KB) based on the Large Language Model (LLM) for semantic disambiguation and correction, complemented by a joint knowledge base-semantic-channel coding scheme. Finally, at the receiver, we propose a Generative Semantic Reconstructor (GSR) that utilizes BERT-VITS2 and SadTalker models to transform text back into a high-QoE talking-face video matching the user's timbre. Simulation results demonstrate the feasibility and effectiveness of the proposed LGM-TSC system.

A Personalized Semantic Federated Learning (PSFL) framework is introduced for Generative AI-assisted Semantic Communication (GSC), integrating Vision Transformers and employing Personalized Local Distillation (PLD) and Adaptive Global Pruning (AGP). The framework manages mobile user heterogeneity and decreases communication overhead, achieving higher accuracy and reduced energy consumption when compared to other federated learning benchmarks.

02 Dec 2024

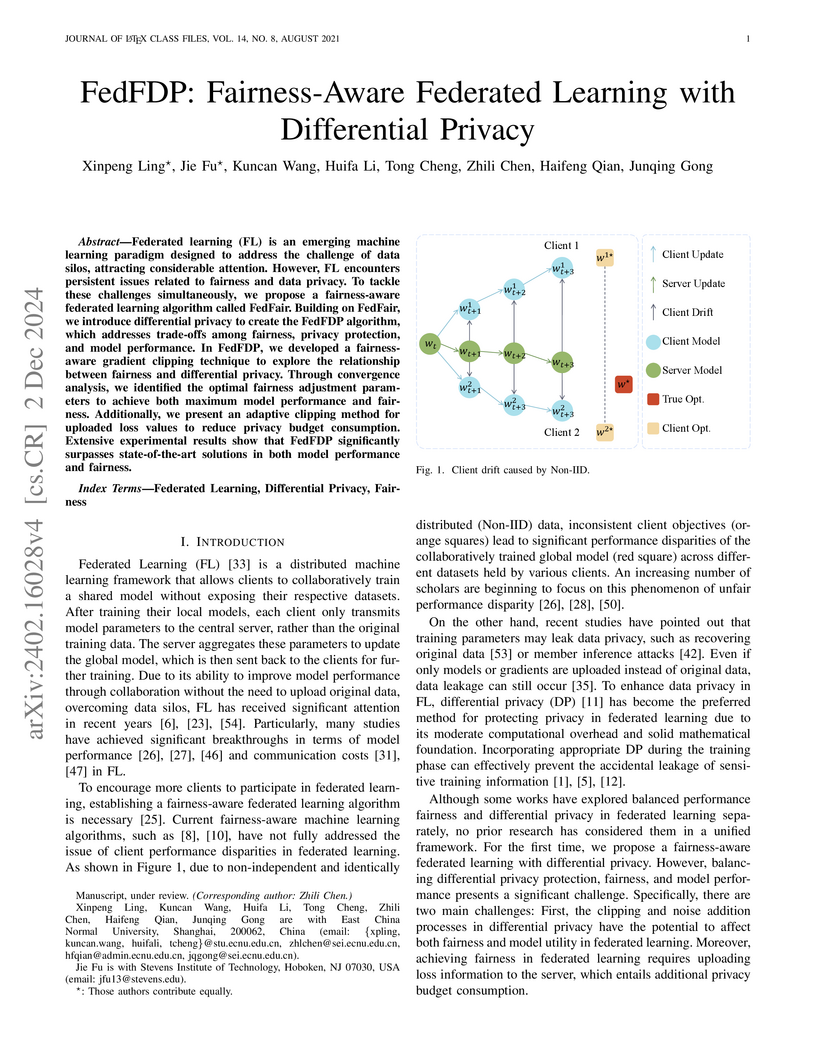

Federated learning (FL) is an emerging machine learning paradigm designed to address the challenge of data silos, attracting considerable attention. However, FL encounters persistent issues related to fairness and data privacy. To tackle these challenges simultaneously, we propose a fairness-aware federated learning algorithm called FedFair. Building on FedFair, we introduce differential privacy to create the FedFDP algorithm, which addresses trade-offs among fairness, privacy protection, and model performance. In FedFDP, we developed a fairness-aware gradient clipping technique to explore the relationship between fairness and differential privacy. Through convergence analysis, we identified the optimal fairness adjustment parameters to achieve both maximum model performance and fairness. Additionally, we present an adaptive clipping method for uploaded loss values to reduce privacy budget consumption. Extensive experimental results show that FedFDP significantly surpasses state-of-the-art solutions in both model performance and fairness.

Federated learning (FL) is a commonly distributed algorithm for mobile users (MUs) training artificial intelligence (AI) models, however, several challenges arise when applying FL to real-world scenarios, such as label scarcity, non-IID data, and unexplainability. As a result, we propose an explainable personalized FL framework, called XPFL. First, we introduce a generative AI (GAI) assisted personalized federated semi-supervised learning, called GFed. Particularly, in local training, we utilize a GAI model to learn from large unlabeled data and apply knowledge distillation-based semi-supervised learning to train the local FL model using the knowledge acquired from the GAI model. In global aggregation, we obtain the new local FL model by fusing the local and global FL models in specific proportions, allowing each local model to incorporate knowledge from others while preserving its personalized characteristics. Second, we propose an explainable AI mechanism for FL, named XFed. Specifically, in local training, we apply a decision tree to match the input and output of the local FL model. In global aggregation, we utilize t-distributed stochastic neighbor embedding (t-SNE) to visualize the local models before and after aggregation. Finally, simulation results validate the effectiveness of the proposed XPFL framework.

Existing serverless workflow orchestration systems are predominantly designed

for a single-cloud FaaS system, leading to vendor lock-in. This restricts

performance optimization, cost reduction, and availability of applications.

However, orchestrating serverless workflows on Jointcloud FaaS systems faces

two main challenges: 1) Additional overhead caused by centralized cross-cloud

orchestration; and 2) A lack of reliable failover and fault-tolerant mechanisms

for cross-cloud serverless workflows. To address these challenges, we propose

Jointλ, a distributed runtime system designed to orchestrate serverless

workflows on multiple FaaS systems without relying on a centralized

orchestrator. Jointλ introduces a compatibility layer, Backend-Shim,

leveraging inter-cloud heterogeneity to optimize makespan and reduce costs with

on-demand billing. By using function-side orchestration instead of centralized

nodes, it enables independent function invocations and data transfers, reducing

cross-cloud communication overhead. For high availability, it ensures

exactly-once execution via datastores and failover mechanisms for serverless

workflows on Jointcloud FaaS systems. We validate Jointλ on two

heterogeneous FaaS systems, AWS and ALiYun, with four workflows. Compared to

the most advanced commercial orchestration services for single-cloud serverless

workflows, Jointλ reduces up to 3.3× latency, saving up to 65\%

cost. Jointλ is also faster than the state-of-the-art orchestrators for

cross-cloud serverless workflows up to 4.0×, reducing up to 4.5×

cost and providing strong execution guarantees.

Data obtained from Parker Solar Probe (PSP) since 2021 April have shown the first in situ observation of the solar corona, where the solar wind is formed and accelerated. Here we investigate the alpha-proton differential flow and its characteristics across the critical Alfvén surface (CAS) using data from PSP during encounters 8-10 and 12-13. We first show the positive correlation between the alpha-proton differential velocity and the bulk solar wind speed at PSP encounter distances. Then we explore how the characteristics of the differential flow vary across the CAS and how they are affected by Alfvénic fluctuations including switchbacks. We find that the differential velocity below the CAS is generally smaller than that above the CAS, and the local Alfvén speed well limits the differential speed both above and below the CAS. The deviations from the alignment between the differential velocity and the local magnetic field vector are accompanied by large-amplitude Alfvénic fluctuations and decreases in the differential speed. Moreover, we observe that Vαp increases from M_A < 1 to MA≃2 and then starts to decrease, which suggests that alphas may remain preferentially accelerated well above the CAS. Our results also reveal that in the sub-Alfvénic solar wind both protons and alphas show a strong correlation between their velocity fluctuations and magnetic field fluctuations, with a weaker correlation for alphas. In contrast, in the super-Alfvénic regime the correlation remains high for protons, but is reduced for alphas.

14 Oct 2025

In this paper, we study the quasi-stationary behavior of the one-dimensional diffusion process with a regular or exit boundary at 0 and an entrance boundary at ∞. By using the Doob's h-transform, we show that the conditional distribution of the process converges to its unique quasi-stationary distribution exponentially fast in the total variation norm, uniformly with respect to the initial distribution. Moreover, we also use the same method to show that the conditional distribution of the process converges exponentially fast in the ψ-norm to the unique quasi-stationary distribution. The rate of convergence of the conditional empirical measure to the quasi-ergodic distribution is also considered. Finally, two examples arising in population dynamics are also given to illustrate the main results.

09 Jan 2025

Scatter signals can degrade the contrast and resolution of computed tomography (CT) images and induce artifacts. How to effectively correct scatter signals in CT has always been a focal point of research for researchers. This work presents a new framework for eliminating scatter artifacts in CT. In the framework, the interaction between photons and matter is characterized as a Markov process, and the calculation of the scatter signal intensity in CT is transformed into the computation of a 4n-dimensional integral, where n is the highest scatter order. Given the low-frequency characteristics of scatter signals in CT, this paper uses the quasi-Monte Carlo (QMC) method combined with forced fixed detection and down sampling to compute the integral. In the reconstruction process, the impact of scatter signals on the X-ray energy spectrum is considered. A scatter-corrected spectrum estimation method is proposed and applied to estimate the X-ray energy spectrum. Based on the Feldkamp-Davis-Kress (FDK) algorithm, a multi-module coupled reconstruction method, referred to as FDK-QMC-BM4D, has been developed to simultaneously eliminate scatter artifacts, beam hardening artifacts, and noise in CT imaging. Finally, the effectiveness of the FDK-QMC-BM4D method is validated in the Shepp-Logan phantom and head. Compared to the widely recognized Monte Carlo method, which is the most accurate method by now for estimating and correcting scatter signals in CT, the FDK-QMC-BM4D method improves the running speed by approximately 102 times while ensuring accuracy. By integrating the mechanism of FDK-QMC-BM4D, this study offers a novel approach to addressing artifacts in clinical CT.

Background and objective: In this paper, a modified U-Net based framework is

presented, which leverages techniques from Squeeze-and-Excitation (SE) block,

Atrous Spatial Pyramid Pooling (ASPP) and residual learning for accurate and

robust liver CT segmentation, and the effectiveness of the proposed method was

tested on two public datasets LiTS17 and SLiver07.

Methods: A new network architecture called SAR-U-Net was designed. Firstly,

the SE block is introduced to adaptively extract image features after each

convolution in the U-Net encoder, while suppressing irrelevant regions, and

highlighting features of specific segmentation task; Secondly, ASPP was

employed to replace the transition layer and the output layer, and acquire

multi-scale image information via different receptive fields. Thirdly, to

alleviate the degradation problem, the traditional convolution block was

replaced with the residual block and thus prompt the network to gain accuracy

from considerably increased depth.

Results: In the LiTS17 experiment, the mean values of Dice, VOE, RVD, ASD and

MSD were 95.71, 9.52, -0.84, 1.54 and 29.14, respectively. Compared with other

closely related 2D-based models, the proposed method achieved the highest

accuracy. In the experiment of the SLiver07, the mean values of Dice, VOE, RVD,

ASD and MSD were 97.31, 5.37, -1.08, 1.85 and 27.45, respectively. Compared

with other closely related models, the proposed method achieved the highest

segmentation accuracy except for the RVD.

Conclusion: The proposed model enables a great improvement on the accuracy

compared to 2D-based models, and its robustness in circumvent challenging

problems, such as small liver regions, discontinuous liver regions, and fuzzy

liver boundaries, is also well demonstrated and validated.

In the field of affective computing, traditional methods for generating

emotions predominantly rely on deep learning techniques and large-scale emotion

datasets. However, deep learning techniques are often complex and difficult to

interpret, and standardizing large-scale emotional datasets are difficult and

costly to establish. To tackle these challenges, we introduce a novel framework

named Audio-Visual Fusion for Brain-like Emotion Learning(AVF-BEL). In contrast

to conventional brain-inspired emotion learning methods, this approach improves

the audio-visual emotion fusion and generation model through the integration of

modular components, thereby enabling more lightweight and interpretable emotion

learning and generation processes. The framework simulates the integration of

the visual, auditory, and emotional pathways of the brain, optimizes the fusion

of emotional features across visual and auditory modalities, and improves upon

the traditional Brain Emotional Learning (BEL) model. The experimental results

indicate a significant improvement in the similarity of the audio-visual fusion

emotion learning generation model compared to single-modality visual and

auditory emotion learning and generation model. Ultimately, this aligns with

the fundamental phenomenon of heightened emotion generation facilitated by the

integrated impact of visual and auditory stimuli. This contribution not only

enhances the interpretability and efficiency of affective intelligence but also

provides new insights and pathways for advancing affective computing

technology. Our source code can be accessed here:

https://github.com/OpenHUTB/emotion}{this https URL

EEG signals have emerged as a powerful tool in affective brain-computer interfaces, playing a crucial role in emotion recognition. However, current deep transfer learning-based methods for EEG recognition face challenges due to the reliance of both source and target data in model learning, which significantly affect model performance and generalization. To overcome this limitation, we propose a novel framework (PL-DCP) and introduce the concepts of feature disentanglement and prototype inference. The dual prototyping mechanism incorporates both domain and class prototypes: domain prototypes capture individual variations across subjects, while class prototypes represent the ideal class distributions within their respective domains. Importantly, the proposed PL-DCP framework operates exclusively with source data during training, meaning that target data remains completely unseen throughout the entire process. To address label noise, we employ a pairwise learning strategy that encodes proximity relationships between sample pairs, effectively reducing the influence of mislabeled data. Experimental validation on the SEED and SEED-IV datasets demonstrates that PL-DCP, despite not utilizing target data during training, achieves performance comparable to deep transfer learning methods that require both source and target data. This highlights the potential of PL-DCP as an effective and robust approach for EEG-based emotion recognition.

23 Feb 2025

A Lightweight Vision Model-based Multi-user Semantic Communication System (LVM-MSC) is proposed to enhance image transmission efficiency in 6G networks. It integrates a lightweight large vision model for rapid semantic object identification, employs an adaptive semantic codec to efficiently encode critical image regions, and implements a multi-user semantic sharing mechanism to reduce redundant data transmission.

There are no more papers matching your filters at the moment.