IBM Almaden Research Center

In the more than sixty years since its inception, the field of planning has made significant contributions to both the theory and practice of building planning software that can solve a never-before-seen planning problem. This was done through established practices of rigorous design and evaluation of planning systems. It is our position that this rigor should be applied to the current trend of work on planning with large language models. One way to do so is by correctly incorporating the insights, tools, and data from the automated planning community into the design and evaluation of LLM-based planners. The experience and expertise of the planning community are not just important from a historical perspective; the lessons learned could play a crucial role in accelerating the development of LLM-based planners. This position is particularly important in light of the abundance of recent works that replicate and propagate the same pitfalls that the planning community has encountered and learned from. We believe that avoiding such known pitfalls will contribute greatly to the progress in building LLM-based planners and to planning in general.

31 Oct 2025

We introduce several dynamical schemes that take advantage of mid-circuit measurement and nearest-neighbor gates on a lattice with maximum vertex degree three to implement topological codes and perform logic gates between them. We first review examples of Floquet codes and their implementation with nearest-neighbor gates and ancillary qubits. Next, we describe implementations of these Floquet codes that make use of the ancillary qubits to reset all qubits every measurement cycle. We then show how switching the role of data and ancilla qubits allows a pair of Floquet codes to be implemented simultaneously. We describe how to perform a logical Clifford gate to entangle a pair of Floquet codes that are implemented in this way. Finally, we show how switching between the color code and a pair of Floquet codes, via a depth-two circuit followed by mid-circuit measurement, can be used to perform syndrome extraction for the color code.

25 Oct 2025

In pursuit of large-scale fault-tolerant quantum computation, quantum low-density parity-check (QLDPC) codes have been established as promising candidates for low-overhead memory when compared to conventional approaches based on surface codes. Performing fault-tolerant logical computation on QLDPC memory, however, has been a long-standing challenge in theory and in practice. In this work, we propose a new primitive, which we call an extractor system, that can augment any QLDPC memory into a computational block well-suited for Pauli-based computation. In particular, any logical Pauli operator supported on the memory can be fault-tolerantly measured in one logical cycle, consisting of O(d) physical syndrome measurement cycles, without rearranging qubit connectivity. We further propose a fixed-connectivity LDPC architecture built by connecting many extractor-augmented computational (EAC) blocks with bridge systems. When combined with any user-defined source of high-fidelity |T⟩ states, our architecture can implement universal quantum circuits via parallel logical measurements, such that all single-block Clifford gates are compiled away. The size of an extractor on an n-qubit code is Õ(n), where the precise overhead has immense room for practical optimizations.

Quantum computation must be performed in a fault-tolerant manner to be realizable in practice. Recent progress has uncovered quantum error-correcting codes with sparse connectivity requirements and constant qubit overhead. Existing schemes for fault-tolerant logical measurement do not always achieve low qubit overhead. Here we present a low-overhead method to implement fault-tolerant logical measurement in a quantum error-correcting code by treating the logical operator as a symmetry and gauging it. The gauging measurement procedure introduces a high degree of flexibility that can be leveraged to achieve a qubit overhead that is linear in the weight of the operator being measured up to a polylogarithmic factor. This flexibility also allows the procedure to be adapted to arbitrary quantum codes. Our results provide a new, more efficient approach to performing fault-tolerant quantum computation, making it more tractable for near-term implementation.

02 Aug 1999

We present a method to create a variety of interesting gates by teleporting quantum bits through special entangled states. This allows, for instance, the construction of a quantum computer based on just single qubit operations, Bell measurements, and GHZ states. We also present straightforward constructions of a wide variety of fault-tolerant quantum gates.

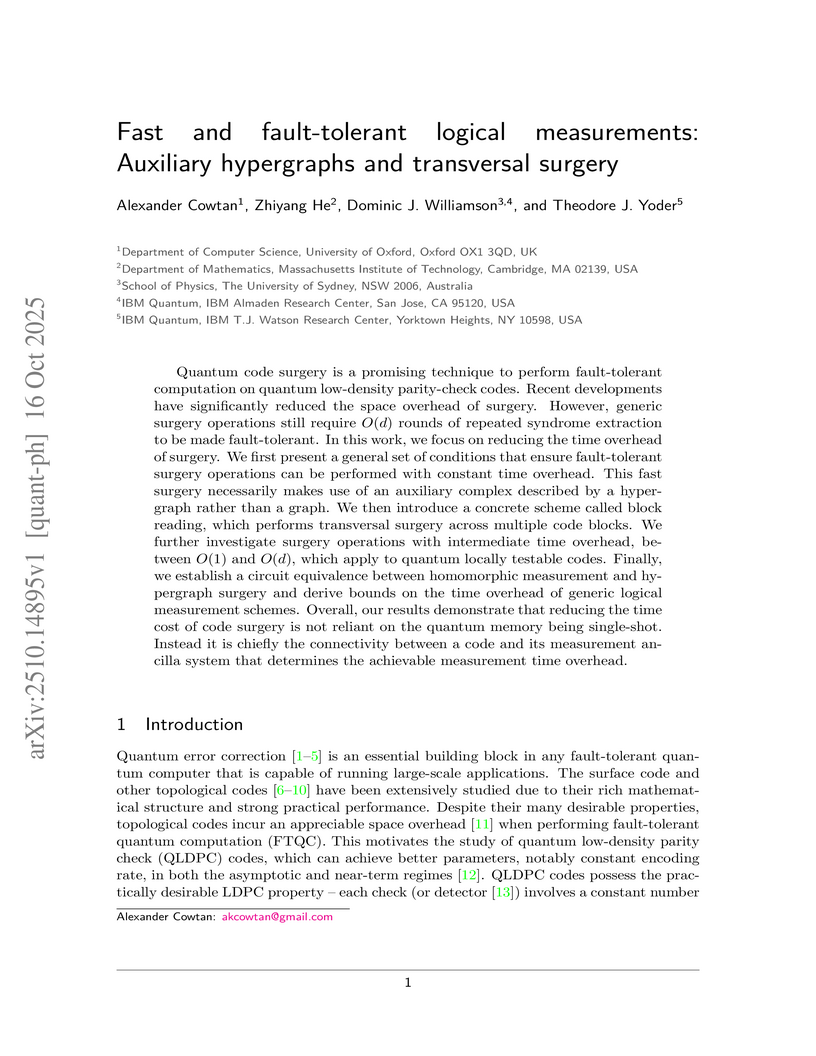

16 Oct 2025

This research introduces methods for performing fault-tolerant logical measurements with amortized constant time overhead in quantum low-density parity-check (QLDPC) codes, overcoming the conventional O(d) time cost of code surgery. The approach leverages auxiliary hypergraphs and 'block reading' protocols, enabling faster operations crucial for scalable quantum computing.

IBM researchers developed AUTOCIRCUIT-RL, a reinforcement learning framework that combines large language models with performance-driven optimization for analog circuit topology synthesis. This system generates optimized circuits in 2.7 seconds, a 50x speedup over traditional methods, while achieving higher validity rates and better adherence to multi-objective design constraints.

01 Aug 2000

We present a general method to construct fault-tolerant quantum logic gates with a simple primitive, which is an analog of quantum teleportation. The technique extends previous results based on traditional quantum teleportation (Gottesman and Chuang, Nature 402, 390, 1999) and leads to straightforward and systematic construction of many fault-tolerant encoded operations, including the π/8 and Toffoli gates. The technique can also be applied to the construction of remote quantum operations that cannot be directly performed.
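In gate teleportation, a gate U is applied by teleporting through an entangled resource state prepared with U. This leaves a Pauli byproduct P that must be undone with the correction U P U†. For the π/8 gate T = diag(1, e^{iπ/4}), this correction is still a Clifford gate, because T X T† = e^{-iπ/4} S X with S = diag(1, i), and T commutes with Z. The minimal pure-Python check below confirms that identity numerically. It illustrates why a π/8 construction of this kind needs only Clifford corrections. It is not the paper's own circuit, and the helper names are hypothetical.

```python
import cmath

def matmul(a, b):
    """Multiply two 2x2 complex matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[a[j][i].conjugate() for j in range(2)] for i in range(2)]

def close(a, b, tol=1e-12):
    """Entry-wise comparison of two 2x2 matrices."""
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

X = [[0, 1], [1, 0]]
S = [[1, 0], [0, 1j]]
T = [[1, 0], [0, cmath.exp(1j * cmath.pi / 4)]]

# Correction needed after teleporting T: conjugate the Pauli byproduct X by T
lhs = matmul(matmul(T, X), dagger(T))
# Claimed Clifford form: e^{-i pi/4} * S X
phase = cmath.exp(-1j * cmath.pi / 4)
rhs = [[phase * v for v in row] for row in matmul(S, X)]

print(close(lhs, rhs))  # True
```

Because S X is itself a Clifford operation, the byproduct can be fixed without any further non-Clifford resources.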

We construct a family of two-dimensional topological stabilizer codes on continuous variable (CV) degrees of freedom, which generalize homological rotor codes and the toric-GKP code. Our topological codes are built using the concept of boson condensation: we start from a parent stabilizer code based on an ℝ gauge theory and condense various bosonic excitations. This produces a large class of topological CV stabilizer codes, including ones that are characterized by the anyon theories of $U(1)_{2n} \times U(1)_{-2m}$ Chern-Simons theories, for arbitrary pairs of positive integers (n, m). Most notably, this includes anyon theories that are non-chiral and nevertheless do not admit a gapped boundary. It is widely believed that such anyon theories cannot be realized by any stabilizer model on finite-dimensional systems. We conjecture that these CV codes go beyond codes obtained from concatenating a topological qudit code with a local encoding into CVs, and thus constitute the first example of topological codes that are intrinsic to CV systems. Moreover, we study the Hamiltonians associated with the topological CV stabilizer codes and show that, although they have a gapless spectrum, they can become gapped with the addition of a quadratic perturbation. We show that similar methods can be used to construct a gapped Hamiltonian whose anyon theory agrees with a $U(1)_2$ Chern-Simons theory. Our work initiates the study of scalable stabilizer codes that are intrinsic to CV systems and highlights how error-correcting codes can be used to design and analyze many-body systems of CVs that model lattice gauge theories.

Researchers from Cornell University and IBM Almaden Research Center systematically investigated the application of supervised machine learning to sentiment classification, demonstrating that these methods achieved up to 82.9% accuracy on movie reviews, outperforming human-produced baselines. The study found that feature presence was more effective than feature frequency for sentiment analysis.
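The two feature encodings being compared can be illustrated with a toy bag-of-words sketch. The whitespace tokenizer, the vocabulary and the review text below are all hypothetical. The study's own preprocessing and classifiers are not reproduced here.

```python
from collections import Counter

def tokenize(text):
    """Lowercase whitespace tokenization (a deliberately simple assumption)."""
    return text.lower().split()

def frequency_features(text, vocab):
    """Count how many times each vocabulary word occurs in the text."""
    counts = Counter(tokenize(text))
    return [counts[w] for w in vocab]

def presence_features(text, vocab):
    """Binary indicator: 1 if the word occurs at least once, else 0."""
    seen = set(tokenize(text))
    return [1 if w in seen else 0 for w in vocab]

vocab = ["great", "boring", "plot"]  # hypothetical toy vocabulary
review = "great acting great score but a boring boring boring plot"
print(frequency_features(review, vocab))  # [2, 3, 1]
print(presence_features(review, vocab))   # [1, 1, 1]
```

With presence features, repeating "boring" three times carries no more weight than mentioning it once. That is the distinction the finding above refers to.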

05 Feb 2025

A perturbative method using Magnus expansion and Dyson series is introduced to efficiently synthesize effective operational noise models, such as Pauli-Lindblad generators, directly from Lindbladian descriptions of noisy multi-qubit quantum operations. This framework provides first-principles derivations demonstrating the additive nature of noise contributions and how local physical noise and crosstalk can spread into higher-weight error terms depending on the ideal gate performed.
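A Pauli-Lindblad model, as it is commonly defined, writes the noise channel as exp(L). The generator is L(ρ) = Σ_k λ_k (P_k ρ P_k − ρ), with non-negative rates λ_k on Pauli operators P_k. These Pauli terms commute, so each one exponentiates independently to a channel that applies P_k with probability w_k = (1 − e^{−2λ_k})/2. The sketch below computes these probabilities. It also shows that w_k ≈ λ_k for small rates, so separate contributions add to first order. The Pauli labels and rates are made-up illustration values, not derived from any device or from the paper's Magnus/Dyson expansions.

```python
import math

def pauli_flip_probability(rate):
    """Probability w that exp(rate * (P rho P - rho)) applies P.

    Solving d/dt rho = rate * (P rho P - rho) for unit time gives
    rho -> (1 - w) rho + w P rho P with w = (1 - exp(-2 * rate)) / 2.
    """
    if rate < 0:
        raise ValueError("Lindblad rates must be non-negative")
    return (1.0 - math.exp(-2.0 * rate)) / 2.0

# Hypothetical generator: Pauli label -> rate lambda_k (illustrative values only)
rates = {"XI": 1e-3, "IZ": 2e-3, "ZZ": 5e-4}
probs = {p: pauli_flip_probability(r) for p, r in rates.items()}

for pauli, w in probs.items():
    # For small rates w is close to the rate itself (first-order additivity)
    print(pauli, f"w={w:.6e}", f"rate={rates[pauli]:.1e}")
```

For large rates, w saturates at 1/2, which corresponds to full dephasing along P_k.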

Development of efficient and high-performing electrolytes is crucial for advancing energy storage technologies, particularly in batteries. Predicting the performance of battery electrolytes requires accounting for complex interactions between the individual constituents. Consequently, a strategy that adeptly captures these relationships and forms a robust representation of the formulation is essential for integrating with machine learning models to predict properties accurately. In this paper, we introduce a novel approach leveraging a transformer-based molecular representation model to effectively and efficiently capture the representation of electrolyte formulations. The performance of the proposed approach is evaluated on two battery property prediction tasks and the results show superior performance compared to the state-of-the-art methods.

21 Mar 2022

The use of kernel functions is a common technique to extract important features from data sets. A quantum computer can be used to estimate kernel entries as transition amplitudes of unitary circuits. Quantum kernels exist that, subject to computational hardness assumptions, cannot be computed classically. It is an important challenge to find quantum kernels that provide an advantage in the classification of real-world data. We introduce a class of quantum kernels that can be used for data with a group structure. The kernel is defined in terms of a unitary representation of the group and a fiducial state that can be optimized using a technique called kernel alignment. We apply this method to a learning problem on a coset space that embodies the structure of many essential learning problems on groups. We implement the learning algorithm with 27 qubits on a superconducting processor.
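Kernel-target alignment, in its common textbook form, scores how well a kernel matrix K agrees with the ideal kernel y yᵀ built from ±1 labels y. The score is A = ⟨K, y yᵀ⟩_F / (‖K‖_F ‖y yᵀ‖_F). The sketch below computes this score for hand-written matrices. In the paper's setting, the entries would be estimated on quantum hardware and the fiducial state would be tuned. The exact objective used there may differ from this plain alignment score, and the function name is hypothetical.

```python
import math

def kernel_target_alignment(K, y):
    """Alignment <K, y y^T>_F / (||K||_F * ||y y^T||_F) for labels y in {-1, +1}."""
    n = len(y)
    if len(K) != n or any(len(row) != n for row in K):
        raise ValueError("K must be an n x n matrix matching len(y)")
    inner = sum(K[i][j] * y[i] * y[j] for i in range(n) for j in range(n))
    norm_k = math.sqrt(sum(K[i][j] ** 2 for i in range(n) for j in range(n)))
    norm_y = float(n)  # ||y y^T||_F = n when every label is +1 or -1
    return inner / (norm_k * norm_y)

y = [1, 1, -1, -1]
ideal = [[y[i] * y[j] for j in range(4)] for i in range(4)]
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
print(kernel_target_alignment(ideal, y))     # 1.0
print(kernel_target_alignment(identity, y))  # 0.5
```

A kernel optimizer would adjust the fiducial-state parameters to push this kind of score toward 1 on training data.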

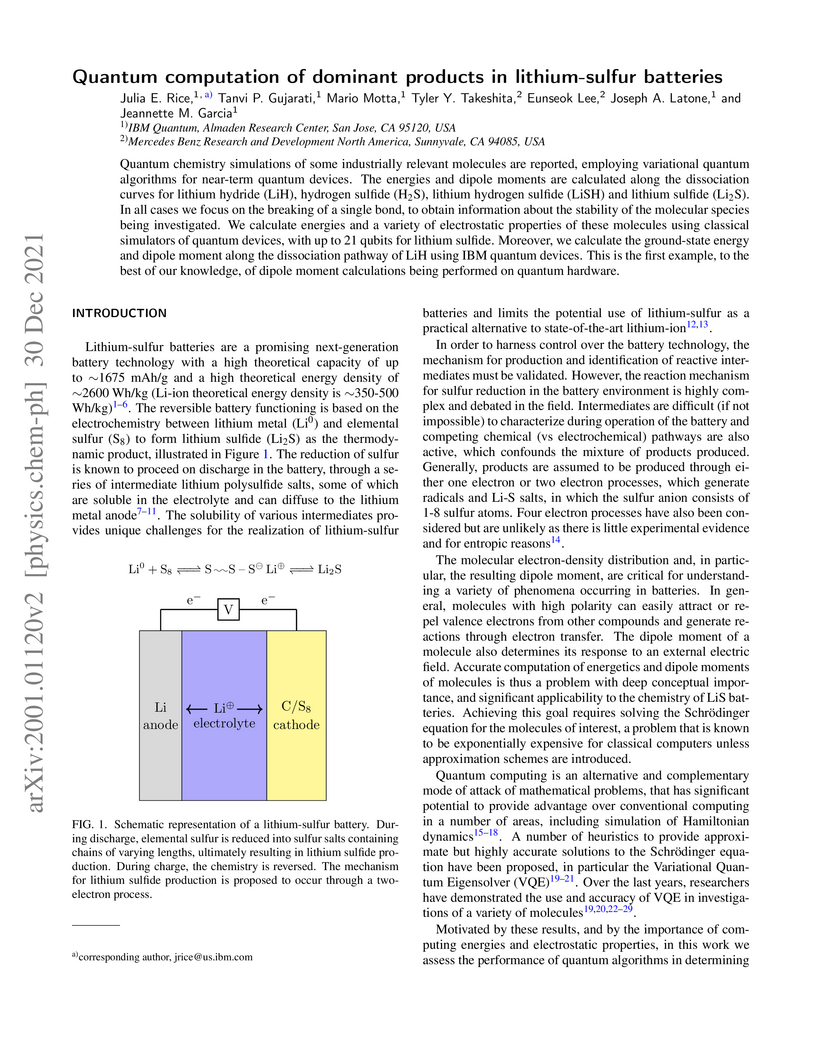

30 Dec 2021

Quantum chemistry simulations of some industrially relevant molecules are reported, employing variational quantum algorithms for near-term quantum devices. The energies and dipole moments are calculated along the dissociation curves for lithium hydride (LiH), hydrogen sulfide, lithium hydrogen sulfide and lithium sulfide. In all cases we focus on the breaking of a single bond, to obtain information about the stability of the molecular species being investigated. We calculate energies and a variety of electrostatic properties of these molecules using classical simulators of quantum devices, with up to 21 qubits for lithium sulfide. Moreover, we calculate the ground-state energy and dipole moment along the dissociation pathway of LiH using IBM quantum devices. This is the first example, to the best of our knowledge, of dipole moment calculations being performed on quantum hardware.

20 Apr 2022

Quantum error correction offers a promising path for performing quantum computations with low errors. Although a fully fault-tolerant execution of a quantum algorithm remains unrealized, recent experimental developments, along with improvements in control electronics, are enabling increasingly advanced demonstrations of the necessary operations for applying quantum error correction. Here, we perform quantum error correction on superconducting qubits connected in a heavy-hexagon lattice. The full processor can encode a logical qubit with distance three and perform several rounds of fault-tolerant syndrome measurements that allow the correction of any single fault in the circuitry. Furthermore, by using dynamic circuits and classical computation as part of our syndrome extraction protocols, we can exploit real-time feedback to reduce the impact of energy relaxation error in the syndrome and flag qubits. We show that the logical error varies depending on the use of a perfect matching decoder compared to a maximum likelihood decoder. We observe a logical error per syndrome measurement round as low as ∼0.04 for the matching decoder and as low as ∼0.03 for the maximum likelihood decoder. Our results suggest that more significant improvements to decoders are likely on the horizon as quantum hardware has reached a new stage of development towards fully fault-tolerant operations.
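A logical error per syndrome-measurement round ε can be related to the total logical error after r rounds with a simple model. The model assumes each round flips the logical state independently with probability ε, giving P_L(r) = (1 − (1 − 2ε)^r)/2. The sketch below evaluates this model and inverts it to recover ε from P_L(r). The independence assumption and the example numbers are illustrative only. The experiment's own fitting procedure may differ.

```python
def total_logical_error(eps, rounds):
    """P_L(r) for r independent rounds, each flipping the logical state w.p. eps."""
    return (1.0 - (1.0 - 2.0 * eps) ** rounds) / 2.0

def error_per_round(p_total, rounds):
    """Invert total_logical_error: eps = (1 - (1 - 2 P_L)^(1/r)) / 2."""
    if not 0.0 <= p_total < 0.5:
        raise ValueError("P_L must lie in [0, 0.5) for the inversion to be defined")
    return (1.0 - (1.0 - 2.0 * p_total) ** (1.0 / rounds)) / 2.0

p_after_5 = total_logical_error(0.04, 5)  # hypothetical: eps = 0.04, 5 rounds
print(round(p_after_5, 4))
print(round(error_per_round(p_after_5, 5), 4))  # recovers 0.04
```

In this model, P_L(r) saturates at 1/2 for many rounds, which is why a per-round figure is the more informative quantity to report.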

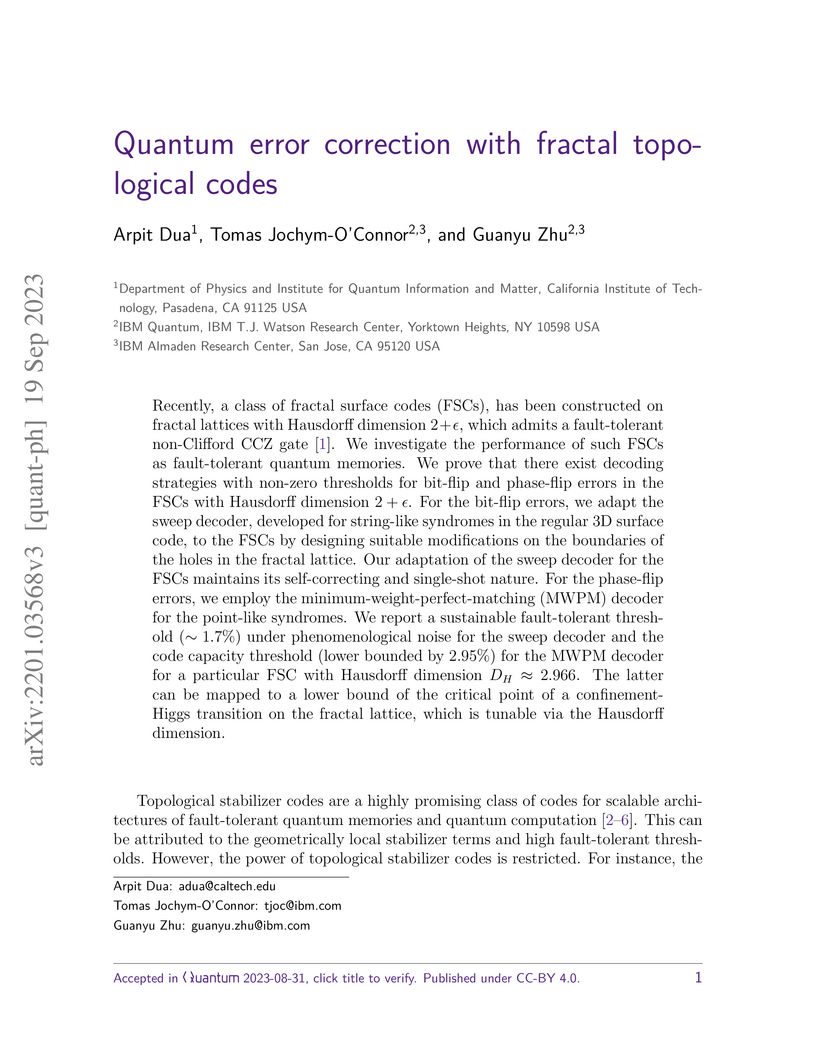

Recently, a class of fractal surface codes (FSCs) has been constructed on fractal lattices with Hausdorff dimension 2+ϵ; these codes admit a fault-tolerant non-Clifford CCZ gate. We investigate the performance of such FSCs as fault-tolerant quantum memories. We prove that there exist decoding strategies with non-zero thresholds for bit-flip and phase-flip errors in the FSCs with Hausdorff dimension 2+ϵ. For the bit-flip errors, we adapt the sweep decoder, developed for string-like syndromes in the regular 3D surface code, to the FSCs by designing suitable modifications on the boundaries of the holes in the fractal lattice. Our adaptation of the sweep decoder for the FSCs maintains its self-correcting and single-shot nature. For the phase-flip errors, we employ the minimum-weight perfect-matching (MWPM) decoder for the point-like syndromes. We report a sustainable fault-tolerant threshold (∼1.7%) under phenomenological noise for the sweep decoder and a code capacity threshold (lower bounded by 2.95%) for the MWPM decoder for a particular FSC with Hausdorff dimension $D_H \approx 2.966$. The latter can be mapped to a lower bound on the critical point of a confinement-Higgs transition on the fractal lattice, which is tunable via the Hausdorff dimension.
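Consider an exactly self-similar fractal built from N copies of itself, each scaled down by a factor s. Under the usual open-set condition, its Hausdorff dimension equals the similarity dimension D_H = log N / log s. As one illustration, which is an assumption and not necessarily the lattice used in the paper, divide a cube into 3 × 3 × 3 subcubes and remove the central one. This gives log 26 / log 3 ≈ 2.966. The function name below is hypothetical.

```python
import math

def similarity_dimension(num_copies, scale_factor):
    """D = log(N) / log(s) for N self-similar copies each scaled by 1/s."""
    if num_copies < 1 or scale_factor <= 1:
        raise ValueError("need N >= 1 copies and scale factor s > 1")
    return math.log(num_copies) / math.log(scale_factor)

# Hypothetical example: 3x3x3 subdivision of a cube with the central subcube removed
print(round(similarity_dimension(26, 3), 3))  # 2.966
# Sanity check: keeping all 27 subcubes gives an ordinary 3D cube
print(round(similarity_dimension(27, 3), 3))  # 3.0
```

Removing more (or fewer) subcubes at each level moves D_H down (or up), which is the sense in which a property tied to the lattice can be tuned via the Hausdorff dimension.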

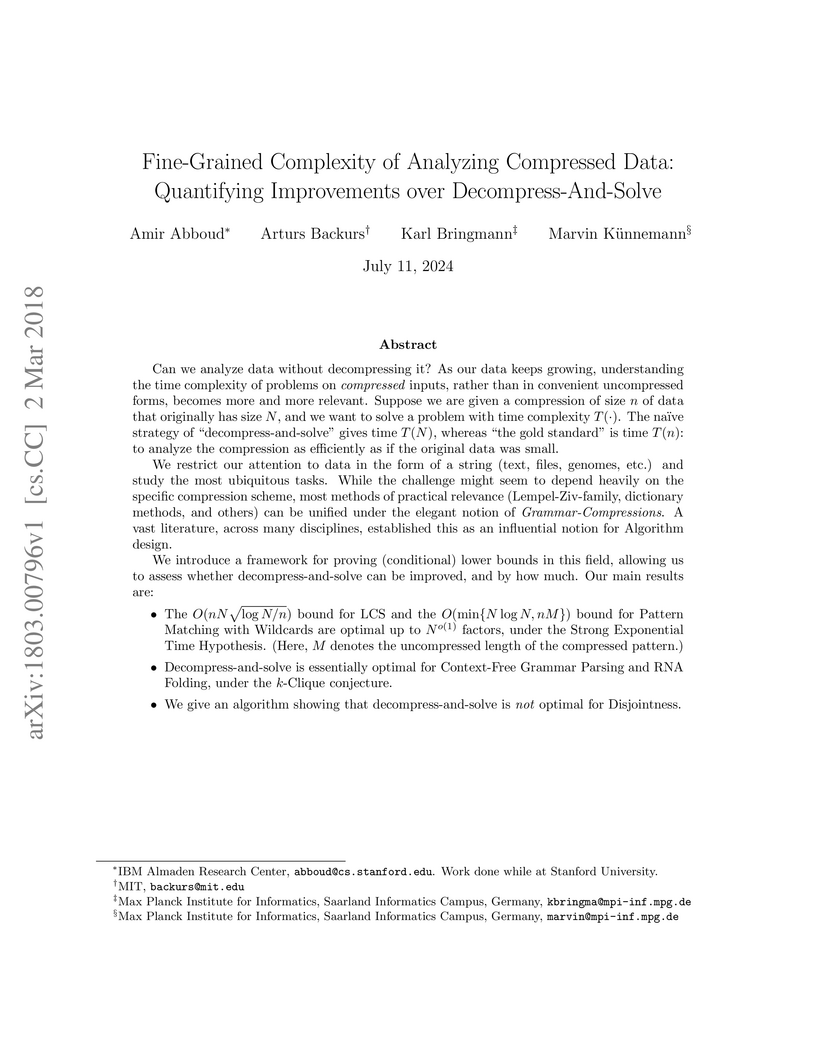

Can we analyze data without decompressing it? As our data keeps growing, understanding the time complexity of problems on compressed inputs, rather than in convenient uncompressed forms, becomes more and more relevant. Suppose we are given a compression of size n of data that originally has size N, and we want to solve a problem with time complexity T(⋅). The naive strategy of "decompress-and-solve" gives time T(N), whereas "the gold standard" is time T(n): to analyze the compression as efficiently as if the original data were small.

We restrict our attention to data in the form of a string (text, files, genomes, etc.) and study the most ubiquitous tasks. While the challenge might seem to depend heavily on the specific compression scheme, most methods of practical relevance (Lempel-Ziv-family, dictionary methods, and others) can be unified under the elegant notion of Grammar Compressions. A vast literature, across many disciplines, established this as an influential notion for algorithm design.

We introduce a framework for proving (conditional) lower bounds in this field, allowing us to assess whether decompress-and-solve can be improved, and by how much. Our main results are:

- The $O(nN\sqrt{\log(N/n)})$ bound for LCS and the $O(\min\{N\log N, nM\})$ bound for Pattern Matching with Wildcards are optimal up to $N^{o(1)}$ factors, under the Strong Exponential Time Hypothesis. (Here, $M$ denotes the uncompressed length of the compressed pattern.)
- Decompress-and-solve is essentially optimal for Context-Free Grammar Parsing and RNA Folding, under the k-Clique conjecture.
- We give an algorithm showing that decompress-and-solve is not optimal for Disjointness.
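Here is a minimal illustration of computing something from a grammar compression without decompressing it. A straight-line program is a grammar in which every nonterminal has exactly one rule: either a single character or a pair of earlier symbols. Its uncompressed length N can be found in O(n) time by dynamic programming over the n rules, even though N can be exponential in n. The rule encoding and function names below are hypothetical choices for this sketch. None of the paper's lower-bound constructions are reproduced.

```python
def slp_lengths(rules):
    """Expanded length of every nonterminal in a straight-line program.

    rules[i] is either a one-character string (terminal rule X_i -> c) or a
    pair (j, k) with j, k < i (binary rule X_i -> X_j X_k).
    Runs in O(n) time and never materialises the expanded string.
    """
    lengths = []
    for i, rule in enumerate(rules):
        if isinstance(rule, str):
            lengths.append(1)
        else:
            j, k = rule
            if not (j < i and k < i):
                raise ValueError("rules must only reference earlier symbols")
            lengths.append(lengths[j] + lengths[k])
    return lengths

def expand(rules, i):
    """Naive decompression of X_i (only for checking small examples)."""
    rule = rules[i]
    if isinstance(rule, str):
        return rule
    return expand(rules, rule[0]) + expand(rules, rule[1])

# X_0 -> 'a' and X_{i+1} -> X_i X_i: 41 rules describe a string of length 2**40
doubling = ["a"] + [(i, i) for i in range(40)]
print(slp_lengths(doubling)[-1])  # 1099511627776
```

Decompress-and-solve would have to write out about 10^12 characters here, while the grammar-level computation touches each of the 41 rules once.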

19 Jan 2021

Implementation of high-fidelity two-qubit operations is a key ingredient for scalable quantum error correction. In superconducting qubit architectures, tunable buses have been explored as a means to higher-fidelity gates. However, these buses introduce new pathways for leakage. Here we present a modified tunable bus architecture appropriate for fixed-frequency qubits in which the adiabaticity restrictions on gate speed are reduced. We characterize this coupler on a range of two-qubit devices, achieving a maximum gate fidelity of 99.85%. We further show that the calibration is stable over one day.

Interest in the magnetism of organic compounds is growing because of new organic magnets, spin-based electronics, and the diverse properties of magnetic edge states in graphene nanoribbons. Electron spin resonance spectroscopy combined with scanning tunneling microscopy has recently been developed as a powerful tool to address individual magnetic atoms and molecules at the atomic scale. Here we demonstrate electron spin resonance and magnetic resonance imaging of all-organic radical anions adsorbed on a protective thin insulating film grown on a metal support. We show that using the highly localized exchange field of the magnetic tip apex allows visualization of the delocalized spin density with sub-molecular resolution, enabling spin-density tomography that can distinguish similar molecular species. These results provide new opportunities for visualizing spin density and magnetic interactions at the atomic scale.

23 Oct 2025

High-fidelity, meter-scale microwave interconnects between superconducting quantum processor modules are a key technology for extending system size beyond constraints imposed by device manufacturing equipment, yield, and signal delivery. Although tomographic experiments have been used in previous demonstrations for benchmarking remote state transfer between modules, they do not reliably separate State Preparation and Measurement (SPAM) error from the error per state transfer. Recent developments based on randomized benchmarking provide a compatible theory for separating these two errors. In this work, we present a module-to-module interconnect based on Tunable-Coupling Qubits (TCQs) and benchmark, in a SPAM-error-tolerant manner enabled by a frame-tracking technique, a remote state transfer fidelity of 0.988 across a 60-cm-long coplanar waveguide (CPW). The state transfer is implemented via a superadiabatic transitionless driving method, which suppresses intermediate excitation in the internal modes of the CPW. We further propose and construct a remote CNOT gate between modules, composed of local CZ gates in each module and remote state transfers, and report a gate fidelity of 0.933 using the randomized benchmarking method. The remote CNOT construction and benchmarking we present provide a way to fully characterize the module-to-module link operation and standardize fidelity reporting, analogous to randomized benchmarking protocols for other quantum gates.
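In standard randomized benchmarking, the survival probability after m random gates is fit to A·p^m + B. The constants A and B absorb SPAM errors, so only the decay parameter p characterizes the gates. The average gate fidelity is then F = 1 − (1 − p)(d − 1)/d, with dimension d = 2^n for n qubits. The sketch below generates noiseless synthetic decay data with hypothetical values of A, B and p. It estimates p from ratios of successive differences, which cancel both A and B, and then converts p to a fidelity. It illustrates only the generic RB relation, not the paper's frame-tracking protocol, and the function names are hypothetical.

```python
def rb_average_fidelity(p, num_qubits):
    """Average gate fidelity from the RB decay parameter p, with d = 2**n."""
    d = 2 ** num_qubits
    return 1.0 - (1.0 - p) * (d - 1) / d

def decay_from_differences(survival, step):
    """Estimate p from survival probabilities at lengths m0, m0+step, m0+2*step, ...

    For y_m = A p**m + B, (y_{m+s} - y_{m+2s}) / (y_m - y_{m+s}) = p**s,
    so the SPAM-dependent constants A and B cancel. Averaging the ratios is
    a crude choice; a least-squares fit would be used on real, noisy data.
    """
    ratios = [
        (survival[i + 1] - survival[i + 2]) / (survival[i] - survival[i + 1])
        for i in range(len(survival) - 2)
    ]
    return (sum(ratios) / len(ratios)) ** (1.0 / step)

# Hypothetical synthetic data: A = 0.45, B = 0.5, p = 0.99, lengths 0, 10, ..., 90
A, B, p_true = 0.45, 0.5, 0.99
lengths = range(0, 100, 10)
survival = [A * p_true ** m + B for m in lengths]
p_est = decay_from_differences(survival, step=10)
print(round(p_est, 6), round(rb_average_fidelity(p_est, 1), 6))
```

For a two-qubit operation such as a CNOT, d = 4, so the same p corresponds to a larger infidelity factor of (d − 1)/d = 3/4.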
