Ikerbasque Foundation for Science

This research from Multiverse Computing and academic partners introduces CompactifAI, a method leveraging quantum-inspired tensor networks for extreme compression of Large Language Models. It successfully reduced the LLaMA-2 7B model by 93% in memory and 70% in parameters, while maintaining accuracy within a 2-3% deviation and accelerating training by 50% and inference by over 25%.

The classical Heisenberg model in two spatial dimensions is one of the most paradigmatic spin models, playing an important role in statistical and condensed matter physics for understanding magnetism. Still, despite its paradigmatic character and the widely accepted absence of spontaneous breaking of a continuous symmetry, controversy remains over whether the model exhibits a phase transition at finite temperature. Importantly, the model can be interpreted as a lattice discretization of the O(3) non-linear sigma model in 1+1 dimensions, one of the simplest quantum field theories encompassing crucial features of celebrated higher-dimensional ones (like quantum chromodynamics in 3+1 dimensions), namely the phenomenon of asymptotic freedom. This should also exclude finite-temperature transitions, but lattice effects might play a significant role in correcting the mainstream picture. In this work, we make use of state-of-the-art tensor network approaches, representing the classical partition function in the thermodynamic limit over a large range of temperatures, to comprehensively explore the correlation structure of Gibbs states. By implementing an SU(2) symmetry in our two-dimensional tensor network contraction scheme, we are able to handle very large effective bond dimensions of the environment, up to $\chi_E^{\mathrm{eff}} \sim 1500$, a feature that is crucial in detecting phase transitions. With decreasing temperature, we find a rapidly diverging correlation length, whose behaviour is apparently compatible with the two main contradictory hypotheses known in the literature, namely a finite-T transition and asymptotic freedom, though with a slight preference for the second.
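
For orientation, the model referred to in this abstract is conventionally written as below. The coupling $J$, the inverse temperature $\beta$, and the ferromagnetic sign convention are standard textbook choices assumed here, not notation taken from the abstract itself.

```latex
H = -J \sum_{\langle i,j \rangle} \mathbf{s}_i \cdot \mathbf{s}_j ,
\qquad \mathbf{s}_i \in \mathbb{R}^3,\; |\mathbf{s}_i| = 1 ,
\qquad
Z(\beta) = \int \prod_i \mathrm{d}\Omega_i \; e^{-\beta H} .
```

Here $\langle i,j \rangle$ runs over nearest-neighbour pairs of the two-dimensional lattice and $\mathrm{d}\Omega_i$ is the measure on the unit sphere at site $i$; $Z(\beta)$ is the classical partition function that the tensor network represents.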

Variational quantum algorithms are gaining attention as an early application of Noisy Intermediate-Scale Quantum (NISQ) devices. One of the main problems of variational methods lies in the phenomenon of Barren Plateaus, present in the optimization of variational parameters. Adding geometric inductive bias to the quantum models has been proposed as a potential solution to mitigate this problem, leading to a new field called Geometric Quantum Machine Learning. In this work, an equivariant architecture for variational quantum classifiers is introduced to create a label-invariant model for image classification with C4 rotational label symmetry. The equivariant circuit is benchmarked against two different architectures, and it is experimentally observed that the geometric approach boosts the model's performance. Finally, a classical equivariant convolution operation is proposed to extend the quantum model for the processing of larger images, employing the resources available in NISQ devices.

We propose a method to enhance the performance of Large Language Models (LLMs) by integrating quantum computing and quantum-inspired techniques. Specifically, our approach involves replacing the weight matrices in the Self-Attention and Multi-layer Perceptron layers with a combination of two variational quantum circuits and a quantum-inspired tensor network, such as a Matrix Product Operator (MPO). This substitution enables the reproduction of classical LLM functionality by decomposing weight matrices through the application of tensor network disentanglers and MPOs, leveraging well-established tensor network techniques. By incorporating more complex and deeper quantum circuits, along with increasing the bond dimensions of the MPOs, our method captures additional correlations within the quantum-enhanced LLM, leading to improved accuracy beyond classical models while maintaining low memory overhead.
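
To make the Matrix Product Operator idea concrete, here is a minimal sketch of how a dense weight matrix can be split into two MPO cores by a truncated SVD. It illustrates only the classical MPO ingredient, not the variational quantum circuits or disentanglers of the proposal; the function names, the two-core layout, and the index factorization are assumptions made for illustration.

```python
import numpy as np

def matrix_to_two_site_mpo(W, dims_out, dims_in, chi):
    """Split W of shape (o1*o2, i1*i2) into two cores with bond dimension <= chi."""
    o1, o2 = dims_out
    i1, i2 = dims_in
    # Expose the factorized indices, then group (o1, i1) against (o2, i2).
    T = W.reshape(o1, o2, i1, i2).transpose(0, 2, 1, 3).reshape(o1 * i1, o2 * i2)
    U, S, Vh = np.linalg.svd(T, full_matrices=False)
    k = min(chi, len(S))                       # keep the k largest singular values
    A = (U[:, :k] * S[:k]).reshape(o1, i1, k)  # first core: (out1, in1, bond)
    B = Vh[:k, :].reshape(k, o2, i2)           # second core: (bond, out2, in2)
    return A, B

def mpo_to_matrix(A, B):
    """Contract the bond index and restore the (o1*o2, i1*i2) matrix layout."""
    o1, i1, _ = A.shape
    _, o2, i2 = B.shape
    T = np.einsum("aik,kbj->abij", A, B)
    return T.reshape(o1 * o2, i1 * i2)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W = rng.standard_normal((16, 16))
    A, B = matrix_to_two_site_mpo(W, (4, 4), (4, 4), chi=4)
    rel_err = np.linalg.norm(W - mpo_to_matrix(A, B)) / np.linalg.norm(W)
    print("parameters:", W.size, "->", A.size + B.size, "relative error:", rel_err)
```

Raising `chi` increases the number of parameters and lowers the reconstruction error, which is the trade-off the abstract refers to when it mentions increasing the bond dimension. A random matrix compresses poorly; the sketch only shows the mechanics.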

In this paper we tackle the problem of dynamic portfolio optimization, i.e., determining the optimal trading trajectory for an investment portfolio of assets over a period of time, taking into account transaction costs and other possible constraints. This problem is central to quantitative finance. After a detailed introduction to the problem, we implement a number of quantum and quantum-inspired algorithms on different hardware platforms to solve its discrete formulation using real data from daily prices over 8 years of 52 assets, and do a detailed comparison of the obtained Sharpe ratios, profits and computing times. In particular, we implement classical solvers (Gekko, exhaustive), D-Wave Hybrid quantum annealing, two different approaches based on Variational Quantum Eigensolvers on IBM-Q (one of them brand-new and tailored to the problem), and for the first time in this context also a quantum-inspired optimizer based on Tensor Networks. In order to fit the data into each specific hardware platform, we also consider doing a preprocessing based on clustering of assets. From our comparison, we conclude that D-Wave Hybrid and Tensor Networks are able to handle the largest systems, where we do calculations up to 1272 fully-connected qubits for demonstrative purposes. Finally, we also discuss how to mathematically implement other possible real-life constraints, as well as several ideas to further improve the performance of the studied methods.

The framework employs Matrix Product Operators (MPOs) for deep neural network weights and a variational DMRG-like training algorithm to address scalability and interpretability challenges. It demonstrates competitive accuracy on various tasks, including 95.8% on MNIST after one epoch, and provides novel insights into model parameter correlations via entanglement entropy, adapting its structure based on data complexity.

Tensor network states and methods have erupted in recent years. Originally developed in the context of condensed matter physics and based on renormalization group ideas, tensor networks experienced a revival thanks to quantum information theory and the understanding of entanglement in quantum many-body systems. Moreover, it has recently been realized that tensor network states play a key role in other scientific disciplines, such as quantum gravity and artificial intelligence. In this context, here we provide an overview of basic concepts and key developments in the field. In particular, we briefly discuss the most important tensor network structures and algorithms, together with a sketch of advances related to global and gauge symmetries, fermions, topological order, classification of phases, entanglement Hamiltonians, AdS/CFT, artificial intelligence, the 2d Hubbard model, 2d quantum antiferromagnets, conformal field theory, quantum chemistry, disordered systems, and many-body localization.

In this work, we demonstrate how to apply non-linear cardinality constraints, important for real-world asset management, to quantum portfolio optimization. This enables us to tackle non-convex portfolio optimization problems using quantum annealing that would otherwise be challenging for classical algorithms. Being able to use cardinality constraints for portfolio optimization opens the doors to new applications for creating innovative portfolios and exchange-traded-funds (ETFs). We apply the methodology to the practical problem of enhanced index tracking and are able to construct smaller portfolios that significantly outperform the risk profile of the target index whilst retaining high degrees of tracking.

Here we introduce an improved approach to Variational Quantum Attack Algorithms (VQAA) on cryptographic protocols. Our methods provide robust quantum attacks on well-known cryptographic algorithms, more efficiently and with remarkably fewer qubits than previous approaches. We implement simulations of our attacks for symmetric-key protocols such as S-DES, S-AES and Blowfish. For instance, we show how our attack allows a classical simulation of a small 8-qubit quantum computer to find the secret key of one 32-bit Blowfish instance with 24 times fewer iterations than a brute-force attack. Our work also shows improvements in attack success rates for lightweight ciphers such as S-DES and S-AES. Further applications beyond symmetric-key cryptography are also discussed, including asymmetric-key protocols and hash functions. In addition, we comment on potential future improvements of our methods. Our results bring us one step closer to assessing the vulnerability of large-size classical cryptographic protocols with Noisy Intermediate-Scale Quantum (NISQ) devices, and set the stage for future research in quantum cybersecurity.

Convolutional neural networks (CNNs) are one of the most widely used neural network architectures, showcasing state-of-the-art performance in computer vision tasks. Although larger CNNs generally exhibit higher accuracy, their size can be effectively reduced by "tensorization" while maintaining accuracy, namely, replacing the convolution kernels with compact decompositions such as Tucker, Canonical Polyadic decompositions, or quantum-inspired decompositions such as matrix product states, and directly training the factors in the decompositions to bias the learning towards low-rank decompositions. But why doesn't tensorization seem to impact the accuracy adversely? We explore this by assessing how truncating the convolution kernels of dense (untensorized) CNNs impacts their accuracy. Specifically, we truncated the kernels of (i) a vanilla four-layer CNN and (ii) ResNet-50 pre-trained for image classification on CIFAR-10 and CIFAR-100 datasets. We found that kernels (especially those inside deeper layers) could often be truncated along several cuts, resulting in significant loss in kernel norm but not in classification accuracy. This suggests that such "correlation compression" (underlying tensorization) is an intrinsic feature of how information is encoded in dense CNNs. We also found that aggressively truncated models could often recover the pre-truncation accuracy after only a few epochs of re-training, suggesting that compressing the internal correlations of convolution layers does not often transport the model to a worse minimum. Our results can be applied to tensorize and compress CNN models more effectively.
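
The notion of truncating a kernel "along a cut" can be illustrated with a short sketch: choose a bipartition of the kernel's indices, reshape the kernel into a matrix across that bipartition, and keep only the largest singular values. The kernel layout `(out_channels, in_channels, height, width)`, the function name, and the definition of norm loss used here are assumptions for illustration; the abstract does not specify them.

```python
import numpy as np

def truncate_along_cut(kernel, left_axes, rank):
    """Truncate `kernel` to `rank` singular values across the cut left_axes | rest."""
    right_axes = [a for a in range(kernel.ndim) if a not in left_axes]
    perm = list(left_axes) + right_axes
    K = np.transpose(kernel, perm)             # bring the left indices to the front
    rows = int(np.prod(K.shape[:len(left_axes)]))
    M = K.reshape(rows, -1)                    # matrix across the chosen cut
    U, S, Vh = np.linalg.svd(M, full_matrices=False)
    r = min(rank, len(S))
    M_trunc = (U[:, :r] * S[:r]) @ Vh[:r, :]   # best rank-r approximation
    # Undo the reshape and the permutation to recover the original layout.
    truncated = np.transpose(M_trunc.reshape(K.shape), np.argsort(perm))
    # Fraction of the Frobenius norm removed by the truncation.
    norm_loss = 1.0 - np.linalg.norm(truncated) / np.linalg.norm(kernel)
    return truncated, norm_loss

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    kernel = rng.standard_normal((8, 4, 3, 3))  # random stand-in for a trained kernel
    _, loss = truncate_along_cut(kernel, left_axes=[0], rank=2)
    print("relative norm loss at rank 2:", loss)
```

In an experiment of the kind described, the truncated kernel would be written back into the network and the classification accuracy re-measured; that step depends on the training framework and is not shown.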

The growing complexity of radar signals demands responsive and accurate detection systems that can operate efficiently on resource-constrained edge devices. Existing models, while effective, often rely on substantial computational resources and large datasets, making them impractical for edge deployment. In this work, we propose an ultralight hybrid neural network optimized for edge applications, delivering robust performance across unfavorable signal-to-noise ratios (mean accuracy of 96.3% at 0 dB) using less than 100 samples per class, and significantly reducing computational overhead.

18 Nov 2024

The application of Tensor Networks (TN) in quantum computing has shown promise, particularly for data loading. However, the assumption that data is readily available often renders the integration of TN techniques into Quantum Monte Carlo (QMC) inefficient, as complete probability distributions would have to be calculated classically. In this paper the tensor-train cross approximation (TT-cross) algorithm is evaluated as a means to address the probability loading problem. We demonstrate the effectiveness of this method on financial distributions, showcasing the TT-cross approach's scalability and accuracy. Our results indicate that the TT-cross method significantly improves circuit depth scalability compared to traditional methods, offering a more efficient pathway for implementing QMC on near-term quantum hardware. The approach also shows high accuracy and scalability in handling high-dimensional financial data, making it a promising solution for quantum finance applications.

The research introduces a tensor network (TN) approach to overcome the curse of dimensionality in Next-Generation Reservoir Computing (NGRC) for chaotic time series prediction. This method represents Volterra kernel coefficients using a Matrix Product Operator, significantly reducing parameter count and training time while achieving superior prediction accuracy across a wide range of chaotic systems compared to Echo State Networks.

Partial Differential Equations (PDEs) are used to model a variety of dynamical systems in science and engineering. Recent advances in deep learning have enabled us to solve them in a higher dimension by addressing the curse of dimensionality in new ways. However, deep learning methods are constrained by training time and memory. To tackle these shortcomings, we implement Tensor Neural Networks (TNN), a quantum-inspired neural network architecture that leverages Tensor Network ideas to improve upon deep learning approaches. We demonstrate that TNN provide significant parameter savings while attaining the same accuracy as compared to the classical Dense Neural Network (DNN). In addition, we also show how TNN can be trained faster than DNN for the same accuracy. We benchmark TNN by applying them to solve parabolic PDEs, specifically the Black-Scholes-Barenblatt equation, widely used in financial pricing theory, empirically showing the advantages of TNN over DNN. Further examples, such as the Hamilton-Jacobi-Bellman equation, are also discussed.

In this paper we show how to implement in a simple way some complex real-life constraints on the portfolio optimization problem, so that it becomes amenable to quantum optimization algorithms. Specifically, first we explain how to obtain the best investment portfolio with a given target risk. This is important in order to produce portfolios with different risk profiles, as typically offered by financial institutions. Second, we show how to implement individual investment bands, i.e., minimum and maximum possible investments for each asset. This is also important in order to impose diversification and avoid corner solutions. Quite remarkably, we show how to build the constrained cost function as a quadratic unconstrained binary optimization (QUBO) problem, this being the natural input of quantum annealers. The validity of our implementation is proven by finding the optimal portfolios, using D-Wave Hybrid and its Advantage quantum processor, on portfolios built with all the assets from S&P100 and S&P500. Our results show how practical daily constraints found in quantitative finance can be implemented in a simple way on current NISQ quantum processors, with real data, and under realistic market conditions. In combination with clustering algorithms, our methods would allow one to replicate the behaviour of more complex indexes, such as Nasdaq Composite or others, in turn being particularly useful to build and replicate Exchange Traded Funds (ETF).
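
As a generic illustration of how a constraint can be folded into a QUBO, the sketch below encodes a toy asset-selection problem with a quadratic penalty that fixes the number of selected assets. This is a standard penalty construction, not the target-risk or investment-band encoding of the paper; the function names, the parameters `gamma`, `penalty`, and `k`, and the toy data are all assumptions.

```python
import itertools
import numpy as np

def build_qubo(mu, sigma, gamma, penalty, k):
    """QUBO for: minimize gamma * x^T Sigma x - mu^T x + penalty * (sum(x) - k)^2.

    Uses x_i^2 = x_i for binary x, so linear terms sit on the diagonal of Q.
    Returns Q and the constant offset penalty * k^2 dropped from x^T Q x.
    """
    n = len(mu)
    Q = gamma * np.asarray(sigma, dtype=float) + penalty * np.ones((n, n))
    Q[np.diag_indices(n)] += -np.asarray(mu, dtype=float) - 2.0 * penalty * k
    return Q, penalty * k ** 2

def brute_force_minimum(Q):
    """Exhaustive search over all bitstrings; only feasible for small n."""
    best_x, best_e = None, np.inf
    for bits in itertools.product((0, 1), repeat=Q.shape[0]):
        x = np.array(bits)
        e = float(x @ Q @ x)
        if e < best_e:
            best_x, best_e = bits, e
    return best_x, best_e

if __name__ == "__main__":
    mu = [0.10, 0.20, 0.15, 0.05]   # toy expected returns (made up)
    sigma = 0.05 * np.eye(4)        # toy covariance matrix (made up)
    Q, offset = build_qubo(mu, sigma, gamma=1.0, penalty=10.0, k=2)
    x, e = brute_force_minimum(Q)
    print("selected assets:", x, "cost:", e + offset)
```

The matrix `Q` is the kind of object a quantum annealer or hybrid solver takes as input; the exhaustive search stands in for that solver so the sketch runs without any quantum SDK.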

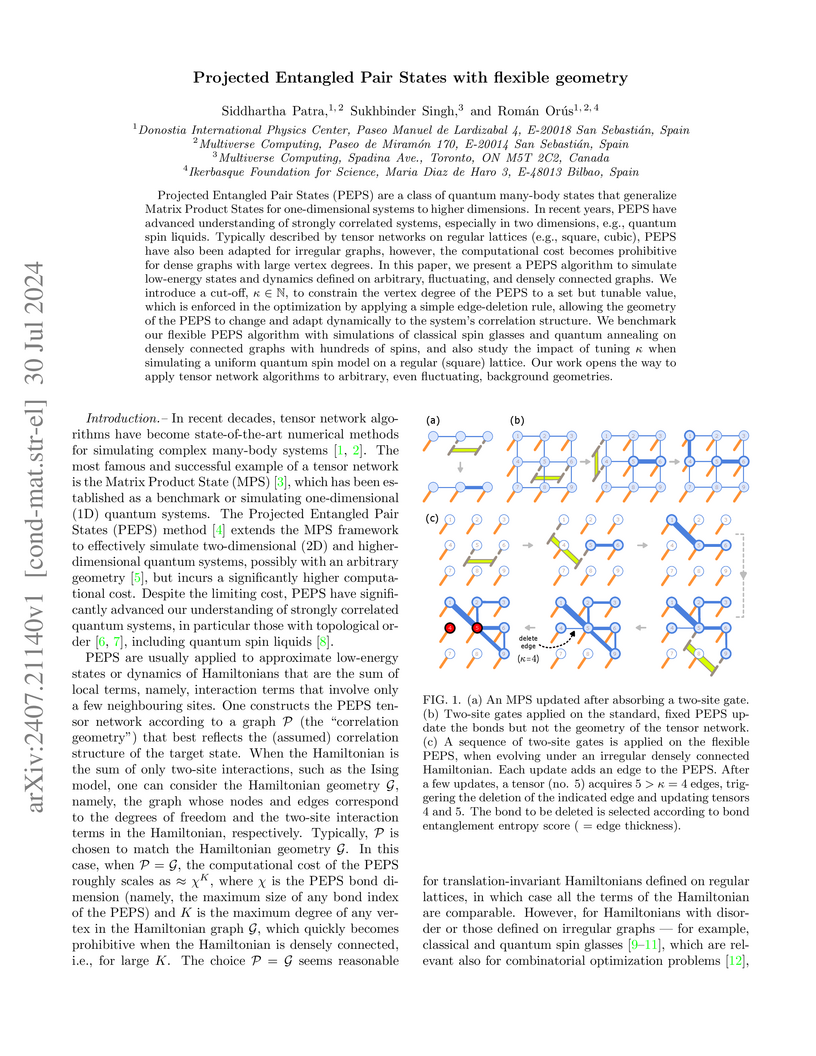

Projected Entangled Pair States (PEPS) are a class of quantum many-body states that generalize Matrix Product States for one-dimensional systems to higher dimensions. In recent years, PEPS have advanced understanding of strongly correlated systems, especially in two dimensions, e.g., quantum spin liquids. Typically described by tensor networks on regular lattices (e.g., square, cubic), PEPS have also been adapted for irregular graphs; however, the computational cost becomes prohibitive for dense graphs with large vertex degrees. In this paper, we present a PEPS algorithm to simulate low-energy states and dynamics defined on arbitrary, fluctuating, and densely connected graphs. We introduce a cut-off, $\kappa \in \mathbb{N}$, to constrain the vertex degree of the PEPS to a fixed but tunable value, which is enforced in the optimization by applying a simple edge-deletion rule, allowing the geometry of the PEPS to change and adapt dynamically to the system's correlation structure. We benchmark our flexible PEPS algorithm with simulations of classical spin glasses and quantum annealing on densely connected graphs with hundreds of spins, and also study the impact of tuning $\kappa$ when simulating a uniform quantum spin model on a regular (square) lattice. Our work opens the way to apply tensor network algorithms to arbitrary, even fluctuating, background geometries.

03 Jul 2025

Photonic Integrated Circuits (PICs) provide superior speed, bandwidth, and energy efficiency, making them ideal for communication, sensing, and quantum computing applications. Despite their potential, PIC design workflows and integration lag behind those in electronics, calling for groundbreaking advancements. This review outlines the state of PIC design, comparing traditional simulation methods with machine learning approaches that enhance scalability and efficiency. It also explores the promise of quantum algorithms and quantum-inspired methods to address design challenges.

Tensor network methods have become a powerful class of tools to capture strongly correlated matter, but methods to capture the experimentally ubiquitous family of models at finite temperature beyond one spatial dimension are largely lacking. We introduce a tensor network algorithm able to simulate thermal states of two-dimensional quantum lattice systems in the thermodynamic limit. The method develops instances of projected entangled pair states and projected entangled pair operators for this purpose. The key feature of this algorithm is that it mimics the cooling of the system from an infinite-temperature state until it reaches the desired finite-temperature regime. As a benchmark we study the finite-temperature phase transition of the Ising model on an infinite square lattice, for which we obtain remarkable agreement with the exact solution. We then turn to study the finite-temperature Bose-Hubbard model in the limits of two (hard-core) and three bosonic modes per site. Our technique can be used to support the experimental study of actual effectively two-dimensional materials in the laboratory, as well as to benchmark optical lattice quantum simulators with ultra-cold atoms.

17 Feb 2019

We present a general graph-based Projected Entangled-Pair State (gPEPS) algorithm to approximate ground states of nearest-neighbor local Hamiltonians on any lattice or graph of infinite size. By introducing the structural matrix, which encodes the details of tensor networks on any graph in any dimension d, we are able to produce a code that can essentially be launched to simulate any lattice. We further introduce an optimized algorithm to compute simple tensor updates as well as expectation values and correlators with mean-field-like effective environments. Though not variational, this strategy allows one to cope with PEPS of very large bond dimension (e.g., D=100), and produces remarkably accurate results in the thermodynamic limit in many situations, especially when the correlation length is small and the connectivity of the lattice is large. We prove the validity of our approach by benchmarking the algorithm against known results for several models, namely the antiferromagnetic Heisenberg model on a chain, star and cubic lattices, the hardcore Bose-Hubbard model on the square lattice, the ferromagnetic Heisenberg model in a field on the pyrochlore lattice, as well as the 3-state quantum Potts model in a field on the kagome lattice and the spin-1 bilinear-biquadratic Heisenberg model on the triangular lattice. We further demonstrate the performance of gPEPS by studying the quantum phase transition of the 2d quantum Ising model in a transverse magnetic field on the square lattice, and the phase diagram of the Kitaev-Heisenberg model on the hyperhoneycomb lattice. Our results are in excellent agreement with previous studies.

Coupling a quantum many-body system to an external environment dramatically changes its dynamics and offers novel possibilities not found in closed systems. Of special interest are the properties of the steady state of such open quantum many-body systems, as well as the relaxation dynamics towards the steady state. However, new computational tools are required to simulate open quantum many-body systems, as methods developed for closed systems cannot be readily applied. We review several approaches to simulate open many-body systems and point out the advances made in recent years towards the simulation of large system sizes.
