Kyushu University

11 Jun 2024

Addressing collective issues in social development requires a high level of social cohesion, characterized by cooperation and close social connections. However, social cohesion is challenged by selfish, greedy individuals. With the advancement of artificial intelligence (AI), the dynamics of human-machine hybrid interactions introduce new complexities in fostering social cohesion. This study explores the impact of simple bots on social cohesion from the perspective of human-machine hybrid populations within network. By investigating collective self-organizing movement during migration, results indicate that cooperative bots can promote cooperation, facilitate individual aggregation, and thereby enhance social cohesion. The random exploration movement of bots can break the frozen state of greedy population, help to separate defectors in cooperative clusters, and promote the establishment of cooperative clusters. However, the presence of defective bots can weaken social cohesion, underscoring the importance of carefully designing bot behavior. Our research reveals the potential of bots in guiding social self-organization and provides insights for enhancing social cohesion in the era of human-machine interaction within social networks.

We propose a method to explore the flavor structure of quarks and leptons

with reinforcement learning. As a concrete model, we utilize a basic

value-based algorithm for models with U(1) flavor symmetry. By training

neural networks on the U(1) charges of quarks and leptons, the agent finds 21

models to be consistent with experimentally measured masses and mixing angles

of quarks and leptons. In particular, an intrinsic value of normal ordering

tends to be larger than that of inverted ordering, and the normal ordering is

well fitted with the current experimental data in contrast to the inverted

ordering. A specific value of effective mass for the neutrinoless double beta

decay and a sizable leptonic CP violation induced by an angular component of

flavon field are predicted by autonomous behavior of the agent. Our finding

results indicate that the reinforcement learning can be a new method for

understanding the flavor structure.

17 Apr 2025

A scalable and exact optimization framework is developed for cardinality-constrained Poisson regression, allowing precise feature selection in high-dimensional count data. The approach solves problems with tens of thousands of features to near-perfect optimality, a scale previously intractable for exact methods.

14 Nov 2025

To simulate plasma phenomena, large-scale computational resources have been employed in developing high-precision and high-resolution plasma simulations. One of the main obstacles in plasma simulations is the requirement of computational resources that scale polynomially with the number of spatial grids, which poses a significant challenge for large-scale modeling. To address this issue, this study presents a quantum algorithm for simulating the nonlinear electromagnetic fluid dynamics that govern space plasmas. We map it, by applying Koopman-von Neumann linearization, to the Schrödinger equation and evolve the system using Hamiltonian simulation via quantum singular value transformation. Our algorithm scales O(sNxpolylog(Nx)T) in time complexity with s, Nx, and T being the spatial dimension, the number of spatial grid points per dimension, and the evolution time, respectively. Comparing the scaling O(sNxs(T5/4+TNx)) for the classical method with the finite volume scheme, this algorithm achieves polynomial speedup in Nx. The space complexity of this algorithm is exponentially reduced from O(sNxs) to O(spolylog(Nx)). Numerical experiments validate that accurate solutions are attainable with smaller m than theoretically anticipated and with practical values of m and R, underscoring the feasibility of the approach. As a practical demonstration, the method accurately reproduces the Kelvin-Helmholtz instability, underscoring its capability to tackle more intricate nonlinear dynamics. These results suggest that quantum computing can offer a viable pathway to overcome the computational barriers of multiscale plasma modeling.

We use the thermal effective theory to prove that, for the vacuum state in

any conformal field theory in d dimensions, the n-th R\'enyi entropy

SA(n) behaves as $S_A^{(n)} = \frac{f}{(2\pi n)^{d-1}} \frac{ {\rm

Area}(\partial A)}{(d-2)\epsilon^{d-2}}\left(1+O(n)\right)inthen

\rightarrow 0limitwhentheboundaryoftheentanglementdomainA$ is

spherical with the UV cutoff ϵ.The theory dependence is encapsulated

in the cosmological constant f in the thermal effective action. Using this

result, we estimate the density of states for large eigenvalues of the modular

Hamiltonian for the domain A. In two dimensions, we can use the hot spot idea

to derive more powerful formulas valid for arbitrary positive n. We discuss

the difference between two and higher dimensions and clarify the applicability

of the hot spot idea. We also use the thermal effective theory to derive an

analog of the Cardy formula for boundary operators in higher dimensions.

25 Aug 2025

We investigate crosscap states in two-dimensional rational conformal field theories (RCFTs), with an emphasis on the role of non-invertible symmetries. In particular, we argue for the existence of crosscap states labelled by each Verlinde line in the RCFT, extending previous constructions involving simple currents. Evidence for the existence of these new states is obtained by deriving a generalized Cardy condition incorporating both crosscaps and topological defects, which we check in some concrete examples. Finally, we briefly discuss how these crosscap states transform under the action of Verlinde lines, as well as the connection to mixed anomalies between parity and internal symmetries.

A survey from researchers across leading institutions in China, New Zealand, and Japan provides an integrated overview of cooperation within social dilemmas by examining multi-agent, human-agent, and AI-enhanced human-human interactions. The work synthesizes approaches for designing cooperative AI agents, analyzes the complexities of human-AI collaboration including human biases, and explores how AI can foster greater cooperation among humans.

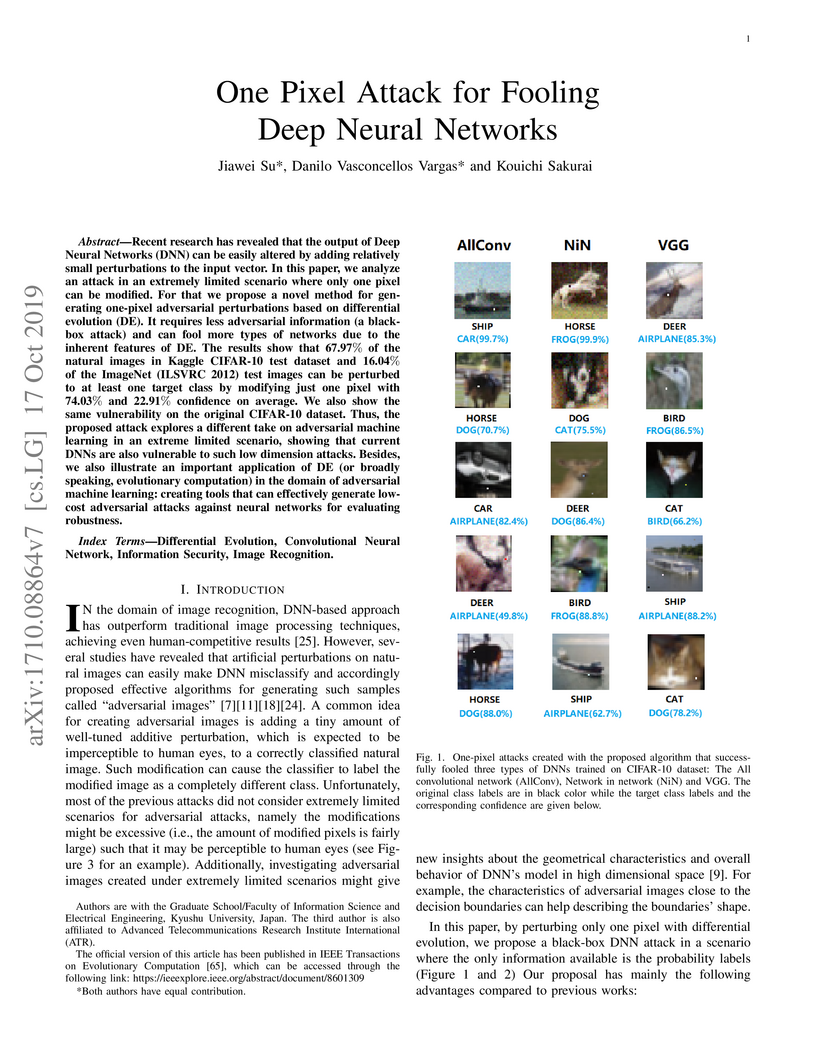

Researchers from Kyushu University demonstrated that Deep Neural Networks are vulnerable to adversarial examples crafted by modifying just one pixel in an image, a perturbation virtually imperceptible to humans. Using a black-box Differential Evolution approach, the method achieved up to 71.66% non-targeted misclassification on CIFAR-10 datasets and a 16.04% success rate on ImageNet, highlighting a fundamental local fragility in DNN decision boundaries.

14 Apr 2025

LSQCA: Resource-Efficient Load/Store Architecture for Limited-Scale Fault-Tolerant Quantum Computing

LSQCA: Resource-Efficient Load/Store Architecture for Limited-Scale Fault-Tolerant Quantum Computing

Current fault-tolerant quantum computer (FTQC) architectures utilize several

encoding techniques to enable reliable logical operations with restricted qubit

connectivity. However, such logical operations demand additional memory

overhead to ensure fault tolerance. Since the main obstacle to practical

quantum computing is the limited qubit count, our primary mission is to design

floorplans that can reduce memory overhead without compromising computational

capability. Despite extensive efforts to explore FTQC architectures, even the

current state-of-the-art floorplan strategy devotes 50% of memory space to this

overhead, not to data storage, to ensure unit-time random access to all logical

qubits.

In this paper, we propose an FTQC architecture based on a novel floorplan

strategy, Load/Store Quantum Computer Architecture (LSQCA), which can achieve

almost 100% memory density. The idea behind our architecture is to separate all

memory regions into small computational space called Computational Registers

(CR) and space-efficient memory space called Scan-Access Memory (SAM). We

define an instruction set for these abstract structures and provide concrete

designs named point-SAM and line-SAM architectures. With this design, we can

improve the memory density by allowing variable-latency memory access while

concealing the latency with other bottlenecks. We also propose optimization

techniques to exploit properties of quantum programs observed in our static

analysis, such as access locality in memory reference timestamps. Our numerical

results indicate that LSQCA successfully leverages this idea. In a

resource-restricted situation, a specific benchmark shows that we can achieve

about 90% memory density with 5% increase in the execution time compared to a

conventional floorplan, which achieves at most 50% memory density for unit-time

random access. Our design ensures broad quantum applicability.

18 Oct 2025

Software vulnerabilities are constantly being reported and exploited in software products, causing significant impacts on society. In recent years, the main approach to vulnerability detection, fuzzing, has been integrated into the continuous integration process to run in short and frequent cycles. This continuous fuzzing allows for fast identification and remediation of vulnerabilities during the development process. Despite adoption by thousands of projects, however, it is unclear how continuous fuzzing contributes to vulnerability detection. This study aims to elucidate the role of continuous fuzzing in vulnerability detection. Specifically, we investigate the coverage and the total number of fuzzing sessions when fuzzing bugs are discovered. We collect issue reports, coverage reports, and fuzzing logs from OSS-Fuzz, an online service provided by Google that performs fuzzing during continuous integration. Through an empirical study of a total of approximately 1.12 million fuzzing sessions from 878 projects participating in OSS-Fuzz, we reveal that (i) a substantial number of fuzzing bugs exist prior to the integration of continuous fuzzing, leading to a high detection rate in the early stages; (ii) code coverage continues to increase as continuous fuzzing progresses; and (iii) changes in coverage contribute to the detection of fuzzing bugs. This study provides empirical insights into how continuous fuzzing contributes to fuzzing bug detection, offering practical implications for future strategies and tool development in continuous fuzzing.

Recent advancements in text-guided diffusion models have shown promise for general image editing via inversion techniques, but often struggle to maintain ID and structural consistency in real face editing tasks. To address this limitation, we propose a zero-shot face editing method based on ID-Attribute Decoupled Inversion. Specifically, we decompose the face representation into ID and attribute features, using them as joint conditions to guide both the inversion and the reverse diffusion processes. This allows independent control over ID and attributes, ensuring strong ID preservation and structural consistency while enabling precise facial attribute manipulation. Our method supports a wide range of complex multi-attribute face editing tasks using only text prompts, without requiring region-specific input, and operates at a speed comparable to DDIM inversion. Comprehensive experiments demonstrate its practicality and effectiveness.

The primary aim of these lecture notes is to introduce the modern approach to two-dimensional conformal field theory (2D CFT). The study of analytical methods in two-dimensional conformal field theory has developed over several decades, starting with BPZ. The development of analytical methods, particularly in rational conformal field theory (RCFT), has been remarkable, with complete classifications achieved for certain model groups. One motivation for studying CFT comes from its ability to describe quantum critical systems. Given that realistic quantum critical systems are fundamentally RCFTs, it is somewhat natural that the analytical methods of RCFT have evolved significantly.

CFTs other than RCFTs are called irrational conformal field theories (ICFTs). Compared to RCFTs, the study of ICFTs has not progressed as much. Leaving aside whether there is physical motivation or not, ICFTs inherently possess a difficulty that makes them challenging to approach. However, with the development of quantum gravity, the advancement of analytical methods for ICFTs has become essential. The reason lies in the AdS/CFT correspondence. AdS/CFT refers to the relationship between d+1 dimensional quantum gravity and d dimensional CFT. Within this correspondence, the CFT appears as a non-perturbative formulation of quantum gravity. Except in special cases, this CFT belongs to ICFT. Against this backdrop, the methods for ICFTs have developed rapidly in recent years. Many of these ICFT methods are indispensable for modern quantum gravity research. Unfortunately, these cannot be learned from textbooks on 2D CFTs, such as Yellow book. These lecture notes aim to fill this gap. Specifically, we will cover techniques that have already been applied in many studies, such as HHLL block and monodromy method, and significant results that have become proper nouns, such as Hellerman bound and HKS bound.

The paper proposes a novel multi-class Multiple-Instance Learning (MIL) problem called Learning from Majority Label (LML). In LML, the majority class of instances in a bag is assigned as the bag-level label. The goal of LML is to train a classification model that estimates the class of each instance using the majority label. This problem is valuable in a variety of applications, including pathology image segmentation, political voting prediction, customer sentiment analysis, and environmental monitoring. To solve LML, we propose a Counting Network trained to produce bag-level majority labels, estimated by counting the number of instances in each class. Furthermore, analysis experiments on the characteristics of LML revealed that bags with a high proportion of the majority class facilitate learning. Based on this result, we developed a Majority Proportion Enhancement Module (MPEM) that increases the proportion of the majority class by removing minority class instances within the bags. Experiments demonstrate the superiority of the proposed method on four datasets compared to conventional MIL methods. Moreover, ablation studies confirmed the effectiveness of each module. The code is available at \href{this https URL}{here}.

The minimum sum coloring problem with bundles was introduced by Darbouy and Friggstad (SWAT 2024) as a common generalization of the minimum coloring problem and the minimum sum coloring problem. During their presentation, the following open problem was raised: whether the minimum sum coloring problem with bundles could be solved in polynomial time for trees. We answer their question in the negative by proving that the minimum sum coloring problem with bundles is NP-hard even for paths. We complement this hardness by providing algorithms of the following types. First, we provide a fixed-parameter algorithm for trees when the number of bundles is a parameter; this can be extended to graphs of bounded treewidth. Second, we provide a polynomial-time algorithm for trees when bundles form a partition of the vertex set and the difference between the number of vertices and the number of bundles is constant. Third, we provide a polynomial-time algorithm for trees when bundles form a partition of the vertex set and each bundle induces a connected subgraph. We further show that for bipartite graphs, the problem with weights is NP-hard even when the number of bundles is at least three, but is polynomial-time solvable when the number of bundles is at most two. The threshold shifts to three versus four for the problem without weights.

08 Oct 2025

Design-level decisions in open-source software (OSS) projects are often made through structured mechanisms such as proposals, which require substantial community discussion and review. Despite their importance, the proposal process is resource-intensive and often leads to contributor frustration, especially when proposals are declined without clear feedback. Yet, the reasons behind proposal rejection remain poorly understood, limiting opportunities to streamline the process or guide contributors effectively. This study investigates the characteristics and outcomes of proposals in the Go programming language to understand why proposals are declined and how such outcomes might be anticipated. We conduct a mixed-method empirical study on 1,091 proposals submitted to the Go project. We quantify proposal outcomes, build a taxonomy of decline reasons, and evaluate large language models (LLMs) for predicting these outcomes. We find that proposals are more often declined than accepted, and resolution typically takes over a month. Only 14.7% of declined proposals are ever resubmitted. Through qualitative coding, we identify nine key reasons for proposal decline, such as duplication, limited use cases, or violations of project principles. This taxonomy can help contributors address issues in advance, e.g., checking for existing alternatives can reduce redundancy. We also demonstrate that GPT-based models can predict decline decisions early in the discussion (F1 score = 0.71 with partial comments), offering a practical tool for prioritizing review effort. Our findings reveal inefficiencies in the proposal process and highlight actionable opportunities for improving both contributor experience and reviewer workload by enabling early triage and guiding contributors to strengthen their proposals using a structured understanding of past decline reasons.

We investigate coupling selection rules in heterotic string theory on non-Abelian orbifolds. Since boundary conditions on the orbifolds are classified by conjugacy classes of space group elements, non-Abelian orbifolds give rise to non-invertible selection rules on couplings among twisted sectors as well as ones including untwisted sectors. Furthermore, we find that non-invertible selection rules lead to characteristic patterns of Yukawa matrices.

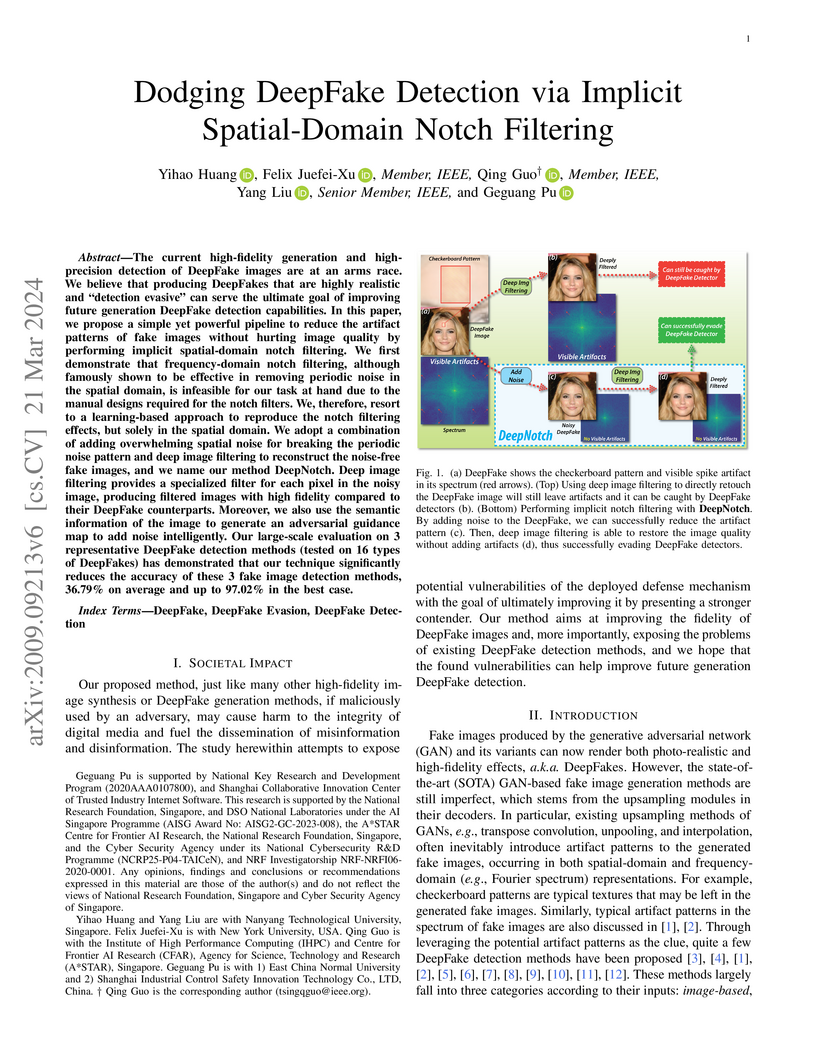

The current high-fidelity generation and high-precision detection of DeepFake images are at an arms race. We believe that producing DeepFakes that are highly realistic and 'detection evasive' can serve the ultimate goal of improving future generation DeepFake detection capabilities. In this paper, we propose a simple yet powerful pipeline to reduce the artifact patterns of fake images without hurting image quality by performing implicit spatial-domain notch filtering. We first demonstrate that frequency-domain notch filtering, although famously shown to be effective in removing periodic noise in the spatial domain, is infeasible for our task at hand due to the manual designs required for the notch filters. We, therefore, resort to a learning-based approach to reproduce the notch filtering effects, but solely in the spatial domain. We adopt a combination of adding overwhelming spatial noise for breaking the periodic noise pattern and deep image filtering to reconstruct the noise-free fake images, and we name our method DeepNotch. Deep image filtering provides a specialized filter for each pixel in the noisy image, producing filtered images with high fidelity compared to their DeepFake counterparts. Moreover, we also use the semantic information of the image to generate an adversarial guidance map to add noise intelligently. Our large-scale evaluation on 3 representative state-of-the-art DeepFake detection methods (tested on 16 types of DeepFakes) has demonstrated that our technique significantly reduces the accuracy of these 3 fake image detection methods, 36.79% on average and up to 97.02% in the best case.

Researchers at RIKEN and Kyushu University formulated the projective-representation Eigenstate Thermalization Hypothesis (prETH), a generalization of ETH for quantum systems with Abelian symmetries acting projectively. This framework classifies charged operators, shows the standard Gibbs ensemble fails for certain "Type II" operators, and demonstrates a non-commutative Generalized Gibbs Ensemble correctly predicts stationary states, numerically verified in spin chains and lattice gauge theory.

The Oliva framework enhances neural network verification by introducing an intelligent, order-guided exploration of Branch-and-Bound (BaB) trees, replacing naive search strategies. It achieved speedups of up to 80x on CIFAR-10 models and substantially increased the number of solved verification problems compared to existing state-of-the-art BaB approaches.

Generative modeling of 3D LiDAR data is an emerging task with promising

applications for autonomous mobile robots, such as scalable simulation, scene

manipulation, and sparse-to-dense completion of LiDAR point clouds. While

existing approaches have demonstrated the feasibility of image-based LiDAR data

generation using deep generative models, they still struggle with fidelity and

training stability. In this work, we present R2DM, a novel generative model for

LiDAR data that can generate diverse and high-fidelity 3D scene point clouds

based on the image representation of range and reflectance intensity. Our

method is built upon denoising diffusion probabilistic models (DDPMs), which

have shown impressive results among generative model frameworks in recent

years. To effectively train DDPMs in the LiDAR domain, we first conduct an

in-depth analysis of data representation, loss functions, and spatial inductive

biases. Leveraging our R2DM model, we also introduce a flexible LiDAR

completion pipeline based on the powerful capabilities of DDPMs. We demonstrate

that our method surpasses existing methods in generating tasks on the KITTI-360

and KITTI-Raw datasets, as well as in the completion task on the KITTI-360

dataset. Our project page can be found at this https URL

There are no more papers matching your filters at the moment.